Virtual Memory

Learned in SE350.

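Not the same thing as secondary memory: virtual memory is the address space a program sees, while secondary memory (disk) is the storage that backs it.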

Virtual memory is a facility that allows programs to address memory from a logical point of view, without regard to the amount of Main Memory physically available. Virtual memory was conceived to meet the requirement of having multiple user jobs reside in main memory concurrently, so that there would not be a hiatus between the execution of successive processes while one process was written out to secondary store and the successor process was read in.

Hardware and Control Structures

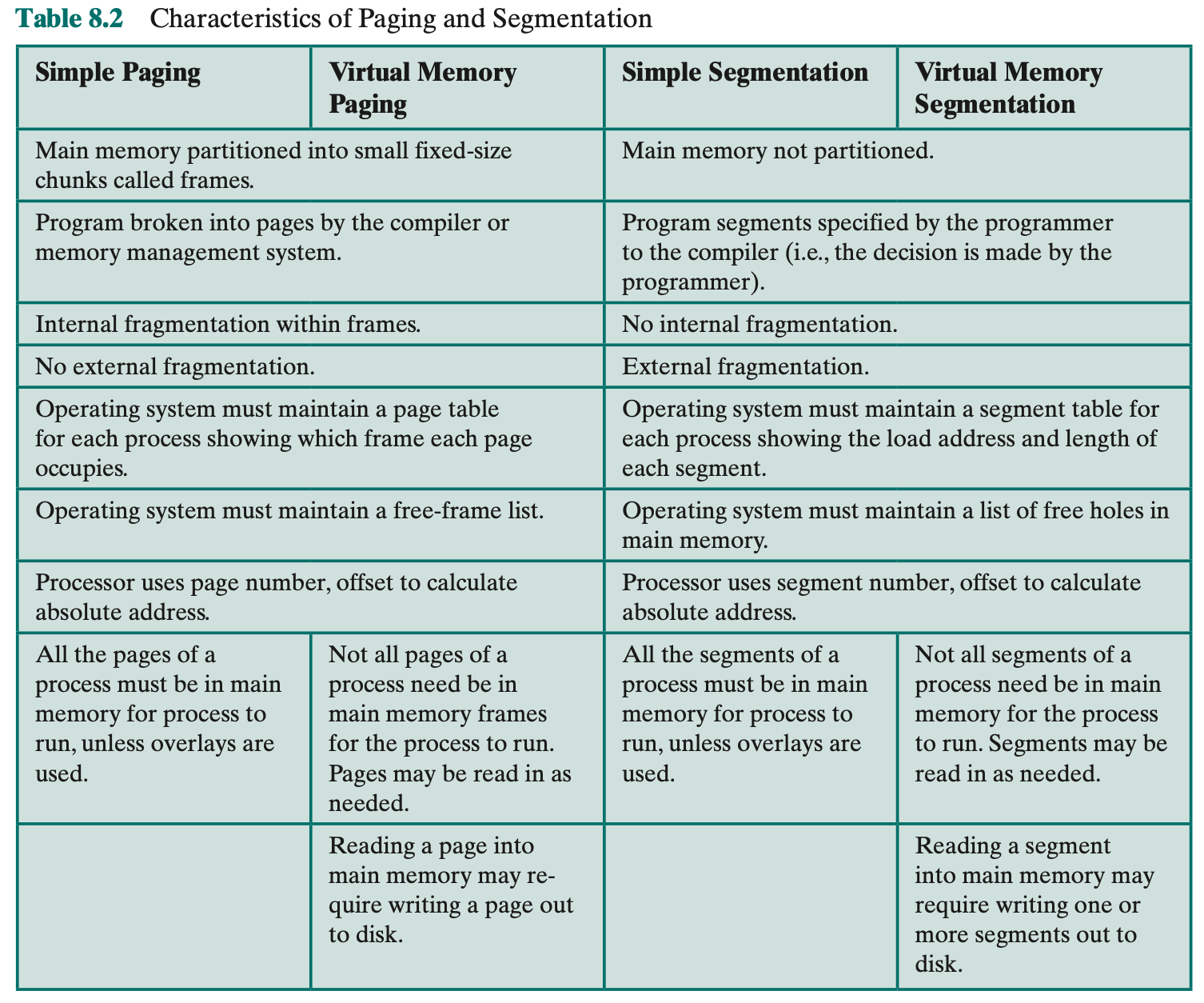

Two characteristics of Paging and Segmentation are the keys to this breakthrough:

- All memory references within a process are logical addresses that are dynamically translated into physical addresses at run time. This means that a process may be swapped in and out of main memory such that it occupies different regions of main memory at different times during the course of execution.

- A process may be broken up into a number of pieces (pages or segments) and these pieces need not be contiguously located in main memory during execution. The combination of dynamic run-time address translation and the use of a page or segment table permits this.

If the preceding two characteristics are present, then it is not necessary that all of the pages or all of the segments of a process be in main memory during execution.

- The OS begins by bringing in only one or a few pieces, to include the initial program piece and the initial data piece to which those instructions refer. The portion of a process that is actually in main memory at any time is called the resident set of the process.

- If the processor encounters a logical address that is not in main memory, it generates an interrupt indicating a memory access fault. The OS puts the interrupted process in the Blocking State. For the process to proceed later, the OS must bring into main memory the piece of the process that contains the logical address that caused the access fault. To do this, the OS issues a disk I/O (input/output) read request, and it can dispatch another process to run while the disk I/O is performed. Once the desired piece has been brought into main memory, an I/O interrupt is issued, giving control back to the OS, which places the affected process back into a Ready state.
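
The fault-driven loading described above can be sketched in a few lines. This is only an illustration under simplifying assumptions: the function name `run_with_demand_paging` is made up for these notes, main memory is assumed never to fill up (so nothing is evicted), and blocking and disk I/O are reduced to a comment.

```python
def run_with_demand_paging(reference_string):
    """Count faults when pages are brought in only on first use.

    Assumption: main memory never fills up, so no page is ever evicted.
    """
    resident_set = set()  # pages of the process currently in main memory
    faults = 0
    for page in reference_string:
        if page not in resident_set:
            # Memory access fault: a real OS would block the process here,
            # issue a disk read, and mark the process Ready when it completes.
            faults += 1
            resident_set.add(page)
    return faults, resident_set


faults, resident = run_with_demand_paging([0, 1, 0, 2, 1, 0, 3])
print(faults, sorted(resident))
```

Only the first touch of each page faults, so the resident set grows to exactly the pages the process actually uses.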

Two implications:

- More processes may be maintained in main memory.

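- A process may be larger than all of main memory.

- Without virtual memory, the programmer would have to split a program that is too large into pieces that can be loaded separately (overlays). With virtual memory, that job is left to the OS and the hardware.

- The OS automatically loads pieces of a process into main memory as required.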

Because a process executes only in main memory, that memory is referred to as real memory. But a programmer or user perceives a potentially much larger memory—that which is allocated on disk. This latter is referred to as virtual memory.

Locality and Virtual Memory

Let’s examine the task of the OS with respect to the virtual memory.

- We can make better use of memory by loading in just a few pieces. Then, if the program branches to an instruction or references a data item on a piece not in main memory, a fault is triggered. This tells the OS to bring in the desired piece.

- Only a few pieces of any given process are in memory, so more processes can be maintained in memory.

- Time is saved because unused pieces are not swapped in and out of memory.

- Eventually main memory fills up, so when the OS brings one piece in, it must throw another out. If it throws out a piece just before it is used, then it will just have to go get that piece again almost immediately. Too much of this leads to a condition known as thrashing: the system spends most of its time swapping pieces rather than executing instructions.

- To avoid this, the OS tries to guess, based on recent history, which pieces are least likely to be used in the near future.

- This guessing relies on the principle of locality, which states that program and data references within a process tend to cluster. Hence, the assumption that only a few pieces of a process will be needed over a short period of time is valid. It should also be possible to make intelligent guesses about which pieces of a process will be needed in the near future, which avoids thrashing.

- For virtual memory to be practical and effective, two ingredients are needed.

- First, there must be hardware support for the paging and/or segmentation scheme to be employed.

- Second, the OS must include software for managing the movement of pages and/or segments between secondary memory and main memory
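
To make thrashing concrete, here is a minimal sketch that counts faults for a looping reference pattern. It assumes least-recently-used (LRU) replacement as the "guess based on recent history"; the notes above do not name a policy, so LRU and the function name `count_faults_lru` are assumptions for illustration.

```python
from collections import OrderedDict


def count_faults_lru(reference_string, num_frames):
    """Count page faults with LRU replacement (an assumed policy)."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)  # mark as most recently used
        else:
            faults += 1
            if len(frames) >= num_frames:
                frames.popitem(last=False)  # throw out the LRU page
            frames[page] = None
    return faults


refs = [0, 1, 2, 3] * 5  # a loop over 4 pages, executed 5 times
print(count_faults_lru(refs, 4))  # every page fits
print(count_faults_lru(refs, 3))  # one frame too few
```

With 4 frames only the first pass faults (4 faults). With 3 frames every one of the 20 references faults, because each page is thrown out just before it is needed again. That is the thrashing pattern described above.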

Paging

- A page table is also needed for a virtual memory scheme based on paging.

- The page table entries become more complex. Because only some of the pages of a process may be in main memory, a bit is needed in each page table entry to indicate whether the corresponding page is present (P) in main memory or not. If the bit indicates that the page is in memory, then the entry also includes the frame number of that page.

- The page table entry includes a modify (M) bit, indicating whether the contents of the corresponding page have been altered since the page was last loaded into main memory
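
One way to picture a page table entry is as an integer with the control bits packed next to the frame number. The bit positions below (`PRESENT_BIT`, `MODIFIED_BIT`, `FRAME_SHIFT`) are assumptions chosen for this sketch; real hardware defines its own layout.

```python
PRESENT_BIT = 1 << 0   # P: page is in main memory (assumed position)
MODIFIED_BIT = 1 << 1  # M: page altered since it was loaded (assumed position)
FRAME_SHIFT = 2        # frame number sits above the control bits (assumed)


def make_pte(frame_number, present, modified=False):
    """Pack a frame number and the P and M bits into one integer."""
    pte = frame_number << FRAME_SHIFT
    if present:
        pte |= PRESENT_BIT
    if modified:
        pte |= MODIFIED_BIT
    return pte


def is_present(pte):
    return bool(pte & PRESENT_BIT)


def is_modified(pte):
    return bool(pte & MODIFIED_BIT)


def frame_of(pte):
    return pte >> FRAME_SHIFT


def needs_write_back(pte):
    # Only a resident page that was modified has to be written to disk
    # before its frame is reused; an unmodified page can simply be dropped.
    return is_present(pte) and is_modified(pte)


pte = make_pte(frame_number=5, present=True, modified=True)
print(frame_of(pte), is_present(pte), needs_write_back(pte))
```

The M bit is what lets the OS skip the disk write when an unchanged page is replaced.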

Page Table Structure

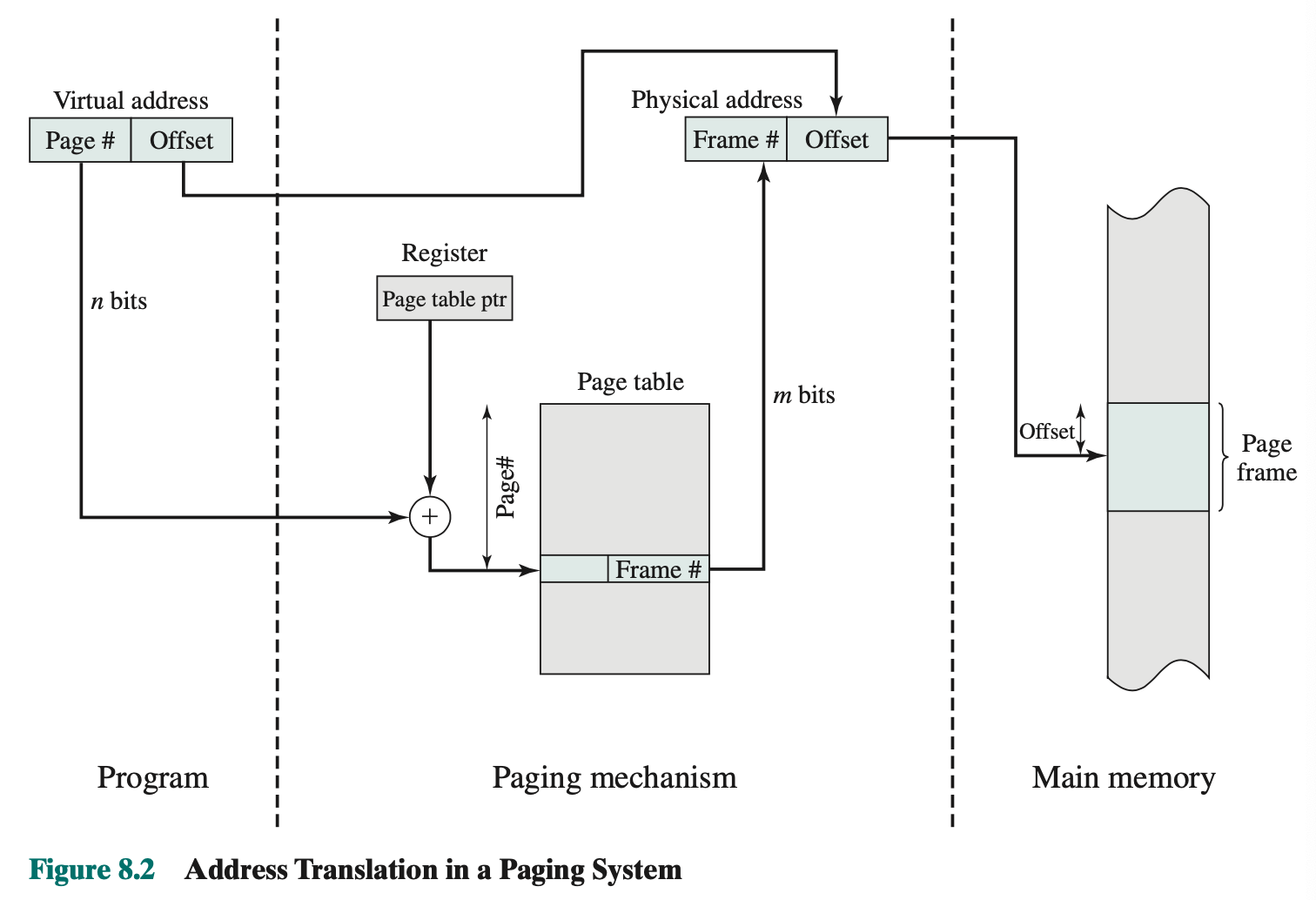

- The basic mechanism for reading a word from memory involves the translation of a virtual, or logical, address, consisting of page number and offset, into a physical address, consisting of frame number and offset, using a page table.

- Since the page table is of variable length, depending on the size of the process, we cannot expect to hold it in registers. Instead, it must be in main memory to be accessed.

- The page number of a virtual address is used to index that table and look up the corresponding frame number. This is combined with the offset portion of the virtual address to produce the desired real address.
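
The lookup just described can be sketched as follows. The 12-bit offset (4 KB pages), the dict used as a page table, and the names `translate` and `PageFault` are all assumptions for illustration.

```python
OFFSET_BITS = 12             # assumed: 4 KB pages
PAGE_SIZE = 1 << OFFSET_BITS


class PageFault(Exception):
    """Raised when the referenced page is not in main memory."""


def translate(virtual_address, page_table):
    """Map (page number, offset) to (frame number, offset)."""
    page_number = virtual_address >> OFFSET_BITS
    offset = virtual_address & (PAGE_SIZE - 1)
    frame_number = page_table.get(page_number)  # only resident pages are listed
    if frame_number is None:
        raise PageFault(page_number)
    return (frame_number << OFFSET_BITS) | offset


page_table = {0: 7, 1: 3}  # page number -> frame number
print(hex(translate(0x1ABC, page_table)))
```

Address `0x1ABC` is page 1, offset `0xABC`; page 1 lives in frame 3, so the real address is `0x3ABC`. The offset passes through unchanged.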

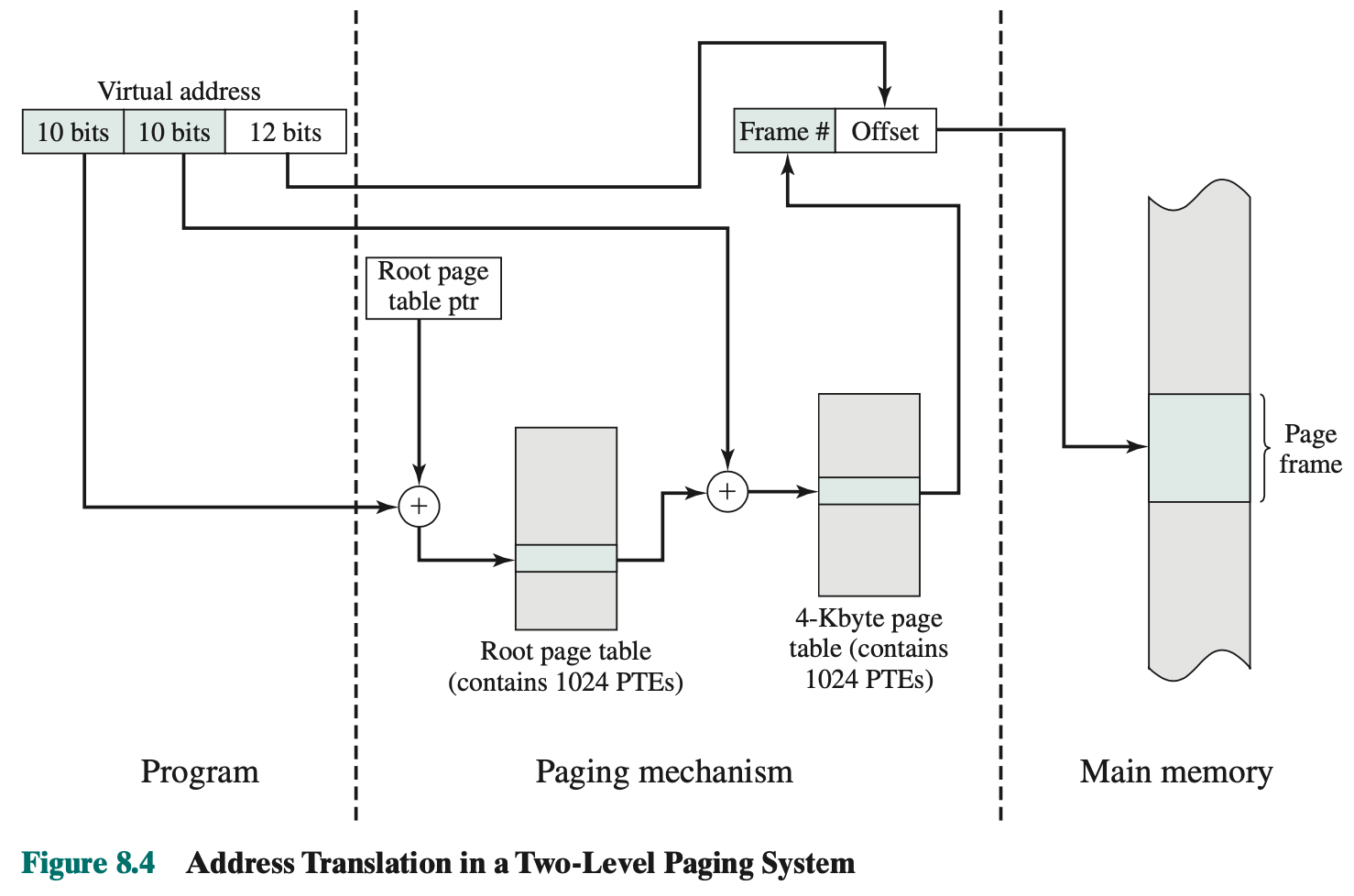

- Most virtual memory schemes store page tables in virtual memory rather than real memory.

- This means page tables are subject to paging just as other pages are. When a process is running, at least a part of its page table must be in main memory, including the page table entry of the currently executing page.

- In a two-level scheme, the maximum length of a page table is restricted to be equal to one page.

- The root page always remains in main memory. The first 10 bits of a virtual address are used to index into the root page to find a PTE for a page of the user page table. If that page is not in main memory, a page fault occurs. If that page is in main memory, then the next 10 bits of the virtual address index into the user PTE page to find the PTE for the page that is referenced by the virtual address.
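
A sketch of the two-level walk, assuming a 32-bit virtual address split 10/10/12 (the 12-bit offset is an assumption; the notes only give the two 10-bit indexes). Nested dicts stand in for the root page and the user page table pages, and `translate_two_level` is a made-up name.

```python
OFFSET_BITS = 12  # assumed: 4 KB pages
INDEX_BITS = 10   # 10 bits per level, as in the notes
INDEX_MASK = (1 << INDEX_BITS) - 1


def split_address(virtual_address):
    """Split an address into (root index, user table index, offset)."""
    root_index = (virtual_address >> (OFFSET_BITS + INDEX_BITS)) & INDEX_MASK
    table_index = (virtual_address >> OFFSET_BITS) & INDEX_MASK
    offset = virtual_address & ((1 << OFFSET_BITS) - 1)
    return root_index, table_index, offset


def translate_two_level(virtual_address, root_table):
    root_index, table_index, offset = split_address(virtual_address)
    # Step 1: the root page (always resident) locates a page of the user page table.
    user_table_page = root_table.get(root_index)
    if user_table_page is None:
        raise LookupError("page fault: page table page not in main memory")
    # Step 2: that page holds the PTE for the page actually referenced.
    frame_number = user_table_page.get(table_index)
    if frame_number is None:
        raise LookupError("page fault: referenced page not in main memory")
    return (frame_number << OFFSET_BITS) | offset


root_table = {1: {3: 0x2A}}
print(split_address(0x00403ABC))
print(hex(translate_two_level(0x00403ABC, root_table)))
```

Address `0x00403ABC` splits into root index 1, table index 3, offset `0xABC`, and maps to `0x2AABC`. Either level can fault, which is why one reference can cost more than one disk read.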

Inverted Page Table

- An alternative approach to the use of one or multiple-level page tables is the use of an inverted page table structure

- The page number portion of a virtual address is mapped into a hash value using a simple hashing function.

- The hash value is a pointer to the inverted page table, which contains the page table entries.

- There is one entry in the inverted page table for each real memory page frame rather than one per virtual page.

- The page table’s structure is called inverted because it indexes page table entries by frame number rather than by virtual page number.

- Frame number here means the index of a page frame: a fixed-size block of real (physical) main memory that holds one page.

- The hash function maps the n-bit page number into an m-bit quantity, which is used to index into the inverted page table.

Each entry in the page table includes:

- Page number

- Process identifier

- Control bits

- Chain pointer
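
A small sketch of an inverted page table with chaining. It simplifies in two labelled ways: the hash value goes through a separate anchor list rather than pointing straight into the table, and control bits are left out. The modulo hash and the class name `InvertedPageTable` are assumptions.

```python
class InvertedPageTable:
    """One entry per physical frame; collisions are resolved by chaining."""

    def __init__(self, num_frames):
        self.num_frames = num_frames
        self.entries = [None] * num_frames  # index = frame number
        self.anchor = [None] * num_frames   # hash value -> first frame in chain

    def _hash(self, page_number):
        return page_number % self.num_frames  # assumed "simple hashing function"

    def insert(self, pid, page_number, frame):
        h = self._hash(page_number)
        # The new entry becomes the head of the chain for this hash value.
        self.entries[frame] = {"pid": pid, "page": page_number,
                               "chain": self.anchor[h]}
        self.anchor[h] = frame

    def lookup(self, pid, page_number):
        frame = self.anchor[self._hash(page_number)]
        while frame is not None:
            entry = self.entries[frame]
            if entry["pid"] == pid and entry["page"] == page_number:
                return frame  # the matching entry's index is the frame number
            frame = entry["chain"]  # follow the chain pointer
        return None  # not resident: page fault


ipt = InvertedPageTable(num_frames=4)
ipt.insert(pid=1, page_number=5, frame=2)
ipt.insert(pid=2, page_number=9, frame=0)  # 9 % 4 == 5 % 4, so these collide
print(ipt.lookup(1, 5), ipt.lookup(2, 9), ipt.lookup(1, 9))
```

The table size depends on the number of frames, not on the size of any virtual address space, and the process identifier is needed because several processes can use the same page number.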

Translation Lookaside Buffer

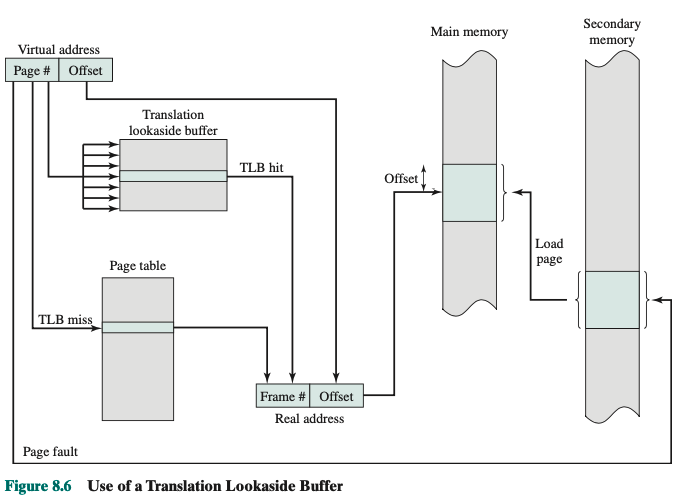

- Every virtual memory reference can cause two physical memory accesses: one to fetch the appropriate page table entry and another to fetch the desired data.

- Most virtual memory schemes make use of a special high-speed cache for page table entries, usually called a translation lookaside buffer (TLB)

- This cache functions in the same way as a memory cache and contains those page table entries that have been most recently used

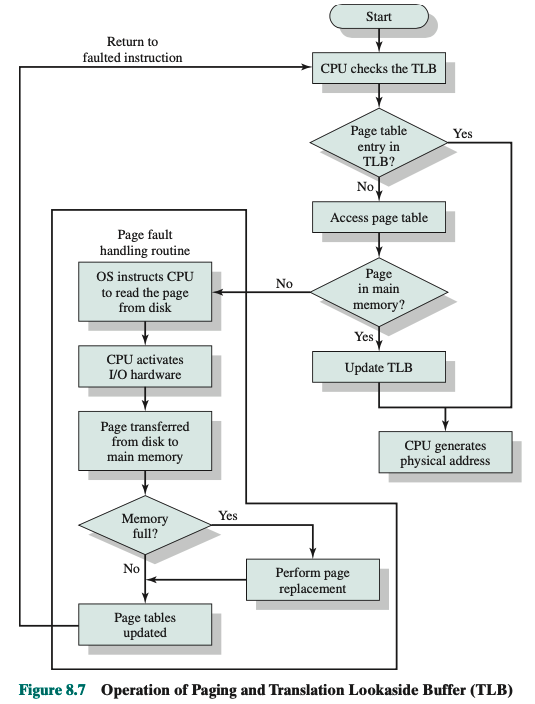

- Given a virtual address, the processor will first examine the TLB. If the desired page table entry is present (TLB hit), then the frame number is retrieved and the real address is formed.

- If the desired page table entry is not found (TLB miss), then the processor uses the page number to index the process page table and examine the corresponding page table entry.

- If the “present bit” is set, then the page is in main memory, and the processor can retrieve the frame number from the page table entry to form the real address.

- The processor also updates the TLB to include this new page table entry

- If the present bit is not set, then the desired page is not in main memory and a memory access fault, called a page fault, is issued.

- At this point, we leave the realm of hardware and invoke the OS, which loads the needed page and updates the page table.

- To keep the flowchart simple, the fact that the OS may dispatch another process while disk I/O is underway is not shown. By the principle of locality, most virtual memory references will be to locations in recently used pages.

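- Thus, most references will involve page table entries in the cache.

- Because the TLB holds only some of the entries of a full page table, it cannot simply be indexed by page number. Each entry in the TLB must include the page number as well as the complete page table entry.

- The processor checks a number of TLB entries simultaneously for a match on page number. This technique is called associative mapping.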
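
The hit, miss, and fault paths can be sketched as below. A dict keyed by page number stands in for the associative hardware lookup. The LRU eviction, the 12-bit offset, and the names `TLB` and `translate_with_tlb` are assumptions for this sketch.

```python
from collections import OrderedDict


class TLB:
    """Tiny TLB model: page number -> frame number, LRU eviction (assumed)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()

    def lookup(self, page_number):
        if page_number in self.entries:
            self.entries.move_to_end(page_number)
            return self.entries[page_number]
        return None

    def insert(self, page_number, frame_number):
        if page_number in self.entries:
            self.entries.move_to_end(page_number)
        elif len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # drop the least recently used entry
        self.entries[page_number] = frame_number


def translate_with_tlb(virtual_address, tlb, page_table, offset_bits=12):
    page_number = virtual_address >> offset_bits
    offset = virtual_address & ((1 << offset_bits) - 1)
    frame_number = tlb.lookup(page_number)
    if frame_number is not None:
        outcome = "tlb hit"
    else:
        # TLB miss: index the process page table (resident pages only).
        frame_number = page_table.get(page_number)
        if frame_number is None:
            return None, "page fault"  # the OS would load the page here
        tlb.insert(page_number, frame_number)
        outcome = "tlb miss"
    return (frame_number << offset_bits) | offset, outcome


tlb = TLB(capacity=2)
page_table = {1: 3}
first = translate_with_tlb(0x1ABC, tlb, page_table)
second = translate_with_tlb(0x1ABC, tlb, page_table)
third = translate_with_tlb(0x5000, tlb, page_table)
print(first, second, third)
```

The first reference to page 1 misses the TLB and reads the page table; the second hits the TLB and skips the page table access entirely.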

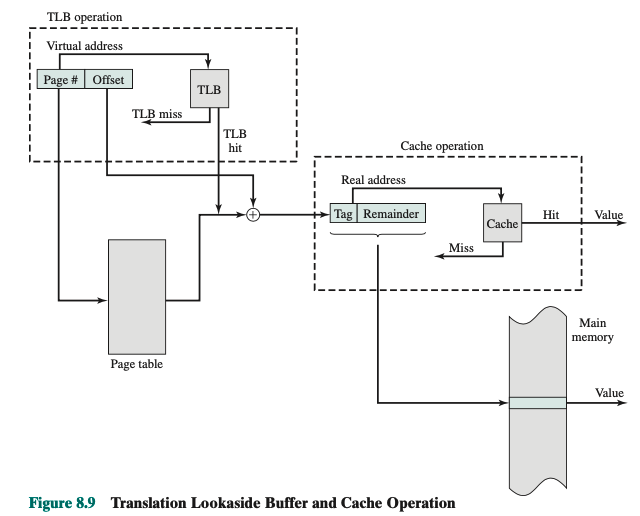

- Finally, the virtual memory mechanism must interact with the cache system (not the TLB cache, but the main memory cache). This is illustrated in Figure 8.9. A virtual address will generally be in the form of a page number, offset.

- First, the memory system consults the TLB to see if the matching page table entry is present.

- If it is, the real (physical) address is generated by combining the frame number with the offset.

- If not, the entry is accessed from a page table.

- Once the real address is generated, which is in the form of a tag and a remainder, the cache is consulted to see if the block containing that word is present.

- If so, it is returned to the CPU. If not, the word is retrieved from main memory.

- The virtual address is translated into a real address. This involves reference to a page table entry, which may be in the TLB, in main memory, or on disk. The referenced word may be in cache, main memory, or on disk.

- If the referenced word is only on disk, the page containing the word must be loaded into main memory and its block loaded into the cache. In addition, the page table entry for that page must be updated.

Page Size

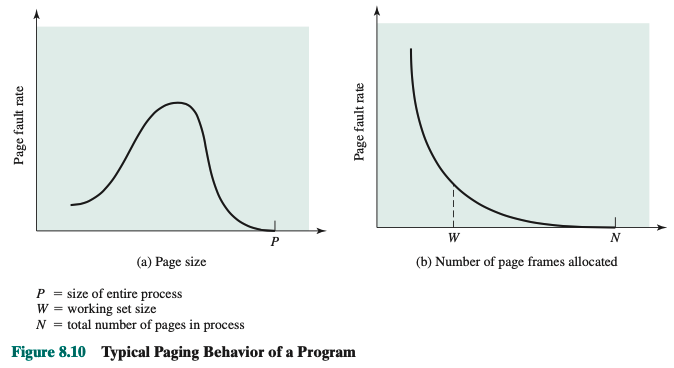

- The smaller the page size, the less internal fragmentation there is.

- To optimize the use of main memory, we would like to reduce internal fragmentation.

- The smaller the page, the greater is the number of pages required per process. More pages per process means larger page tables.

- If the page size is very small, then ordinarily a relatively large number of pages will be available in main memory for a process.

- After a time, the pages in memory will all contain portions of the process near recent references. Thus, the page fault rate should be low.

- As the size of the page is increased, each individual page will contain locations further and further from any particular recent reference. Thus, the effect of the principle of locality is weakened and the page fault rate begins to rise.

- When a single page encompasses the entire process, there will be no page faults.

- Figure 8.10b shows that, for a fixed page size, the fault rate drops as the number of pages maintained in main memory grows.

- Finally, the design issue of page size is related to the size of physical main memory and program size.
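
The trade-off between internal fragmentation and page table size is easy to see with a little arithmetic. The 4-byte page table entry and the function name `paging_overhead` are assumptions for this sketch.

```python
def paging_overhead(process_bytes, page_bytes, pte_bytes=4):
    """Return (pages needed, internal fragmentation, page table size in bytes)."""
    num_pages = -(-process_bytes // page_bytes)  # ceiling division
    # Wasted space is the unused tail of the last page.
    internal_fragmentation = num_pages * page_bytes - process_bytes
    page_table_bytes = num_pages * pte_bytes
    return num_pages, internal_fragmentation, page_table_bytes


for page_bytes in (512, 4096):
    print(page_bytes, paging_overhead(10_000, page_bytes))
```

For a 10,000-byte process, 512-byte pages give 20 pages, 240 wasted bytes, and an 80-byte page table. With 4096-byte pages the process needs only 3 pages and a 12-byte table, but wastes 2288 bytes.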

Most commercial operating systems will support only one page size, regardless of the capacity of the underlying hardware. Reason: page size affects many aspects of the OS, thus a change to multiple page sizes is a complex undertaking.

Segmentation

- Segmentation allows the programmer to view memory as consisting of multiple address spaces or segments.

- Segments may be of unequal, and even dynamic, size.

- Memory references consist of a (segment number, offset) form of address.
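
A sketch of translating a (segment number, offset) address. Each segment table entry is assumed to hold a base address and a length, with the length check standing in for hardware bounds checking. The names and example values are made up for illustration.

```python
def translate_segmented(segment_number, offset, segment_table):
    """Translate (segment number, offset) to a real address."""
    base, length = segment_table[segment_number]
    if offset >= length:
        # Segments have different sizes, so every reference is bounds-checked.
        raise ValueError("offset lies outside the segment")
    return base + offset


# segment number -> (base address, length in bytes); values are made up
segment_table = {0: (0x1000, 0x400), 1: (0x8000, 0x100)}
print(hex(translate_segmented(1, 0x20, segment_table)))
```

Unlike paging, the offset is added to the base rather than concatenated with a frame number, because a segment can start at any address and have any length.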

Advantages:

- Simplifies handling of growing data structures. With segmented virtual memory, the data structure can be assigned its own segment, and the OS will expand or shrink the segment as needed. If a segment that needs to be expanded is in main memory and there is insufficient room, the OS may move the segment to a larger area of main memory, if available, or swap it out. In the latter case, the enlarged segment would be swapped back in at the next opportunity.

- Allows programs to be altered and recompiled independently, without requiring the entire set of programs to be relinked and reloaded.

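- Allows sharing among processes. A programmer can place a utility program or a useful table of data in a segment that can be referenced by other processes.

- Lends itself to protection. Because a segment is a well-defined set of programs or data, access privileges can be assigned to it.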