SE464: Software testing and Quality Assurance

Useless class that we have to take. No midterm. Taken with Wei Yi Shang.

Topic 1: Working with existing tests

- How much does your test cover the code

- How good is existing test?

Topic 2: Writing new tests

- How to write good new test?

- Different types of tests to write beyond Junit

Topic 3: Testing practice during development

- Testing in a release pipeline

- Adapting tests for ever evolving code

Topic 4: Testing in the field

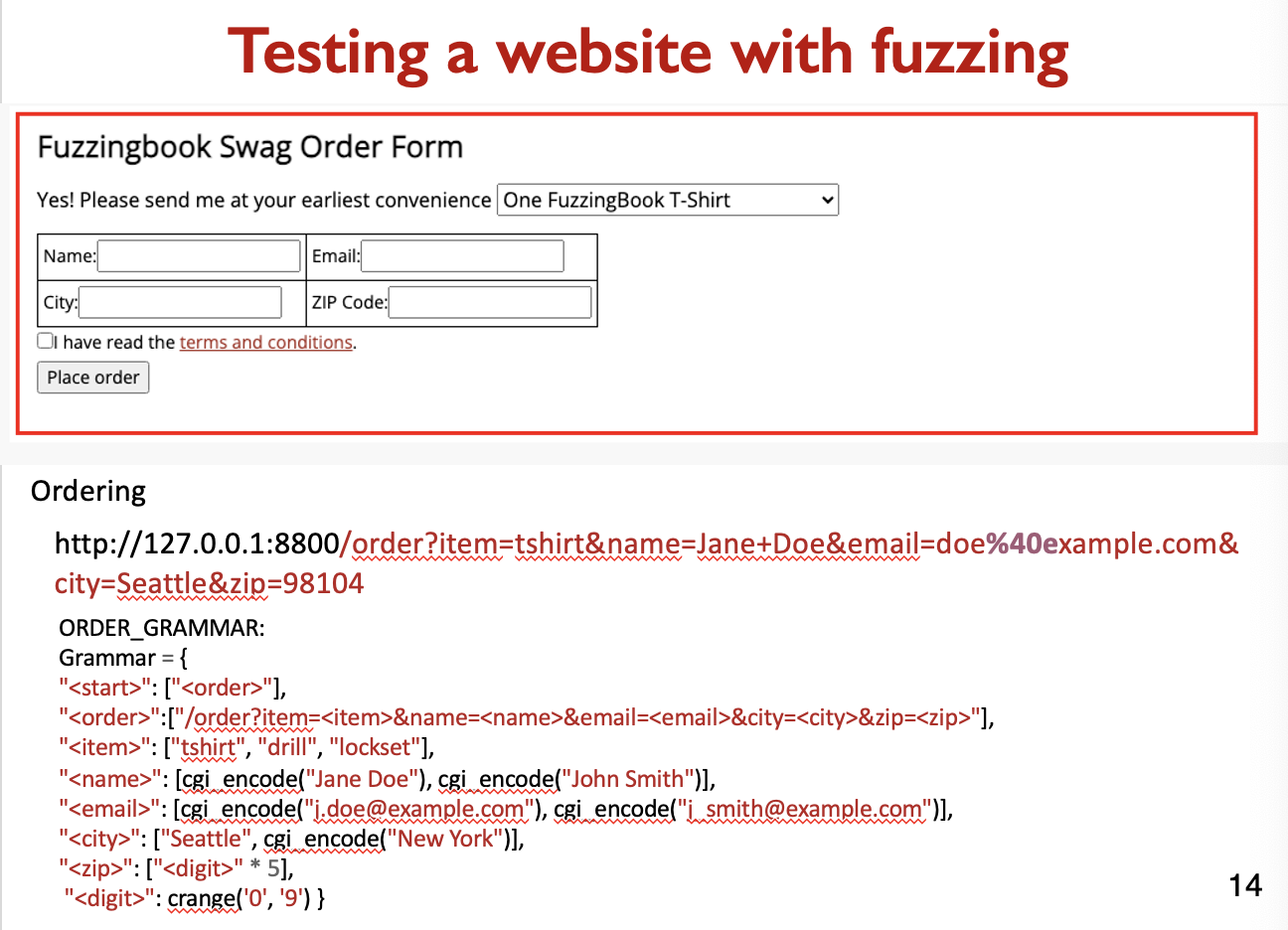

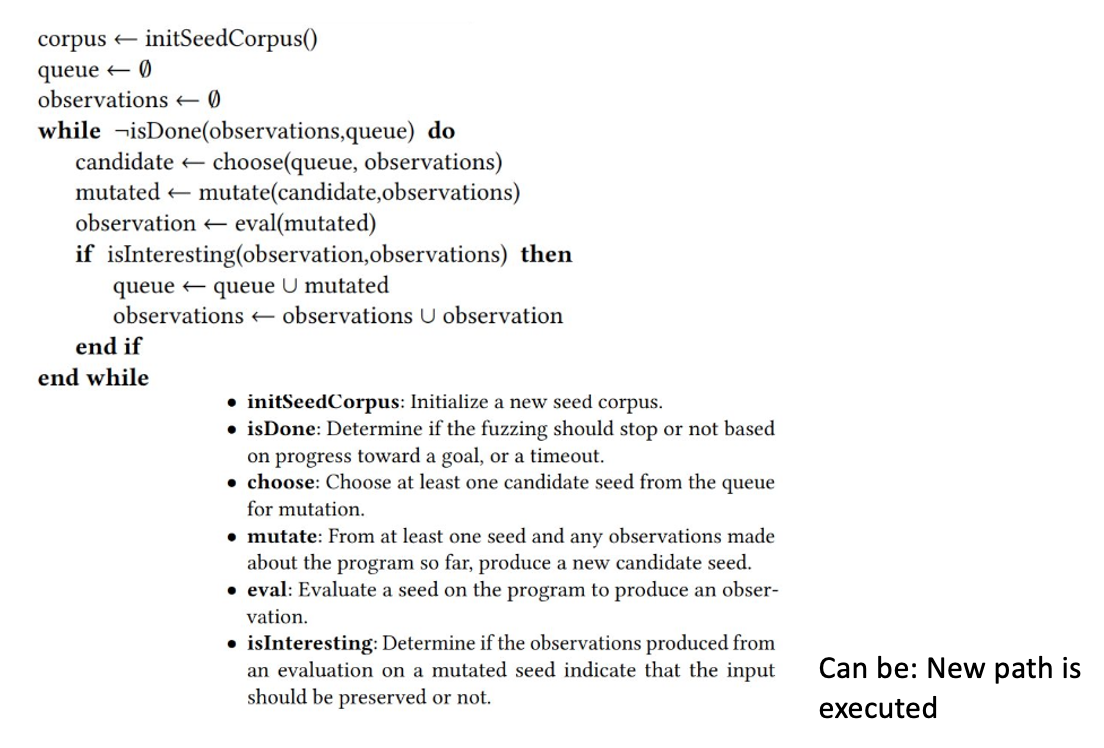

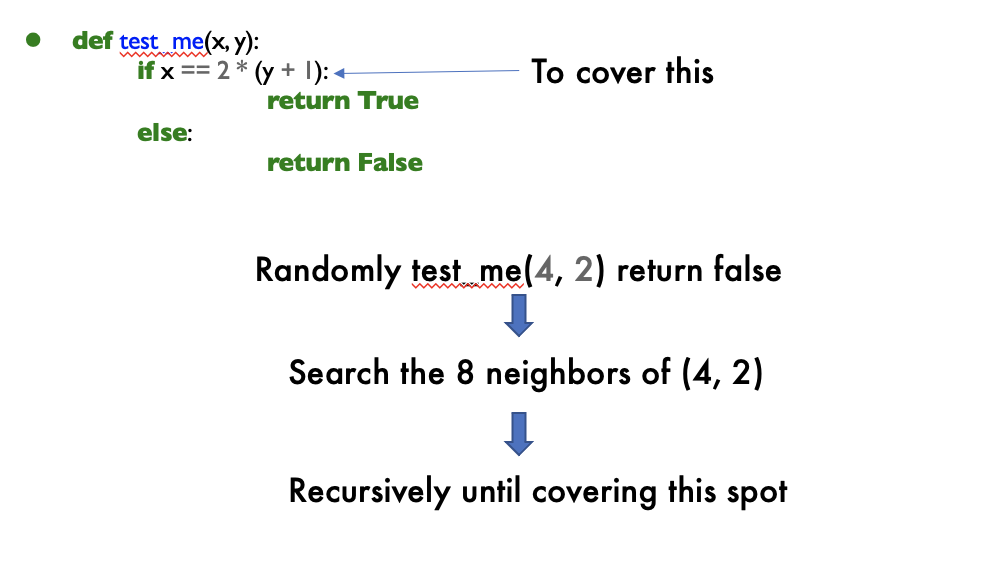

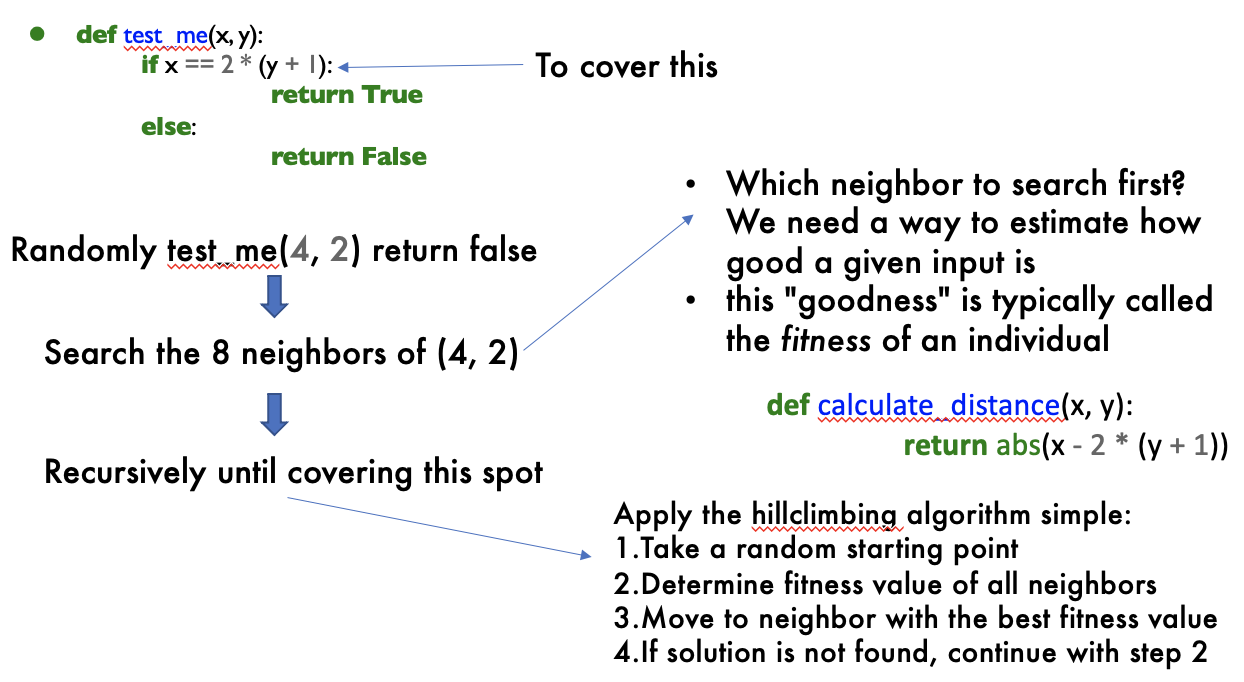

- Logging, A/B testing, Fuzzing, chaos testing

Concepts

- JUnit

- Software Errors

- Software Testing

- Software Quality

- Software Quality Assurance

- Control Flow Graph

- Code Coverage

- Data Flow Graph

- Mutation Testing

- Mocking

- User Interface Testing

Week 11: refactoring, before logging

Assignments

- A3 Mock

- A4 User Interface Testing

- A5 Fuzzing

- A6 Release Pipeline

- A7 Automated Testing Results Analysis

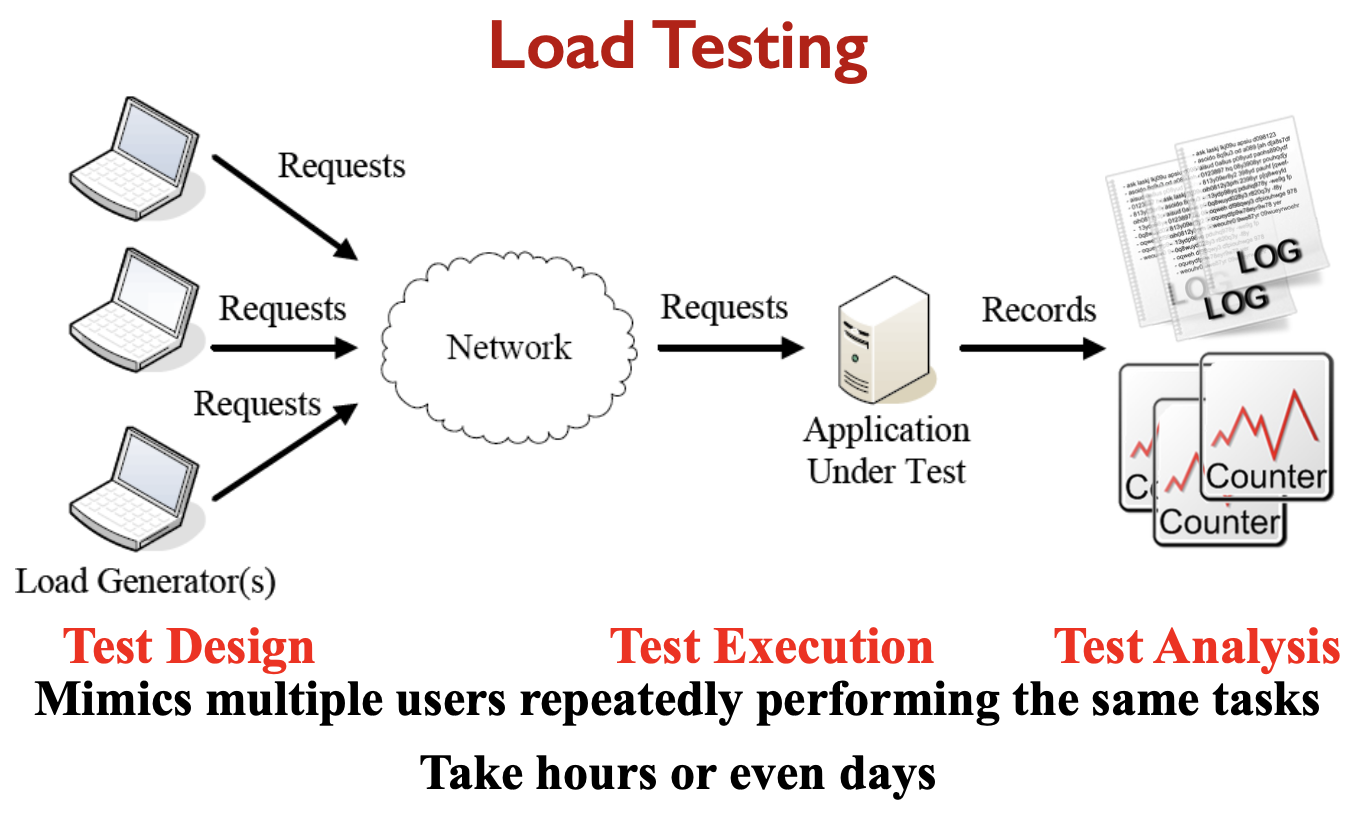

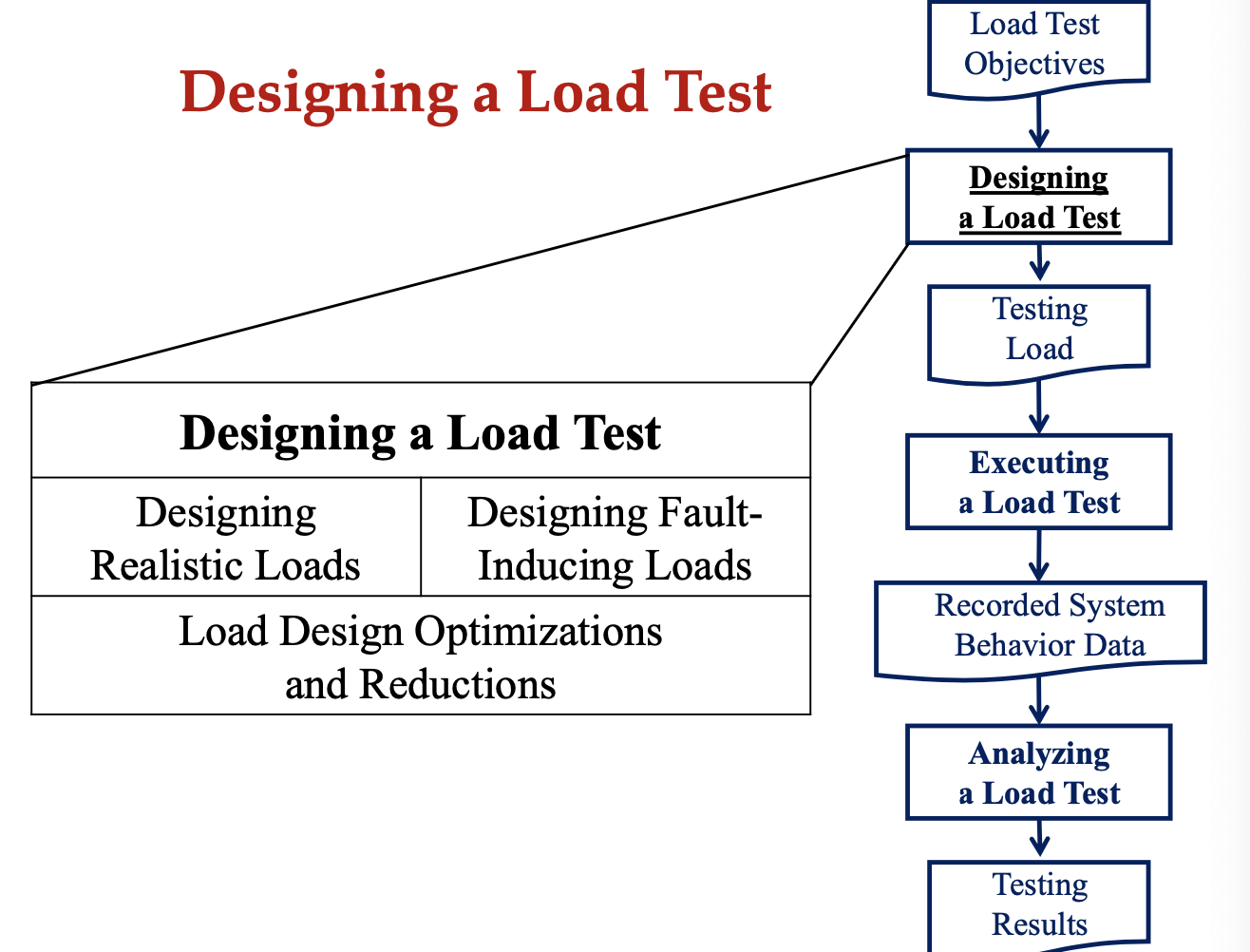

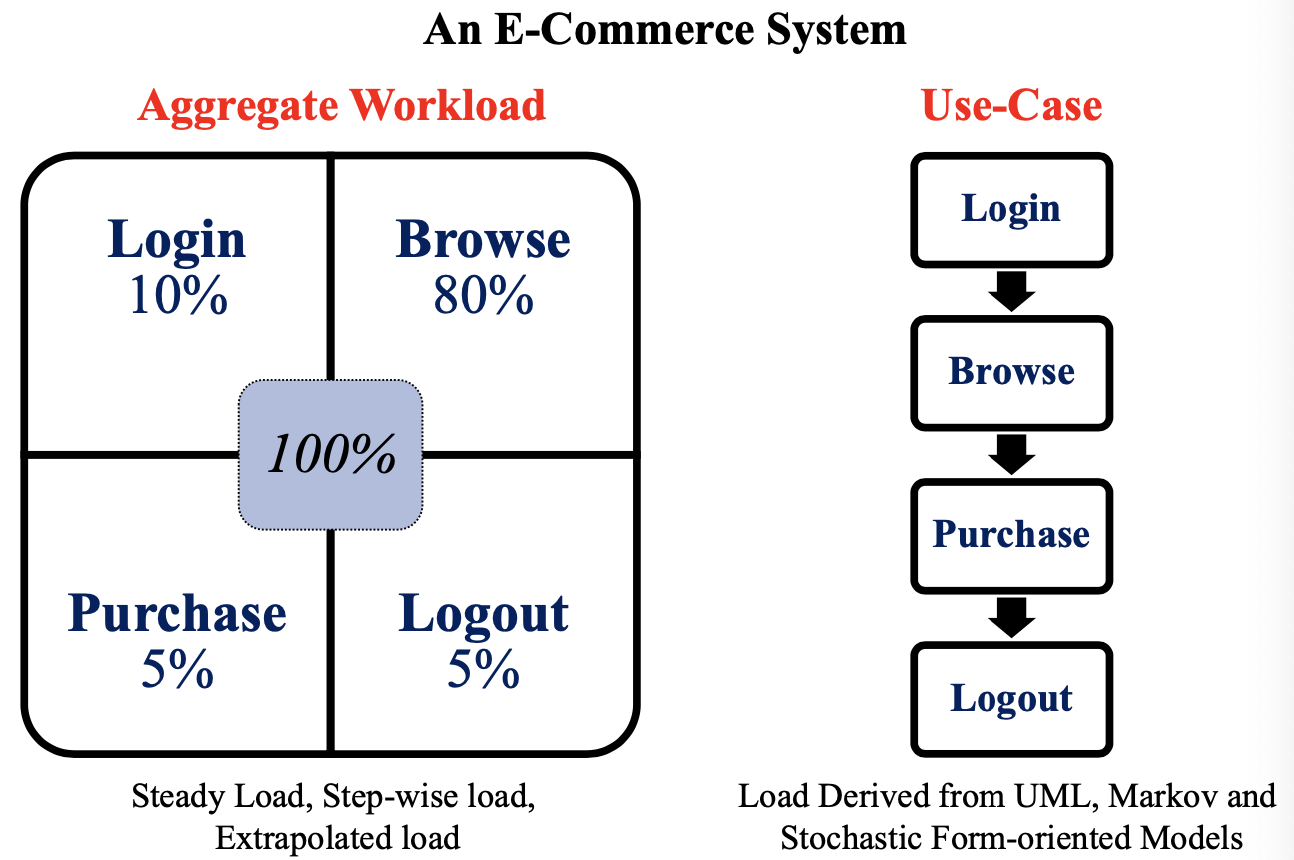

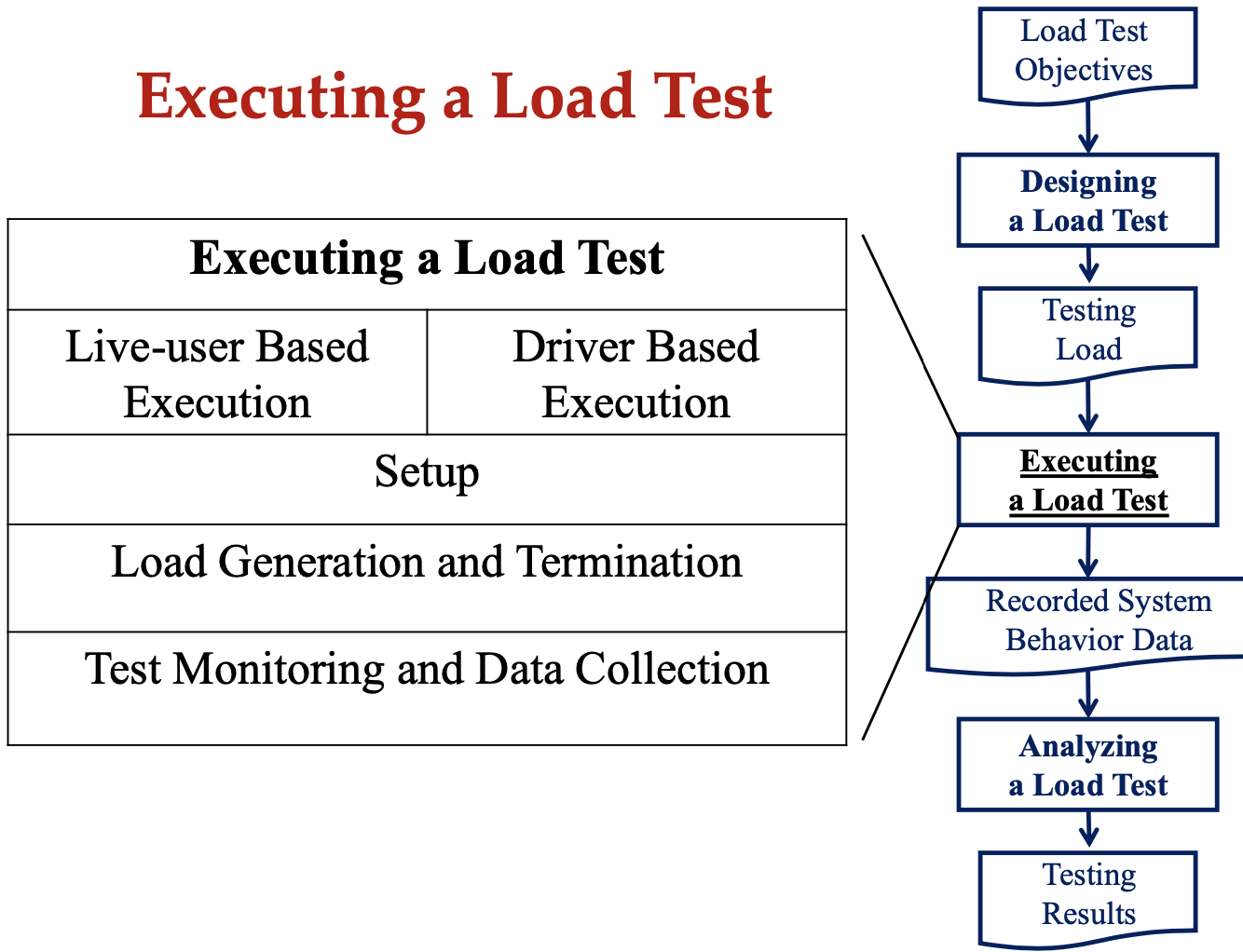

- A8 Load Testing - Performance

Final

Mostly consisted of MCQ and T/F Questions. Had 4 long answer questions that wasn’t worth a lot of marks.

Study using his review slides and consult Patrick’s repo: https://github.com/patricklam/stqam-2019/tree/master for practice.

Week 2

Control Flow

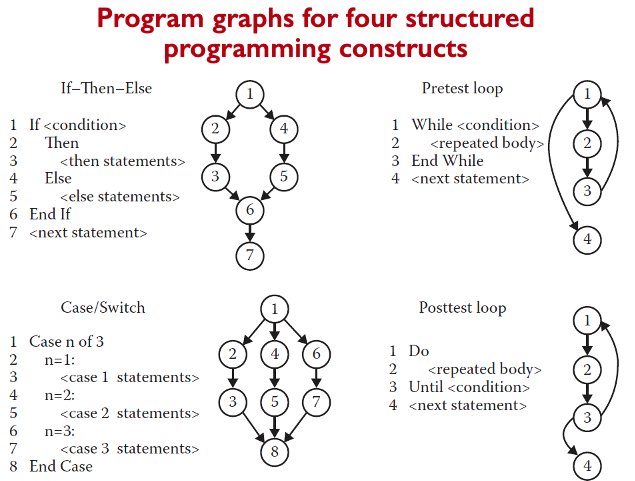

Program Graph

- Given a program written in an imperative programming language, its program graph is a directed graph in which nodes are statement fragments, and edges represent flow of control.

- A complete statement is also considered a statement fragment.

- Each circle is called a node, it corresponds to a statement fragment.

Control Flow Graphs (CFGs)

- A CFG models all executions of a method by describing control structures

- Nodes:

- Statements or sequences of statements (basic blocks)

- Edges:

- Transfers of control. an edge (s1, s2) indicates that s1 may be followed by s2 in an execution.

- Basic Block:

- A sequence of statements such that if the first statement is executed, all statements will be (no branches)

- Intermediate:

- Statements in a sequence of statements are not shown, as a simplification, as long as there is not more than one exiting edge and not more than one entering edge.

CFGs are sometimes annotated with extra information: branch predicates, defs, uses.

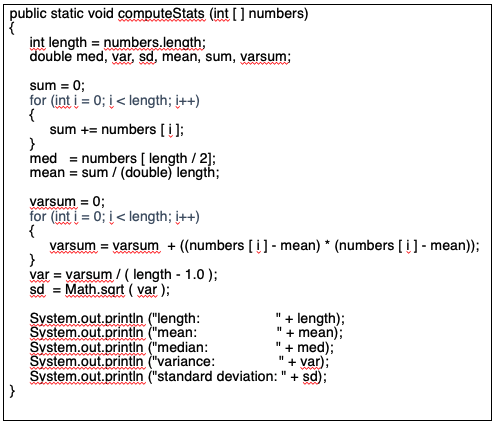

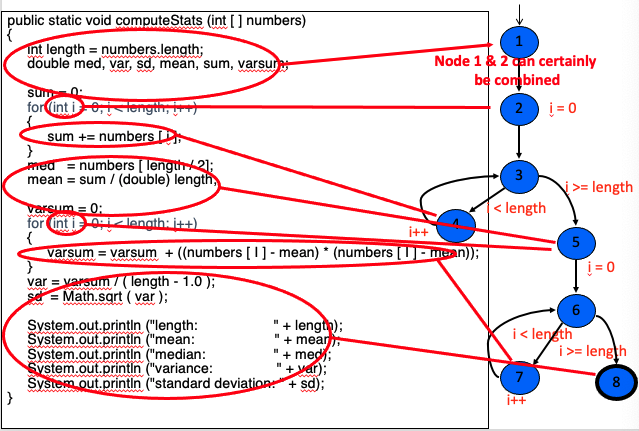

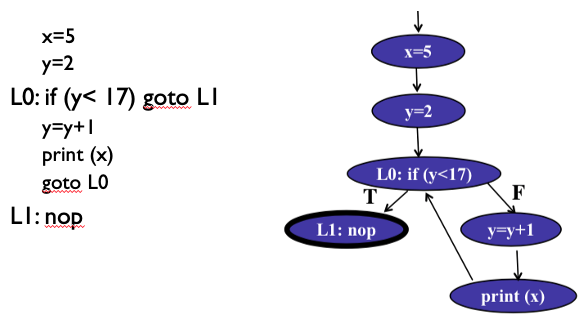

CFG Example:

I understand this example.

I understand this example.

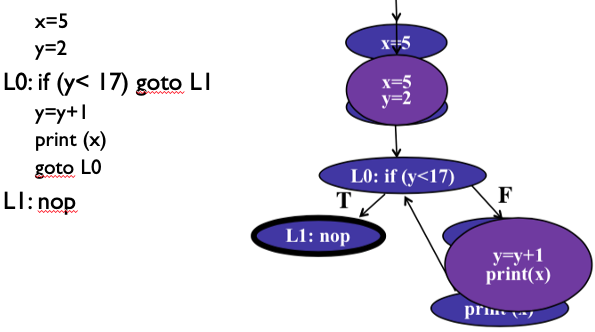

Basic Block

- We can simply a CFG by grouping together statements which always execute together (in sequential programs)

- A basic block has one entry point and one exit point.

CFG Example (continued with basic block):

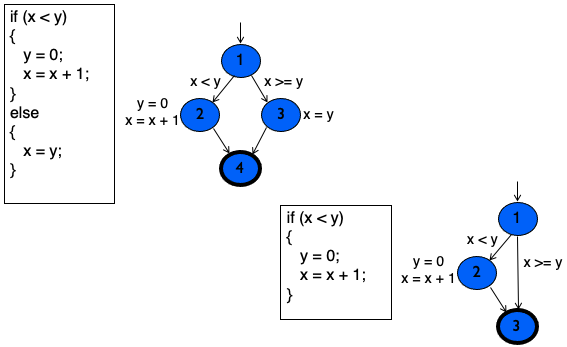

CFG: The if Statement

-

Notice, we label the edges to be the conditions, and next to the nodes, we write what it’s executed.

-

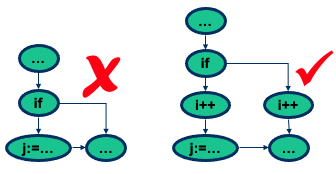

From source code to control flow graph - issue about branching

-

In a control flow graph, nodes corresponding to branching (if, while, …) should not contain any assignments

-

This paramount for the identification of data flow information

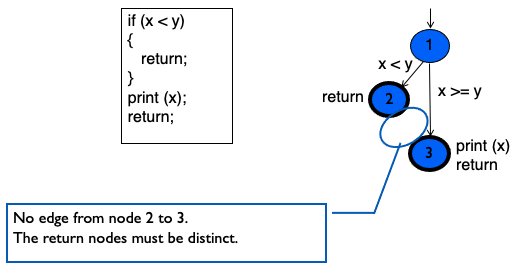

CFG: The if-Return Statement

Makes sense, when you return, you do not execute what it is outside of the if() condition if it’s true and went inside.

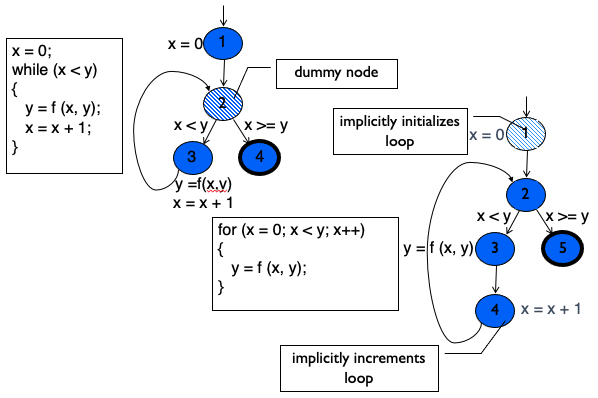

CFG: while and for Loops

Notice:

For the for loops, there is a node that implicitly initializes the loop and implicitly increments the loop.

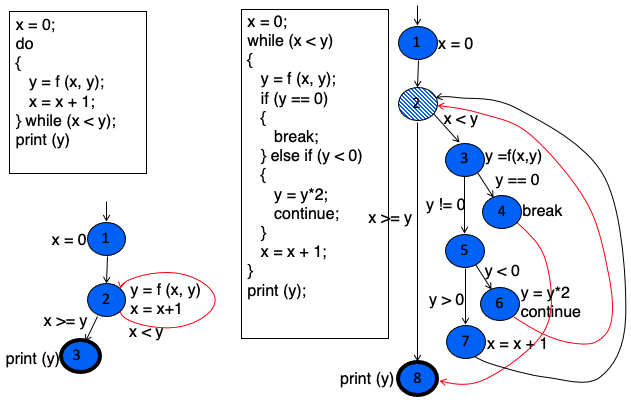

CFG: do Loop, break and continue

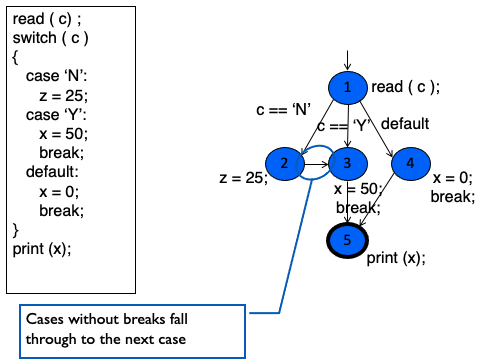

CFG: The case (switch) Structure

Notice

Cases without breaks fall through the next case.

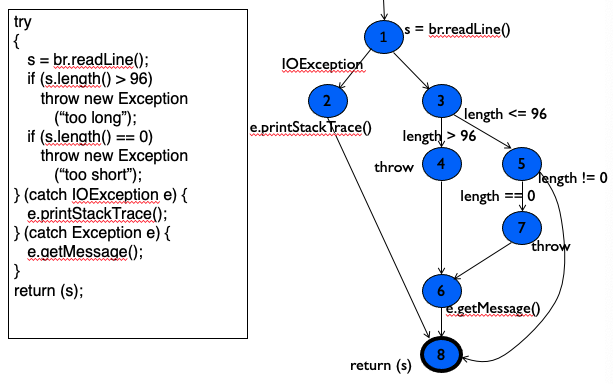

CFG: Exceptions (try-catch)

Why is there no line between nodes 1 and 3?

What does the arrow from node 1 to 2 mean? And when would it follow this path?

I don’t understand the flow of this diagram…to-understand

Example Control Flow Stats. Draw it out.

Nodes 1 and 2 could certainly be combined. We just separated them to emphasize two points:

- Initializations have to be included in the graph. They are also defs in data flow. In java, primitive types get default values, so even declarations have implicit definitions.

- The for loop control variable (i) is initialized before the test!

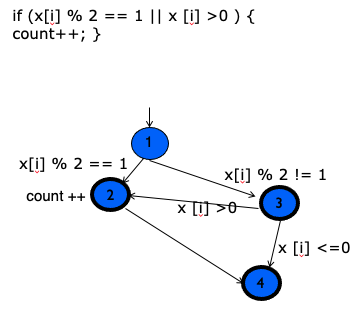

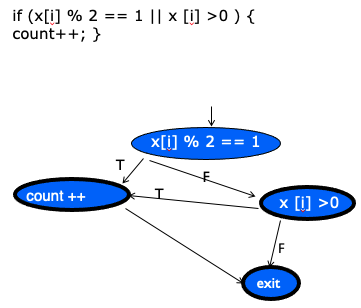

Short circuit

Oh I understand, there is a path that satisfies the first condition in the if statement (1→2). Then we have (1→3) that doesn’t satisfy the first statement, so we have to check the if it satisfies the second statement.

CFG can also be drawn as:

- What’s the purpose of this slide????to-understand

Code Coverage

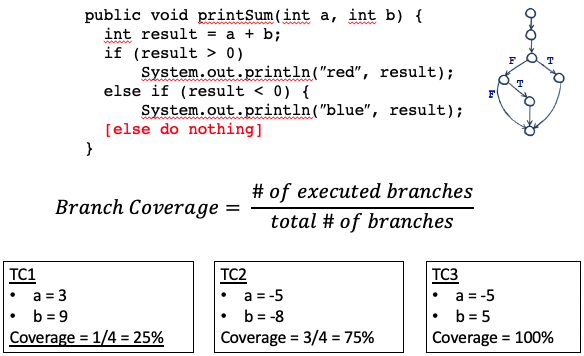

Code Coverage models

- Statement Coverage

- Segment (basic block) Coverage

- Branch Coverage

- Condition Coverage

- Condition/Decision Coverage

- Modified Condition/Decision Coverage

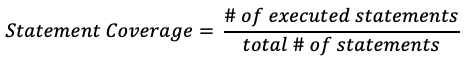

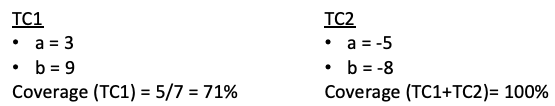

Statement Coverage:

- Achieved when all statements in a method have been executed at least once

- Faults cannot be discovered if the parts containing them are not executed

- Equivalent to covering all nodes in control flow graph (actual % of coverage would be different)

- Executing a statement is a weak guarantee of correctness, but easy to achieve

- In general, several inputs execute the same statements — important question in practice is how we can minimize test cases?

Statement Coverage Measure

public void printSum(int a, int b){

int result = a + b;

if(result > 0)

System.out.println("red", result);

else if( result < 0)

System.out.println("blue", result);

}

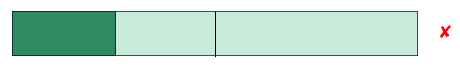

Initially for , , coverage is . After , , coverage is

Segment (basic block) Coverage

- Segment (basic block) coverage counts segments rather than statements

- May produce drastically different numbers

- Assume two segments and

- has one statement, has nine

- Exercising only one of the segments will give or statement coverage

- Segment coverage will be in both case

Statement coverage in practice:

- Statement coverage is most used in industry. Why???

- Typical coverage target is

- Why not aim for ?

- Since there can be dead code, as well sometimes not make sense to test on getter and setter methods

- Why not aim for ?

Statement coverage problems:

- Predicate may be tested for only one value (misses many bugs)

- Loop bodies may only be iterated once

- Statement coverage can be achieved without branch coverage. Important cases may be missed

Statement coverage does not call for testing simple if statements. A simple if statement has no else-clause. To attain full statement coverage requires testing with the controlling decision true, but not with a false outcome. No source code exists for the false outcome, so statement coverage cannot measure it.

If you only execute a simple if statement with the decision true, you are not testing the if statement itself. You could remove the if statement, leaving the body (that would otherwise execute conditionally), and your testing would give the same results.

Since simple if statements occur frequently, this shortcoming presents a serious risk. #to-understand

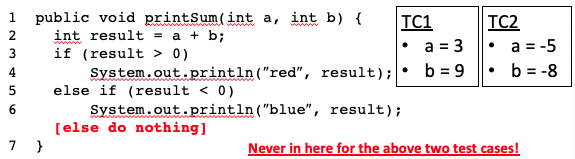

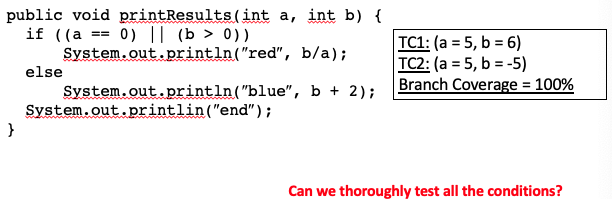

Branch Coverage

- Achieved when every branch from a node is executed at least once

- At least one true and one false evaluation for each predicate

- Can be achieved with paths in a control flow graph with 2-way branching nodes and no loops

- Even less if there are loops

The “+1” is for the “else” branch, when all the above are not taken

Branch Coverage Measure:

Branch coverage problems:

- Short-circuit evaluation means that many predicates might not be evaluated

- A compound predicate is treated as a single statement. If clauses, combinations, but only are tested

- Only a subset for all entry-exit paths is tested

???

???

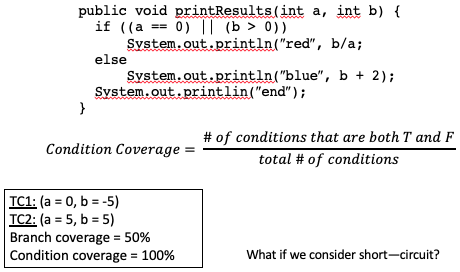

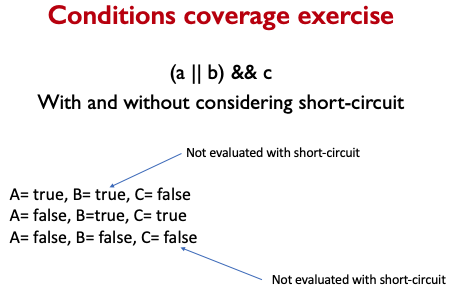

Condition Coverage

- Condition coverage reports the true or false outcome of each condition.

- Condition coverage measures the conditions independently of each other.

Condition Coverage Measure:

In this case, A and B should be evaluated at least once against “true” and “false”.

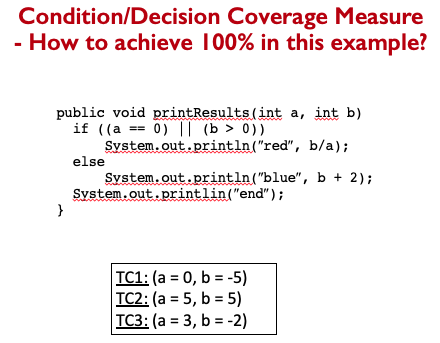

Condition/Decision Coverage

- Sometimes also called branch and condition coverage

- It is computed by considering both branch and condition coverage measures

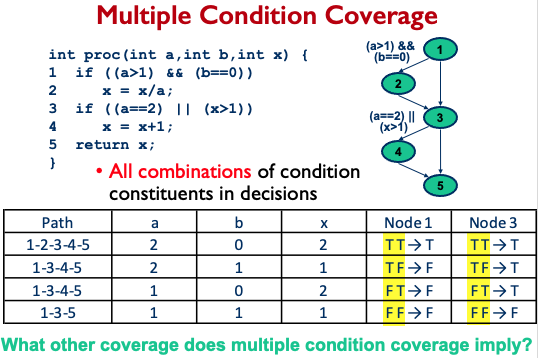

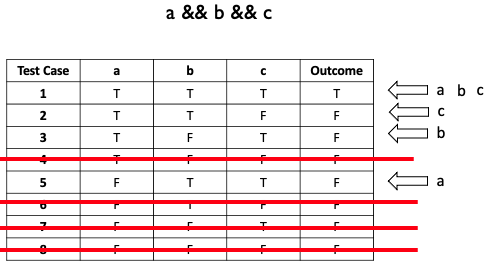

Modified Condition/Decision Coverage (MC/DC)

- Key Idea: test important combinations of conditions and limiting testing costs

- Extend branch and decision coverage with the requirement that each condition should affect the decision outcome independently

- In other words, each condition should be evaluated one time to “true” and one time to “false”, and this with affecting the decision’s outcome

- Often required for the mission-critical systems #to-understand

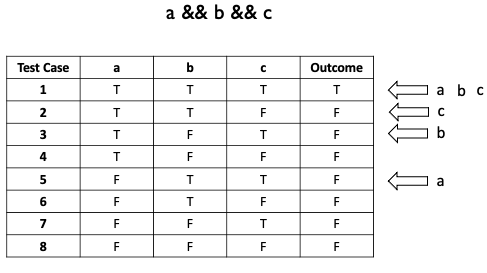

MC/DC Example:

For value of independently, test case 1 and 5 impact the results

For value of independently, test case 1 and 3 impact the results

For value of independently, test case 1 and 2 impact the results

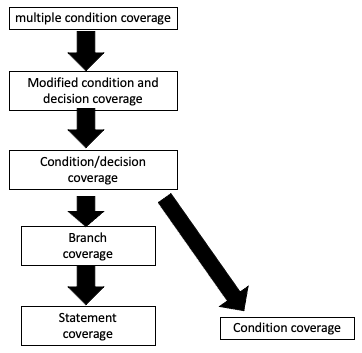

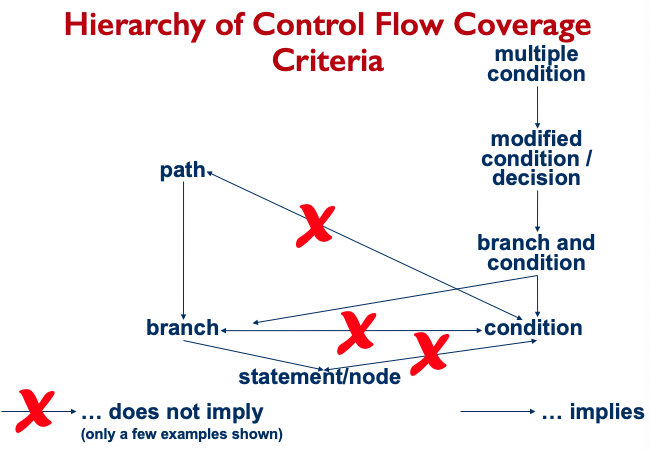

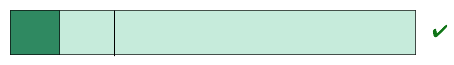

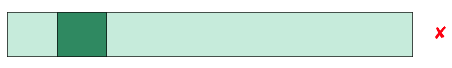

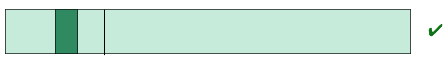

Test Criteria Subsumption

The way to read this is “branch coverage implies statement coverage”.

The way to read this is “branch coverage implies statement coverage”.

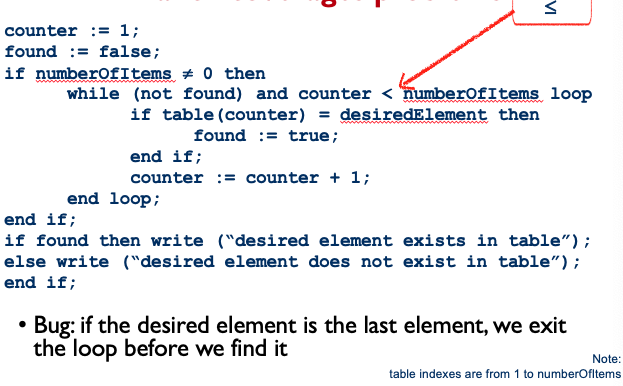

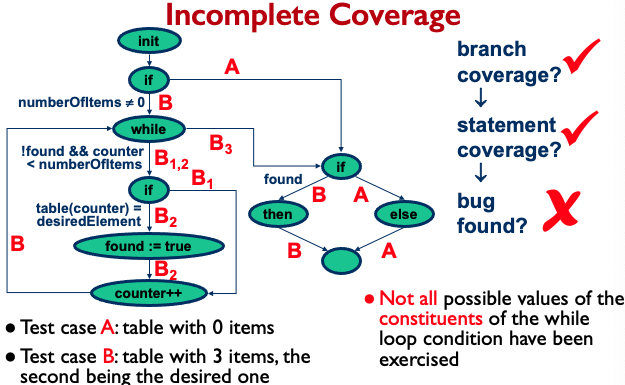

Dealing with Loops

- Loops are highly fault-prone, so they need to be tested carefully

- Simple view: Every loop involves a decision to traverse the loop or not

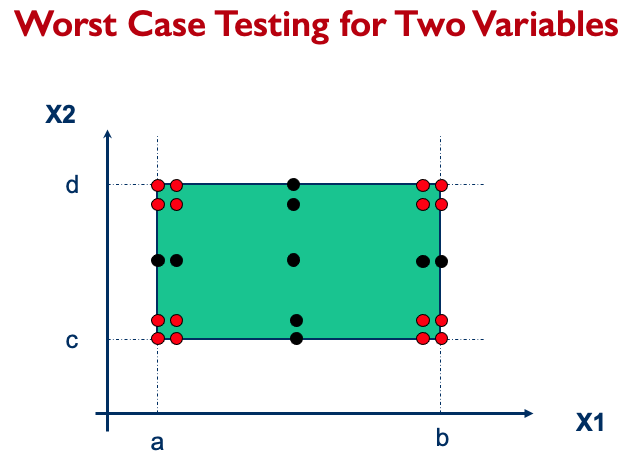

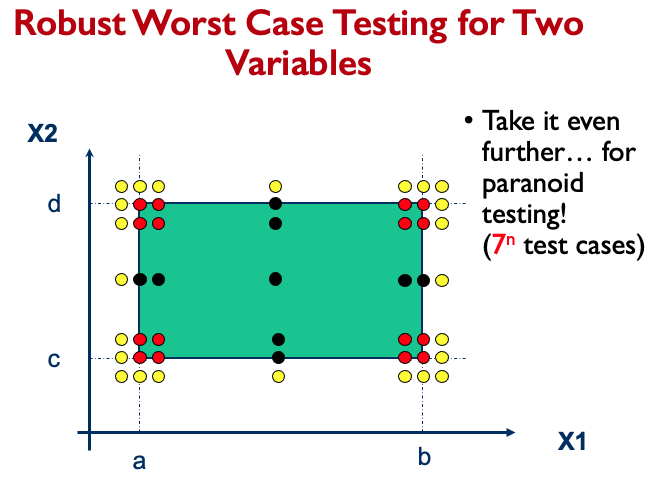

- A bit better: Boundary value analysis on the index variable

- Nested loops have to be tested separately starting with the innermost

Loop Coverageto-understand

- Minimal coverage should, when possible, execute the loop body:

- Zero times (do not enter the loop)

- Once (do not repeat it)

- Twice or more times (repeat it once or more times)

- Simple loop more extensive coverage, set loop control variable to:

- Minimum - I

- Minimum

- Minimum + I

- Typical

- Maximum - I

- Maximum

- Maximum + I Boundary value analysis for robustness testing.

This will come back in later topics 🙂

- Nested loop

- Start at innermost loop

- Set all the outer loops to their minimum values

- Set all other loops to their typical values

- Test cases for single loop (e.g., minimum, minimum + I, typical, maximum - I, maximum) for the innermost loop

- Move up in nested loop level

- If outermost loop done, do cases for single loop for all loops in the nest simultaneously (i.e., from minimum - I to maximum + I) Base choice for category partitioning, combining several boundary values (keep all but one variable at their nominal values)

- Start at innermost loop

DataFlow

From last class:

Summary

- Statement coverage

- Covering every node in CFG

- Branch coverage

- For every branching node in CFG, at least one true and one false

- Condition coverage

- For every condition in all branching node in CFG, at least one true and one false for each condition

- Condition/decision

- Cover both all branch and all condition

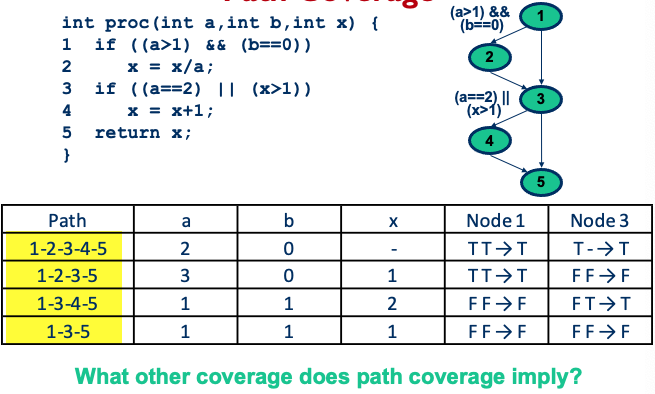

Path Coverage

Path Coverage

- Test case for each possible path

- In practice, however, the number of paths is too large, if not infinite

- Some paths are infeasible

- It is key to determine “critical paths”

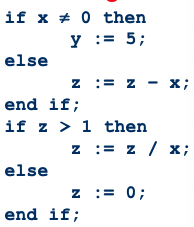

- •Test suite 1 = {<x=0, z=1>, <x=1, z=3>} (executes all edges but does not show risk of division by 0)

- Test suite 2 = {<x=0, z=3>, <x=1, z=1>} (would find the problem by exercising the remaining possible flows of control through the program)

- Test suite I Test suite 2 all paths covered

Exercise: Path Coverage Show that path coverage does not imply condition coverage by changing the if statement below:

if (a > 0)

x = 1;

else

x = 2;

end if;Path coverage: condition coverage

Now show that condition coverage does not imply path coverage by changing the if statement.

Data flow and coverage

Data flow analysis

- CFG paths that are significant for the data flow in the program

- Focuses on the assignment of values to variables and their uses, i.e., where data is defined and used

- Analyze occurrences of variables

- Definition occurrence: value of variable is referred

- Use occurrence: variable used to decide whether predicate is true

- Computational use: compute a value for defining other variables or output value

Definitions and Uses:

- A program written in a programming language such as C and Java contains variables

- Variables are defined by assigning values to them and are used in expressions

- Statement defines variable and uses variables and

- Statement

scanf("%d %d", &x, &y)defines variablesxandy - Statement

printf("Output: %d \n", x + y)uses variablesxandy

Pointers

- Consider the following sequence of statements that use pointers:

z = &x;

y = z + 1;

*z = 25;

y = *z + 1;- 1st statement: defines a pointer variable

zbut does not usex - 2nd statement: defines

yand usesz - 3rd statement: defines

xaccessed through the pointer variablez - 4th statement: defines

yand usesxaccessed through the pointer variablez

Arrays

- Arrays are also tricky – consider the following declaration and two statements in C:

int A[10];

A[i] = x + y;- 2nd statement: defines

Aand usesi,x, andy - Alternative view for 2nd statement: defines

A[i], not the entire arrayA(the choice of whether to consider the entire arrayAas defines or the specific element depends upon how stringent the requirement is for coverage analysis) - The same kind of reasoning also applies to fields of a class

First statement, whether A[10] is a def, not a clear answer ⇒ depending on language and Compiler.

c-use

Uses of a variable that occur within an expression as part of an assignment statement, in an output statement, as a parameter within a function call, and in subscript expressions, are classified as c-use, where the “c” in c-use stands for computational.

How many c-uses of

xcan you find in the following statements?

z = x+1; A[x+1] = B[2]; foo(x*x); output(x)

In general, a definition of

A[E]is interpreted as a c-use of variables inEfollowed by a def ofAIn general, a reference to

A[E]is interpreted as a use of variables inEfollowed by a use ofAThere are one / one / two / one c-uses of x.

p-use

The occurrence of a variable in an expression used as a conditional in a branch statement such as an

ifand awhile, is considered as a p-use, where the “p” in p-use stands for predicate.

How many p-uses of

zandxcan you find in the following statements?

if(z>0) (output(x)}; while(z>x){...}There are 2 p-uses of z and 1 p-use of x (and another c-use of x). Since

output(x)is c-use ofx.

p-use: Possible Confusion

- Consider the statement:

if(A[x+1]>0) {output(x)};- The use of

Ais clearly a p-use Is the use ofxin the subscript, a c-use or a p-use???? The use of x is definitely a c-use because it is used in the computation of the index. However, it is also a p-use, because it affects which array element is used in the condition. C-use⇒p-use

- The use of

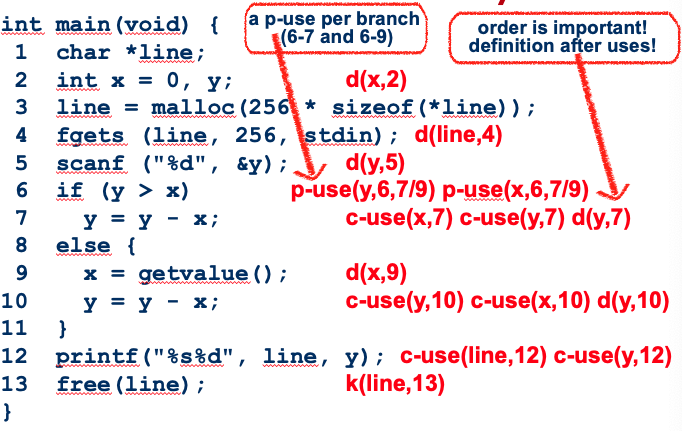

Basics of Data Flow Analysis

- Variable definition:

- d(v, n): value is assigned to at node (e.g., LHS of assignment, input statement)

- Variable use, i.e., variable reference:

- c-use(v ,n): variable v used in a computation at node n (e.g., RHS of assignment, argument of procedure call, output statement)

- p-use(v, m, n) variable v used in predicate from node m to n (e.g., as part of an expression used for a decision)

- Variable kill:

- k(v, n): variable v deallocated at node n

I understand what it’s doing, but it might be easier to draw it out with with circles with numbers.

I understand what it’s doing, but it might be easier to draw it out with with circles with numbers.

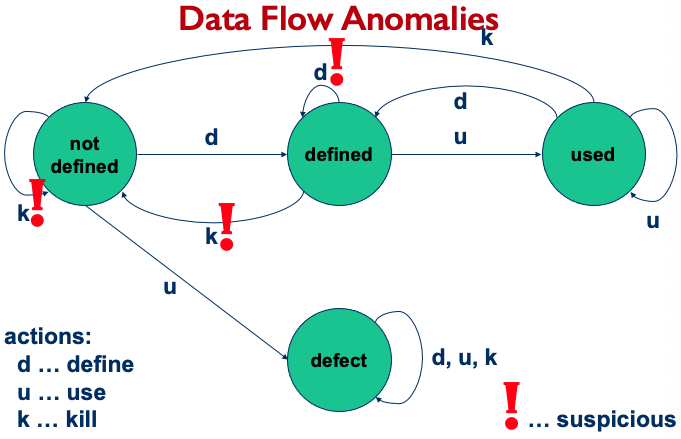

Data Flow Anomalies:

Data Flow Actions Checklist

- For each successive pair of actions

- dd suspicious

- dk probably defect

- du normal case

- kd okay

- kk probably defect (undefined behaviour)

- ku a defect

- ud okay

- uk okay

- uu okay

- First occurrence of action on a variable:

- k suspicious

- d okay

- u suspicious (may be global)

- Last occurrence of action on a variable:

- k okay

- d suspicious (defined but never used after)

- u okay (but maybe deallocation forgotten)

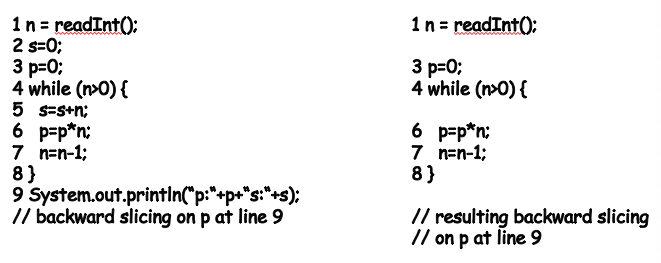

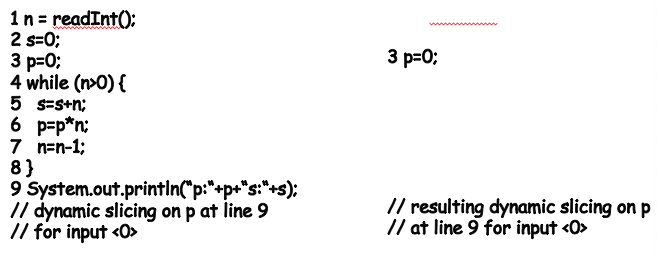

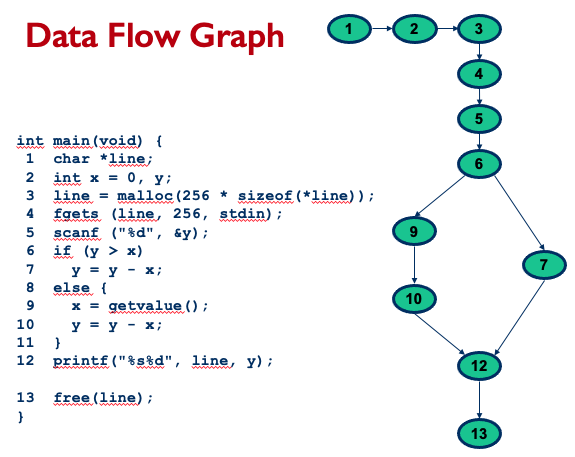

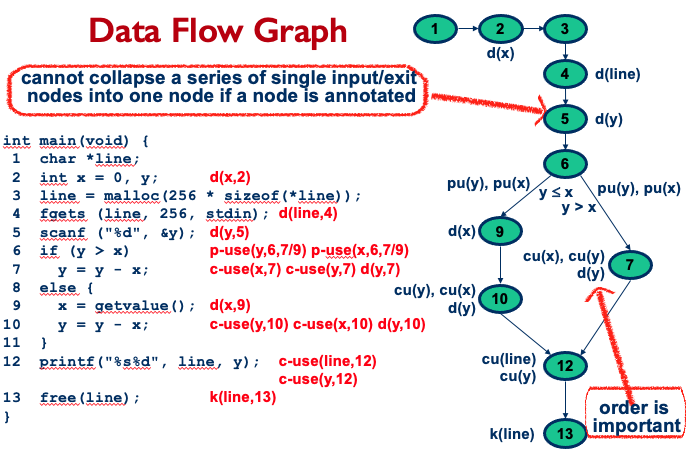

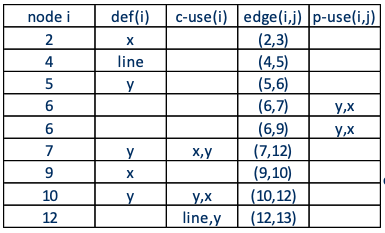

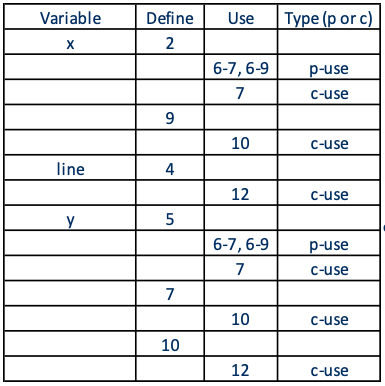

Data Flow Graph

Data Flow Graph

A data flow graph (DFG) of a program, also known as def-use graph, captures the flow of definitions (also known as defs) and use across basic blocks in a program.

- It is similar to a control flow graph of a program in that the nodes, edges, and all paths in the control flow graph are preserved in the data flow graph

- Annotate each node with def and c-use as needed, and each edge with p-use as needed

- Label each edge with condition which when true causes the edge to be taken

Note

- Cannot collapse a series of single input/exit nodes into one node if a node is annotated

- Order is important if you have several p(), d(), c() in a node.

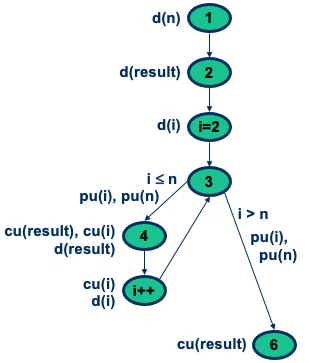

Exercise: DFG for Factorial

1 public int factorial(int n){

2 int i, result = 1;

3 for (i=2; i£n; i++) {

4 result = result * i;

5 }

6 return result;

7 }Answer:

- Attempt it yourself as practice for exam!

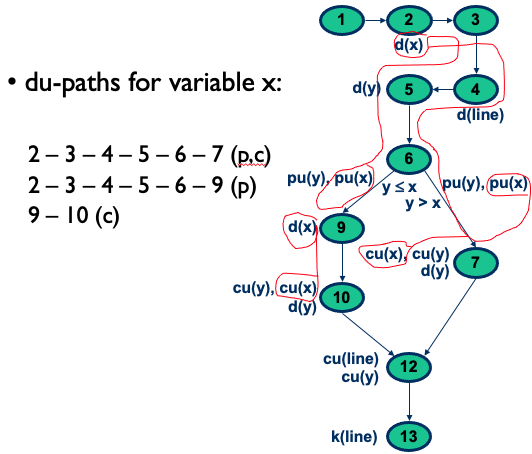

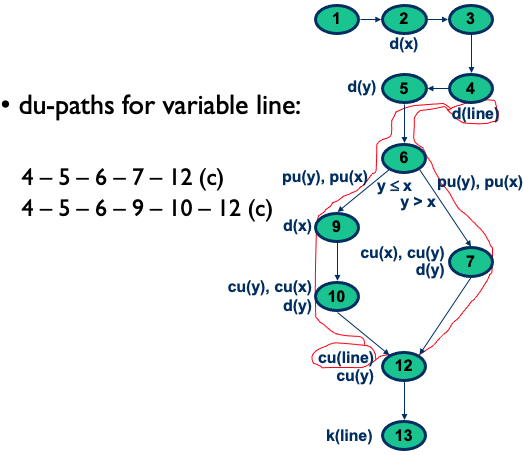

Data Flow Graph: Paths and Pairs

- Complete path: initial node is start node, final node is exit node

- Simple path: all nodes except possibly first and last are distinct

- Loop free path: all nodes are distinct (cycle free?)

- def-clear path with respect to v: any path starting from a node at which variable v is defined and ending at a node at which v is used, without redefining v anywhere else along the path

- du-pair with respect to v: (d, u)

- d … node where v is defined

- u … node where v is used

- def-clear path with respect to v from d to u

- Also referred as “reach”: def to v at d reaches the use at u.

- Definition-use path (du-path) with respect to v: a path such that and either one of the following two cases:

- c-use of v at node , and is a def-clear simple path with respect to v (i.e., at the most single loop traversal)

- p-use of v on edge to and is a def-clear loop-free path (i.e., cycle free)

Data Flow Coverage:

- all-definitions (all-defs)

- all-uses

- all-p-uses/some-c-uses

- all-c-uses/some-p-uses

- all-du-paths

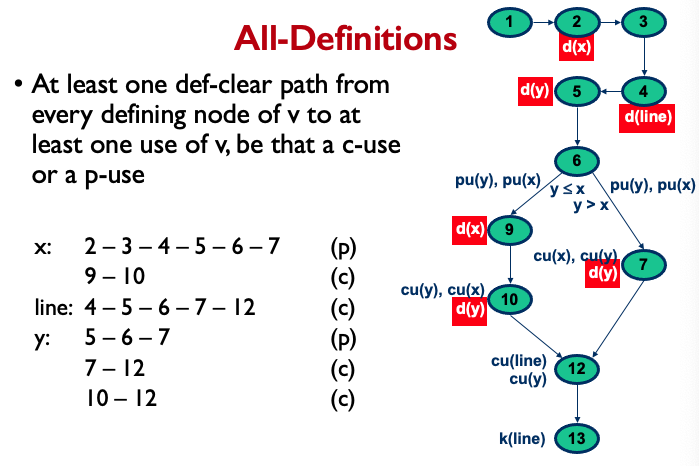

All Definitions:

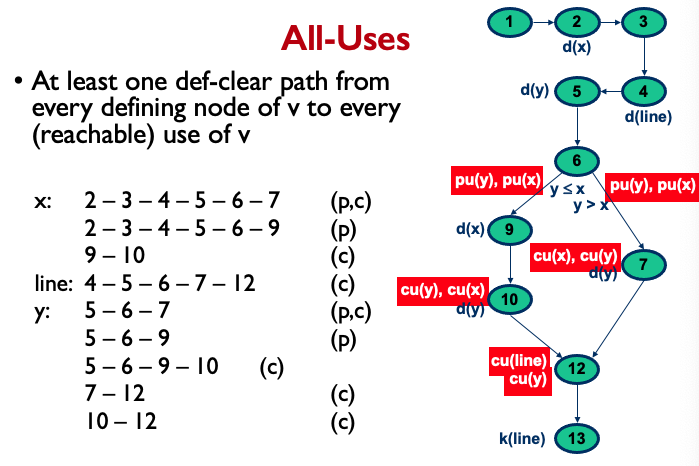

All-Uses:

- What does reachable use of v mean? Where it simply uses it?

- Why is there not a line: 4-5-6-9-10-12?to-understand

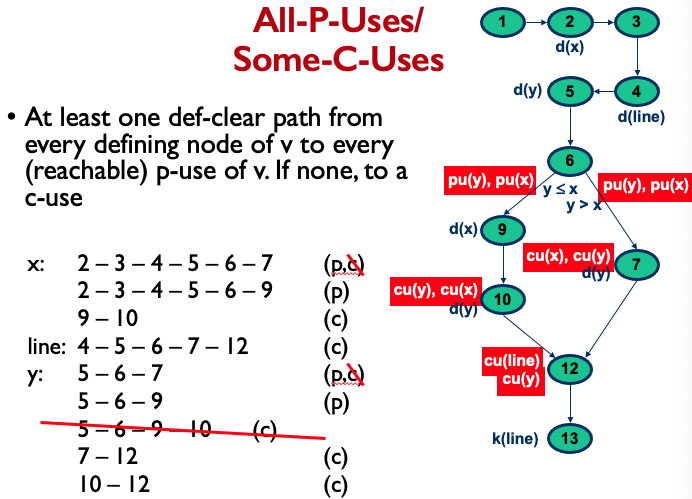

All-P-Uses/Some-C-Uses:

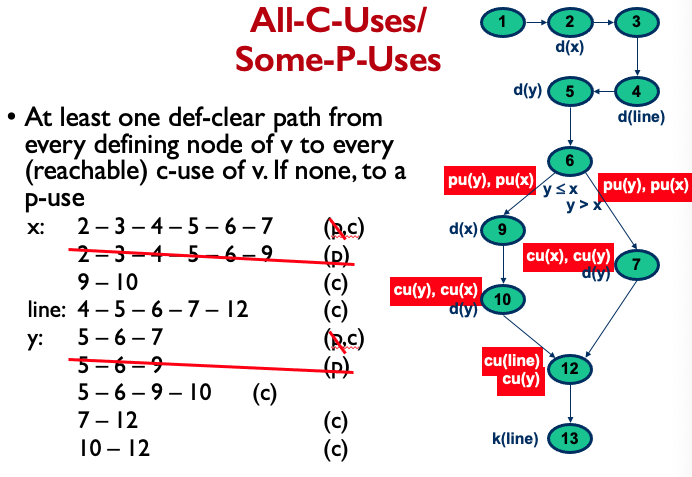

All-C-Uses/Some-P-Uses:

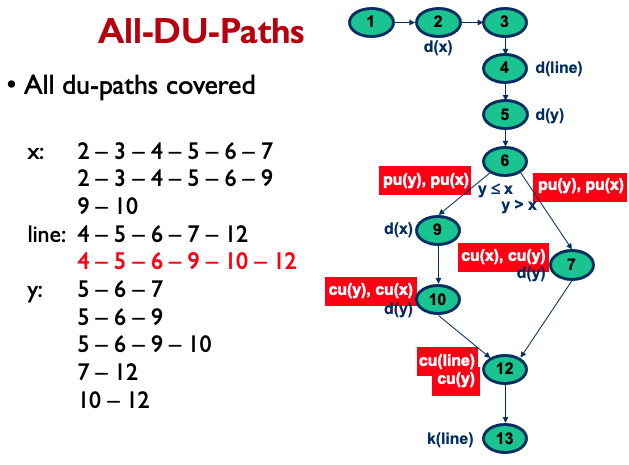

All-DU-Paths:

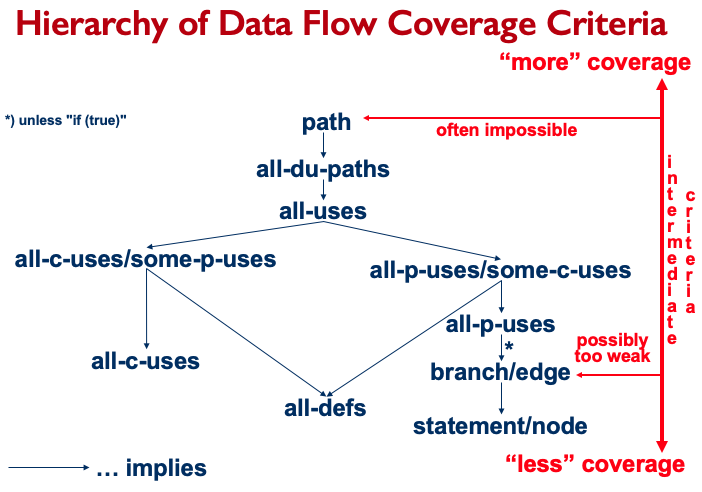

Hierarchy of Data Flow Coverage Criteria:

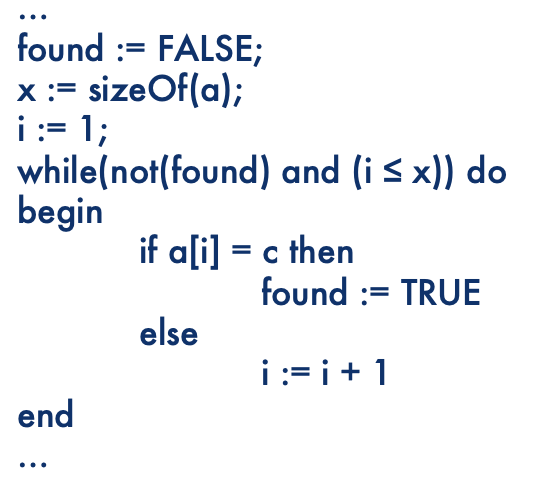

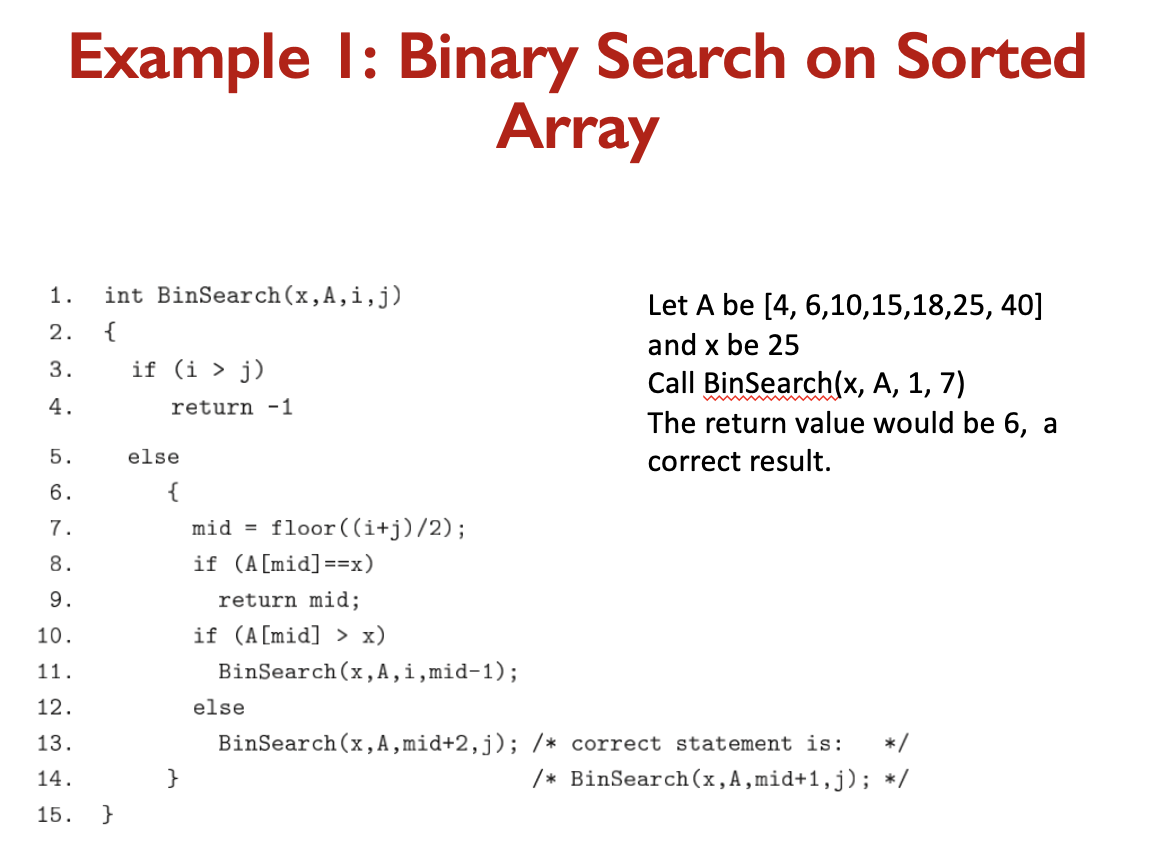

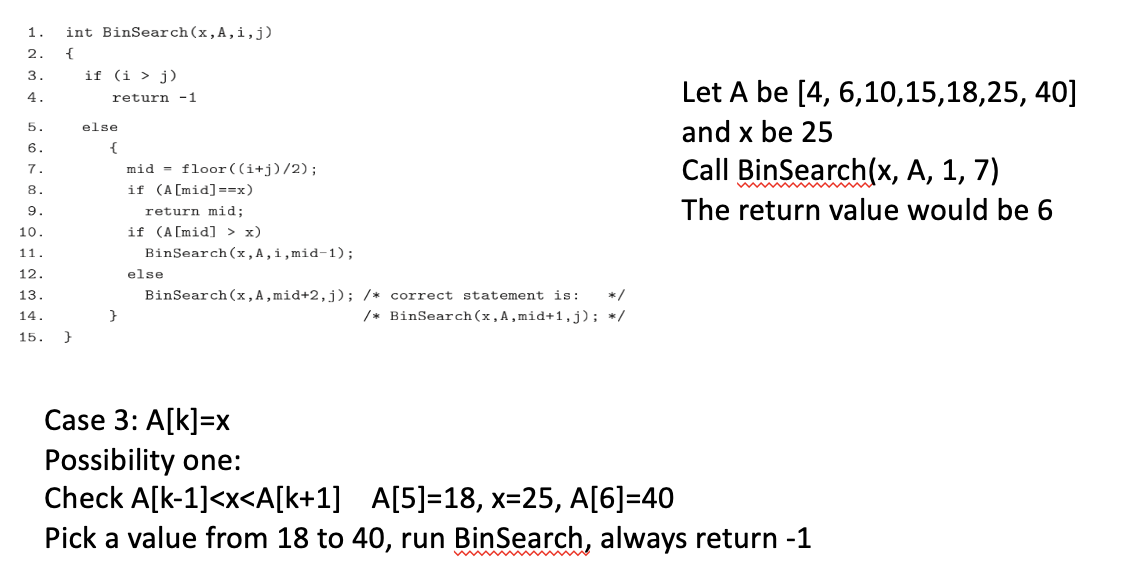

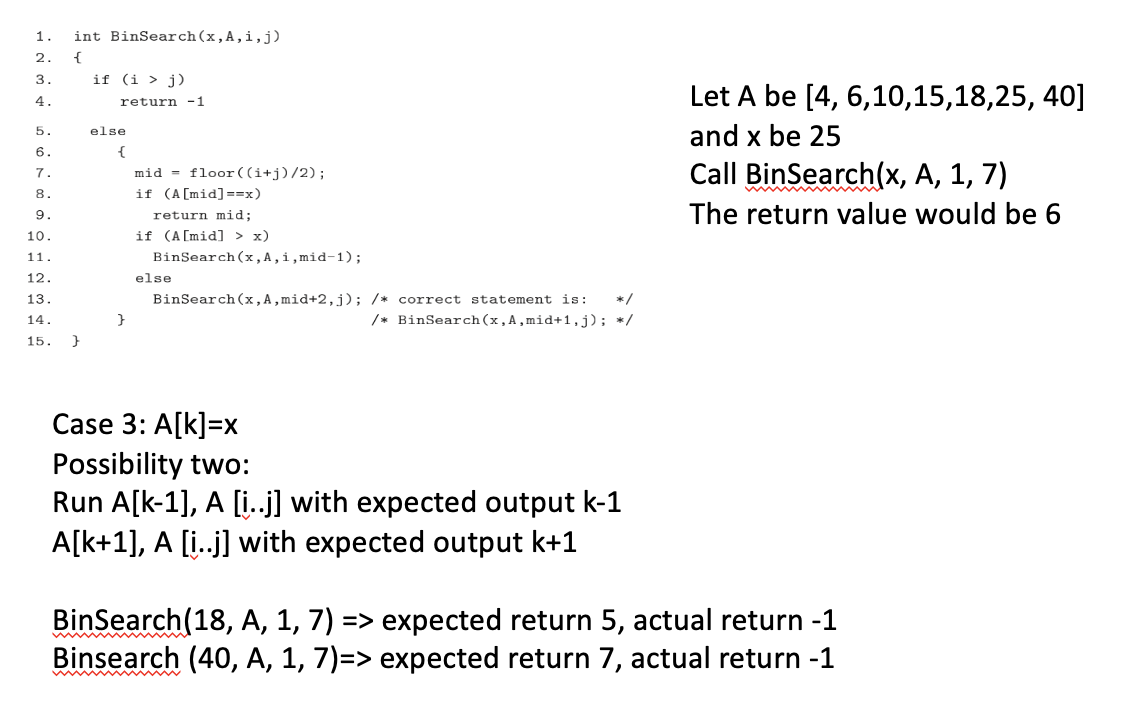

Exercise: Search

Look at the exercise on slide 49 of Week2 dataflow lecture.

Week 3

Creating Tests

Creating test cases:

- In order to increase the coverage of a test suite, one needs to generate test cases that exercise certain statements or follow a specific path

- Not alway easy

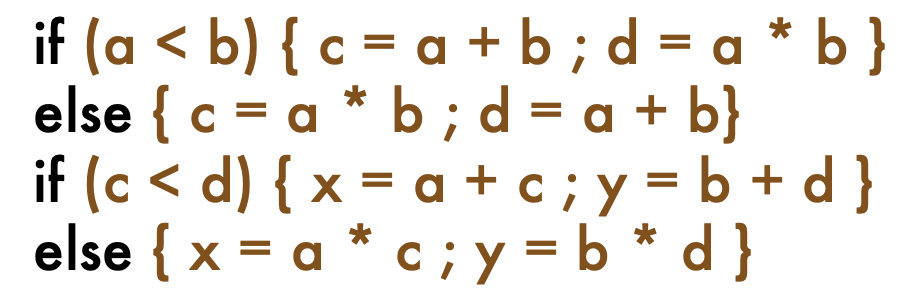

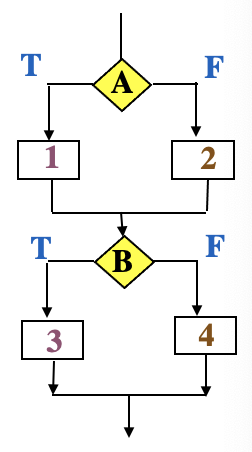

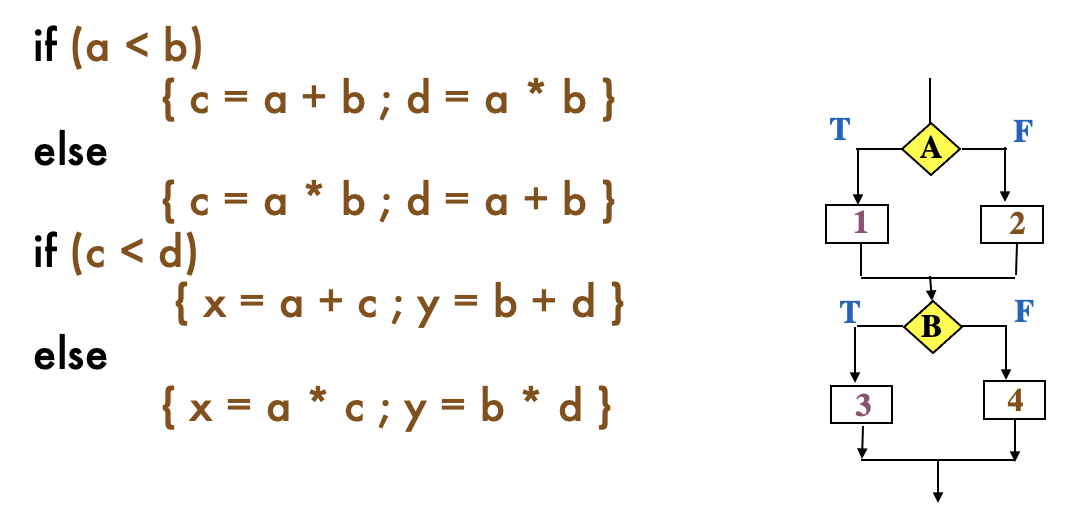

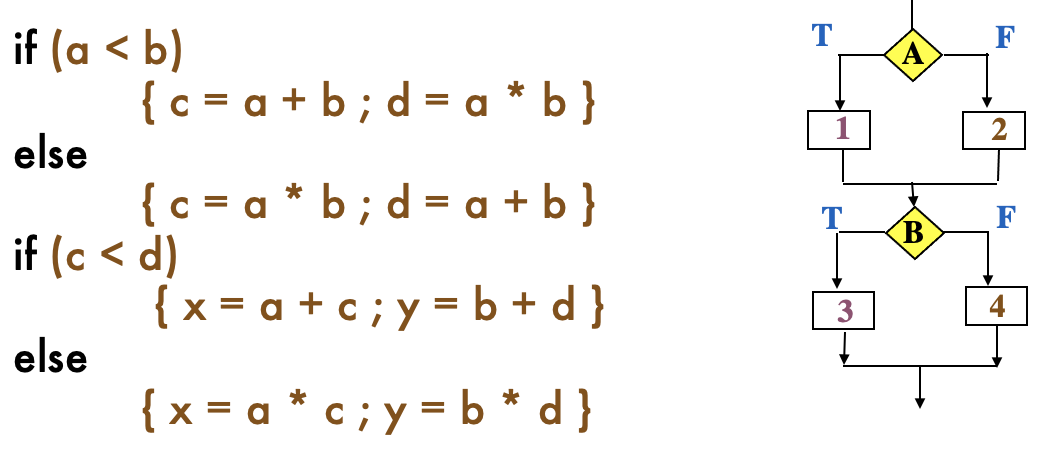

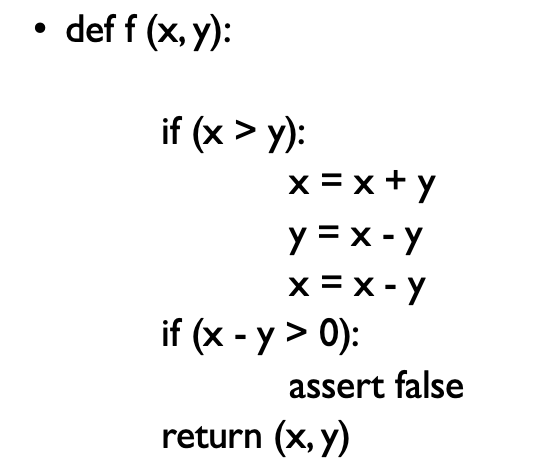

CFG question

What is the control flow graph of the following?

Answer

- The key question for creating a test for a path is:

- How to make the path execute, if possible.

- Generate input data that satisfies all the conditions on the path

- How to make the path execute, if possible.

- The key items you need to generate a test case for a path:

- Input vector

- Predicate

- Path predicate

- Predicate interpretation

- Path predicate expression

- Create test input from path predicate expression

Input Vector

A collection of all data entities read by the routine whose values must be fixed prior to entering the routine.

The members of an input vector are:

- Input arguments and constants

- Files

- Network connections

- Timers

Predicate

A logical function evaluated at a decision point.

In the following example, each of and are predicates:

Pat Predicate

A path predicate is the set of predicates associated with a path.

Example: In the following and is a path predicate for path 4.

Predicate Interpretation

- A path predicate may contain local variables

- Local variables play no role in selecting inputs that force a path to execute

- Local variables cannot be selected independently of the input variables

- Local variables are eliminated with symbolic execution

Understanding the meaning and implications of each predicate is crucial for generating appropriate test inputs.

- A Symbolic Execution is

- Symbolically substituting operations along a path in order to express the predicate solely in terms of the input vector and a constant vector.

- A predicate may have different interpretations depending on how control reaches the predicate

Symbolic Execution

Key idea:

- Evaluate the program on symbolic input values

- Use an automated theorem to check whether there are corresponding concrete input values that make the program fail.

Symbolic execution is a technique used in software testing and verification to evaluate a program’s behaviour on symbolic input values rather than concrete values. The idea is to substitute symbolic values for variables and execute the program symbolically along different paths. This allows you to express the path predicate solely in terms of the input vector and a constant vector, effectively eliminating the influence of local variables.

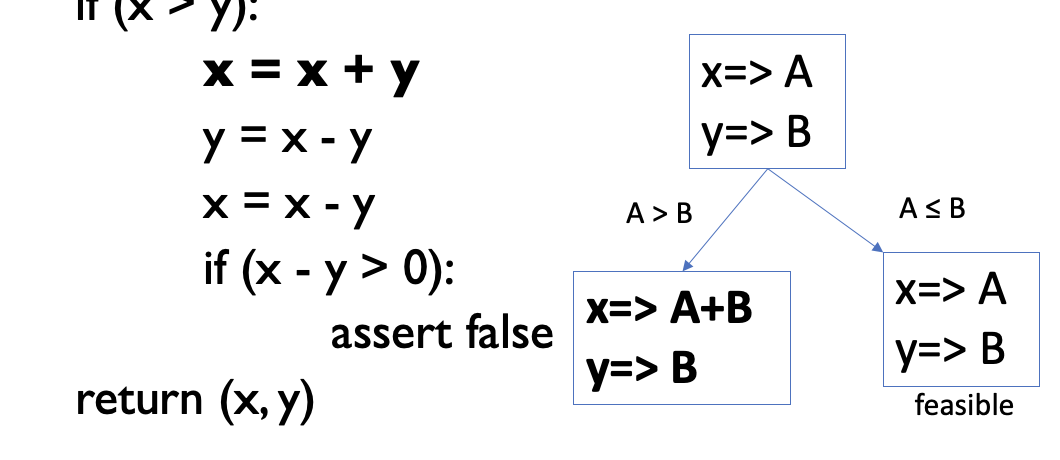

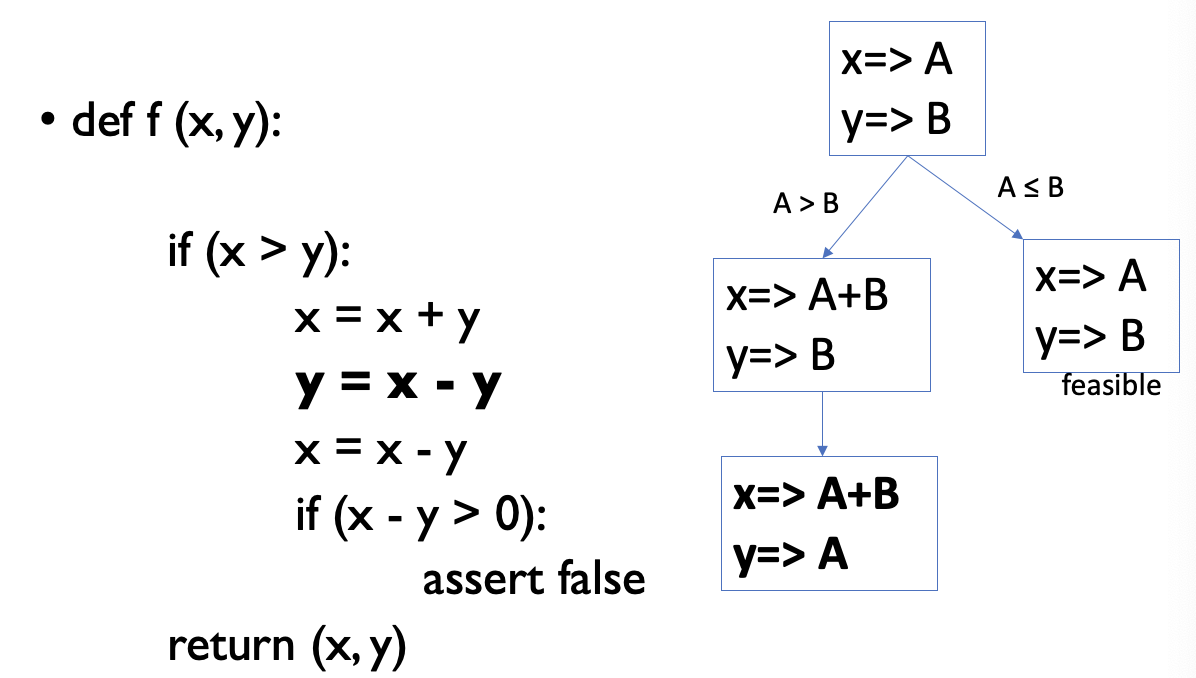

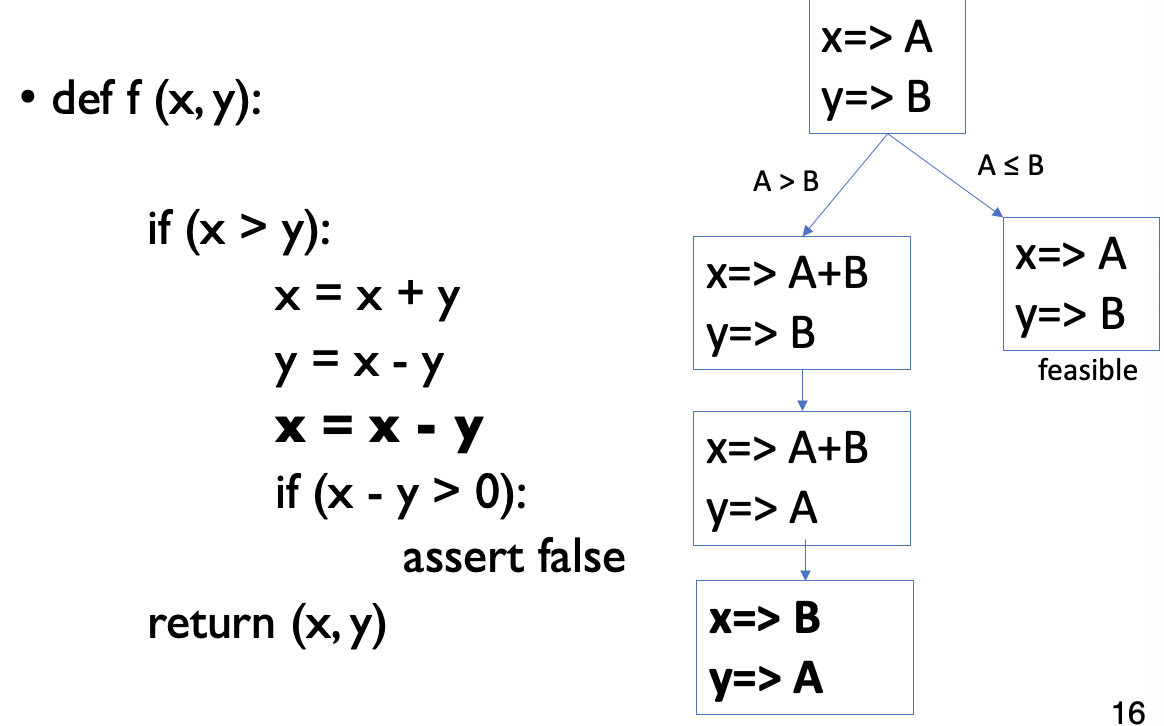

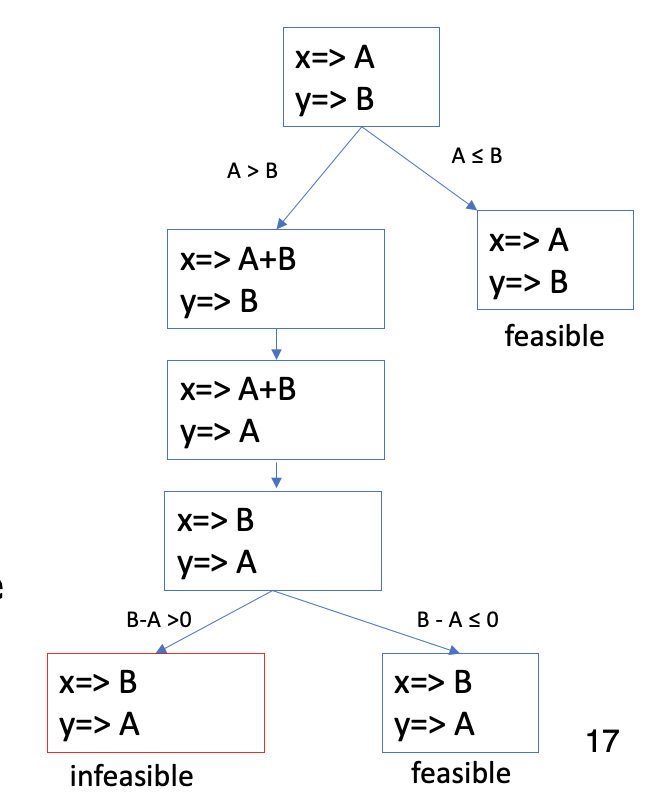

Example: Symbolic execution

- Execute the program on symbolic values

- Symbolic state maps variables to symbolic values

- and

- Path condition is a logical formula over the symbolic inputs that encodes all branch decisions taken so far.

- All paths in the program forms its execution tree, in which some paths are feasible and some are infeasible

- why is it y ⇒ A ?

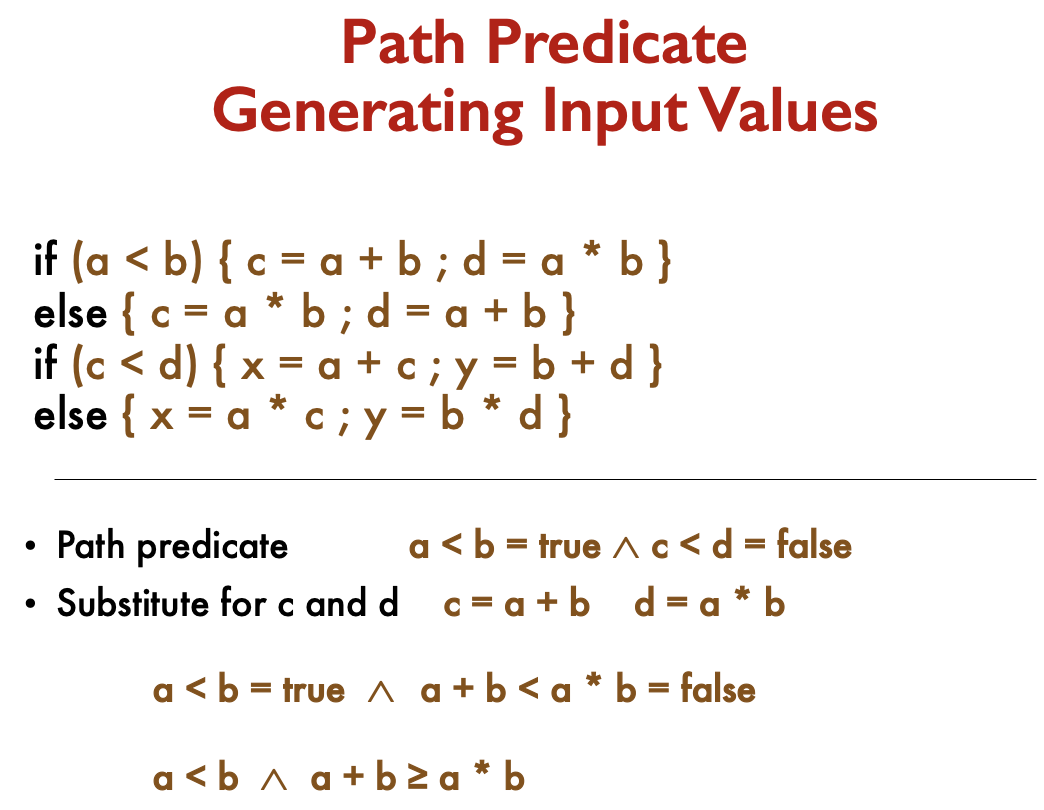

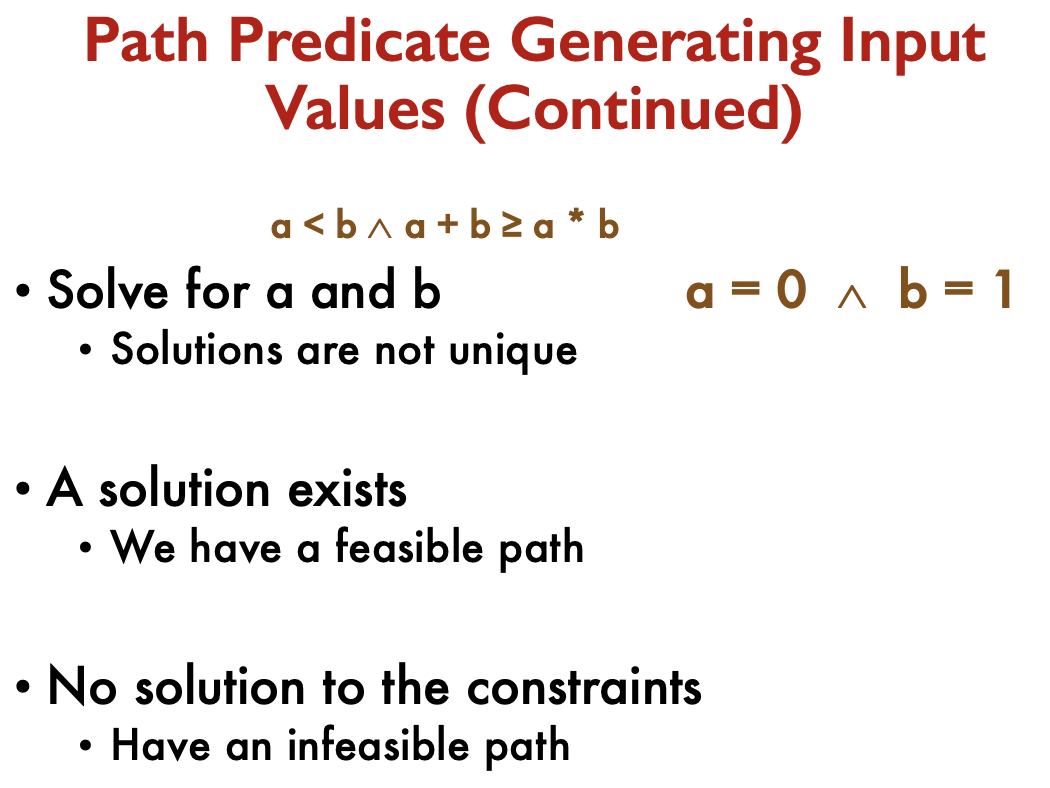

Path Predicate Expression

- An interpreted path predicate is called a path predicate expression.

- A path predicate expression has the following attributes

- No local variables

- It is a set of constraints in terms of the input vector, and, maybe, constants

- By solving the constraints, inputs force each path can be generated

- If a path predicate expression has no solution, the path is infeasible

- We have feasible path, since a solution exists

- Can have infeasible paths, if there is no solution to the constraints

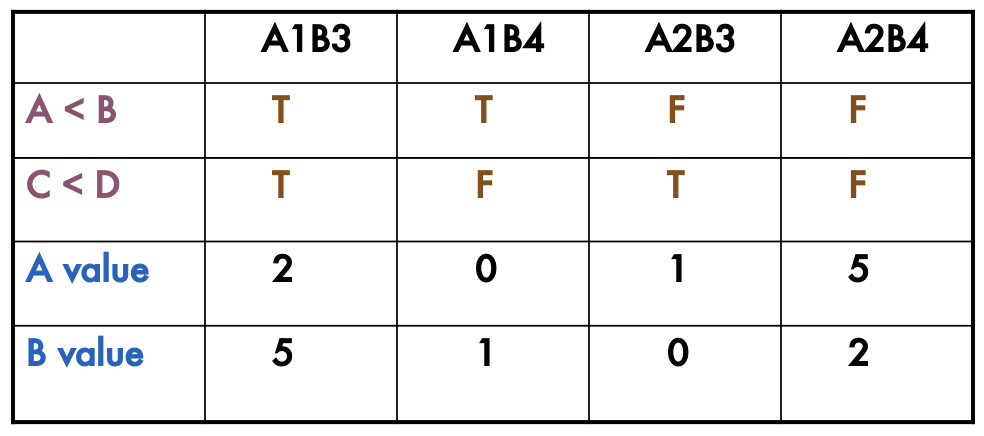

Organizing path predicates:

- We can organize the set of path predicates using a decision table

- Paths A1B3 and A2B4 give statement coverage or Paths A1B4 and A2B3 give statement coverage

- Why???

Selecting Paths:

- A program unit may contain a large number of paths.

- Path selection becomes a problem

- Some selected paths may be infeasible

- What strategy would you use to select paths?

- Select as many short paths as possible

- Tradeoffs?

- Choose longer paths

- Tradeoffs?

- Select as many short paths as possible

- What about infeasible paths?

- What would you do about them?

- Make an effort to write a program text with fewer or no infeasible paths.

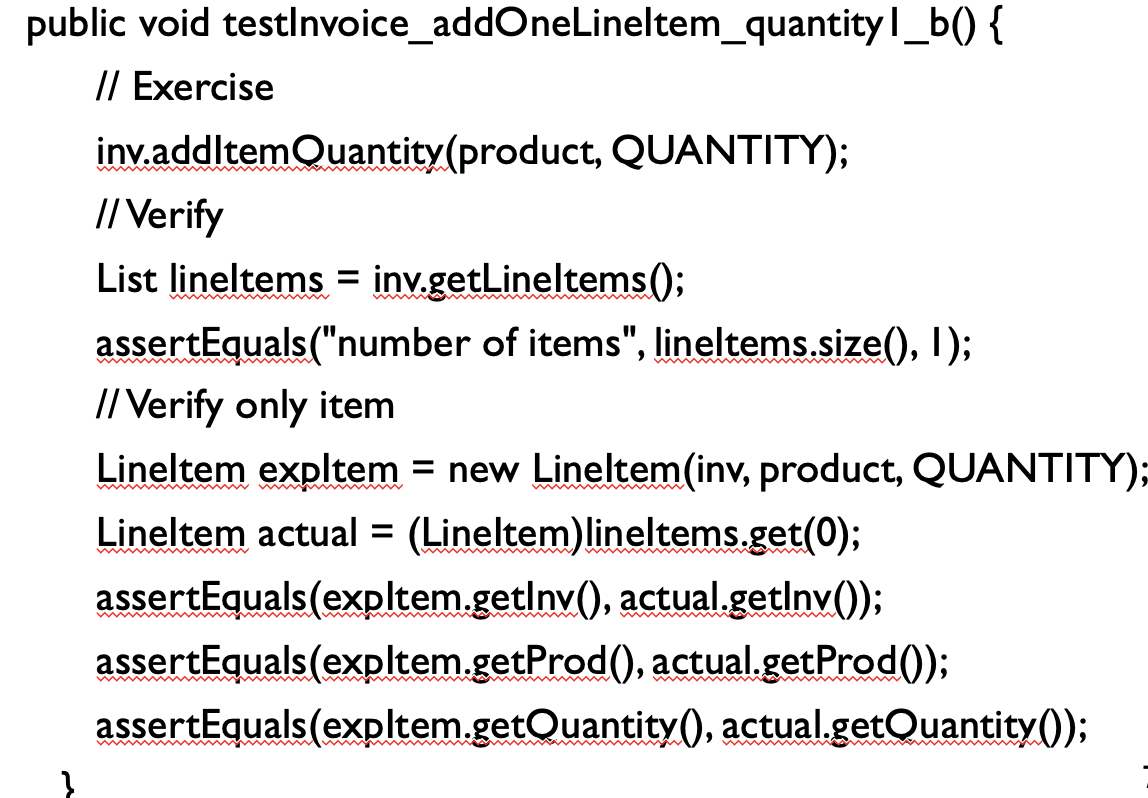

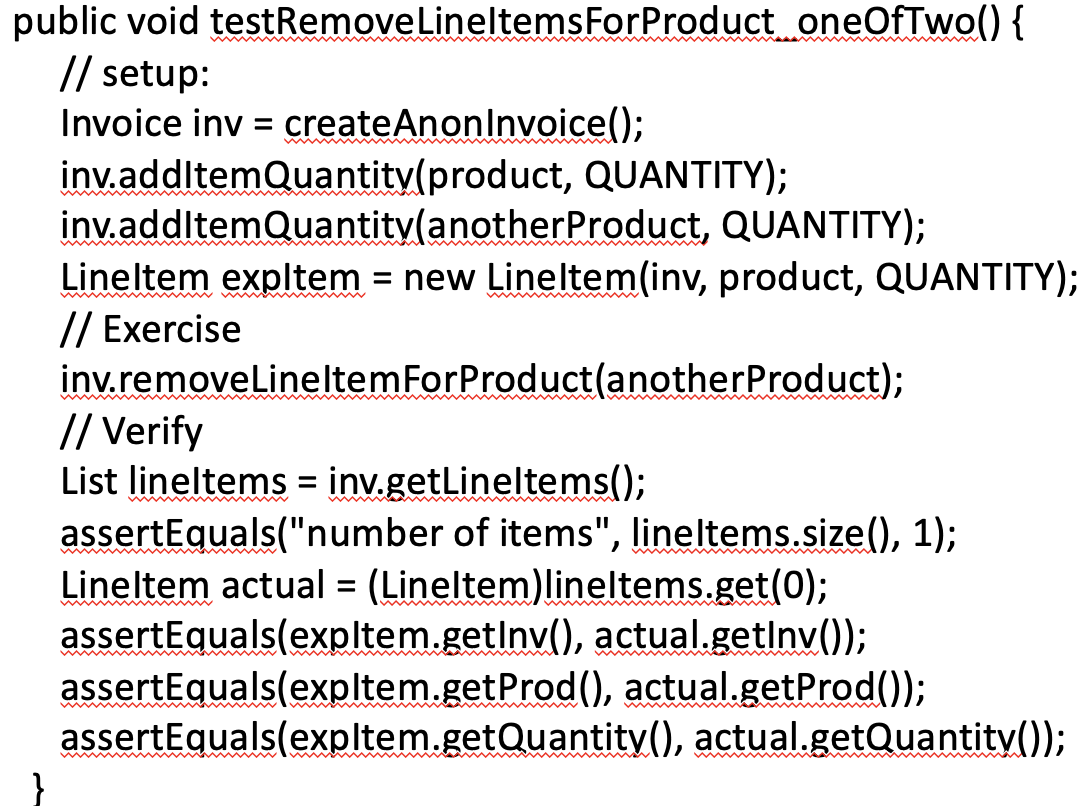

Principles of maintainable test code

Test should be fast

- The faster we get feedback from the test code, the better. Slower test suites force us to run the tests less often, making them less effective.

- If you are facing a slow test, consider the following:

- Using mocks or stubs to replace slower components that are part of the test

- Redesigning the production code so slower pieces of code can be tested separately from fast pieces of code

- Moving slower tests to a different test suite that you can run less often

Tests should be cohesive, independent, and isolate

- Ideally, a single test method should test a single functionality or behaviour of the system. Complex test code reduces our ability to understand what is being tested at a glance and makes future maintenance more difficult.

- Tests should not depend on other tests to succeed. The test result should be the same whether the test is executed in isolation or together with the rest of the test suite.

- Test should clean up their messes, which will force tests to sup up things themselves and not rely on data that was already there.

Note

It is not uncommon to see cases where test B only works if test A is executed first. This is often the case when test B relies on the work of test A to set up the environment for it. Such tests become highly unreliable.

Tests should have a reason to exist

- You want tests that either help you find bugs or help you document behaviour. You do not want tests that, for example, increase code coverage. (Why? I know that sometimes you need to get code coverage as high as possible)

- If a test does not have a good reason to exist, it should not exist, since you need to maintain it.

- The perfect test suite is one that can detect all the bugs with the minimum number of tests.

Tests should be repeatable and not flaky

- A repeatable test gives the same result no matter how many times it is executed.

- Companies like Google and Facebook have publicly talked about problems caused by flaky tests.

- Because it depends on external or shared resources

- Due to improper time-outs

- Because of a hidden interaction between different test methods

- Other observations:

- Most flaky tests are flaky from the time they are written

- Flaky tests are rarely due to the platform-specifics (they do not fail because of different operating system)

Note

Developers lose their trust in tests that present flaky behavior (sometimes pass and sometimes fail, without any changes in the system or test code).

Tests should have strong assertions

- Tests exist to assert that the exercised code behaved as expected. Writing good assertions is therefore key to a good test!

- An extreme example of a test bad assertions is one with no assertions

- Assertions should be as strong as possible. You want your tests to fully validate the behaviour and break if there is any slight change in the output.

For example:

calculateFinalPrice()in aShoppingCartclass changes two properties:finalPriceand the taxPaid.- If your tests only ensure the value of the

finalPriceproperty, a bug may happen in the waytaxPaidis set, and your tests will not notice it.

Tests should break if the behaviour changes

- Tests let you know that you broke the expected behaviour

- If you break the behaviour and the test suite is still green, something is wrong with your tests

- Maybe weak assertions

- Maybe not really tested

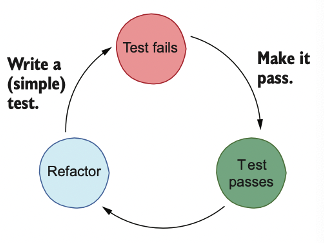

- Test driven development (TDD) would help

Tests should have a single and clear reason to fail

- Test failure is the first step toward understanding and fixing the bug. Your test code should help you understand what caused the bug.

- The test is cohesive and exercises only one (hopefully small) behaviour of the software system.

- Give your test a name that indicates its intention and the behaviour it exercises.

- Make sure anyone can understand the input values passed to the method under test.

- Finally, make sure the assertions are clear, and explain why a value is expected.

Tests should be easy to write

- There should be no friction when it comes to writing tests; or it is too easy for you to give up and not do it.

- Writing unit tests tends to be easy most of the time, but it may get complicated when the class under test requires too much setup or depends on too many other classes. Integration and system tests also require each test to set up and tear down the (external) infrastructure.

- Make sure tests are always easy to write. Give developers all the tools to do that. If tests require a database to be set up, provide developers with an API that requires one or two method calls and voilà—the database is ready for tests.

- Investing time in writing good test infrastructure is fundamental and pays off in the long term.

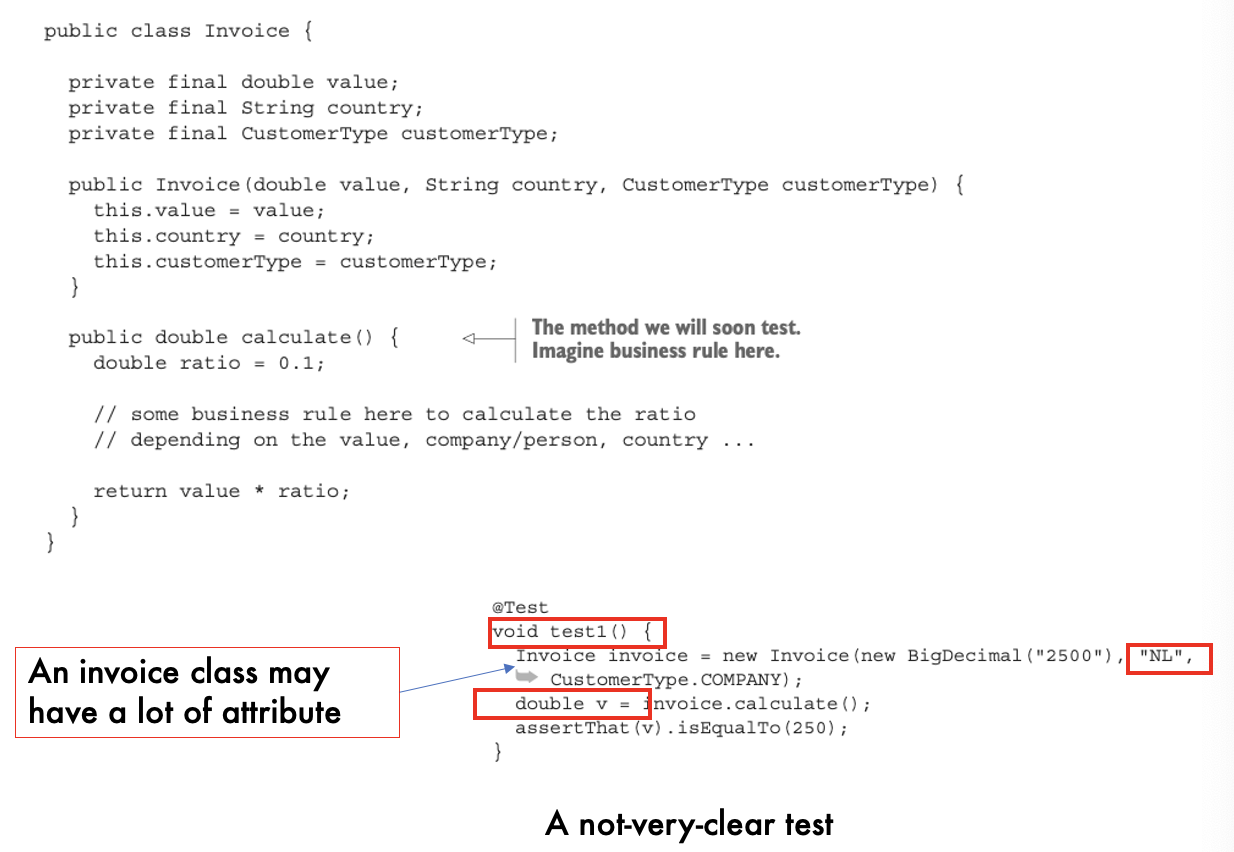

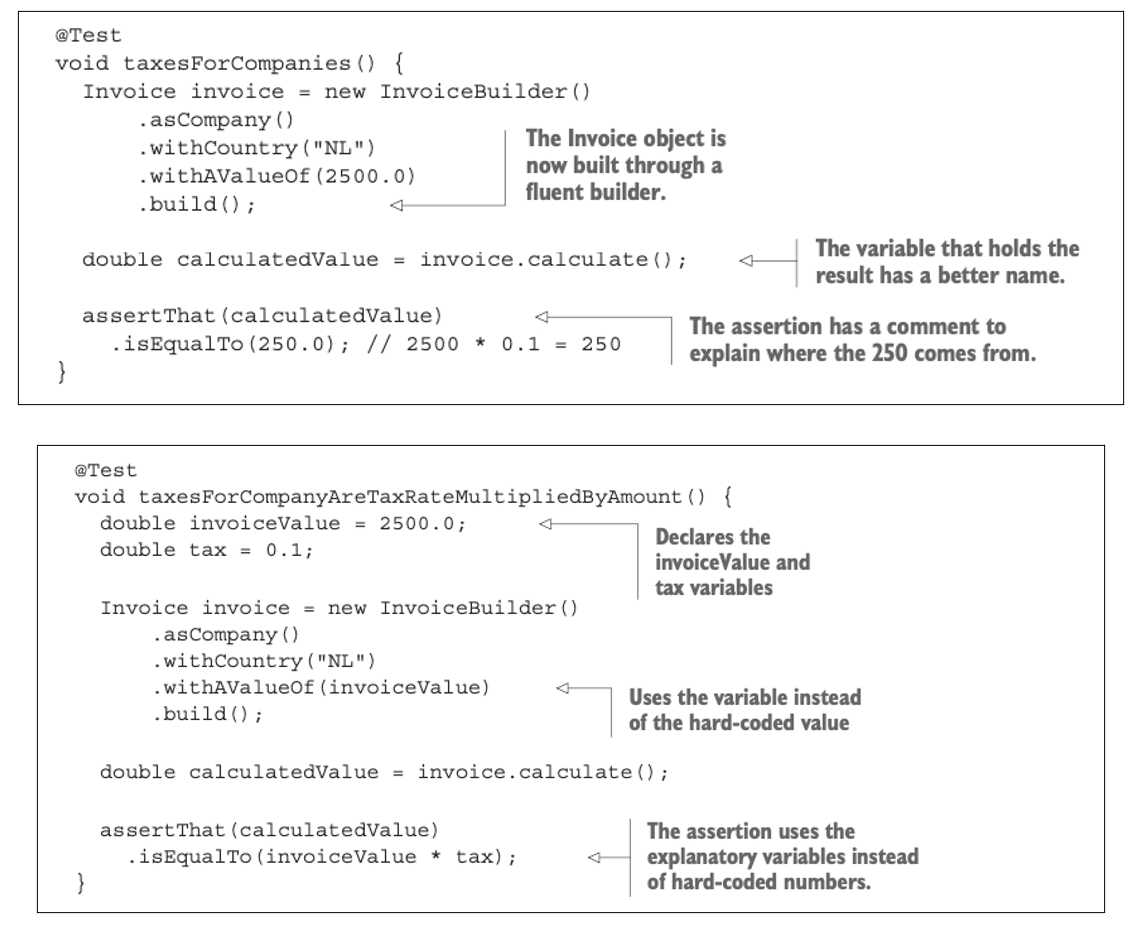

Tests should be easy to read

- Your test code base will grow significantly. But you probably will not read it until there is a bug or you add another test to the suite.

- make sure all the information (especially the inputs and assertions) is clear enough

- use test data builders whenever I build complex data structures.

- What are test data builders?

- test data builders are a valuable tool for improving the readability, maintainability, and clarity of test code by providing a clean and concise way to construct complex test data structure

Tests should be easy to change and evolve

- Although we like to think that we always design stable classes with single responsibilities that are closed for modification but open for extension, in practice, that does not always happen.

- Your task when implementing test code is to ensure that changing it will not be too painful.

- For example, if you see the same snippet of code in 10 different test methods, consider extracting it.

- Your tests are coupled to the production code in one way or another. That is a fact. The more your tests know about how the production code works, the harder it is to change them.

Note:

a clear disadvantage of using mocks is the significant coupling with the production code. Determining how much your tests need to know about the production code to test it properly is a significant challenge.

Test Smells (Anti-Patterns)

Code and test smells (anti-patterns)

- The term code smell indicates symptoms that may indicate deeper problems in the system’s source code.

- For example: Long Method, Long Class, and God Class

- Study shows that code smells hinder the comprehensibility and maintainability of software systems

- The community has been developing catalogs of smells that are specific to test code

- Test smells are prevalent in real life and, unsurprisingly, often hurt the maintenance and comprehensibility of the test suite.

Test Smells

- Mystery Guest

- Resource Optimism

- Test Run War

- General Fixture

- Eager Test

- Lazy Test

- Assertion Roulette

- Indirect Testing

- For Testers Only

- Sensitive Equality

- Test Code Duplication

Note

In effective software testing book, Test code duplication⇒ excessive duplication, Unclear assertion⇒ assertion roulette, Resource optimism⇒ Bad handling of complex or external resources, General fixture⇒ fixtures that are too general, Sensitive assertion⇒ sensitive equality

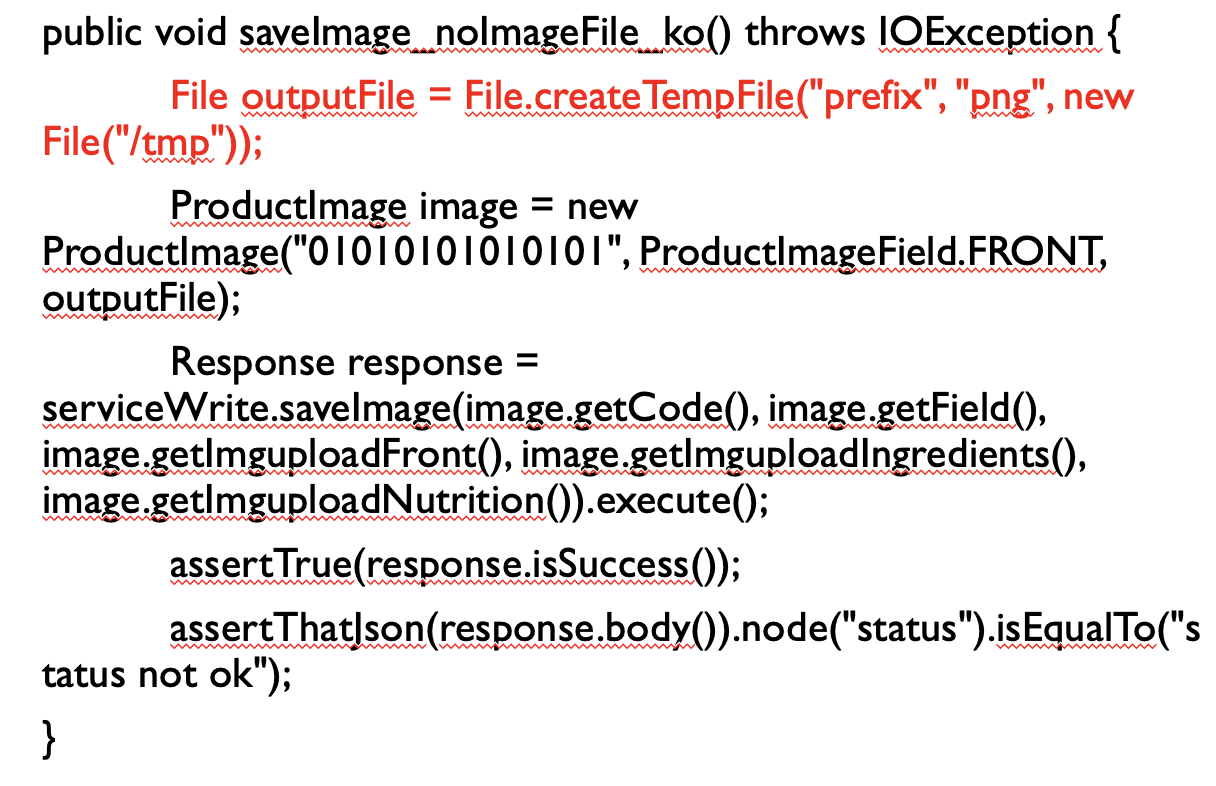

Eager Test

When a test method checks several methods of the object to be tested, it is hard to read and understand, and therefore more difficult to be used as documentation. Moreover, it makes tests more dependent on each other and harder to maintain.

The solution is simple:

- separate the test code into test methods that test only one method, using a meaningful name highlighting the purpose of the test. Note that splitting into smaller methods can slow down the tests due to increased setup/teardown overhead.

Lazy Tests

- This occurs when several test methods check the same method using the same fixture (but for example check the values of different instance variables).

- Such tests often only have meaning when considering them together, so they are easier to use when joined using Inline Method

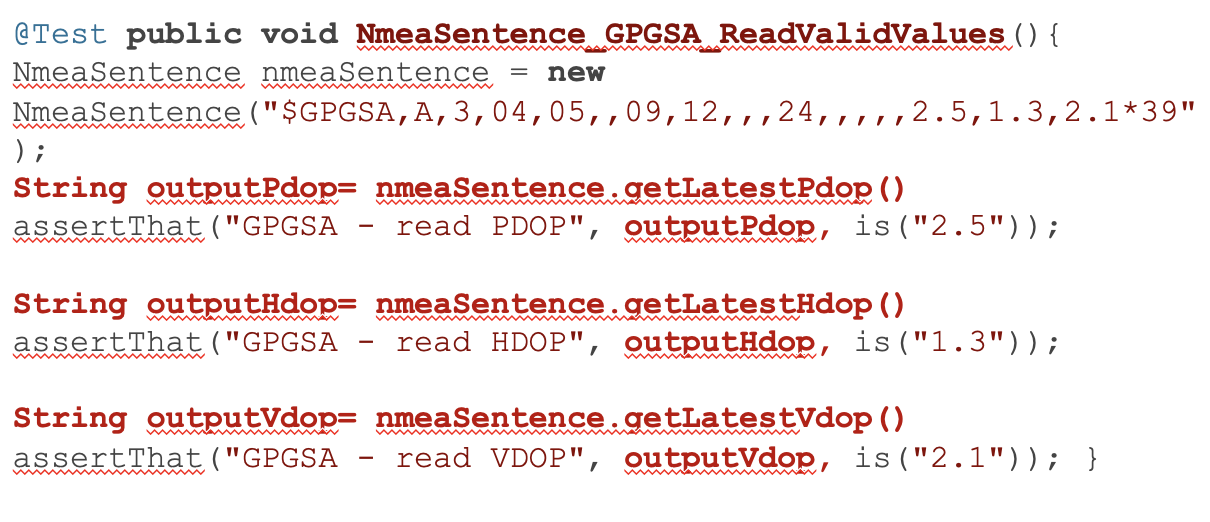

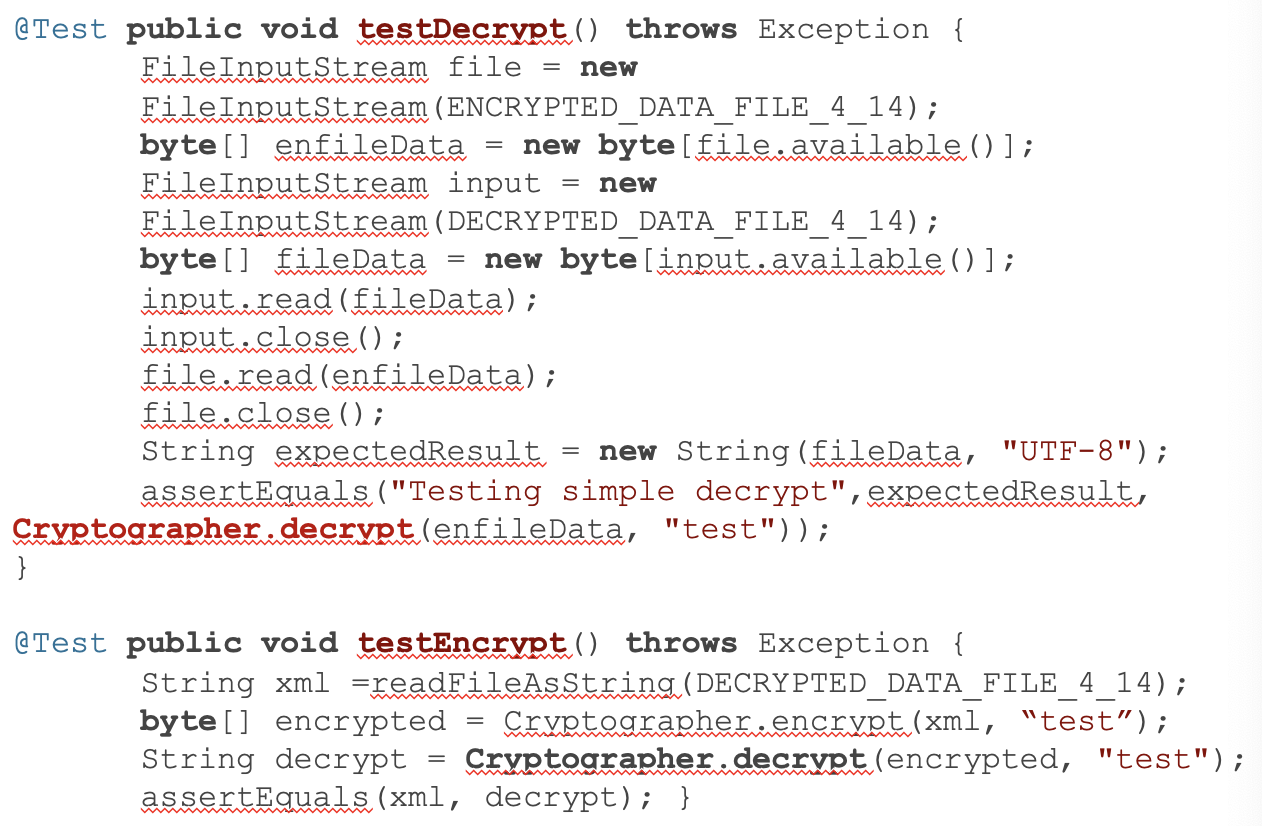

- Both test methods, testDecrypt() and testEncrypt(), call the same SUT method, Cryptographer.decrypt().

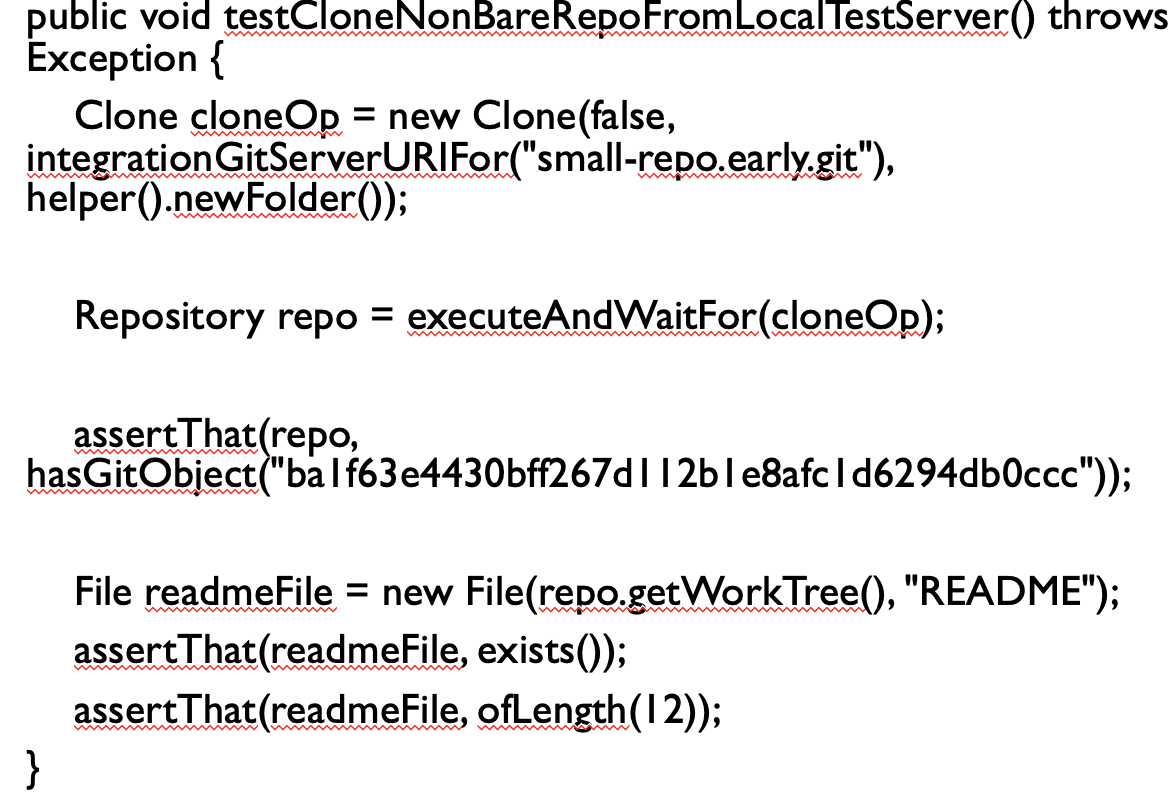

Mystery Guest

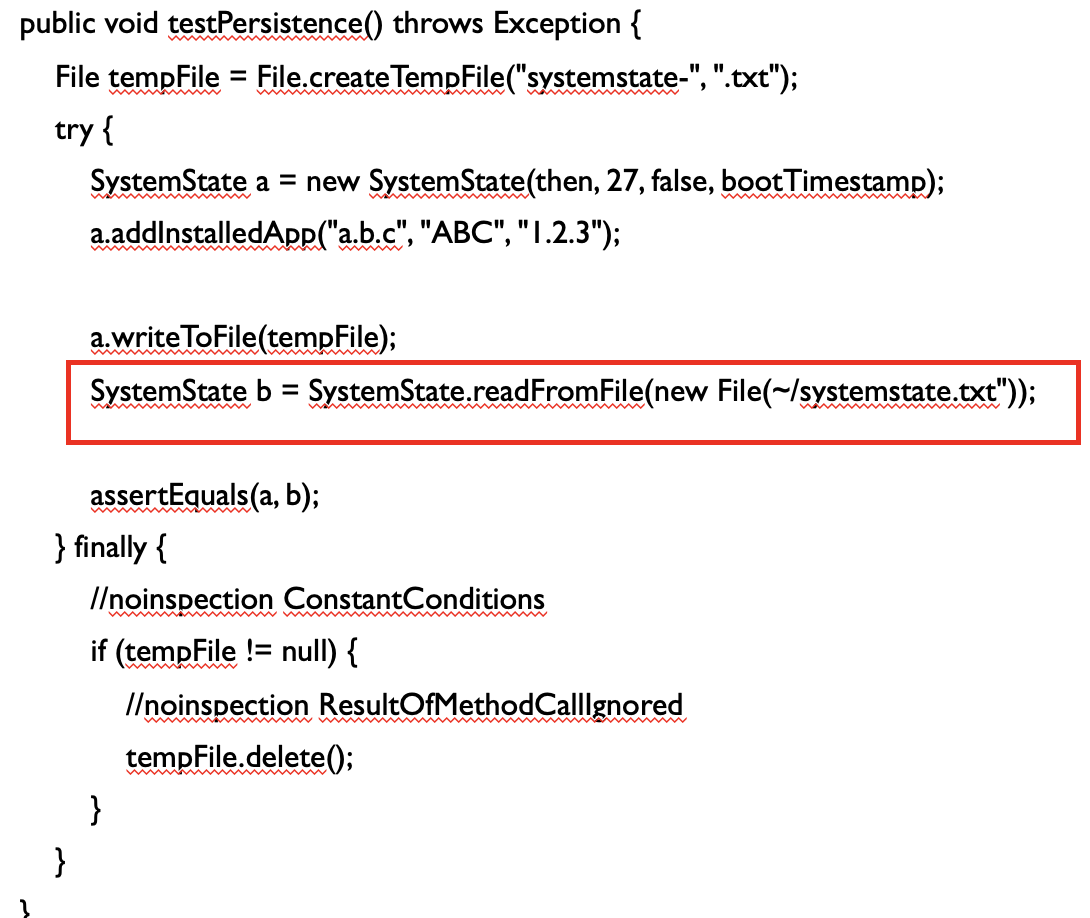

- When a test uses external resources, such as a file containing test data, the test is no longer self contained. Consequently, there is not enough information to understand the tested functionality, making it hard to use that test as documentation.

- Moreover, using external resources introduces hidden dependencies: if some force changes or deletes such a resource, tests start failing. Chances for this increase when more tests use the same resource.

- The use of external resources can be eliminated using the refactoring Inline Resource (1). If external resources are needed, you can apply Setup External Resource (2) to remove hidden dependencies.

- As part of the test, the test method, testPersistence() depends on external file “~/systemstate.txt”

What is Refactoring: Inline Resource

To remove the dependency between a test method and some external resource, we incorporate that resource in the test code.

This is done by setting up a fixture in the test code that holds the same contents as the resource. This fixture is then used instead of the resource to run the test.

A simple example of this refactoring is putting the contents of a file that is used into some string in the test code. If the contents of the resource are large, chances are high that you are also suffering from Eager Test smell. Consider conducting Extract Method or Reduce Data refactoring.

What is Refactoring: Setup External Resource

- If it is necessary for a test to rely on external resources, such as directories, databases, or files, make sure the test that uses them explicitly creates or allocates these resources before testing, and releases them when done (take precautions to ensure the resource is also released when tests fail).

Resource Optimism

Test code that makes optimistic assumptions about the existence (or absence) and state of external resources (such as particular directories or database tables) can cause non-deterministic behaviour in test outcomes.

The situation where tests run fine at one time and fail miserably the other time is not a situation you want to find yourself in.

- Use Setup External Resource to allocate and/or initialize all resources that are used.

- The test method accesses a file without verifying if the file exists before using it in the test operations.

- You can just have test relying on the existence or inexistance of external resources and dependencies! Makes sense to me

Test Run War

Such wars arise when the tests run fine as long as you are the only one testing but fail when more programmers run them.

This is most likely caused by resource interference:

- some tests in your suite allocate resources such as temporary files that are also used by others.

- Apply Make Resource Unique to overcome interference.

What is Refactoring: Make Resource Unique?

- A lot of problems originate from the use of overlapping resource names, either between different tests run done by the same user or between simultaneous test runs done by different users.

- Such problems can easily be prevented (or repaired) by using unique identifiers for all resources that are allocated, for example by including a time-stamp. When you also include the name of the test responsible for allocating the resource in this identifier, you will have less problems finding tests that do not properly release their resources.

General Fixture

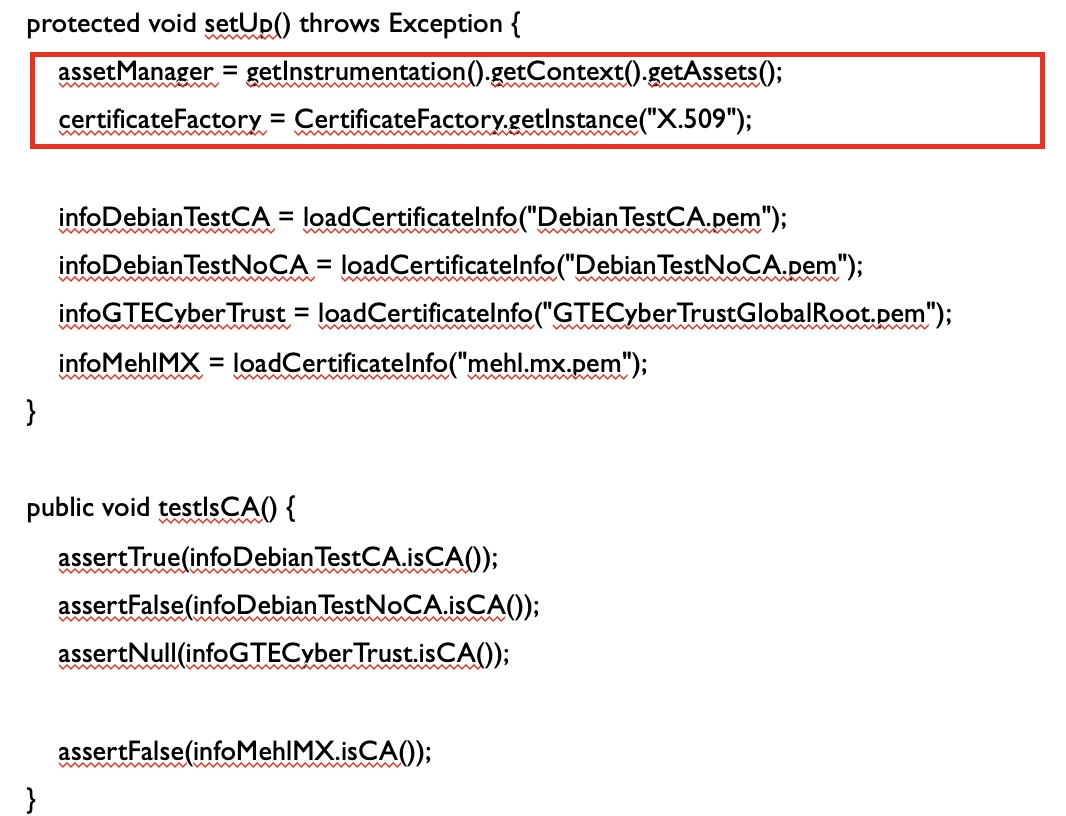

In the JUnit framework a programmer can write a setUp method that will be executed before each test method to create a fixture for the tests to run in.

Things start to smell when the setUp fixture is too general and different tests only access part of the fixture. Such setUps are harder to read and understand. Moreover, they may make tests run more slowly (because they do unnecessary work). The danger of having tests that take too much time to complete is that testing starts interfering with the rest of the programming process and programmers eventually may not run the tests at all.

Solution:

use setUp only for that part of the fixture that is shared by all tests using Extract Method and put the rest of the fixture in the method that uses it using Inline Method

- for example, two different groups of tests require different fixtures, consider setting these up in separate methods that are explicitly invoked for each test, or spin off two separate test classes using Extract Class.

- I don’t really understand what it means by fixture????to-understand

- And I don’t really understand this test smell.

- So from looking at the example, it seems like it has to do with the fixture doing to general things and the actual test only needing parts of what is done in the fixture.

- The setup/fixture method initializes a total of 6 fields (variables). However, the test method, testIsCA(), only utilizes 4 fields.

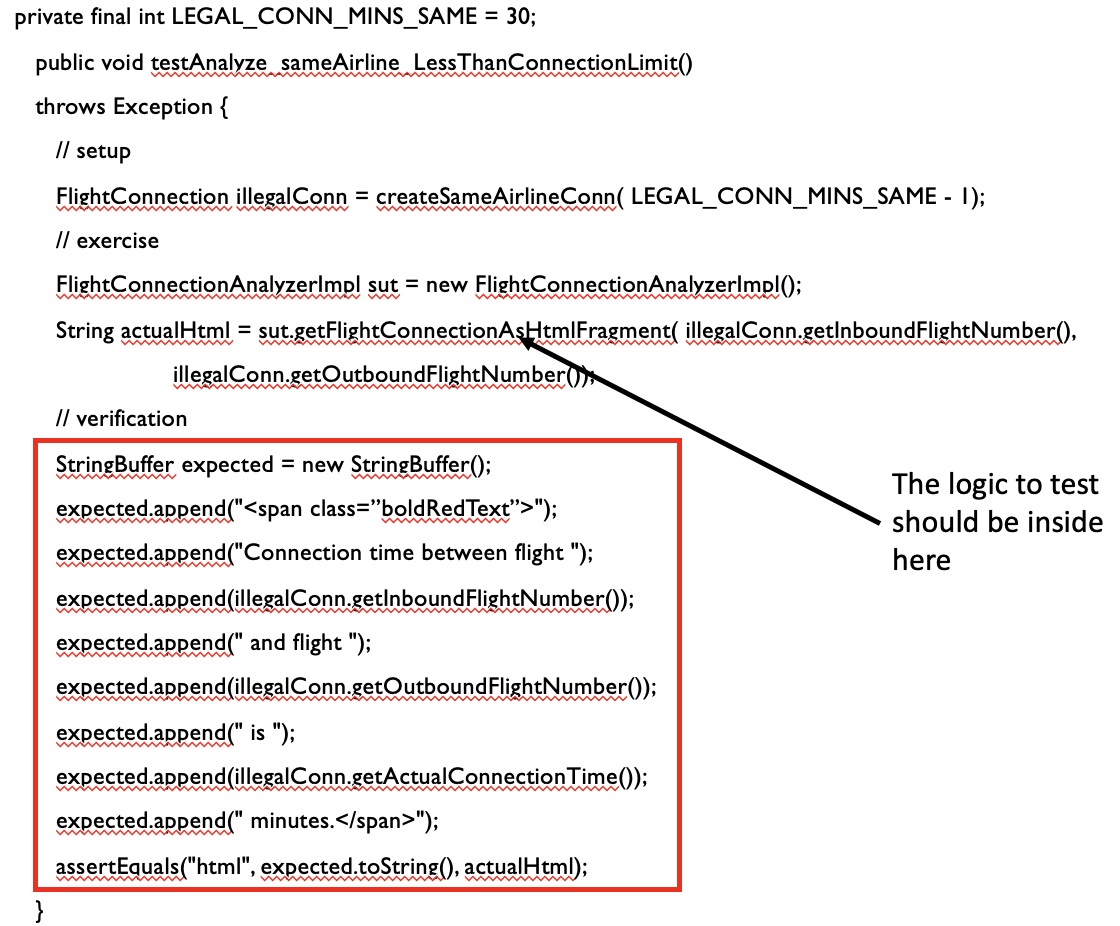

Assertion Roulette

This smell comes from having a number of assertions in a test method that have no explanation. If one of the assertions fails, you do not know which one it is.

- Use Add Assertion Explanation to remove this smell.

- As we can see, there are a lot of asserts.

Assertion Roulette vs. Eager Test?

Eager test:

- Testing multiple functionality

Assertion Roulette:

- One functionality but many assertions without explanation

What is Refactoring: Add Assertion Explanation

Assertions in the JUnit framework have an optional first argument to give an explanatory message to the user when the assertion fails.

Testing becomes much easier when you use this message to distinguish between different assertions that occur in the same test. Maybe this argument should not have been optional.

Very self explanatory

Indirect Testing

A test class is supposed to test its counterpart in the production code. It starts to smell when a test class contains methods that actually perform tests on other objects (for example because there are references to them in the class-to-be-tested).

Such indirection can be moved to the appropriate test class by applying Extract Method followed by Move Method on that part of the test. The fact that this smell arises also indicates that there might be problems with data hiding in the production code.

Note:

- that opinions differ on indirect testing. Some people do not consider it a smell but a way to guard tests against changes in the “lower” classes.

- In general, people feel that there are more losses than gains to this approach: It is much harder to test anything that can break in an object from a higher level. Moreover, understanding and debugging indirect tests is much harder.

- Should check if the system finds the less than threshold connection time, instead of the html output.

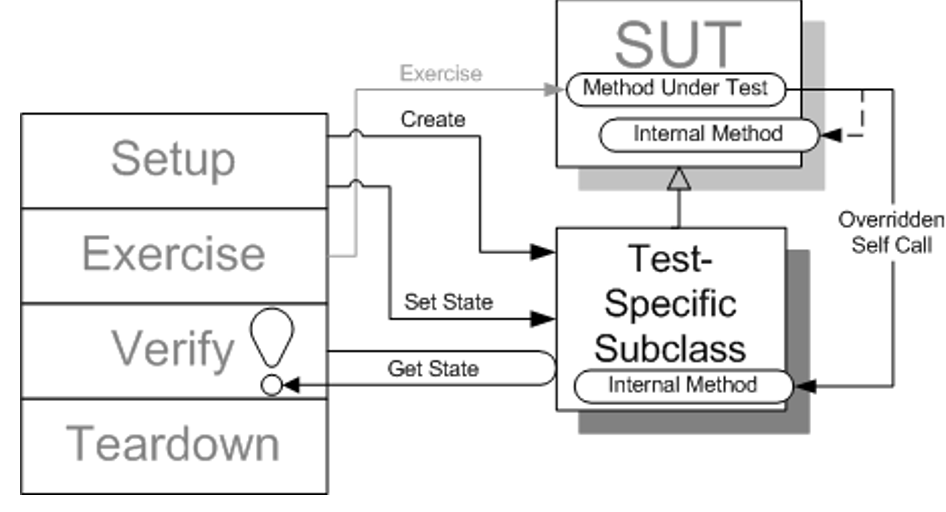

For Testers Only

When a production class contains methods that are only used by test methods, these methods either

- (1) are not needed and can be removed, or

- (2) are only needed to set up a fixture for testing.

Depending on functionality of those methods, you may not want them in production code where others can use them.

If this is the case, apply Extract Subclass to move these methods from the class to a (new) subclass in the test code and use that subclass to perform the tests on. You will often find that these methods have names or comments stressing that they should only be used for testing.

-

Fear of this smell may lead to another undesirable situation: a class without corresponding test class. The reason then is that the developer

- (1) does not know how to test the class without adding methods that are specifically needed for the test and

- (2) does not want to pollute his production class with test code. Creating a separate subclass helps to deal with this problem.

-

I’m a bit confused, just don’t include it? When we don’t want it in production code. Or move to a subclass. Pollution of production code…

Sensitive Equality

It is fast and easy to write equality checks using the toString method. A typical way is to compute an actual result, map it to a string, which is then compared to a string literal representing the expected value. Such tests, however may depend on many irrelevant details such as commas, quotes, spaces, etc. Whenever the toString method for an object is changed, tests start failing.

The solution is to replace toString equality checks by real equality checks using Introduce Equality Method (6).

- Use of the default value returned by an objects toString() method, to perform string comparisons, runs the risk of failure in the future due to changes in the objects implementation of the toString() method.

What is Refactoring: Introduce Equality Method

If an object structure needs to be checked for equality in tests, add an implementation for the “equals” method for the object’s class.

You then can rewrite the tests that use string equality to use this method. If an expected test value is only represented as a string, explicitly construct an object containing the expected value, and use the new equals method to compare it to the actually computed object.

- Implement equal’s functions for class object

- This allows tests to use real equality checks instead of relying on string comparisons.

Test Code Duplication

- Test code may contain undesirable duplication. In particular the parts that set up test fixtures are susceptible to this problem. The most common case for test code will be duplication of code in the same test class.

- This can be removed using Extract Method. For duplication across test classes, it may provide helpful to mirror the class hierarchy of the production code into the test class hierarchy.

- A special case of code duplication is test implication:

- test A and B cover the same production code, and A fails if and only if B fails.

- A typical example occurs when the production code gets refactored: before this refactoring, A and B covered different code, but afterwards they deal with the same code and it is not necessary anymore to maintain both tests.

Mutation Copy

Measuring Test Cases

- What we have learned so far?

- Quantitatively measure how much code is covered by test cases?

- Do the coverage metrics directly measure the effectiveness of test cases?

Test Case Effectiveness

- Test suite A can detect more bugs than test suite B.

- Given one bug, some test cases in suite A fail, but all test cases in suite B pass.

- The best test suite can detect every bug; whenever a bug is introduced, at least one test case will fail.

Measure test suite effectiveness

- A simple solution: Find all bugs in a software.

- The chicken or the eggs causality dilemma

- The state-of-the-art solution: artificially inject bugs

- Mutation testing

- Modify (mutate) some statements in the code, so we have many different versions of the code. Each version is a bug.

- Check if the test cases can find the bug, i.e., some test cases fail and some do NOT fail.

- Mutation testing

The metrics we covered so far are based on the contents we can obtain in software, whether requirements, code implementation,

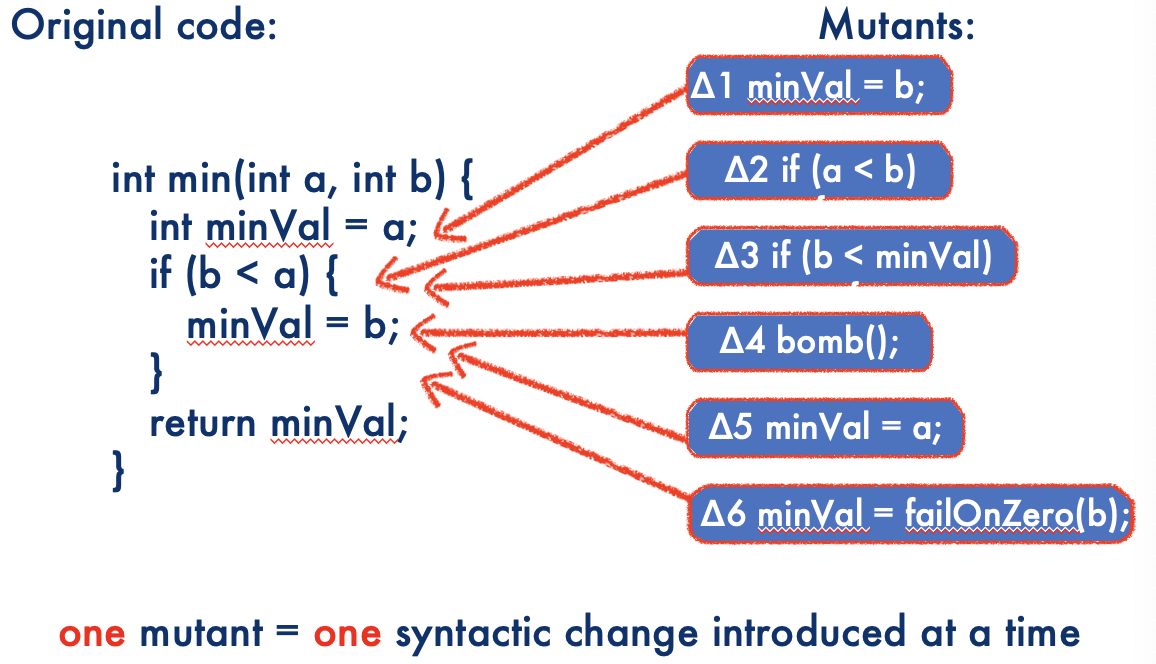

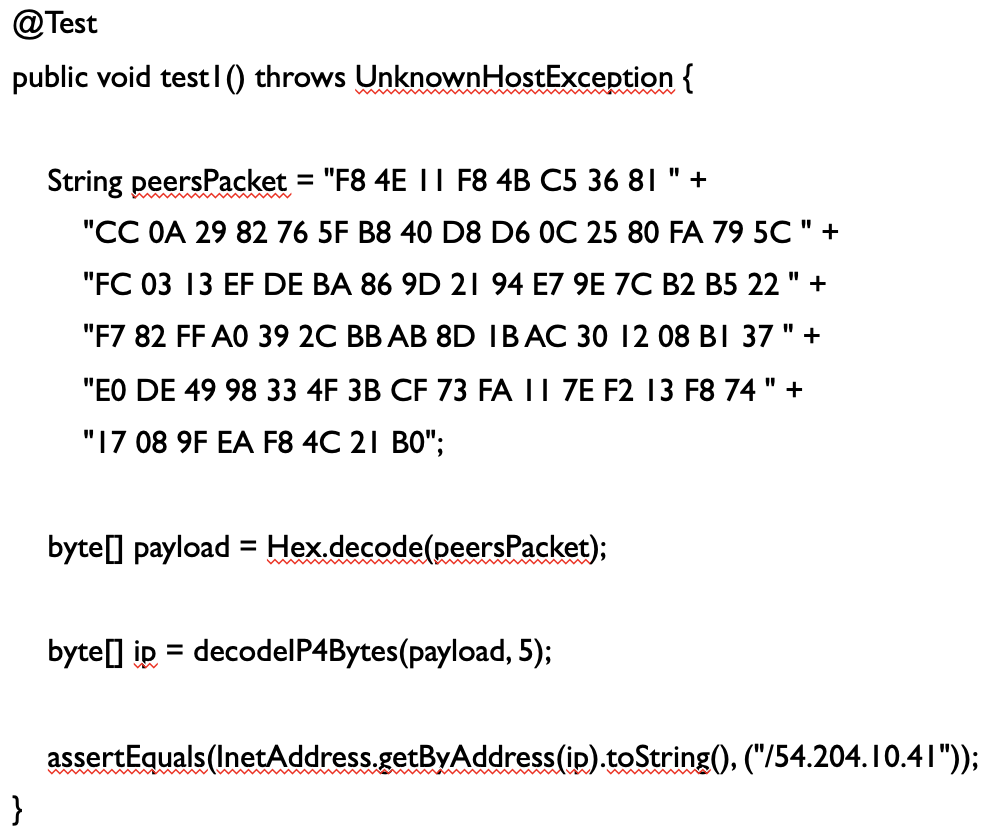

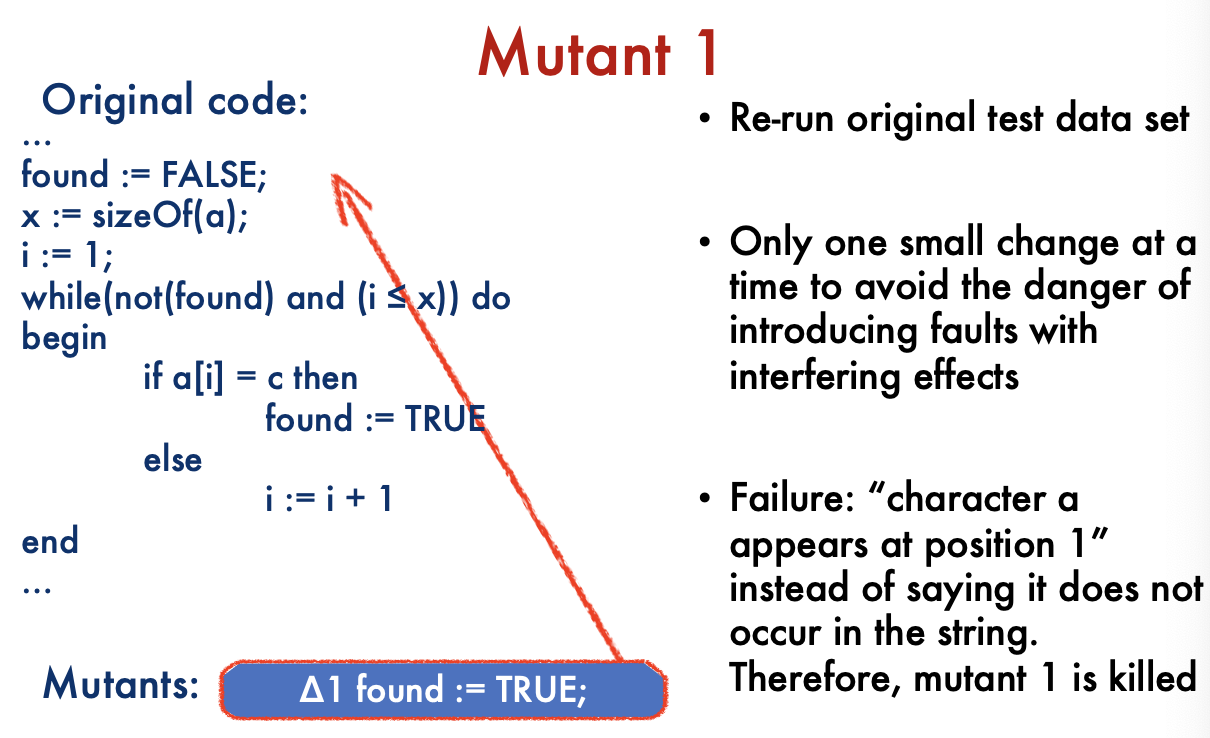

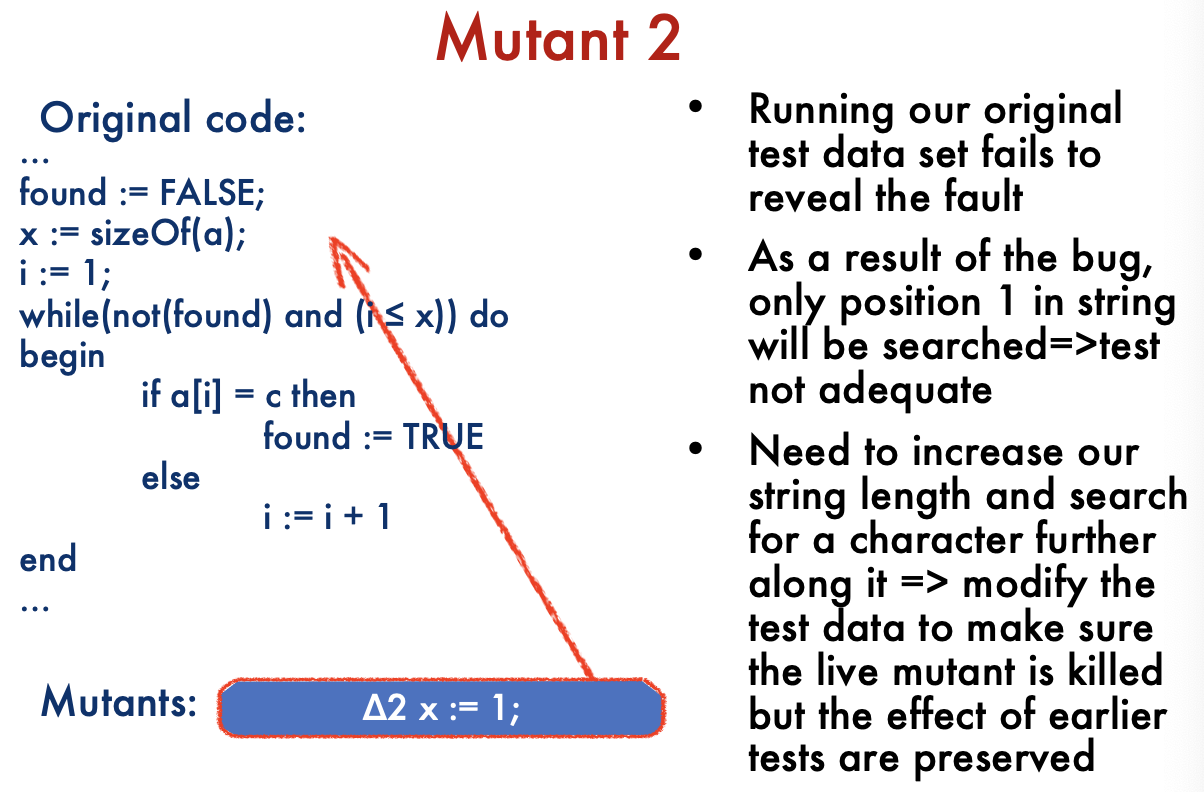

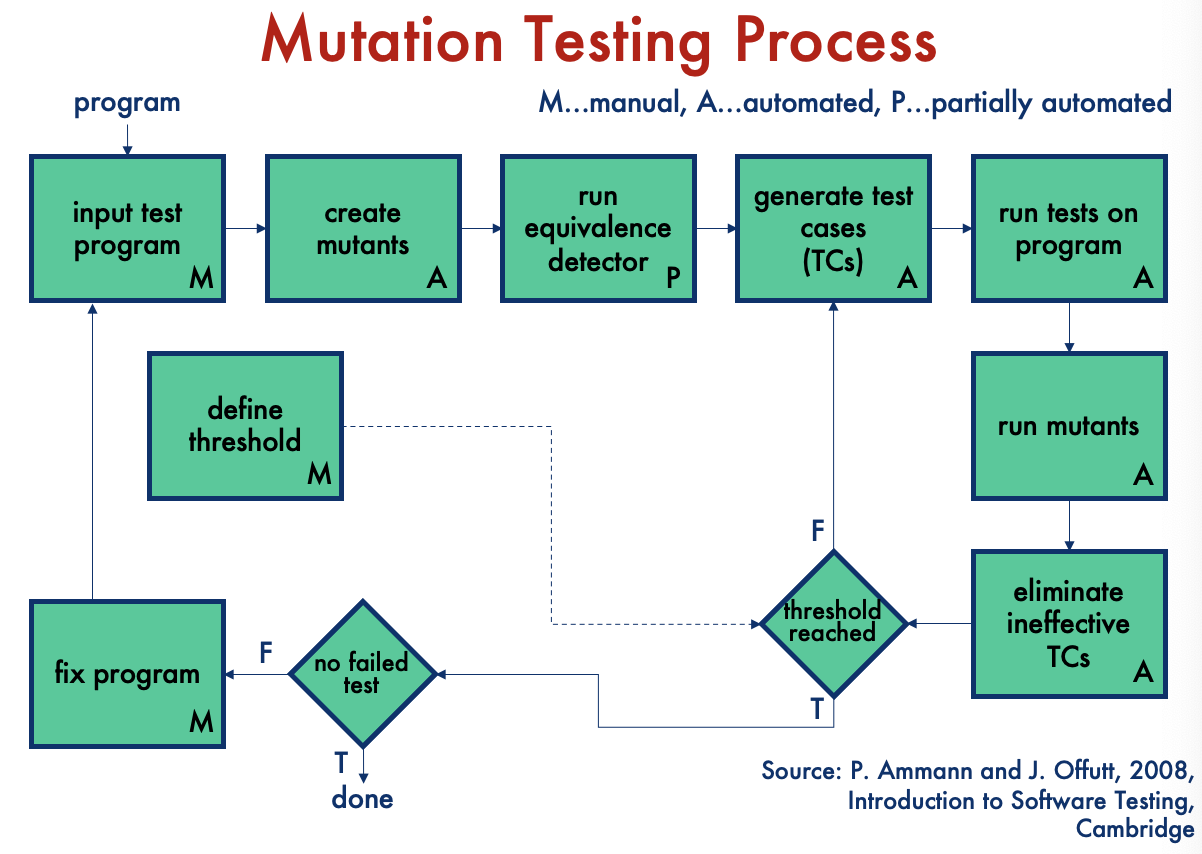

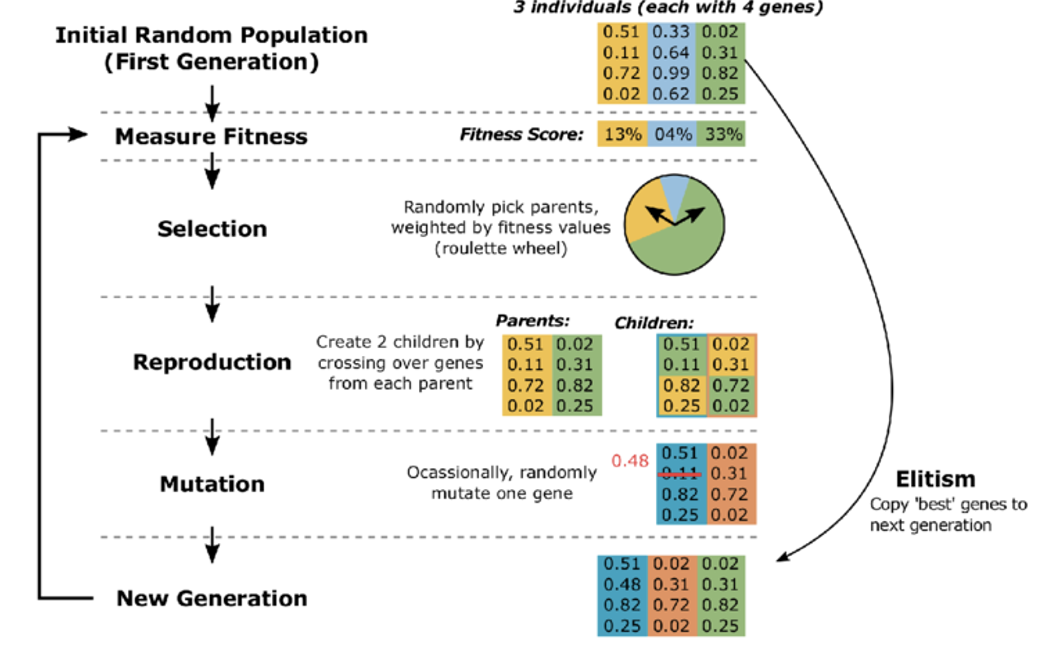

Mutation Testing

Mutation Testingt

- Fault-based testing: directed towards “typical” faults that could occur in a program

- Basic idea:

- Take a program and test data generated for that program

- Create a number of similar programs (mutants), each differing from the original in one small way, i.e., each possessing a fault (e.g., replace addition operator by multiplication operator)

- The original test data is then run through the mutants

- If test data detects all differences in mutants, then the mutants are said to be dead, and the test set is adequate

- Mutation Testing is NOT a testing strategy like Boundary Value or Data Flow Testing. It does not outline test data selection criteria.

- Mutation Testing should be used in conjunction with traditional testing techniques, not instead of them.

Note: Mutation testing is based on two hypotheses.

The first is the competent programmer hypothesis. This hypothesis states that most software faults introduced by experienced programmers are due to small syntactic errors.[1] The second hypothesis is called the coupling effect. The coupling effect asserts that simple faults can cascade or couple to form other emergent faults.[6][7]

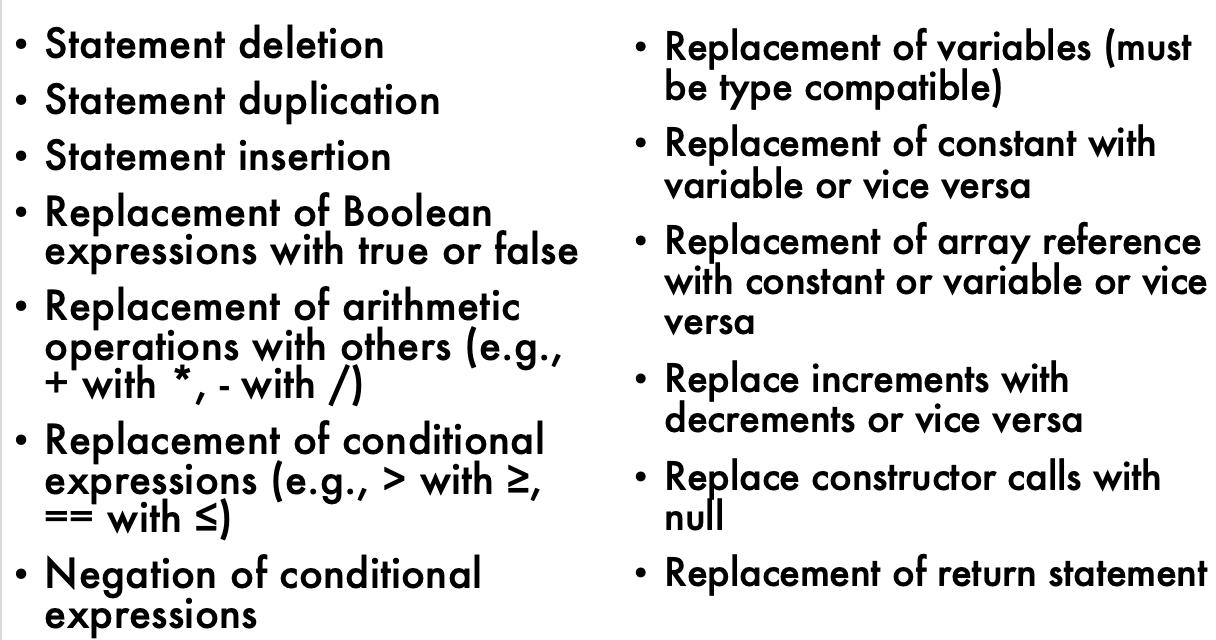

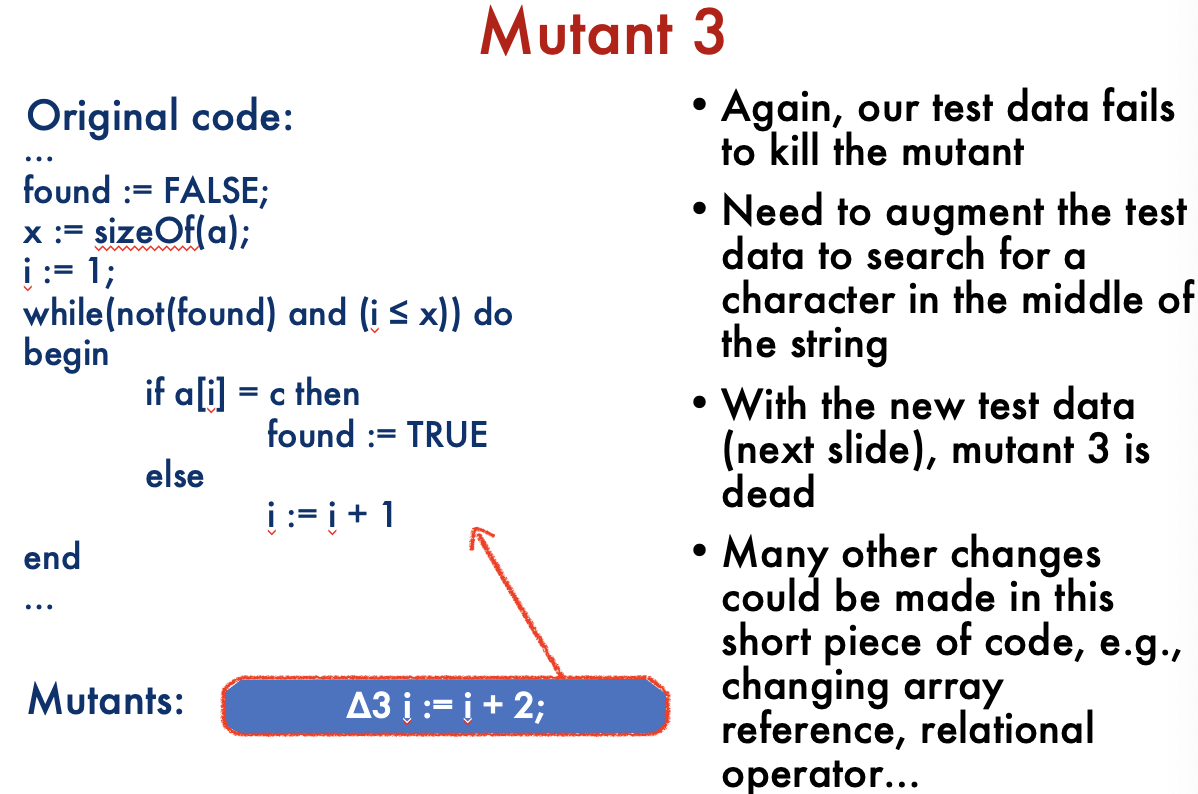

Steps in Mutation Testing

- Step 1: Modify statements in the code and create mutants

- Step 2: Test cases are run through the generated mutants

- Step 3: Compare the results of the original and the mutant

- Step 4: The mutant is killed if different results are found. The test case is good as it detects the change (i.e., injected defect). The mutant is alive if the results are the same: the test case is NOT effective.

A mutant remains live either because it is equivalent to the original program (functionally identical though syntactically different – equivalent mutant) or the test set is inadequate to kill the mutant.

- In the latter case, the test data need to be re-examined, possibly augmented to kill the live mutant.

- For the automated generation of mutants, we use mutation operators, i.e., predefined program modification rules (corresponding to a fault model).

Different Mutants

- Stillborn mutant: syntactically incorrect, killed by compiler

- Trivial mutant: killed by almost any test case

- Equivalent mutant: always produces the same output as the original program

- None of the above are interesting from a mutation testing perspective. We want to have mutants that represent hard to detect faults

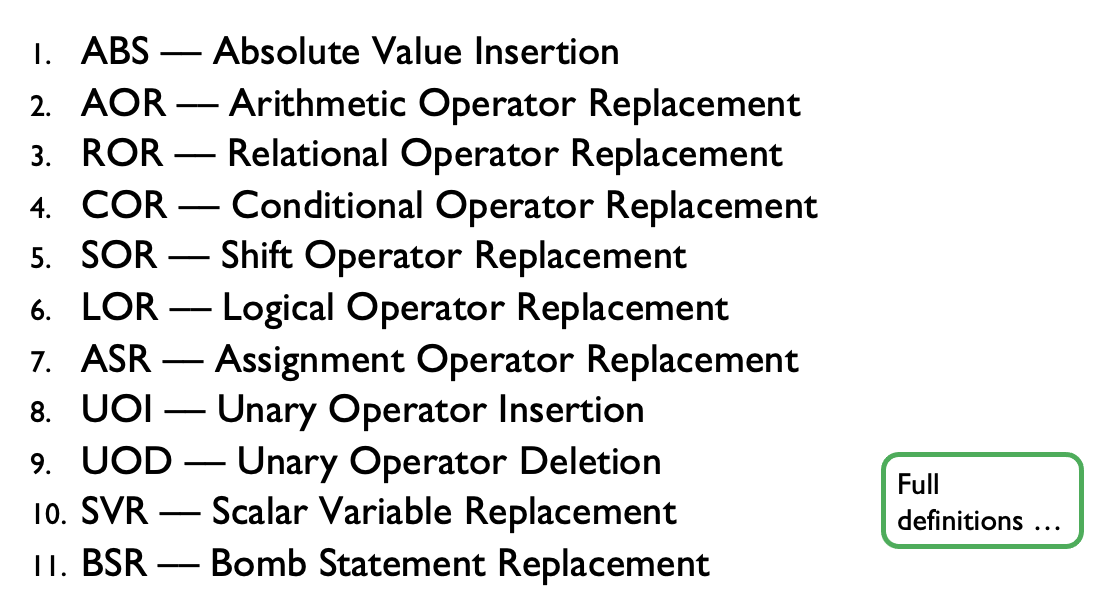

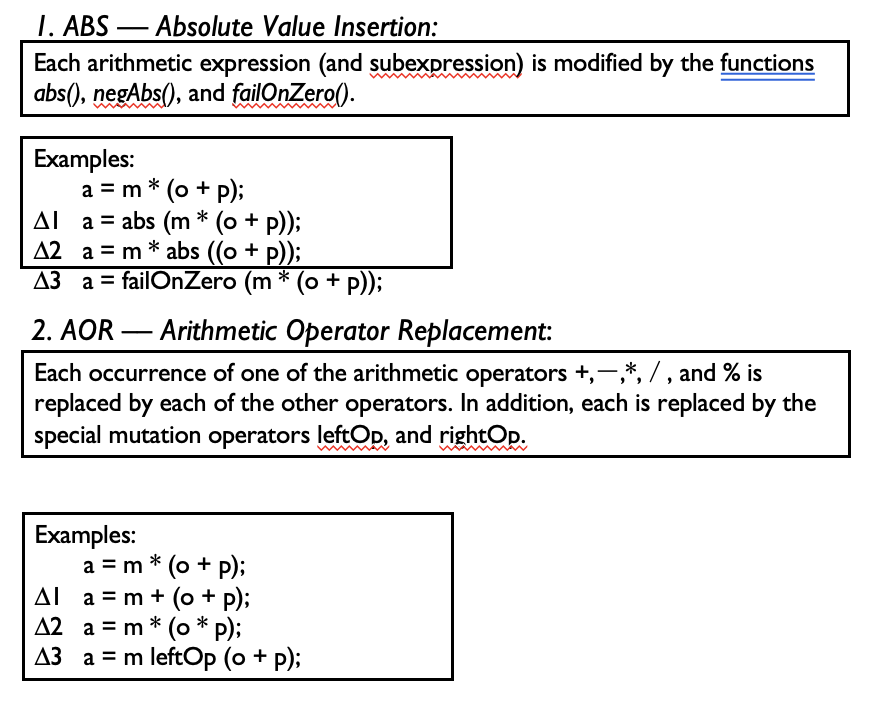

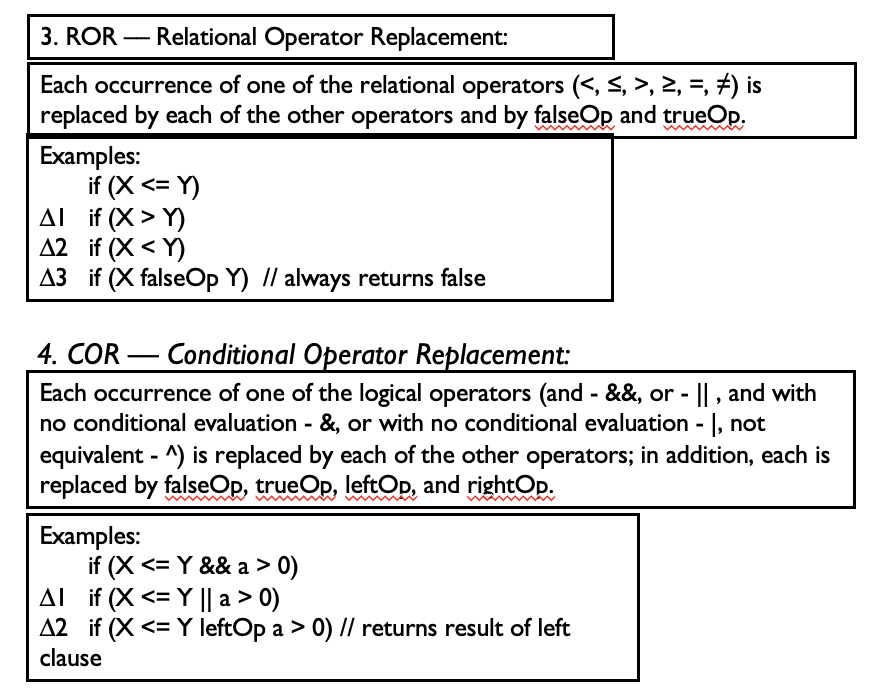

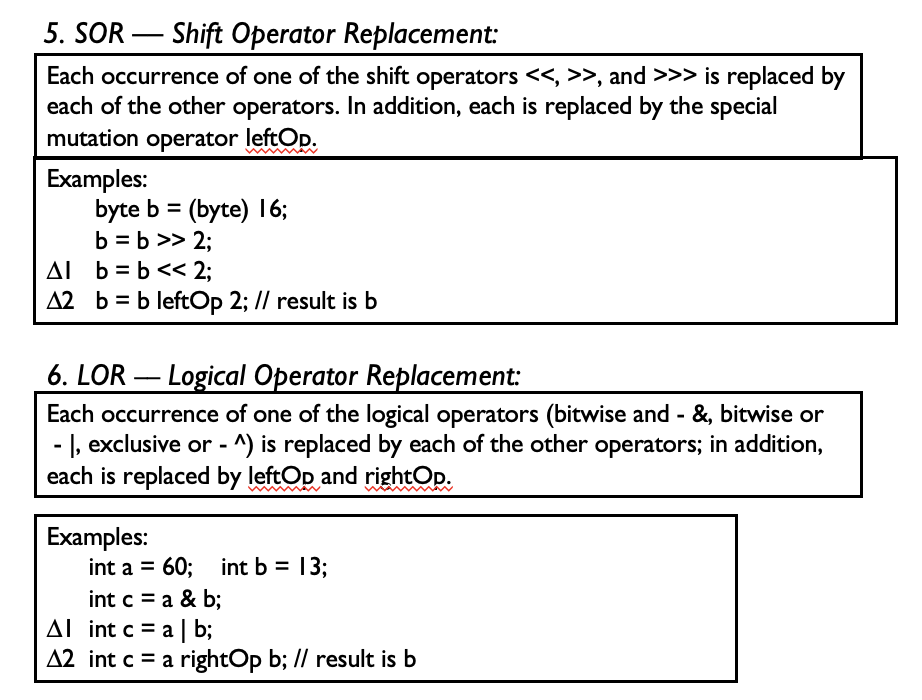

Example of Mutation Operators:

e.g., see http://pitest.org/quickstart/mutators/

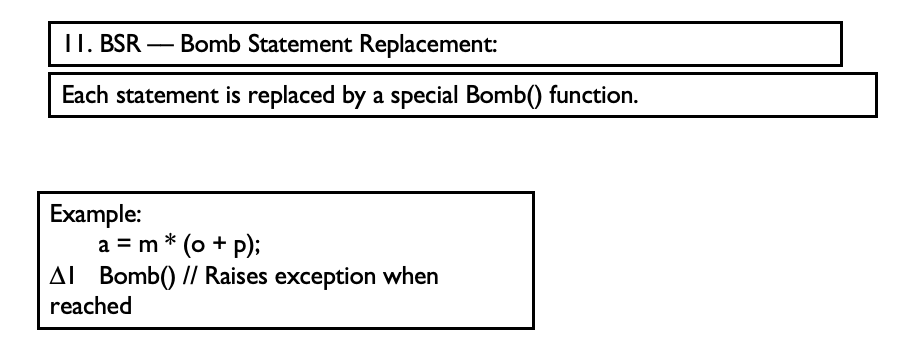

Mutation Operators

- The failOnZero() method is a special mutation operator that causes a failure if the parameter is zero and does nothing if the parameter is not zero (it returns the value of the parameter)

- leftOp returns the left operand (the right is ignored), rightOp returns the right operand

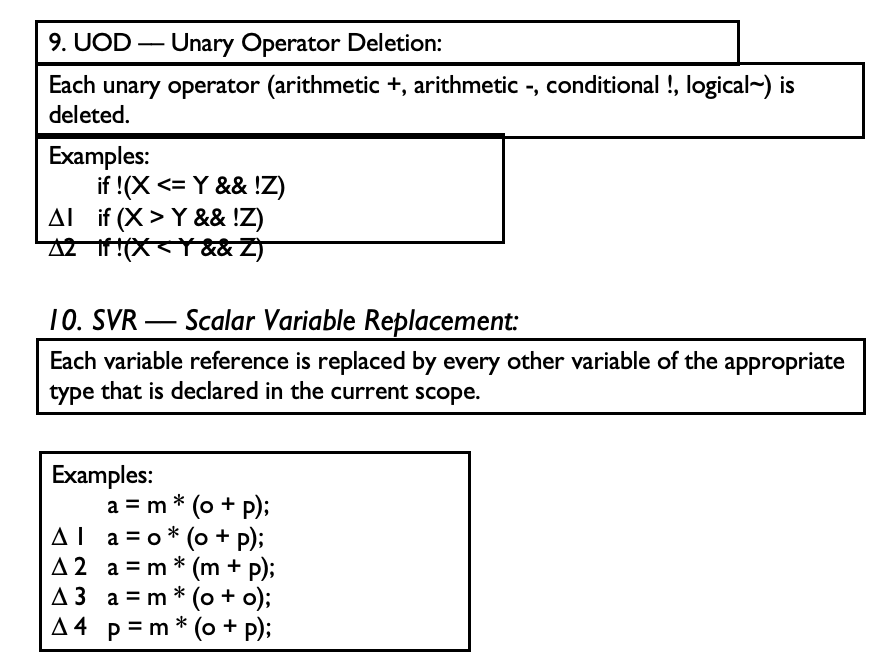

- UOD

- For example, the statement “if !(a > -b)” is mutated to create the following two statements:

if (a > -b) if !(a > b)

Example of Mutation Operators

- Specific to Object-Oriented Programming languages:

- Replacing a type with a compatible subtype (inheritance)

- Changing the access modifier of an attribute or method

- Changing the instance creation expression (inheritance)

- Changing the order of parameters in the definition of a method

- Changing the order of parameters in a call

- Removing an overloading method

- Reducing the number of parameters

- Removing an overriding method

Assumptions

- What about more complex errors, involving several statements?

- Competent programmer assumption:

- given a specification a programmer develops a program that is either correct or differs from the correct program by a combination of simple errors

- Coupling effect assumption:

- test data that distinguishes all programs differing from a correct one by only simple errors is so sensitive that it also implicitly distinguishes more complex errors

- The assumption is extended later with: complex faults are coupled to simple faults in such a way that a test data set that detects all simple faults in a program will detect a high percentage of the complex faults

- test data that distinguishes all programs differing from a correct one by only simple errors is so sensitive that it also implicitly distinguishes more complex errors

Specifying Mutation Operators

- Ideally, we would like the mutation operators to be representative of (and generate) all realistic types of faults that could occur in practice

- Mutation operators change with programming languages, design and specification paradigms

- In general, the number of mutation operators is large as they are supposed to capture all possible syntactic variations in a program

- Mutants are considered to be good indicators of test effectiveness

Mutation Coverage

- Complete coverage equates to killing all non-equivalent mutants

- The amount of coverage is called mutation score (ratio of dead mutants over all non-equivalent mutants)

- For a set of test cases is the percentage of non-equivalent mutants killed by the test data

- Mutation Score = 100 * D / (N - E)

- D: Dead mutants

- N: Number of mutants

- E: Number of equivalent mutants

- A set of test cases is mutation adequate if its mutation score is 100%.

- We can see each mutant as a test requirement

- The number of mutants depends on the definition of mutation operators and the software syntax/structure

- Number of mutants tends to be large, even for small programs (random sampling?)

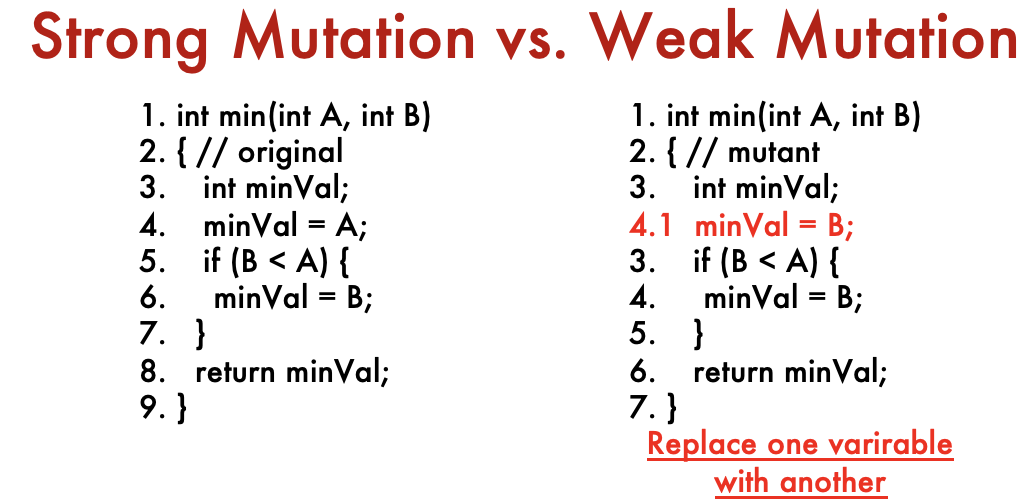

A simple example

- Discussion

- Mutant 3 is equivalent, because

minValand A have the same value at the changed line of code

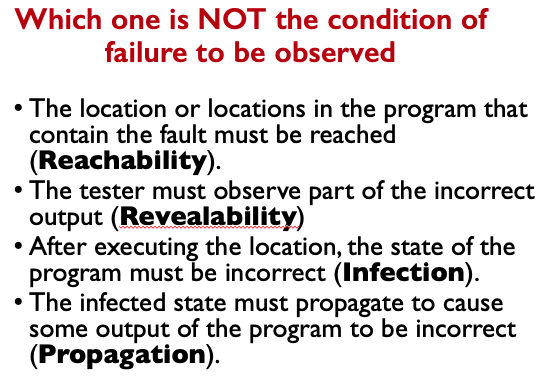

- In order to have an appropriate test case, we must:

- Reach the program fault seeded during execution (reachability)

- Cause the program state to be incorrect (infection)

- Cause the program output to be incorrect (propagation)

Strong and Weak Mutation

- Strong mutation: a fault must be reachable, infect the state, and propagate to output

- Weak mutation: a fault which kills a mutant need only be reachable and infect the state

Experiments show that weak and strong mutation require almost the same number of test cases to satisfy them

Strong Mutation Coverage (SMC)

- Strongly killing mutants

- Given a mutant for a program and a test case , is said to strongly kill iff the output of on is different from the output of on

- Strong mutation coverage (SMC)

- For each mutant in , contains a test case which strongly kills .

Weak Mutation Coverage (WMC)

- Weakly killing mutants

- Given a mutant that modifies a source location in program and a test case , is said to weakly kill iff the state of the execution of on is different from the state of the execution of on , immediately after some execution of

- Weak mutation coverage (WMC)

- For each mutant in , contains a test case which weakly kills .

- Note it’s output difference vs. state difference for SMC and WMC!!

- Reachability: unavoidable

- Infection: need

- Propagation: wrong

minValneeds to return to the caller; that is we cannot execute the body of the if statement, so need - Condition for strongly killing mutation

- TC: , return 7 but expected 5

- Conditions for weakly killing mutation

- TC: , return 2 and expected 2

Need to go through ALL the following examples

Below. My brain kinda froze.

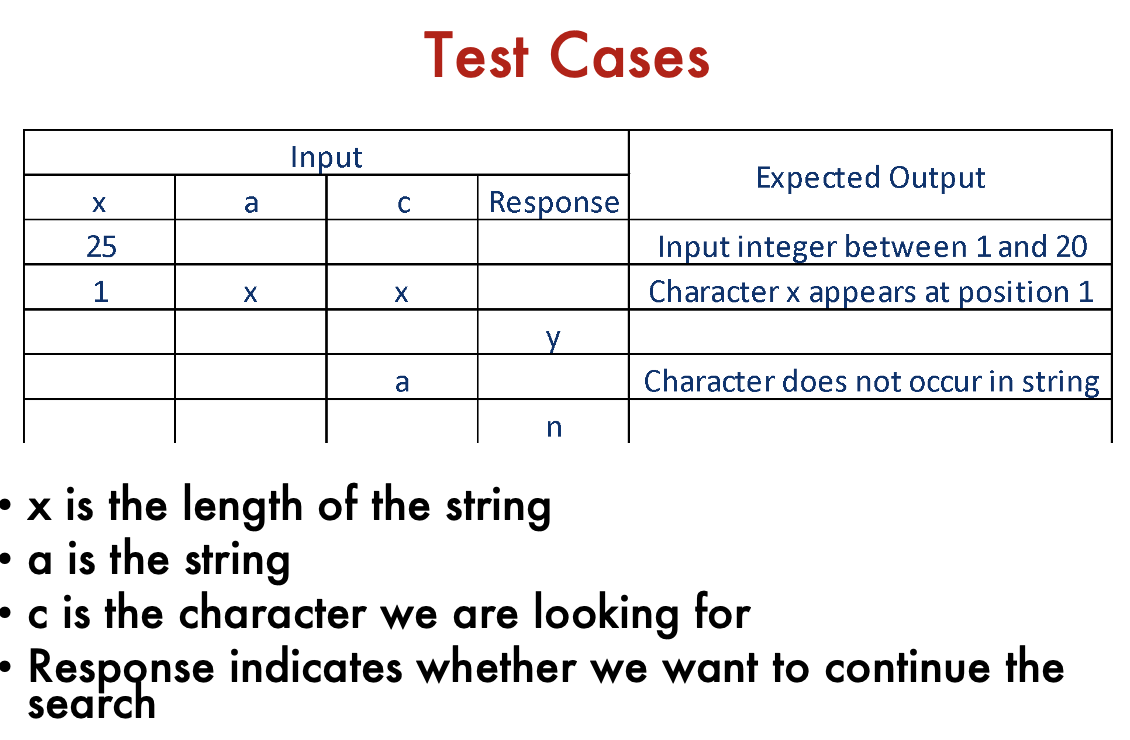

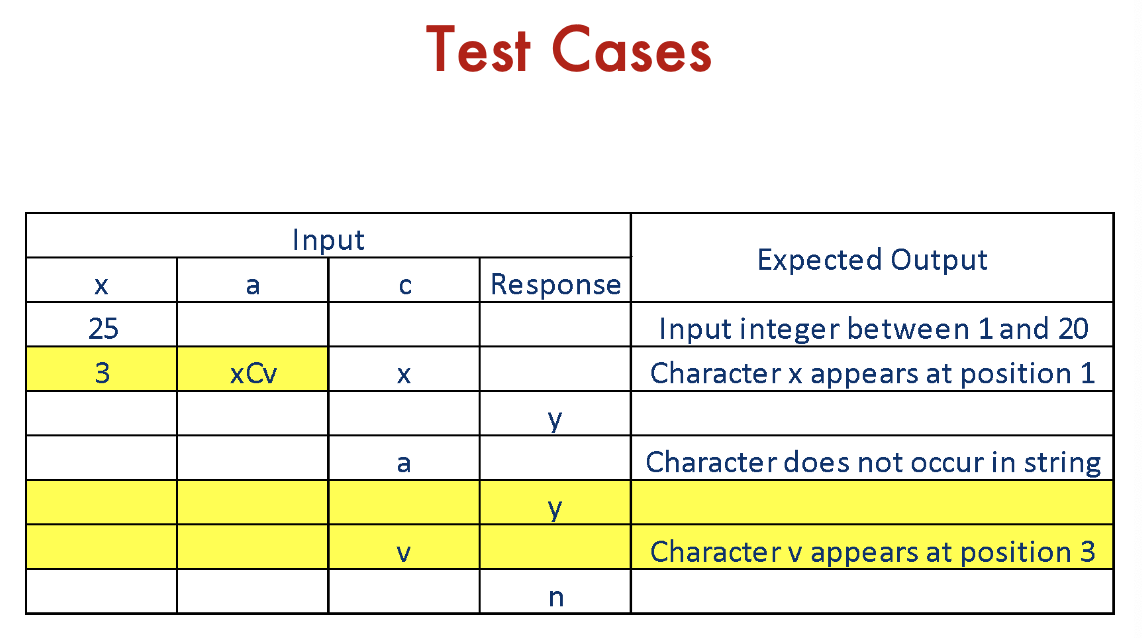

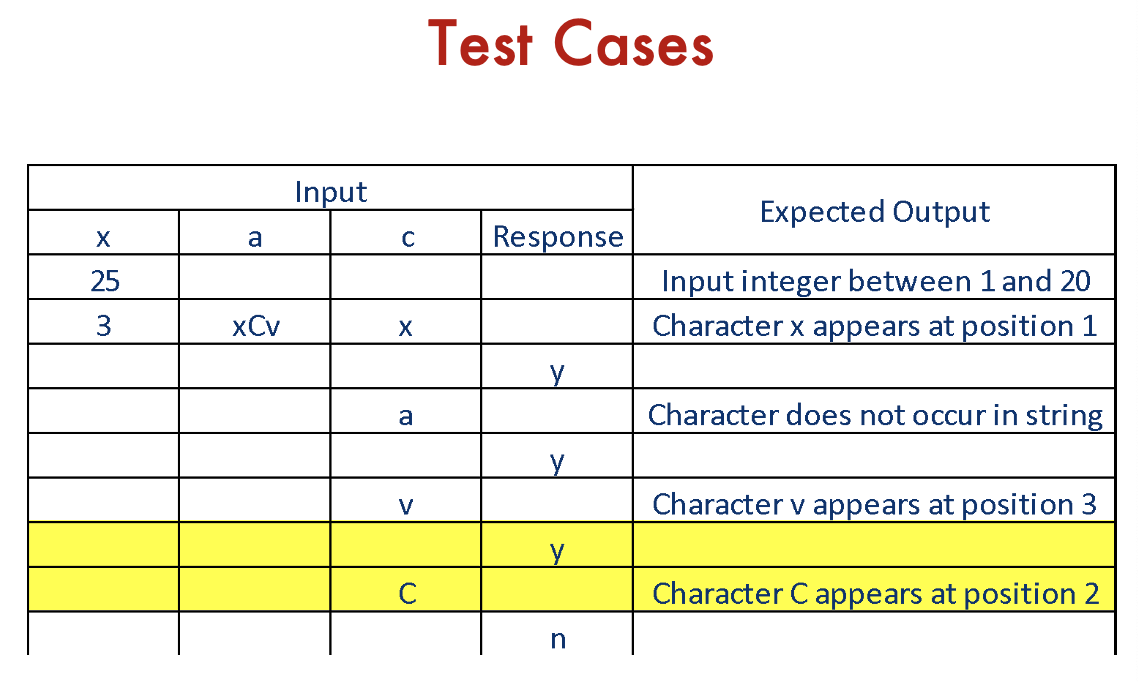

Another Simple Example

The program prompts the user for a positive number in the range of 1 to 20 and then for a string of that length. The program then prompts for a character and returns the position in the string at which the character was first found or a message indicating that the character was not present in the string. The user has the option to search for more characters.

- First input an array a with length

- Then input an array , search for

- Then say you want to continue

- then search for

char a - Then say you want to continue

Mutation Testing: Summary

- Measures the quality of test cases

- Assumption: in practice, if the software contains a fault, there will usually be a set of mutants that can only be killed by a test case that also detects that fault

- Provides the tester with a clear target (mutants to kill)

- Computationally intensive, a possibly very large number of mutants is generated

- Equivalent mutants are a practical problem: it is in general an undecidable problem

Discussion

- Probably most useful at unit testing level but research is progressing…

- Mutation operators for module interfaces (aimed at integration testing)

- Mutation operators on specifications: Petri nets, state machines… (aimed at system testing)

- Also very useful for assessing the effectiveness of test strategies:

- Assess with the help of the mutation score test cases generated according to different test strategies

- Use the mutation score as a prediction of fault detection effectiveness

What about non-functional bugs?

- Can we design mutants that introduces non-functional issues?

- A delay (like

wait()) in the code to introduce performance issues?

- How big the delay is appropriate?

- Would the two assumptions still hold for non-functional bugs?

- Developers only make small issues>

- Tests detects simple issues can detect complex issues?

- The infection and propagation are hard to define outside of the functional test domain.

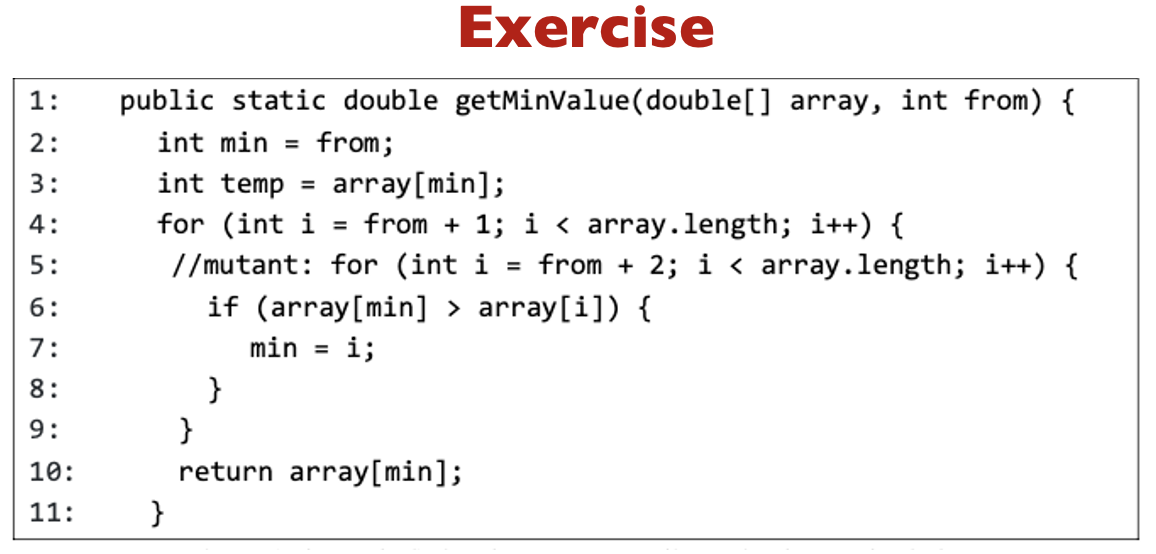

#todo

#todo

- If possible, find test inputs that do not reach the mutant. If it is impossible, explain why.

- If possible, find test inputs that satisfy reachability but not infection for the mutant. If it is not possible, explain why.

- If possible, find test inputs that satisfy reachability and infection, but not propagation for the mutant. If it is not possible, explain why.

- Find a mutant of line 4 that is equivalent to the original statement.

Relevant Readings:

- Jorgensen: Chapter 21

- Ammann & Offutt: Chapter 9

Week 4

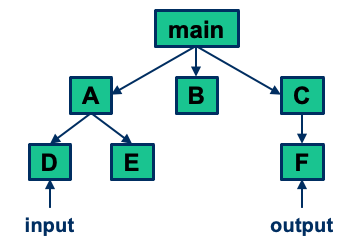

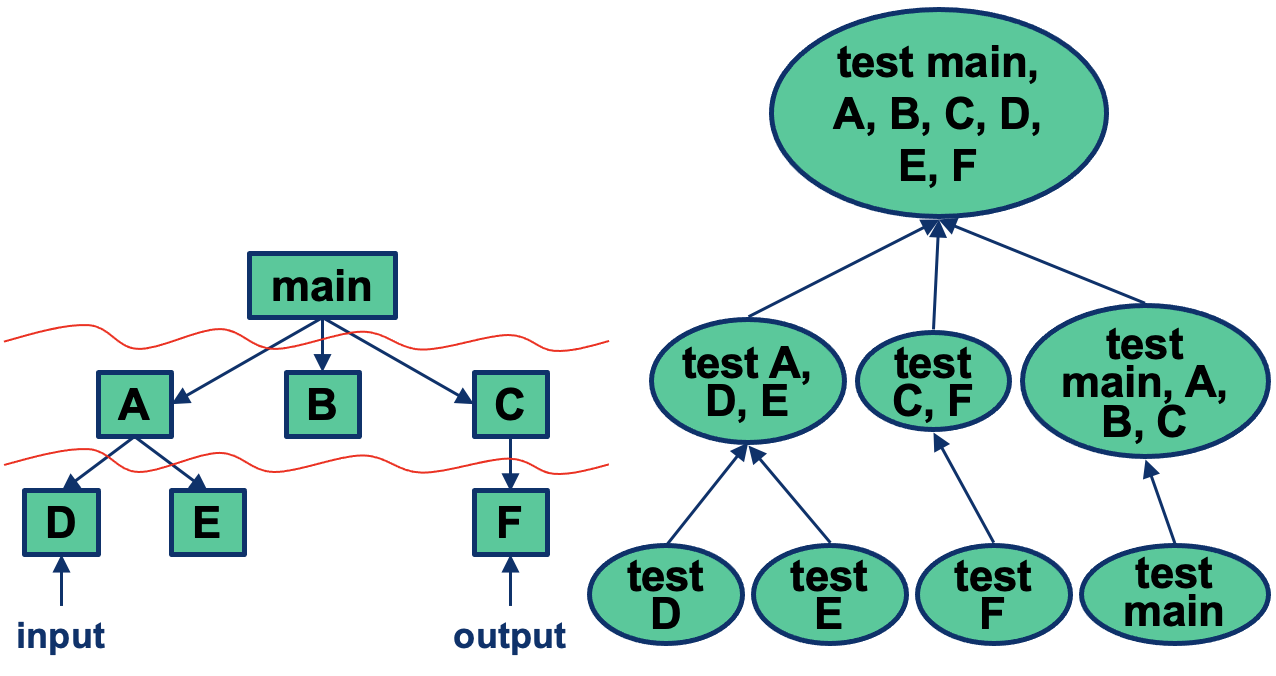

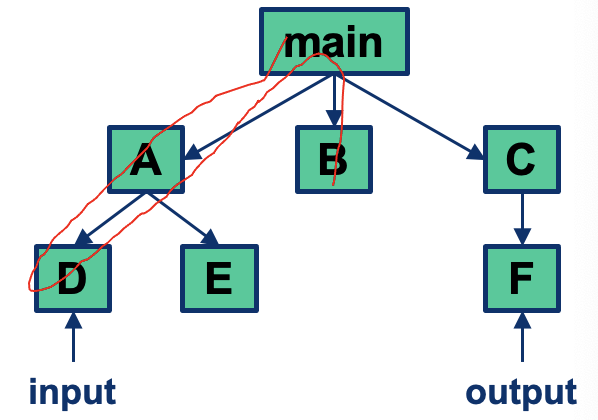

Integration Strategy

- How individual components are assembled to form larger program entities

- Problem 1: test component interaction

- Problem 2: determine optimal order of integration

- Strategy impacts:

- The form in which unit test cases are written

- The type of test tools to use

- The order of coding / testing components

- The cost of generating test cases

- The cost of locating and correcting detected defects

Integration Testing

Integration Testing

Goal: ensure components work in isolation and when assembled

Need a component dependency structure

Questions

- How do we ensure a component is effectively tested in isolation?

- Assuming all components work in isolation, what types of faults can be expected? mostly interface-related (e.g., wrong method called, wrong parameters, incorrect parameter values, use of version that is not supported)

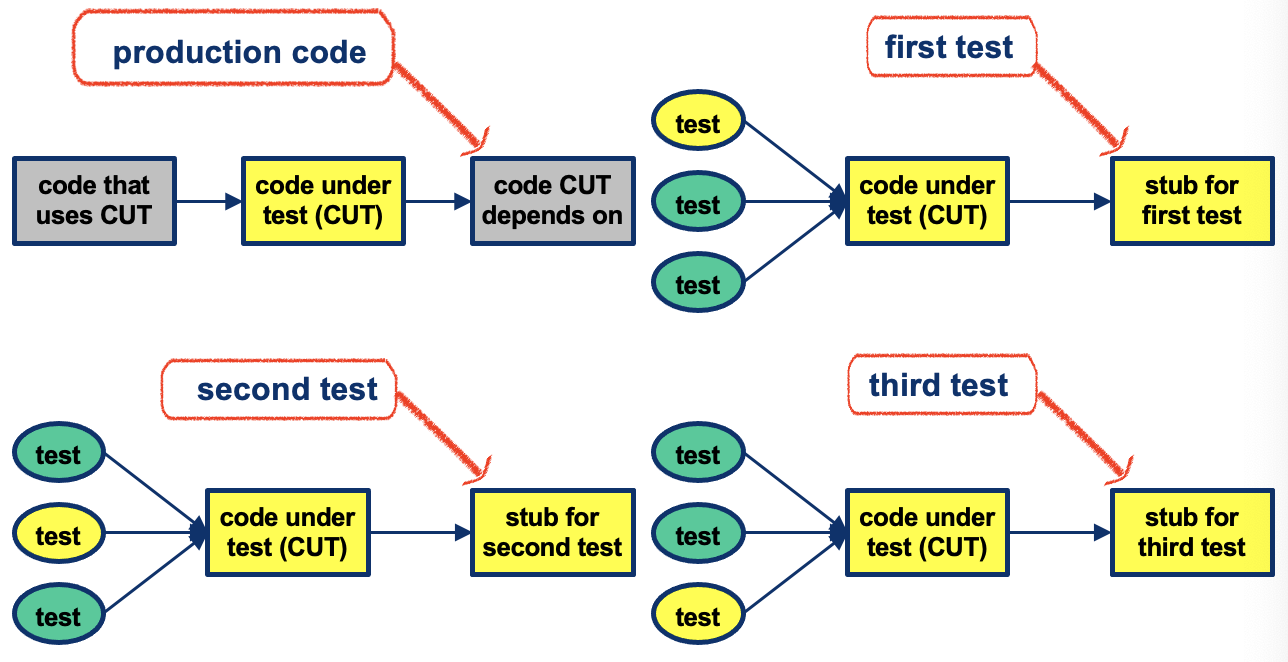

Integration Testing: Stubs

- Stub: replaces a called module

- Can replace whole components (e.g., database, network)

- Must be declared/invoked as the real module:

- Same name as replaced module

- Same parameter list as replaced module

- Same return type as replaced module

- Same modifiers (static, public or private) as replaced module

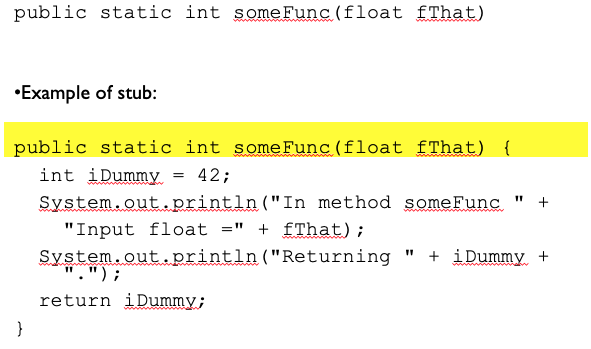

Example of stub:

So apparently, notice how it’s somehow isolating this component without needing the actually implementation of other parts. We have a dummy variable and we just want to know if it prints the given inputs out correctly.

- Common functions of a stub:

- Display / log trace message

- Display / log passed parameters

- Return value according to test objective - may be:

- Form a table

- From an external file

- Based on a search according to parameter value

- …

void main() {

1 int x, y;

2 x = A();

3 if (x > 0) {

4 y = B(x);

5 C(y);

} else {

6 C(x)

}

7 exit(0);

}- Test for path 1-2-3-4-5-7:

- Stub for

A()such thatx > 0returned

- Stub for

- Test for path 1-2-3-6-7

- Stub for

A()such thatx <= 0returned

- Stub for

- Problem:

- Stubs can become too complex

Integration Testing: Drivers

- Driver module used to call tested modules:

- Parameter passing

- Handling return values

- Typically, drivers are simpler than stubs

public class TestSomething {

public static void main (String[] args) {

float iSend = 98.6f;

Whatever what = new Whatever();

System.out.println("Sending someFunc: " + iSend);

int iReturn = what.someFunc(iSend);

System.out.println ("SomeFunc returned: " + iReturn);

}

}- A driver module, in the context of integration testing, is responsible for calling the tested modules or components and facilitating the integration process.

- The method

someFunc()of theWhateverinstancewhatis called withiSendas its argument. This is the point where integration testing occurs, as the driver module is interacting with thesomeFunc()method, which is likely part of a larger system or module. - Is the TestSomething the driver module?

- the driver module is the

TestSomethingclass. The purpose of this class is to act as a driver for testing thesomeFunc()method, which is likely part of another module or class (in this case, theWhateverclass).

- the driver module is the

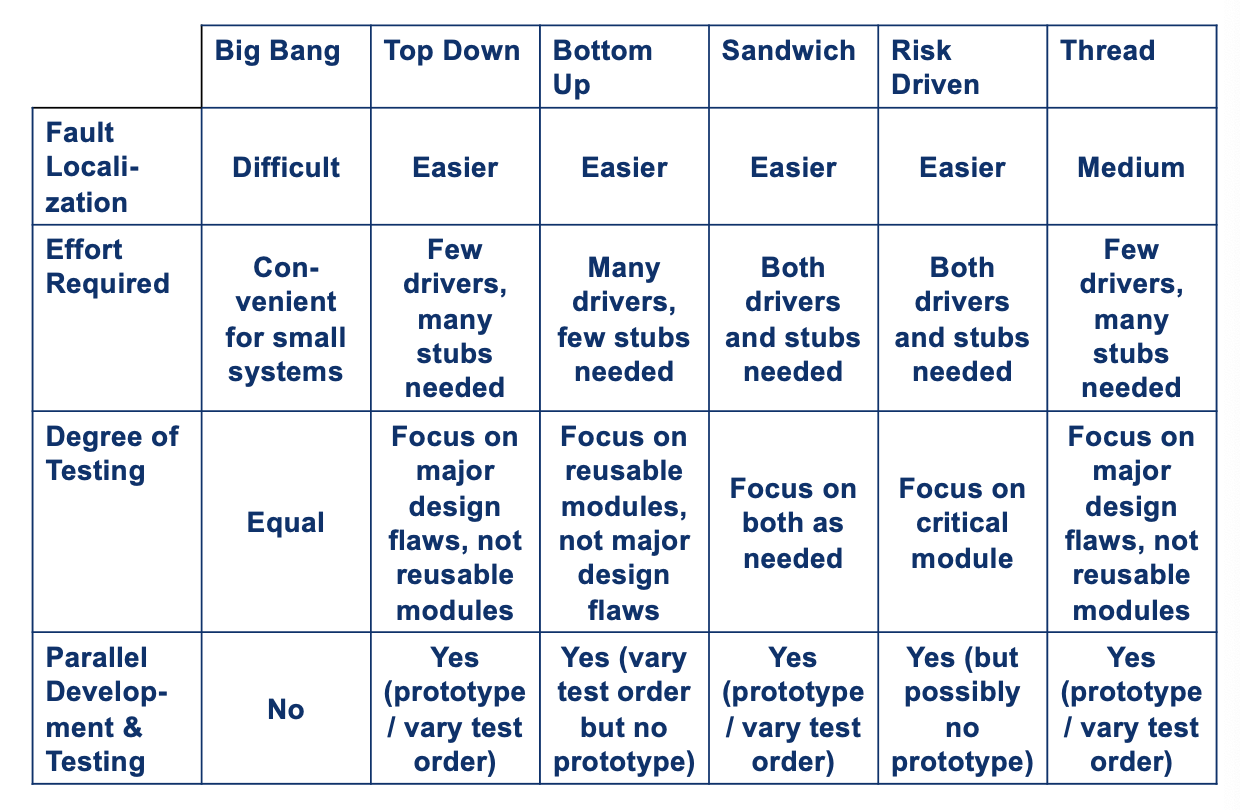

Integration Testing Strategies

- Different strategies for integration testing:

- Big Bang

- Top-Down

- Bottom-Up

- Sandwich

- Risk-Driven

- Function/Threaded-Based

- Comparison criteria:

- Fault localization

- Effort needed (for stubs and drivers)

- Degree of testing of modules achieved

- Possibility for parallel development (i.e., implementation and testing)

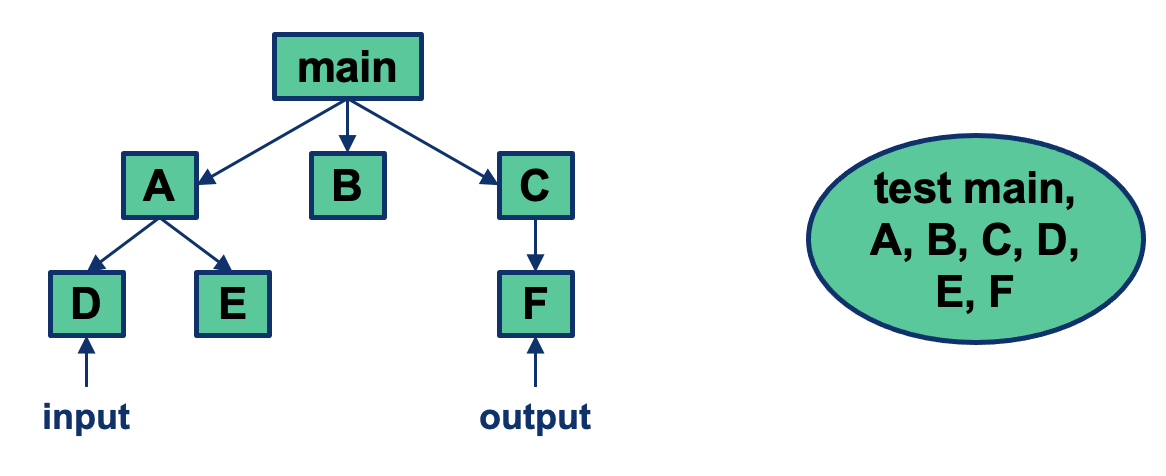

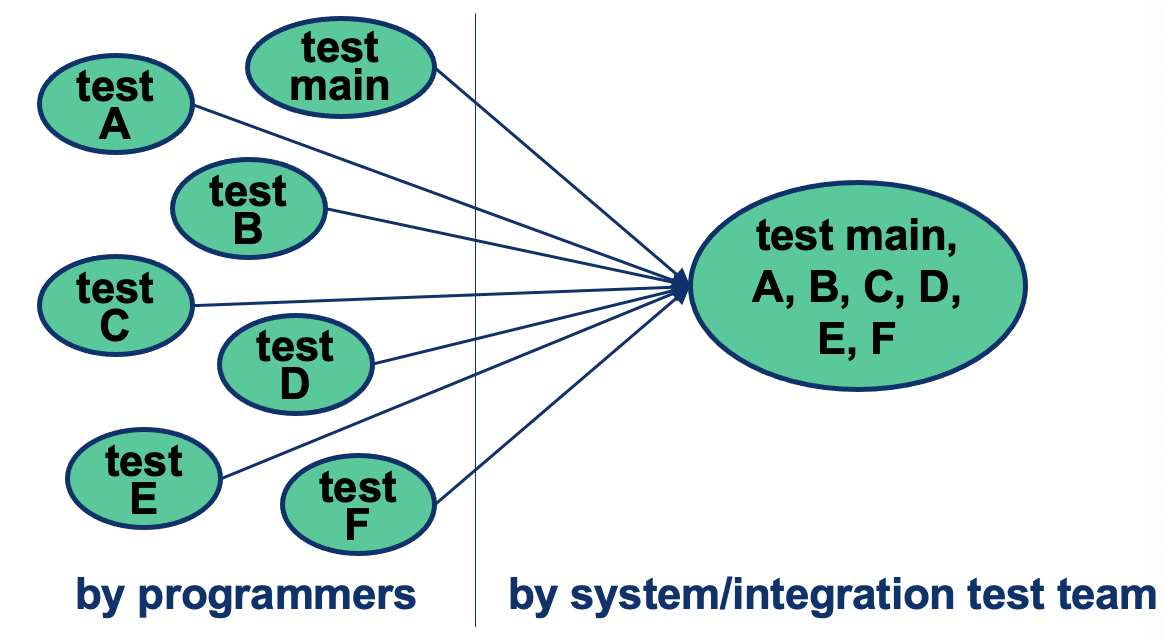

Big Bang Integration

- Non-incremental strategy

- Integrate all components as a whole

- Assumes components are initially tested in isolation (that’s the responsibility of programmers)

Advantages:

- Convenient for small/stable systems

Disadvantages:

- Does not allow parallel development (i.e., testing while implementing)

- Fault localization difficult

- Easy to miss interface faults

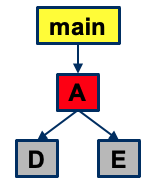

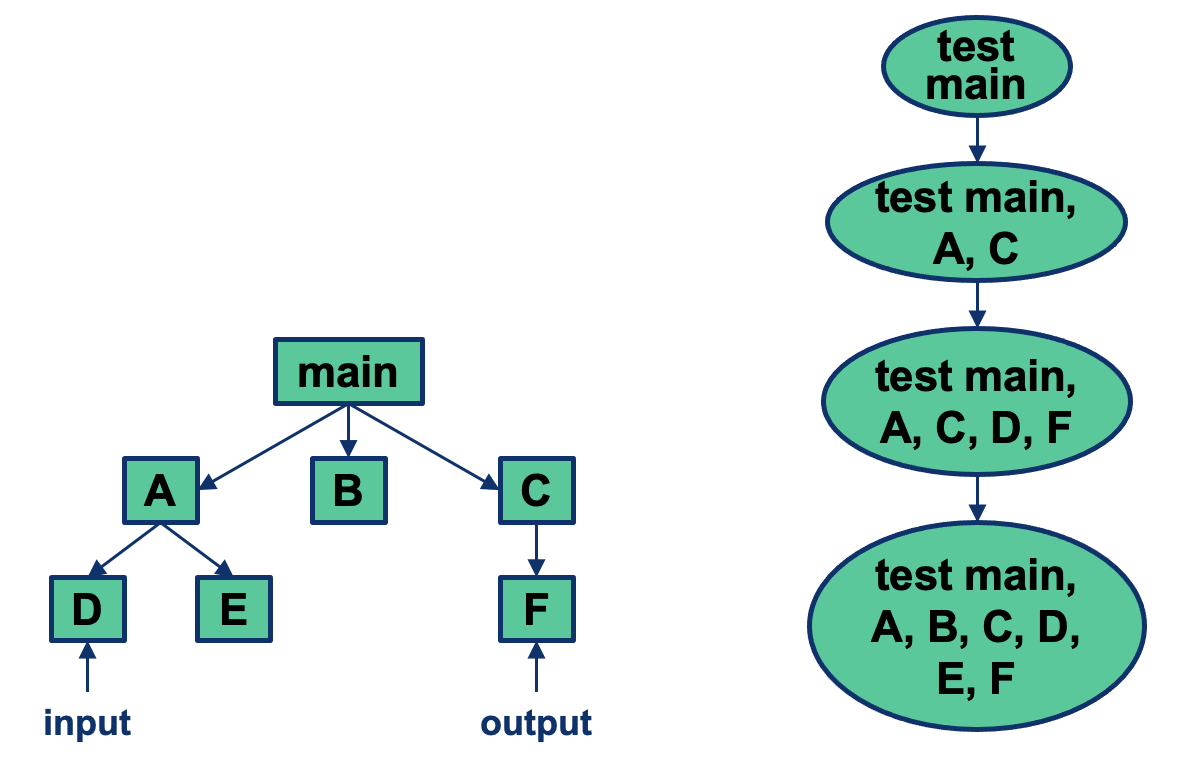

Top-Down Integration

- Incremental strategy

- Test high-level components, then called components until lowest-level components

- Possible to alter order such to test as early as possible:

- Critical components

- Input/output components

Advantages:

- Fault localization easier

- Few or no drivers needed

- Possibility to obtain an early prototype

- Testing can be in parallel with implementation

- Different order of testing/implementation possible

- Major design flaws found first (in logic components at the top of the hierarchy)

Disadvantages:

- Needs lots of stubs

- Potentially reusable components (at the bottom of the hierarchy) can be inadequately tested

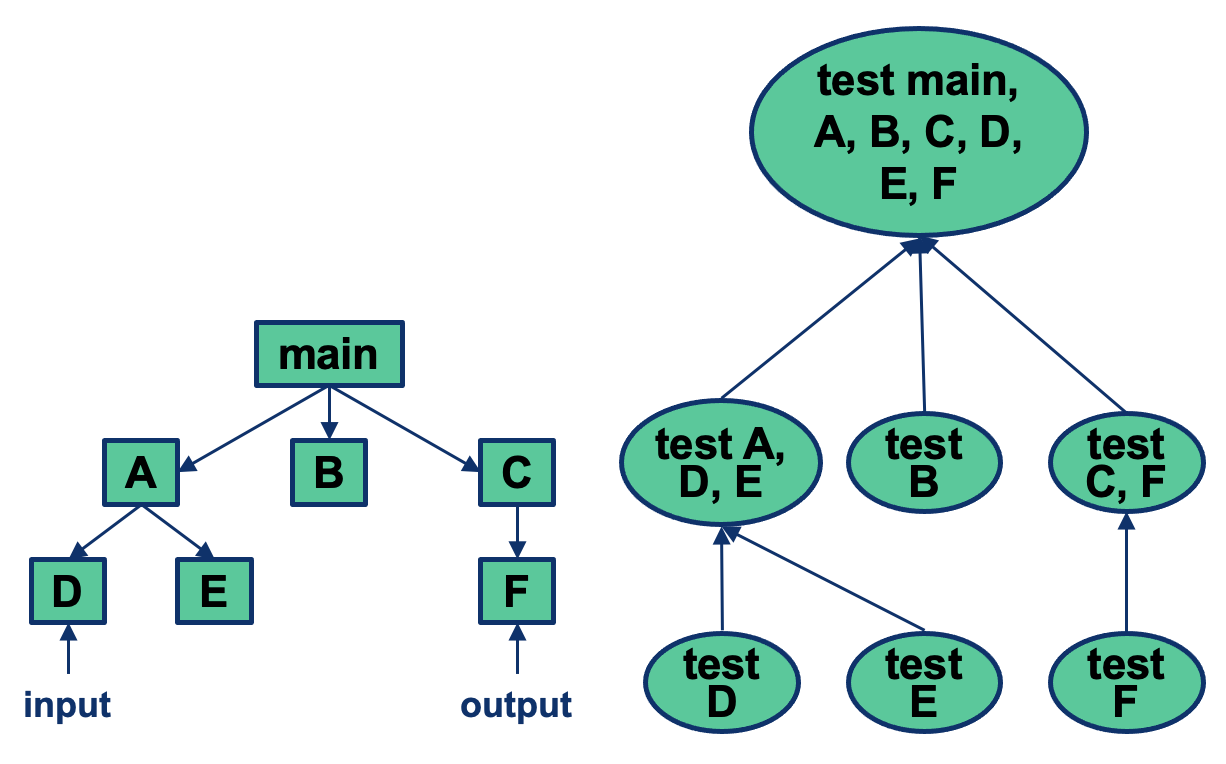

Bottom-Up Integration

- Incremental strategy

- Test low-level components, then components calling them until highest-level component

Advantages:

- Fault localization easier (than big-bang)

- No need for stubs

- Reusable components tested throughly

- Testing can be in parallel with implementation

- Different order of testing/implementation possible Disadvantages:

- Needs drivers

- High-level components (that relate to the solution logic) tested last (and least)

- No concept of early skeletal system

Sandwich Integration

- Combines top-down and bottom up approaches

- Distinguishes three layers:

- Logic (top) tested top-down

- Middle

- Operational (bottom) tested bottom-up

Test form main at start stubs out ABCDEF

Other Integration Testing Strategies

- Risk-driven integration:

- Integrate based on criticality (most critical or complex components integrated first with called components)

- Function/thread-based integration:

- Integrate components according to threads/functions they belong to

Testing Object-Oriented Systems

Object-orientation helps analysis and design of large systems, but based on existing data, it seems that more, not less, testing is needed for OO software.

Many unit-testing techniques are applicable to classes (e.g., state-based testing, control flow-based testing, data flow-based testing), but OO software has specific constructs that we need to account for during testing:

- Encapsulation of state

- Inheritance

- Polymorphism and dynamic binding

- Abstract classes

- Exceptions

Basically, OO helps design of large systems, but testing is more difficult!

- Unit and integration testing are especially affected as they are more driven by the structure of the software under test

- Unit and integration testing of classes is white-box testing, as it is using structural information of classes

Need specific techniques for OO systems

Recall

That the integration testing techniques have been based on functions… the scenario is more complex for classes.

Class vs. Procedure Testing

-

Testing single methods can be based on traditional unit testing techniques, but it is usually simpler than testing of functions (subroutines) of procedural software, since methods tend to be small and simple

-

In OO systems, most methods contain a few LOCs

- complexity lies in method interactions

-

Method behaviour is meaningless unless analyzed in relation to other operations and their joint effect on a shared state (data member values)

-

Testing classes poses new problems:

- The identification of behaviour to be observed, i.e., how to complement input/output relations with state information to define test cases & test oracles.

- The manipulation of objects state without violating the encapsulation principle (state is not directly accessible, but can only be accessed using public class operations)

- Polymorphism and dynamic binding leads to the test of one-to-many possible invocations of the same interfaces

- Each exception needs to be tested (e.g., analyze the code for possible null pointer exception)

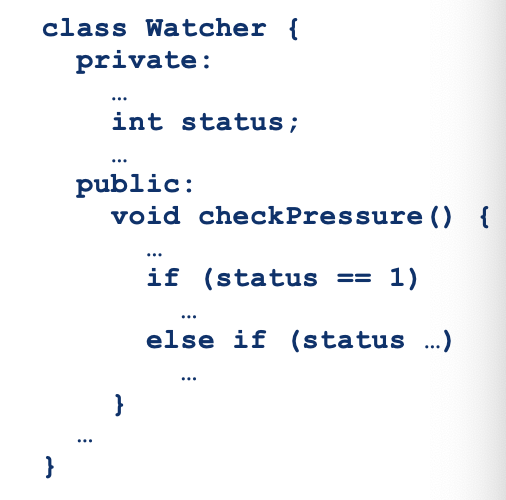

Example: Watcher

- Testing method

checkPressure()in isolation is meaningless:

- Generating test data

- Measuring coverage

- Creating oracle is more difficult:

- The value produced by

checkPressure()depends on the state of class Watcher’s instances (variable status)- Failures due to incorrect values of variable status can be revealed only with tests that have control and visibility on that variable.

New Fault Models

- Traditional fault taxonomies, on which control and data flow testing techniques are based, do not include faults due to object-oriented features

- New fault models are vital for defining testing methods and techniques targeting OO specific faults, e.g.,:

- WrongWrong instance of method inherited in the presence of multiple inheritance

- Wrong redefinition of an attribute / data member

- Wrong instance of the operation called due to dynamic binding and type errors

- There is a lack of statistical information on frequency of errors and costs of detection and removal

Basically saying it introduces new kinds of error that we need to address when testing classes!

OO Integration Levels

- Functions (subroutines) are the basic units in procedural software

- Classes introduce a new abstraction level

- Basic unit testing: the testing of a single operation (method) of a class (intra-method testing)

- Unit testing: the testing of methods within a class (intra-class testing) (integration of methods)

- It is claimed that any significant unit to be tested cannot be smaller than the instantiation of one class

Intra-class Testing (continued)

- Black box, white box approaches

- Data flow-based testing (but across several methods in one class)

- Each exception raised at least once

- Each interrupt forced to occur at least once

- Each attribute set and got at least once

- State-based testing

- Big bang approach indicated for situation where methods are tightly coupled

- Alpha-omega cycle (constructors, get methods, Boolean methods, set methods, iterators, destructors)

- Complex methods can be tested with stubs/mocks

Note

Destructors are generally not there in Java systems (exception is the finalize() method).

State-based testing⇒ not covered in these slides ⇒ Since the state of an object is implicitly part of the input and output of methods, we need a way to systematically explore object states and transitions. This can be guided by a state machine model, which can be derived from module specifications.

- Classes introduce different scopes of integration testing: the testing of interactions among classes (inter-class testing), related through dependencies, i.e., association, composition, inheritance

Cluster integration

- Integration of two or more classes through inheritance (cluster) incremental test of inheritance hierarchies

- Integration of two or more classes through containment (cluster)

- Integration of two or more associated classes / clusters to form a component (i.e., subsystem)

- Big bang, bottom-up, top-down, scenario-based

- Integration of components into a single application (subsystem/system integration)

- Similar techniques for cluster integration also applicable at this level (big bang, bottom-up, top-down, use case-based)

- Client/server integration

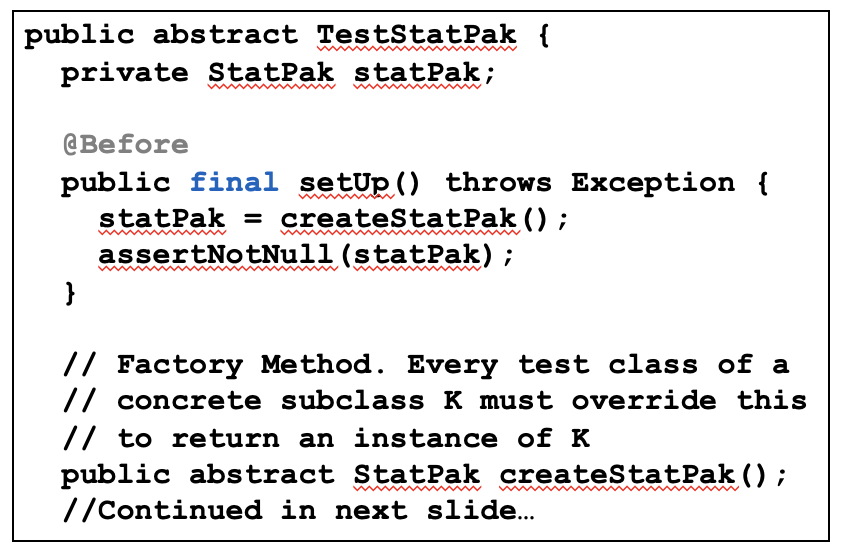

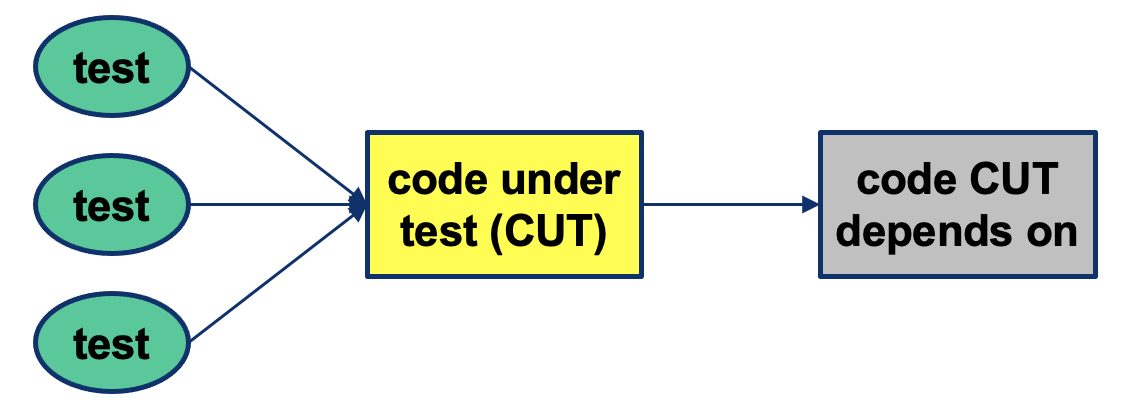

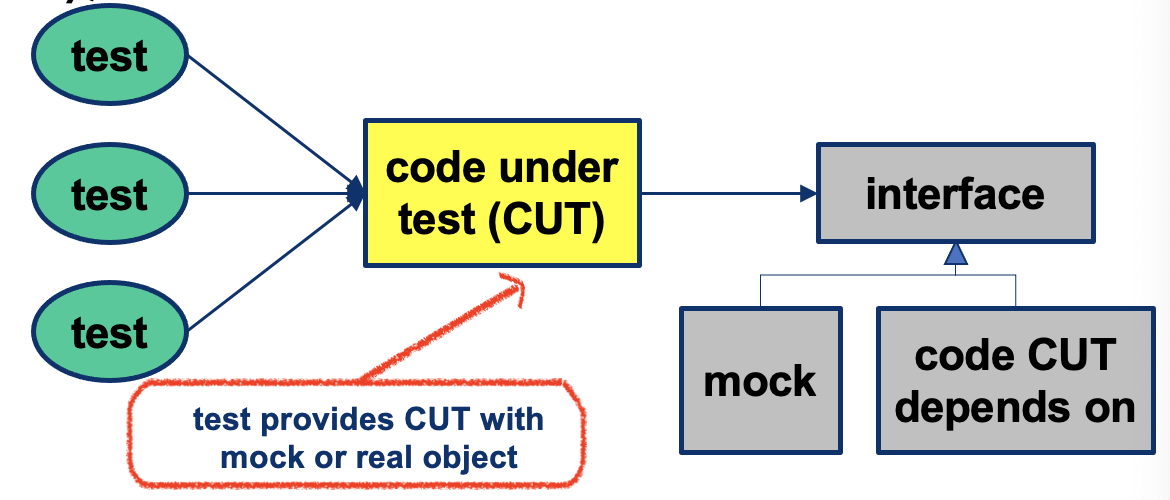

Mock Objects

Testing of OO systems requires drivers and stubs

- Difficult to flexibly stub dependent code without:

- Changing CUT

- Maintaining a library of stub objects

- Difficult to manage a library of stubs

Introduce Mock object (form of stubs):

- Based on interfaces

- Easier to setup and control

- Isolates code from details that may be filled in later

- Can be refined incrementally by replacing with actual code

- Based on dependency inversion principle (higher-level modules use interfaces as an abstract layer instead of lower-level modules directly)

- Test controls mock behaviour (sets up which one to use)

- Mock transparently replaces actual code

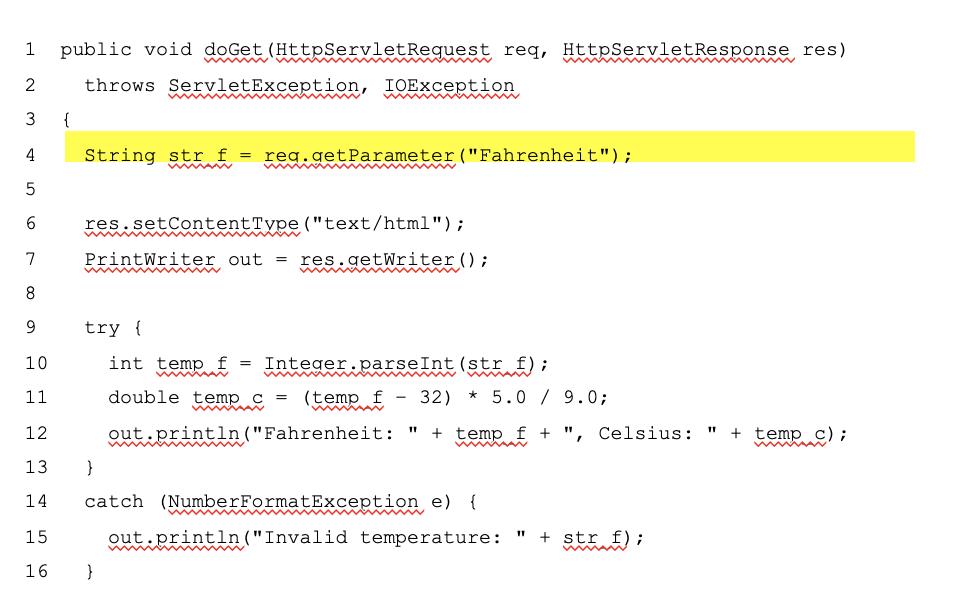

Mock Objects: Example

Testing a servlet:

- How to unit test without involving a web server, servlet container?

- How to automate testing?

- Not having to check results in a web browser?

- Use something like the JUnit framework?

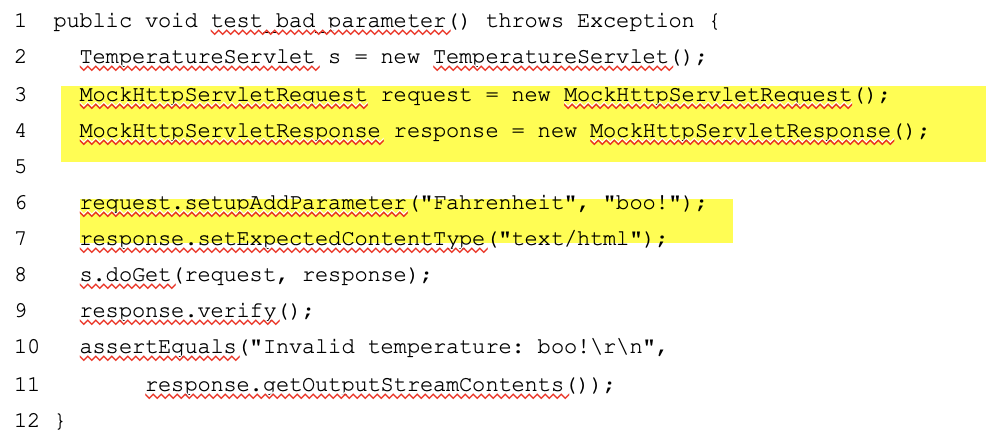

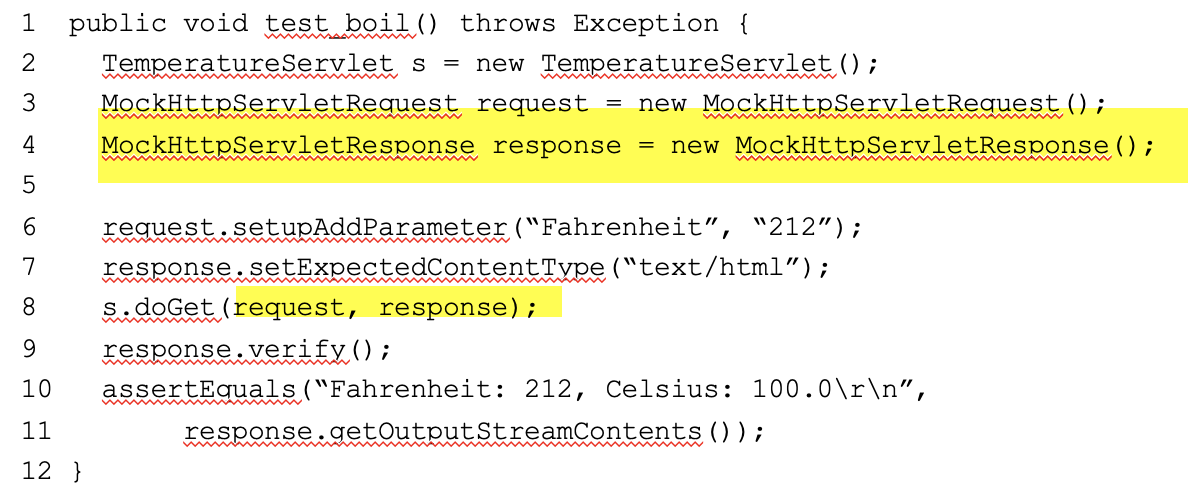

Mock Objects: Example (continued)

Mocks replace HttpServletRequest and HttpServletResponse when testing doGet:

Mocks replace HttpServletRequest and HttpServletResponse when testing doGet:

Mock Objects: Creation

- Manual creation:

- Advantage: more control

- Disadvantage: may introduce need for several classes, difficulties with existing infrastructure classes (e.g., JDBC)

- Popular frameworks:

- EasyMock

- Mockito

- Older frameworkds:

- iMock

- MockMaker

- MockObjects

- Mockrunner

Mockito

Characteristics of a Mock

- A mock object is a dummy implementation for an interface or a class in which you define the output of certain method calls. Mock objects are configured to perform a certain behaviour during a test. They typical the interaction with the system and test can validate that.

- Configure (mock or spy)

- Define output (stub)

- Call verify (verify interaction)

http://www.vogella.com/tutorials/Mockito/article.html

The Mockito Basics

- Create a Mock

- Verify

- Stubbing

- Iterating

- Argument Captor

- Spy

Creating a Mock

- A Mock has the same method calls as the normal object

- However, it records how other objects interact with it

- There is a Mock instance of the object but no real object instance

- List example

- Let’s import Mockito statically so that the code looks clearer

import static org.mockito.Mockito.*;- //mock creation

List mockedList = mock(List.class);- Or @Mock List mockedList;

Verify

- “Once created, a mock will remember all interactions. Then you can selectively verify whatever interactions you are interested in.”

- Let’s mock a List and use the add function

mockList.add(“one”);verify(mockedList).add(“one”);verify(mockedList).add(“two”);//will fail because we never called with this value

- You can verify number of invocations

verify(mockedList, times(2)).add(“one”);/ /will fail since called onceverify(mockedList, atLeast(2)).add(“one”);//will fail since called once

Verify no more interactions

- Checks if any of given mocks has any unverified interaction.

“//using mocks

mockedList.add("one");

mockedList.add("two");

verify(mockedList).add("one");

//following verification will fail verifyNoMoreInteractions(mockedList);

Stubbing

- Return the whatever value we passed in

- Or “By default, for all methods that return a value, a mock will return either null, a primitive/primitive wrapper value, or an empty collection, as appropriate. For example 0 for an int/Integer and false for a boolean/Boolean.”

- Examples

when(mockedList.get(0)).thenReturn("first");

when(mockedList.get(1)).thenThrow(new RuntimeException());

Basic Mock Conclusions

-

Verify is critical as it allows us to determine what was passed to a mocked method by the method under test

- Asserts only check returned values

- Verify checks that a method is called

-

Examples

verify(mockStorage).barcode("1A");

verify(mockDisplay).showLine("1A");

verify(mockDisplay).showLine("Milk, 3.99");

- We still use asserts when we test values of the class/method under test

- “when” allows us to stub our method

when(mockStorage.barcode("1A")).thenReturn("Milk, 3.99");

Stubbing Gotchas

- Once stubbed, the method will always return a stubbed value, regardless of how many times it is called.

- Last stubbing is more important - when you stubbed the same method with the same arguments many times.

//All mock.someMethod("some arg") calls will return "two"

when(mock.someMethod("some arg")) .thenReturn("one")

when(mock.someMethod("some arg")) .thenReturn("two")

- How would you Stub the Stack?

Stubbing consecutive calls (iterator-style stubbing)

when(mockedStack.pop()).thenReturn(3,2,1);

Verify InOrder

- Want to ensure that the interactions happened in a particular order

List singleMock = mock(List.class);

//using a single mock

singleMock.add("was added first");

singleMock.add("was added second");

//create an inOrder verifier for a single mock

InOrder inOrder = inOrder(singleMock);

//following will make sure that add is first called with "was added first", then with "was added second"

inOrder.verify(singleMock).add("was added first");

inOrder.verify(singleMock).add("was added second");

- // B. Multiple mocks that must be used in a particular order

- Check that the verify of the call of mockStorage to the barcode happens before the call of mockDisplay to showline

- Use the InOrder class

//the order of calls in mockDisplay and moStorage will be tracked together

InOrder inOrder = inOrder(mockDisplay, mockStorage);

inOrder.verify(mockStorage).barcode("1A");

inOrder.verify(mockDisplay).showLine(“Milk, 3.99");

Verify InOrder with verifyNoMoreInteractions

mock.foo(); //1st

mock.bar(); //2nd

mock.baz(); //3rd

InOrder inOrder = inOrder(mock);

inOrder.verify(mock).bar(); //2n

inOrder.verify(mock).baz(); //3rd (last method)

//passes because there are no more interactions after last method:

inOrder.verifyNoMoreInteractions();

//however this fails because 1st method was not verified: Mockito.verifyNoMoreInteractions(mock);

Argument Captor

- Capture the value that is passed in

- Essential call verify and ask what was passed in

- Set it up for barcode(String barcode)

ArgumentCaptor<String> argCaptor = ArgumentCaptor.forClass(String.class);

- Capture what was passed to barcode

verify(mockStorage).barcode(argCaptor.capture());

//this line verifies the barcode function is called and remembers what is the input for the bar code function

- Use that arg value later to verify what was displayed

verify(mockDisplay).showLine(argCaptor.getValue());

// to ensure the same values are called in both barcode and showLine

Spying on real objects

- You can create spies of real objects. When you use the spy then the real methods are called (unless a method was stubbed).

- Let’s spy the LinkedList

List list = new LinkedList();

List spy = spy(list);

//optionally, you can stub out some methods:

when(spy.size()).thenReturn(100);

//using the spy calls *real* methods

spy.add("one");

- Spys have lots of Gotchas so avoid as much as possible

- Use only when you have legacy code that you can’t completely Mock out

Some Additional Tutorials

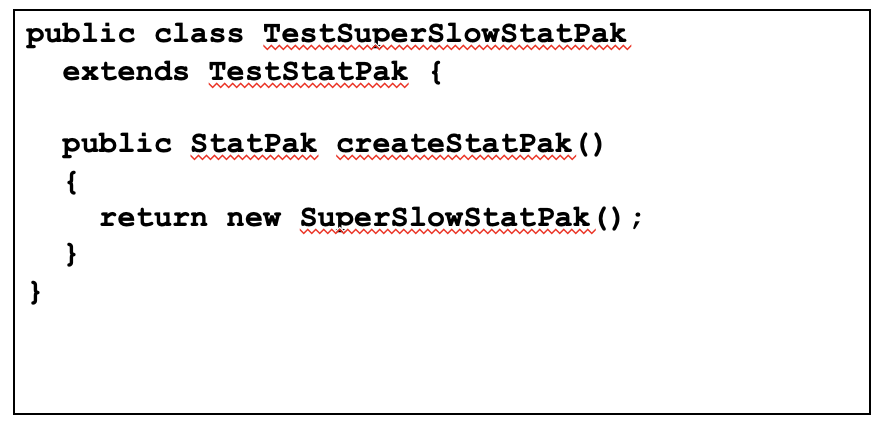

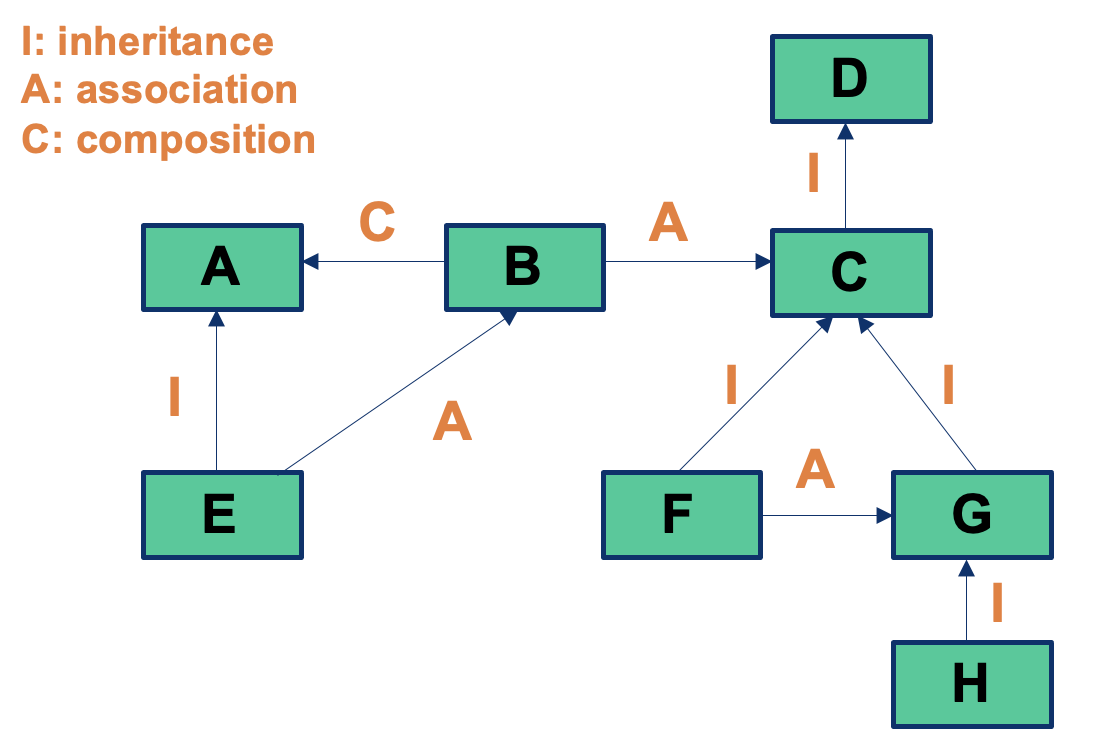

OO Integration

OO Integration Levels

- Classes introduce different scopes of integration testing: the testing of interactions among classes (inter-class testing), related through dependencies, i.e., association, composition, inheritance

Cluster integration

- Integration of two or more classes through inheritance (cluster) incremental test of inheritance hierarchies

- Integration of two or more classes through containment (cluster)

- Integration of two or more associated classes / clusters to form a component (i.e., subsystem)

- Big bang, bottom-up, top-down, scenario-based

- Integration of components into a single application (subsystem/system integration)

- Similar techniques for cluster integration also applicable at this level (big bang, bottom-up, top-down, use case-based)

- Client/server integration

Testing and Inheritance

- Should you retest inherited methods?

- Can you reuse superclass tests for inherited and overridden methods?

- To what extend should you exercise interaction among methods of all superclasses and of the subclass under test?

Inheritance

- In the early years people thought that inheritance will reduce the need for testing

- Claim 1: “If we have a well-tested superclass, we can reuse its code (in subclasses, through inheritance) with confidence and without retesting inherited code”

- Claim 2: “A good-quality test suite used for a superclass will also be sufficient for a subclass” Both claims are wrong.

Integration and Inheritance

- Makes understanding code more difficult

- Instance variables similar to global variables with respect to individual methods

- Test suite for a method almost never adequate for overriding methods

- Necessary to test inherited method in the context of inheriting classes

- Accidental reuse (inherited method not built for reuse)

- Multiple inheritance (multiple test cases needed, possibility of incorrect binding, repeated inheritance)

- Abstract classes need instantiation

- Generic classes

Interactions you may miss #1: Inherited methods should be retested in the context of a subclass

- Modifying a superclass: we have to retest its subclasses (expected)

- Add a subclass (or modify an existing subclass): we may have to retest the methods inherited from each of its ancestor superclasses, because subclasses provide new context for the inherited methods

- No problems if the new subclass is a pure extension of the superclass:

- Adds new instance variables and methods but there are no interactions in either directions between the new instance variables and methods and any inherited instance variables and methods

Add a subclass (or modify an existing subclass): excepted case

Testing of Inheritance

- Principle: inherited methods should be retested in the context of a subclass

- Example: if we change some method m in a superclass, we need to retest m inside all subclasses that inherit it

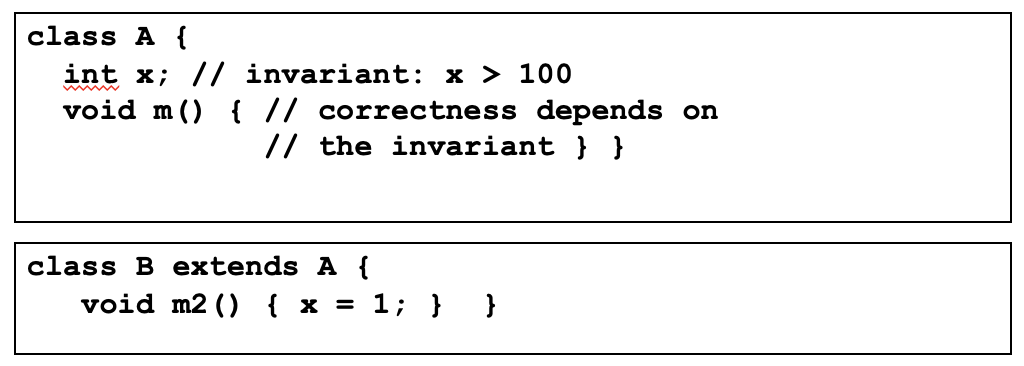

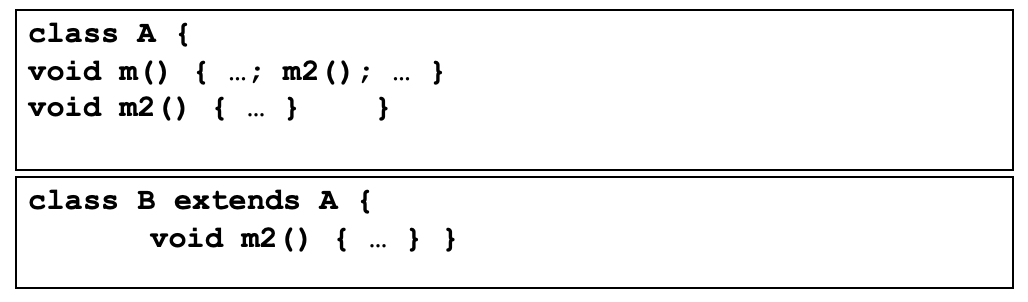

Inheritance: Simple Example

inherited methods should be retested in the context of a subclass

If we add a new method m2 that has a bug and breaks the invariant, method m is incorrect in the context of B even though it is correct in A

- Therefore, m should be tested in B

- The example illustrates that even if a method is correct in the superclass, it may become incorrect in the subclass if the subclass introduces changes that affect the behaviour or assumptions of the inherited methods.

- Therefore, retesting inherited methods in the context of subclasses ensures the correctness and integrity of the software’s behaviour.

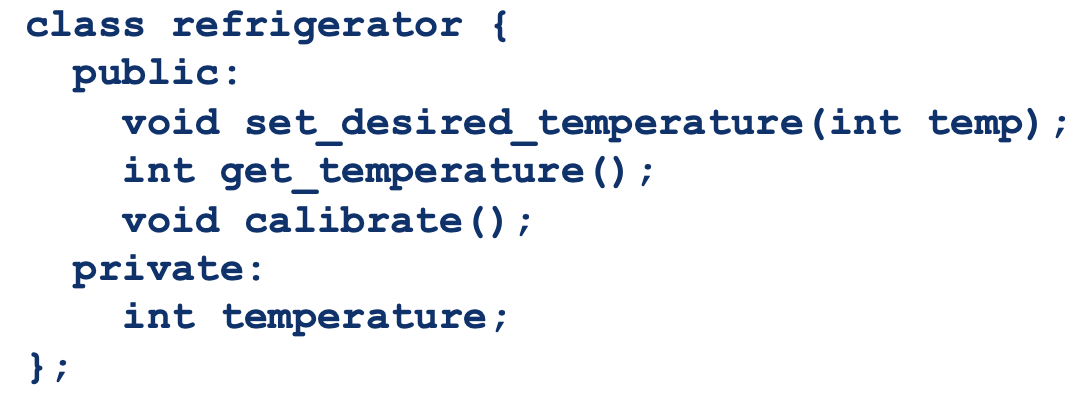

Inheritance: Another Example

set_desired_temperature()allows the temperature to be between 5°C and 20°Ccalibrate()puts the actual refrigerator through cooling cycles and uses sensor readings to calibrate the cooling unit.- A new more capable model of refrigerator is created and can cool to – 5°C

- class

better_reefrigerator- a new version of

set_desired_temperature()calibrate()is unchanged- Should

better_refrigerator::calibrate()be retested? It has the exact same code?

- Yes, it has to be re-tested!

- Supposed that calibrate works by dividing sensor readings by temperature

- In

better_refrigerator, it is possible that temperature is 0°C causes a divide by 0 failure incalibrate()which cannot happen in refrigerator

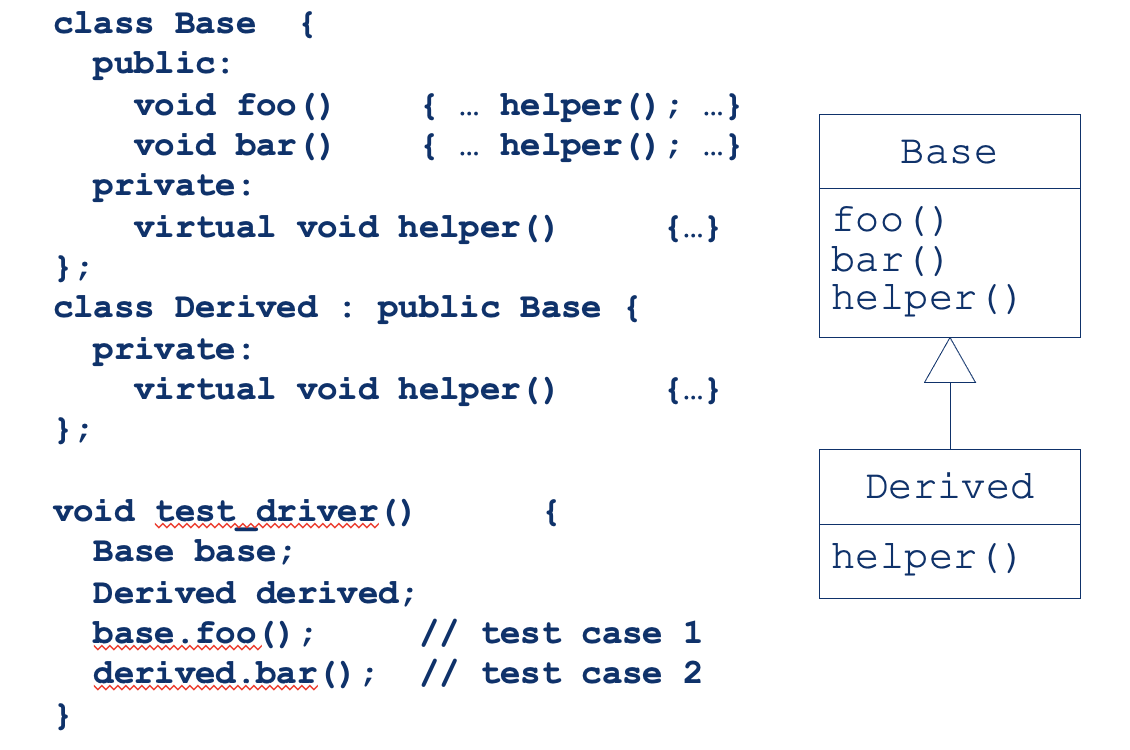

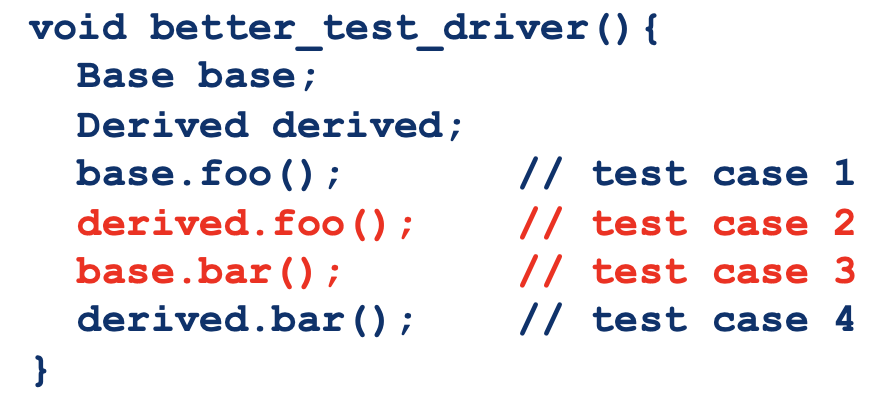

Interactions you may miss #2: Overriding of Methods

- OO languages allow a subclass to replace an inherited method with a method of the same name

- The overriding subclass method has to be tested but different test sets are needed!

- Reason 1: if test cases are derived from a program structure (data and control flow), the structure of the overriding method may be different

- Reason 2: the overriding method behaviour (input/output relation) is also likely to be different

Inheritance: Simple Example

- If inside B we override a method from A, this indirectly affects other methods inherited from A.

- e.g., method m calls B.m2, not A.m2: so we cannot be sure that m is correct anymore and we need to retest it inside B

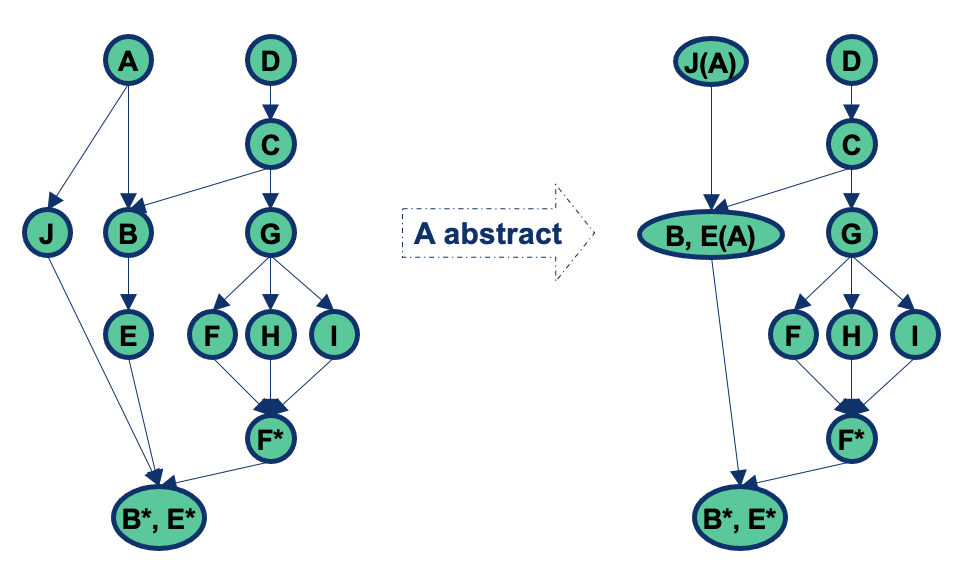

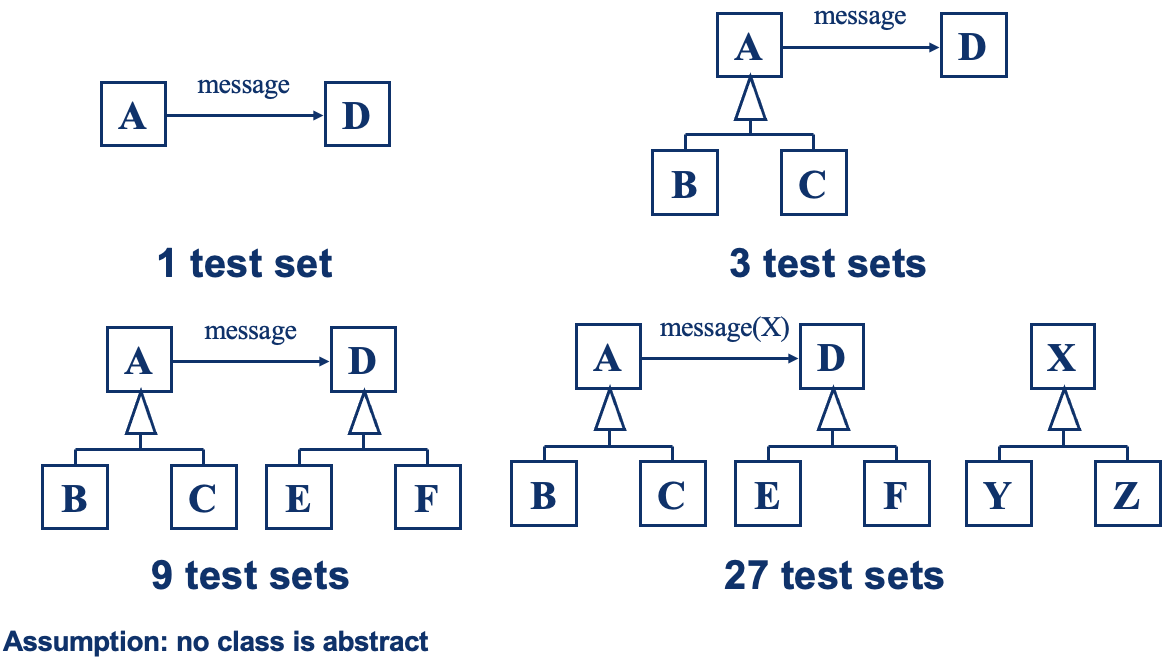

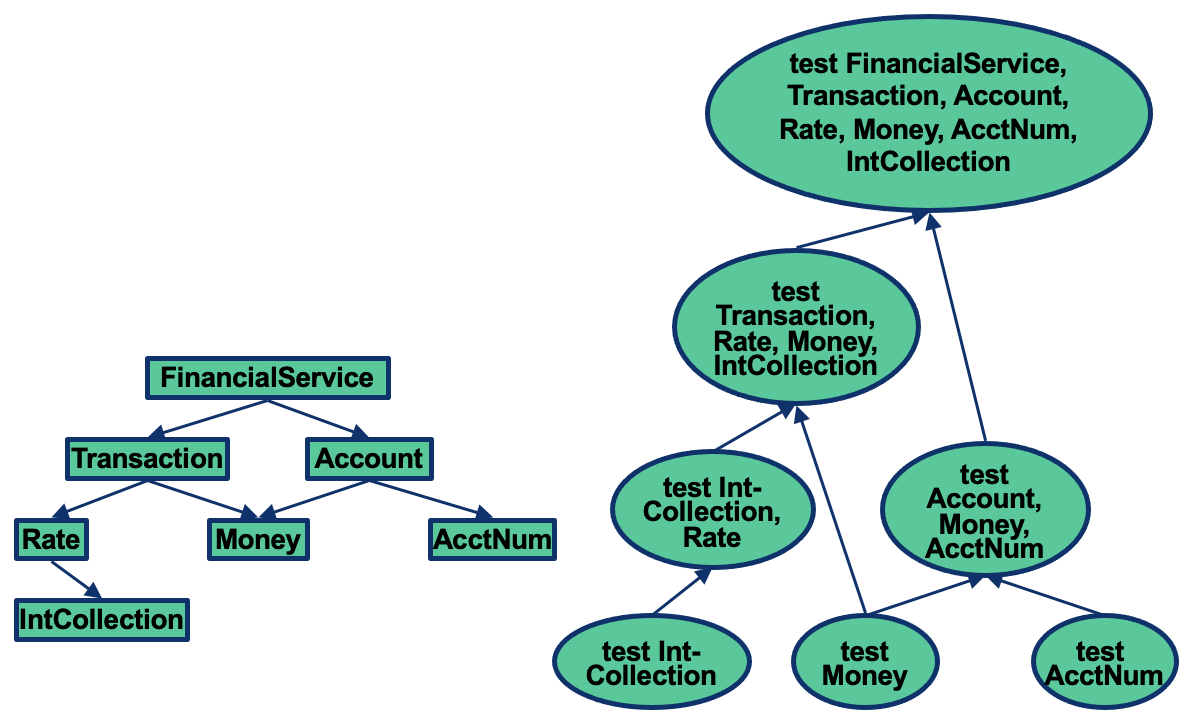

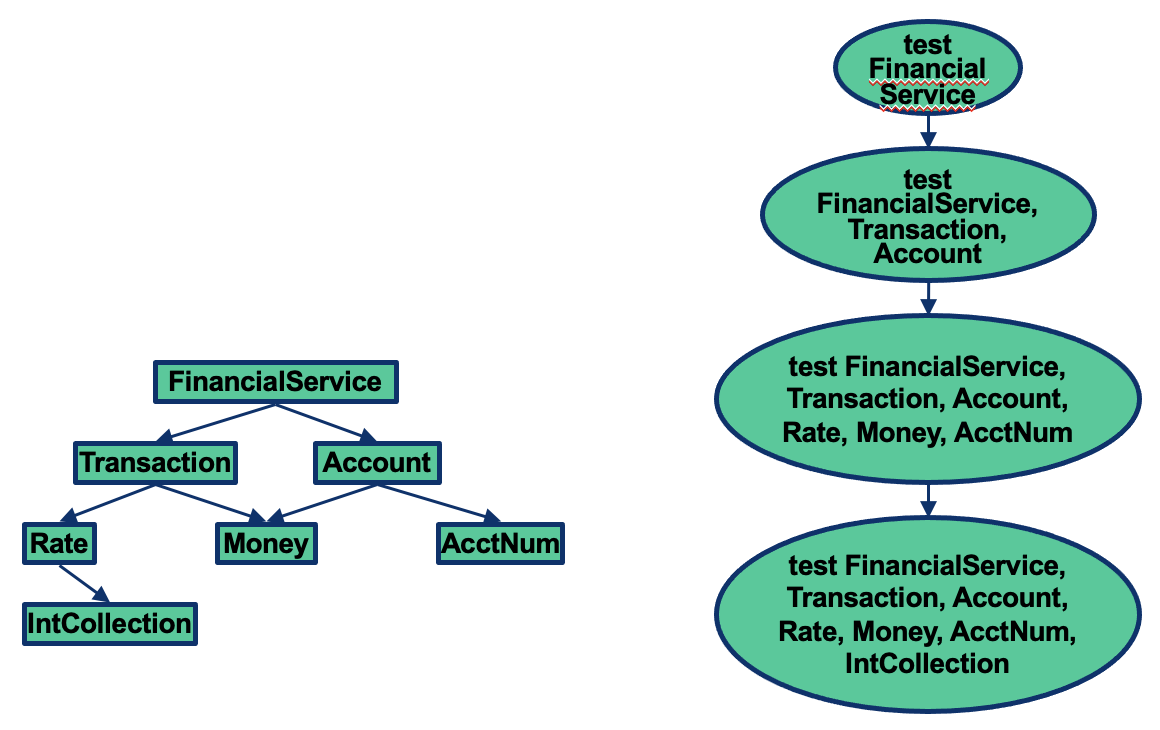

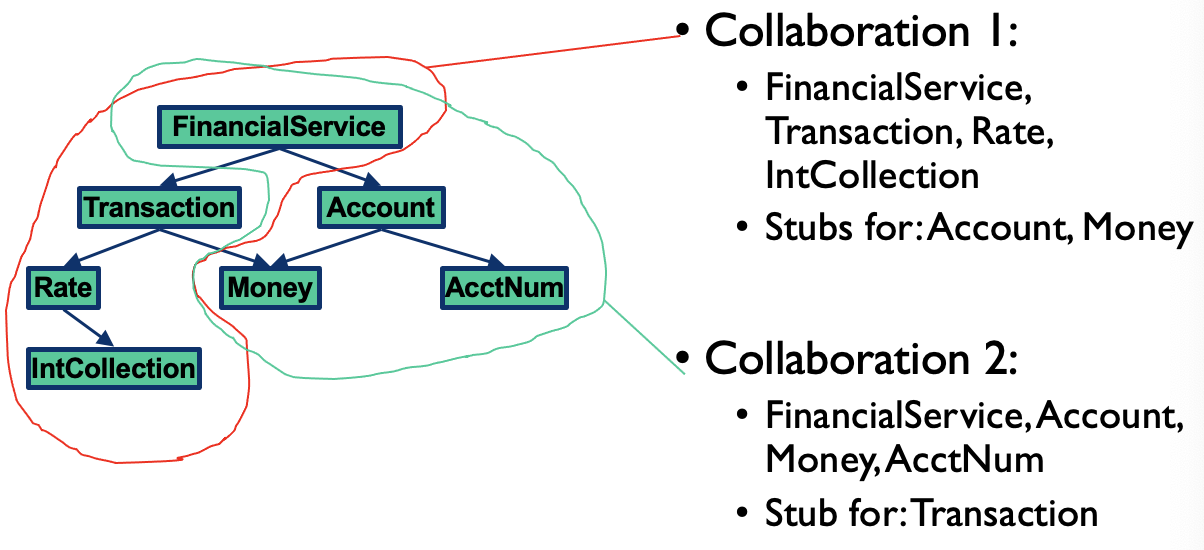

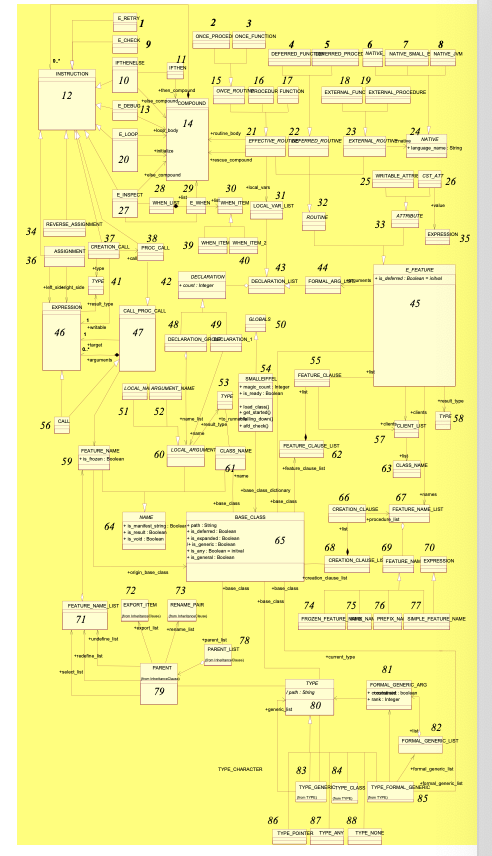

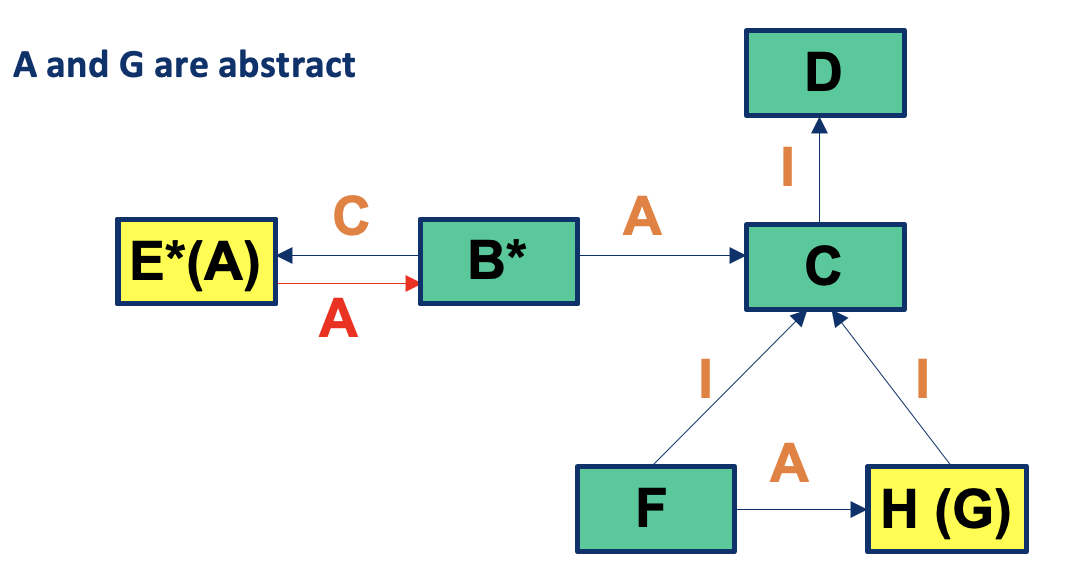

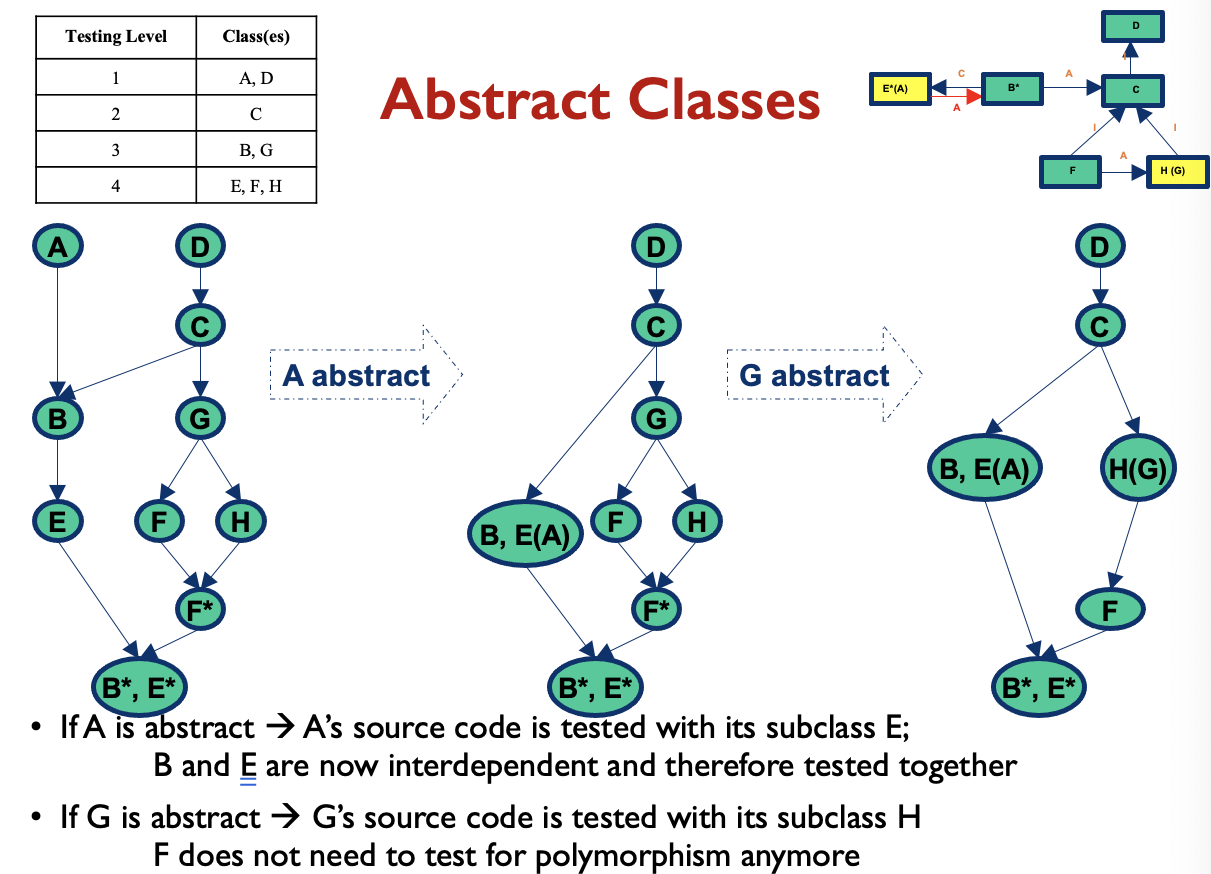

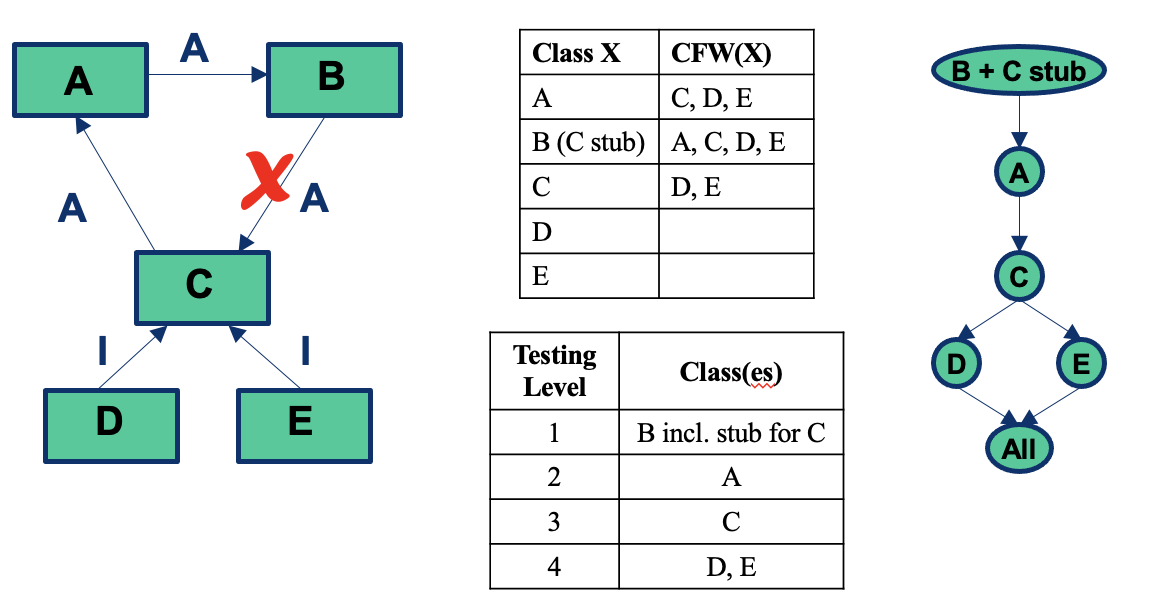

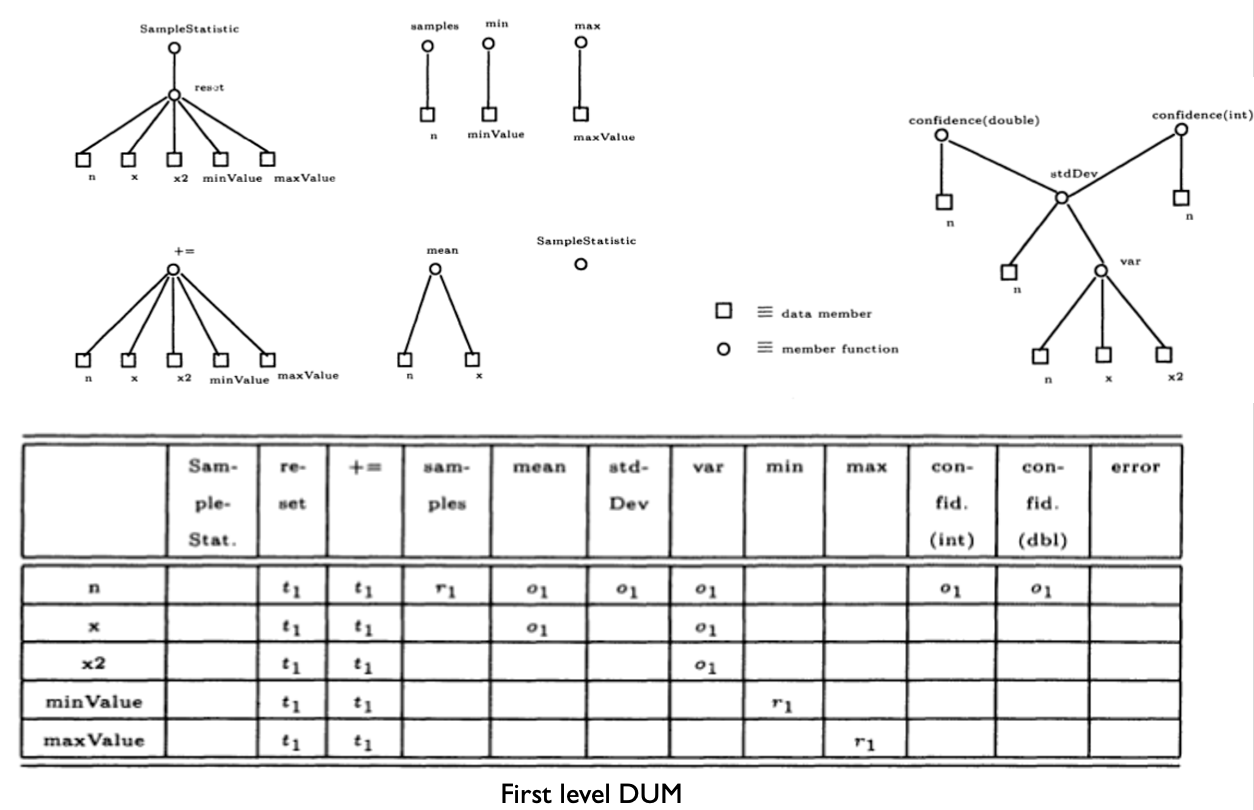

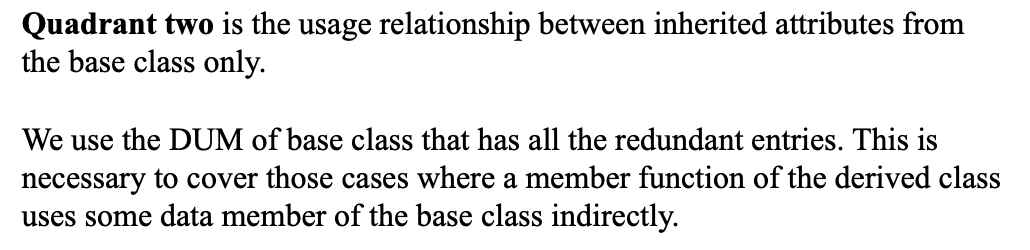

- Test cases developed for a method m defined in A are not necessarily sufficient for retesting m in subclasses of A