MATH213: Advanced Mathematics for Software Engineers

Taken in Winter 2024. Taught by Dr. Matthew Harris. Office EIT 4018. (Really important for SE380)

Course Description: Laplace transform methods for: solving linear ordinary differential equations, classical signals, and systems. Transfer functions, poles, and zeros; system stability. Frequency response of linear systems and its log-scale representation (Bode plot). Fourier series. Applications in areas of interest for software engineers and computer scientists. Brief introduction to Fourier transforms in the context of signals and systems.

Course website: https://ece.uwaterloo.ca/~math213/index.html. But everything is on Learn. Matthew Harris’ notes are enough.

Final

- Laplace transform

- Fourier transform

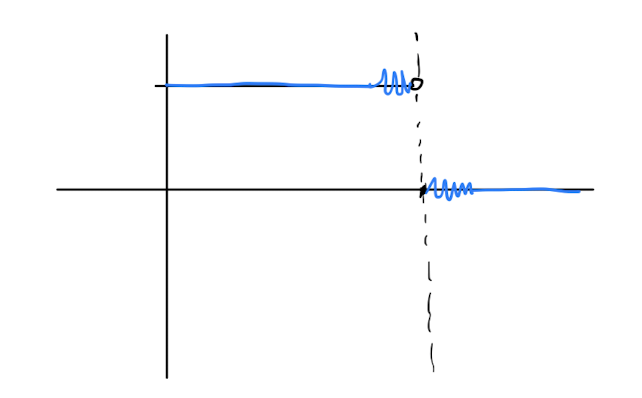

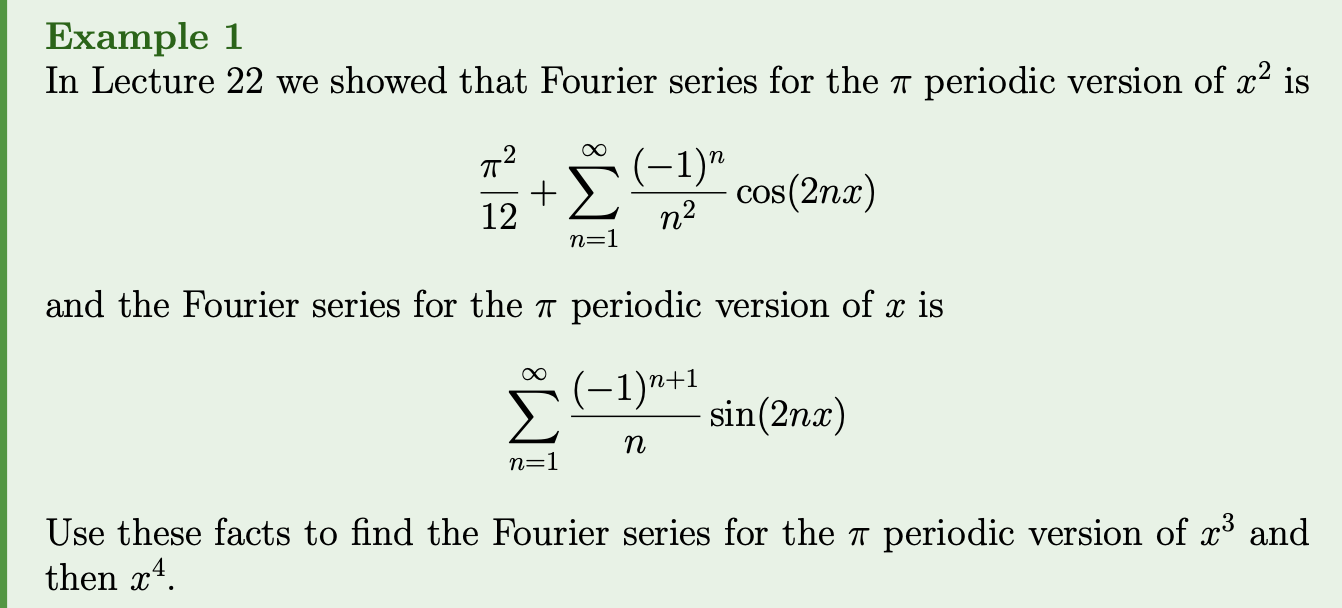

- Here is a Fourier series; use it to find the Fourier series of another function

- Integration tricks

- Whether a function converges

- Difficulty level of homework (tutorial)

- Can also ask Laplace stuff

- True and false questions

- Mostly computations; system properties: is it linear, is it causal, etc.

Midterm

https://tutorial.math.lamar.edu/Classes/DE/LaplaceIntro.aspx

Review:

- Partial Fraction integrals

- Integration by parts

- Lecture 1 and 2

- Lecture 3 and 4

- Lecture 5

- Lecture 6

- Lecture 7

- Lecture 8

- Lecture 9

- Lecture 10

- Lecture 11 - midterm review

- All tutorials

- Properties of Laplace transform

Messed up entirely on the question asking us to perform Convolution Theorem. Instead did it by definition…

Concepts

- Differential Equations

- Ordinary Differential Equations

- Laplace Transform

- Inverse Laplace Transform

- Laplace Table

- Convolution

- Initial Value Theorem

- Transfer Function

- Final Value Theorem

- Signal

- Bifurcation Diagram

- Fourier Series

A5: Up to Lecture 18? L19 helps with drawing plots and Q4c-d (which are quick after you have the results from a-b) need results from L19 to complete. That said if you think about what the plots mean you can likely work out the needed results pretty quickly.

Lecture 1

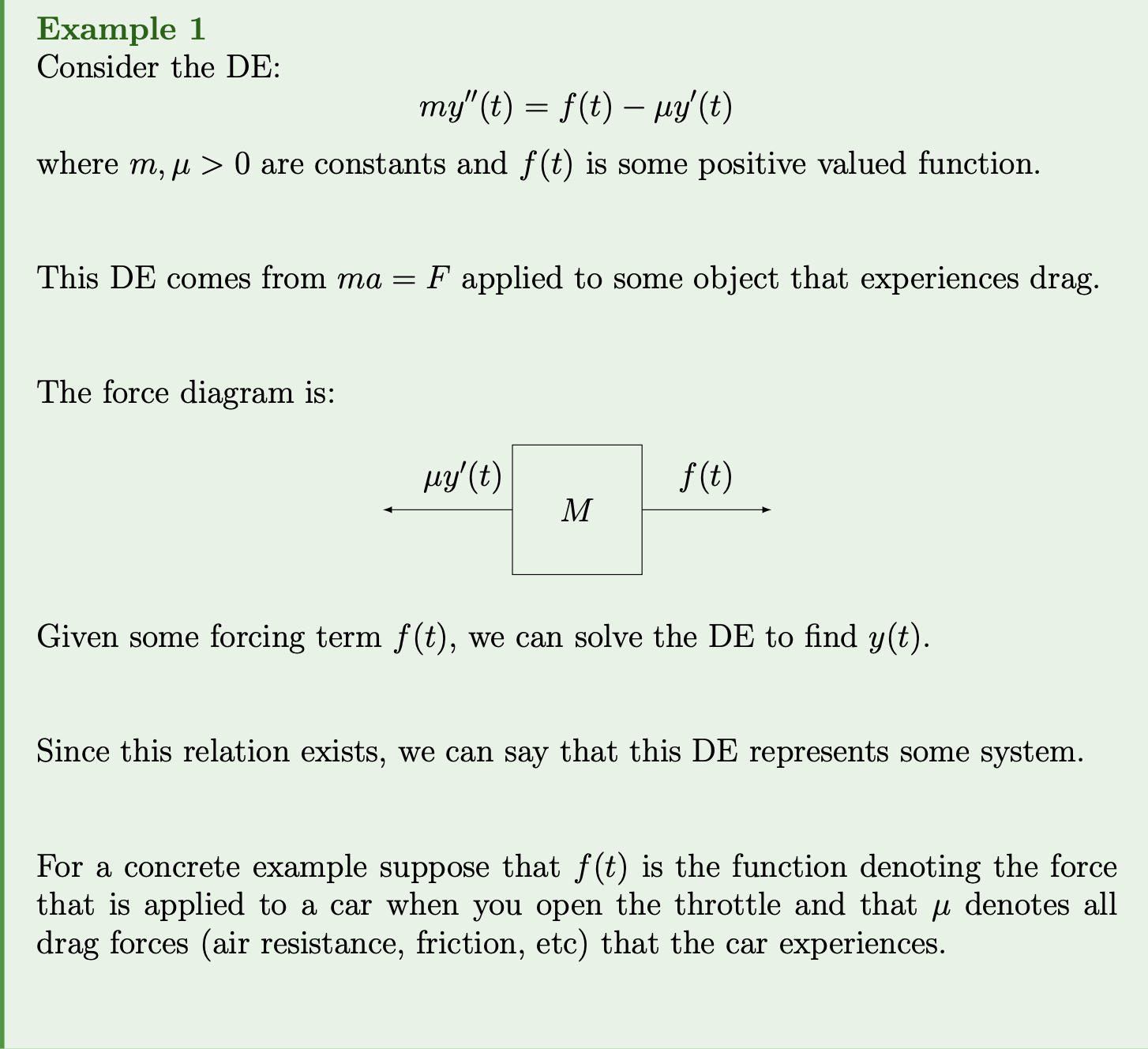

What are DEs and where do they come from?

Introduction to Differential Equations (DEs).

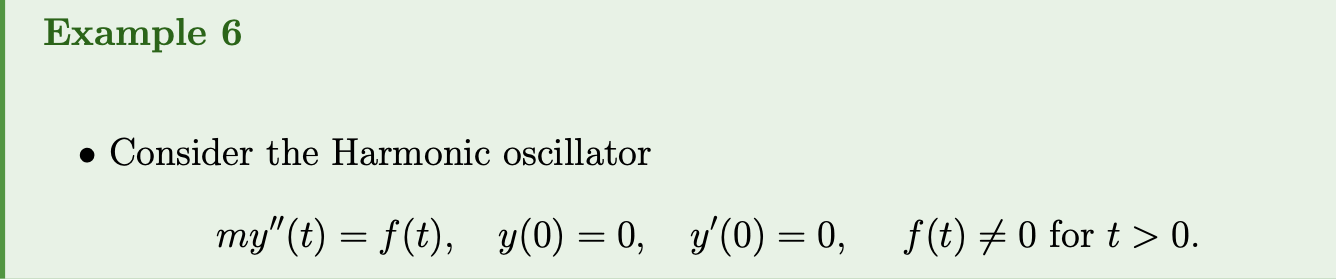

Not so sure about Example 6. Very confusing

Lecture 2

Definition 1: Independent and Dependent Variables and Parameters

The dependent variable(s) of a DE are the unknown functions that we want to solve for, e.g. $y$ in $y'(t) = k\,y(t)$.

The independent variable(s) of a DE are the variable(s) that the dependent variable(s) depend on, e.g. $t$ in $y'(t) = k\,y(t)$.

A parameter is a term that is an unknown but is not an independent or dependent variable, e.g. $k$ in $y'(t) = k\,y(t)$.

Definition 2: Order of a DE

The order of a DE is the order of the highest derivative.

Definition 3: ODEs and PDEs

A DE is an ordinary differential equation (ODE) if it only contains ordinary derivatives (i.e. no partial derivatives)

A DE is a partial differential equation (PDE) if it contains at least one partial derivative of a dependent variable.

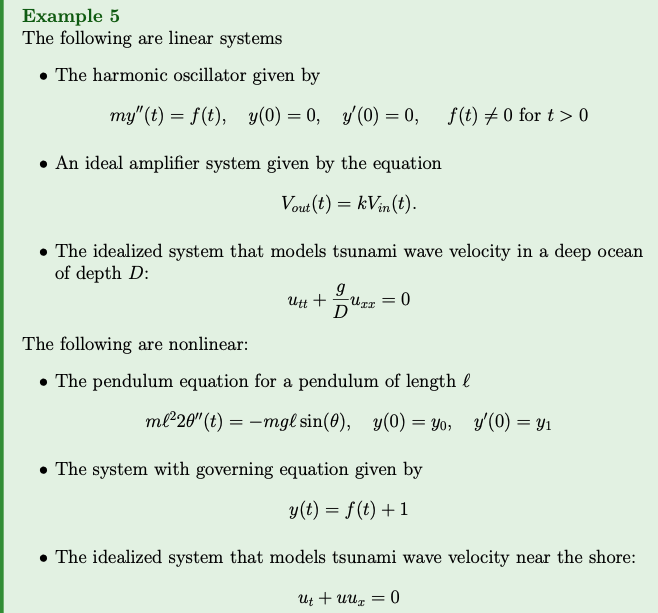

Definition 4: Linear and nonlinear DEs

A DE that contains no products of terms involving the dependent variable(s) is called linear.

If a DE is not linear then it is nonlinear.

Definition 5: Homogeneous and Inhomogeneous: DEs

A DE where every term depends on a dependent variable is called homogeneous.

A DE that is not homogeneous is called inhomogeneous or nonhomogeneous.

Linear homogeneous DEs have the property that if $y_1$ and $y_2$ both solve the DE then so does $c_1 y_1 + c_2 y_2$ for all constants $c_1, c_2$.

This is the same property that was used to define linearity in MATH115! i.e. a vector valued function $L$ is linear if and only if $L(c_1 \vec{x} + c_2 \vec{y}) = c_1 L(\vec{x}) + c_2 L(\vec{y})$ for all $\vec{x}, \vec{y}$ and for all $c_1, c_2$.

The difference is that we now study linear functions applied to the vector space of all sufficiently differentiable functions (e.g. $C^n$) instead of vectors in $\mathbb{R}^n$, i.e. there are no matrix representations for linear functions.

Theorem 1: Linear ODEs with Variable Coefficients

All ODEs of the form

$$\sum_{i=0}^{n} a_i(t)\, y^{(i)}(t) = f(t)$$

where $n \in \mathbb{N}$, the functions $a_i(t)$ are real valued, but $y(t)$ and $f(t)$ can be complex valued, are linear ODEs.

Here $f(t)$ is called the forcing term.

Lecture 3

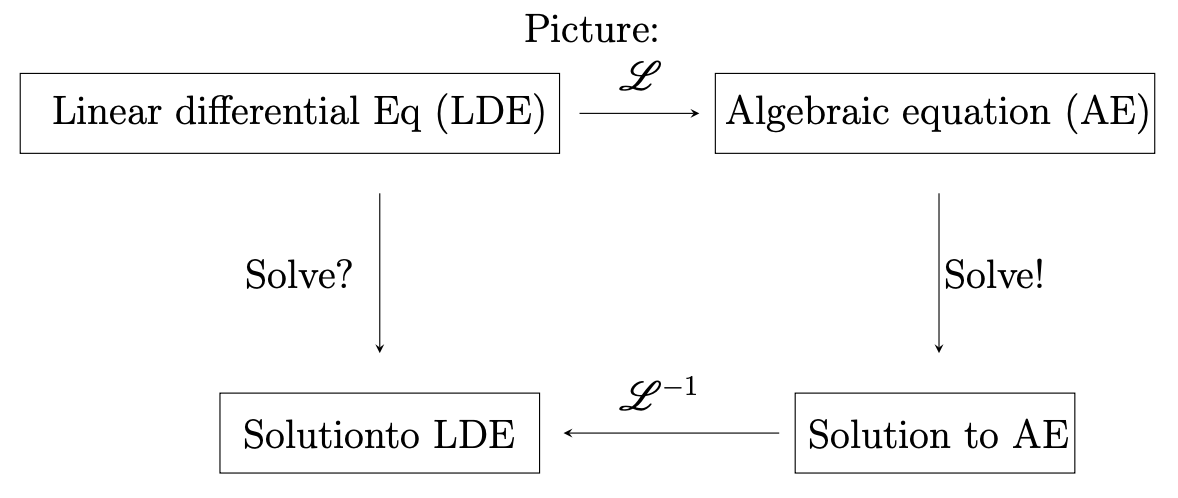

Laplace Transform Motivation:

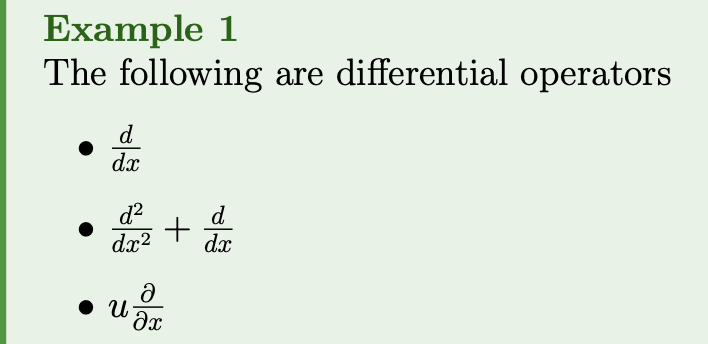

Definition 1: Differential operator

A differential operator is a special type of function (called a functional) that accepts a function and returns another function and only consists of taking derivatives, multiplying by functions, and adding other differential operators.

Example:

All ODEs are of the form:

$$D[y](t) = f(t)$$

where $y$ is the dependent variable, $t$ is the independent variable, $f$ is the forcing term and $D$ is a differential operator.

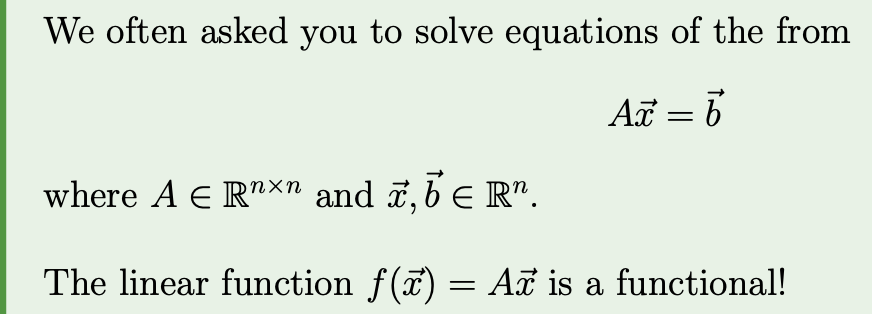

Also in MATH115 we studied functionals called linear transformations!

Note: $D[y] = f$ looks quite similar to $A\vec{x} = \vec{b}$ given that $D$ is linear …

This is the basis of the Laplace transform approach to solving ODEs.

Laplace Transform and Inverse Laplace Transform

Lecture 4

Lecture goal:

- be able to compute Laplace transforms from the definition

- know what the one-sided or unilateral Laplace Transform is

- understand some commonly used (and important) properties of the Laplace transform

Definition 1: Unilateral Laplace Transform

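The Unilateral Laplace Transform or One-sided Laplace Transform of a function $f(t)$ defined only for $t \ge 0$ is defined as

$$\mathcal{L}\{f(t)\} = F(s) = \int_{0}^{\infty} f(t)\, e^{-st}\, dt$$

Caution: If we use the symbol "$\mathcal{L}$" we mean the two sided transform unless otherwise stated.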

Bunch of examples. Go see.

Properties of the Laplace Transform:

Theorem 1

For any function $f(t)$, the one-sided Laplace transform will always converge given that there is some sufficiently large $\sigma$ such that $\int_{0}^{\infty} |f(t)|\, e^{-\sigma t}\, dt$ exists.

Theorem 2: Laplace Transform is Linear

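Suppose that $f(t)$ and $g(t)$ have Laplace transforms $F(s)$ and $G(s)$.

Then for all $a, b \in \mathbb{C}$, $\mathcal{L}\{a f(t) + b g(t)\} = aF(s) + bG(s)$, and the region of convergence (ROC) is the intersection of the ROCs for $F$ and $G$.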

Theorem 3: Time-Scaling

If $\mathcal{L}\{f(t)\} = F(s)$ then for $a > 0$, $\mathcal{L}\{f(at)\} = \frac{1}{a} F\!\left(\frac{s}{a}\right)$.

Theorem 4: Exponential Modulation

If $\mathcal{L}\{f(t)\} = F(s)$ then $\mathcal{L}\{e^{at} f(t)\} = F(s - a)$.

Prof said he might ask for proofs on exams.

Theorem 5: Time-Shifting

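If $\mathcal{L}\{f(t)\} = F(s)$ and $T \ge 0$ then $\mathcal{L}\{f(t - T)\,u(t - T)\} = e^{-sT} F(s)$, where $u$ is the unit step function.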

Theorem 6: Multiplication by $t$

If $\mathcal{L}\{f(t)\} = F(s)$ then $\mathcal{L}\{t\, f(t)\} = -\frac{d}{ds} F(s)$.

Theorem 7: Laplace Transform of a Derivative / Integral

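Let $f$ be such that there is a real value $\sigma$ such that the integral $\int_{0}^{\infty} f(t)\, e^{-\sigma t}\, dt$ converges, and such that there exists a function $f'$ such that $f(t) = f(0) + \int_{0}^{t} f'(\tau)\, d\tau$ for $t \ge 0$ and there exists a real value $\sigma'$ such that $\int_{0}^{\infty} f'(t)\, e^{-\sigma' t}\, dt$ converges. In this case $\mathcal{L}\{f'(t)\} = sF(s) - f(0)$, or in other words $F(s) = \frac{1}{s}\left(\mathcal{L}\{f'(t)\} + f(0)\right)$.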

The proof for this is basically in the previous example. Example 8. This theorem is how we will solve linear DEs!!!
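As a sanity check on the definition and a couple of the properties above, here is a minimal numerical sketch. It assumes real $s$, truncates the integral at a finite `t_max`, and uses the trapezoidal rule; the helper `laplace_numeric` and the test function $f(t) = e^{-t}$ are illustrative choices, not something from the lecture notes.

```python
import math

def laplace_numeric(f, s, t_max=40.0, n=40000):
    """Approximate int_0^t_max f(t) e^{-s t} dt with the trapezoidal rule.

    Assumes real s and that the integrand has decayed to ~0 by t_max.
    """
    dt = t_max / n
    total = 0.5 * (f(0.0) + f(t_max) * math.exp(-s * t_max))
    for k in range(1, n):
        t = k * dt
        total += f(t) * math.exp(-s * t)
    return total * dt

f = lambda t: math.exp(-t)     # f(t) = e^{-t}, table entry F(s) = 1/(s+1)
df = lambda t: -math.exp(-t)   # f'(t)

s = 2.0
F = laplace_numeric(f, s)

# Definition vs. table entry
print(F, 1 / (s + 1))
# Derivative property: L{f'} = s F(s) - f(0)
print(laplace_numeric(df, s), s * F - f(0.0))
# Multiplication by t: L{t f(t)} = -F'(s) = 1/(s+1)^2
print(laplace_numeric(lambda t: t * f(t), s), 1 / (s + 1) ** 2)
```

Each printed pair should agree to several decimal places; the small mismatch is the error of the numerical integration, not of the theorems.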

Lecture 5

Lecture goals:

- know how to use Laplace Transform table and the properties of the Laplace transform to evaluate Laplace Transforms and “simple” inverse Laplace Transforms.

- know what the convolution operator is and its connection to Laplace Transforms

2 pretty straightforward examples.

Convolution

Before solving DEs we introduce one more super useful property of the Laplace Transform by first introducing convolution.

To motivate the continuous convolution operator we start with some discrete cases.

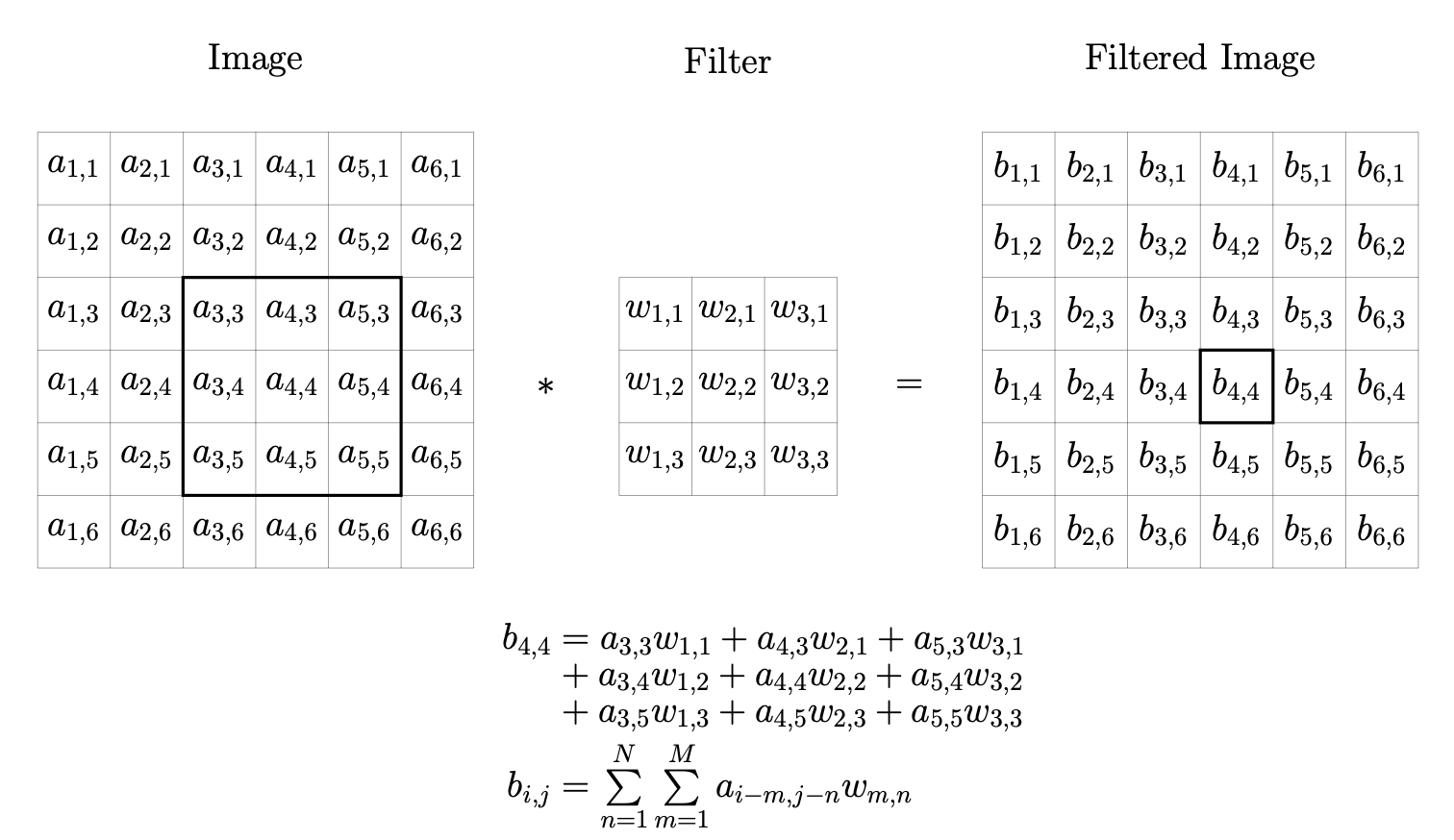

2D Image filtering: Consider the image of a random internet cat:

Suppose we wanted to blur the image. We could do this by replacing each pixel with a weighted average of the nearby pixels

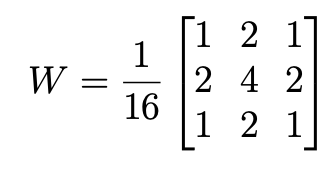

This process of filtering the image is called discrete convolution. If we use a Gaussian filter matrix i.e. something of the form:

$$\frac{1}{16}\begin{bmatrix} 1 & 2 & 1 \\ 2 & 4 & 2 \\ 1 & 2 & 1 \end{bmatrix}$$

then the filtered cat image becomes

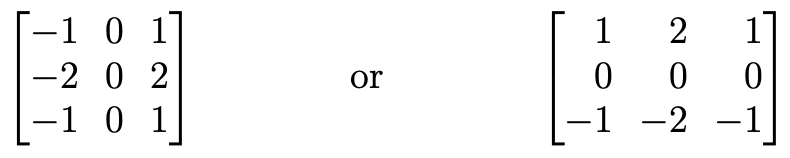

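If we use a Sobel filter matrix i.e. something of the form

$$\begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix} \quad \text{or} \quad \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix}$$

and take the norm of the two results, then the filtered cat image becomes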

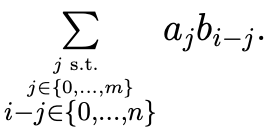
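The filtering idea can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the kernels are the standard 3×3 Gaussian approximation and Sobel pair (the exact matrices in the lecture may differ), the "image" is a tiny hypothetical 4×4 grid, and only the "valid" region (no padding) is computed.

```python
def convolve2d(image, kernel):
    """'Valid' 2D discrete convolution: each output pixel is a weighted sum
    of a neighbourhood, with the kernel flipped in both directions."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += kernel[kh - 1 - a][kw - 1 - b] * image[i + a][j + b]
            out[i][j] = acc
    return out

GAUSS = [[1/16, 2/16, 1/16], [2/16, 4/16, 2/16], [1/16, 2/16, 1/16]]
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

# Hypothetical image: dark left half, bright right half (one vertical edge)
image = [[0, 0, 10, 10] for _ in range(4)]

blurred = convolve2d(image, GAUSS)   # edge gets smeared out
gx = convolve2d(image, SOBEL_X)      # responds to the vertical edge
gy = convolve2d(image, SOBEL_Y)      # no horizontal edges here
edges = [[(x * x + y * y) ** 0.5 for x, y in zip(rx, ry)]
         for rx, ry in zip(gx, gy)]  # norm of the two Sobel results

print(blurred)
print(edges)
```

The Gaussian output takes intermediate values across the edge, while the Sobel norm is large wherever the window straddles the edge.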

Polynomial Multiplication:

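Suppose that $p(x) = \sum_{i=0}^{n} a_i x^i$ and $q(x) = \sum_{j=0}^{m} b_j x^j$. The product would be

$$p(x)\,q(x) = \sum_{k=0}^{n+m} \left( \sum_{i=0}^{k} a_i\, b_{k-i} \right) x^k$$

Note that the coefficient of $x^k$ is just

$$c_k = \sum_{i=0}^{k} a_i\, b_{k-i}$$

(taking $a_i = 0$ for $i > n$ and $b_j = 0$ for $j > m$). This has the same functional form as the filtering example (but is in 1D) and hence is a 1D convolution of the vectors $a$ and $b$.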
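A short sketch of the same idea in code: multiplying two polynomials is a 1D discrete convolution of their coefficient lists. The function name `convolve1d` and the sample polynomials are just illustrative choices.

```python
def convolve1d(a, b):
    """1D discrete convolution: c[k] = sum over i of a[i] * b[k - i]."""
    c = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            c[i + j] += ai * bj
    return c

# Coefficients are listed from the constant term upward:
# (1 + 2x + 3x^2)(4 + 5x) = 4 + 13x + 22x^2 + 15x^3
print(convolve1d([1, 2, 3], [4, 5]))
```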

Continuous Convolution:

Motivated by the previous discrete applications we define a continuous version of the convolution.

Definition 1: Convolution

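The convolution of the functions $f$ and $g$, denoted by $f * g$, is the integral

$$(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau$$

given that the integral converges.

Before we continue, we define the idea of an integral transform.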

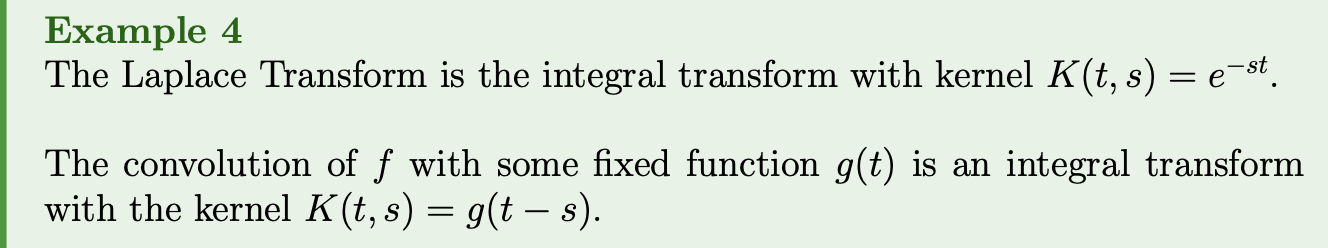

Definition 2: Integral Transform

An integral transform is a functional (function on a set of functions) that can be written in the form

$$T[f](s) = \int_{a}^{b} K(s, t)\, f(t)\, dt.$$

The function $K$ is called the kernel of the integral transform.

Convolution Properties:

Theorem 1: Convolution Properties

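A. The convolution operator is commutative, i.e. $f * g = g * f$.

B. If $f$ and $g$ are one-sided functions (i.e. $f(t) = g(t) = 0$ for $t < 0$) then

$$(f * g)(t) = \int_{0}^{t} f(\tau)\, g(t - \tau)\, d\tau \quad \text{for } t \ge 0$$

and hence the convolution is also one sided.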

Theorem 2: Convolution Theorem

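If there exists $\sigma$ such that the integrals

$$\int_{0}^{\infty} |f(t)|\, e^{-\sigma t}\, dt$$

and

$$\int_{0}^{\infty} |g(t)|\, e^{-\sigma t}\, dt$$

converge then,

$$\mathcal{L}\{(f * g)(t)\} = F(s)\, G(s)$$

This theorem states that the Laplace Transform of a convolution is the product of the transforms! A direct result of this allows us to “quickly” compute inverse Laplace transforms: $\mathcal{L}^{-1}\{F(s)\, G(s)\} = (f * g)(t)$.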

Note: for real valued functions and , the convolution is a real valued integral (i.e. not a contour integral in the complex plane)!
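A small worked example (my own, not one from the lecture) of using the Convolution Theorem for an inverse transform, with a partial-fraction cross-check. Take $F(s) = \frac{1}{s+1}$ and $G(s) = \frac{1}{s+2}$, so $f(t) = e^{-t}$ and $g(t) = e^{-2t}$ for $t \ge 0$:

$$
\begin{aligned}
\mathcal{L}^{-1}\left\{\frac{1}{(s+1)(s+2)}\right\}
&= (f * g)(t) = \int_{0}^{t} e^{-\tau}\, e^{-2(t - \tau)}\, d\tau \\
&= e^{-2t} \int_{0}^{t} e^{\tau}\, d\tau = e^{-2t}\left(e^{t} - 1\right) = e^{-t} - e^{-2t}.
\end{aligned}
$$

Cross-check with partial fractions: $\frac{1}{(s+1)(s+2)} = \frac{1}{s+1} - \frac{1}{s+2}$, whose inverse transform is again $e^{-t} - e^{-2t}$.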

Lecture 6 - Solving DEs via Laplace Transforms

Definition 1: Characteristic Polynomial

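The Characteristic Polynomial of a linear differential equation with constant coefficients

$$a_n y^{(n)}(t) + \dots + a_1 y'(t) + a_0 y(t) = f(t)$$

is the polynomial multiplied by $Y(s)$ after you take the Laplace transform. This polynomial will always be

$$P(s) = a_n s^n + \dots + a_1 s + a_0$$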

4 examples: need to be able to do the Partial Fractions. I understand but still need to do example 4 PF as exercise.
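For practice, here is a short worked IVP of my own (not one of the four lecture examples) showing the whole pipeline: transform, solve for $Y(s)$, partial fractions, invert. Solve $y'' + 3y' + 2y = 0$ with $y(0) = 1$, $y'(0) = 0$:

$$
\begin{aligned}
\left(s^2 Y(s) - s\,y(0) - y'(0)\right) + 3\left(sY(s) - y(0)\right) + 2Y(s) &= 0 \\
(s^2 + 3s + 2)\,Y(s) &= s + 3 \\
Y(s) = \frac{s + 3}{(s+1)(s+2)} &= \frac{2}{s+1} - \frac{1}{s+2} \\
y(t) &= 2e^{-t} - e^{-2t}
\end{aligned}
$$

Check: $y(0) = 2 - 1 = 1$ and $y'(0) = -2 + 2 = 0$. Note that the polynomial multiplying $Y(s)$ is the characteristic polynomial $s^2 + 3s + 2$.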

Extensions of our method: Our method also “works” for some Linear ODEs with non-constant coefficient. The problems are

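- evaluating the Laplace transform of $g(t)\,y(t)$ for some given function $g$ and an unknown function $y$, and

- we end up with another (but simpler) differential equation to solve.

If $g(t) = t$ then this is particularly “nice”, since by the multiplication-by-$t$ theorem $\mathcal{L}\{t\,y(t)\} = -Y'(s)$.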

Example 5.

Lecture 7 - Rational Functions, Poles, and Initial and Final Value Theorems

Lecture goals:

- understand what a pole is and

- understand the initial and final value theorems

After computing the Laplace transform of a function we usually end up with a function that is the ratio of polynomials. Such functions have a name.

Definition 1: Rational Function

A rational function is a function of the form $F(s) = \frac{N(s)}{D(s)}$ where $N$ and $D$ are polynomials.

Definition 2: Regularization

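A function $\tilde{f}$ is the regularization of a function $f$ if

- For all $x$ in the domain of $f$, $\tilde{f}(x) = f(x)$.

- For all $a$ such that $\lim_{x \to a} f(x)$ exists but $f(a)$ is undefined, $\tilde{f}(a) = \lim_{x \to a} f(x)$.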

Theorem 1

If $\tilde{f}$ is the regularization of a function $f$ that has a finite number of discontinuities then $\mathcal{L}\{\tilde{f}\} = \mathcal{L}\{f\}$.

Definition 3: Finite Zeros and Finite Poles

For a rational function $F(s) = \frac{N(s)}{D(s)}$ that is its own continuous continuation (regularization), the roots of $N$ are called the **(finite) zeros** of $F$ and the roots of $D$ are called the **(finite) poles** of $F$.

Definition 4: Poles and Zeros at Infinity

A rational function $F$ has a zero at infinity if $\lim_{|s| \to \infty} F(s) = 0$. $F$ is said to have a pole at infinity if its reciprocal $1/F$ has a zero at infinity.

Convention 1: Unless otherwise stated when we talk about poles and zeros we mean the finite poles and finite zeros.

Definition 5: Proper and Strictly Proper Rational Functions

A rational function is proper if the degree of its numerator is less than or equal to that of its denominator. A function is strictly proper if the degree of the numerator is strictly less than that of its denominator.

We care about these ideas because the inverse Laplace transform is a complex valued integral and because of this, we can compute the inverse transform of by only knowing information about the poles of .

Two important results that are related to poles (and more later).

The initial value theorem:

Definition 6: Piecewise Continuous

A function $f$ is piecewise-continuous on a given finite interval if

- $f$ has a finite number of discontinuities in that interval and

- for each discontinuity both the left and right hand limits exist (note they can be different values)

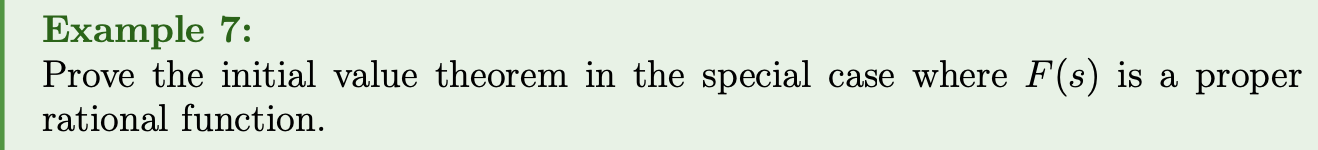

Theorem 2: Initial Value Theorem

If $f(t)$ is piecewise-continuous and $\int_{0}^{\infty} f(t)\, e^{-\sigma t}\, dt$ converges for some $\sigma$ then

$$f(0^+) = \lim_{s \to \infty} sF(s)$$

Understand this theorem:

- The above gives us a way of computing the IC of $f(t)$ from $F(s)$ without computing the inverse Laplace transform and instead evaluating a limit in the complex plane

- $s$ is generally complex so the limit is in the complex plane NOT the real number line thus:

- $\operatorname{Re}(s)$ tends to infinity

- $\operatorname{Im}(s)$ can do anything (stay 0, oscillate, go to infinity, do random things, etc.)

- For many problems we can treat the limit as the standard limit you are used to working with.

The final value theorem:

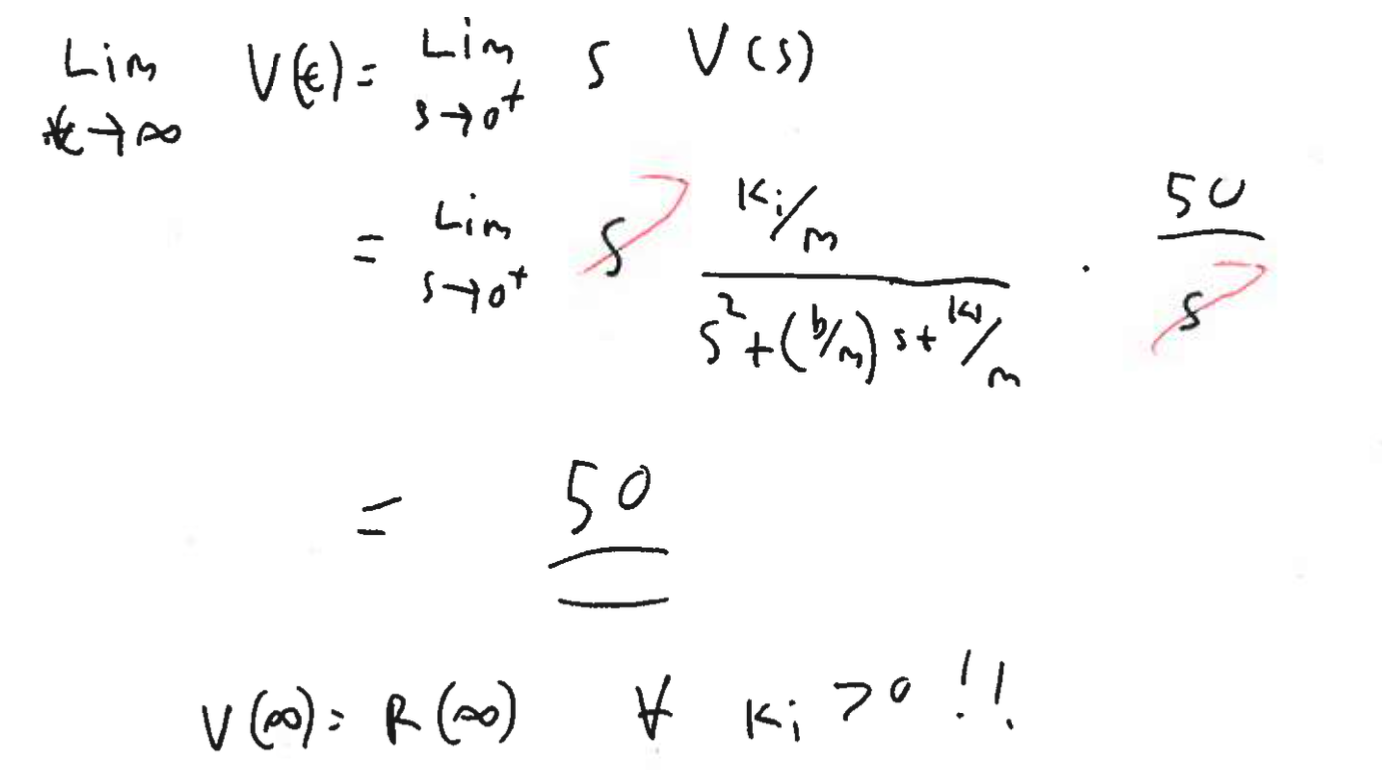

Theorem 3: Final Value Theorem

For a function $f(t)$ with Laplace transform $F(s)$, if

- $F(s)$ is a proper rational function

- $F(s)$ has the property that all the poles have real parts that are strictly negative with the exception of a single pole (of order 1) at $s = 0$

or if $F(s)$ is the product of a function satisfying the above conditions multiplied by a complex exponential $e^{-sT}$, then

$$\lim_{t \to \infty} f(t) = \lim_{s \to 0} sF(s).$$

If the poles of a rational function $F(s)$ do not satisfy the above condition, then $\lim_{t \to \infty} f(t)$ does not exist.

i.e.

Understanding this theorem:

- When it applies, the above gives us a way of computing the “final value” of $f(t)$ from $F(s)$ without computing the inverse Laplace transform and instead evaluating a limit in the complex plane.

- Again $s$ is generally complex so the limit is in the complex plane NOT the real number line. Limits at finite points in the complex plane are a bit different from infinite limits.

- Here the formal definition of a limit of a complex valued function $F(s)$ at $s_0$ is the constant $L$ such that for every $\epsilon > 0$ there is a $\delta > 0$ such that if $0 < |s - s_0| < \delta$ then $|F(s) - L| < \epsilon$, where $|\cdot|$ is the standard modulus function.

- In practice for any problems of computing limits at 0, you can treat the limit as the standard limit you are used to working with.

- Connection to 2D limits from MATH119 - Calculus 2 for Engineering.

- Provided that the limits exist, we have

Examples in notes.

Note

When the final value theorem does not apply, we do not know if the limit diverges or if it oscillates. Hence, we need more math to be able to ascertain if a solution is bounded or not (without computing the inverse transform)! See examples 8C and 8E for unstable systems and D for a stable but non-convergent system.
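A quick numerical illustration of both theorems, and of the warning above. The functions are my own picks: $F(s) = \frac{1}{s(s+1)}$ is the transform of $f(t) = 1 - e^{-t}$ (poles at $0$ and $-1$, so the final value theorem applies), while $G(s) = \frac{1}{s^2+1}$ is the transform of $\sin t$ (poles at $\pm i$, so it does not). Only real $s$ is used here, which is a simplification.

```python
def F(s):
    # Transform of f(t) = 1 - e^{-t}; poles at s = 0 and s = -1
    return 1 / (s * (s + 1))

def G(s):
    # Transform of sin(t); poles at s = +i and s = -i (on the imaginary axis)
    return 1 / (s * s + 1)

# Final value theorem: s F(s) as s -> 0 approaches lim_{t->inf} f(t) = 1
for s in (1e-2, 1e-4, 1e-6):
    print("s*F(s), s small:", s * F(s))

# Initial value theorem: s F(s) as s -> inf approaches f(0+) = 0
for s in (1e2, 1e4, 1e6):
    print("s*F(s), s large:", s * F(s))

# Misuse: s G(s) -> 0 as s -> 0, but sin(t) has no limit as t -> inf.
# The pole condition fails, so this number says nothing about sin(t).
print("s*G(s), s small:", 1e-6 * G(1e-6))
```

The last line is the reason to check the pole locations first: the limit of $sG(s)$ exists even though the time-domain limit does not.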

Lecture 8 - Zero-input, zero state response, and the delta function

Lecture goals:

- understand how poles affect the inverse Laplace transform

- know what the zero-input and zero-state responses are

- know what the delta function is and what its properties are

Understand Poles:

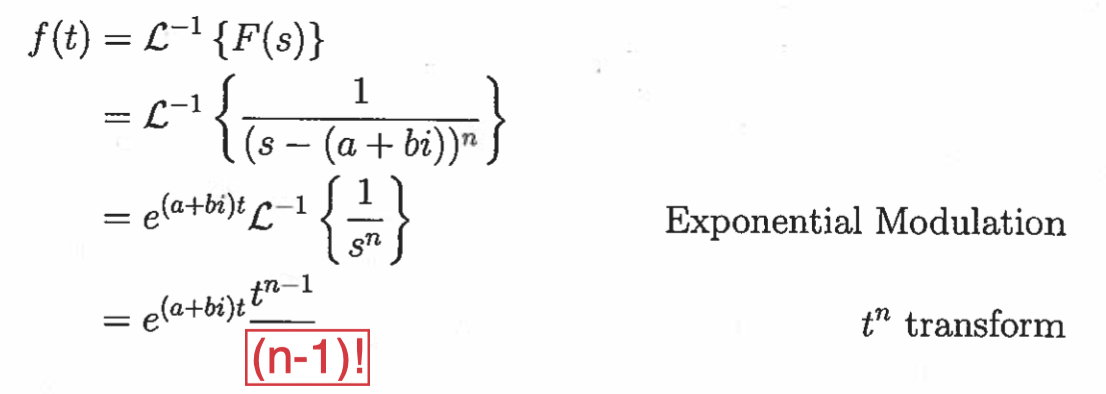

The final value theorem is useful but we can actually do a bit better so we will examine and analyze all the various cases of a function with a single pole. Consider a function with a single pole of order $n$ (a natural number, at least 1) at $s = a$:

$$F(s) = \frac{1}{(s - a)^n}$$

Taking the inverse Laplace transform gives:

$$f(t) = \frac{t^{n-1}}{(n-1)!}\, e^{at}$$

Thus all functions that have a rational Laplace transform (the ones we have covered thus far), have an inverse transform of the form of a weighted sum of terms of the form of the above.

We hence analyze these functions via case analysis!

In conclusion the location of the poles tells us about the behaviour of the solution at infinity (via the final value theorem) but also the initial values (via the initial value theorem).

If only… there was a way to control where the poles are and to thus control the behaviour of the solution to a DE we care about…

Enter the ideas of the zero-input response, the zero-state responses and the transfer function

Definition 1: Zero-State and Zero-Input Response and the Transfer function

Given a linear DE with constant coefficients of the form

$$a_n y^{(n)}(t) + \dots + a_1 y'(t) + a_0 y(t) = f(t)$$

along with the needed initial conditions $y(0), y'(0), \dots, y^{(n-1)}(0)$, the Laplace transform of the equation will be of the form

$$Y(s) = \frac{I(s)}{P(s)} + \frac{F(s)}{P(s)}$$

where $P(s)$ is the characteristic polynomial and $I(s)$ is some function of the ICs and coefficients.

- The zero-input response is $\mathcal{L}^{-1}\{I(s)/P(s)\}$. This is the response of the system the DE models to the initial conditions (i.e. when the forcing term is 0)

- The zero-state response is $\mathcal{L}^{-1}\{F(s)/P(s)\}$. This is the response of the system the DE models to the forcing term (i.e. when the ICs are all 0).

- The transfer function is $H(s) = 1/P(s)$. This function determines the zero-input response and the effect of the forcing term.

To help understand the role of the transfer function in the relation between the zero-input response and the zero-state response, we introduce the idea of generalized functions.

In 1927, physicist Paul Dirac, in his paper “The Physical Interpretation of the Quantum Dynamics”, introduced the function now known as the “Dirac delta” function.

Definition 2: Dirac Delta Function

The function $\delta(t)$ is defined as the “function” (called a special function or a distribution) that satisfies the properties that:

$$\int_{-\infty}^{\infty} \delta(t)\, dt = 1 \quad \text{and} \quad \delta(t) = 0 \text{ when } t \neq 0$$

Of course there is no classical function that satisfies the above conditions but we can think of $\delta(t)$ as the limit of a collection of functions which we now explore.

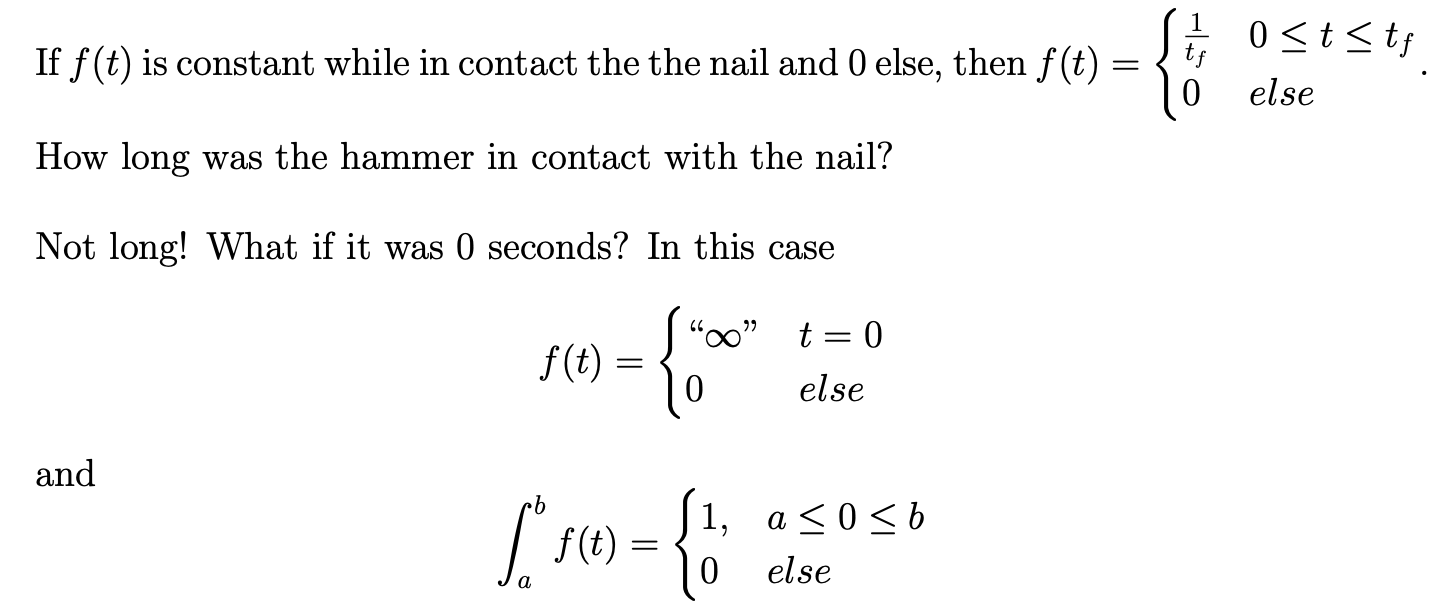

Suppose you hit a nail with a hammer and do a total amount of work of 1 unit. If $F(t)$ is the amount of force applied over time then $\int_{-\infty}^{\infty} F(t)\, dt = 1$.

We call the limit of such functions, as the hit becomes instantaneous, $\delta(t)$. Mathematically one can make this definition formal but to do so requires a lot of mathematical background.

Theorem 1

If $f$ is a “well-behaved” function defined at $a$ then

$$\int_{-\infty}^{\infty} f(t)\, \delta(t - a)\, dt = f(a).$$

The above is the main property of the delta function we need.

Example 3:

Transfer function

The transfer function is the Laplace transform of the system's response to the impulse function.

In general if $Y(s) = \frac{F(s)}{P(s)}$ where $F(s)$ is the transform of the forcing term and $P(s)$ is the characteristic polynomial, then

$$Y(s) = H(s)\, F(s)$$

where $H(s) = \frac{1}{P(s)}$ is the system’s transfer function.

Hence a system’s response to any function $f$ is simply the convolution of $f$ with the system's response to the delta function - called the system’s impulse response.

This is a theorem!

Theorem 2

The zero-state response of a linear DE is the convolution of the input (i.e. forcing term) with the system's impulse response.

With the above results in mind, the solution to any linear DE with constant coefficients can be written as

$$y(t) = (h * f)(t) + \sum_{i=1}^{N} \sum_{j=1}^{m_i} \frac{c_{ij}}{(j-1)!}\, t^{j-1} e^{p_i t}$$

where $h$ is the inverse Laplace transform of the transfer function, $p_i$ are the poles of the zero-input function, $m_i$ is the order of the $i$th pole, $c_{ij}$ is the coefficient given by the partial fraction decomposition and $N$ is the number of poles (which will always be finite because of the form of the second term).

Lecture 9 - Delta function derivatives and intro to systems and signals

Lecture goals:

- understand how to differentiate the delta function

- know what systems and signals are

Derivatives of $\delta(t)$

It turns out that it is useful to take the derivatives of $\delta(t)$.

In MATH 119 we told you that we can’t differentiate functions at discontinuities so?

Recall that $\delta(t)$ is not a function in the classic sense but is instead a distribution, a collection of functions that have a common property when we take a limit. Hence to differentiate such functions, we need to redefine what it is to be differentiable.

We will not do this formally but will instead play with delta until we magically arrive at the result.

We will start with the derivative of a simpler function.

Examples 1, 2, 3 and 4. Look at notes for solutions.

Recall that $\delta(t)$ is not a function, but instead a distribution, a collection of functions that have a common property when we take a limit.

Notation 1

$\langle \delta, \phi \rangle$ denotes $\int_{-\infty}^{\infty} \delta(t)\, \phi(t)\, dt$ which is the effect of $\delta$ on the test function $\phi$.

Systems and Signals:

Given a linear differential equation and some specified initial conditions:

There are two classic problems one considers in engineering.

Definition 1: Analysis and Synthesis

- Analysis: Given a forcing term $f(t)$, solve for $y(t)$.

- Synthesis: Given a $y(t)$, or some conditions on $y(t)$, find a forcing term $f(t)$ such that $y(t)$ solves the differential equation.

We have thus far focused on the analysis problem, which is the easier of the two, but the synthesis problem is generally more useful in engineering design.

To solve the synthesis problem we need a good understanding of how $f(t)$ relates to $y(t)$. Think about the spring problem from L8 Ex2 as an example.

Definition 2: Signals

A signal is a complex-valued function $f(t)$ of an independent real variable $t$.

- $t$ usually (but not always) represents time.

- If the domain of the function is $\mathbb{R}$ or some interval of $\mathbb{R}$ then the signal is said to be a continuous-time (CT) signal.

- If the domain of the function is $\mathbb{Z}$, or other discrete subset of $\mathbb{R}$, then the signal is said to be a discrete-time (DT) signal.

Definition 3: Systems

Mathematically, a system is a map from a space of input signals to a space of output signals.

Notation 2

If $S$ represents some system then $y = S[f]$ or $f \xrightarrow{S} y$ or simply $f \to y$ indicates that $y$ is the response (solution) of the system to the input signal $f$.

The above is similar to systems of equations from MATH115 and the connection to matrices and the “input” and “output” vectors.

Explicitly, the system of equations

can be written as

Here one can ask the analysis question of solving for $\vec{y}$ (“easy”) or the synthesis question of solving for $\vec{x}$ (“hard”) and generally wants to know the relation between the response and input.

Further, the matrix $S$ is the map between the vector space of input functions (all valid $\vec{x}$) and the vector space of output functions (all valid $\vec{y}$).

Definition 4

A system is continuous-time (CT) if both its input and output signals are CT.

A system is discrete-time (DT) if both its input and output signals are DT. A system is a hybrid system if it contains both CT and DT signals.

Lecture 10 - Systems and signals introduction continued

Lecture goals:

- know what systems and signals are

- understand the various properties we generally care about

Connection between CT systems and DEs:

A DE represents a CT system only when for any signal in the set of input signals of the system, there is a signal in the output class that satisfies the DE.

Example 1:

Connection between DT systems and difference equations:

DT systems are represented by difference equations. An example of a difference equation is the Minecraft chicken model from assignment 1.

Here is another classic fun difference equation

$$x_{n+1} = r\, x_n\, (1 - x_n)$$

This models the normalized population of bunnies with the constraints that

- there is growth proportional to the population for small populations and

- if the population is close to the environmental limit, $1$ in this model, then the population declines.

To Lecture10 magic.m!!
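This is not the course's MATLAB script, just a minimal Python sketch for playing with the bunny model, assuming the difference equation is the logistic map $x_{n+1} = r\,x_n(1 - x_n)$. The growth rates and starting value are arbitrary illustrative choices.

```python
def logistic_orbit(r, x0=0.2, n=200):
    """Iterate x_{k+1} = r * x_k * (1 - x_k) and return [x_0, ..., x_n]."""
    xs = [x0]
    for _ in range(n):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs

# Long-run behaviour for a few growth rates r
for r in (0.8, 2.5, 3.2):
    tail = logistic_orbit(r)[-4:]
    print(r, [round(x, 4) for x in tail])
```

For $r = 0.8$ the population dies out, for $r = 2.5$ it settles at the fixed point $1 - 1/r = 0.6$, and for $r = 3.2$ it alternates between two values. Sweeping $r$ and plotting the tail values is how a bifurcation diagram is built.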

The previous two examples demonstrate that generally speaking we can explore the relation of the input and outputs for many systems by “simply” solving DEs, difference equations etc.

We now examine some more important properties of systems.

Definition 1: Memoryless vs Dynamic systems

A system is memoryless if the instantaneous output value $y(t)$ depends only on the input value at time $t$.

A system that is not memoryless is dynamic.

Most interesting problems involve systems that are dynamic.

Definition 2: Causality

A system is causal if $y(t)$ only depends on $f(\tau)$ for $\tau \le t$.

Idea: the output signal only depends on the previous behaviour of $f$ (i.e. not the future values of $f$).

Definition 3: Causality (Alternate Definition)

A system is causal if for all $T$, whenever $f_1(t) = f_2(t)$ for all $t \le T$ and $f_1 \to y_1$ and $f_2 \to y_2$, then $y_1(t) = y_2(t)$ for all $t \le T$.

To see why the last example above is noncausal one needs to notice that $f'(t)$ is defined as a limit of a difference quotient and as such it depends on more future information than $f(t)$ does.

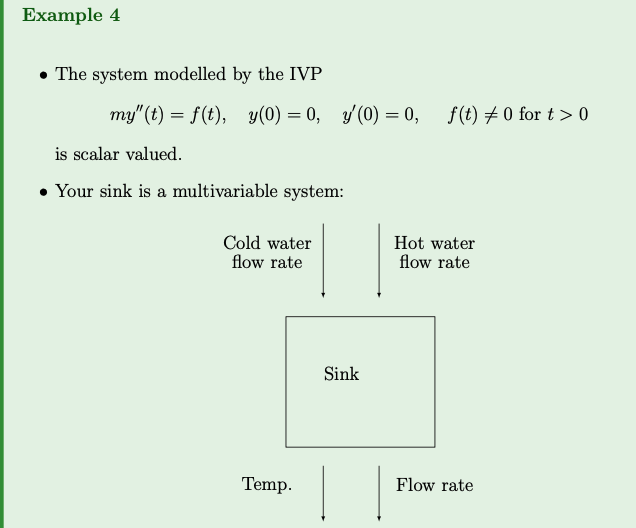

Definition 4: Multivariable vs Scalar Systems

A system is multivariable if it has multiple inputs and/or outputs.

A system is scalar if it has a single input and a single output.

Multivariable systems are harder to control than scalar systems.

We will study scalar systems (but may examine multivariable systems a bit).

Definition 5: Linearity

A system $S$ is linear if it has the property that if $f_1$ and $f_2$ are input signals and $c_1, c_2 \in \mathbb{C}$, then

$$S[c_1 f_1 + c_2 f_2] = c_1 S[f_1] + c_2 S[f_2]$$

In simpler terms, it means that if you have a system that is linear, then scaling the input signal by a constant ($c_1$) and adding it to another input signal scaled by another constant ($c_2$) will have the same effect on the output signal as scaling and adding the output signals individually. This property is fundamental in many areas of engineering and mathematics, particularly in signal processing and control theory.

Note if $|\theta| \ll 1$ then $\sin\theta \approx \theta$ and we can approximate the full pendulum by the linear oscillator:

$$\theta''(t) + \frac{g}{L}\,\theta(t) = 0$$

Linear systems are “easy” to study but tend to have “simpler dynamics” than nonlinear systems.

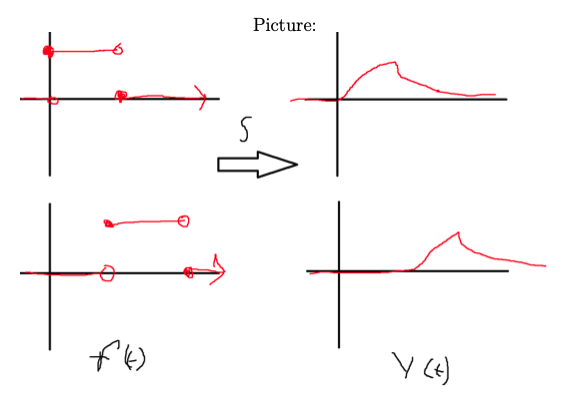

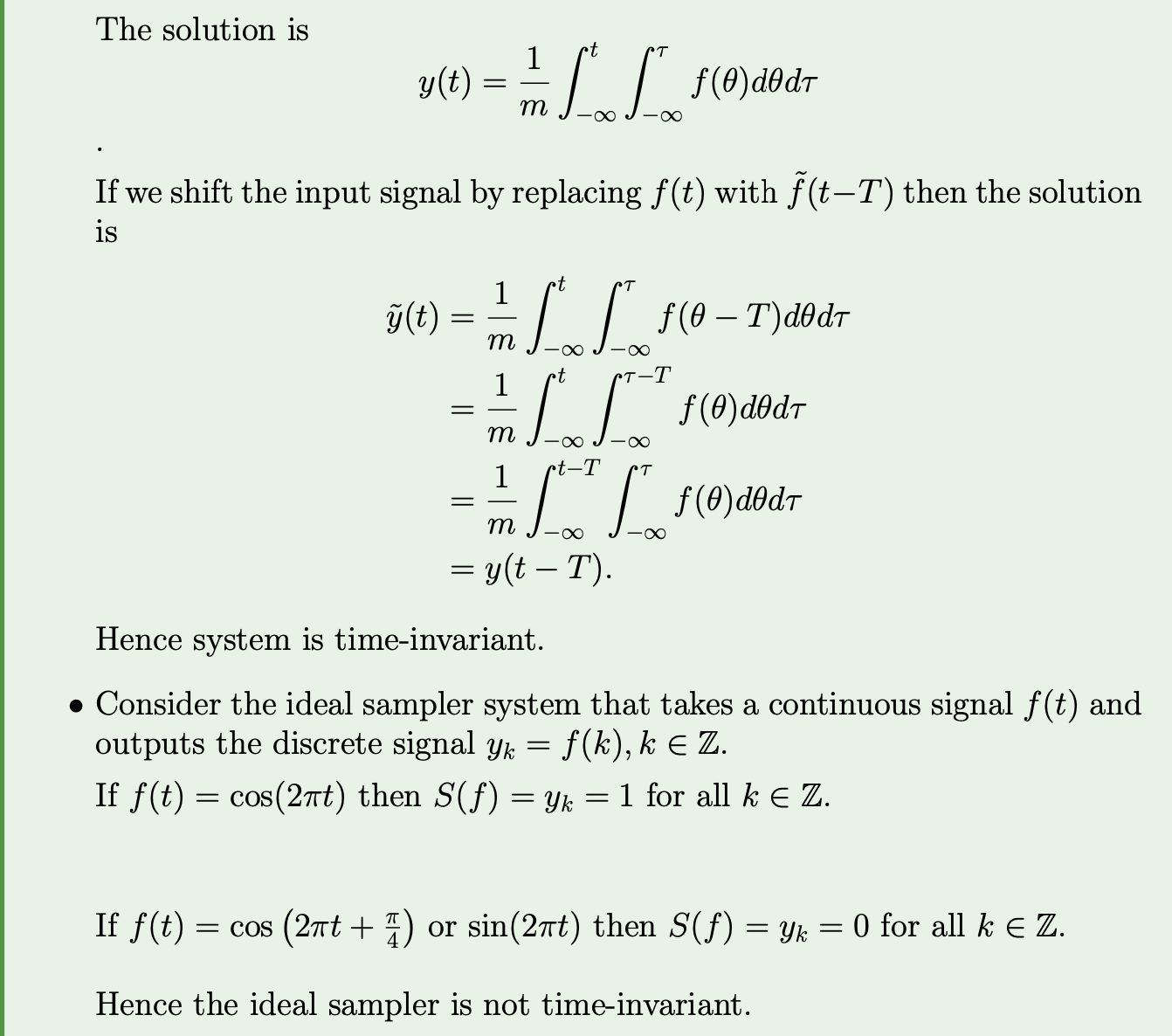

Definition 6: Time-invariance

A system is time-invariant if its response does not change with time.

Explicitly if $f(t) \to y(t)$ then $f(t - T) \to y(t - T)$ for all $T$.

A system that is not time-invariant is called time-variant.

In other words, if an input signal $f(t)$ produces an output signal $y(t)$, then for any time shift $T$, applying the shifted input $f(t - T)$ produces the correspondingly shifted output $y(t - T)$.

For the rest of this course we will focus on linear time-invariant systems, or LTIs.

These systems are often modelled by constant-coefficient ODEs and hence our work on the Laplace transform can be used to study the system…
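The linearity and time-invariance definitions can be spot-checked numerically. The sketch below uses three hypothetical discrete-time systems (DT is easier to code than CT) and tests the defining identities on a handful of sample points. Passing such a check is only evidence, not a proof, while a single failure does disprove the property.

```python
# Each system maps (input signal f, time index n) -> output value y[n].
avg = lambda f, n: 0.5 * (f(n) + f(n - 1))   # linear, time-invariant
square = lambda f, n: f(n) ** 2              # nonlinear, time-invariant
ramp_gain = lambda f, n: n * f(n)            # linear, time-variant

def is_linear(S, f1, f2, c1=2.0, c2=-3.0, ns=range(-5, 6)):
    """Check S[c1 f1 + c2 f2] == c1 S[f1] + c2 S[f2] on sample points."""
    combo = lambda n: c1 * f1(n) + c2 * f2(n)
    return all(abs(S(combo, n) - (c1 * S(f1, n) + c2 * S(f2, n))) < 1e-9
               for n in ns)

def is_time_invariant(S, f, T=3, ns=range(-5, 6)):
    """Check that shifting the input by T shifts the output by T."""
    shifted = lambda n: f(n - T)
    return all(abs(S(shifted, n) - S(f, n - T)) < 1e-9 for n in ns)

f1 = lambda n: n * n + 1.0
f2 = lambda n: 1.0 if n >= 0 else 0.0

for name, S in (("avg", avg), ("square", square), ("ramp_gain", ramp_gain)):
    print(name, is_linear(S, f1, f2), is_time_invariant(S, f1))
```

Only `avg` passes both checks, so it is the only LTI system of the three.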

Lecture 11 - Midterm Review

Do the examples.

Example 1:

- I don’t understand how to solve example 1. Wow, what a start.

- How do you differentiate between continuous and discrete time??

- Continuous time vs discrete time: Since the independent variable is continuous, this system is a continuous-time (CT) system.

- Memoryless vs dynamic: The presence of terms like , , and indicates that the system has dynamic behaviour. Therefore, it is a dynamic system.

- Causal vs non-causal: In the equation , the output depends on the current and past values of itself (, , and ), which means it only depends on past or present inputs. Therefore, the system is causal.

- Multivariable vs scalar: This system has a single input () and a single output (), making it a scalar system.

- Linear vs nonlinear: The system equation involves nonlinear terms like and , so the system is nonlinear.

- Time-invariant vs time-variant: A system is time-invariant if its response does not change with time. In other words, if we shift the input signal in time, the output signal should shift by the same amount.

For a time-invariant system, shifting the input signal by $T$ should result in a corresponding shift in the output signal by $T$.

Let’s examine if this holds true for the given equation:

If we shift $f(t)$ by $T$, we get $f(t - T)$. The corresponding output should satisfy the equation:

$$y^{6}(t - T) + y^{4}(t - T) + 6y(t - T) = f(t - T)$$

Since the equation remains the same after the time shift, the system is time-invariant.

In summary:

- Continuous time

- Dynamic

- Causal

- Scalar

- Nonlinear

- Time-invariant

Example 2:

Memorize

and

Example 3: (Partial Fraction)

Example 4: Solve IVP

Have the options between PF or Convolution

Example 5: Solve IVP

Example 6:

Example 7: Write inverse Laplace transform of…

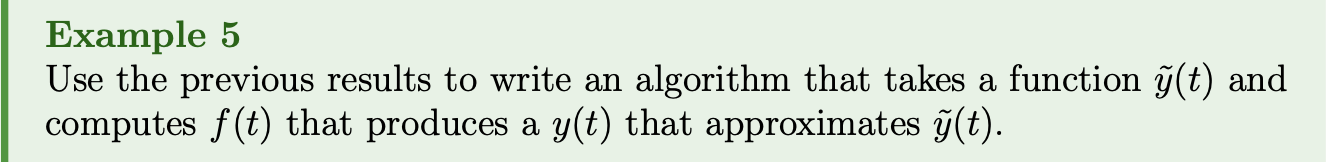

Lecture 12 - Fun with Foreshadowing (Looking ahead)

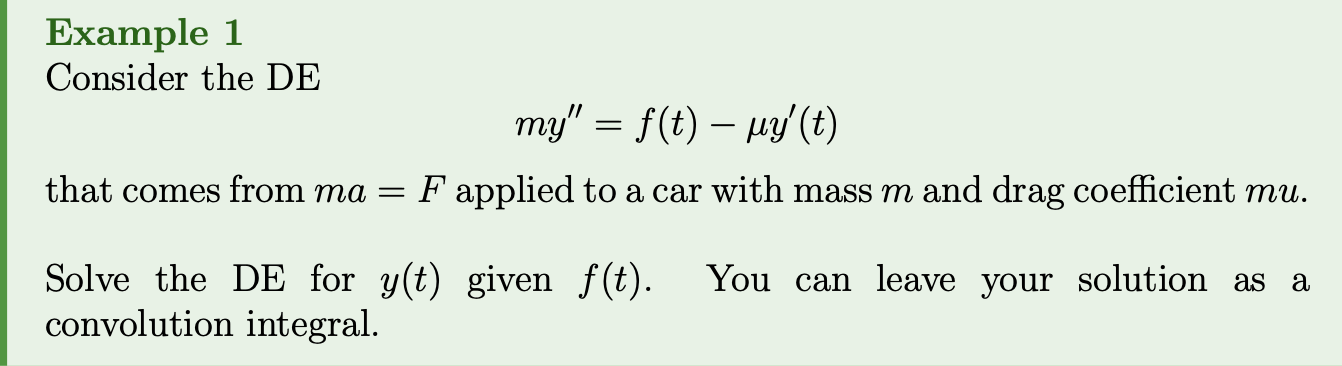

In the synthesis problem we are given $y(t)$ and want to find $f(t)$. Later we will cover how to do this in mathematical detail but… how do we in principle do this??? Let’s look at an example!

Solution: The solution is

To compute the inverse Laplace transform note that

Hence, by exponential modulation in the Laplace table

Combining this with the previous result gives

Now suppose that we want to find $f(t)$ such that $y(t)$ is given by a particular provided function. In the next few examples, we will derive a controller that is commonly used to solve this problem in real time.

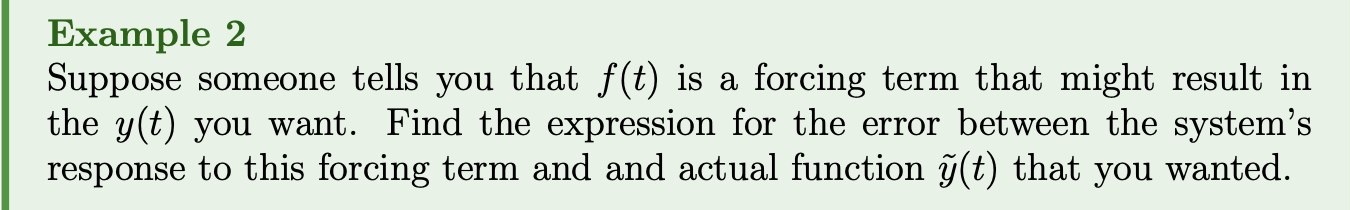

The response of the system to $f(t)$ is given by

$$y(t) = \int_{0}^{t} f(u)\, G(t - u)\, du$$

and hence the error is

$$E(t) = y_{\text{want}}(t) - y(t)$$

If the error is 0 for all values of $t$, then we found the solution to the synthesis problem!

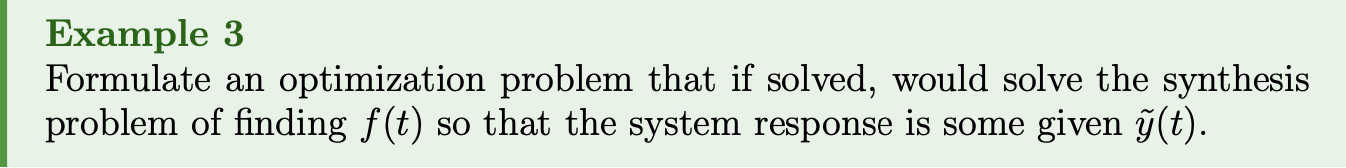

We thus want to pick $f(t)$ (ideally one that is smooth) such that the error is as close to 0 as possible. Hence we want to solve the optimization problem

$$\min_{f} \|E\|$$

In the above there are a few different ways to define what we mean by min. Two common ones are

- Minimize the maximum value over all $t$ i.e. the $L^\infty$ norm

- Minimize the average value i.e. the $L^1$ norm

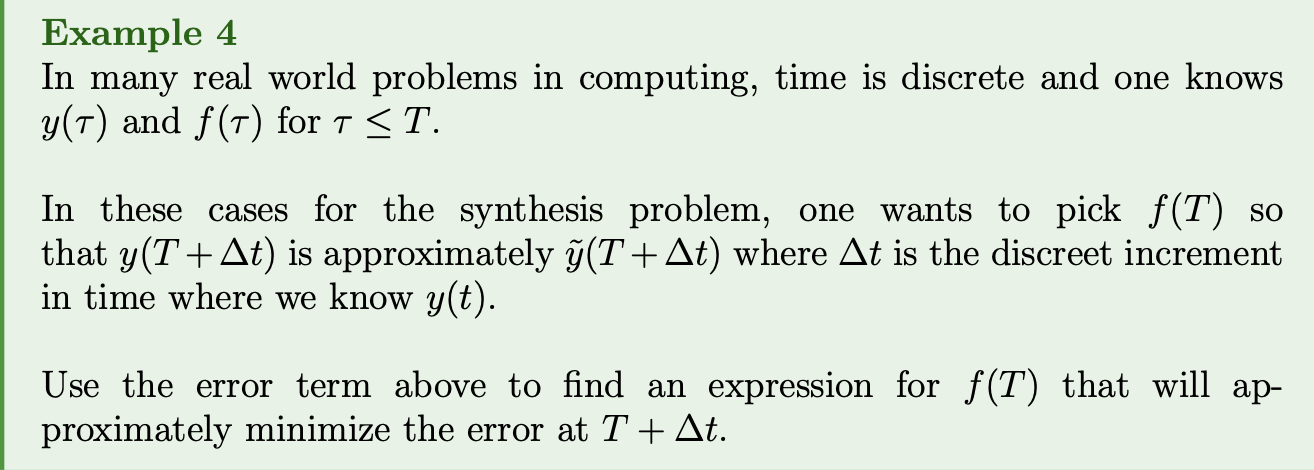

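The (signed) error at $t + \Delta t$ is given by

$$E(t + \Delta t) = y_{\text{want}}(t + \Delta t) - y(t + \Delta t)$$

Assuming $f$ is continuous and $\Delta t$ is small, we can write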

and

where is some number (formally it is the integral but we don’t want to compute it in practice…). Thus

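If we want $E(t + \Delta t) \approx 0$ then we can pick $f(t + \Delta t)$ proportional to the current error, which we will write as $f(t + \Delta t) = k_p E(t)$ where $k_p$ is a non-zero constant.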

Here is some pseudocode

Define y_want(t), kp, the error E at t=0 and

the convolution kernel, G, for the given DE.

for t=0:Dt:b

f(t+Dt) = kp*E(t)

y(t+Dt) = num_int(f(u)*G(t+Dt-u), u, 0, t+Dt)

E(t+Dt) = y_want(t+Dt)-y(t+Dt)

end for

In the above num_int is some numerical method that integrates.

Lecture12_basic_PID.m implements a method that uses the above pseudocode with extra controls for the integral and derivative of the error.
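For reference, here is a rough Python version of the proportional-only pseudocode. It is a sketch, not the course's `.m` file. The plant is a hypothetical first-order system $y' + y = f$ (so the convolution kernel is $G(t) = e^{-t}$), the target is a constant $y_{\text{want}} = 1$, and `num_int` is replaced by an inline trapezoidal rule. The gain, step size, and end time are arbitrary choices.

```python
import math

def simulate_p_controller(y_want, G, kp, dt, t_end):
    """Proportional control: f(t+dt) = kp * E(t), with the response computed
    as the convolution y(t) = int_0^t f(u) G(t-u) du (zero initial state)."""
    n = int(round(t_end / dt))
    f = [0.0] * (n + 1)   # forcing term samples f(k*dt)
    y = [0.0] * (n + 1)   # system response samples
    E = [0.0] * (n + 1)   # error samples
    E[0] = y_want(0.0) - y[0]
    for k in range(n):
        m = k + 1
        f[m] = kp * E[k]
        # Trapezoidal rule for int_0^{m*dt} f(u) G(m*dt - u) du
        acc = 0.5 * (f[0] * G(m * dt) + f[m] * G(0.0))
        for j in range(1, m):
            acc += f[j] * G((m - j) * dt)
        y[m] = acc * dt
        E[m] = y_want(m * dt) - y[m]
    return y, E

y, E = simulate_p_controller(
    y_want=lambda t: 1.0,          # target output
    G=lambda t: math.exp(-t),      # impulse response of y' + y = f
    kp=20.0, dt=0.01, t_end=5.0,
)
print(y[-1], E[-1])
```

With only the proportional term the output settles near $k_p/(1+k_p) \approx 0.952$ rather than exactly 1. That leftover steady-state error is the usual motivation for adding the integral term.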

Lecture 13 - Systems Input and Responses

Lecture goals:

- understand the impulse response of an LTI

- the convolution properties of LTIs

- response of an LTI to exponential inputs

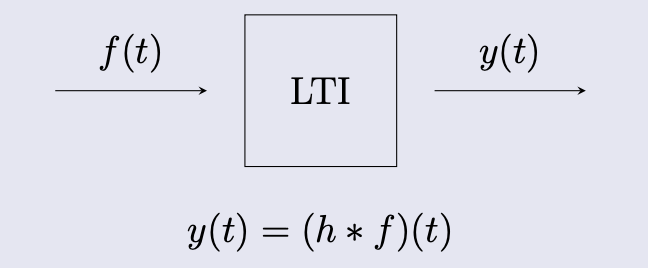

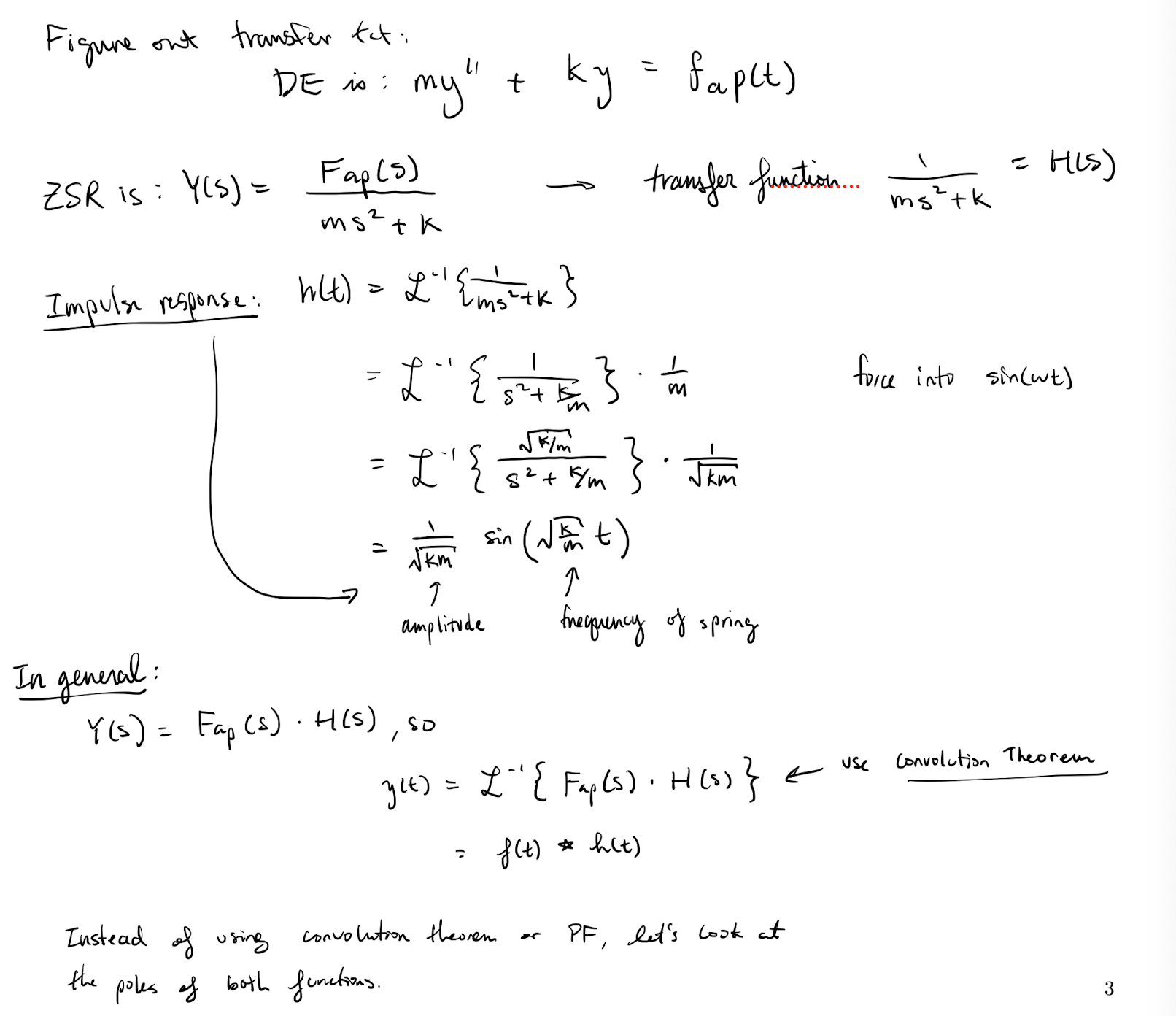

Recap of some DE results:

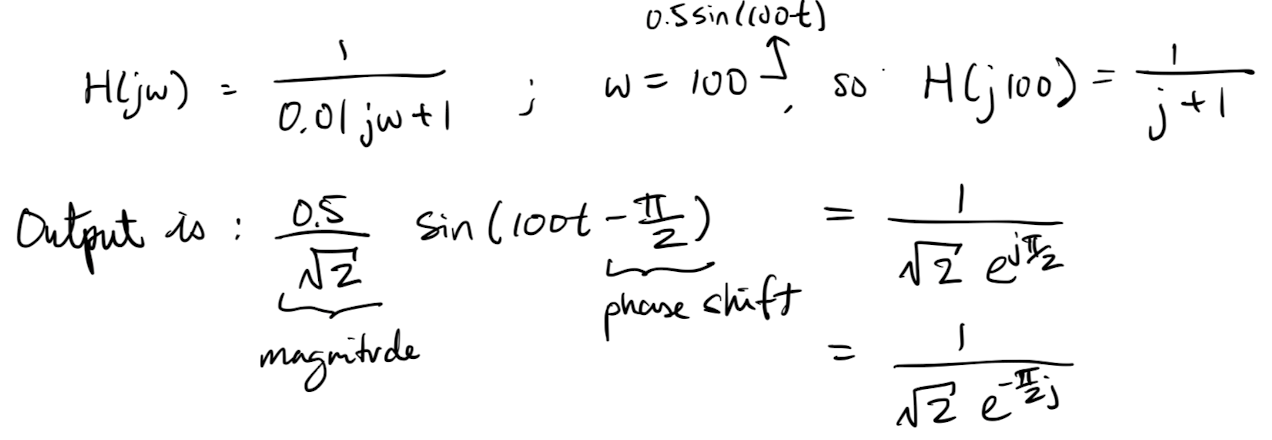

Recall that for a linear DE with constant coefficients the zero state response is

$$Y(s) = H(s)\, F(s)$$

where:

- $Y(s)$ is the Laplace transform of the output signal,

- $H(s)$ is the transfer function of the DE, and

- $F(s)$ is the Laplace transform of the forcing term (input signal).

If the forcing term is the delta impulse function (i.e. $f(t) = \delta(t)$), then $F(s) = 1$ and thus

$$Y(s) = H(s)$$

In this case, $Y(s)$ represents the response of the system to an impulse input. Consequently, the inverse Laplace transform of $H(s)$, denoted as $h(t)$, is termed the impulse response of the DE. The impulse response describes the behaviour of the system when subjected to an impulse input, which is often a critical characteristic in analyzing system dynamics and properties.

Thus we also call

$$h(t) = \mathcal{L}^{-1}\{H(s)\}$$

the impulse response of the DE.

Understanding the impulse response of an LTI system is fundamental, as it provides insights into how the system responds to sudden changes or impulses in the input signal.

Note

This is simply the zero-state response to a unit impulse. Thus when we write

$$Y(s) = H(s)\, F(s)$$

the transfer function, $H(s)$, is always just the Laplace transform of the impulse response.

Finally by the convolution theorem the zero-state solution is simply

$$y(t) = (h * f)(t)$$

More explanation

Furthermore, by the convolution theorem, the zero-state solution (the solution without considering initial conditions) can be expressed as the convolution of the impulse response $h(t)$ with the input signal $f(t)$, denoted as $(h * f)(t)$.

In summary, the transfer function encapsulates the system’s behaviour and characteristics, particularly its response to an impulse input. The convolution theorem provides a powerful tool for analyzing LTI systems by relating the input and output signals through convolution with the impulse response. Understanding these concepts is crucial for analyzing and designing LTI systems in various engineering applications.

is the impulse?

Impulse response of a LTI:

The above holds for linear DEs with constant coefficients, but what about more general LTIs? In general these might not be able to be modelled by a DE… so how do we find their responses to general inputs?

Theorem 1

The response of an LTI system is the convolution of the input with the system’s impulse response.

Proving this in the CT case is a bit messy so we will show that the result holds for the DT case and then make the connection to the CT case.

First we need to define some DT analogues of CT objects we have worked with.

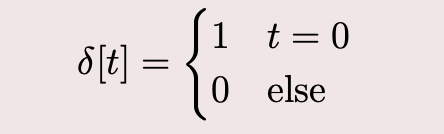

Definition 1: Kronecker delta

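The Kronecker delta function is defined as the function from $\mathbb{Z}$ to $\{0, 1\}$ given by:

$$\delta[n] = \begin{cases} 1 & n = 0 \\ 0 & n \neq 0 \end{cases}$$

Theorem 2

For all functions $f : \mathbb{Z} \to \mathbb{C}$,

$$f[n] = \sum_{k=-\infty}^{\infty} f[k]\, \delta[n - k]$$

Theorem 2 shows how to decompose every function into a linear combination of impulse functions (i.e. $\{\delta[n - k]\}_{k \in \mathbb{Z}}$ is a basis for all DT functions.)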

Proof of Theorem 1: in notes.
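The DT version of Theorem 1 is easy to check numerically. The sketch below uses a hypothetical LTI system $y[n] = f[n] + 0.5\,f[n-1]$, finds its impulse response by feeding in the Kronecker delta, and compares the direct output with the convolution sum. The sum is truncated to a finite window, which is fine here because the input has finite length.

```python
def kronecker_delta(n):
    return 1 if n == 0 else 0

def system(f):
    """Hypothetical DT LTI system: y[n] = f[n] + 0.5 * f[n-1]."""
    return lambda n: f(n) + 0.5 * f(n - 1)

# Impulse response: the system's output when the input is the Kronecker delta
h = system(kronecker_delta)

def convolve(f, h, n, support=range(-50, 51)):
    """(f * h)[n] = sum_k f[k] h[n-k], truncated to a finite window of k."""
    return sum(f(k) * h(n - k) for k in support)

# Finite-length input signal: 0, 1, 2, 3, 4, 5 on n = 0..5, zero elsewhere
f = lambda n: float(n) if 0 <= n <= 5 else 0.0

ns = range(-2, 9)
direct = [system(f)(n) for n in ns]        # run the system on f directly
via_conv = [convolve(f, h, n) for n in ns]  # convolve f with impulse response
rebuilt = [convolve(f, kronecker_delta, n) for n in ns]  # Theorem 2: f = f * delta

print(direct)
print(via_conv)
```

`direct` and `via_conv` agree entry by entry, and `rebuilt` reproduces the input itself, which is the impulse decomposition from Theorem 2.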

Clarifications on Theorem 3 and 4

Theorems 3 and 4 provide further insights into the behavior of Linear Time-Invariant (LTI) systems, particularly regarding their response to different types of inputs and how this behavior can be analyzed using the Laplace transform.

Theorem 3: More explicit version of Theorem 1

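Given the LTI $S$ and an input signal $f$,

$$S[f](t) = (h * f)(t) = \int_{-\infty}^{\infty} h(\tau)\, f(t - \tau)\, d\tau$$

where $h$ is the impulse response of the system.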

Theorem 4: LTI response to an exponential

If $S$ is an LTI then for any $s \in \mathbb{C}$, $S[e^{st}] = H(s)\, e^{st}$, where $H(s)$ is the LT of the system’s impulse response and is called the system’s transfer function.

Note this means that complex exponentials are the eigenfunctions of LTIs and the transfer function tells you the eigenvalues of the system! (like with DEs)

Thm 4 explanation

This theorem states that if $S$ represents an LTI system, then the response of the system to a complex exponential input $e^{st}$ is given by $H(s)e^{st}$, where $H(s)$ is the Laplace transform of the system’s impulse response. This is referred to as the system’s transfer function. Furthermore, it highlights that complex exponentials are eigenfunctions of LTI systems, and the transfer function gives the corresponding eigenvalue $H(s)$ for each $s$, similar to the behaviour of differential equations.

Proof: in notes.
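The eigenfunction property can be checked numerically. The sketch below assumes a hypothetical impulse response $h(t) = e^{-at}$ for $t \geq 0$ (so $H(s) = \frac{1}{s+a}$) and approximates the convolution integral with the trapezoid rule; the function names and parameter values are illustrative only.

```python
import cmath


def response_to_exponential(s, t, a=1.0, upper=40.0, steps=40000):
    """Trapezoid-rule estimate of (h * f)(t) for h(tau) = exp(-a*tau), tau >= 0,
    and f(t) = exp(s*t). The integral is truncated at tau = upper."""
    dt = upper / steps
    total = 0.0 + 0.0j
    for k in range(steps + 1):
        tau = k * dt
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * cmath.exp(-a * tau) * cmath.exp(s * (t - tau))
    return total * dt


def transfer_function(s, a=1.0):
    """Laplace transform of h(t) = exp(-a*t): H(s) = 1 / (s + a)."""
    return 1.0 / (s + a)


s = -0.5 + 2.0j          # needs Re(s) > -a for the integral to converge
t = 1.3
numeric = response_to_exponential(s, t)
predicted = transfer_function(s) * cmath.exp(s * t)

print(numeric)
print(predicted)
print(abs(numeric - predicted))   # small: the output is H(s) times the input
```

The output has the same shape $e^{st}$ as the input, just scaled by the complex number $H(s)$.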

TLDR; In general the response of a LTI system to an input $f(t)$ is given by

$$y(t) = (h * f)(t)$$

and since the eigenfunctions of LTIs are complex exponentials, we can decompose $f(t)$ into an eigenbasis of complex exponentials (like we did with DEs).

In the frequency domain we have

$$Y(s) = H(s)F(s)$$

Hence, if we want to analyze the system we can replace convolution with multiplication and simply analyze the system based on its eigenfunction decomposition, poles, etc. (like we did with DEs).

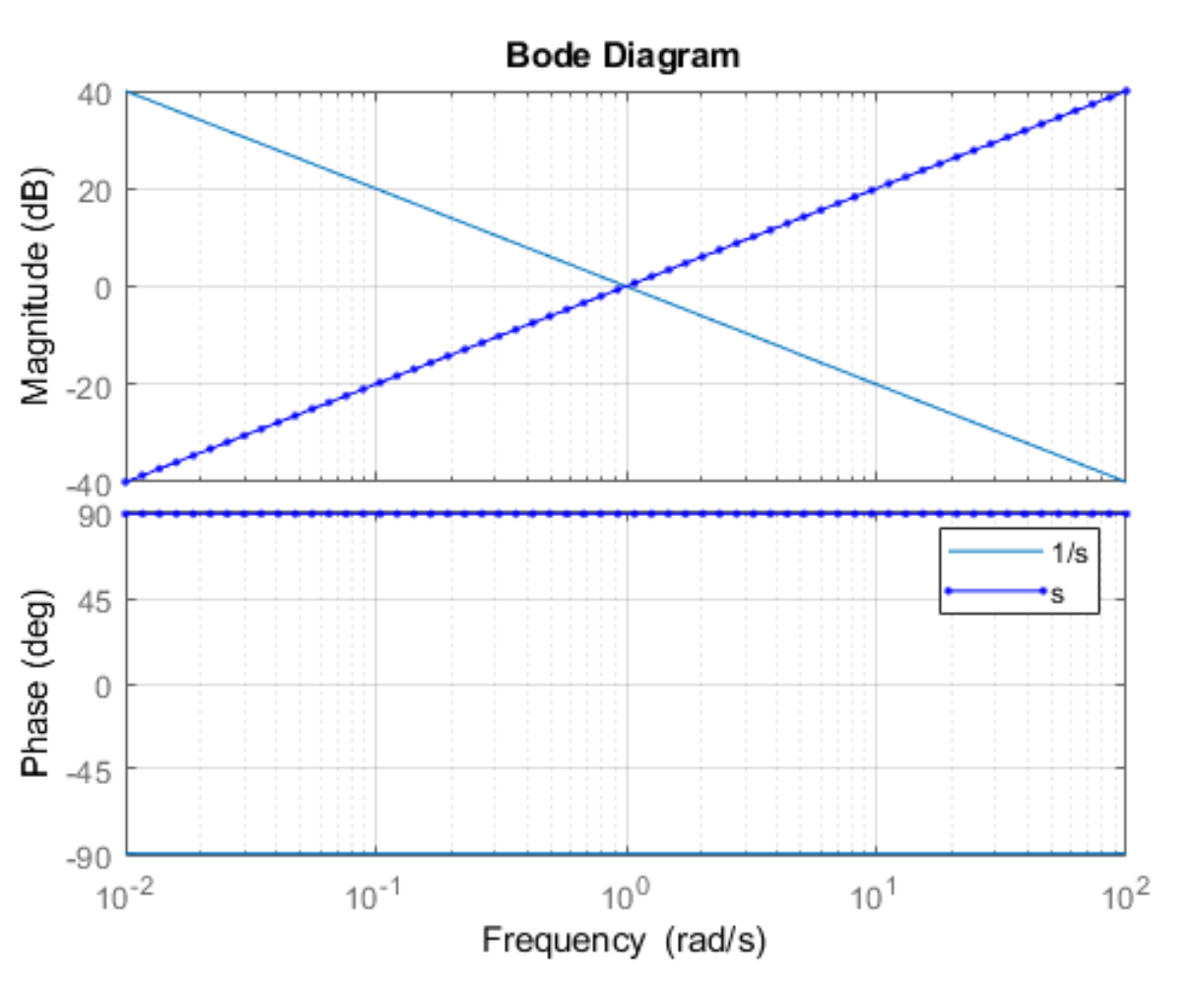

Lecture 14 - Transfer functions, Responses and the standard first order system

Lecture goals

- Understand the connection between our previous material for DEs and systems

- Know what the standard 1st order system is

If a system is able to be modelled by a linear DE with constant coefficients, i.e. , then the transfer function can be obtained from the differential equation.

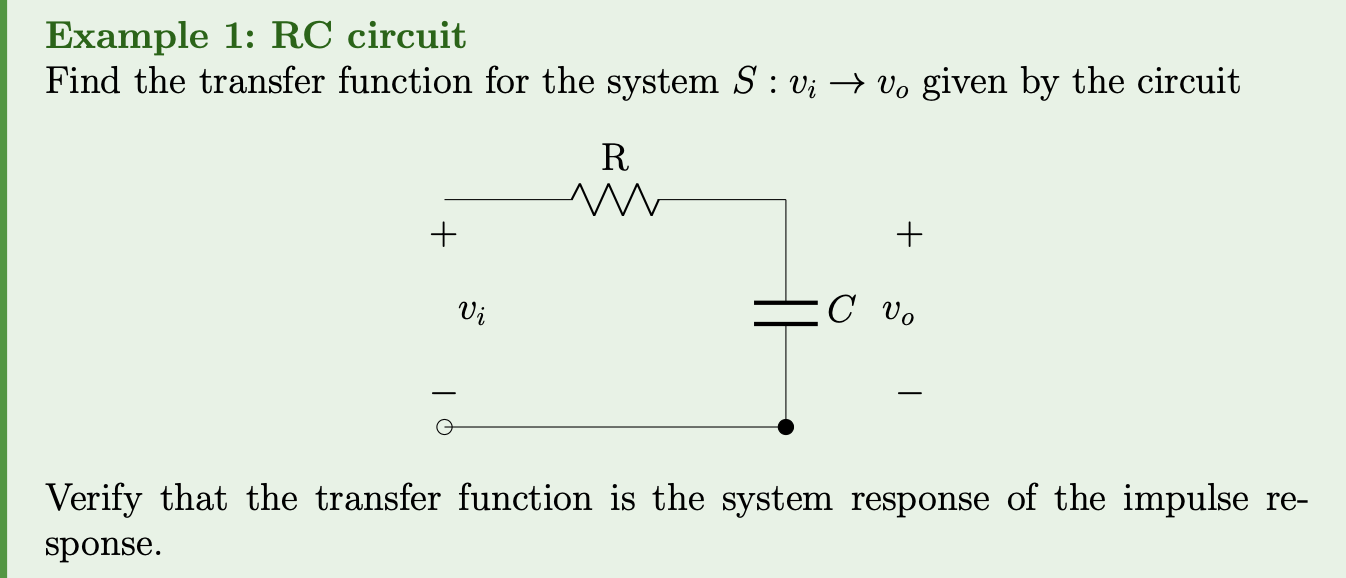

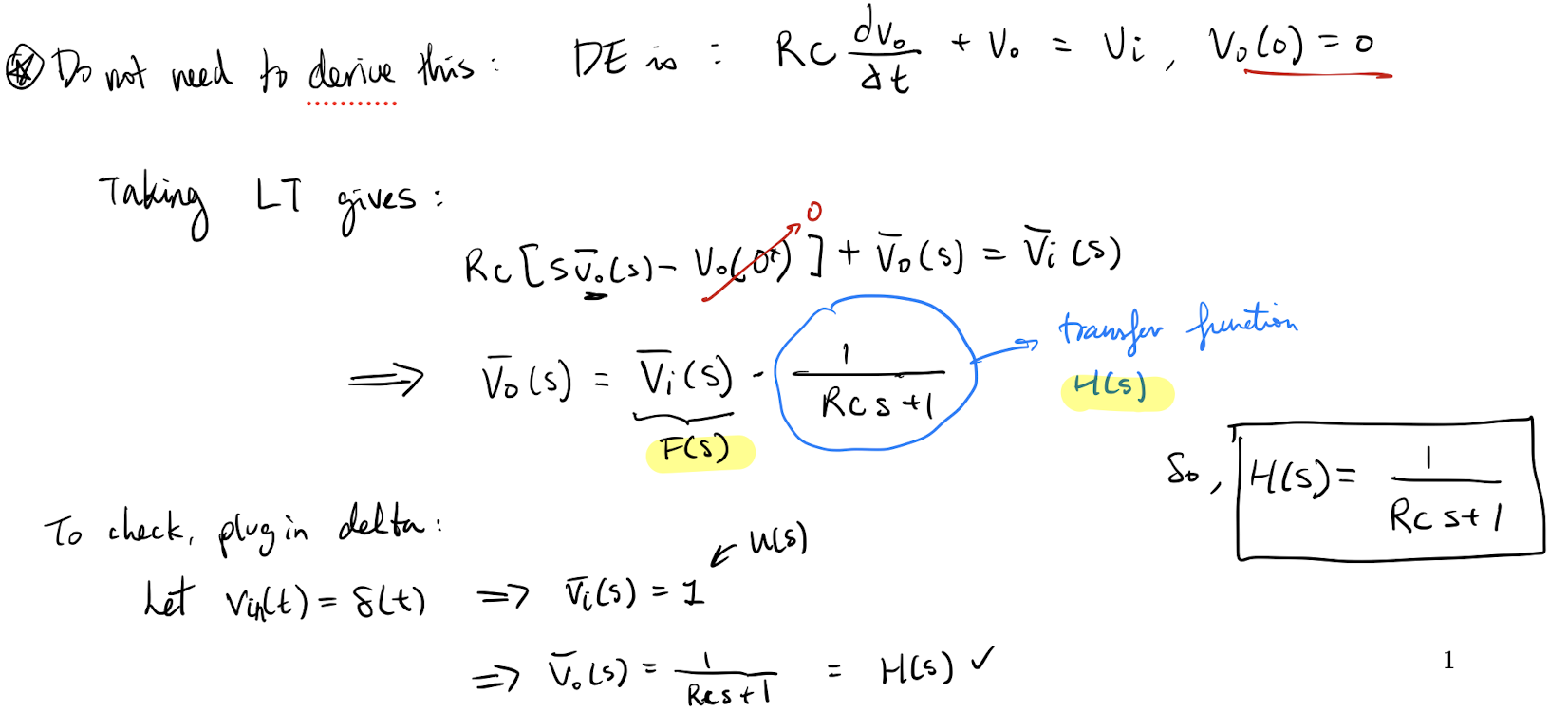

These examples illustrate the application of transfer functions and impulse responses in analyzing system behavior. The first example involves an RC circuit, a common electrical system, while the second example deals with a damped linear harmonic oscillator, a common mechanical system.

Understanding these examples helps in grasping the practical applications of transfer functions and responses in various engineering systems. It also highlights the connection between differential equations and system analysis, showing how concepts from one domain can be applied to understand and analyze systems in another domain.

A few things to note:

- In general $F(s)$ can have poles of its own and thus the system response to $f(t)$ will reflect the poles of both the transfer function, $H(s)$, and the LT of the forcing term, $F(s)$.

- The effects of the transfer function are present for any input so it is particularly important to understand the effects of the poles (and zeros) of $H(s)$.

- We will mostly look at the cases where the input is a unit impulse, $\delta(t)$, or a unit step, $u(t)$. Recall from A3 Q3 that for a second order DE these terms allow us to effectively set the initial condition at $t = 0$ for the system.

- The response to the unit impulse is $Y(s) = H(s)$.

- The response to the unit step is $Y(s) = \frac{H(s)}{s}$.

- The step response is the integral of the impulse response (subtle connection to L12…)

In the “real world” it is often easier to physically generate a unit step function than a unit impulse so point 2 above gives us a nice way to compute transfer functions.

Understanding general system responses

Recall that all polynomials with real valued coefficients can be factored into a product of linear and quadratic terms. Hence if we want to understand the system response of any system, it is sufficient to understand how first and second order linear systems respond. We will thus explore the standard 1st and 2nd order systems in detail. All other LTIs can be studied by taking a linear combination of the results of the standard systems.

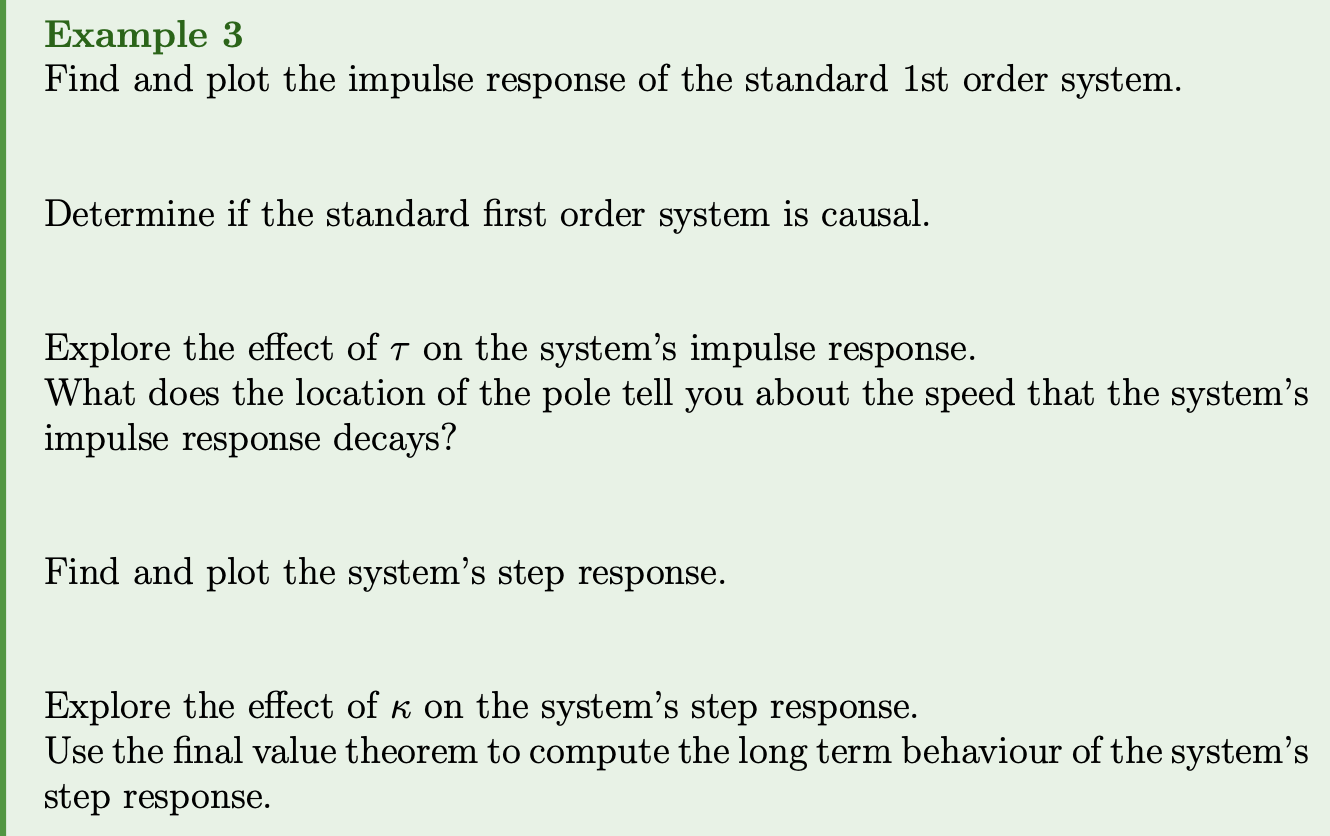

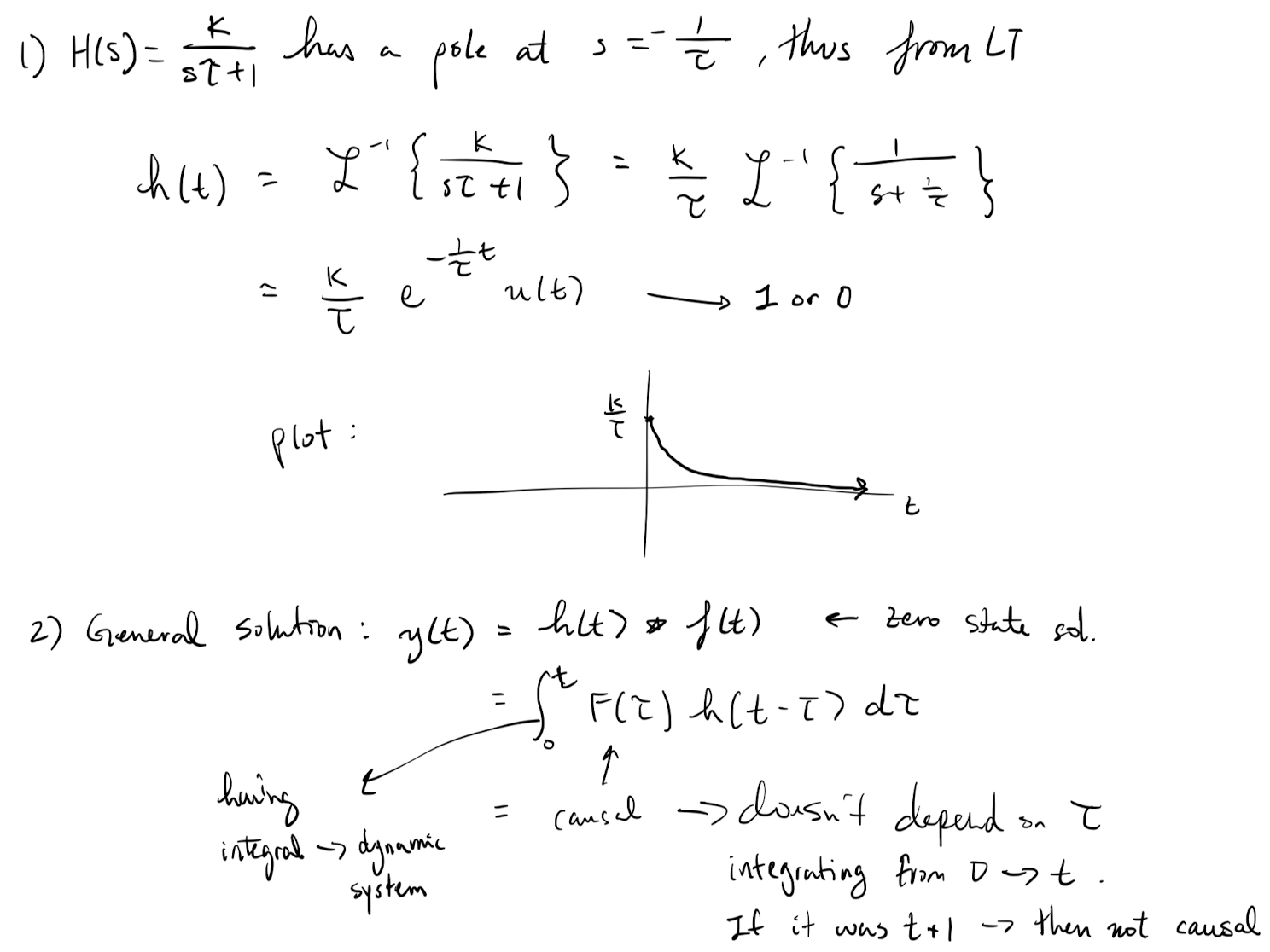

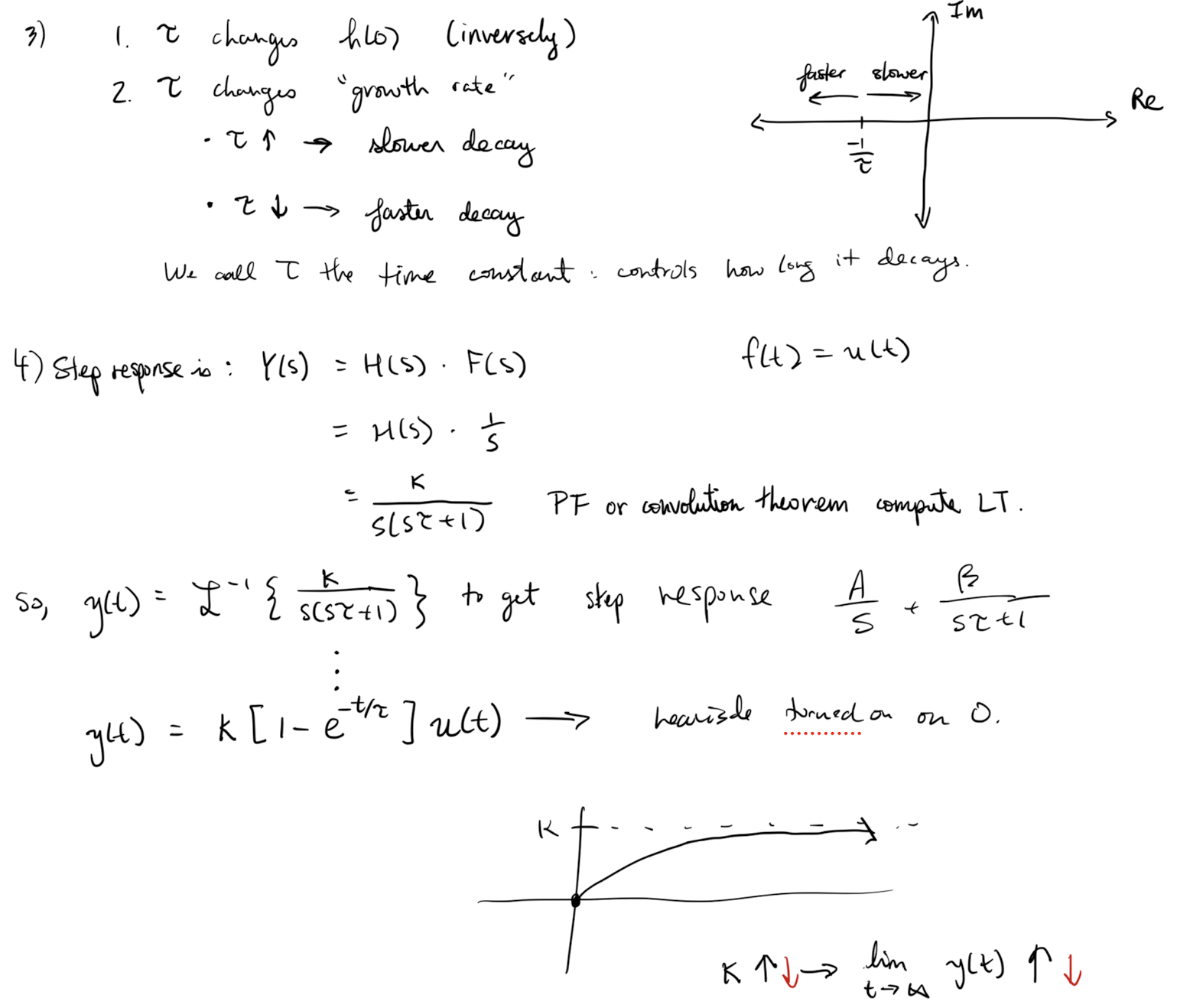

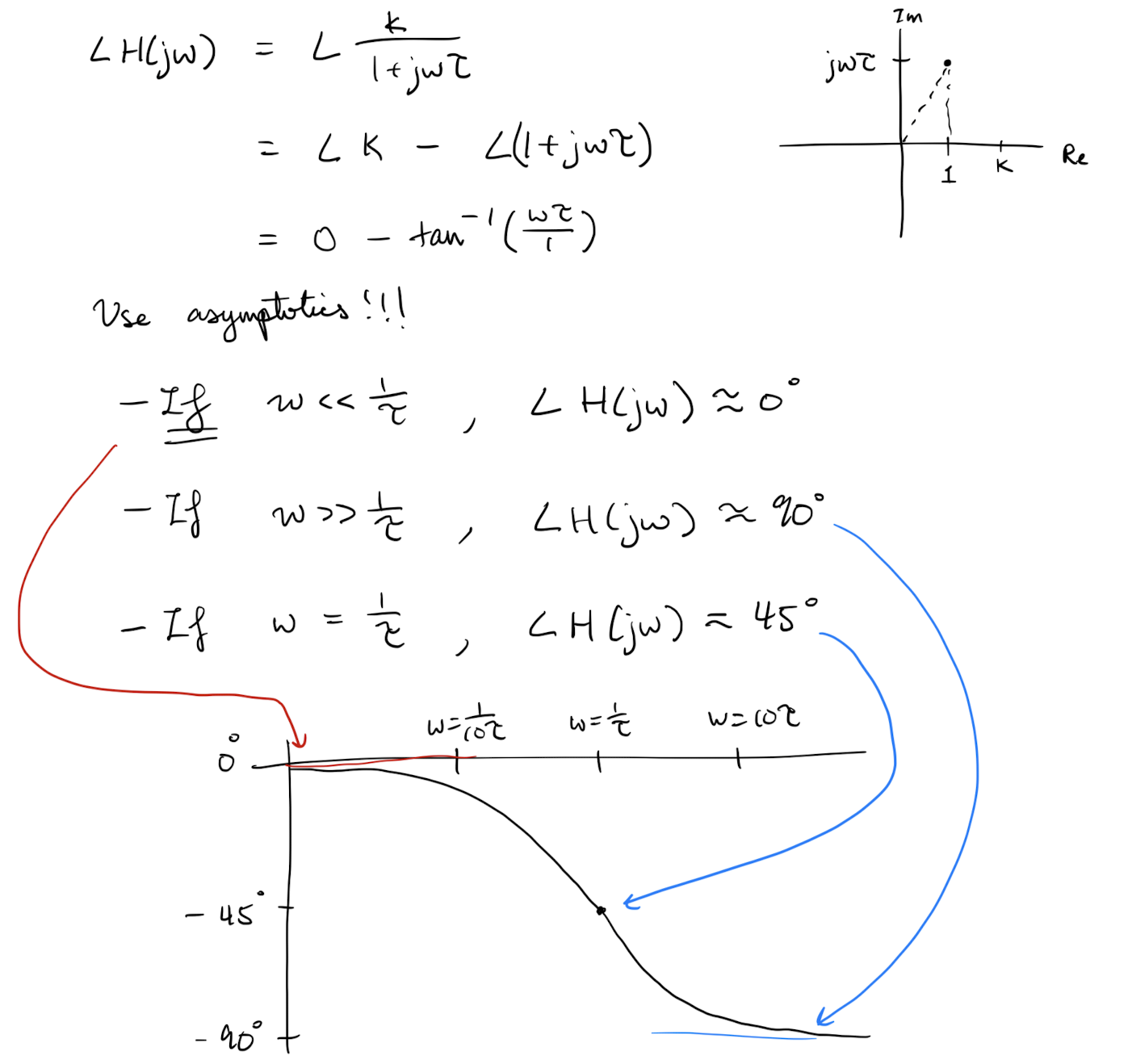

Definition 1: Standard 1st order system

The standard 1st order system has the transfer function $H(s) = \frac{K}{\tau s + 1}$ where $\tau > 0$. $K$ is called the DC gain and $\tau$ is called the time constant.

In this transfer function:

- $K$ represents the gain of the system at DC (zero frequency).

- $\tau$ determines the rate at which the system responds to input changes. A larger $\tau$ indicates slower response, while a smaller $\tau$ indicates faster response.
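To make the roles of the DC gain and the time constant concrete, here is a small sketch. It assumes the standard form $H(s) = \frac{K}{\tau s + 1}$, for which the impulse response is $\frac{K}{\tau}e^{-t/\tau}$ and the step response is $K(1 - e^{-t/\tau})$; the numeric values of `K` and `tau` are made up for illustration.

```python
import math


def impulse_response(t, K, tau):
    """Impulse response of H(s) = K / (tau*s + 1) for t >= 0."""
    return (K / tau) * math.exp(-t / tau)


def step_response(t, K, tau):
    """Step response of H(s) = K / (tau*s + 1) for t >= 0."""
    return K * (1.0 - math.exp(-t / tau))


def integrate_impulse(t, K, tau, steps=10000):
    """Trapezoid-rule integral of the impulse response from 0 to t."""
    dt = t / steps
    total = 0.5 * (impulse_response(0.0, K, tau) + impulse_response(t, K, tau))
    total += sum(impulse_response(k * dt, K, tau) for k in range(1, steps))
    return total * dt


K, tau = 2.0, 0.5   # hypothetical DC gain and time constant

# Fraction of the final value K reached after one and five time constants.
print(step_response(tau, K, tau) / K)
print(step_response(5 * tau, K, tau) / K)

# The step response should match the running integral of the impulse response.
print(step_response(1.0, K, tau), integrate_impulse(1.0, K, tau))
```

After one time constant the response has covered $1 - e^{-1}$ (roughly 63%) of the way to $K$, regardless of the values chosen.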

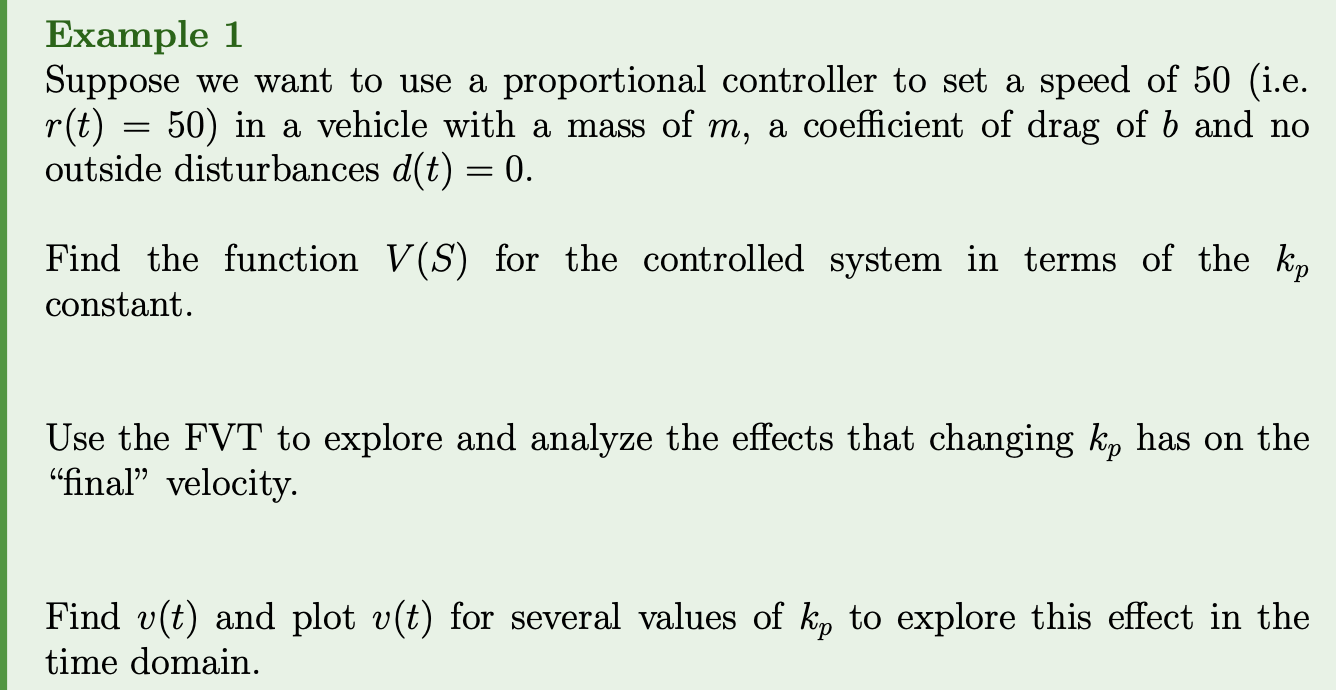

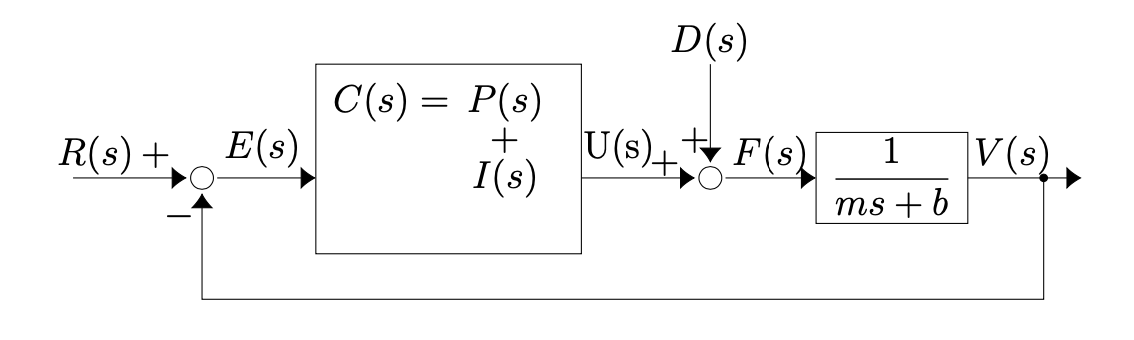

Lecture 15 - Basic Control Theory - Cruise Control

Lecture goals:

- Understand the basics of closed control loops

- What proportional and integral control systems are

- Their benefits/limitations and how to set them up to control a system

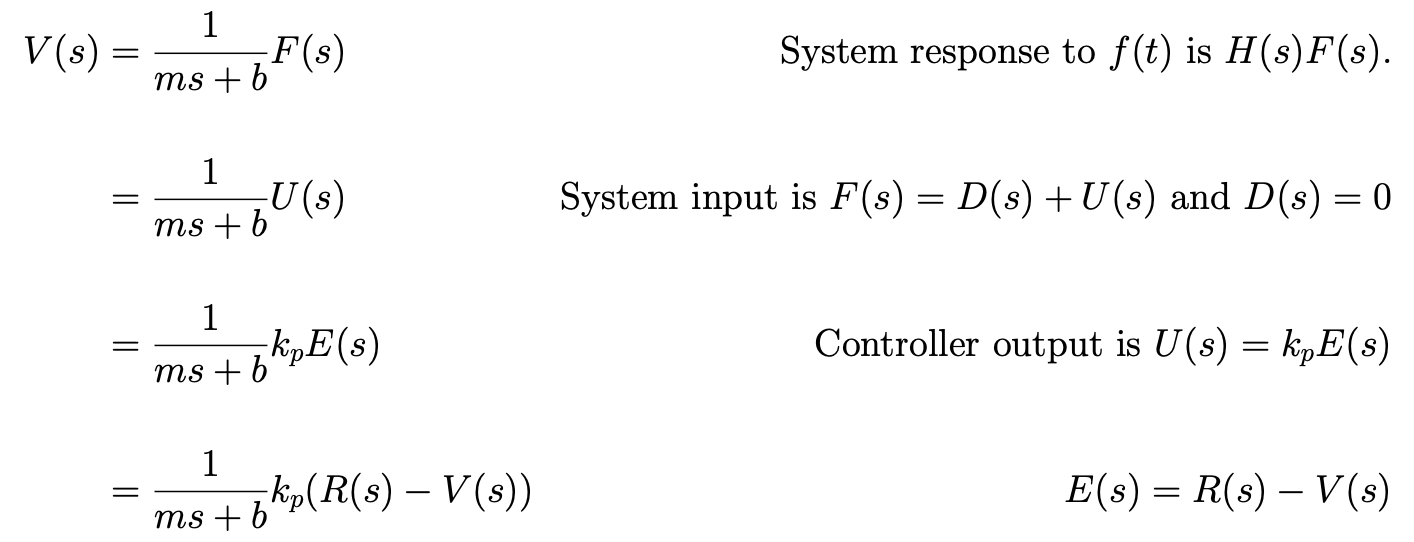

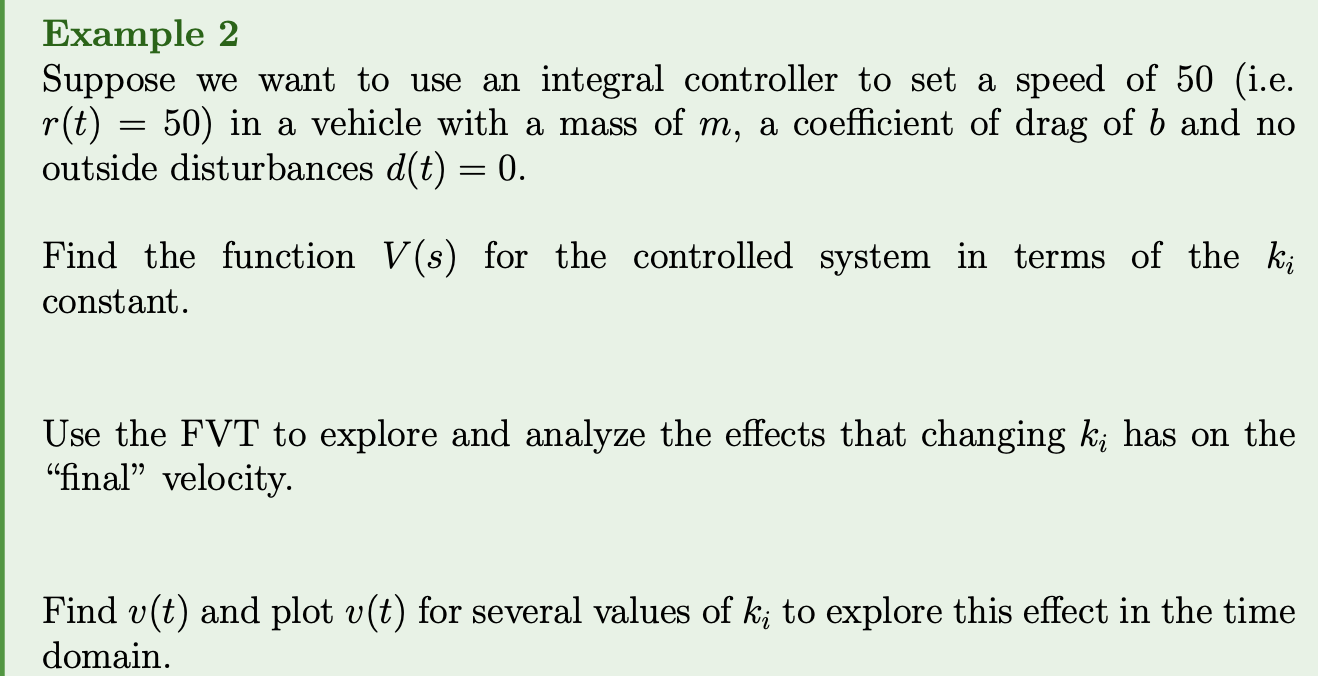

Consider the simple system that models the velocity of a vehicle:

$$m v'(t) + b v(t) = f(t)$$

where $v(t)$ is the velocity of the vehicle, $m$ is the mass, $b$ is the coefficient of drag and $f(t)$ is the velocity forcing term which is a combination of the effects of the engine/brakes as well as the effects of the road (hills etc).

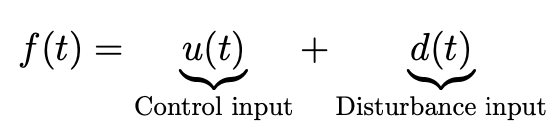

It is nice to split up the input into terms we can control (brakes/gas) and terms we can’t (road conditions):

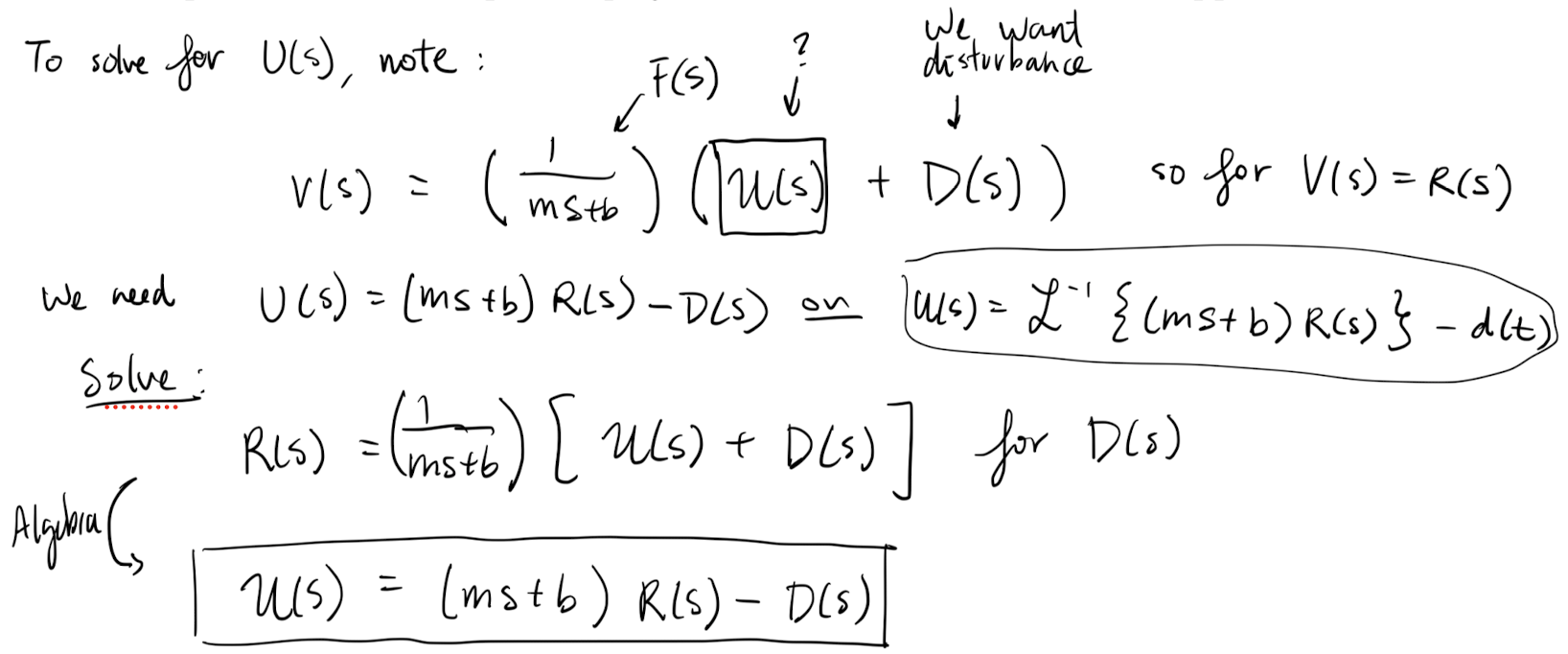

Goal: Find so that the vehicle velocity follows some given reference input, .

Solution: We use a “controller” to adjust to obtain our desired velocity. We do this in frequency space.

We examine two options.

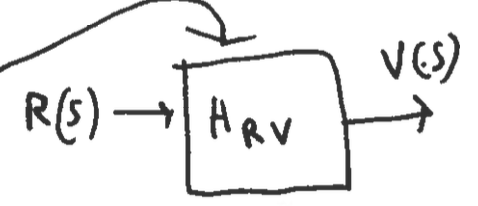

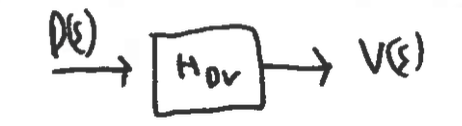

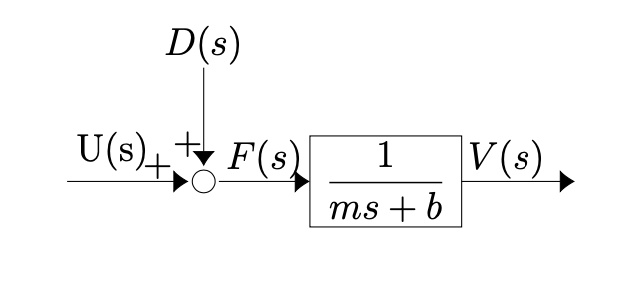

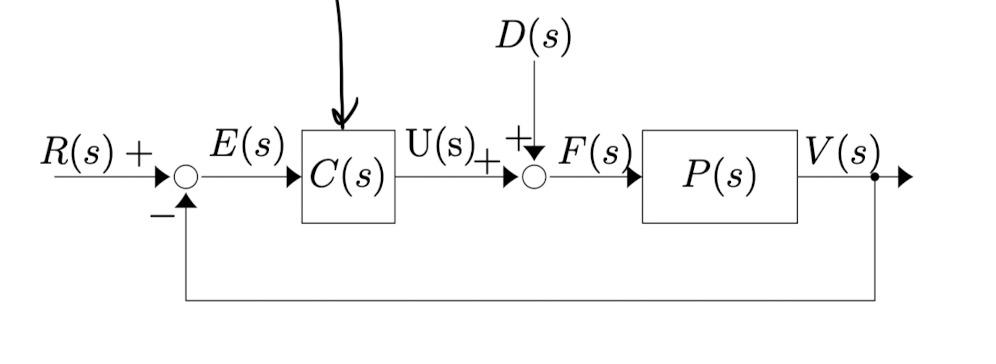

Open loop controller:

The box is the car system $H(s)$.

Here:

- $F_c(s)$ is the Laplace transform of the control input.

- $F_d(s)$ is the Laplace transform of the disturbance input.

- $F(s)$ is the Laplace transform of the total forcing term. ($F(s) = F_c(s) + F_d(s)$)

- $V(s)$ is the Laplace transform of the resulting velocity.

These controllers only work well if we know the disturbance the system undergoes, if the system always needs a constant known output or if the user can make adjustments as needed.

Some applications include washers/dryers for clothing, toasters, watering systems, stepper motors, etc., but open loop systems do not work well for our application.

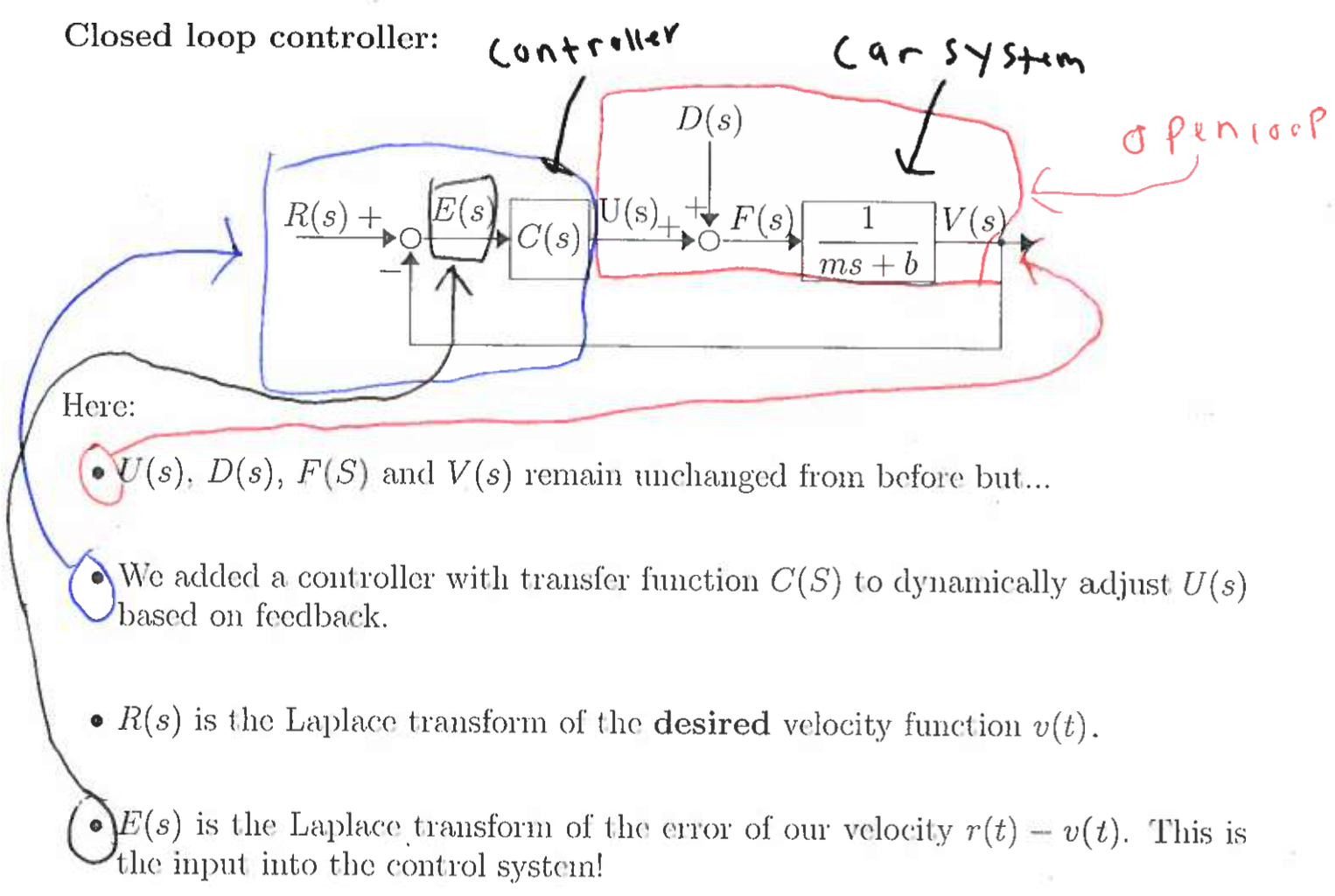

Closed loop controller:

Here:

- and remain unchanged from before but…

- We added a controller with transfer function $C(s)$ to dynamically adjust the control input based on feedback.

- $R(s)$ is the Laplace transform of the desired velocity function $r(t)$.

- $E(s)$ is the Laplace transform of the error of our velocity, $e(t) = r(t) - v(t)$. This is the input into the control system!

New Goal: Find a transfer function $C(s)$ such that $e(t) \to 0$ (as $t \to \infty$) and hence we have the desired velocity i.e. $v(t) \to r(t)$.

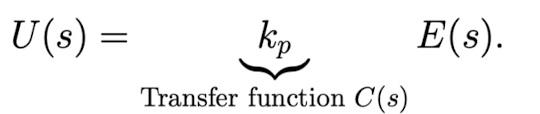

Proportional control:

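Idea: Let the control input be proportional to the error, i.e. $k_p e(t)$ with $e(t) = r(t) - v(t)$ (desired velocity minus actual velocity), for $k_p > 0$. In this case

- if $e(t) > 0$ then we “hit the gas” to speed up,

- if $e(t) < 0$ we “hit the brakes” to slow down and

- if $e(t) = 0$ we do nothing.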

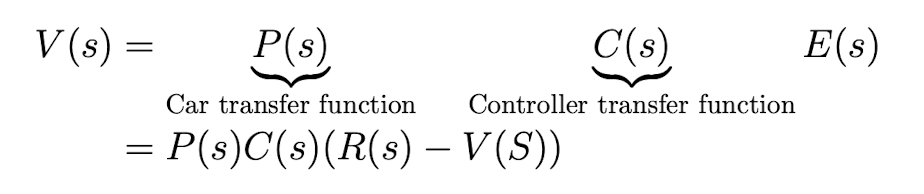

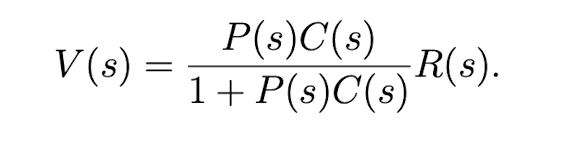

We will explore how this works in the case where .

If we use this controller then

We have

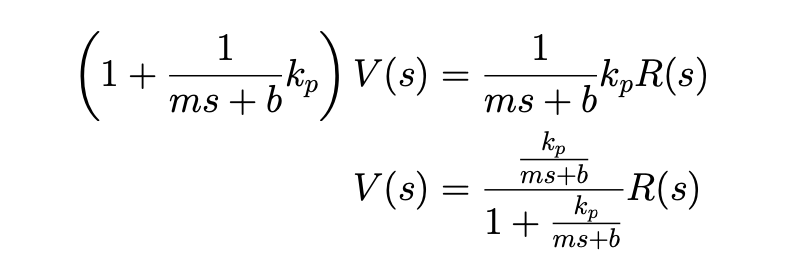

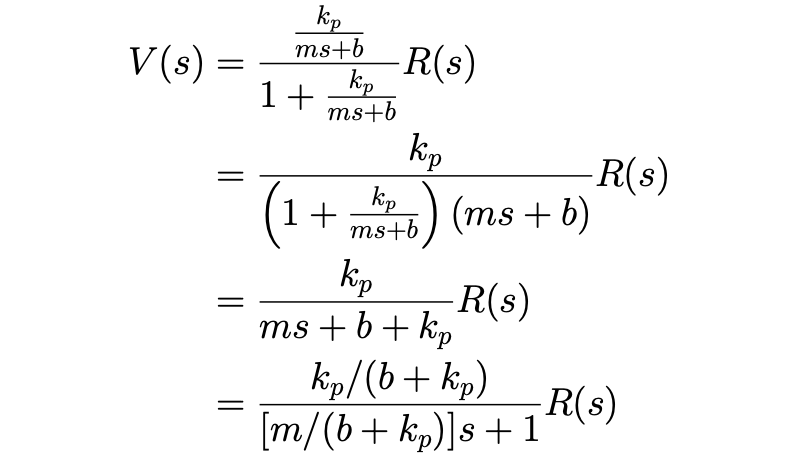

Solve for :

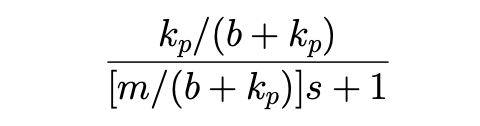

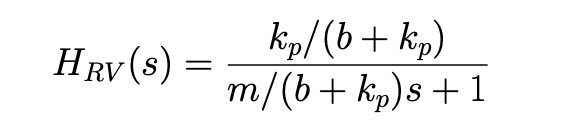

Let’s write this in standard form:

Just algebra. : What comes out of the system (the fraction) given .

The transfer function for the controlled system is

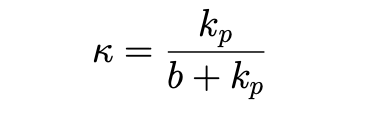

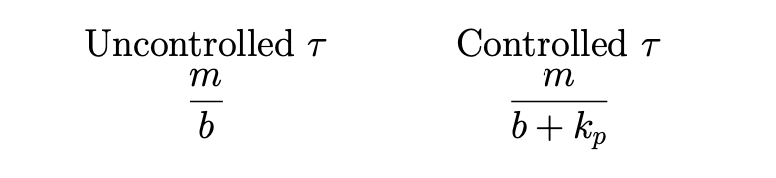

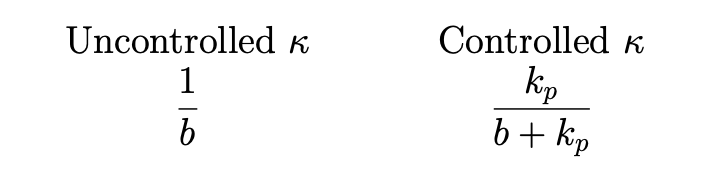

which is the transfer function for a first order system with a DC gain of (number on top. Defined in the last lecture.)

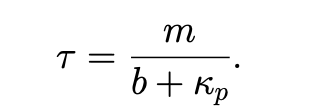

and a time constant of

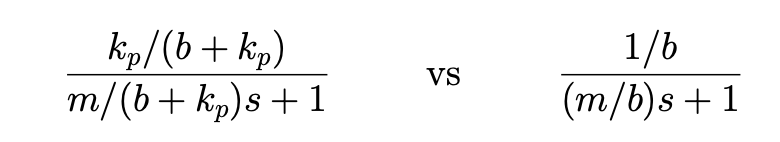

This transfer function looks similar to the transfer function for the uncontrolled car:

but we can now adjust the DC gain and time constants by changing .

We will call this transfer function for the controlled system

Controlled system

because it controls based on and .

If we want to account for a disturbance term, we can simply find a transfer function such that .

Pic:

By linear superposition (all the systems are linear) the net response would be

Note

We usually just consider for this course.

Now, we want to pick appropriately so that the system does what we want.

Changing lets us

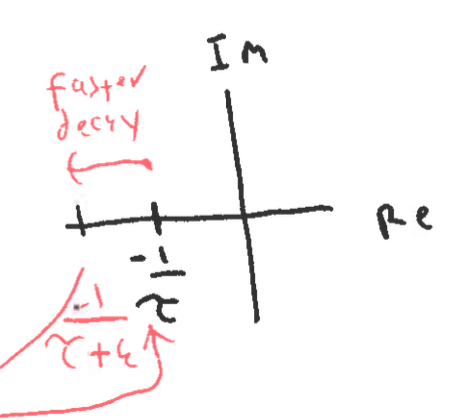

- Adjust the time constant:

For larger values of the proportional gain $k_p$, the time constant $\tau$ becomes smaller.

Recall that a smaller $\tau$ leads to a faster decay to the “final” value of the system (which always exists for the standard first order system).

- Adjust the DC gain

By changing $k_p$, we can make the DC gain take any value between 0 and 1.
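The two effects above can be tabulated with a short sketch. It rests on assumptions that are not confirmed by the text here: the car is modelled as $H(s) = \frac{1}{ms + b}$, the loop uses unity feedback, and the controller is a pure gain `kp`, which gives the closed loop $T(s) = \frac{k_p}{ms + b + k_p}$. The mass and drag values are hypothetical.

```python
def p_controlled_first_order(m, b, kp):
    """DC gain and time constant of T(s) = kp / (m*s + b + kp),
    rewritten in the standard form K / (tau*s + 1)."""
    dc_gain = kp / (b + kp)
    time_constant = m / (b + kp)
    return dc_gain, time_constant


m, b = 1000.0, 50.0   # hypothetical mass and drag coefficient

for kp in (50.0, 500.0, 5000.0):
    gain, tau = p_controlled_first_order(m, b, kp)
    # 1 - gain is the steady-state error for a unit step in the reference.
    print(f"kp={kp:7.1f}  DC gain={gain:.3f}  tau={tau:.3f}  error={1 - gain:.3f}")
```

Under these assumptions, increasing `kp` shrinks the time constant and pushes the DC gain toward 1, but the gain never reaches 1 for any finite `kp`.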

Insert the answers…todo

To see the effects of in the time domain run Lecture15_pcontroller.m.

Major limitation: If the error $e(t)$ is ever 0 then the control input $k_p e(t)$ is also 0.

Thus in the absence of any disturbance to the system (i.e. the disturbance term is 0), the input into the system also vanishes.

Thus, there is no system input and hence the velocity will drop (since the drag term is still slowing the vehicle).

In general proportional controllers can never achieve the perfect asymptotic velocity!

While in this example we can get as close to the desired velocity as we want, for higher order systems proportional controllers tend to overshoot and potentially become unstable for large values of $k_p$.

Hence we introduce

Integral controllers: (average the error!)

Let the control term be proportional to the integral of the error:

$$k_i \int_0^t e(\tau)\,d\tau$$

This will go to zero exactly when the average error is 0. (This controls the average.)

Pic:

It can hence (in principle) bring the average error to 0! We hence repeat the same analysis for this new controller. (What changed? Nothing except the controller's transfer function.)

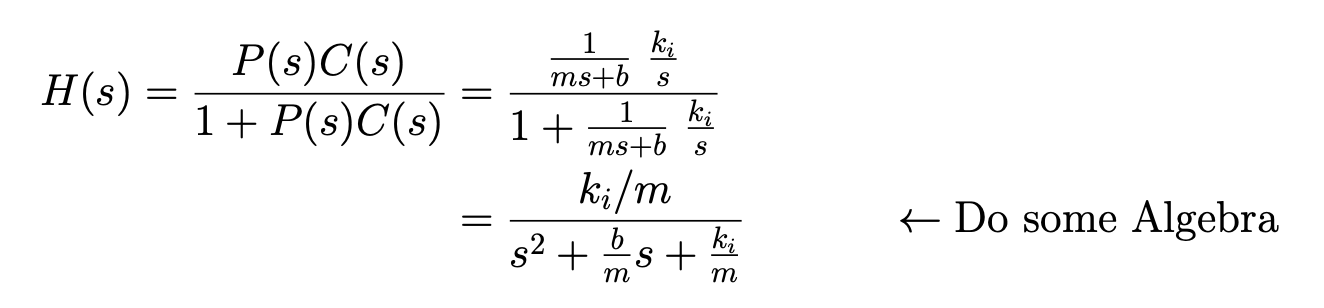

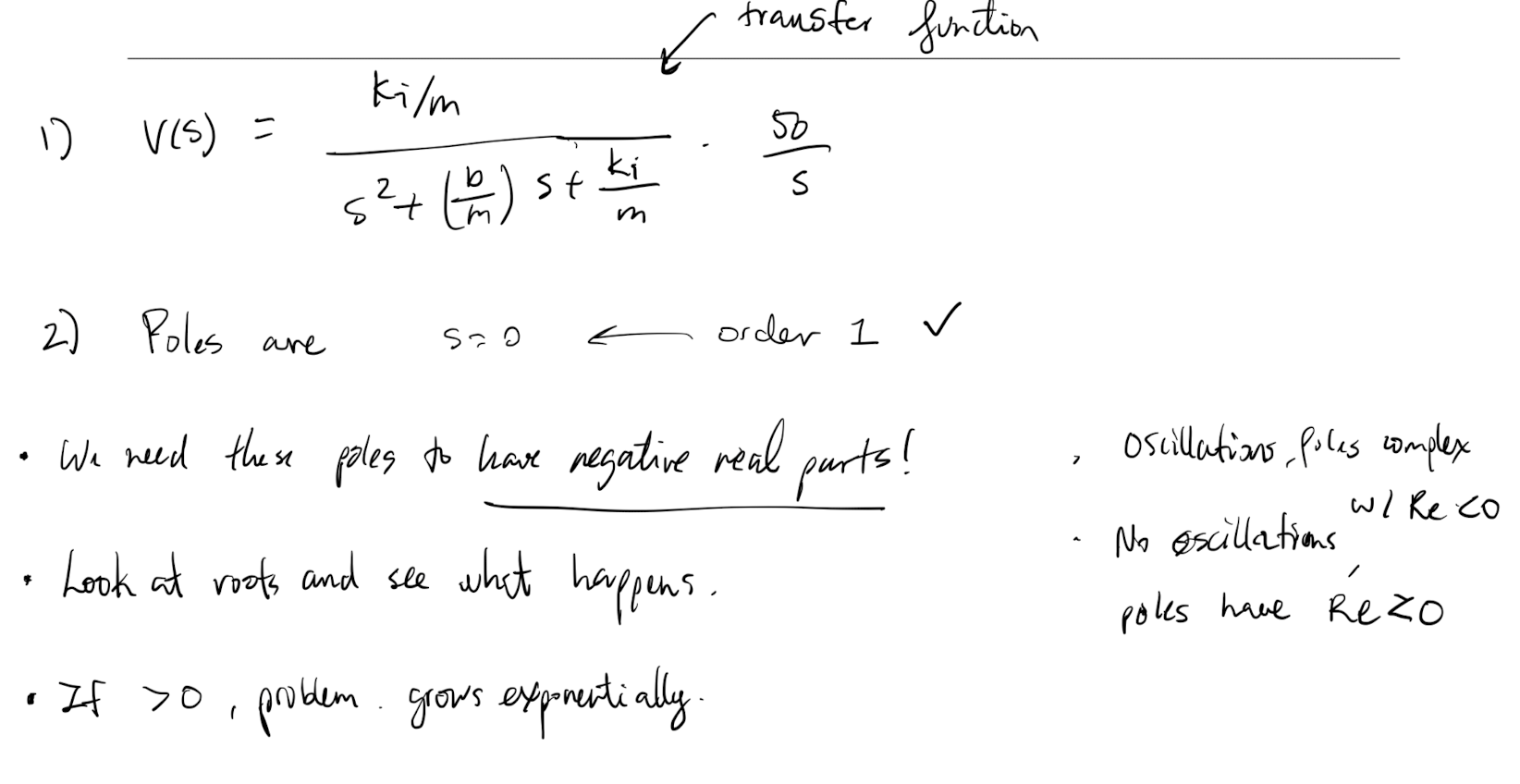

The transfer function for the integral term is $\frac{k_i}{s}$ (Hw 4 Q1). todo

Hence

so

Thus the new controlled system has a transfer function of

$$T(s) = \frac{k_i}{ms^2 + bs + k_i}$$

How is this different from the first one?

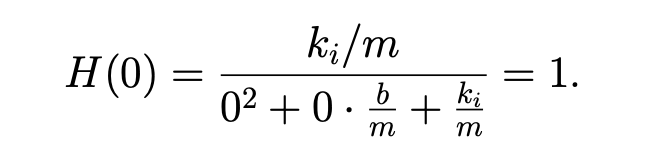

This one is squared!! Notice .

This is the transfer function for a second order system, so we will delay its full analysis until we cover the standard second order system in the next lecture, but the DC gain is $T(0) = 1$.

Now let’s play with this system a bit more.

To see the effects of $k_i$ in the time domain run Lecture15pcontroller.m

Major Limitations:

- Since we adjust based on the integral of the error, integral controllers do not quickly adjust to changes, i.e. they “adjust the average”.

- The above leads to the potential to overshoot the goal velocity. This is also in part because the poles generally come in complex conjugate pairs and hence the solution will have sinusoidal terms that decay to 0.
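The overshoot and the zero steady-state error can both be seen in a rough time-domain simulation. This is only a sketch: it assumes the car obeys $m v' + b v = f_c$ with no disturbance, that the control input is $k_i$ times the running integral of the error, and it uses a simple forward-Euler step. All parameter values are hypothetical.

```python
def simulate_integral_control(m, b, ki, reference=1.0, t_end=30.0, dt=0.001):
    """Forward-Euler simulation of m*v' + b*v = u with
    u(t) = ki * integral of (reference - v) from 0 to t."""
    v = 0.0            # velocity, starting at rest
    integral = 0.0     # running integral of the error
    history = []
    for _ in range(round(t_end / dt)):
        error = reference - v
        integral += error * dt
        u = ki * integral
        v += dt * (u - b * v) / m
        history.append(v)
    return history


velocities = simulate_integral_control(m=1.0, b=1.0, ki=4.0)
peak = max(velocities)
final = velocities[-1]

print(f"peak velocity  = {peak:.3f}")    # above the reference: overshoot
print(f"final velocity = {final:.3f}")   # settles at the reference
```

With these values the closed loop is underdamped, so the velocity overshoots the reference before settling on it.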

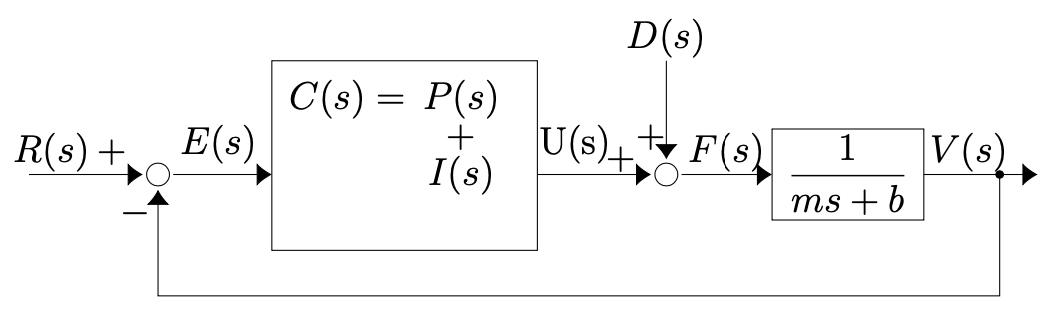

Lecture 16 - Standard 2nd order system, PI controllers and extra poles

Lecture goals:

- Understand the basics of the standard second order system

- Know what a PI controller is.

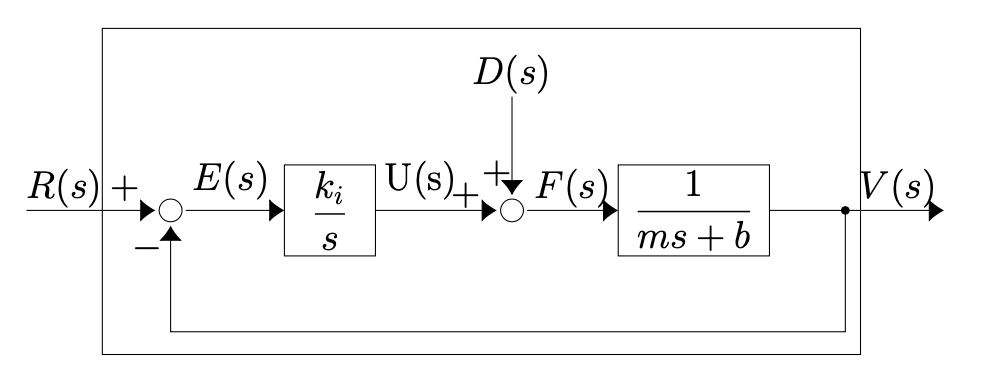

The system diagram for the integrally controlled car cruise control problem from last lecture

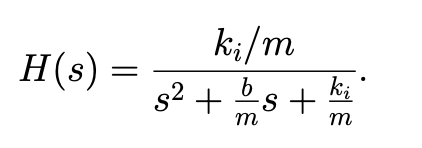

and we found that the transfer function for the controlled system (the big box) is

Do I need to memorize that or is it given in formula sheet??

Systems with transfer functions of this form are common so we will introduce and analyze the standard second order system

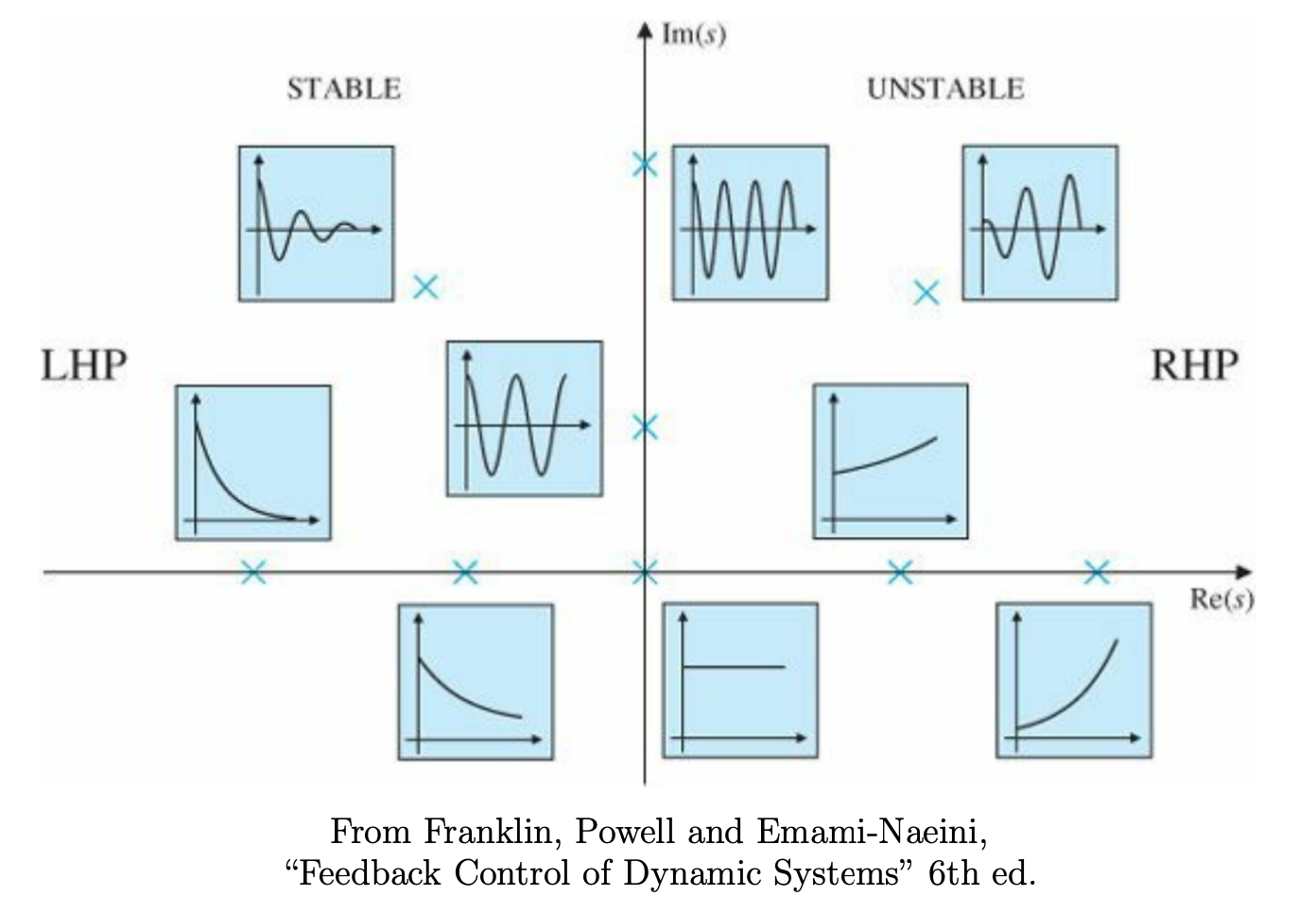

Definition 1: Standard second order system

The standard second order system has a transfer function given by

$$H(s) = \frac{K\omega_n^2}{s^2 + 2\zeta\omega_n s + \omega_n^2}$$

where $\omega_n > 0$ and $\zeta \geq 0$.

Examples of these systems include cruise control with integral control, the harmonic oscillator (with or without damping), and the RLC circuit.

We will now analyze the possible behaviours of the standard second order system. By doing this, we validate the analysis of the cruise control system with an integral controller from the last lecture.

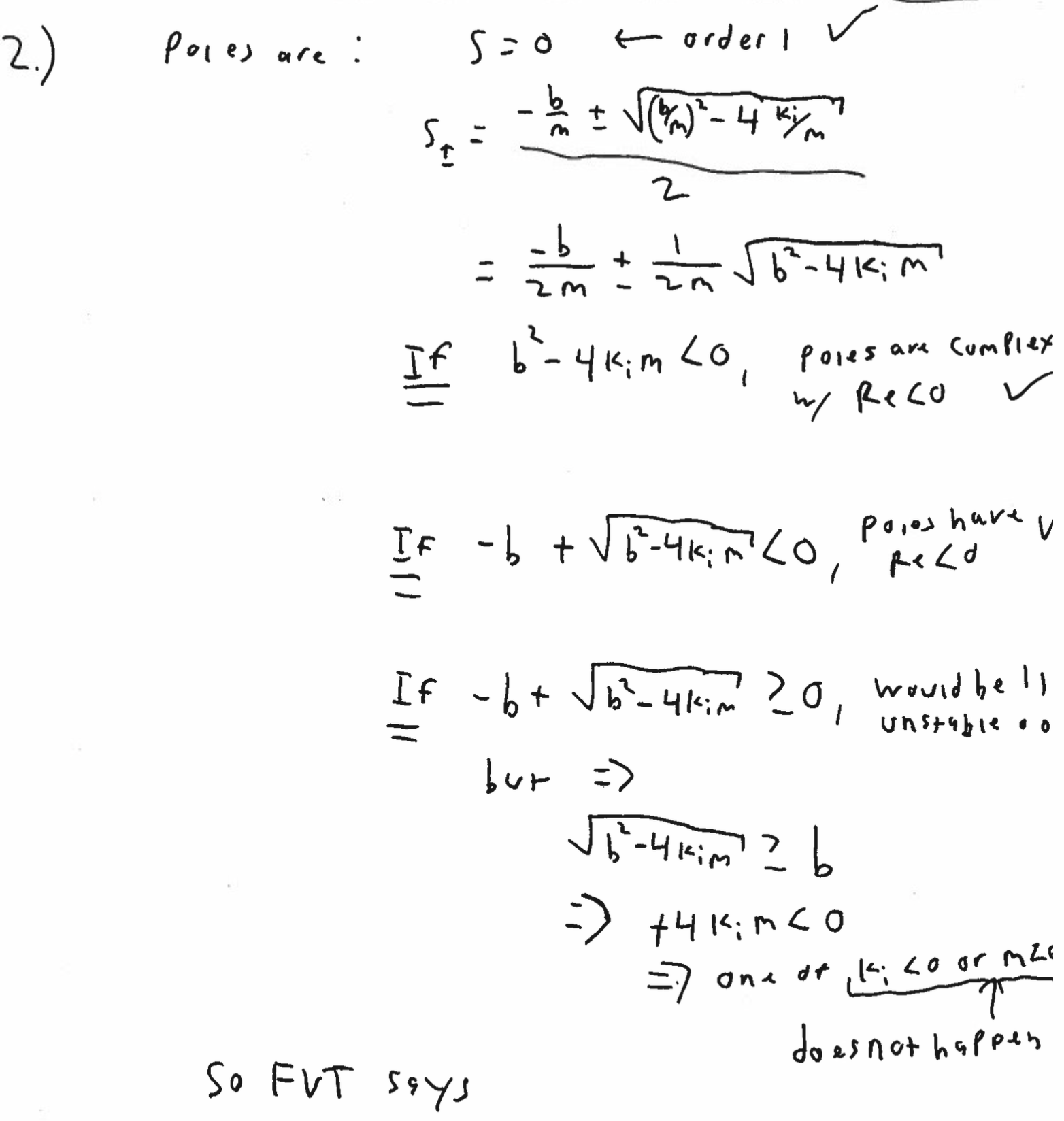

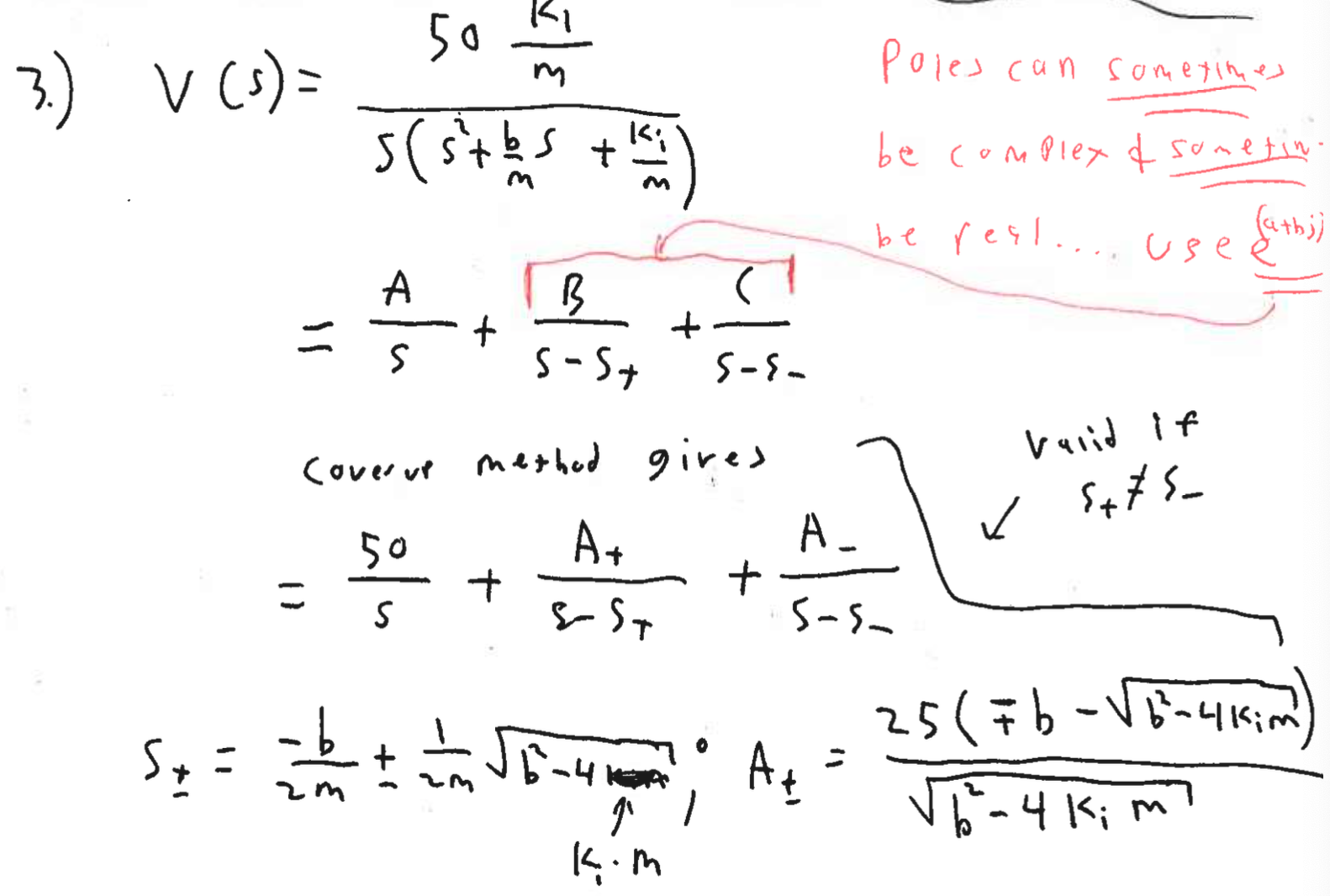

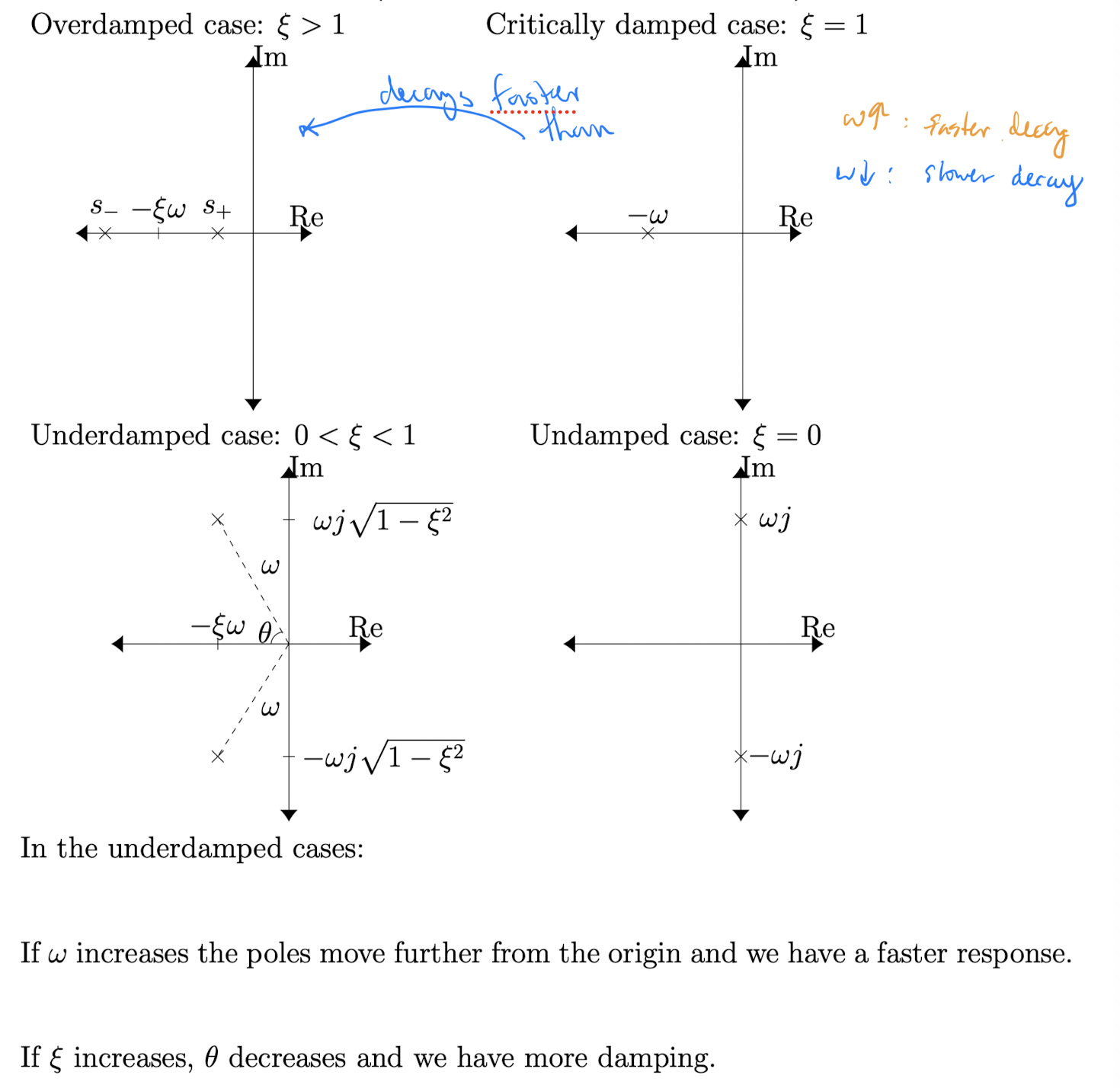

The quadratic formula tells us that the poles of $H(s)$ are

$$s = -\zeta\omega_n \pm \omega_n\sqrt{\zeta^2 - 1}$$

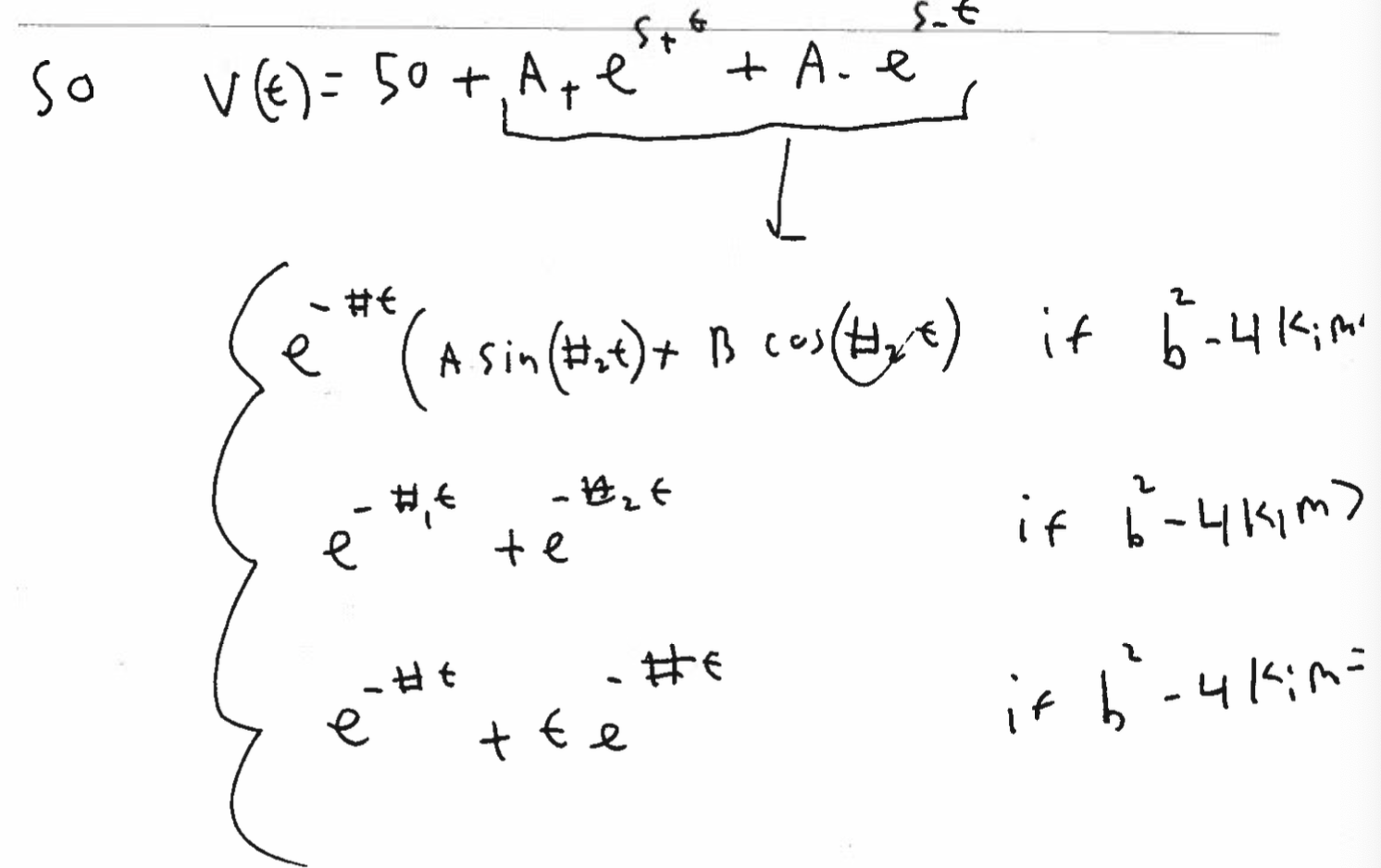

There are hence four different forms of the solution based on where the poles lie.

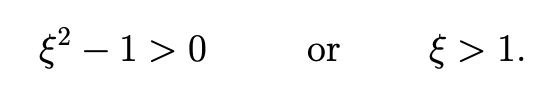

Case 1: Two real distinct poles. If $\zeta^2 - 1 > 0$, then $\sqrt{\zeta^2 - 1}$ is real and greater than 0, so there are two distinct real valued poles.

Since $\zeta \geq 0$ for the standard second order system, this happens when $\zeta > 1$.

In this case we decompose $H(s)$ via PF as

$$H(s) = \frac{A}{s - p_1} + \frac{B}{s - p_2}$$

for some $A, B \in \mathbb{R}$, where $p_{1,2} = -\zeta\omega_n \pm \omega_n\sqrt{\zeta^2 - 1}$ are the two poles.

Thus in this case the standard second order system can be decomposed as a sum of two first order systems!!

To show that these are well behaved first order systems (i.e. remain bounded for bounded inputs), first note that for $\zeta > 1$,

$$\sqrt{\zeta^2 - 1} < \zeta$$

Hence,

$$-\zeta\omega_n \pm \omega_n\sqrt{\zeta^2 - 1} < 0$$

Why

<0?To make it have negative real parts?? I guess so

Thus, the poles of the transfer function have negative real parts and therefore any input that is bounded (i.e. has no poles with positive real part) cannot resonate to cause the system response to be unbounded.

For completion, we will find the system’s impulse response. Using the coverup method and simplifying gives

and hence the system’s impulse response is

which does decay as !

Relating this to A3Q1, this is the overdamped case of the harmonic oscillator

Case 2: One real repeated pole: If $\zeta^2 - 1 = 0$, or simply if $\zeta = 1$, then there is a single repeated pole at

$$s = -\omega_n$$

This pole is negative and hence the system is again well behaved.

For completion we compute the system’s impulse response to examine the solution. In this case

$$H(s) = \frac{K\omega_n^2}{(s + \omega_n)^2}$$

where $K = H(0)$ is the DC gain. Hence the system’s impulse response is simply

$$h(t) = K\omega_n^2\, t\, e^{-\omega_n t}$$

Relating this to A3Q1, this is the critically damped case of the harmonic oscillator.

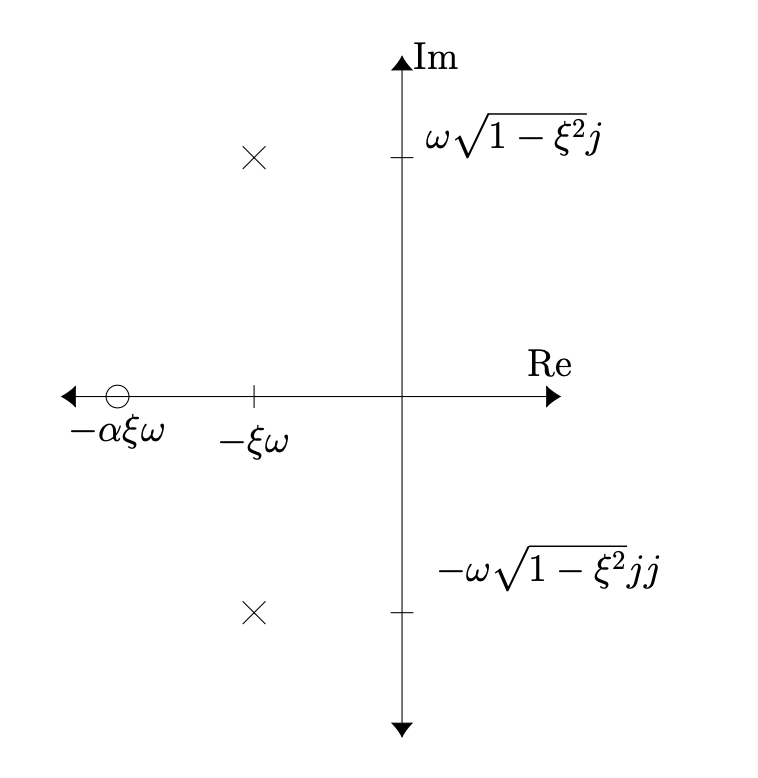

Case 3: Two complex conjugate poles with a non-zero real component: If $\zeta^2 - 1 < 0$, or simply if $0 < \zeta < 1$, then we have complex conjugate poles at

$$s = -\zeta\omega_n \pm j\omega_n\sqrt{1 - \zeta^2}$$

where for ease in future computations, we pulled out the negative from inside the square root.

These roots have negative real parts and hence the system is again well behaved.

We now find the system’s impulse response. We know that the solution will be decaying sine waves so we prepare the transfer function to use the exponential modulation rule and the sine transform.

complete the square or just note the roots^

from the Laplace Table (LT table) we have

I seriously don't know how to compute this with the Laplace Table...

Help, i need the steps
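One way to fill in the steps for Case 3 is sketched below. It assumes the standard form $H(s) = \frac{K\omega_n^2}{s^2 + 2\zeta\omega_n s + \omega_n^2}$ with $0 < \zeta < 1$ (set $K = 1$ if your version of the definition has no gain), and the shorthand $\omega_d = \omega_n\sqrt{1 - \zeta^2}$ is introduced here only for convenience. The table entry used is $\mathcal{L}\{e^{-at}\sin(\omega t)\} = \frac{\omega}{(s + a)^2 + \omega^2}$.

```latex
\begin{align*}
H(s) &= \frac{K\omega_n^2}{s^2 + 2\zeta\omega_n s + \omega_n^2} \\
     &= \frac{K\omega_n^2}{(s + \zeta\omega_n)^2 + \omega_n^2(1 - \zeta^2)}
        && \text{(complete the square)} \\
     &= \frac{K\omega_n^2}{\omega_d}\cdot
        \frac{\omega_d}{(s + \zeta\omega_n)^2 + \omega_d^2},
        \qquad \omega_d = \omega_n\sqrt{1 - \zeta^2}
        && \text{(match the table entry)} \\
h(t) &= \frac{K\omega_n^2}{\omega_d}\, e^{-\zeta\omega_n t}\sin(\omega_d t)
      = \frac{K\omega_n}{\sqrt{1 - \zeta^2}}\, e^{-\zeta\omega_n t}\sin(\omega_d t),
        \qquad t \geq 0
\end{align*}
```

The trick is to multiply and divide by $\omega_d$ so the second factor is exactly the table entry with $a = \zeta\omega_n$ and $\omega = \omega_d$.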

Relating this to A3Q1, this is the underdamped case of the harmonic oscillator.

Case 4: Two complex conjugate poles with a zero real component: If $\zeta\omega_n = 0$, or simply if $\zeta = 0$, then we have complex conjugate poles at

$$s = \pm j\omega_n$$

How is this different from case 2??

Isn’t it also , BUT for case 4, and for case 2, . That’s the difference. Critically damped case vs. Undamped case .

These roots have zero real parts and hence the system may not be well behaved if there is resonance!

We now find the system’s impulse response.

so from the LT table we have

Relating this to A3Q1, this is the undamped case of the harmonic oscillator.
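The four cases can be summarized in a few lines of code. This sketch assumes the denominator $s^2 + 2\zeta\omega_n s + \omega_n^2$ of the standard second order system; the function names and the sample value of `omega_n` are made up for illustration.

```python
import cmath


def second_order_poles(zeta, omega_n):
    """Roots of s^2 + 2*zeta*omega_n*s + omega_n^2 via the quadratic formula."""
    root = omega_n * cmath.sqrt(zeta ** 2 - 1)
    return (-zeta * omega_n + root, -zeta * omega_n - root)


def damping_case(zeta):
    """Name of the case from the notes that a given zeta falls into."""
    if zeta > 1:
        return "overdamped"          # Case 1: two distinct real poles
    if zeta == 1:
        return "critically damped"   # Case 2: one repeated real pole
    if zeta > 0:
        return "underdamped"         # Case 3: complex pair, negative real part
    if zeta == 0:
        return "undamped"            # Case 4: purely imaginary pair
    raise ValueError("zeta must be non-negative")


for zeta in (2.0, 1.0, 0.5, 0.0):
    print(zeta, damping_case(zeta), second_order_poles(zeta, omega_n=3.0))
```

Using `cmath.sqrt` rather than `math.sqrt` lets the same formula cover the real and complex cases without branching.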

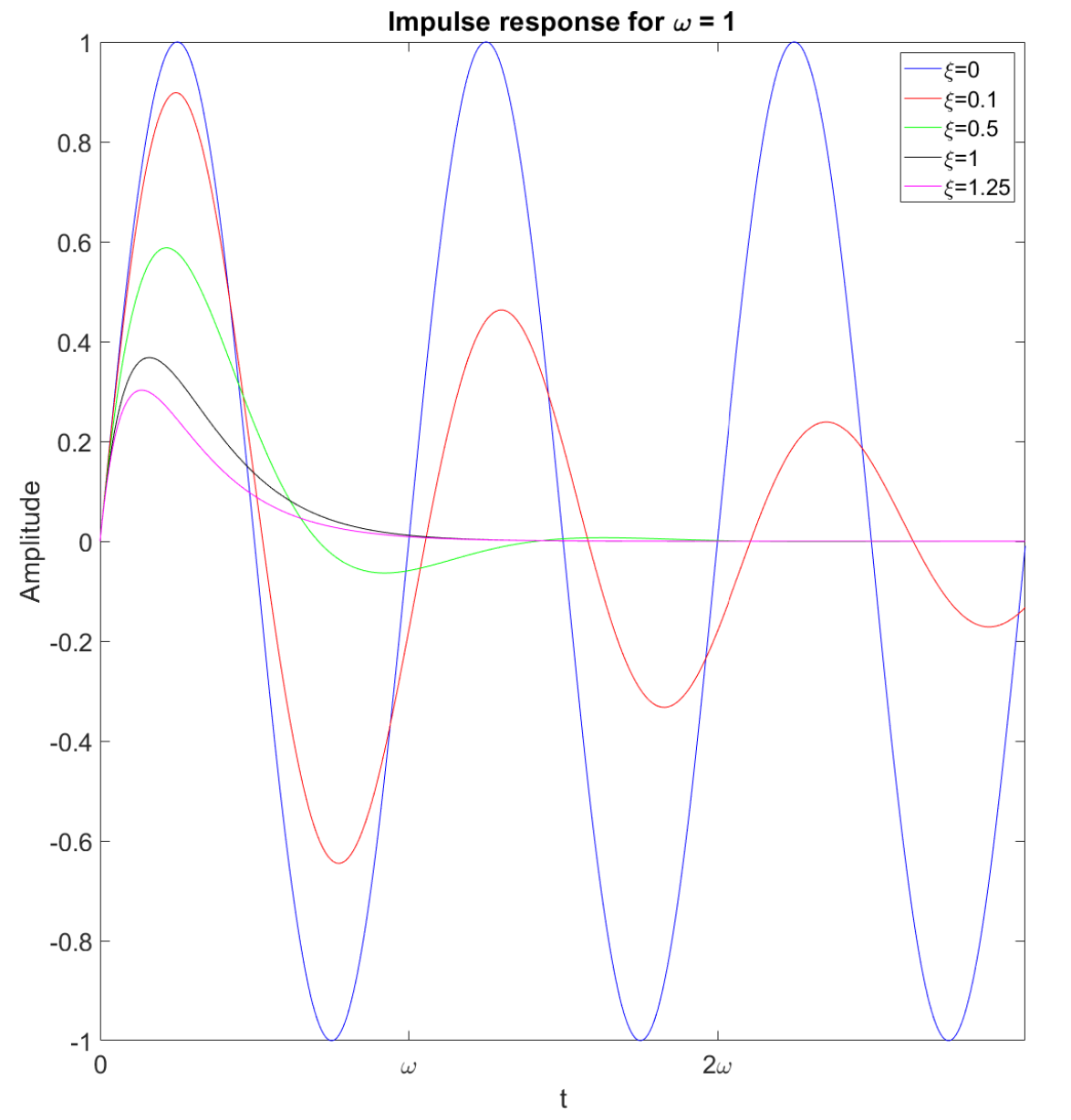

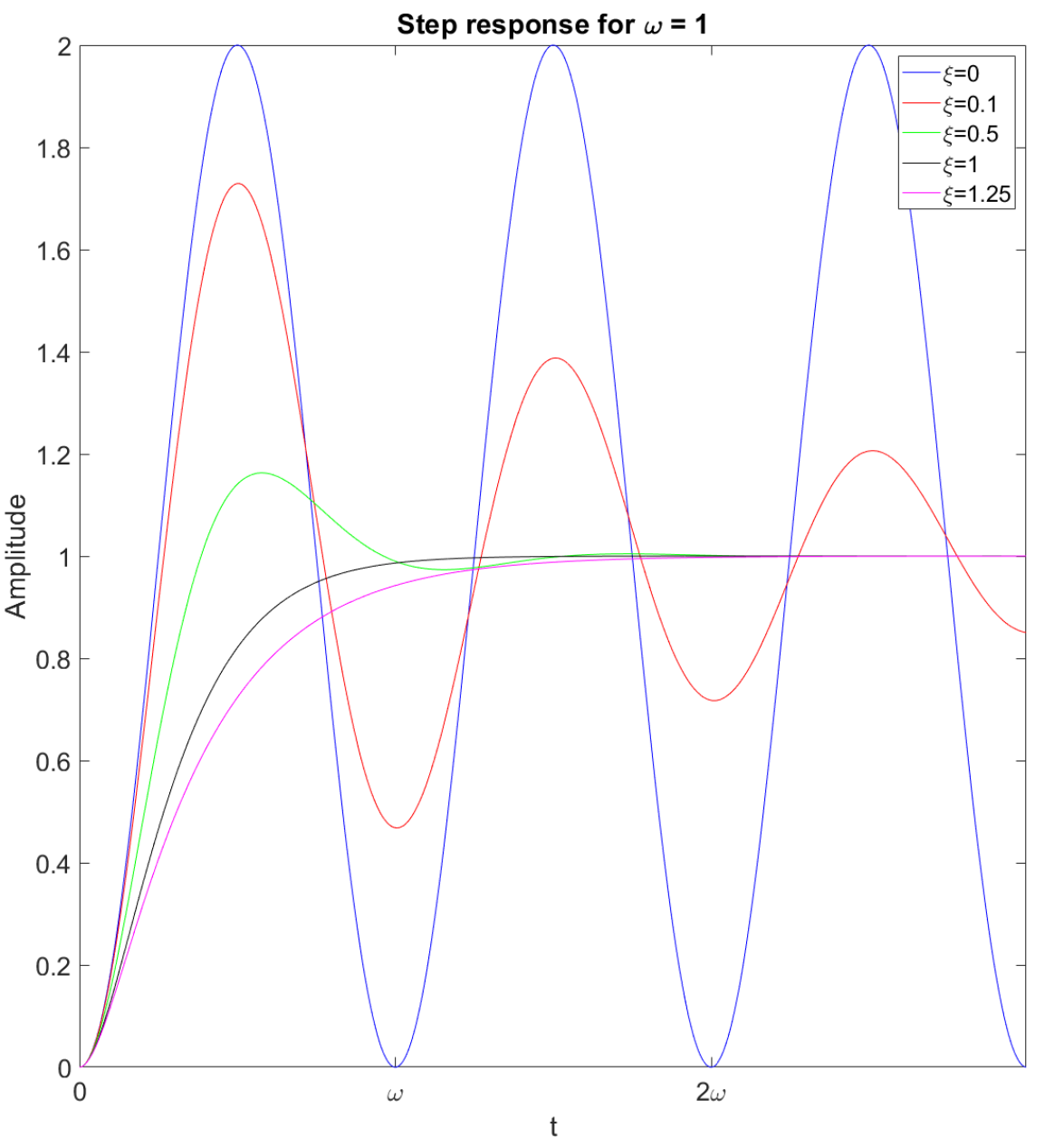

We can now plot the impulse response to all these systems. Here is a plot!!

See the L16.m file for a video!

We can also compute the step responses and plot them, we will not show the computations but the step response for other than is

where . At we have a scaled exponential. Here is a plot of some sample solutions

See the L16.m file for a video!

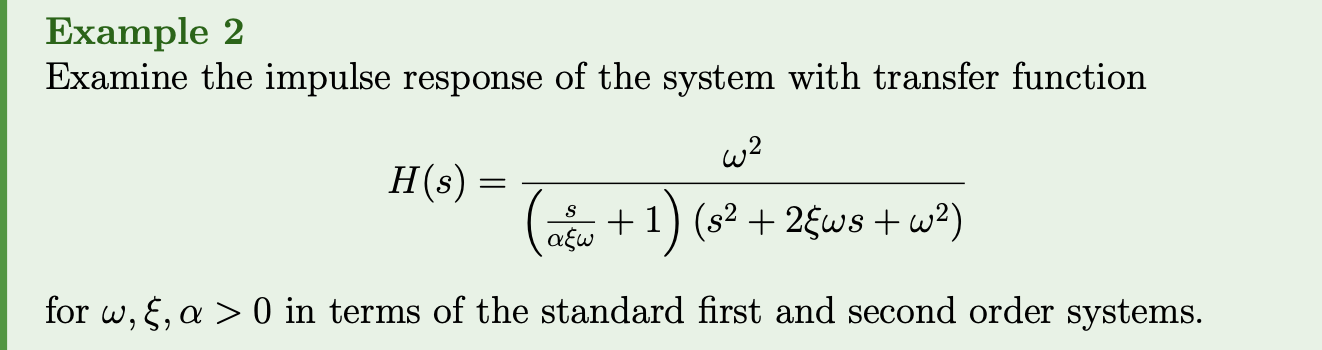

Pole location summary (poles are marked with a $\times$):

Matlab video!!! See Lecture16.m file for a nice video of the poles and the step response.

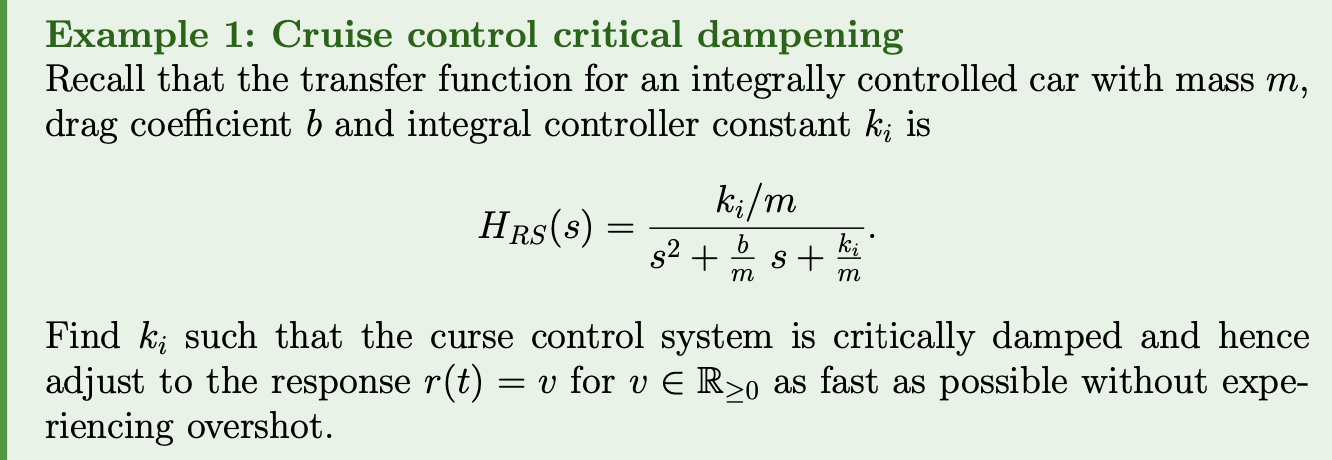

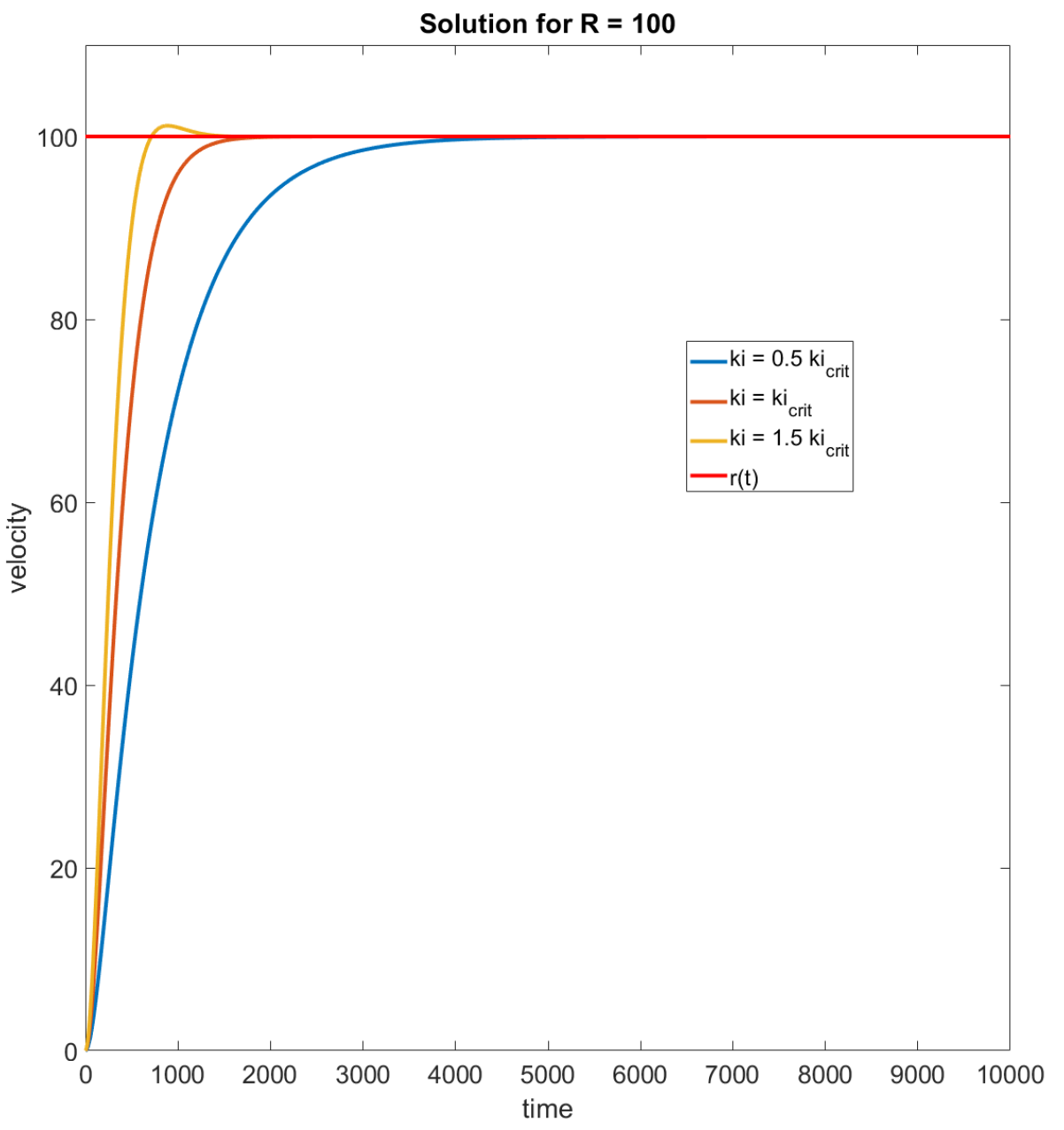

Solution: This is a second order system with and

To be critically damped, we need (Case 2) and thus needs to satisfy

Here is a plot of the behaviour of the controlled system’s response for values of around this critical value:

See the second L16.m file if you want to explore this more!

PI controller:

For the cruise control problem, the P controller by itself gets us to a close velocity quickly, but misses the exact velocity, and the I controller by itself gets us to the exact velocity, but is slow to do so.

Let’s use them together!!!

The transfer function for this system is

Next lecture we will analyze this transfer function and how its zeros and poles change the system response and then look at more complex transfer functions.

Lecture 17 - PI controllers, zeros, extra poles and stability

Lecture goals:

- Analyze a PI controller for a first order system

- Know the effects of the zeros and (“extra”) poles of a transfer function

Recall that the system diagram for a PI controlled car is

and the transfer function for this system is

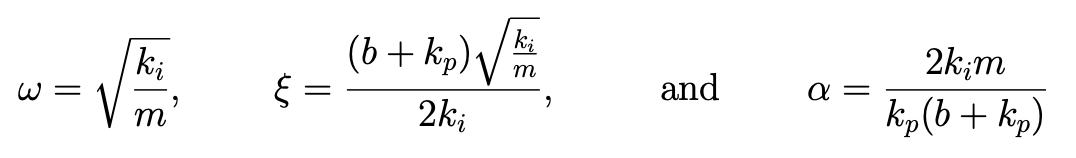

We will first simplify the functional form of to something more familiar by noting that it is of the form

where we note that this is almost of the form of the standard second order system but it has a zero.

To make the connection between and note that

Since , the poles and zeros can be plotted in the complex plane as

For the cruise control system we mostly care about the step response, so we will analyze the response to the step function.

This can be decomposed into different responses of the standard second order system

- : Std. 2nd order impulse res.

- : Std. 2nd order step response

We know what the standard second order impulse and step response look like as a function of and (L16), so we just need to explore how changes the linear combination of these responses. Since controls where the zero is, we are studying the effect of the zero on the response.

We will look at the extreme cases:

- If then (Std. 2nd order step response)

In practice, if then the effects of the impulse response term can be ignored in many practical applications.

- If then . Thus

and we see a lot of overshooting/instability.

Example

In terms of the cruise control example:

- If increases for a fixed value of then

- is unaffected.

- increases. This leads to a faster system response when and a slower system response with . It also leads to more oscillations when .

- decreases. This causes the impact of the standard second order impulse response part of the response to increase. i.e. we will see a larger “bump” in the initial velocity.

- If increases for a fixed value of then

- increases. This in isolation leads to a faster oscillating response.

- decreases. This has the opposite effect of .

- increases. This has the opposite effect of .

Generally, for this application we care about getting to the steady state velocity quickly and do not want to overshoot. Hence we want no oscillations and we want the impact of the impulse term to not cause overshooting.

Combining the effects above,

for this system we use to adjust the speed of the response and to control the dampening of the oscillations. The exact values of and needed will depend on and but generally we want to avoid the oscillations.

todo Review this again

Didn’t really understand…

See the Lecture17_PI_controller.m for some examples of the controlled velocity.

Adding a pole:

Since , the poles and zeros can be plotted in the complex plane as

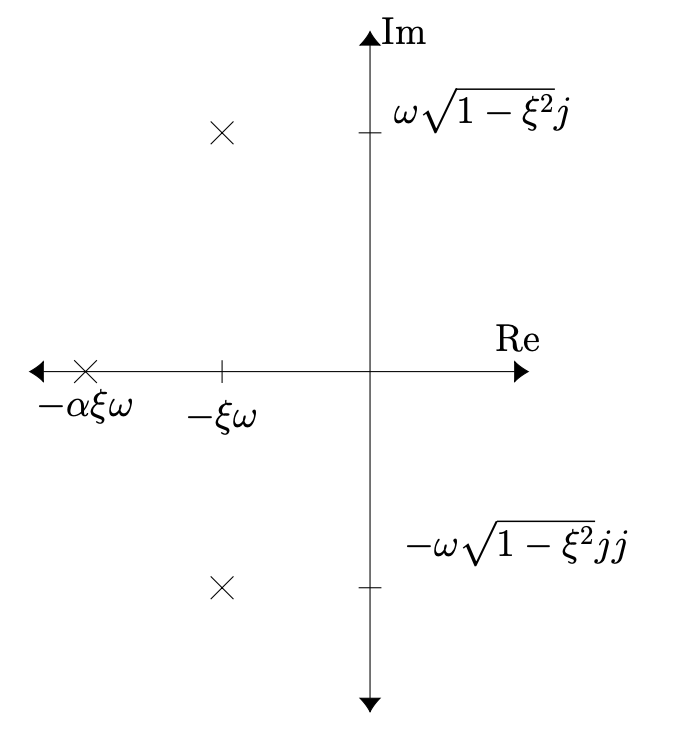

Based on the locations of the poles/functional form of we note that we can decompose as a linear combination of first and second order systems

We need to know the relative values of (and the decay rates of the system responses) to know what the impulse response looks like.

Evaluating the PF decomposition components gives (todo)

Understand and learn how to do this.

I forgot…

changes the relative weights of the impacts of the first order system and the second order system. What happens as changes?

We again look at the extreme values:

- If then and .

In this case, the impulse response looks like that of a standard second order system given that the complex poles are closer to the imaginary axis than the complex pole.

The latter happens when . In practice this happens for or so.

- If then and .

In this case, the impulse response looks like that of a standard second order system given that the complex poles are closer to the imaginary axis than the real pole.

Assuming the roots are complex valued (i.e. ), the latter happens when . In practice this happens for or so. If the roots are not complex then the second order system decomposes into two first order systems but the result still holds.

See Lecture17_extra_poles.m for pictures showing this.

Definition 1

When a real pole or a complex-conjugate pair of poles are an order of magnitude closer to the imaginary axis than all other poles then we say that they are dominant.

Dominant pole

Pick the dominant poles and ignore the others in order to determine the behaviour.

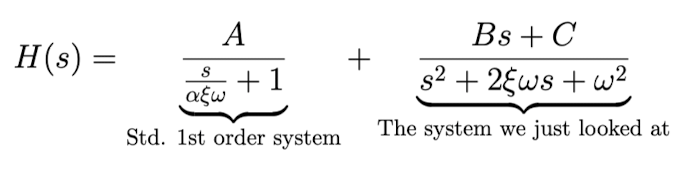

Linear Stability:

Recall the general behaviours of the impulse response caused by a pole at some point for .

The impulse response of ALL Linear Time Invariant systems is a linear combination of the types of functions shown in the above plot. ( because of PF)

Further since is a basis for the set of all functions, the response of ANY LTI to ANY function , can be written as a convolution of with a linear combination of the types of functions shown above.

Definition 2: System Stability

A LTI, $S$, is stable if its impulse response $h(t)$ decays to 0.

A LTI, $S$, is unstable if $h(t)$ is unbounded.

A LTI, $S$, is marginally stable if $h(t)$ is bounded but does not decay to 0. (sin, cos)

The type of stability mentioned above is often called linear stability.

Definition 3: Transfer function stability

A transfer function is stable if the system it is a transfer function for is stable.

A transfer function is unstable if the system it is a transfer function for is unstable.

A transfer function is marginally stable if the system it is a transfer function for is marginally stable.

Theorem 1: Stability

A transfer function is stable if all poles have a negative real part.

A transfer function is unstable if there is a pole with a positive real part OR there is a second order pole that has a real part of 0. (but if pole of first order, then it is marginally stable)

A transfer function is marginally stable if there are no poles with positive real parts and no second order poles with a real part of 0, and in addition there is at least one order 1 pole that has a real part of 0. (can have poles on the imaginary axis, but they need to be first order)

Sketch of proof:

If all poles have negative real parts then the impulse response is a sum of exponentials (potentially with oscillations) that decay to 0 and hence the impulse response decays.

If there is any pole with a positive real part, then that component of the impulse response grows. Hence the impulse response will not be bounded.

If the first condition is met, then the system is not unstable and hence the impulse response does not grow without bound. If the second condition is met then there is either a sinusoidal component to the impulse response or a constant component. In either case, these terms will keep the system response from decaying to 0. (to-understand)
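The three conditions of the stability theorem translate directly into a small classifier. This is a sketch only: the input format (a list of `(pole, order)` pairs) and the tolerance used to decide whether a real part is "zero" are choices made here for illustration.

```python
def classify_stability(poles, tol=1e-12):
    """Classify a transfer function from its poles.

    poles: list of (pole, order) pairs, where pole is a real or complex
    number and order is the multiplicity of that pole.
    """
    has_simple_imaginary_axis_pole = False
    for pole, order in poles:
        if pole.real > tol:
            return "unstable"                 # pole in the right half plane
        if abs(pole.real) <= tol:
            if order >= 2:
                return "unstable"             # repeated pole on the imaginary axis
            has_simple_imaginary_axis_pole = True
    return "marginally stable" if has_simple_imaginary_axis_pole else "stable"


print(classify_stability([(-1.0, 1), (-2 + 3j, 1), (-2 - 3j, 1)]))
print(classify_stability([(2j, 1), (-2j, 1)]))
print(classify_stability([(0.0, 2)]))
print(classify_stability([(1.0, 1), (-5.0, 1)]))
```

The four calls cover, in order, a stable system, an undamped oscillator, a double pole at the origin, and a right half plane pole.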

Definition 4: Bounded-input, bounded-output (BIBO) stable

A LTI, $S$, is bounded-input, bounded-output (BIBO) stable if $S\{f(t)\}$ is bounded for all bounded functions $f(t)$.

Theorem 2

A LTI system with a rational transfer function is BIBO stable if and only if its transfer function is both stable and proper.

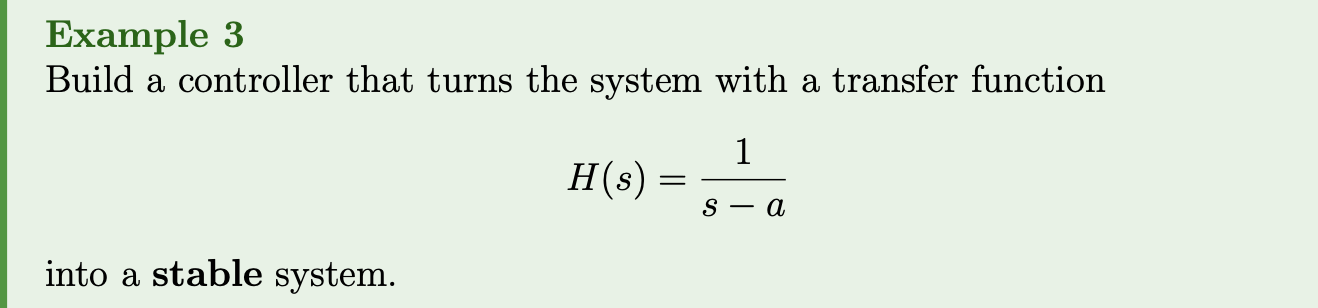

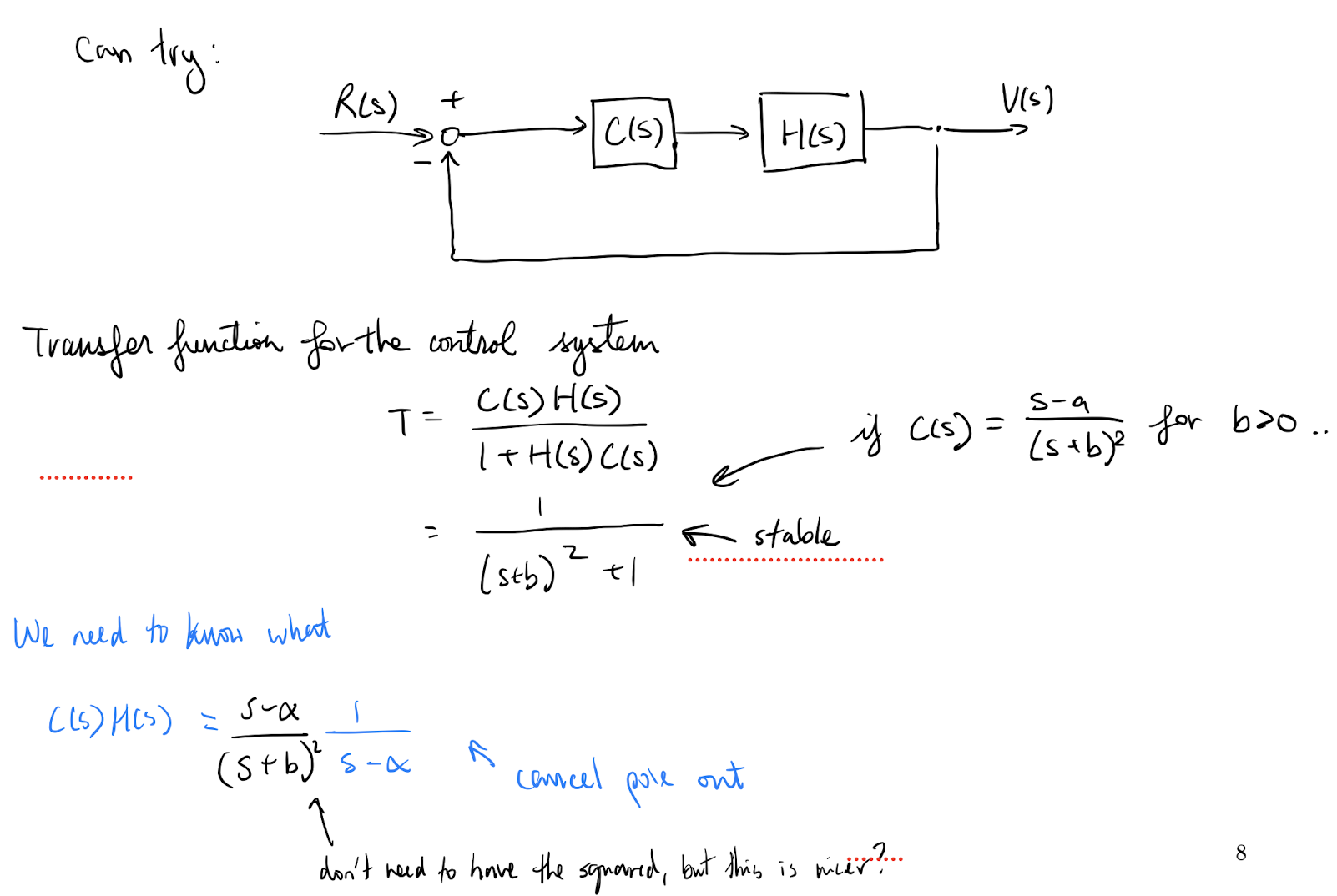

Example 3

Assume .

Understand this problem.

And wtf is T (transfer function) that we got? Where did we get it from?

Why is for ???

Why is it stable? Look at the definitions.

NASA tried this method for a Jupiter rocket (1957) and Atlas 4a rocket (1957) and others… don’t do this!

I repeat NEVER cancel unstable poles in the above way. Go to the tutorial if you want to see how to do this properly.

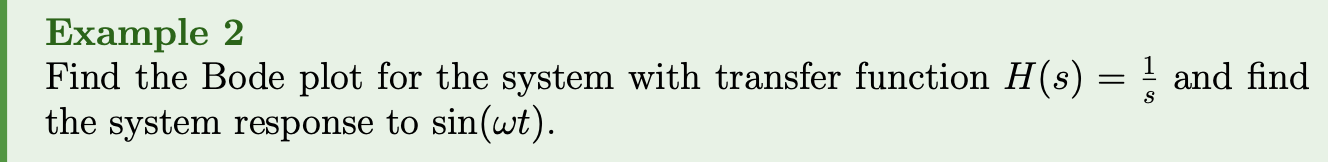

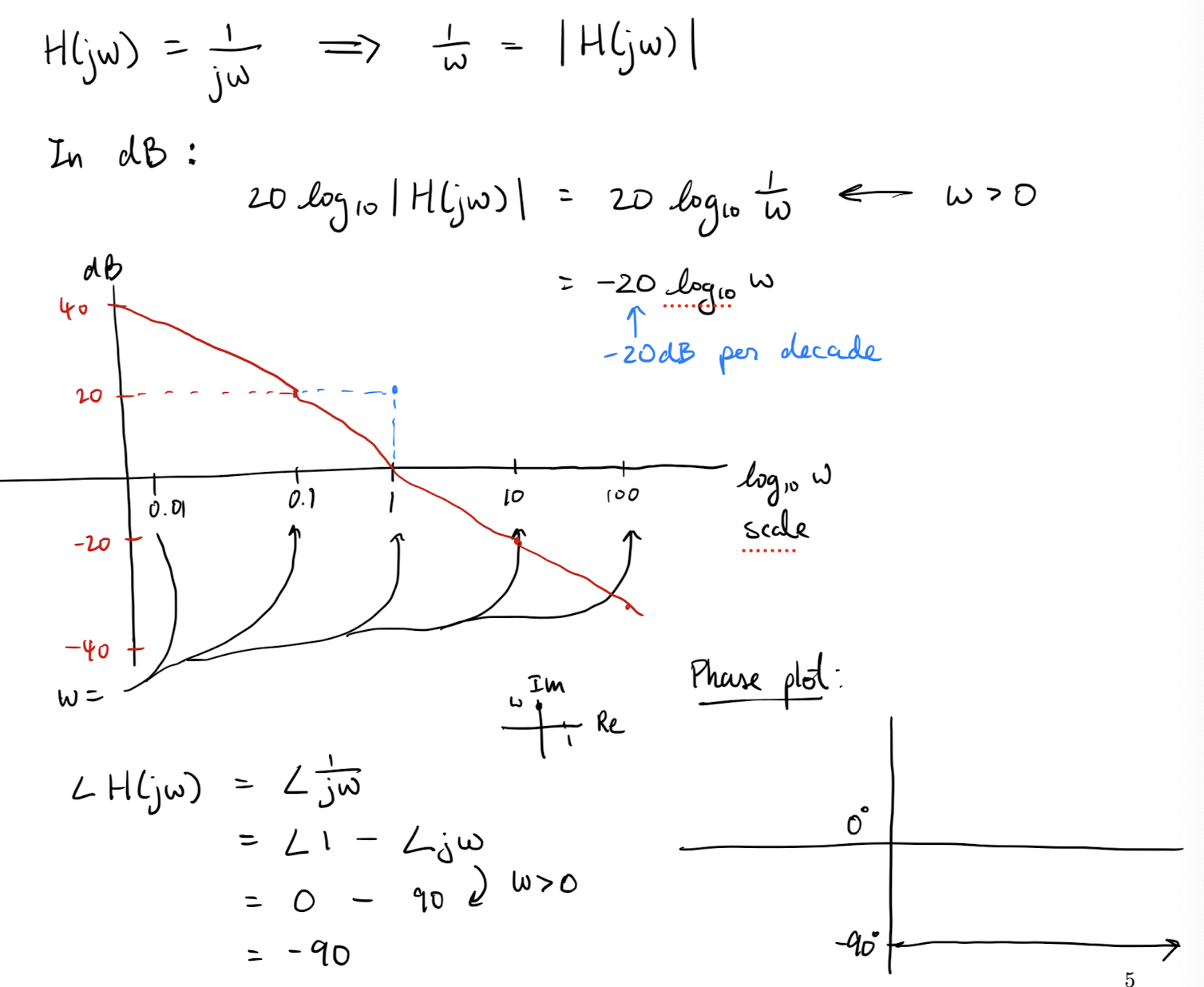

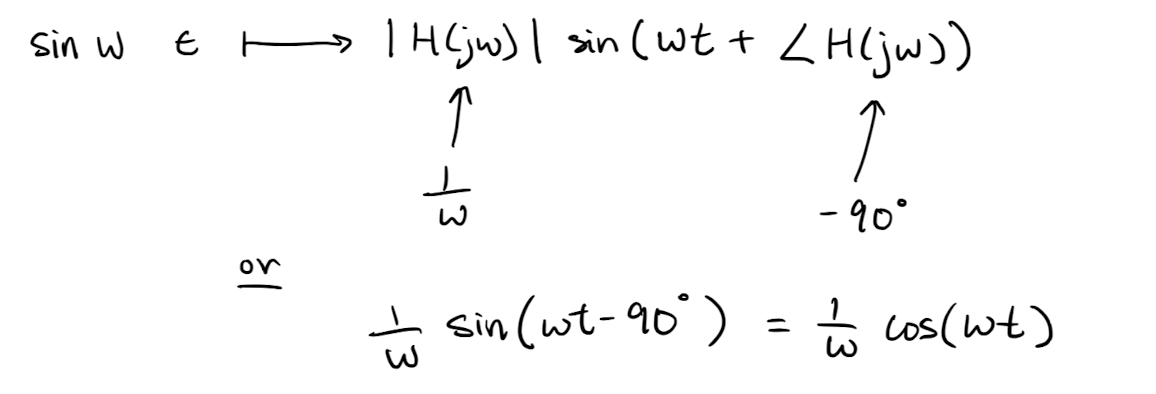

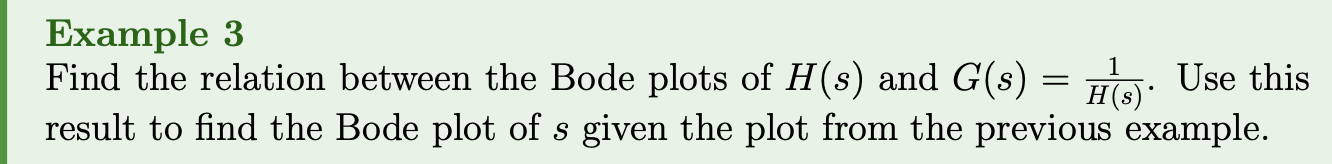

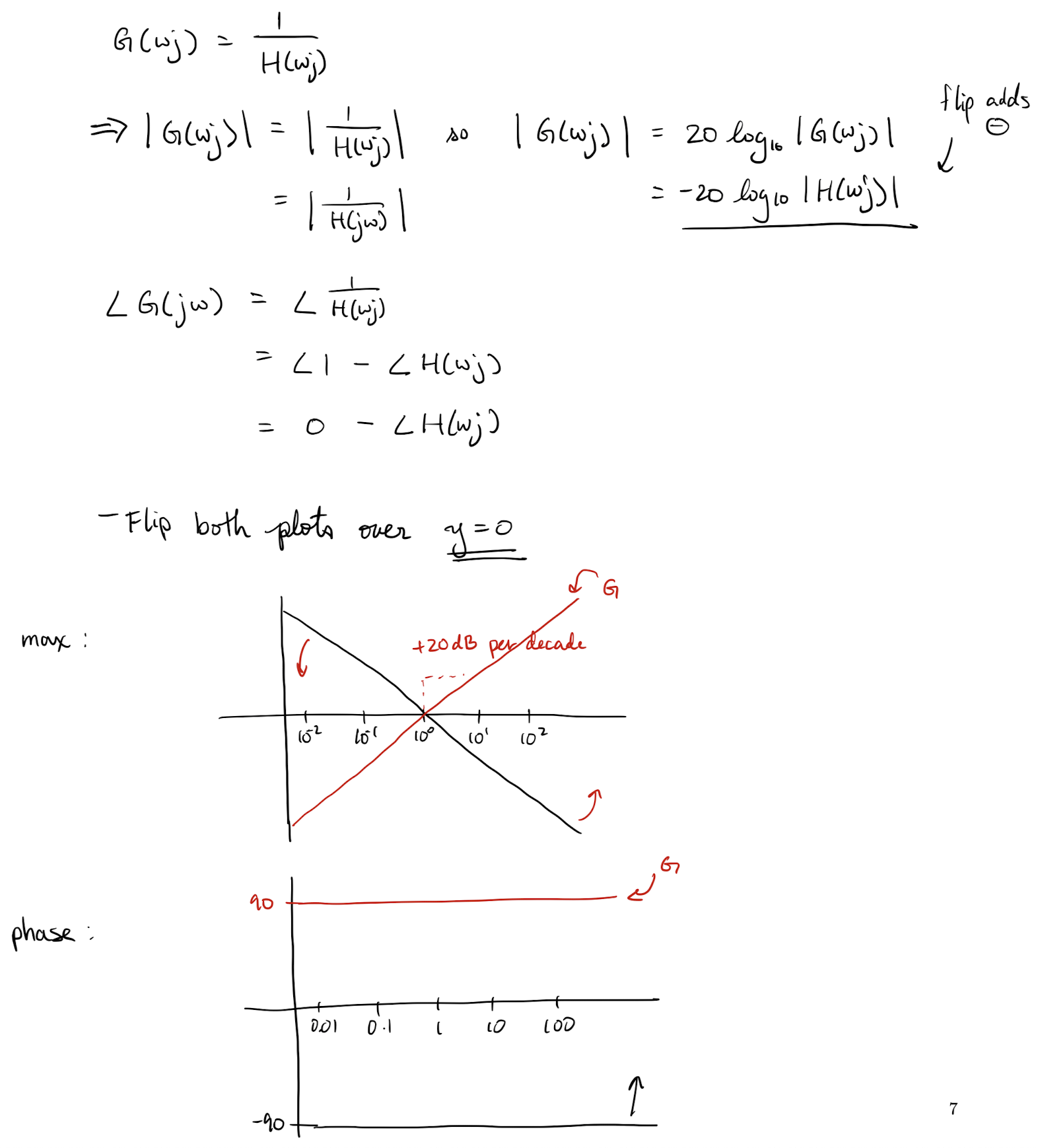

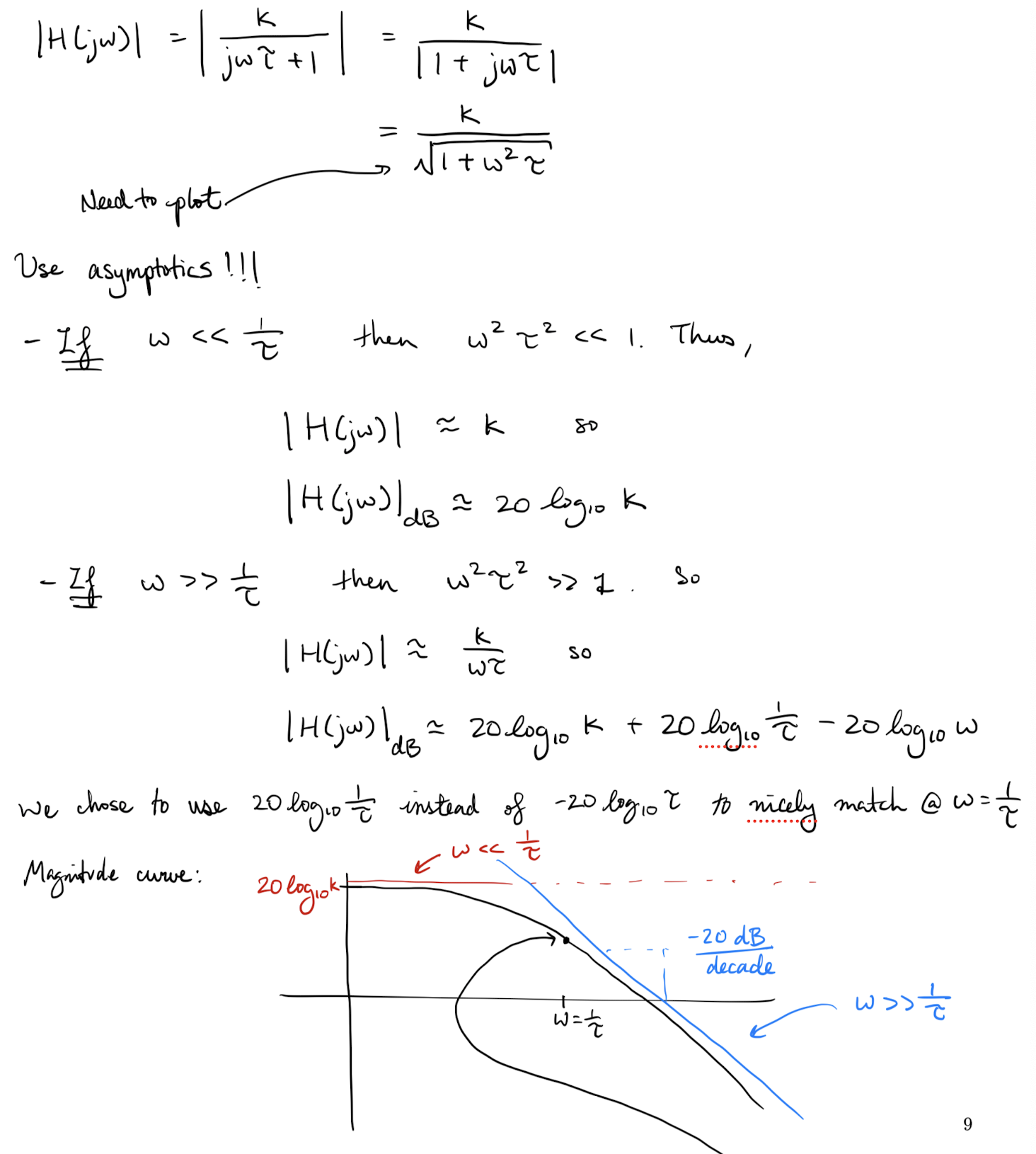

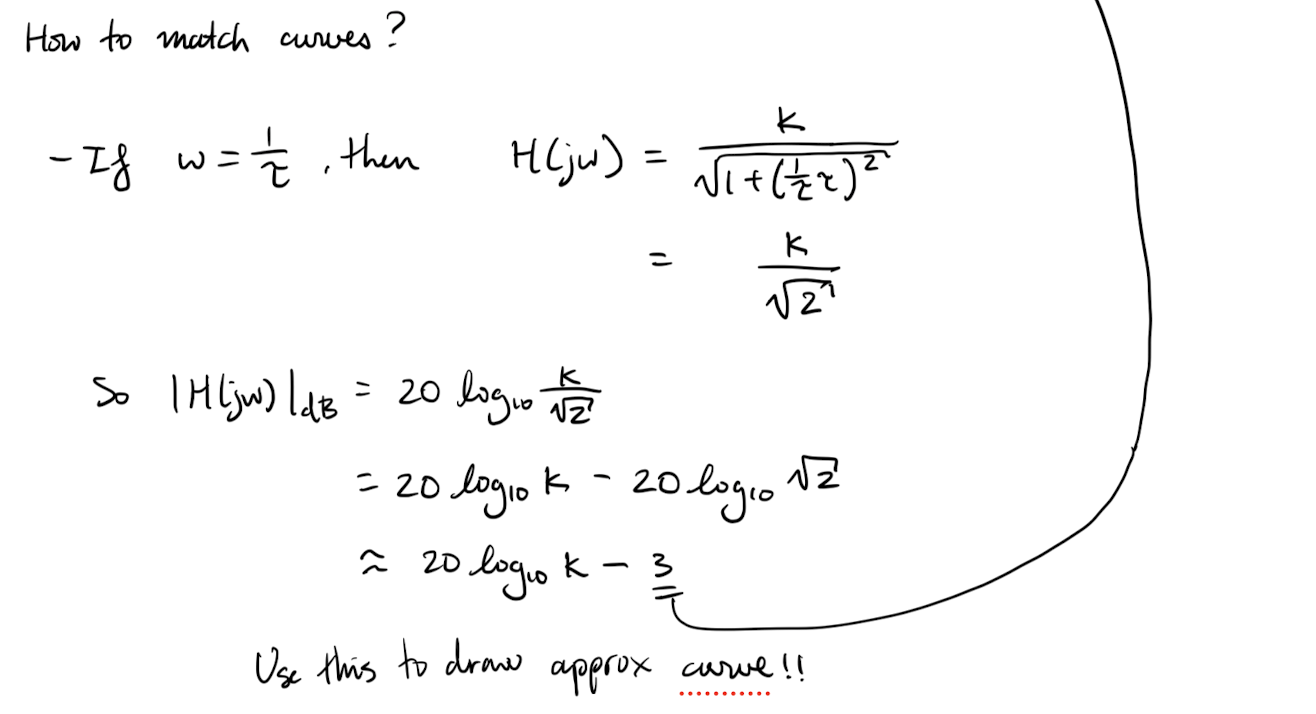

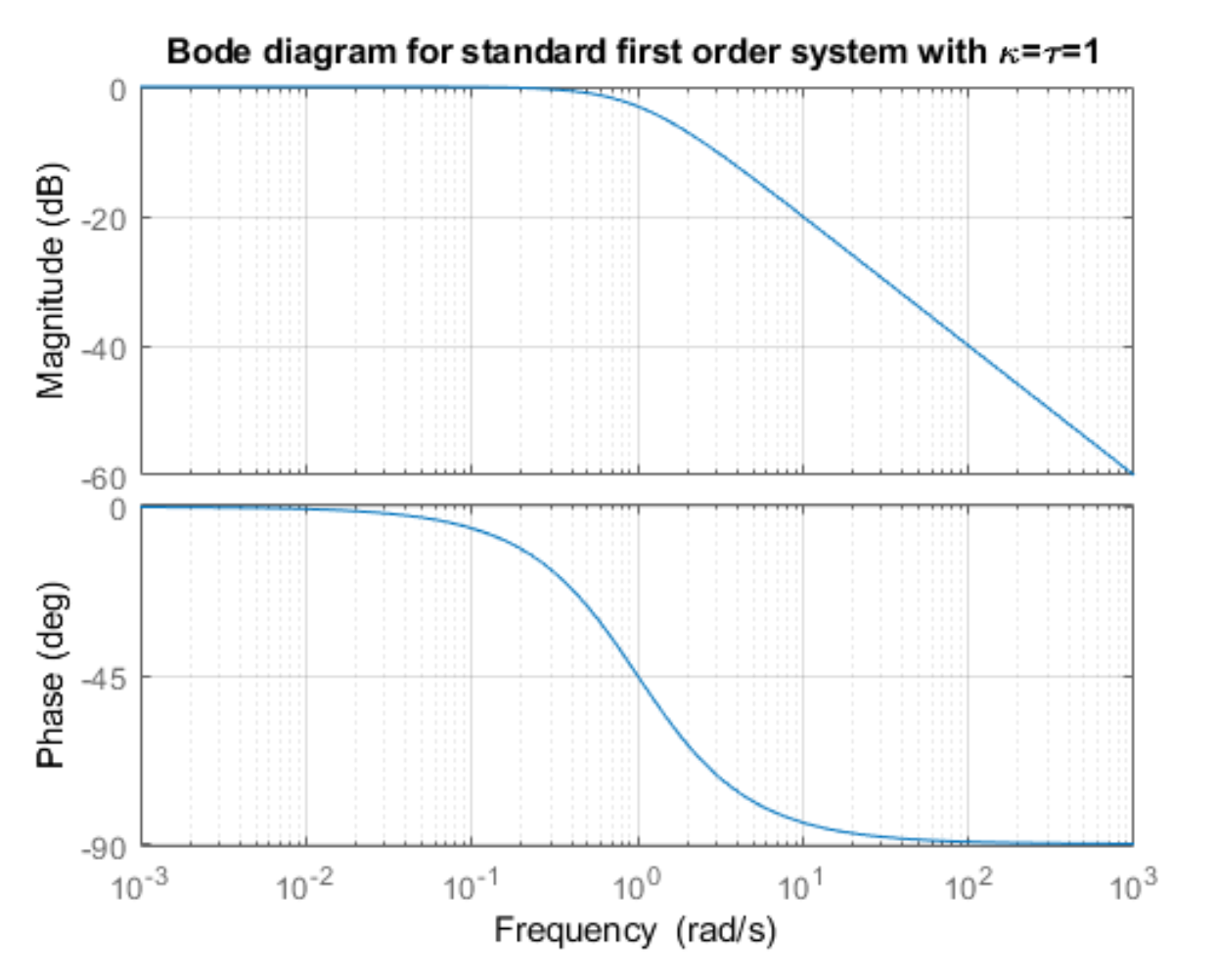

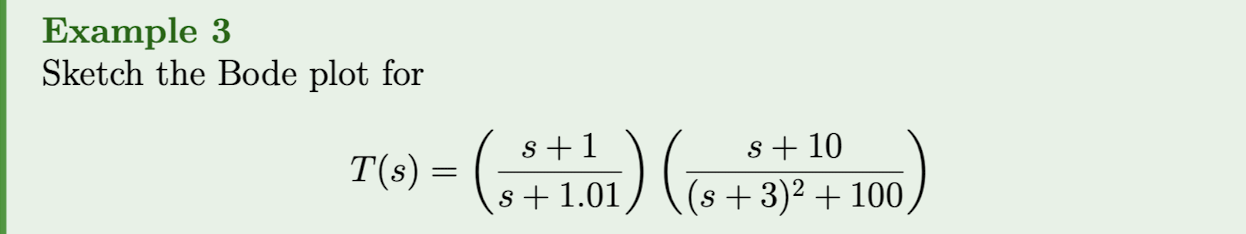

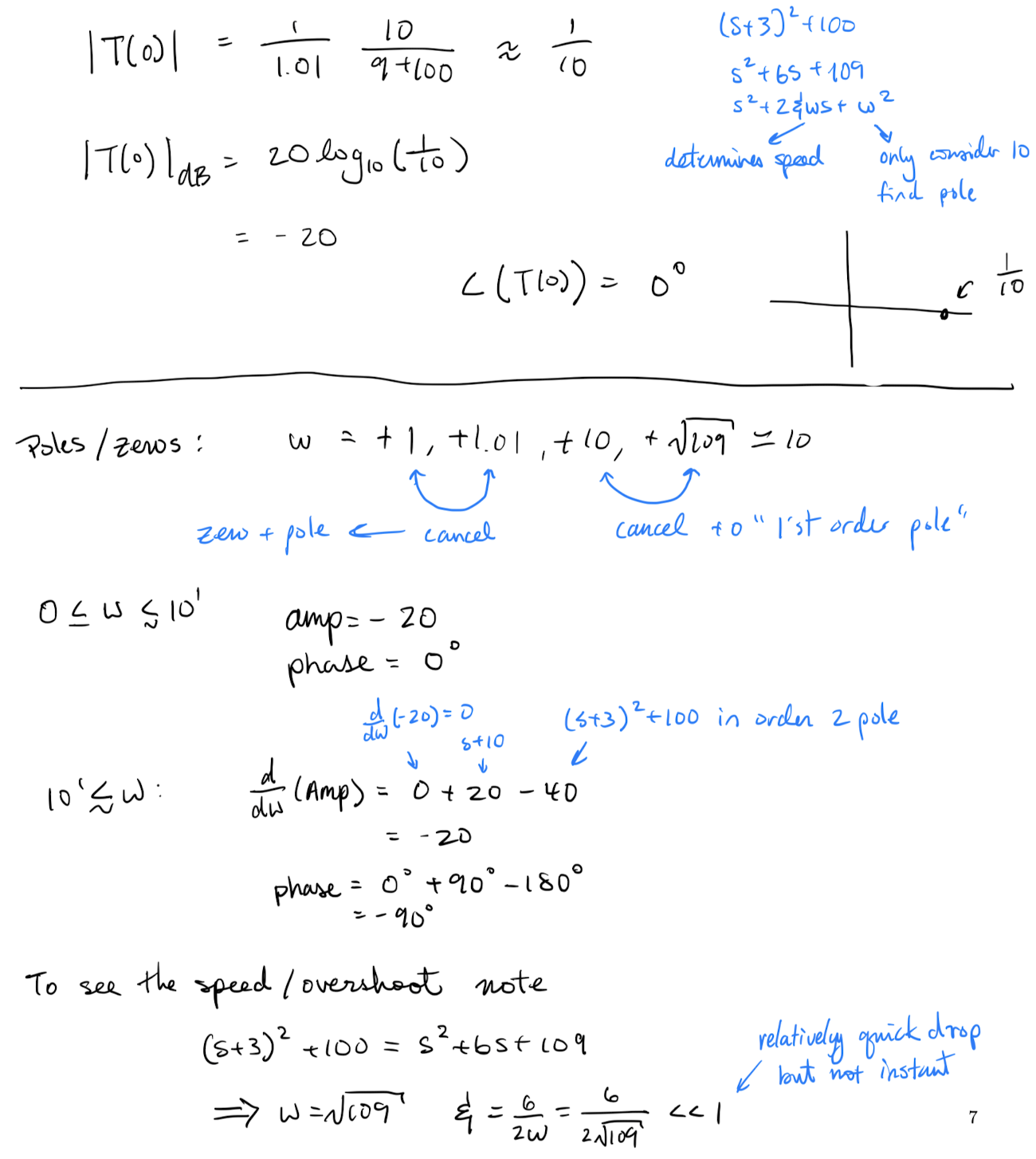

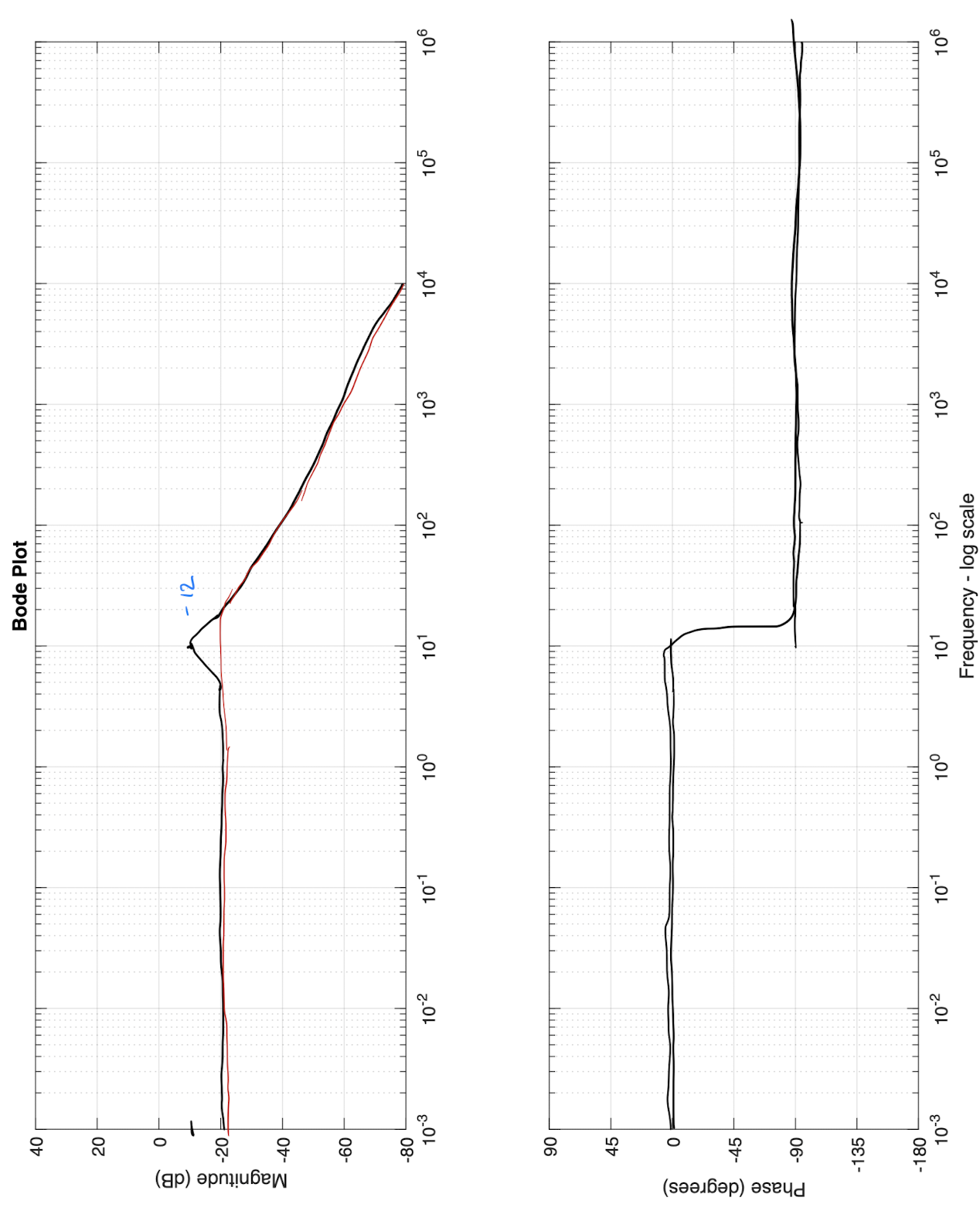

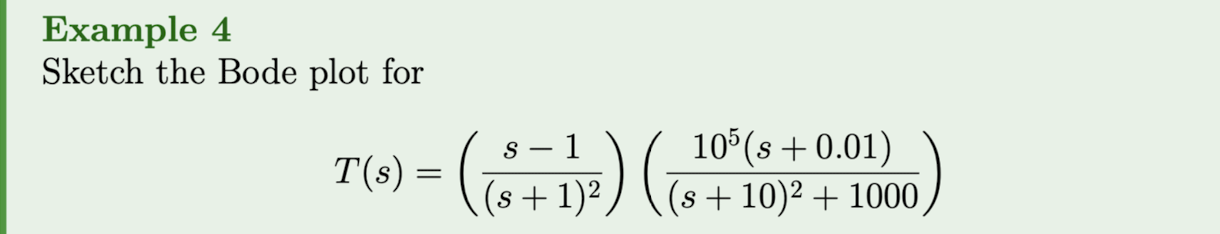

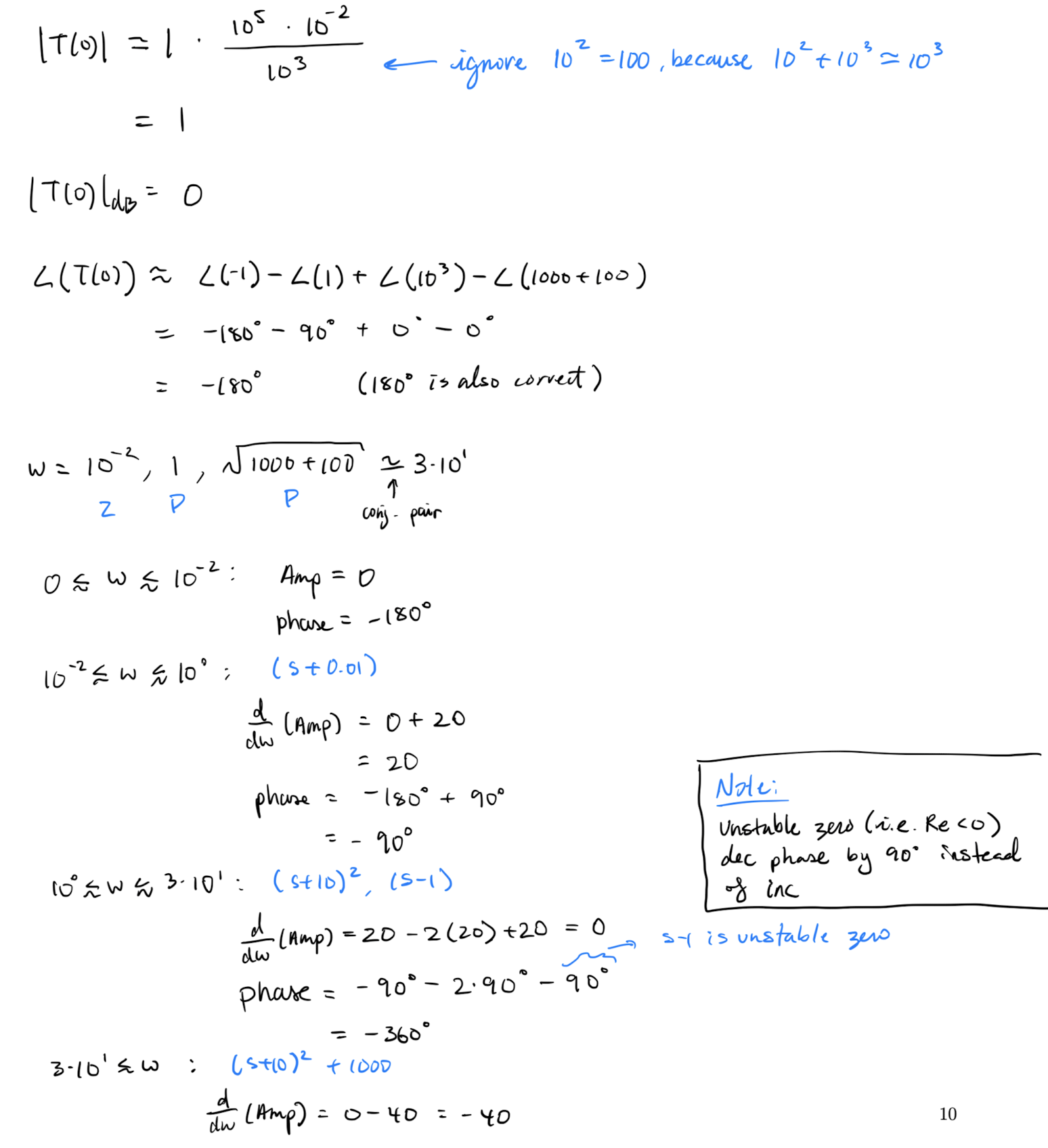

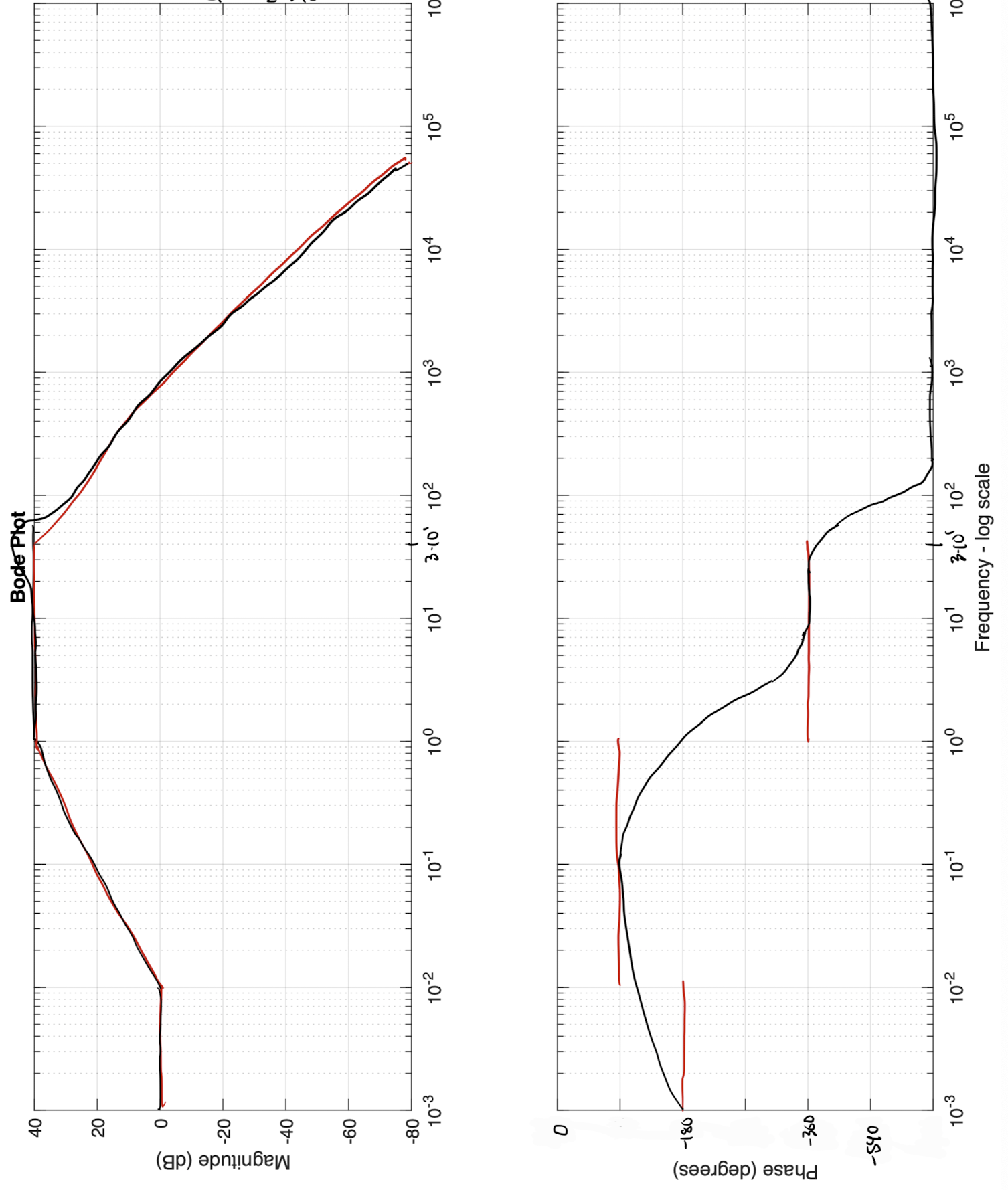

Lecture 18 - Bode plots

Lecture goals:

- Understand what the frequency response is and

- be able to generate and interpret Bode plots.

In lectures 13-17 we learned how to:

- compute the response of a LTI given an input function by either evaluating $(h * f)(t)$ or computing $\mathcal{L}^{-1}\{H(s)F(s)\}$,

- analyze the general structure of the unit impulse and step responses by looking at the poles (and zeros) of the transfer function

- apply simple control systems (P, I, and PI) to control a system to have the behaviour we want it to have (i.e. cruise control problem).

- that the transfer function is the Laplace transform of the system’s impulse response

- that complex exponentials are the eigenfunctions of LTIs

These methods work well for many simple/academic problems but they require us either to find all of the poles (or potentially the dominant ones) or to evaluate a convolution integral.

Sometimes it is not possible to find all the poles/roots of the transfer function!

Sometimes we want to work in the time domain rather than the frequency domain.

In these cases, we need to use a different approach that has its own pros and cons.

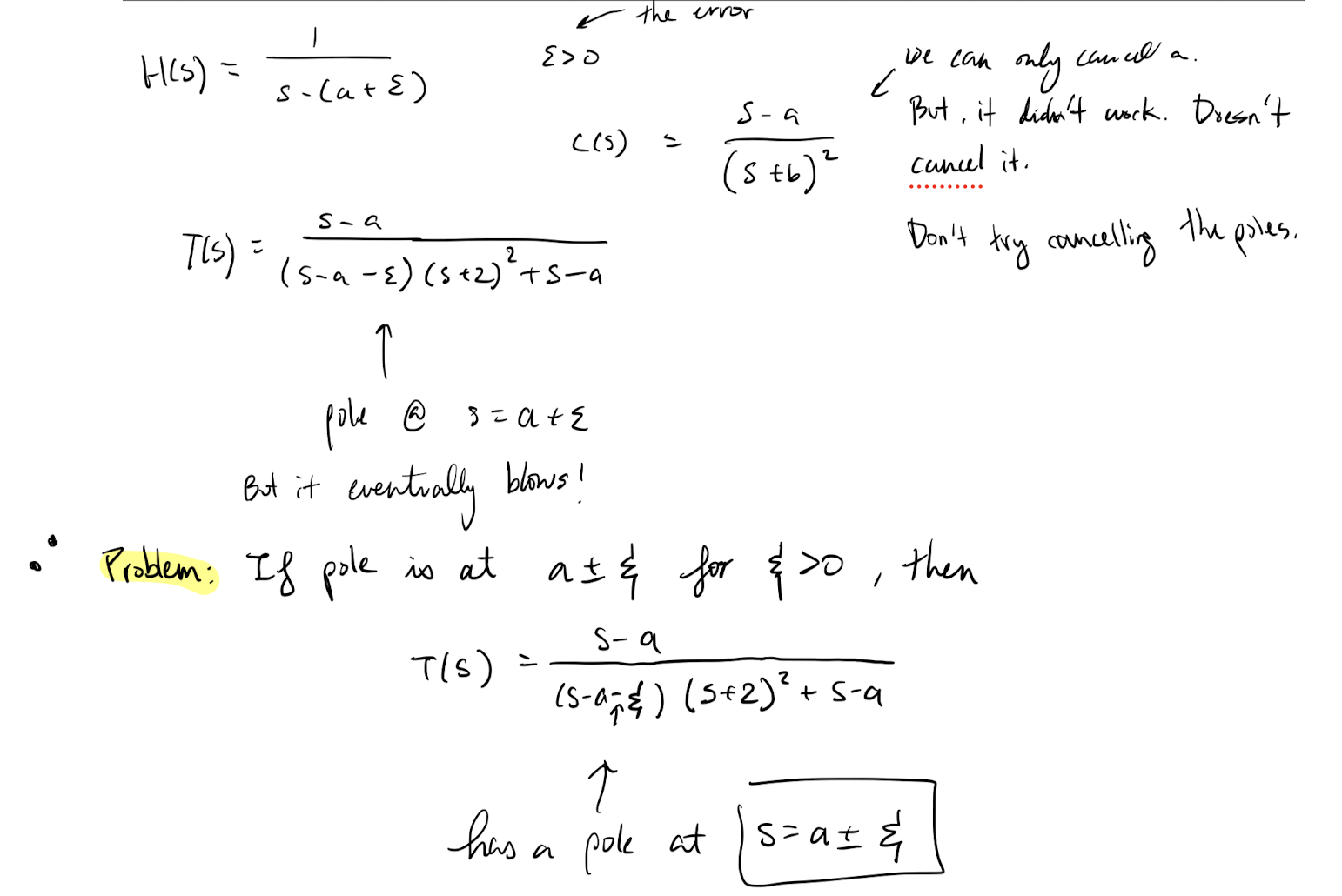

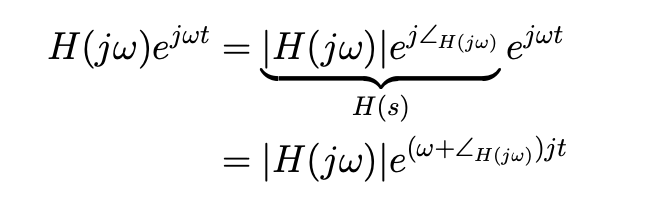

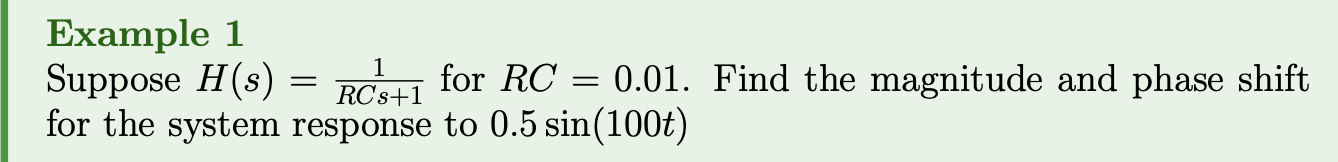

Deriving the frequency response:

Recall Theorem 4 from lecture 13:

Theorem 1: LTI response to an exponential

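If $S$ is a LTI with transfer function $H(s)$ then for any $s \in \mathbb{C}$,

$$S\{e^{st}\} = H(s)e^{st}.$$

Now, if $s = j\omega$ then the above gives $S\{e^{j\omega t}\} = H(j\omega)e^{j\omega t}$.

$H(j\omega)$ is a complex number so we can “recall” from MATH115 that we can write it in polar form:

$$H(j\omega) = |H(j\omega)|\,e^{j\angle H(j\omega)}$$

“Recall” from MATH115 that this decomposition can be viewed geometrically: $|H(j\omega)|$ is the distance from the origin and $\angle H(j\omega)$ is the angle measured from the positive real axis.

Now we can write the system’s response to $e^{j\omega t}$ as

$$S\{e^{j\omega t}\} = |H(j\omega)|\,e^{j(\omega t + \angle H(j\omega))}$$

Because of the above, $H(j\omega)$ is called the frequency response.

Explicitly, $H(j\omega)$ is the factor we need to scale the input signal $e^{j\omega t}$ by in order to find the system’s response to it.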

Theorem 2

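If $S$ is a LTI with transfer function $H(s)$, then

$$S\{\sin(\omega t)\} = |H(j\omega)|\sin(\omega t + \angle H(j\omega))$$

Sketch of proof: Recall that

$$\sin(\omega t) = \frac{e^{j\omega t} - e^{-j\omega t}}{2j}$$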

Use the above identity along with the previous results and then do some algebra.

Observations: The above says that the system response of an LTI to a sine wave of frequency $\omega$:

- has an amplitude scaled by $|H(j\omega)|$

- has the same frequency

- has a phase shifted by $\angle H(j\omega)$
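These three observations are easy to evaluate for a concrete system. The sketch below uses a hypothetical stable first order system $H(s) = \frac{1}{s + 1}$ (not one from the lecture) and Python's `cmath.polar` to split $H(j\omega)$ into magnitude and angle.

```python
import cmath
import math


def H(s):
    """Hypothetical stable first order transfer function H(s) = 1 / (s + 1)."""
    return 1.0 / (s + 1.0)


def frequency_response(omega):
    """Return (amplitude scale, phase shift in radians) at frequency omega."""
    return cmath.polar(H(1j * omega))


def steady_state_sine_response(omega, t):
    """Response to sin(omega*t): same frequency, scaled and phase shifted."""
    gain, phase = frequency_response(omega)
    return gain * math.sin(omega * t + phase)


gain, phase = frequency_response(1.0)
print(gain)                   # amplitude scale factor at omega = 1
print(math.degrees(phase))    # phase shift in degrees at omega = 1
print(steady_state_sine_response(1.0, t=2.0))
```

For this system at $\omega = 1$ the sine wave is scaled by $\frac{1}{\sqrt{2}}$ and delayed by 45 degrees.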

In the real world signals have a clear starting time (i.e. real world signals are one sided) so the above can’t be used for many applications. Lucky for us:

Theorem 3

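If $S$ is a stable LTI with transfer function $H(s)$, then as $t \to \infty$

$$S\{u(t)\sin(\omega t)\} \to |H(j\omega)|\sin(\omega t + \angle H(j\omega)),$$

where $u(t)$ is the unit step.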

We will skip this proof.

Looking ahead: Fourier series/transforms will allow us to decompose functions as a sum/integral of sin and cos waves.

Hence if we know what the LTI does to all complex exponentials, then we can decompose a signal into a sum/integral of complex exponentials, scale and phase shift them, and then add the results together to see the response to the original signal.

We want a nice way to display the scaling factors and phase shifts so we introduce Bode plots.

Bode plots:

- Bode plots are a graphical representation of the frequency response.

- We need two plots: one for $|H(j\omega)|$ in “decibels” (dB) vs $\omega$ (on a log scale) and one for $\angle H(j\omega)$ vs $\omega$ (on a log scale)

- The aforementioned plots are called the “magnitude” and “phase” curves respectively.

Using these conventions has two benefits:

- it allows curves to be approximated by piecewise lines (we will show this via examples).

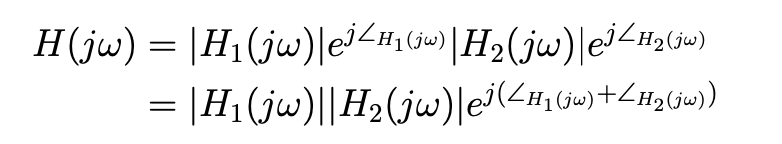

- allows plots for complex transfer functions to be built by adding plots from simpler transfer functions.

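To see the basic idea behind this approach suppose $H(s) = H_1(s)H_2(s)$ and that we have the Bode plots for $H_1$ and $H_2$.

In this case:

$$H(j\omega) = |H_1(j\omega)|\,|H_2(j\omega)|\,e^{j(\angle H_1(j\omega) + \angle H_2(j\omega))}$$

The angles are additive so we can simply add the phase curves of $H_1$ and $H_2$ to get the phase curve for $H$.

The magnitudes are not additive but… “recall” that $\log(ab) = \log(a) + \log(b)$ so if we use decibels for the magnitude, then the magnitude curves become additive.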

Definition 1: Decibels

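$|H(j\omega)|$ in decibels is $20\log_{10}|H(j\omega)|$.

Using decibels for the magnitude: if $H(s) = H_1(s)H_2(s)$ then we have

$$20\log_{10}|H(j\omega)| = 20\log_{10}|H_1(j\omega)| + 20\log_{10}|H_2(j\omega)|$$

So if we use decibels, then we can just add the magnitude curves to find the magnitude curve of $H$.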
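The additivity of the magnitude (in dB) and phase curves can be confirmed numerically at a single frequency. The two factors `H1` and `H2` below are hypothetical first order systems picked for illustration.

```python
import cmath
import math


def to_db(magnitude):
    """Convert a magnitude to decibels: 20*log10(magnitude)."""
    return 20.0 * math.log10(magnitude)


def H1(s):
    """Hypothetical factor with a pole at s = -10."""
    return 10.0 / (s + 10.0)


def H2(s):
    """Hypothetical factor with a pole at s = -1."""
    return 1.0 / (s + 1.0)


omega = 5.0
s = 1j * omega

product_db = to_db(abs(H1(s) * H2(s)))
sum_db = to_db(abs(H1(s))) + to_db(abs(H2(s)))

# Phases add too (in general only up to a multiple of 2*pi).
product_phase = cmath.phase(H1(s) * H2(s))
sum_phase = cmath.phase(H1(s)) + cmath.phase(H2(s))

print(product_db, sum_db)
print(product_phase, sum_phase)
```

Repeating this over a range of `omega` values is exactly what adding two Bode plots does graphically.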

Bode plot examples:

Some questions I have (should've attended class):

- How is ??

From ChatGPT

For understanding why in the context of Bode plots, we need to consider the properties of complex numbers and their representation in polar form.

Given that , where represents the magnitude of and represents its phase angle, we can write as:

Now, let’s examine the phase angle of . According to Euler’s formula, represents a rotation in the complex plane by an angle of in the clockwise direction. Therefore, if has a phase angle of , will have a phase angle of .

In other words, .

This property is important in Bode plots because when plotting the phase response, the phase of is essentially the negative of the phase of , which affects the overall behaviour of the system at different frequencies.

Note that the Bode plot for a system with no poles and a zero is simply this plot flipped over .

Things I don't understand about this example's solution

- Why use ?

- For if , , why is the way it is? In denominator…

- How did it simplify to ?

- How did we get ?

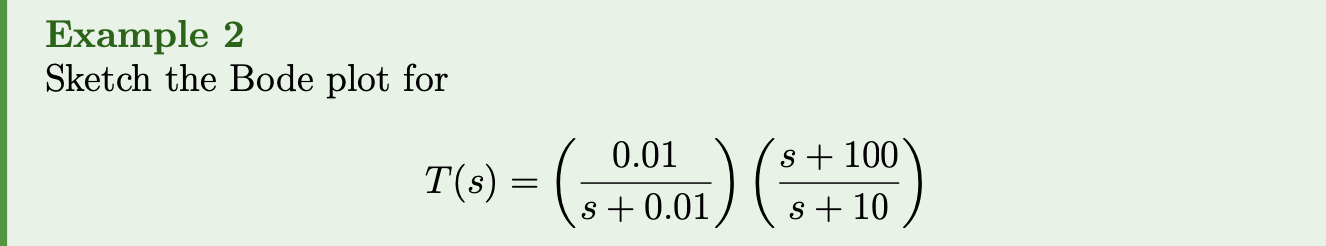

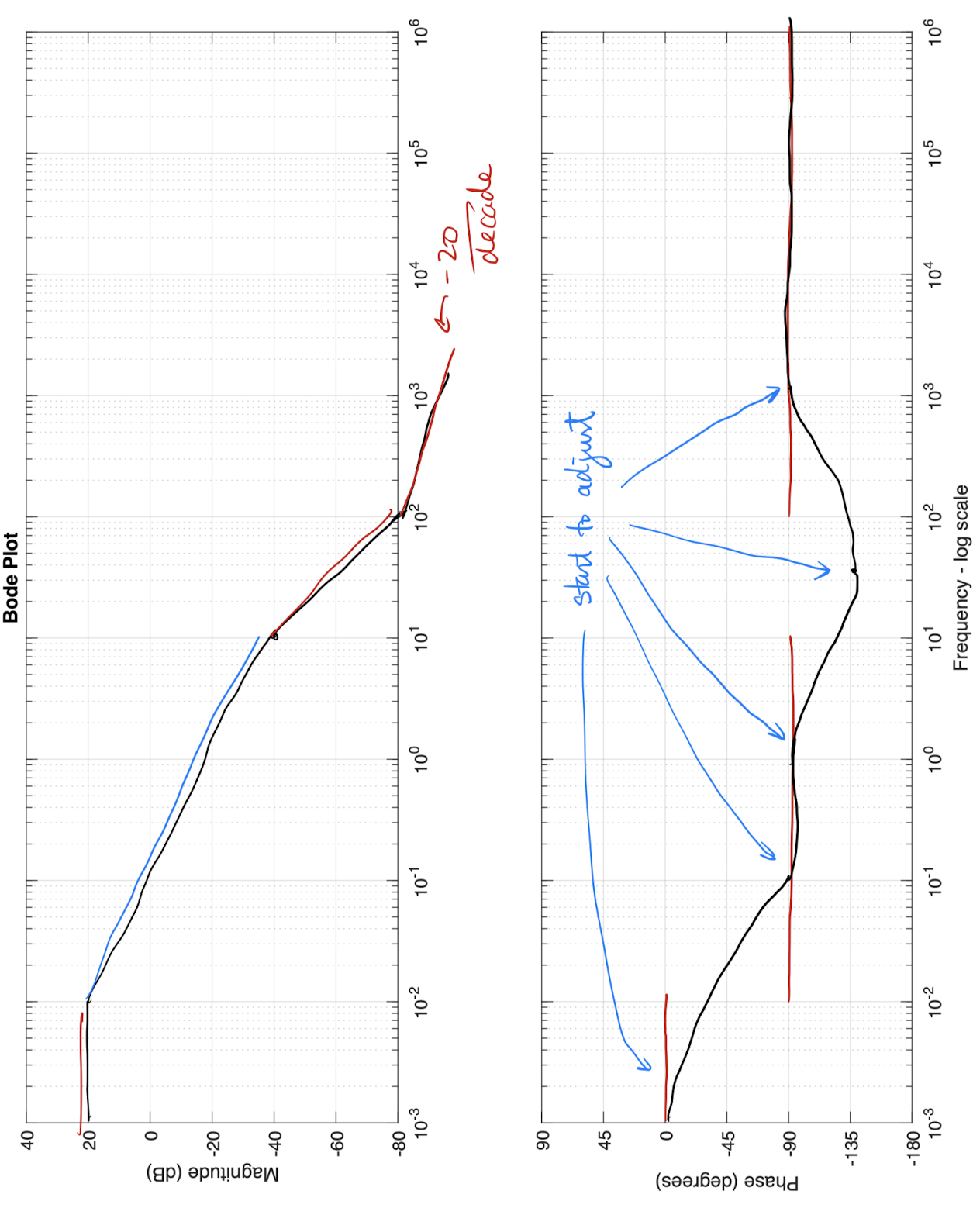

If given a transfer function $H(s) = H_1(s)H_2(s)\cdots H_n(s)$ then the Bode plot for $H$ is found by

- Finding the magnitude and phase curves for each $H_k$.

- Adding the magnitude and phase curves

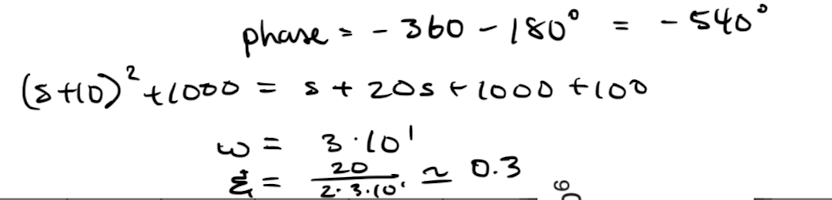

Lecture 19 - Bode plots 2

Lecture goals:

- Refine our ability to quickly draw Bode plots,

- approximate the transfer function from Bode plots,

- and determine stability of the system/closed loops using Bode plots.

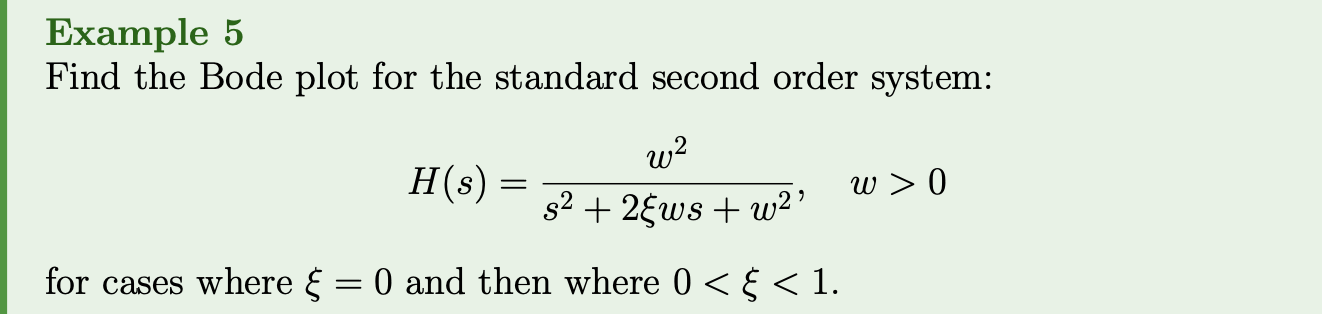

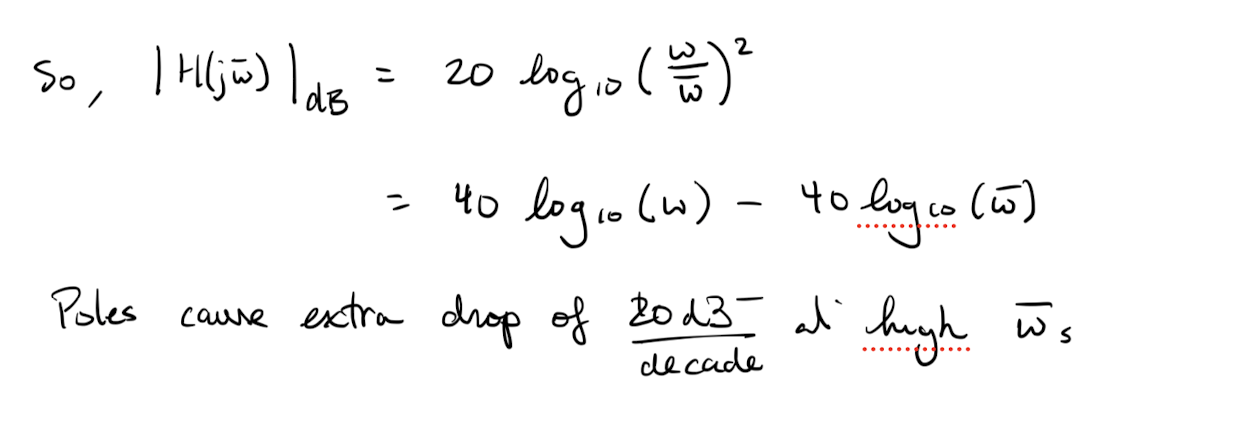

Summary of main results from Lecture 18:

-

The “starting value” of the magnitude curve is $20\log_{10}|H(0)|$ and the “starting value” of the phase curve is $\angle H(0)$.

This will not exist (i.e. the curve is unbounded) if there is a pole with a real value of 0.

-

If the transfer function has an order one stable pole at a point $s = -a$ then the magnitude curve will experience an extra decrease of 20 dB per decade (starting near $\omega = a$) and the phase curve will experience a drop of $90^\circ$ (see L18 Ex 4).

This phase adjustment will be rather “slow”, taking an order of magnitude (before and after the pole) to adjust.

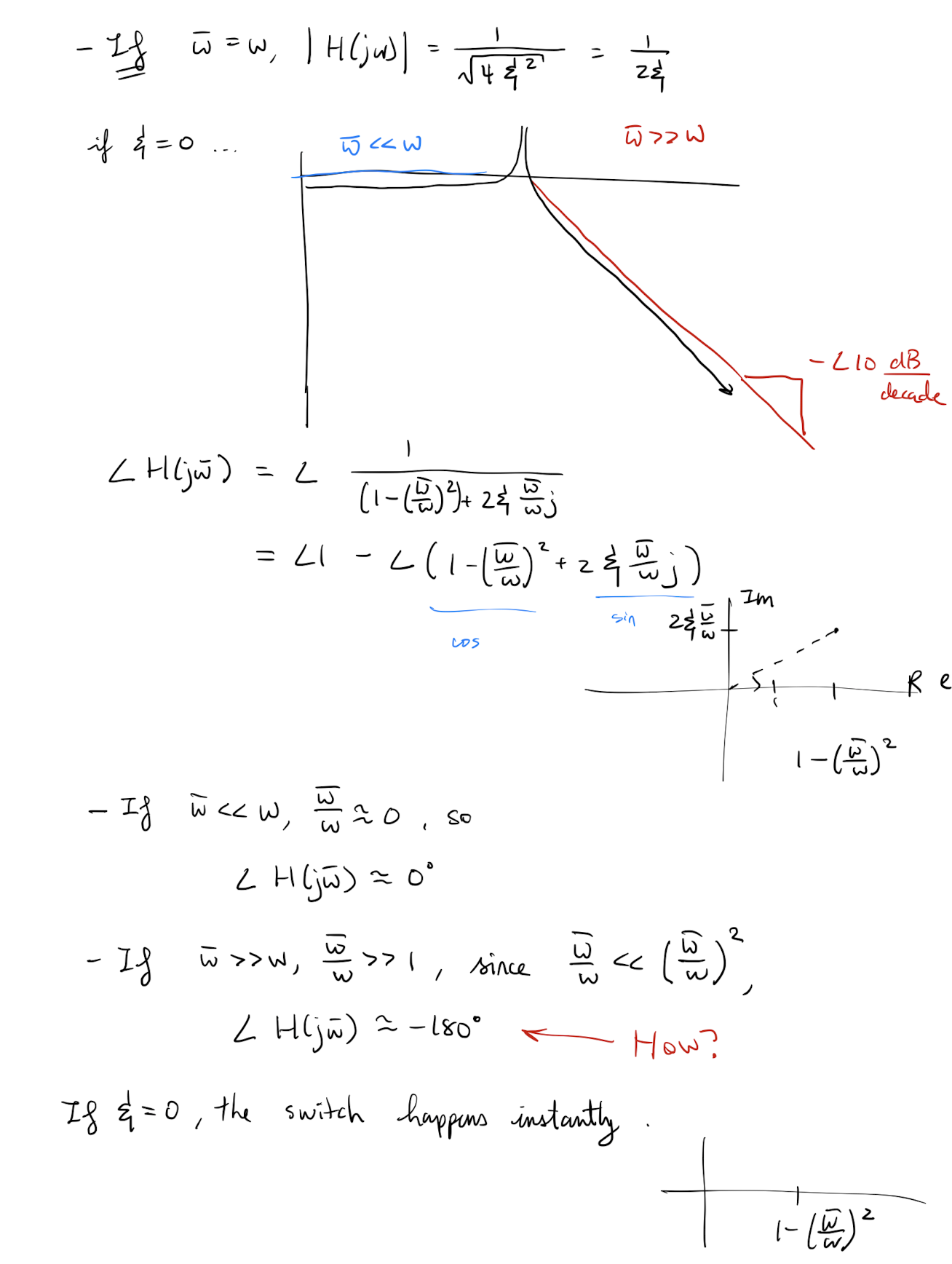

-

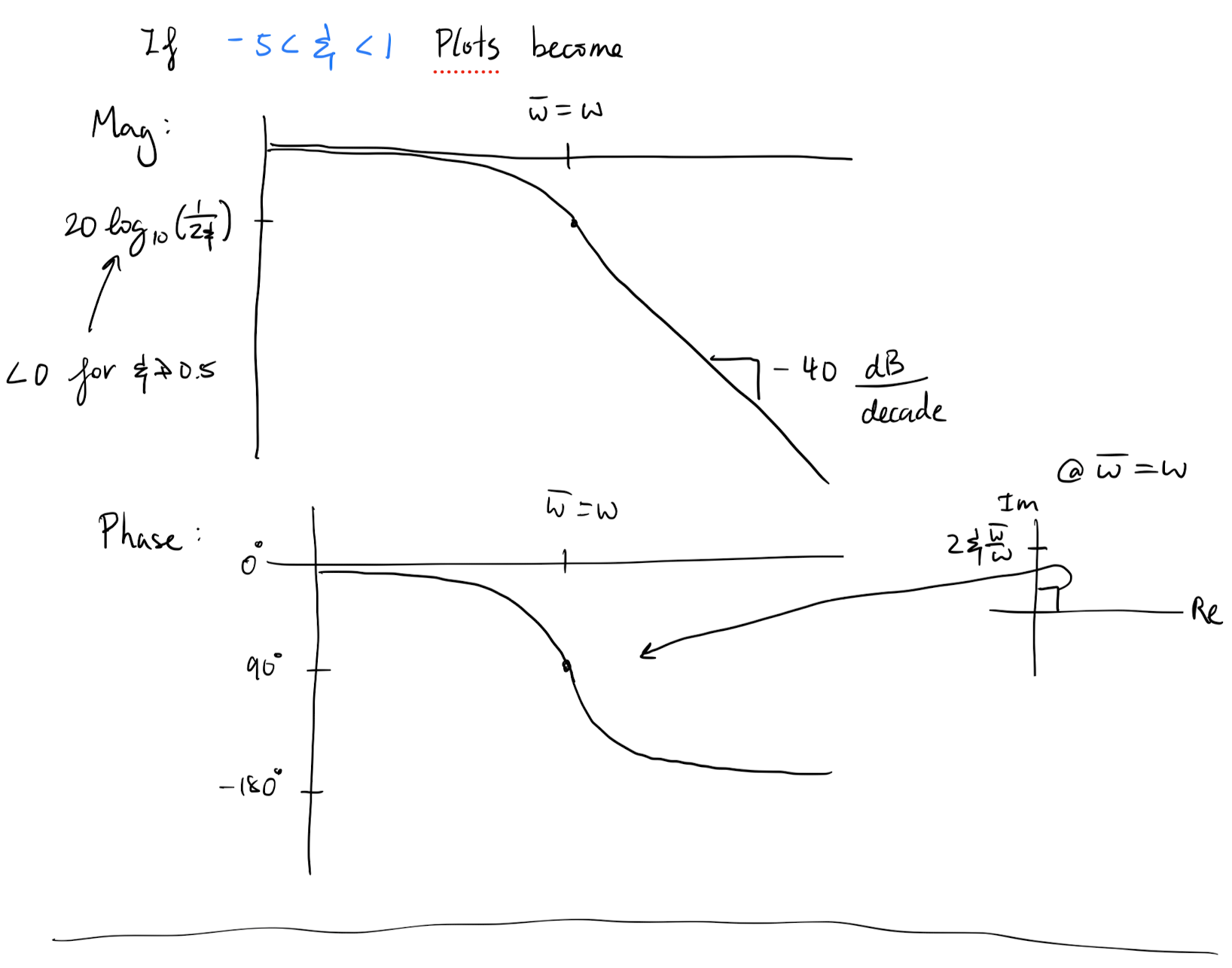

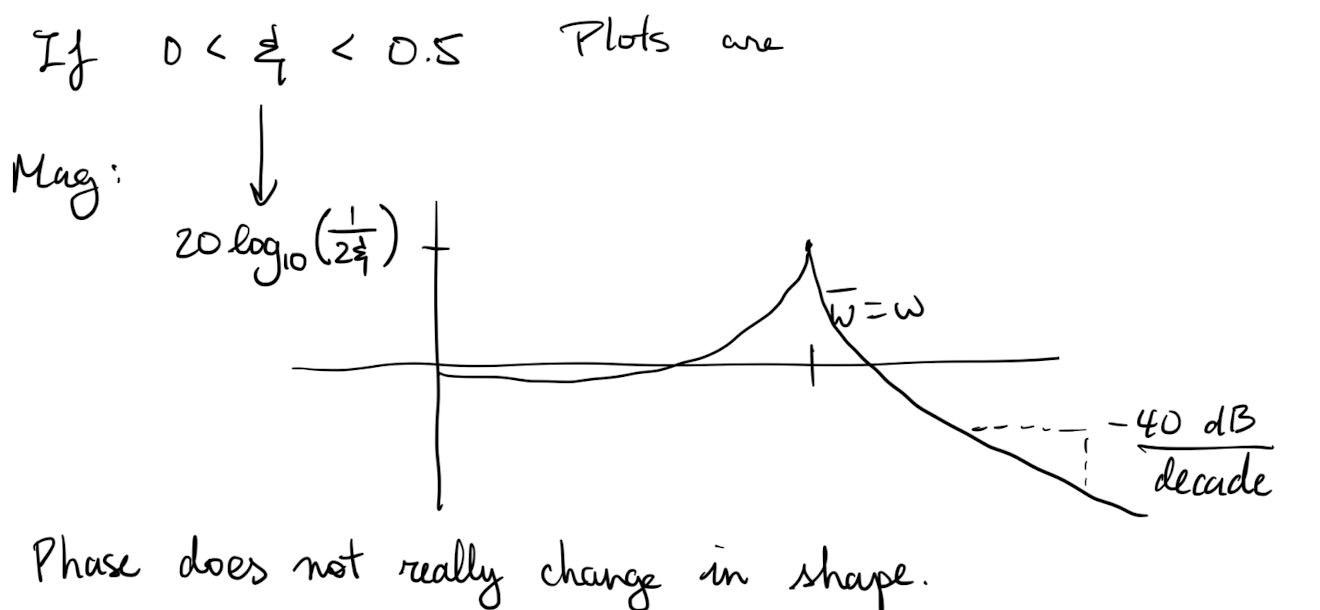

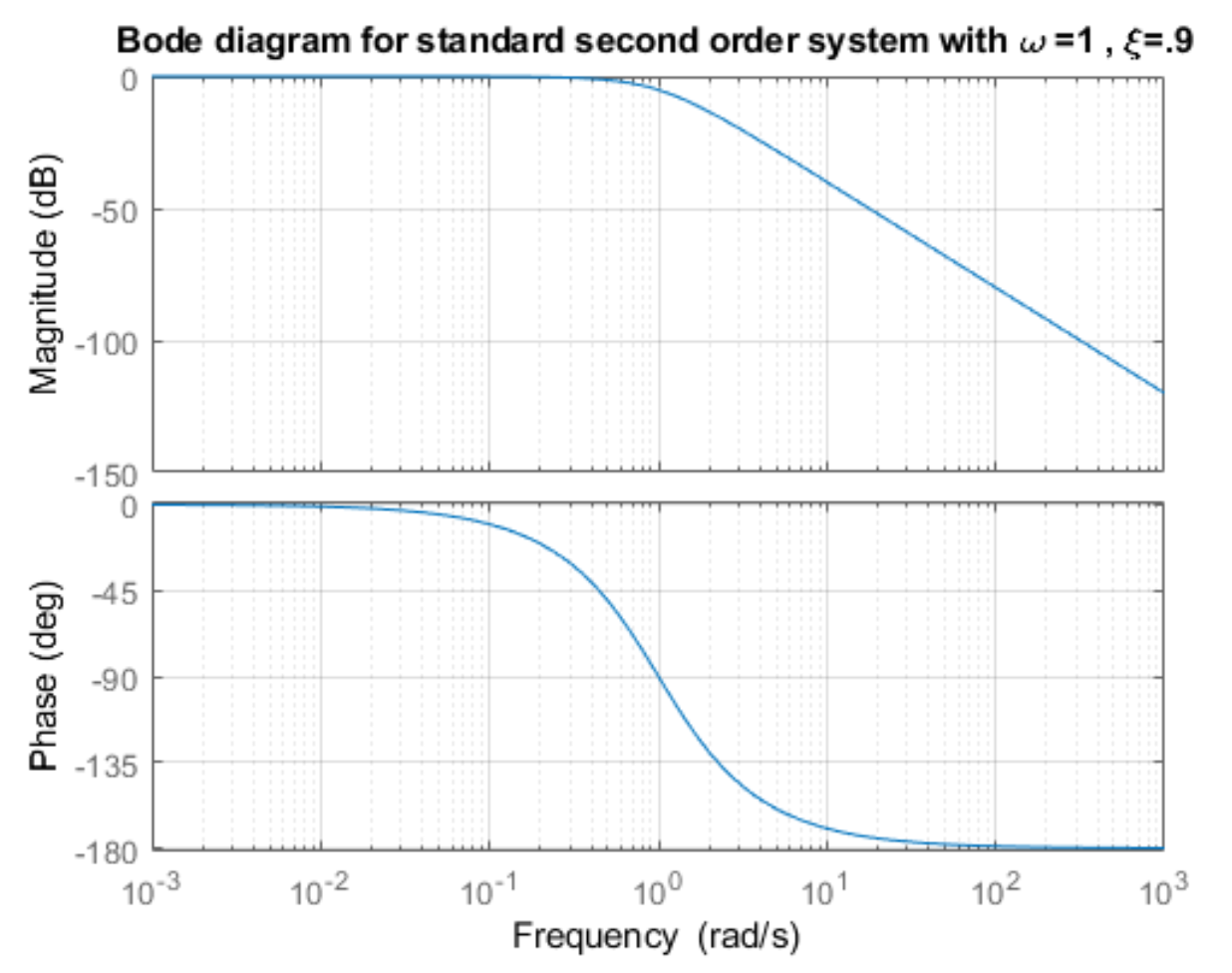

If the transfer function has complex conjugate poles that are stable then the magnitude curve will experience an extra decrease of 40 dB per decade and the phase curve will experience a drop of $180^\circ$. The location of this adjustment is given by $\omega_n$, the term in the standard second order system (see L18 Ex 5).

-

The speed of adjustment is related to $\zeta$:

- small $\zeta$ causes fast adjustments and overshooting in the amplitude plot for $\omega$ near $\omega_n$,

- large $\zeta$ causes slow adjustments and undershooting in the amplitude plot for $\omega$ near $\omega_n$.

-

If we have zeros of order 1 or of order 2, at some point then the magnitude and phase curves experience the opposite effects as listed in the above two points.

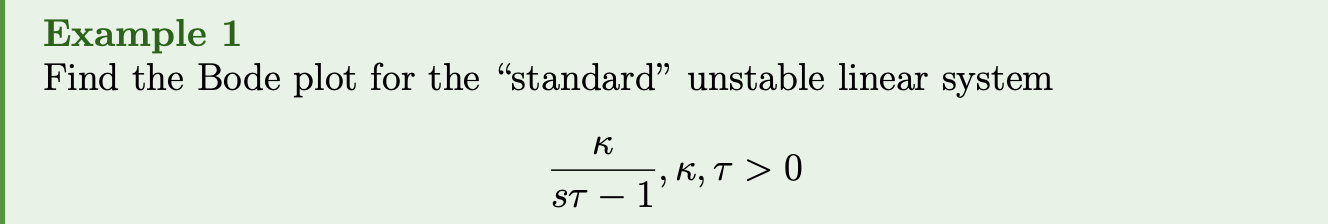

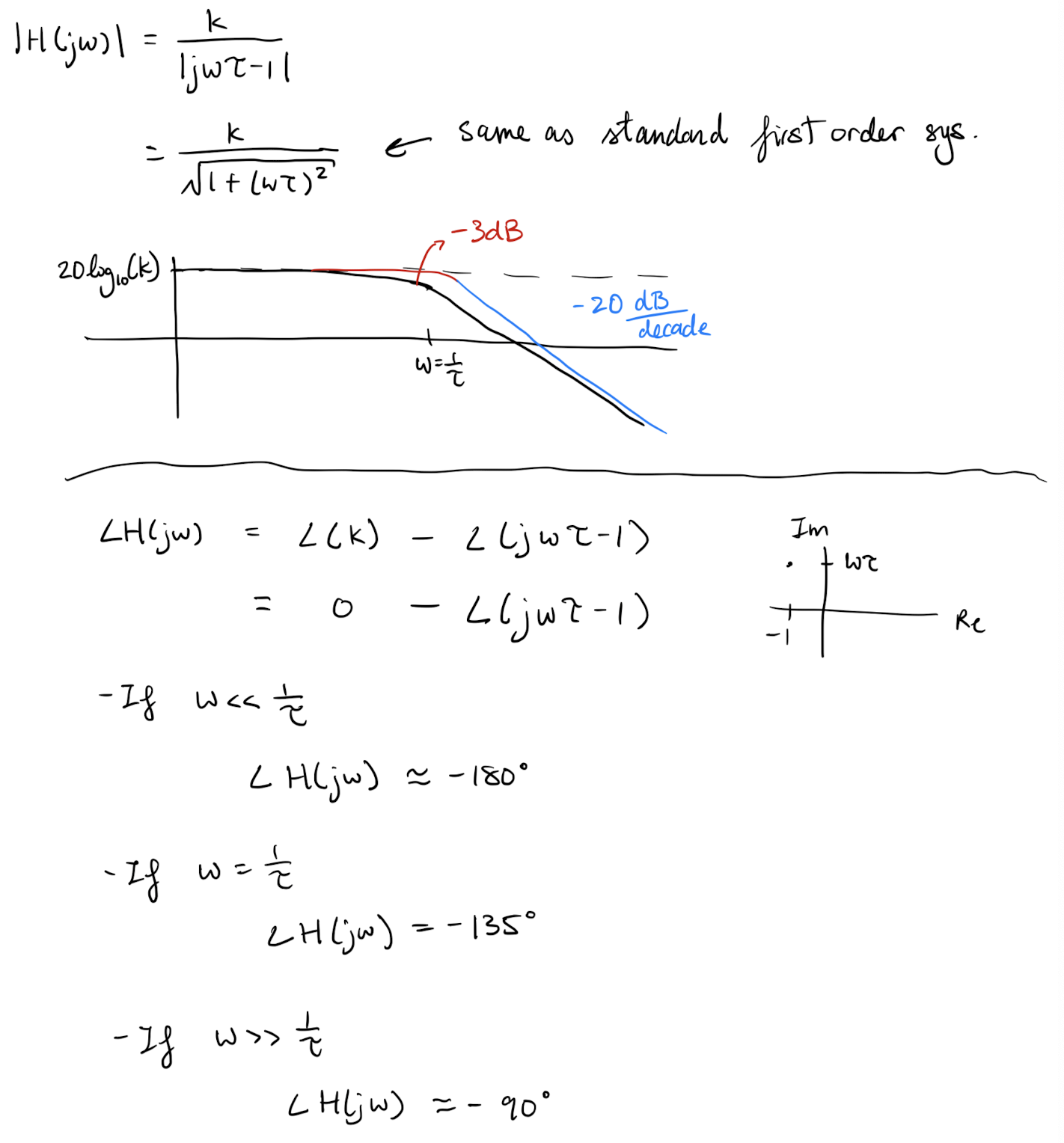

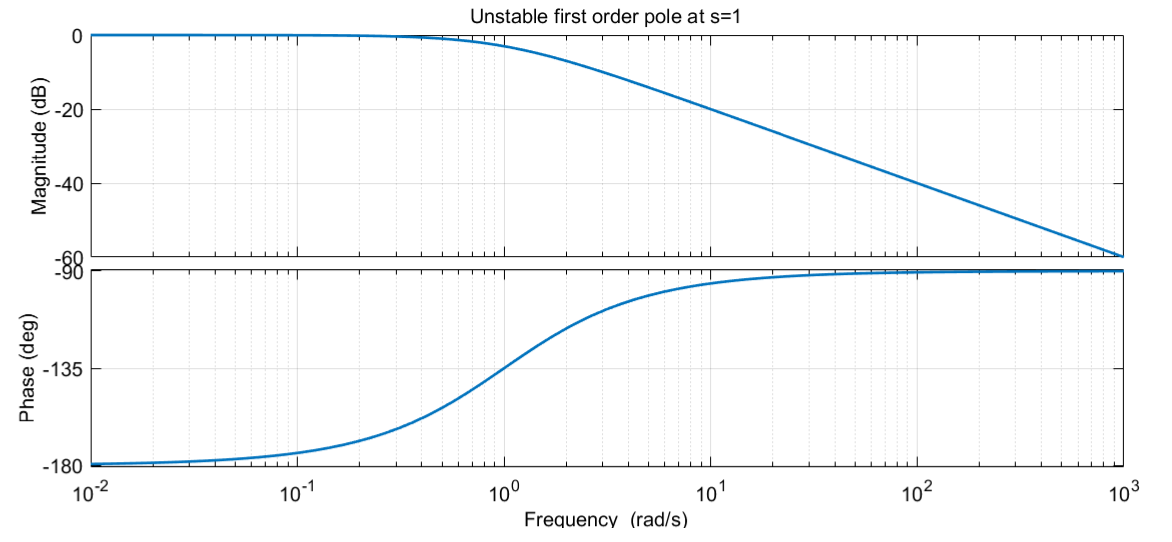

What if we have an unstable pole?

In this case Bode plots do not make sense to apply as they assume there is a steady solution but… for completion

Similar result for second order systems but they are a bit more complex to analyze and often there are better ways to test stability so we skip the analysis.

In general if you see a decrease in the slope of the asymptotic amplitude curve and an increase in the phase, then you can conclude that the system is unstable (and hence a Bode plot should have never been made). You can not conclude anything about stability of the system modelled by the transfer function used to generate the plot though.

To quickly generate plots, we can use the results derived from lectures 18 and 19 by either adding the curves or using the relation of the poles/zeros and the curves to sketch asymptotic lines. The latter is often nicer.

Note

Unstable poles (dec net amp change + inc phase) make system unstable. Unstable zeroes don’t, but can cause more oscillations.

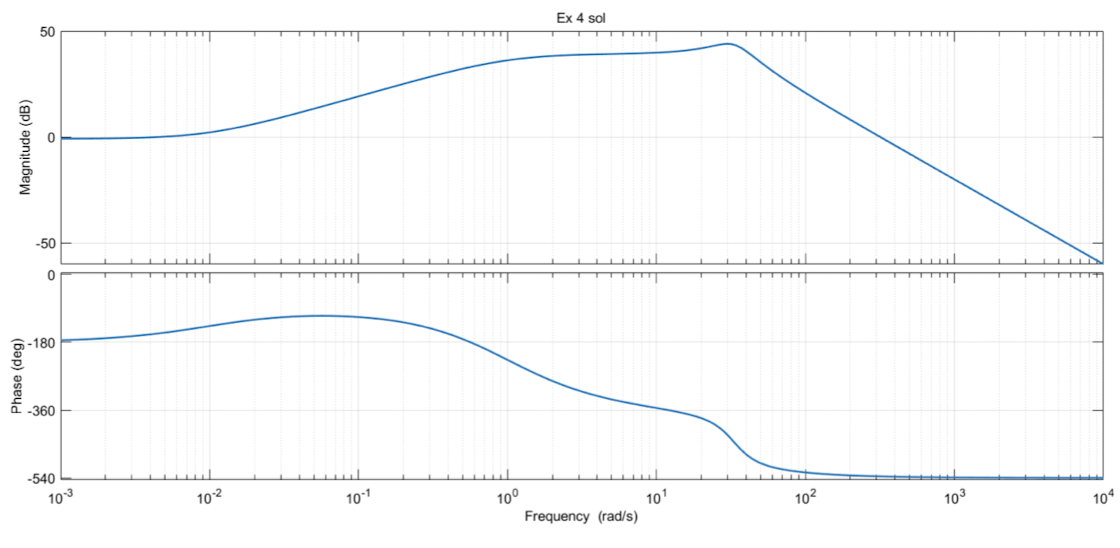

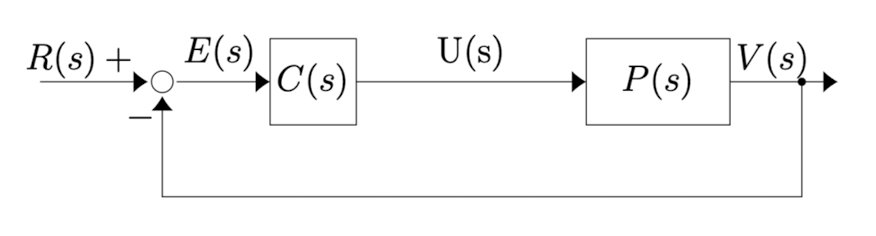

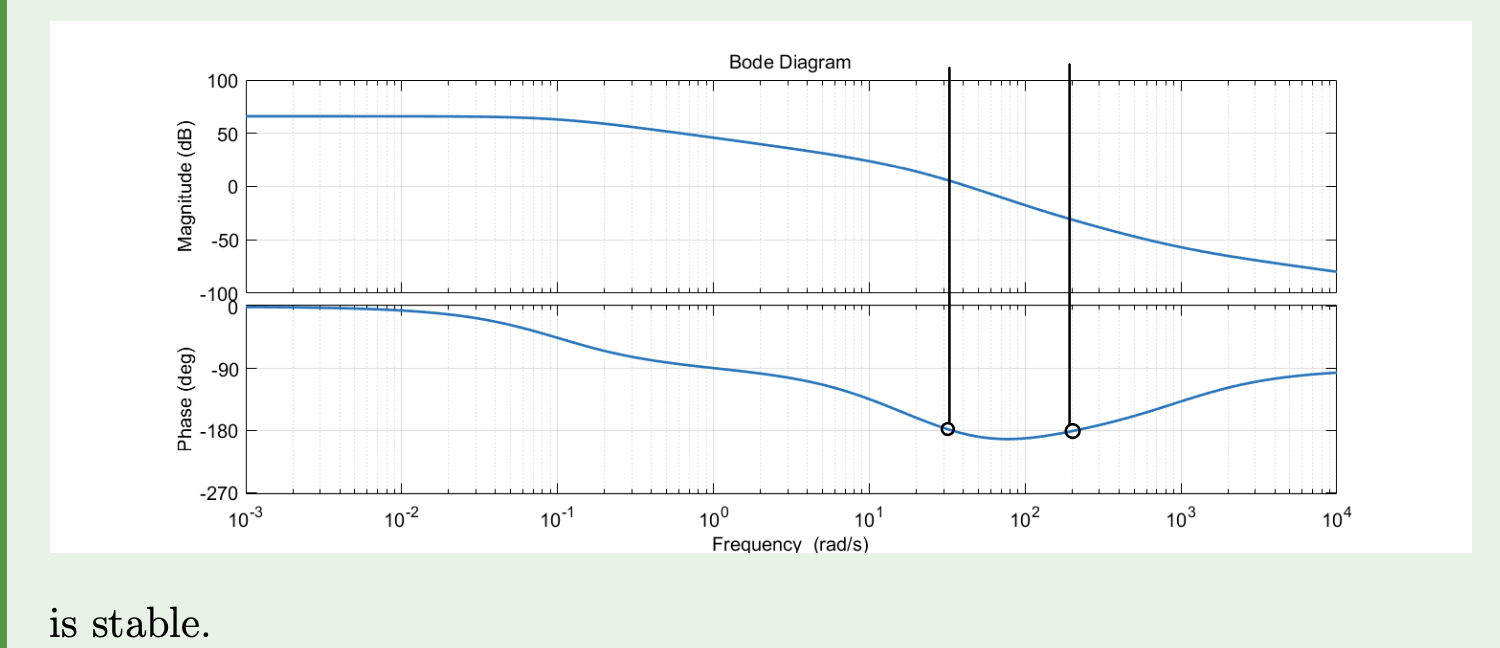

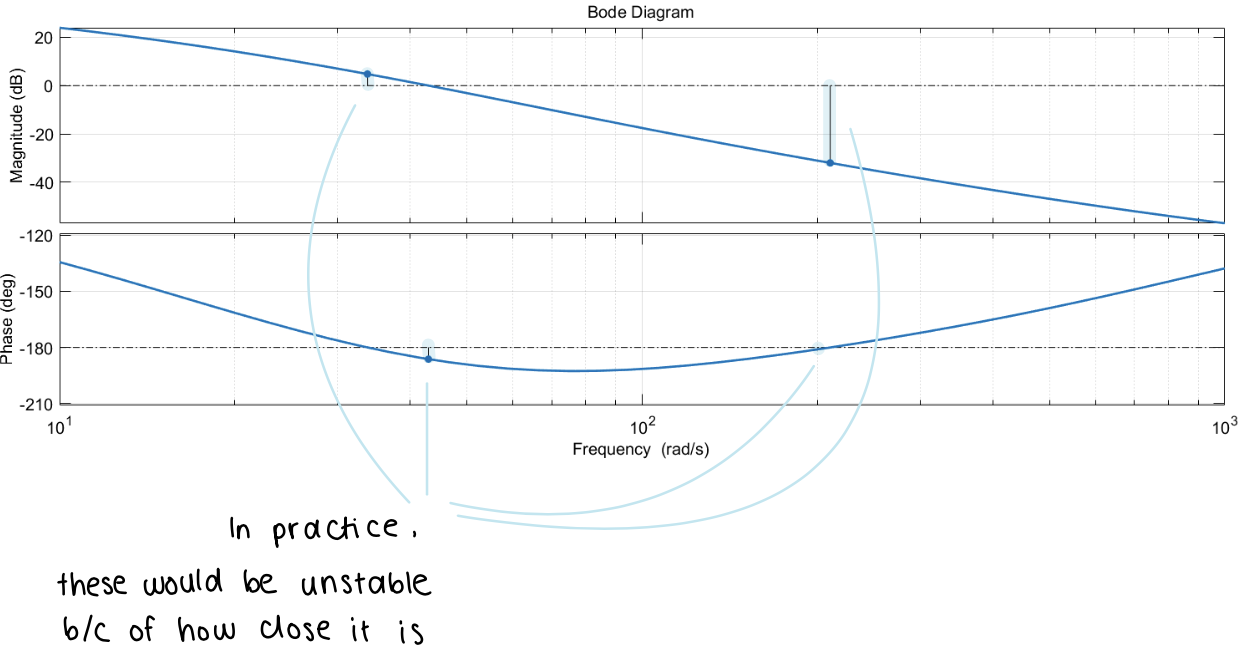

Recall that for a closed loop system with system diagram given by:

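The transfer function for this system is

$$T(s) = \frac{C(s)H(s)}{1 + C(s)H(s)}$$

Hence the closed system will be stable when both $C(s)$ and $H(s)$ are stable and when

$$1 + C(j\omega)H(j\omega) \neq 0$$

Hence if $C(s)$ and $H(s)$ are both stable, then the closed loop control system is unstable when

$$C(j\omega)H(j\omega) = -1$$

This happens when the amplitude curve is 0 dB while the phase is either $180^\circ$ or $-180^\circ$ (or some other equivalent angle).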

In general to talk about stability we will not look for these exact conditions but will instead look at the points where either the amplitude or the phase is critical and then look at how far we are from stability. We generally require some threshold to be “far enough” from being unstable.

Formally stable, but close to unstable.

Lecture 20 - Signals - Intro

Lecture goals:

- know the big picture of Fourier series/transforms

Bode plots allow us to quickly see how a system responds to input signals of the form $\sin(\omega t)$.

This can be used to quickly tell what type of filtering/amplifying the LTI does to the sin wave.

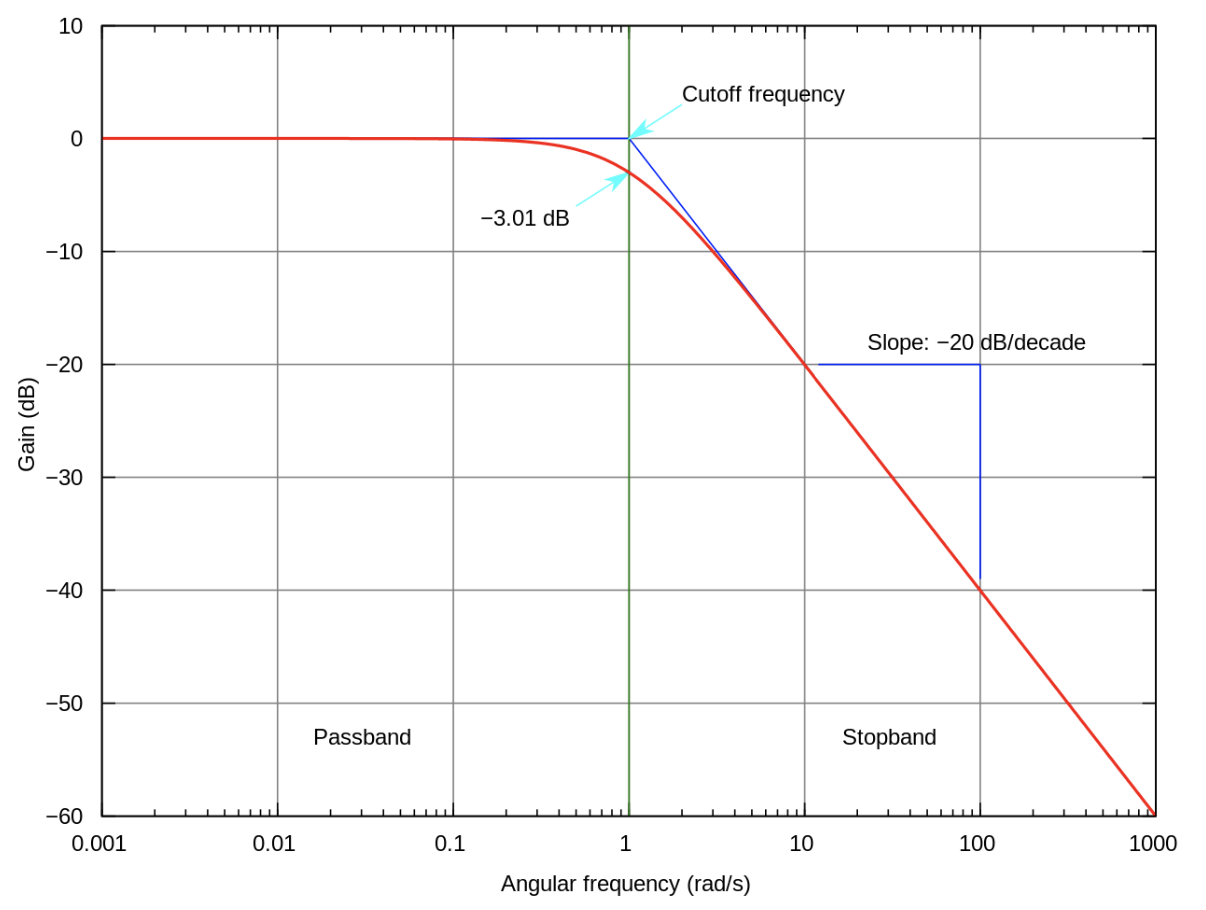

For example a low pass filter is an LTI that removes the “high” frequency waves. Explicitly, it reduces the amplitude of all waves with a frequency larger than some cutoff $\omega_c$.

All stable systems with a single pole and no zeros are low pass filters. Here is the hopefully familiar amplitude plot (stolen from wiki because I liked their annotations):

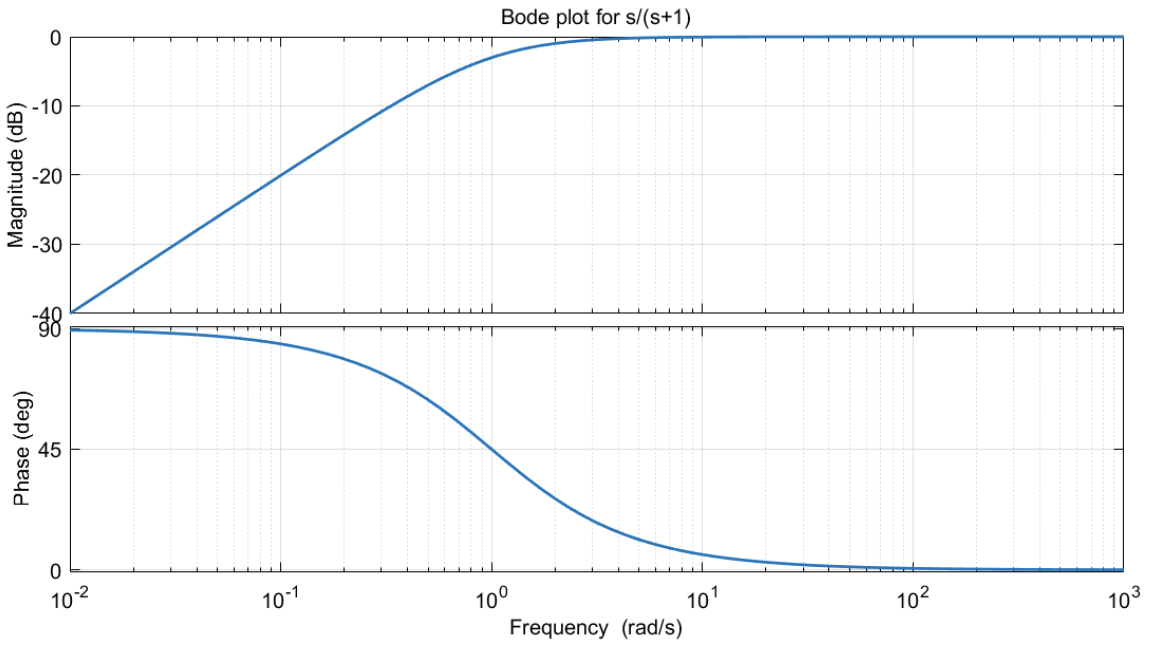
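As a quick numerical check of the single-pole claim, the sketch below evaluates the amplitude in dB of $H(s) = a/(s+a)$ at $s = j\omega$. The specific transfer function and the value $a = 1$ are assumptions chosen for illustration (one stable pole at $-a$, scaled so the low frequency gain is $0$ dB).

```python
import math


def lowpass_gain_db(omega, a=1.0):
    """Amplitude in dB of the assumed H(s) = a / (s + a) at s = j*omega."""
    h = a / complex(a, omega)
    return 20 * math.log10(abs(h))


# Sample the amplitude curve two decades either side of the pole at a = 1.
gains = {omega: lowpass_gain_db(omega) for omega in (0.01, 0.1, 1.0, 10.0, 100.0)}
for omega, gain in gains.items():
    print(f"omega = {omega:>6}: {gain:7.2f} dB")
```

Well below $\omega = a$ the gain stays near $0$ dB, at $\omega = a$ it is about $-3$ dB, and beyond that it drops by roughly 20 dB per decade, which is the shape of the annotated amplitude plot.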

As another example, for high pass filters we need the amplitude curve to go to $0$ dB at some cutoff point. The standard high pass filter transfer function is

$$H(s) = \frac{s}{s+a}$$

for $a > 0$ and its Bode plot is

If you wanted stronger drops in the frequency, you could simply add more zeros near 0 and poles to counter them!!!

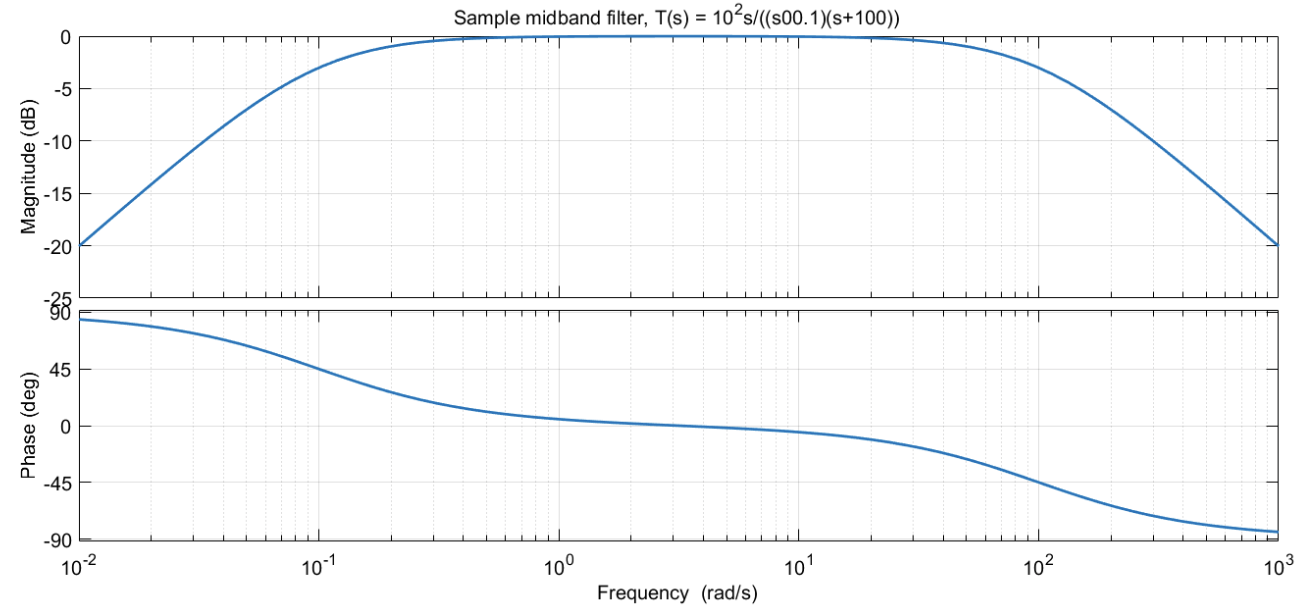

If you wanted a medium band filter, then you can add another pole later:

$$H(s) = \frac{s}{(s+a)(s+b)}$$

for $0 < a < b$. Here is a sample Bode plot

This is all fair and good but it only works if we are working with sine waves.

Most functions are not sine waves… but what if they (i.e. functions we care about) can be written as a linear combination of sine waves?

Fourier series:

Fourier series are obviously useful when working with systems, but they are also useful when working with signals on their own.

We will first work with a classic signal example that was a major problem

- in the days of the telegraph,

- in the early days of landline phones,

- in the early days of cell phones and the early Internet,

- is the reason why we developed 5G,

- and will continue to be a problem for the foreseeable future.

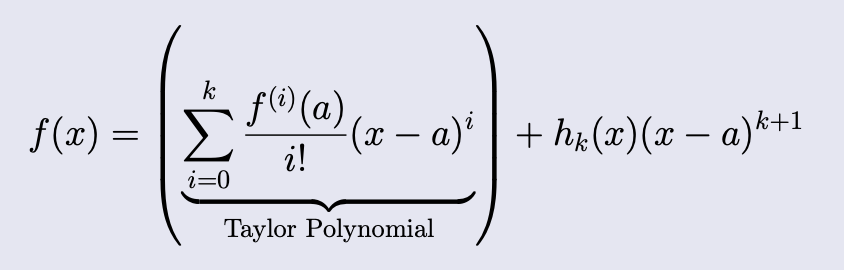

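“Recall” Taylor’s theorem from MATH119:

Theorem 1: Taylor's Theorem

Let $k \geq 1$ be an integer and let $f$ be a real valued function that is differentiable at least $k$ times at some point $a$. Then there exists a real valued function $h_k$ such that

$$f(x) = \sum_{n=0}^{k} \frac{f^{(n)}(a)}{n!}(x-a)^n + h_k(x)(x-a)^k$$

and $\lim_{x \to a} h_k(x) = 0$.

For infinitely differentiable functions we can write

$$f(x) = \sum_{n=0}^{\infty} \frac{f^{(n)}(a)}{n!}(x-a)^n$$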

Examples: , or .

Here we used the basis $\{(x-a)^n\}_{n=0}^{\infty}$ but what if…

$\{\sin(n\omega t), \cos(n\omega t)\}$ somehow… could be used as a basis for some set of functions?

This would synchronize well with LTIs and it is what we will study for the rest of this course.

MATH115 orthogonal basis “review” part 2 (see L3 for the version we used for Laplace transforms):

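Suppose that there is an orthogonal basis $\{\vec{v}_1, \dots, \vec{v}_n\}$ for $\mathbb{R}^n$.

This means that $\vec{v}_i \cdot \vec{v}_j = 0$ where $i \neq j$.

If we wanted to write $\vec{x}$ in this basis we would need to solve the system of equations

$$\vec{x} = c_1\vec{v}_1 + \dots + c_n\vec{v}_n$$

We can solve this using orthogonality (as in L3). Explicitly to find $c_i$ we take the dot product with $\vec{v}_i$:

$$\vec{x}\cdot\vec{v}_i = c_i\,\vec{v}_i\cdot\vec{v}_i \implies c_i = \frac{\vec{x}\cdot\vec{v}_i}{\vec{v}_i\cdot\vec{v}_i}$$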
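The orthogonality trick can be checked on a small concrete case. This is a minimal sketch with a made-up orthogonal basis of $\mathbb{R}^3$ and a made-up vector; each coefficient is obtained from one dot product divided by the squared length of the basis vector, with no system of equations to solve.

```python
def dot(u, v):
    """Standard dot product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical orthogonal (not orthonormal) basis of R^3.
basis = [(1, 1, 0), (1, -1, 0), (0, 0, 2)]
x = (3, 1, 4)

# Confirm the basis really is orthogonal before using the shortcut.
assert all(dot(basis[i], basis[j]) == 0
           for i in range(3) for j in range(3) if i != j)

# Coefficient along each basis vector: (x . v) / (v . v).
coeffs = [dot(x, v) / dot(v, v) for v in basis]

# Rebuild x from the coefficients to confirm the expansion.
rebuilt = tuple(sum(c * v[i] for c, v in zip(coeffs, basis)) for i in range(3))

print(coeffs)   # coefficients of x in the chosen basis
print(rebuilt)  # should reproduce x
```

The Fourier coefficient formulas follow the same pattern, with the dot product replaced by an inner product on functions.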

To make this method work for we MUST

- Find a “dot product”, called an inner product, for functions such that "" and compute the .

- Define (the different types of) convergence for series of functions i.e. .

To fft_fun.m

To voice analyzing phone thing!!

In subsequent lectures we will:

- Generalize the dot product to functions

- Learn how to use this new dot product to compute a few different versions of Fourier series (sin, cos, half sin, half cos and complex exponential),

- Talk about convergence and what the Fourier series for $f$ converges to (it is not always $f$) and

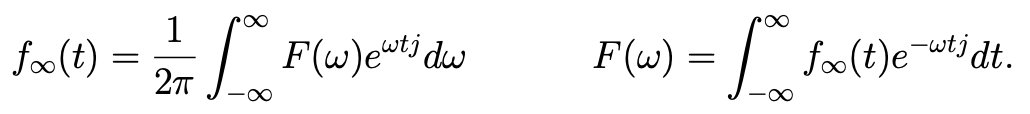

- Talk about the Fourier transform (which is just the 2-sided Laplace transform when $s = j\omega$).

Lecture 21 - $L^2$ space, inner product on $L^2$, and computing Fourier coefficients

Lecture goals:

- know what the standard inner product in $L^2$ is

- and know how to use it to compute Fourier coefficients of periodic functions.

In general properly defining a dot product for functions is a huge issue so we will only work with a special class of functions called “Lebesgue square integrable functions” or L2 functions which makes things nice:

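Definition 1: $L^{2}$ functions

A complex valued function $f$ is in the class $L^{2}([a,b])$ if

$$\int_a^b |f(t)|^2\,dt$$

exists and is finite.

$f$ is in the class $L^{2}(\mathbb{R})$ if

$$\int_{-\infty}^{\infty} |f(t)|^2\,dt$$

exists and is finite.

$L^{2}([a,b])$ and $L^{2}(\mathbb{R})$ form vector spaces so ideas from MATH115 can be used (with proper adjustments). Now if $f$ is a member of $L^{2}([-\tau,\tau])$ for some fixed $\tau$ then our goal is to write

$$f(t) = \sum_{n=-\infty}^{\infty} c_n e^{jn\pi t/\tau}$$

for $t \in [-\tau,\tau]$.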

To do this we need to somehow solve for the $c_n$

By comparison with how we solved

$$\vec{x} = c_1\vec{v}_1 + \dots + c_n\vec{v}_n$$

for an orthogonal basis $\{\vec{v}_1, \dots, \vec{v}_n\}$

We need a…

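Inner product for $L^{2}([-\tau,\tau])$

Recall that if $\vec{x}, \vec{y} \in \mathbb{C}^n$ then

$$\vec{x}\cdot\vec{y} = \sum_{i=1}^{n} x_i\overline{y_i}$$

Now if $f$ and $g$ are complex valued functions, then the “dot product”, which will be called an inner product, should follow a similar definition.

The summation becomes integration!!!

Definition 2: Standard Inner product on $L^{2}([a,b])$

If $f$ and $g$ are complex valued functions in $L^{2}([a,b])$ then the standard inner product is

$$\langle f, g\rangle = \int_a^b f(t)\overline{g(t)}\,dt$$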

Theorem 1: Existence of Inner Product

If $f, g \in L^{2}([a,b])$ then $\langle f, g\rangle$ exists and is finite.

We skip the proof since it needs some real analysis…

Theorem 2

The set of complex exponentials $\left\{\frac{1}{\sqrt{2\tau}}e^{jn\pi t/\tau}\right\}_{n\in\mathbb{Z}}$ is an orthonormal basis for a subspace of $L^{2}([-\tau,\tau])$.

Partial proof: We will not prove that the collection is linearly independent but will prove that they are orthonormal.
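The orthonormality claim can be checked numerically before doing the integrals by hand. The sketch below assumes the inner product $\langle f, g\rangle = \int_{-\tau}^{\tau} f(t)\overline{g(t)}\,dt$ and the normalized exponentials $e^{jn\pi t/\tau}/\sqrt{2\tau}$. Both the normalization and the value $\tau = 2$ are assumptions made for this illustration, and the integral is approximated with a midpoint rule.

```python
import cmath
import math

TAU = 2.0       # hypothetical half-width of the interval [-TAU, TAU]
SAMPLES = 2000  # number of midpoint-rule subintervals


def inner(f, g, a=-TAU, b=TAU, n=SAMPLES):
    """Midpoint-rule approximation of the integral of f(t) * conj(g(t)) over [a, b]."""
    h = (b - a) / n
    total = 0j
    for k in range(n):
        t = a + (k + 0.5) * h
        total += f(t) * g(t).conjugate()
    return total * h


def e(n):
    """Normalized complex exponential with index n on [-TAU, TAU]."""
    return lambda t: cmath.exp(1j * n * math.pi * t / TAU) / math.sqrt(2 * TAU)


print(abs(inner(e(2), e(2))))  # same index: should be close to 1
print(abs(inner(e(2), e(5))))  # different indices: should be close to 0
```

The hand computation behind this is that $e^{jn\pi t/\tau}\overline{e^{jm\pi t/\tau}} = e^{j(n-m)\pi t/\tau}$, which integrates to $2\tau$ when $n = m$ and to $0$ over a whole number of periods otherwise.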

Now that we have an orthonormal basis for a subset of $L^{2}([-\tau,\tau])$, we can project any function in $L^{2}([-\tau,\tau])$ into our basis by using our inner product.

Note that since we are doing a projection and the basis may not be (is not…) a basis for $L^{2}([-\tau,\tau])$, the result of projecting into this basis may not be equal to the original function in the traditional sense.

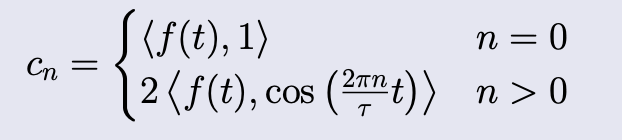

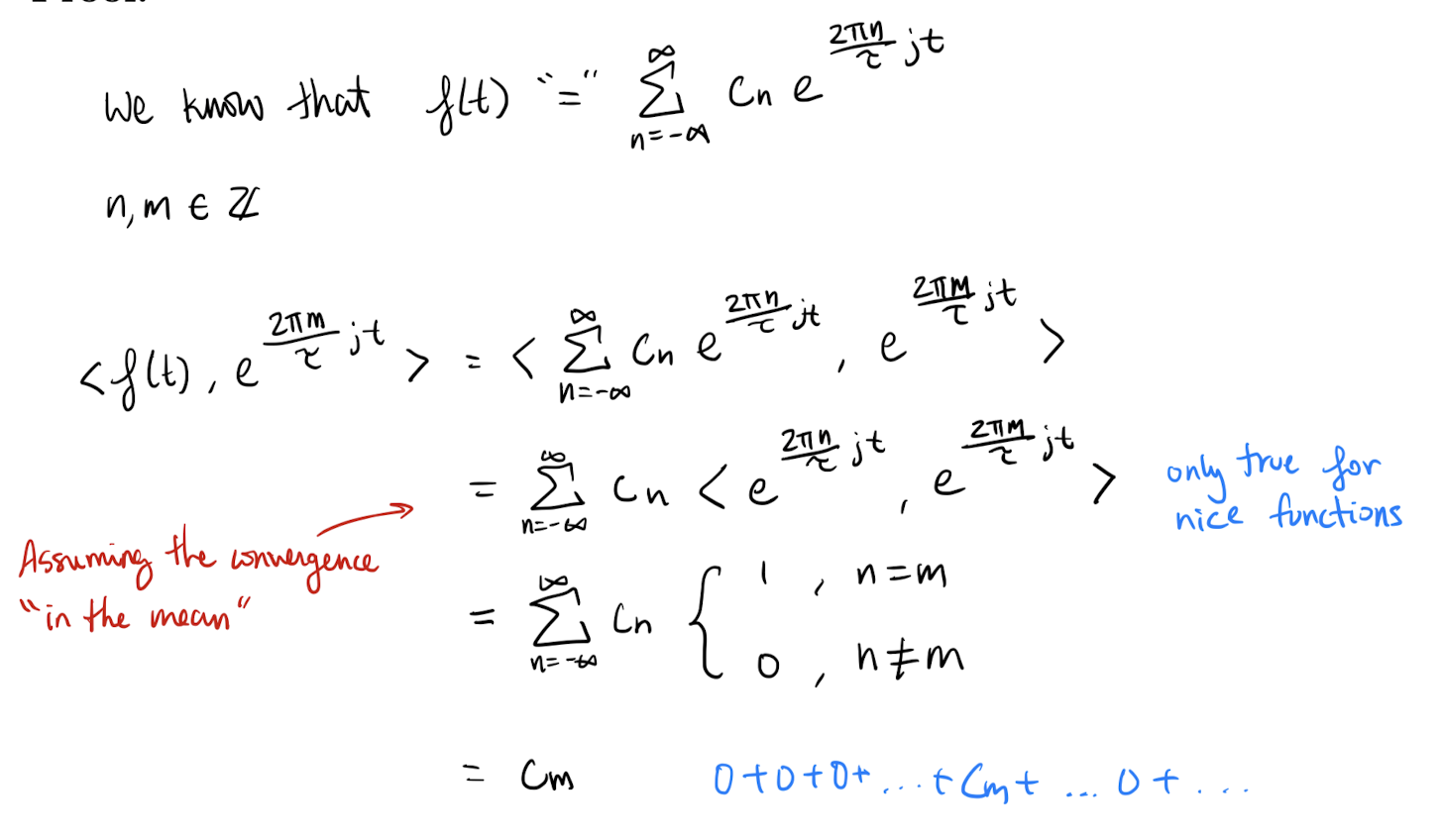

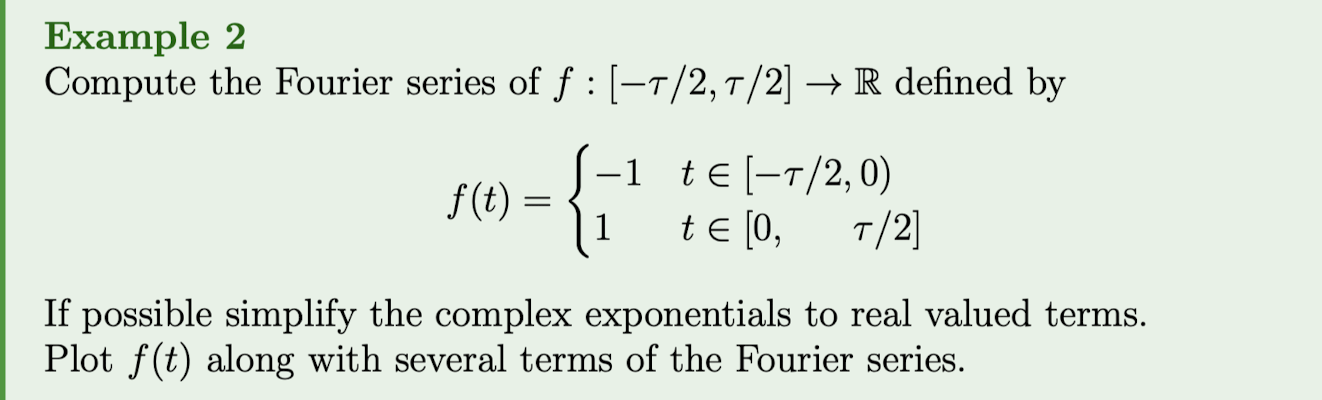

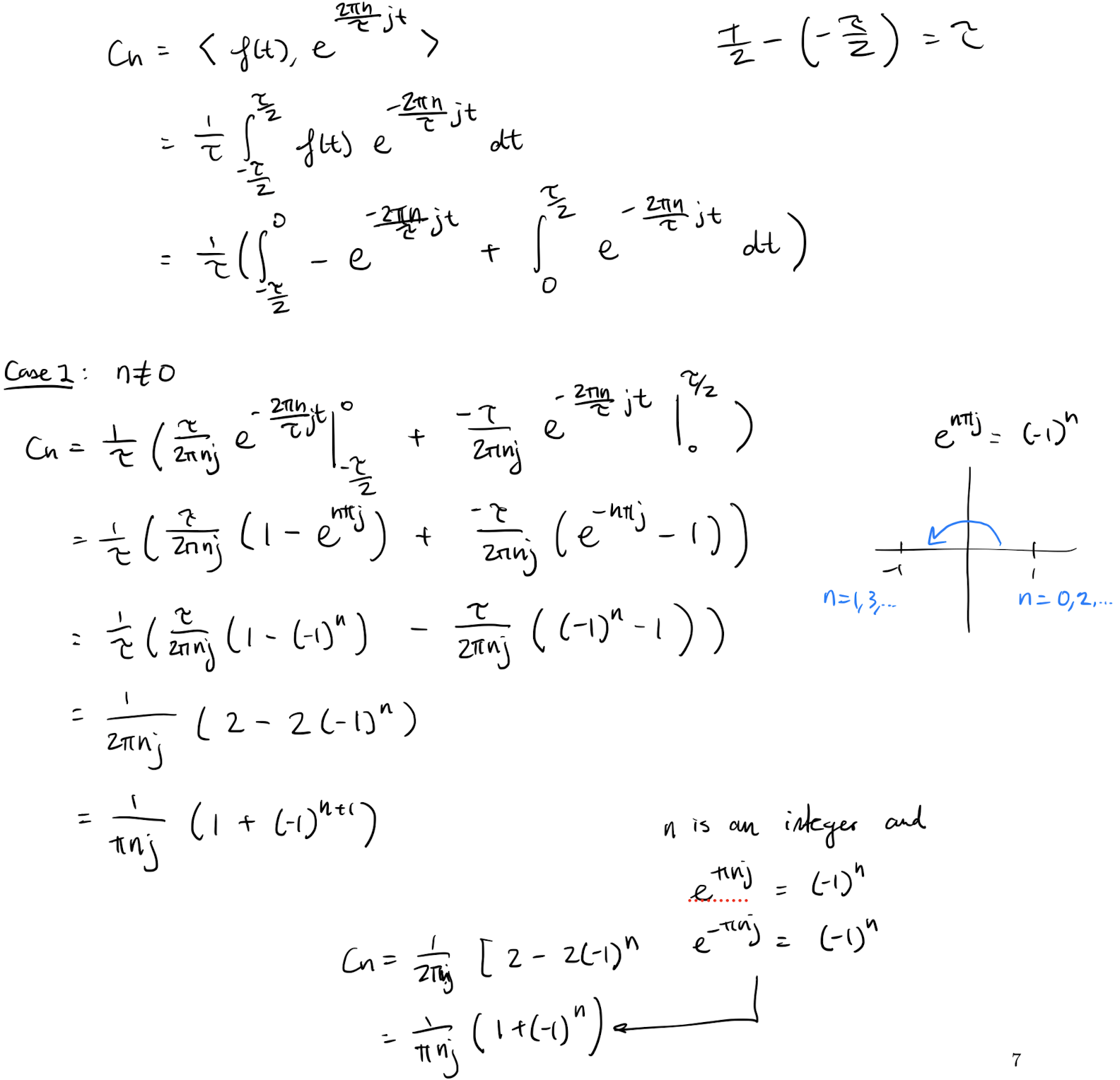

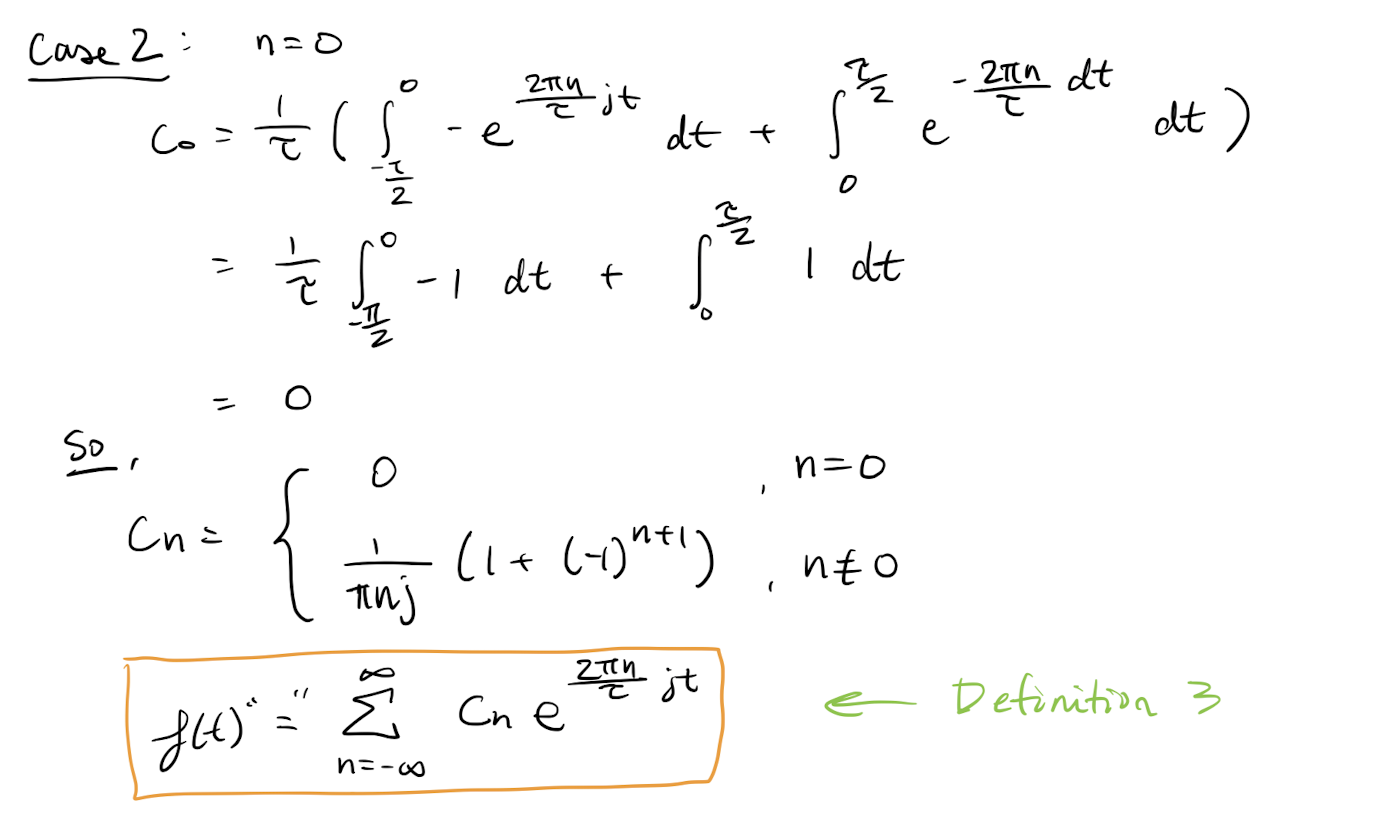

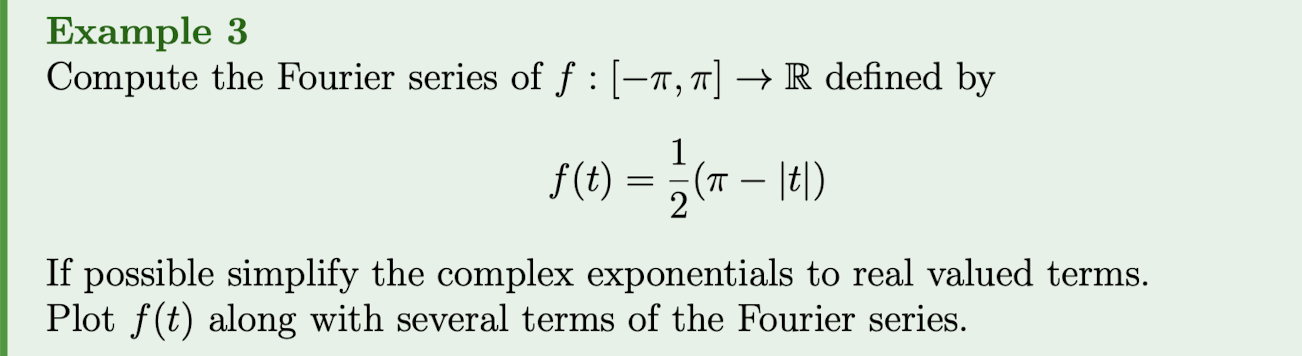

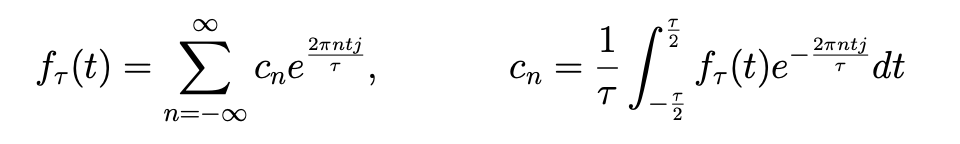

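Definition 3: Fourier Series - Complex Form

If $f \in L^{2}([-\tau,\tau])$ then the Fourier series in complex form of $f$ is

$$\sum_{n=-\infty}^{\infty} c_n e^{jn\pi t/\tau}$$

where the $c_n$ are found by projecting $f$ into the basis of complex exponentials.

Theorem 3: Fourier Coefficients for Series in Complex Form

If $f \in L^{2}([-\tau,\tau])$ then the Fourier coefficients of $f$ are

$$c_n = \frac{1}{2\tau}\int_{-\tau}^{\tau} f(t)e^{-jn\pi t/\tau}\,dt.$$

If $f$ is real valued then $c_{-n} = \overline{c_n}$.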
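To see the coefficient formula in action, here is a minimal numerical sketch. It assumes the convention $c_n = \frac{1}{2\tau}\int_{-\tau}^{\tau} f(t)e^{-jn\pi t/\tau}\,dt$ and uses the example $f(t) = t$ on $[-\pi,\pi]$, chosen here for illustration. Integration by parts gives $c_n = j(-1)^n/n$ for $n \neq 0$ and $c_0 = 0$, which the midpoint-rule approximation should reproduce.

```python
import cmath
import math


def fourier_coefficient(f, n, tau, samples=20000):
    """Midpoint-rule approximation of c_n for f on [-tau, tau]."""
    h = 2 * tau / samples
    total = 0j
    for k in range(samples):
        t = -tau + (k + 0.5) * h
        total += f(t) * cmath.exp(-1j * n * math.pi * t / tau)
    return total * h / (2 * tau)


def f(t):
    """Example signal, assumed for illustration: f(t) = t."""
    return t


c = {n: fourier_coefficient(f, n, math.pi) for n in range(-3, 4)}

for n in sorted(c):
    exact = 0 if n == 0 else 1j * (-1) ** n / n
    print(f"c[{n:+d}] = {c[n]:.5f}   (by hand: {exact})")
```

Because $f$ is real valued, the printed values also show the symmetry between the coefficients at $n$ and $-n$: they are complex conjugates of each other.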

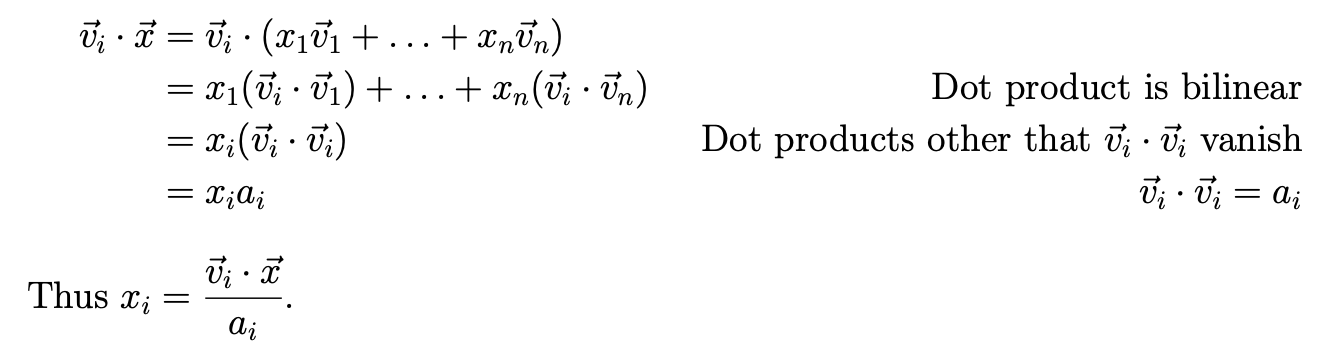

Proof:

Compute the things!!!

Where do the $C_{n}$ values come from??? And the options...?

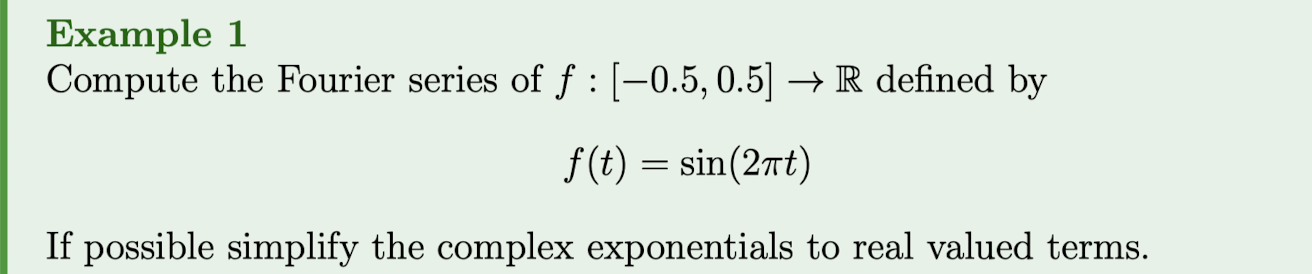

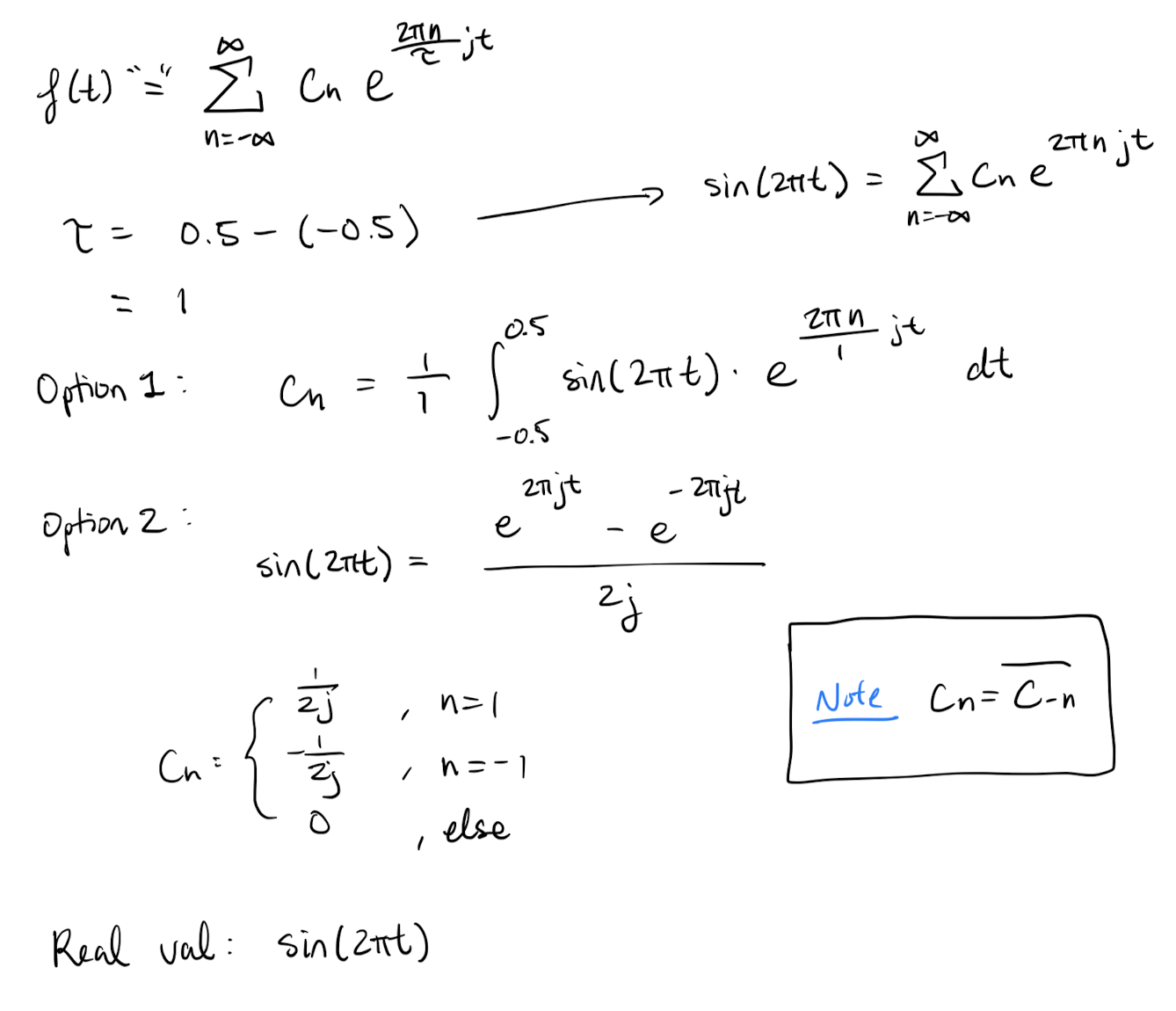

What is the purpose of the Real version???

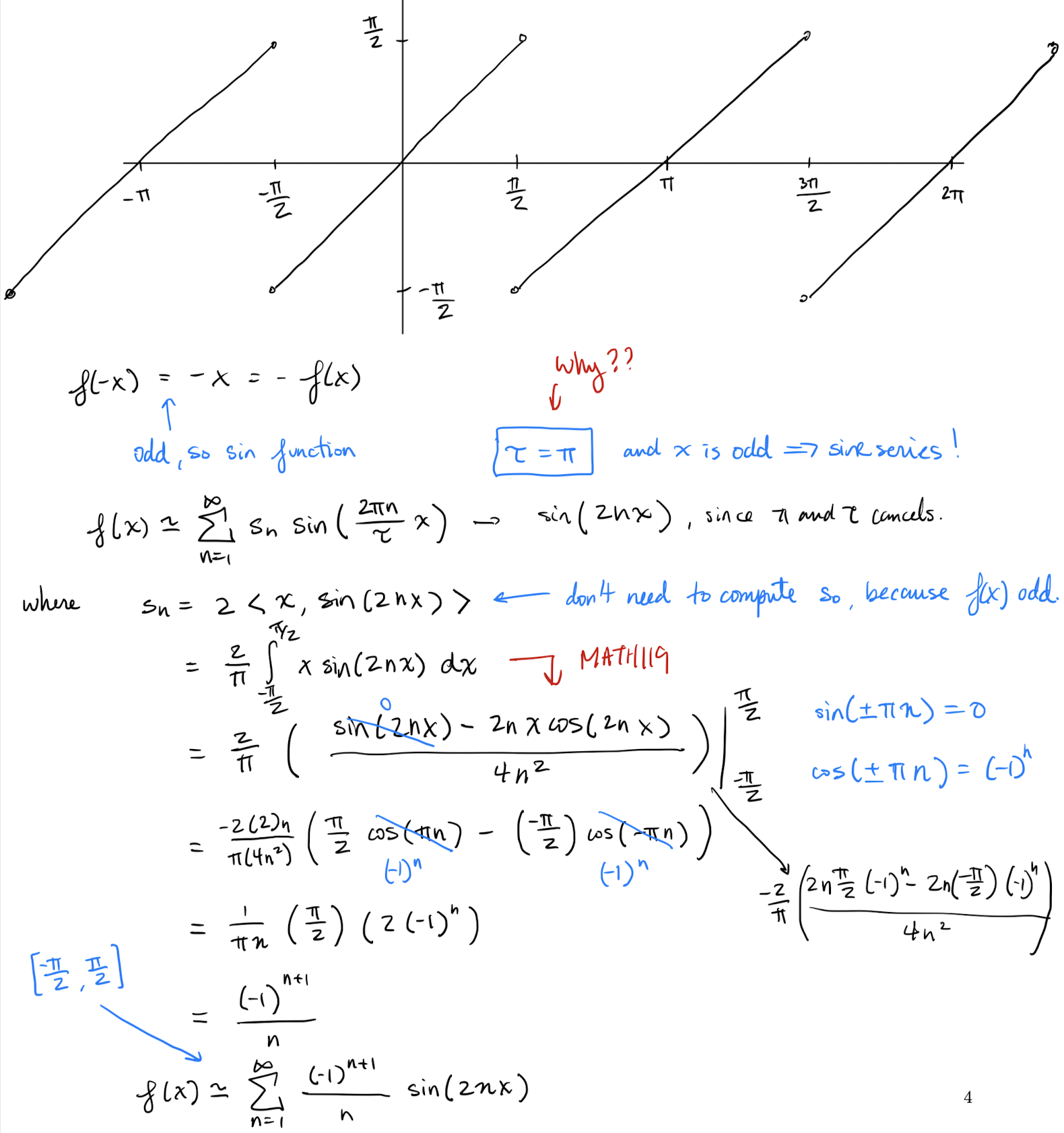

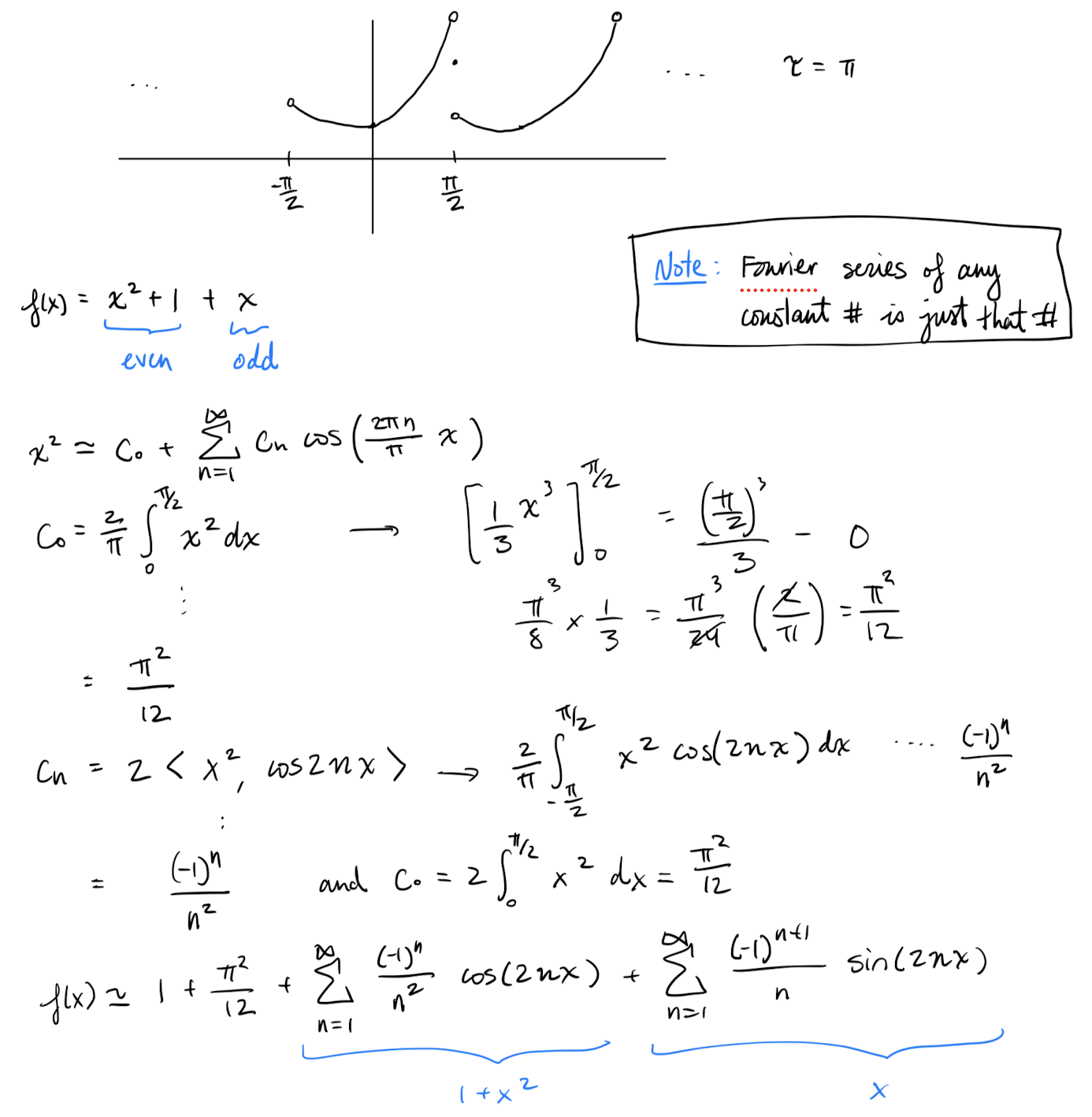

Lecture 22: Real Fourier series and convergence

Lecture goals:

- Know how to find the real Fourier series quickly and

- know the convergence properties of Fourier series.

$\tau$ periodic functions:

Definition 1: $\tau$ periodic functions

A function $f$ defined on $\mathbb{R}$ is $\tau$ periodic if for all $t \in \mathbb{R}$

$f(t+\tau) = f(t)$.

Generally, we pick the smallest value of $\tau$ such that the above holds.

Theorem 3 from L21 holds for periodic functions and we would integrate over one period.

If I ask you to find the Fourier series without telling you a domain for $f$ and $f$ is $\tau$ periodic, then you first find out the period of $f$ and do the computation over a period of $f$.

Fourier sin/cos series:

For a real valued function sometimes we found the Fourier Series simplified to a sum of sine waves and sometimes it resulted in a sum of cos waves. We now elaborate on this:

Definition 2: Even and odd functions

A function $f$ is even if $f(-t) = f(t)$.

A function $f$ is odd if

$f(-t) = -f(t)$.

Theorem 1

For a real valued, periodic function $f$:

- If $f$ is even then the Fourier series can be simplified to a sum of cos waves.

- If $f$ is odd then the Fourier series can be simplified to a sum of sin waves.

The sums above are called Fourier cosine series and Fourier sine series respectively.

For completeness we will write Theorem 3 from L21 for sin and cos series.

Theorem 2: Fourier Sine and Cosine Coefficients

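If $f$ is a real valued function that is in $L^{2}([-\tau,\tau])$ then

- If $f$ is even then the Fourier cosine series for $f$ is

$$\frac{a_0}{2} + \sum_{n=1}^{\infty} a_n\cos\left(\frac{n\pi t}{\tau}\right)$$

where

$$a_n = \frac{2}{\tau}\int_0^{\tau} f(t)\cos\left(\frac{n\pi t}{\tau}\right)dt$$

- If $f$ is odd then the Fourier sine series for $f$ is

$$\sum_{n=1}^{\infty} b_n\sin\left(\frac{n\pi t}{\tau}\right)$$

where

$$b_n = \frac{2}{\tau}\int_0^{\tau} f(t)\sin\left(\frac{n\pi t}{\tau}\right)dt$$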

Sketch of proof:

- Start with the coefficients in Theorem 3,

- write the complex exponential in terms of sin/cos waves,

- simplify the integrals by using the properties that

- the integral of an odd function over $[-a,a]$ for any $a$ is 0,

- that for an odd function and

- that for an even function .

I strongly suggest not blindly memorizing this but instead memorizing Theorem 3 and then understanding how it simplifies if there are no sin or cos terms.
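The simplification for an odd function can be checked numerically. This sketch assumes the sine coefficient convention $b_n = \frac{2}{\tau}\int_0^{\tau} f(t)\sin(n\pi t/\tau)\,dt$ (the names `sine_coefficient` and `b` are made up here) and uses the odd function $f(t) = t$ on $[-\pi,\pi]$, for which integration by parts gives $b_n = 2(-1)^{n+1}/n$.

```python
import math


def sine_coefficient(f, n, tau, samples=20000):
    """Midpoint-rule approximation of (2/tau) * integral of f(t) sin(n pi t / tau) over [0, tau]."""
    h = tau / samples
    total = 0.0
    for k in range(samples):
        t = (k + 0.5) * h
        total += f(t) * math.sin(n * math.pi * t / tau)
    return (2 / tau) * total * h


# Sine coefficients b_1 ... b_5 of the odd function f(t) = t on [-pi, pi].
b = [sine_coefficient(lambda t: t, n, math.pi) for n in range(1, 6)]

for n, value in enumerate(b, start=1):
    print(f"b_{n} = {value:+.6f}   (by hand: {2 * (-1) ** (n + 1) / n:+.6f})")
```

Only half of the interval is integrated: the product of two odd functions is even, so the integral over $[-\tau,\tau]$ is twice the integral over $[0,\tau]$, and the cosine terms vanish because an odd function times cos is odd.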

This is similar to the computations seen in the last lecture

Theorem 3

If $f$ is real then we can decompose it into even and odd functions as follows:

$$f_e(t) = \frac{f(t)+f(-t)}{2}$$

and

$$f_o(t) = \frac{f(t)-f(-t)}{2}.$$

Sketch of proof: Show that $f_e$ is even, that $f_o$ is odd and that when summed we get $f$.
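The even/odd split is easy to try on a concrete function. This minimal sketch uses the standard formulas $f_e(t) = \frac{f(t)+f(-t)}{2}$ and $f_o(t) = \frac{f(t)-f(-t)}{2}$ with the example $f(t) = e^t$ (picked here for illustration), whose even and odd parts are $\cosh t$ and $\sinh t$.

```python
import math


def even_part(f):
    """Return the even part of f: (f(t) + f(-t)) / 2."""
    return lambda t: 0.5 * (f(t) + f(-t))


def odd_part(f):
    """Return the odd part of f: (f(t) - f(-t)) / 2."""
    return lambda t: 0.5 * (f(t) - f(-t))


f = math.exp
fe, fo = even_part(f), odd_part(f)

for t in (0.3, 1.0, 2.5):
    print(f"t = {t}: fe = {fe(t):.6f} (cosh = {math.cosh(t):.6f}), "
          f"fo = {fo(t):.6f} (sinh = {math.sinh(t):.6f}), "
          f"fe + fo = {fe(t) + fo(t):.6f} (f = {f(t):.6f})")
```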

Theorem 4

Every real valued function in $L^{2}([-\tau,\tau])$ admits a real valued Fourier series with some sin and/or cos terms. i.e. the complex form will always simplify to sin and cos terms.

Sketch of proof: Since $f$ is real, it can be decomposed into an even and odd part. The even part gives us a cos series and the odd part gives us a sin series.

Need to know how to integrate sin and cos...

It is Integration By Parts…

What is $s_{n}$ in Theorem 3? In this example, somehow . We find in order to solve the problem .

https://piazza.com/class/lr4fiutz6vg69z/post/441

In the prof’s notes, he did for .

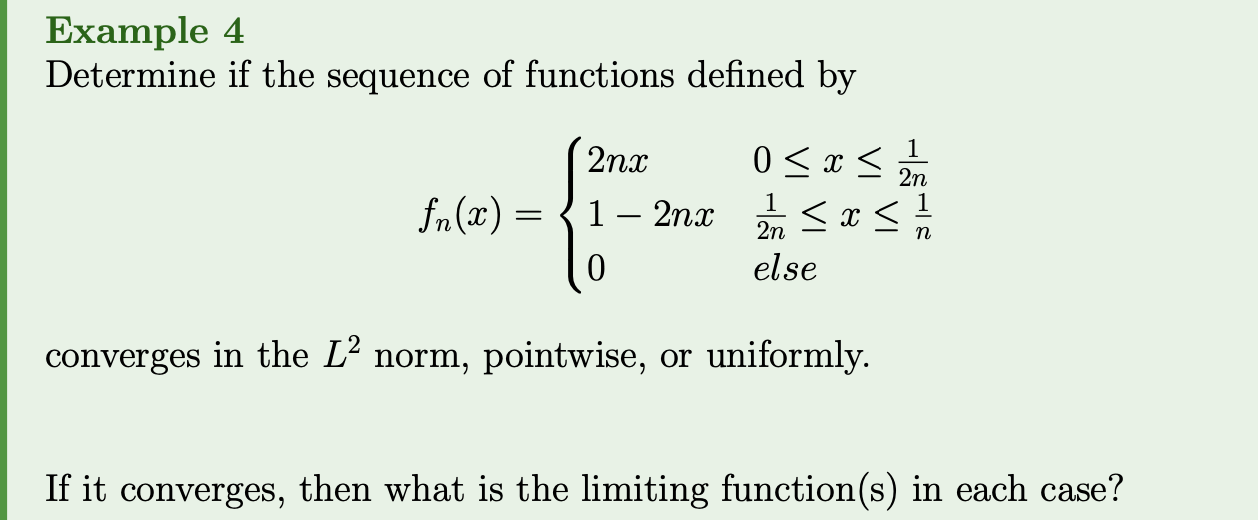

Types of Convergence:

In MATH119 you dealt with the convergence of series of real numbers. Things like

In these cases there is a natural way to define convergence (though this is not actually unique).

With sums of functions like this

there are many different ways to define convergence:

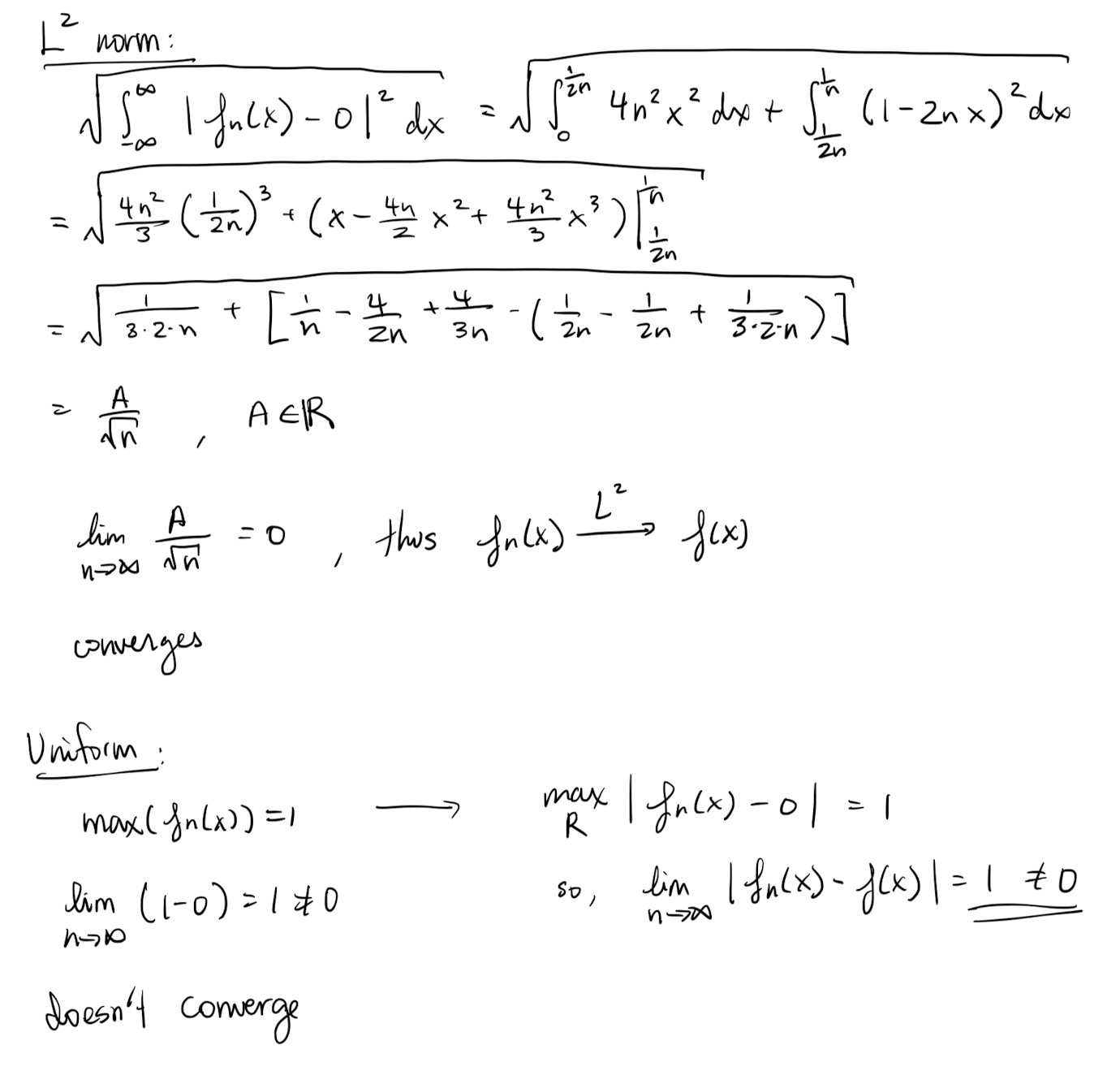

Definition 3: Some Types of Convergence

If $\{f_n\}$ is a sequence of functions defined on $[a,b]$ then we say that

- the sequence converges in the $L^2$ norm, or converges in the mean or converges almost everywhere, to $f$ if

$$\lim_{n\to\infty}\int_a^b |f_n(t)-f(t)|^2\,dt = 0$$

tldr; the “average error” goes to 0.

- the sequence pointwise converges to $f$, if for any $t \in [a,b]$

$$\lim_{n\to\infty}|f_n(t)-f(t)| = 0$$

tldr; the error at each point goes to 0.

- The sequence uniformly converges to $f$ if

$$\lim_{n\to\infty}\max_{t\in[a,b]}|f_n(t)-f(t)| = 0$$

If the maximum does not exist then we replace it with the smallest upper bound (called the sup).

tldr; the maximum error converges to 0.

Note that each of the things we take the limit of are just numbers!

Metanote, the first bullet point can be called by all those names only when we talk about functions on a finite interval (that is the case for this course).
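The difference between these notions shows up clearly in a numerical experiment. The sketch below is an illustration under assumptions, not part of the lecture: it uses the square wave equal to $-1$ on $(-\pi,0)$ and $+1$ on $(0,\pi)$, whose sine series is $\frac{4}{\pi}\sum_{k \text{ odd}} \frac{\sin(kt)}{k}$, and estimates both the $L^2$ error and the maximum error of a few partial sums on a sample grid.

```python
import math


def square(t):
    """Square wave on (-pi, pi): -1 for t < 0 and +1 for t > 0."""
    return 1.0 if t > 0 else -1.0


def partial_sum(t, n_max):
    """Partial Fourier sum using the odd harmonics k = 1, 3, ..., up to n_max."""
    return (4 / math.pi) * sum(math.sin(k * t) / k for k in range(1, n_max + 1, 2))


def errors(n_max, samples=4000):
    """Return (L2 error, maximum error on the grid) for the partial sum up to n_max."""
    h = 2 * math.pi / samples
    points = [-math.pi + (k + 0.5) * h for k in range(samples)]
    diffs = [abs(square(t) - partial_sum(t, n_max)) for t in points]
    l2_error = math.sqrt(sum(d * d for d in diffs) * h)
    return l2_error, max(diffs)


results = {n_max: errors(n_max) for n_max in (5, 25, 125)}
for n_max, (l2_error, max_error) in results.items():
    print(f"harmonics up to {n_max:>3}: L2 error = {l2_error:.3f}, max error = {max_error:.3f}")
```

The $L^2$ error shrinks as harmonics are added, while the maximum error stays close to 1 because every partial sum passes through 0 at the jump. So this series converges in the $L^2$ norm but not uniformly.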

- https://piazza.com/class/lr4fiutz6vg69z/post/467

- https://piazza.com/class/lr4fiutz6vg69z/post/443: Reasoning for these two cases , .

Convergence of Fourier series:

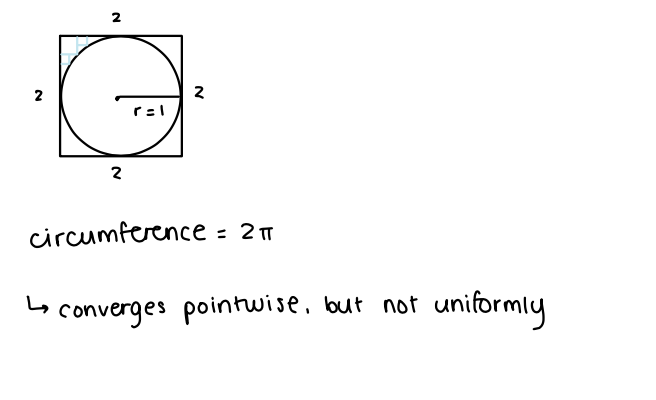

Before talking about the convergence of Fourier series, we need to introduce some new definitions.

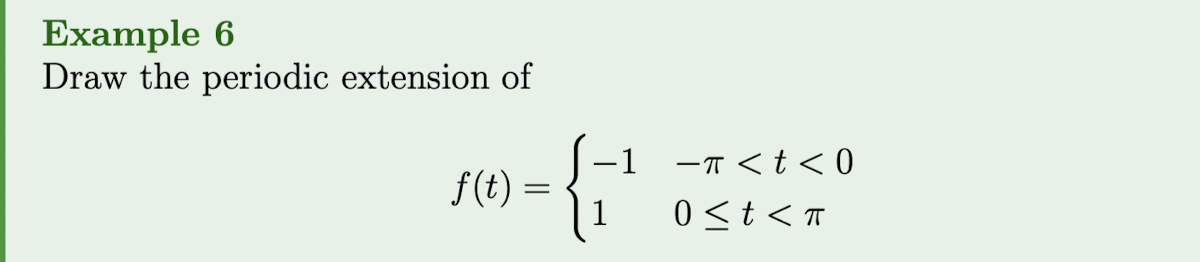

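Definition 4: Piecewise $C^{1}$

A function $f$ is piecewise $C^{1}$ (PWC1) on the interval $[a,b]$ if there is a finite partition $a = t_0 < t_1 < \dots < t_n = b$ such that:

- $f'$ exists on each interval $(t_{i-1}, t_i)$,

- $f'$ is continuous on each interval $(t_{i-1}, t_i)$,

- $f$ and $f'$ are bounded on each interval $(t_{i-1}, t_i)$.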

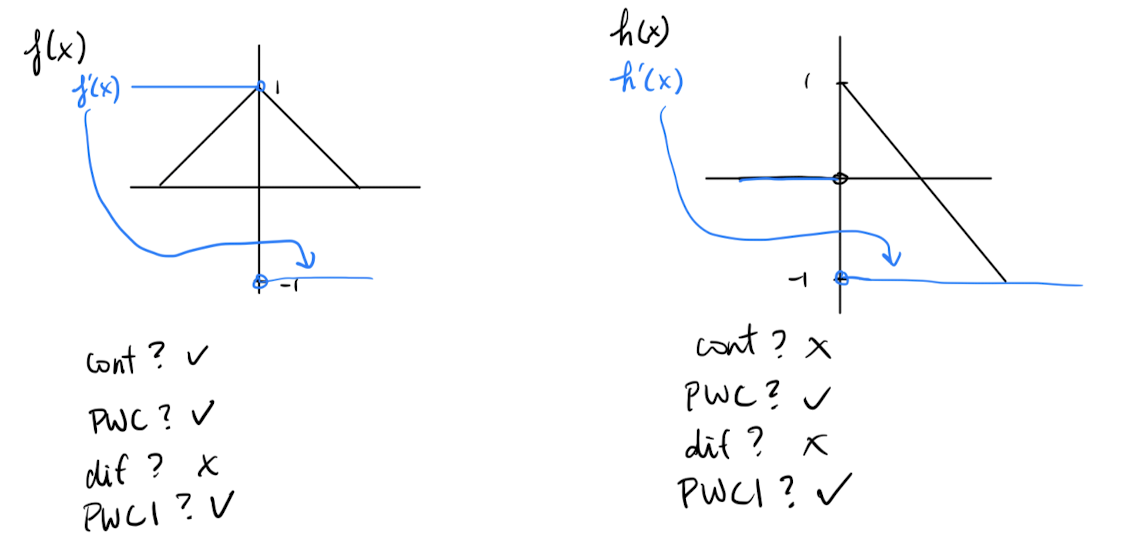

How to determine a function is differentiable and why f(x) and h(x) in the examples are not differentiable?

https://piazza.com/class/lr4fiutz6vg69z/post/478

Differentiable = has a derivative. To see why, ask yourself what the slope is at .

- This function is continuous everywhere but is not differentiable at , where there’s a sharp corner due to the absolute value function.

- This function is continuous everywhere, including at , since the root is defined for negative values of .

- Here, is the unit step function, which equals 1 for and 0 for .

- Similar to , is continuous everywhere but is not differentiable at , where it has a sharp corner due to the absolute value function.

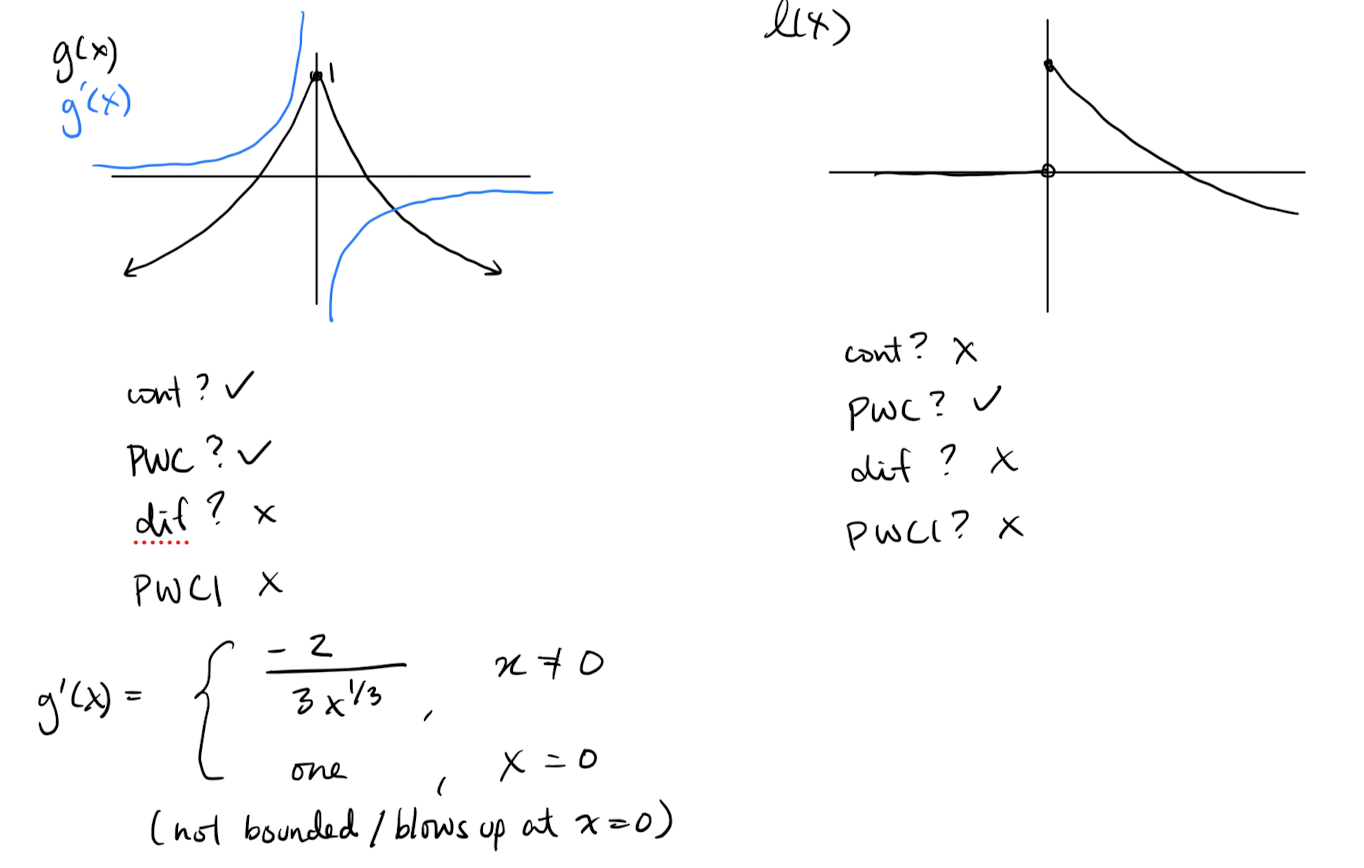

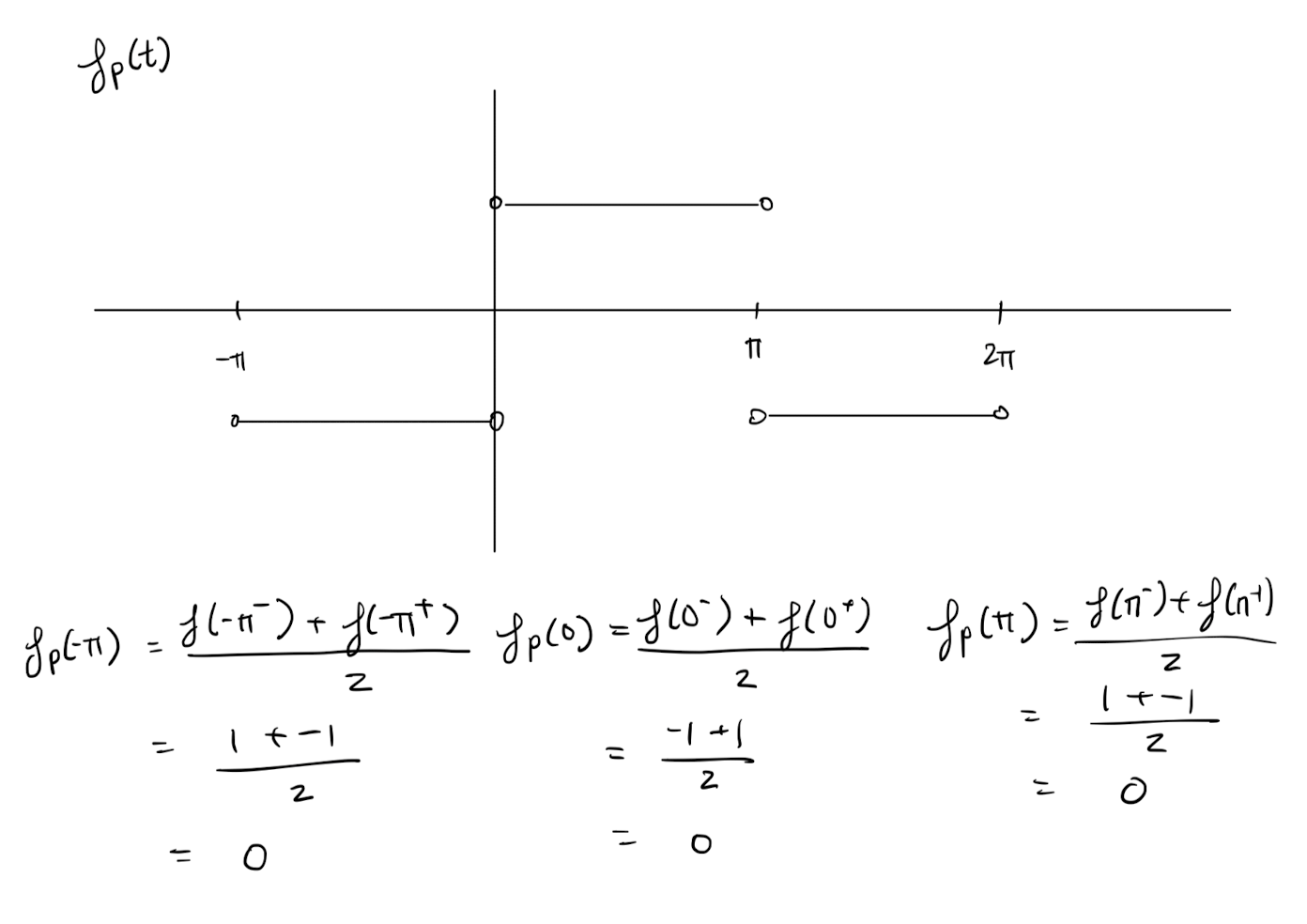

Definition 5: Periodic Extension

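The periodic extension of a function $f$ defined on $[-\tau,\tau]$ is the periodic function $\tilde{f}$ such that

- $\tilde{f}(t) = f(t)$ for $t \in (-\tau,\tau)$ where $f$ is continuous.

- $\tilde{f}(t) = \frac{f(t^+)+f(t^-)}{2}$ for $t$ where $f$ is not continuous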

Theorem 5: Convergence of Fourier series

Let $\tilde{f}$ be the periodic extension of a function $f \in L^{2}([-\tau,\tau])$.

- The Fourier series of $f$ converges in the $L^2$ norm (also in the mean and almost everywhere) to $f$ (and also $\tilde{f}$) on any finite subinterval of $\mathbb{R}$.

- If $f$ is piecewise $C^{1}$ then the Fourier series of $f$ converges pointwise to $\tilde{f}(t)$ for all $t \in \mathbb{R}$

- If $\tilde{f}$ is piecewise $C^{1}$ and continuous then the Fourier series of $f$ converges uniformly on any finite interval of $\mathbb{R}$.

The proof is beyond the scope of this course.

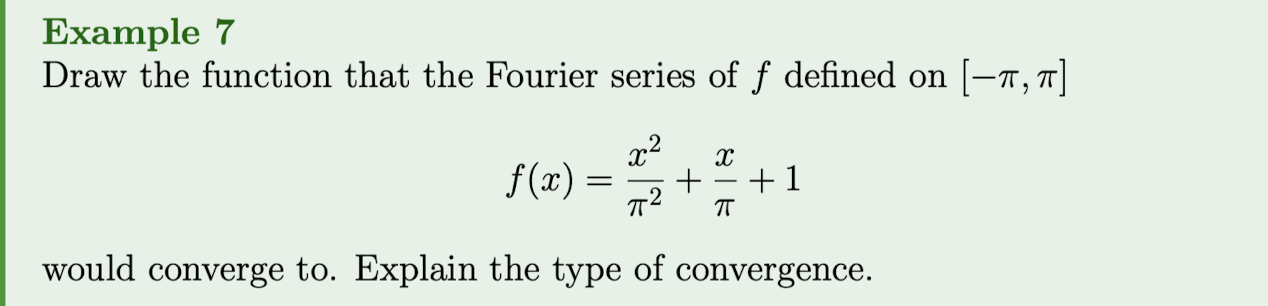

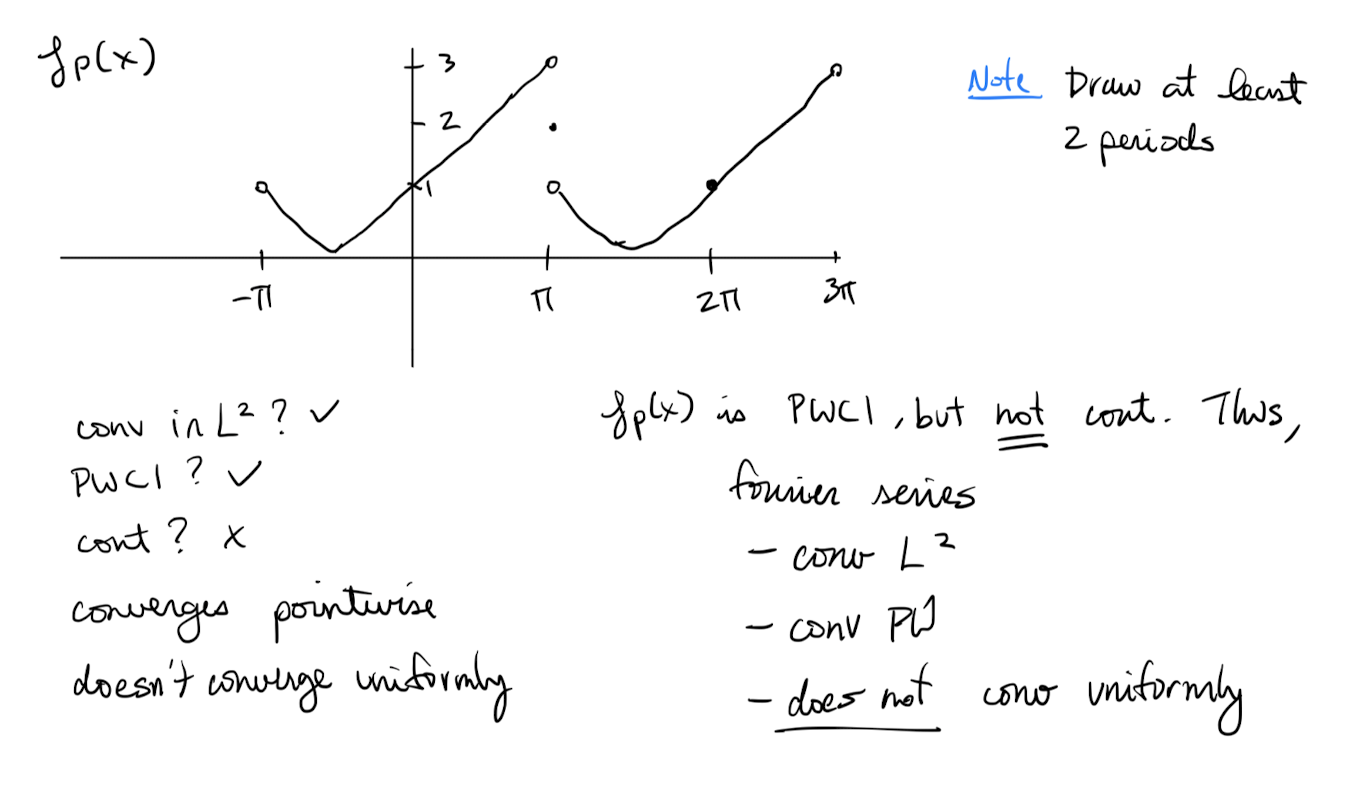

Are we supposed to know how to graph this????

By heart?

Why is it PWC1? and not continuous?

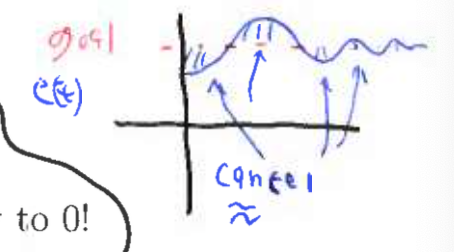

Definition 6: Gibbs Phenomenon

For a function $f$, if its periodic extension is not continuous at some point $t_0$ then the truncated Fourier series of $f$ will have growing oscillations near the point $t_0$. This is called Gibbs Phenomenon.

These oscillations do not appear in the infinite sum.

Gibbs Phenomenon is a characteristic behaviour observed in truncated Fourier series when dealing with functions that have discontinuities. Here's a breakdown of the definition:

- Function with Periodic Extension: Consider a function $f$ defined on an interval, with a periodic extension that repeats the function periodically outside this interval.

- Discontinuity at $t_0$: If the periodic extension of $f$ is not continuous at some point $t_0$ within its period, then we have a discontinuity at $t_0$.

- Truncated Fourier Series: When we approximate $f$ using a truncated Fourier series, we’re essentially using a finite sum of Fourier coefficients to approximate the function. This finite sum doesn’t capture all the frequency components of the original function.

- Growth of Oscillations: Near the point $t_0$ where the periodic extension is discontinuous, the truncated Fourier series will exhibit oscillations that grow as we approach $t_0$.

- Gibbs Phenomenon: This growth of oscillations near discontinuities in the periodic extension is known as Gibbs Phenomenon.

- Absence in the Infinite Sum: Interestingly, despite these growing oscillations in the truncated series, they do not appear in the infinite sum of the Fourier series. The infinite sum captures all the frequency components of the original function and thus provides a smoother approximation.
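The overshoot can be measured directly. As a sketch under assumptions, take the square wave equal to $-1$ on $(-\pi,0)$ and $+1$ on $(0,\pi)$, with series $\frac{4}{\pi}\sum_{i\geq 1}\frac{\sin((2i-1)t)}{2i-1}$. The derivative of the $m$-term partial sum is $\frac{2}{\pi}\frac{\sin(2mt)}{\sin t}$, so its first peak to the right of the jump sits at $t = \pi/(2m)$. The code evaluates the partial sum there for several values of $m$.

```python
import math


def square_partial_sum(t, m):
    """Sum of the first m nonzero terms of the series of the +/-1 square wave."""
    return (4 / math.pi) * sum(
        math.sin((2 * i - 1) * t) / (2 * i - 1) for i in range(1, m + 1)
    )


def first_peak(m):
    """Value of the m-term partial sum at its first peak, t = pi / (2m)."""
    return square_partial_sum(math.pi / (2 * m), m)


peaks = {m: first_peak(m) for m in (10, 50, 200)}
for m, peak in peaks.items():
    print(f"{m:>3} terms: peak at t = {math.pi / (2 * m):.4f} has height {peak:.4f}")
```

The peak height stays near 1.18 however many terms are kept, an overshoot of roughly 9% of the jump of size 2. What changes is the location of the peak, which moves toward the discontinuity, and this is why the overshoot does not survive at any fixed $t$ in the infinite sum.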

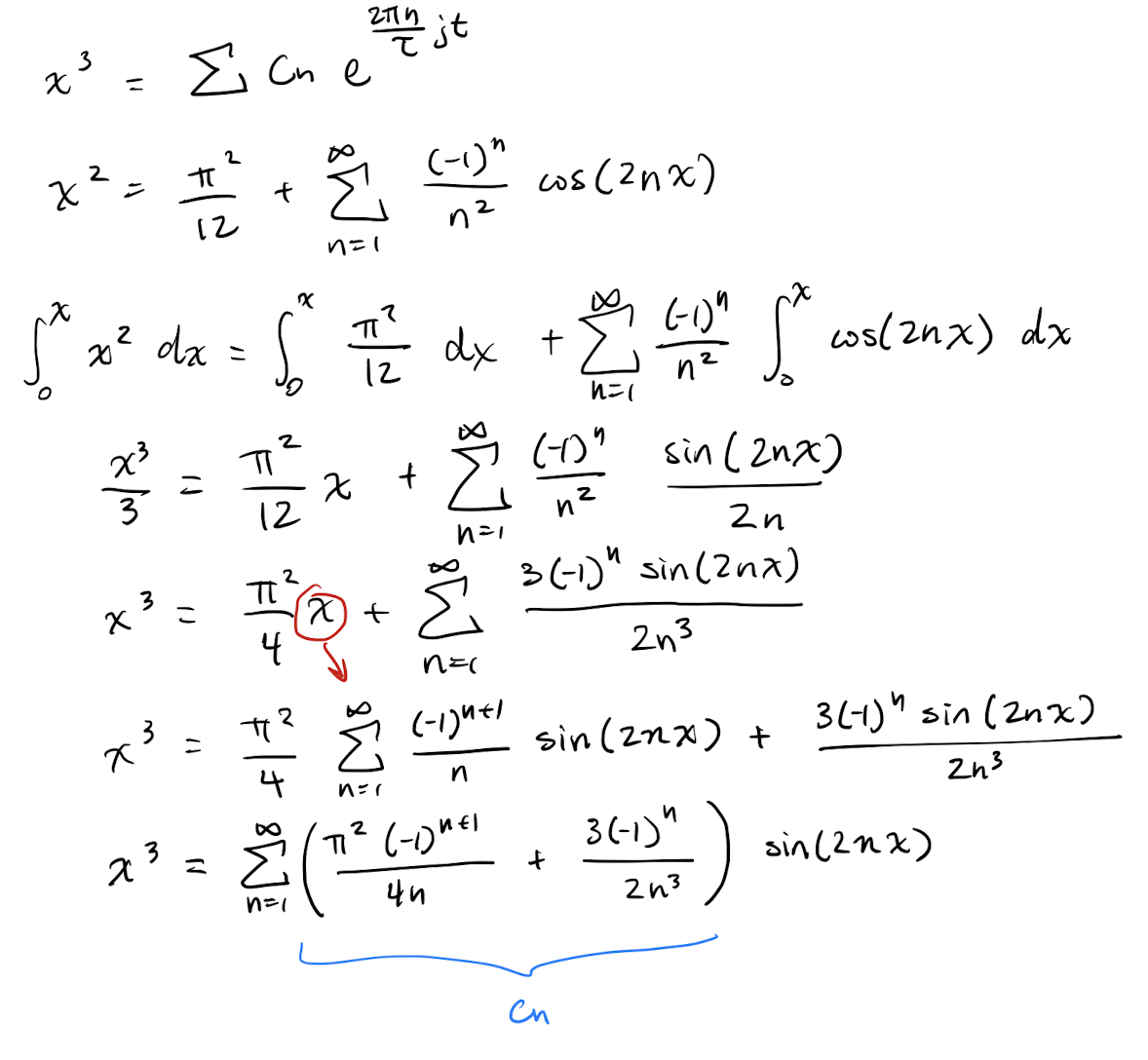

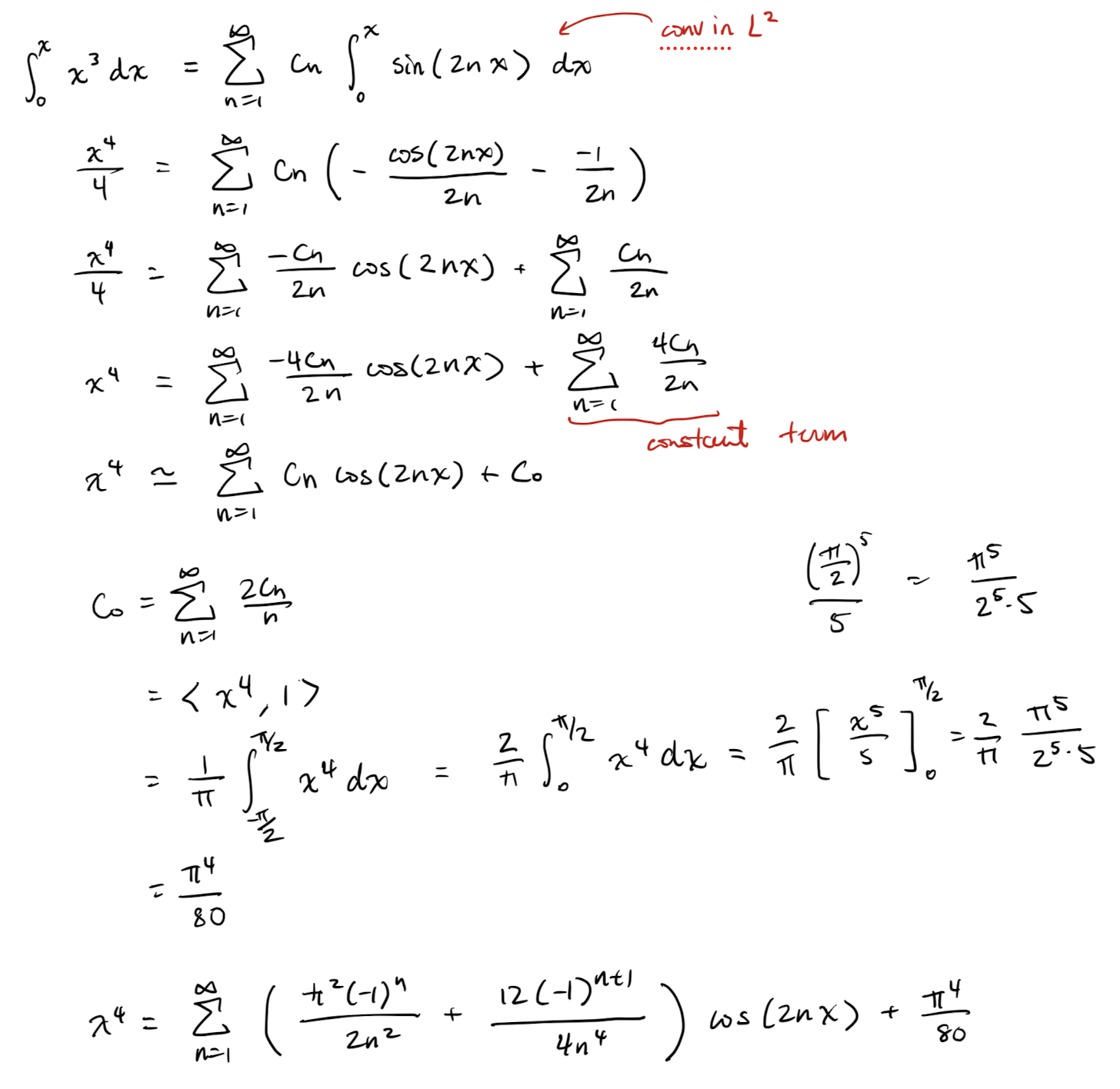

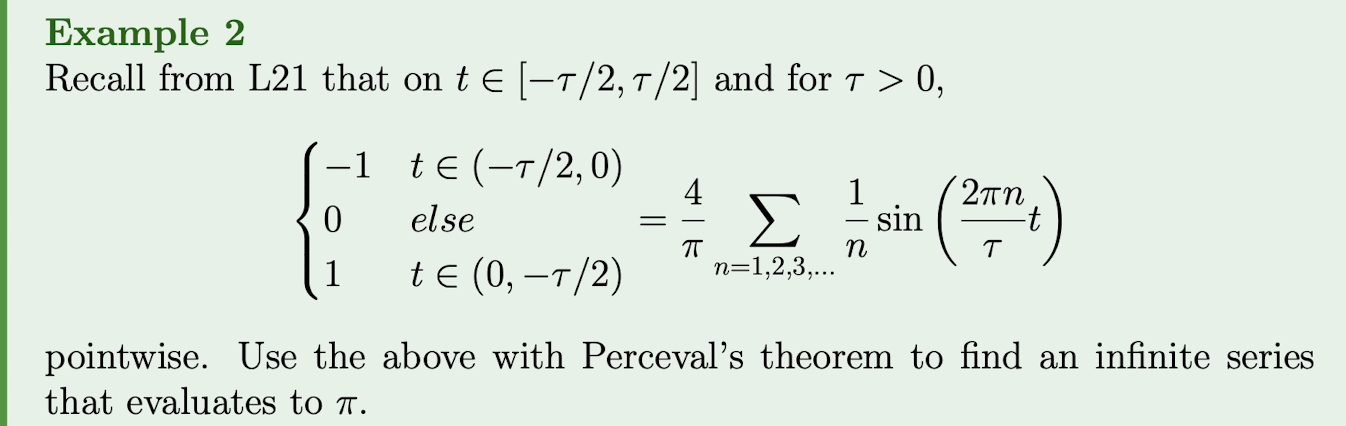

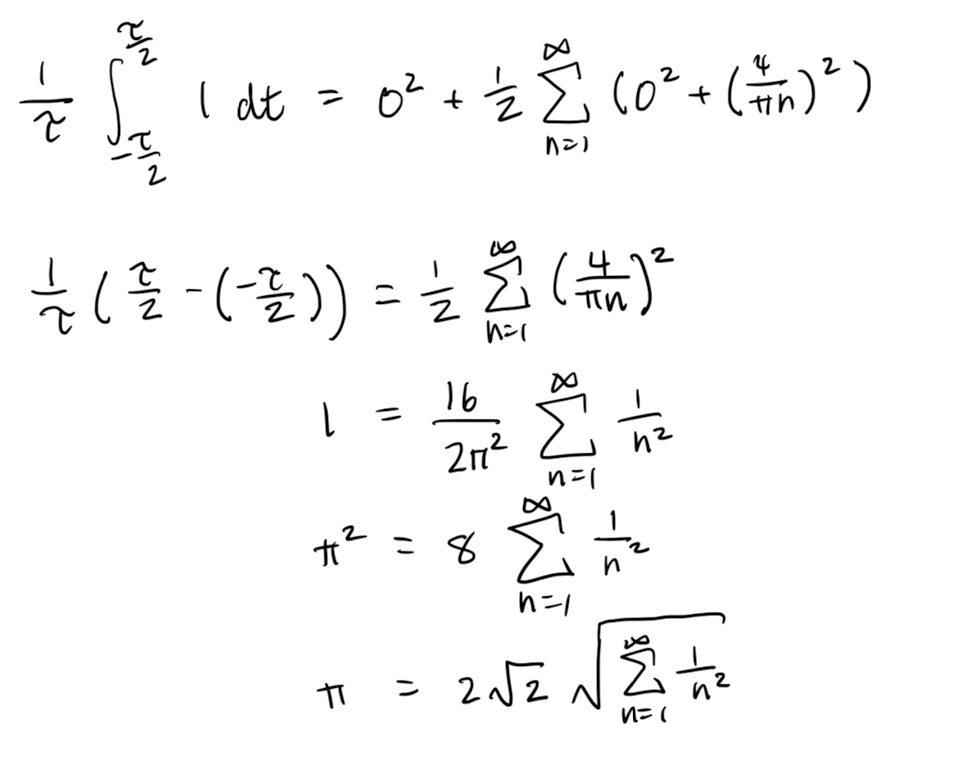

Lecture 23: Differentiating and integrating Fourier series, Parseval and Dirichlet theorems and Fourier transform

Lecture goals:

- Know when you can term-wise integrate and differentiate Fourier series,

- what the Parseval and Dirichlet theorems state

- and how to use Parseval’s theorem.

Integrating and differentiating Fourier series:

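For general series of pointwise convergent functions

$$f(t) = \sum_{n=1}^{\infty} f_n(t)$$

it is NOT true that

$$\int f(t)\,dt = \sum_{n=1}^{\infty}\int f_n(t)\,dt$$

or that

$$\frac{d}{dt}f(t) = \sum_{n=1}^{\infty}\frac{d}{dt}f_n(t)$$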

Theorem 1: Term-by-term integration of Fourier series

The Fourier series of a PWC1 periodic function can be term-by-term integrated to give a convergent series that pointwise converges (and sometimes uniformly) over any finite interval.

tldr; If PWC1, integral has uniform convergence. Else, pointwise convergence.

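Explicitly if $f$ is in $L^{2}([-\tau,\tau])$ and

$$f(t) = \sum_{n=-\infty}^{\infty} c_n e^{jn\pi t/\tau}$$

then

$$\int_{a}^{t} f(x)\,dx = \sum_{n=-\infty}^{\infty} c_n\int_{a}^{t} e^{jn\pi x/\tau}\,dx$$

and the convergence is at least pointwise.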

Note 1: It is because of this theorem, that we can guarantee the existence of the Fourier coefficients derived in L21.

Note 2: If the Fourier series has a non-trivial constant term $c_0$ then when integrated we obtain a term $c_0 t$, which means that the RHS might not be a Fourier series.
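Term-by-term integration can be sanity checked on a series with no constant term. The sketch below assumes the sine series $t = \sum_{n\geq 1} \frac{2(-1)^{n+1}}{n}\sin(nt)$ on $(-\pi,\pi)$, an example chosen here for illustration. Integrating each term from $0$ to $t$ gives $\sum_{n\geq 1} \frac{2(-1)^{n+1}}{n^2}\left(1-\cos(nt)\right)$, which should equal $\int_0^t x\,dx = t^2/2$.

```python
import math


def integrated_series(t, terms=2000):
    """Partial sum of the term-by-term integral (from 0 to t) of the sine series of f(t) = t."""
    return sum(
        2 * (-1) ** (n + 1) * (1 - math.cos(n * t)) / n**2
        for n in range(1, terms + 1)
    )


for t in (0.5, 1.0, 2.0):
    print(f"t = {t}: integrated series = {integrated_series(t):.6f}, t^2/2 = {t * t / 2:.6f}")
```

The integrated coefficients decay like $1/n^2$ instead of $1/n$, which is why the integrated series converges more comfortably than the original one.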

Theorem 2: Term-by-term differentiation of Fourier series

Let

- $f$ be a PWC1 periodic function,