Thread

A thread is a set of instructions that represents a single unit of concurrent programming.

A thread of execution is a sequence of executable instructions that can run on a CPU.

Each thread has its own state and its own local variables:

- Execution state (ready, running, waiting)

- Saved context when not running

- Execution stack

- Local variables

Multithreaded programs use more than one thread (at least some of the time):

- A program begins with a single initial thread (the one that runs the main method)

- Threads can be created and destroyed dynamically while the program runs

In UNIX systems, the child usually inherits its parent’s priority…

Benefits of threads:

- Takes far less time to create a new thread in an existing process than to create a brand-new process

- Takes less time to terminate a thread than a process

- Takes less time to switch between two threads within the same process than to switch between processes

- Threads enhance efficiency in communication between different executing programs.

- In most OSes, communication between independent processes requires the intervention of the kernel to provide protection and the mechanisms needed for communication. Since threads within the same process share memory and files, they can communicate with each other without involving the kernel!

What do threads look like in memory?

Like processes, threads have execution states and may synchronize with one another.

Uses of threads in a single-user multiprocessing system:

- Foreground and background work

- Asynchronous processing

- Speed of execution

- On a multiprocessor system, multiple threads in a process can execute simultaneously, so even if one thread is blocked on an I/O operation, another thread can be executing

- Modular program structure

- Some programs are large and involve many complex activities; structuring them as threads can make them easier to design and implement

Some actions affect all of the threads in a process, so the OS must manage them at the process level rather than keeping all of the information in thread-level data structures.

Example: Swapping a process's address space out of main memory to make room for the address space of another process. Since all threads in a process share the same address space, all threads are suspended at the same time.

Thread Functionality

Similarly to processes, threads have execution states and may synchronize with each other. Let’s look at two aspects of thread functionality:

Thread States

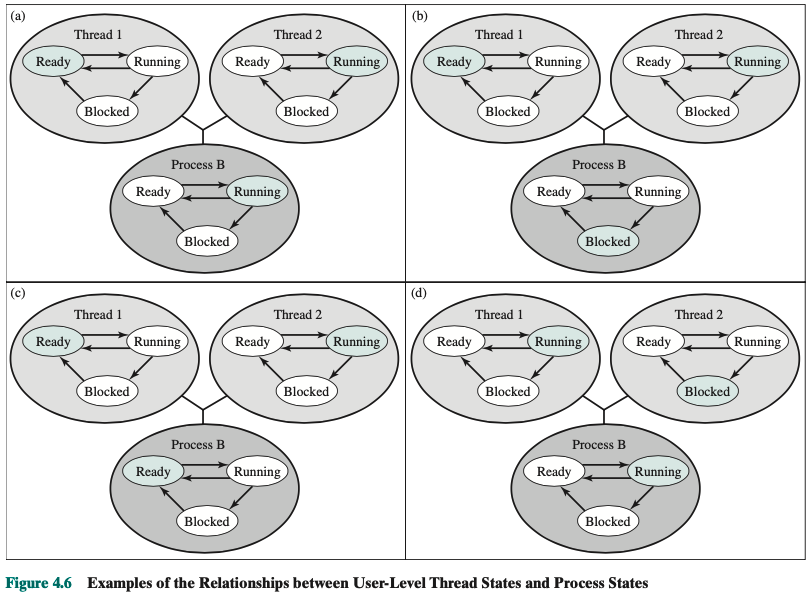

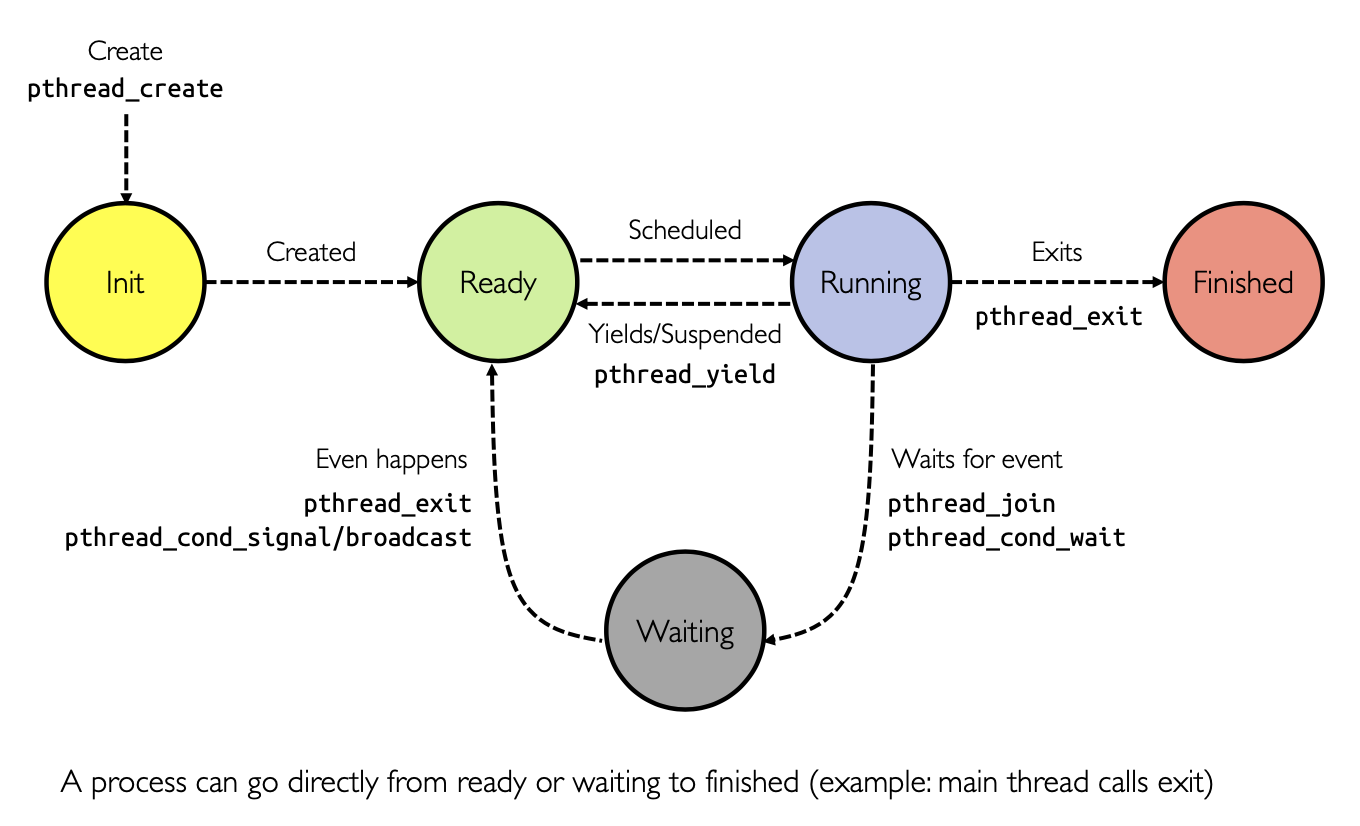

Key states for a thread: Running, Ready, and Blocked.

Why no suspend state?

It does not make sense, because suspension is a process-level concept: if a process is swapped out, all of its threads are necessarily swapped out because they all share the address space of the process.

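Four basic thread operations are associated with a change in thread state:

- Spawn

  - A thread can spawn another thread within the same process

  - Spawning a new process typically also spawns a thread for that process

- Block

  - A thread that needs to wait for an event blocks (its registers, program counter, and stack pointer are saved), and the processor can run another Ready thread

- Unblock

  - When the event the thread was waiting for occurs, the thread moves back to the Ready queue

- Finish

  - Deallocate register context and stacks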

Does the blocking of a thread result in the blocking of the entire process? That is, if one thread in a process is blocked, does this prevent any other thread in the same process from running, even if that other thread is in the Ready state?

We’ll return to this issue in the discussion of user-level versus kernel-level threads.

Performance benefits of threads that do not block an entire process:

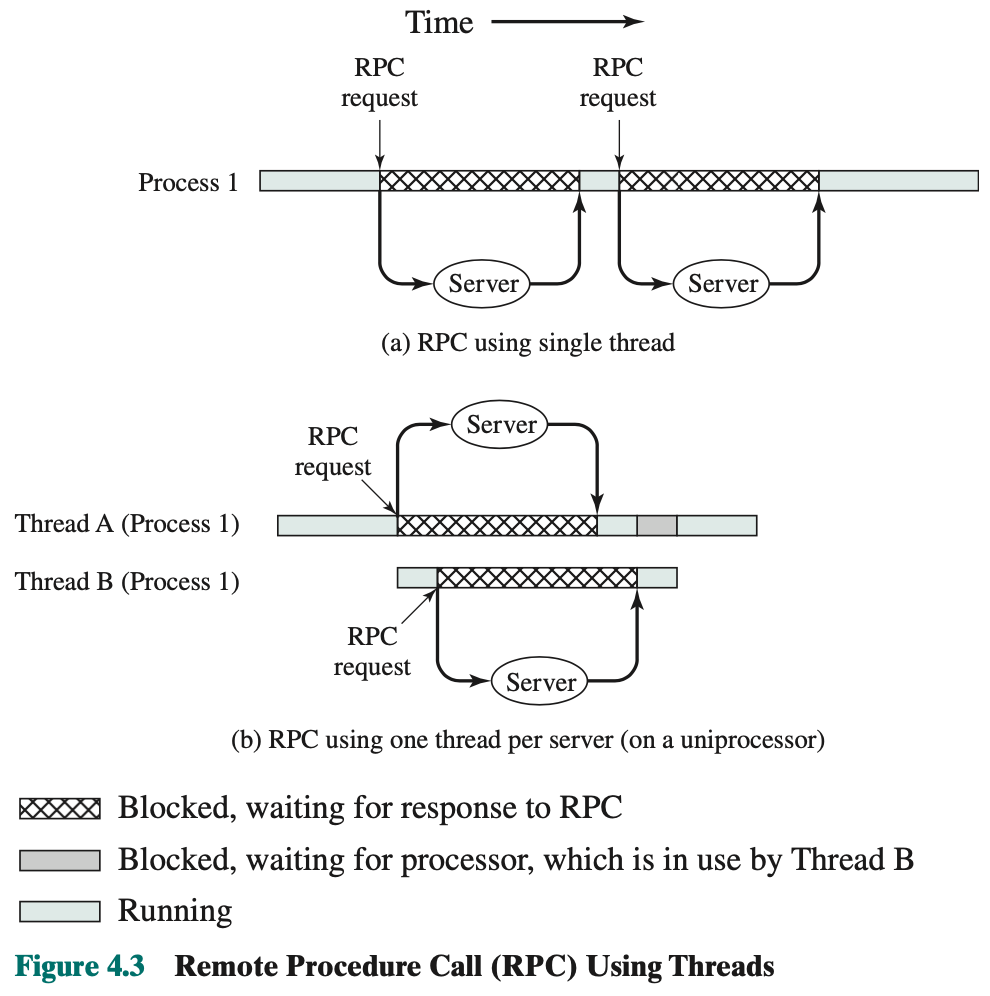

A program performs two remote procedure calls (RPCs) to two different hosts to obtain a combined result. With a single thread, the results are obtained in sequence, so the program has to wait for a response from each server in turn.

Rewriting the program to use a separate thread for each RPC results in a substantial speedup.

I don't understand...

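Note that if this program runs on a uniprocessor, the requests must be generated sequentially and the results processed in sequence; however, the program waits concurrently for the two replies. Most of the elapsed time is spent waiting for the servers, not using the CPU. Overlapping the two waits is what produces the speedup: the total wait is roughly the longer of the two round trips rather than their sum.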

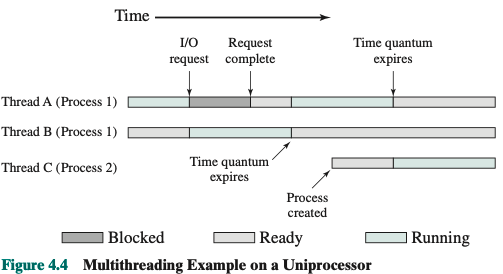

On a uniprocessor, multiprogramming enables the interleaving of multiple threads within multiple processes. In the example of Figure 4.4, three threads in two processes are interleaved on the processor. Execution passes from one thread to another either when the currently running thread is blocked or when its time slice is exhausted.

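Key point: these RPCs are independent of each other (neither needs the other's result), which is why they can be issued from separate threads.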

Thread Synchronization

Threads in the same process share the same address space and other resources, such as open files. Any change to a resource made by one thread affects the environment of the other threads in the same process. It is therefore necessary to synchronize their activities so that they don’t interfere with each other or corrupt data.

Types of Threads

There are two broad categories of thread implementation: user-level threads (ULTs) and kernel-level threads (KLTs).

User-Level Threads and Kernel-Level Threads

User-Level Threads:

- All of the work of thread management is done by the application; the kernel is not aware that the threads exist

- The threads library saves and restores each thread's context (registers, program counter, stack pointer) and manages its stack whenever control is passed to the library by a procedure call. When a thread is created, the library sets up the data structures for that thread and then passes control to one of the threads in the Ready state.

- Thread management takes place in user space, within a single process (Figure 4.5a)

Note

A process can be interrupted, either by exhausting its time slice or by being preempted by a higher-priority process, while it is executing code in the threads library.

Thus, a process may be in the midst of a thread switch from one thread to another when interrupted. When that process is resumed, execution continues within the threads library, which completes the thread switch and transfers control to another thread within that process.

Advantages of using ULTs instead of KLTs:

- Thread switching does not require kernel-mode privileges, because all of the thread management data structures are in the user address space of the process. This saves the overhead of two mode switches (user to kernel, and kernel back to user).

- Scheduling can be application-specific. The scheduling algorithm can be tailored to a specific application without disturbing the underlying OS scheduler.

- ULTs can run on any OS; no changes to the kernel are needed to support them. The threads library is a set of application-level functions shared by all applications. Interesting!

Disadvantages:

- In a typical OS, many system calls are blocking. As a result, when a ULT executes a system call, not only is that thread blocked, but also all of the threads within the process are blocked.

- In a pure ULT strategy, a multithreaded application cannot take advantage of multiprocessing. A kernel assigns one process to only one processor at a time. Therefore, only a single thread within a process can execute at a time. In effect, we have application-level multiprogramming within a single process. While this multiprogramming can result in a significant speedup of the application, there are applications that would benefit from the ability to execute portions of code simultaneously. #to-understand

KLT

All of the work of thread management is done by the kernel. There is no thread management code in the application level, simply an application programming interface (API) to the kernel thread facility. Windows is an example of this approach.

Scheduling by the kernel is done on a thread basis.

Advantages:

- the kernel can simultaneously schedule multiple threads from the same process on multiple processors

- if one thread in a process is blocked, the kernel can schedule another thread of the same process

- kernel routines themselves can be multithreaded

Disadvantage:

- the transfer of control from one thread to another within the same process requires a mode switch to the kernel

POSIX Threads

pthreads refers to the POSIX standard that defines thread behaviour in UNIX.

pthread_create: creates a new thread to run a function

pthread_exit: quits the calling thread and cleans up, waking up the joiner if any

- To allow other threads to continue execution, the main thread should terminate by calling pthread_exit() rather than exit(3).

pthread_join(): waits for a specific thread to exit, then returns (typically called by the parent thread to wait for its children)

pthread_yield: relinquishes the CPU voluntarily (non-standard; the portable POSIX call is sched_yield())

Thread Lifecycle

The difference between the Ready and Waiting states: a Ready thread can start executing as soon as it is given the CPU. A Waiting thread would not necessarily make progress even if it were given the CPU, because it may still be waiting for an event (such as I/O completion).