CS343: Concurrent and Parallel Programming

Hardest course in 3B. Taught by Peter Buhr and in uC++.

Course website: https://student.cs.uwaterloo.ca/~cs343/F24/index.shtml

Concepts

- Control Flow

- Exception Handling

- Coroutine

- Task

- Mutual Exclusion

- Busy Waiting

- Barging

- Lock

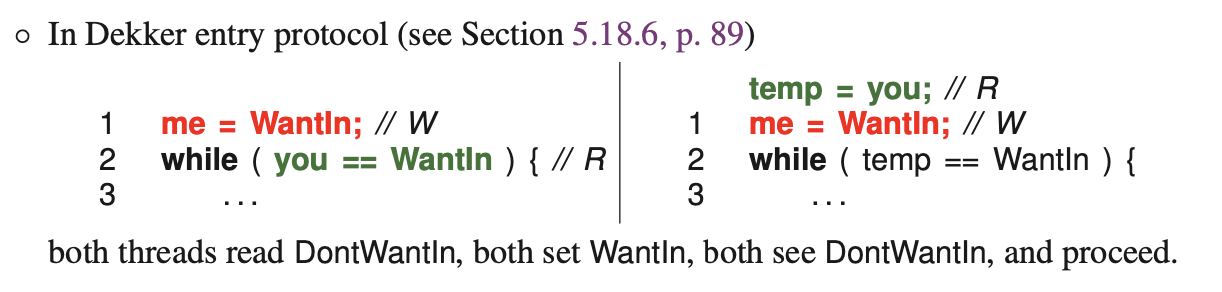

- Dekker’s Algorithm

- Racing

- N-Thread Mutual Exclusion

- Skipped two: Knuth/De Bruijn && Eisenberg and McGuire

- Bakery Algorithm

- Arbiter

- Owner Lock

- Synchronization Lock

- Condition Lock

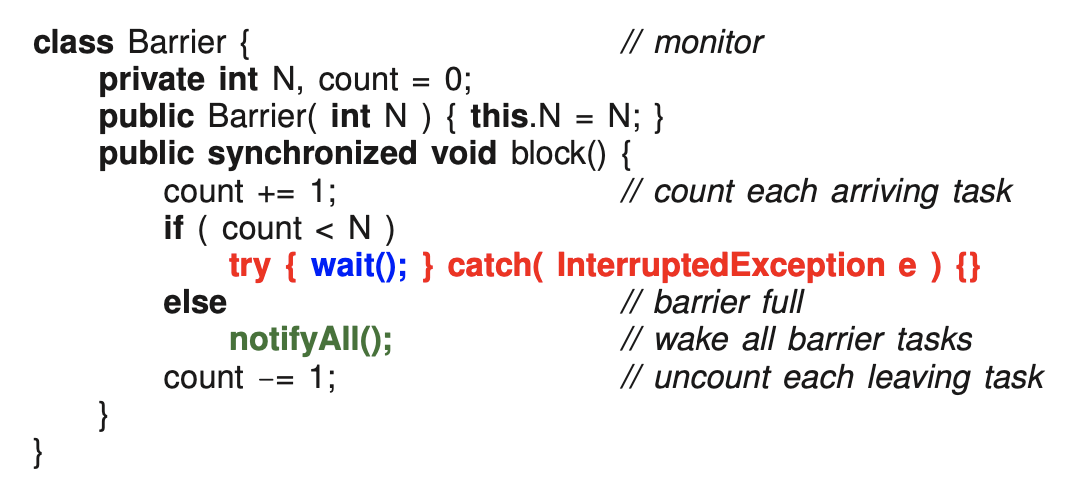

- Barrier

- await

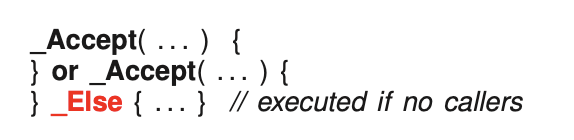

- _Accept

- Monitor (Synchronization)

- External Scheduling

- Internal Scheduling

- I skipped 9.5 External and Internal Scheduling : combining both (note)

- uCondition

- Deadlock

- Future

- Java Monitor

- Lock-Free

- ABA problem

- GPU

- Go

Final

Dec. 14 (Sat.) 2024 @ 9:00-11:30 am in STC 0010, 0020

Note, every instructor is unique emphasizing different points and teaching at different speeds. As a result, some previous exams may have questions about material not covered in the current term and/or missing topics relevant for this term’s exam.

The majority of the final exam covers the material in the course notes from Section 6.3.4 to Section 11.6, inclusive, the section in the course textbook on automatic/implicit-signal monitors, and assignments 4-6.

Goal: is 85%

Want to do well in this course because it’s interesting. Ended up getting 75% on the final. Good enough.

Prep: Final Study CS343, Long Answers CS343

- F22

- W19

- F18

- W23 (long answers)

Thoughts on final and class:

- Long answer is literally the rafter problem from previous finals

- Same format Peter always uses

- CAS: write pseudocode in C

- Instead of banker’s algorithm he asked the other one

- Semaphore for long answer: external, internal and automatic scheduling

- I really enjoyed this course and going to lectures listening to Peter go on about concurrent programming. Amazing course that we have to take, I recommend taking it! 10/10 course. I enjoyed doing the assignments (for the most part) even if they were long asf. Just make sure to start them in advance so it wasn’t that bad looking back.

- Tips:

- Read his textbook whenever you don’t understand the course notes (go to the relevant sections)

- I started reading the textbook for the first half of the course, but as the term went on and we had more and more assignments from other classes, I stopped reading it (only the parts that confused me)

- Start assignments early!

- Find a group of friends, so you can learn together (for the assignments)

- Do practice exams from previous years, it’s the same format every year. Practice, practice and practice

Midterm

Oct 31 (Thu.) 2024 @ 19:00 - 21:00 in BMH1689 / MC4064

The midterm covers the material in the course notes from Section 1 to Section 6.3.3, inclusive, and Assignments 1-3

Our midterm: https://student.cs.uwaterloo.ca/~cs343/F24/exams.shtml

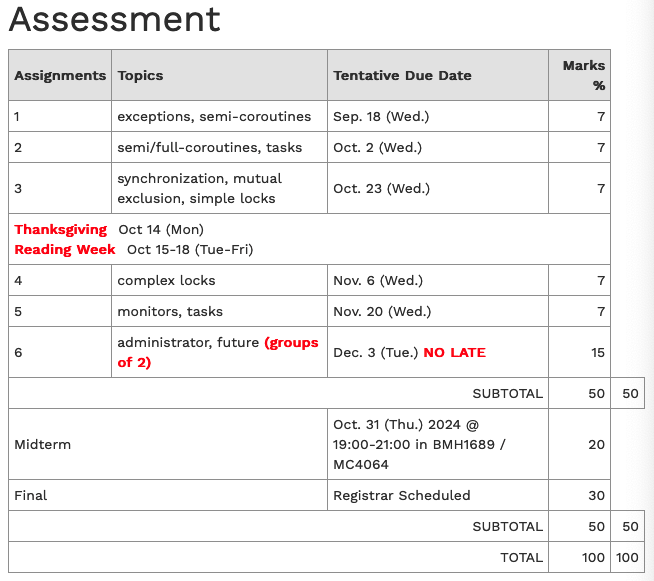

Assignments

To download files for an assignment use the following command, where D is an assignment number 1-6:

wget -m -np -nd -R 'index.html*' -P D https://student.cs.uwaterloo.ca/~cs343/assignments/D/

Lecture 1

Basically, Peter just randomly started roasting us.

Advanced Control Flow (Review)

- while and for are interchangeable

- make sure to give yourself eye-candy by outdenting the breaks within a loop

- A loop exit NEVER needs an else clause.

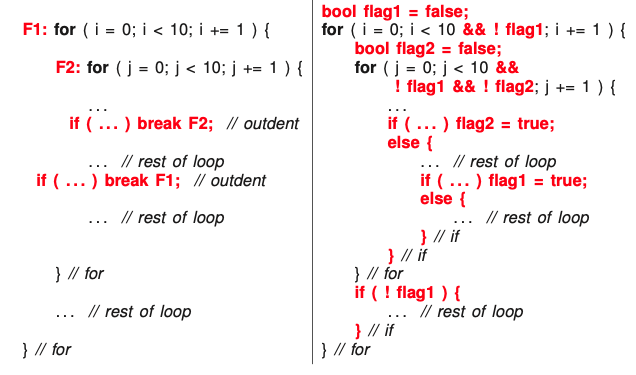

- Flag variables are the variable equivalent to a goto because they can be set/reset/tested at arbitrary locations in a program.

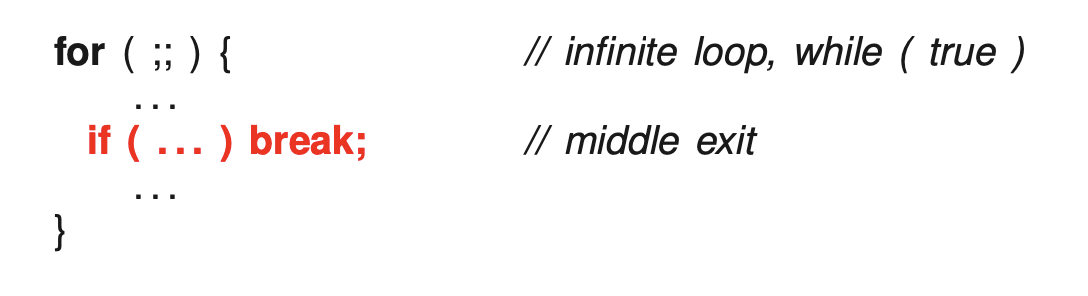

Multi-exit loop

- Multi-exit loop (or mid-test loop) has one or more exit locations occurring within the body of the loop, not just top (while) or bottom (do-while).

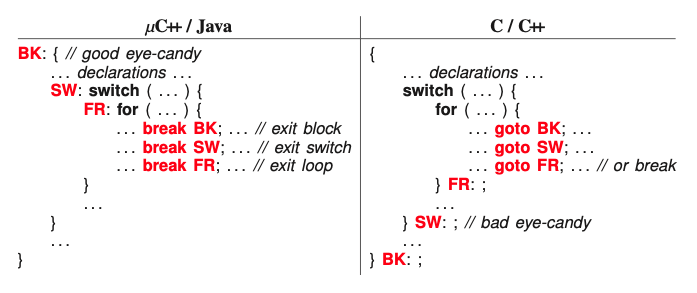

Static multi-level exit

- Static multi-level exit exits multiple control structures where exit point is known at compile time.

- Labelled exit (break/continue) provides this capability.

Note: in Java and micro C++, label breaks exist!

Note: in Java and micro C++, label breaks exist!

Why is it good practice to label all exists?

- Avoid making mistakes

- Avoid all the flag variables with multi-level exit!

-

BUT occasionally a flag variable is necessary!

- When you want to retain a variable from another block

-

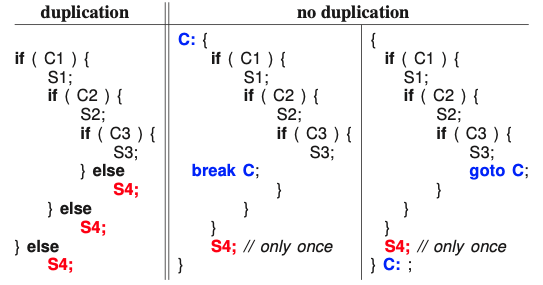

Other uses of multi-level exit to remove duplicate code.

- Normal and labelled break are a goto with limitations.

- Cannot loop (only forward branch) only loop constructs branch back.

- Cannot branch into a control structure

- Only use goto to perform static multi-level exit, e.g., simulate labelled break and continue. So basically, breaks are a more refined/controlled goto statement.

Understand goto

You need to understand the importance of goto’s. We have a bunch of goto in our program. More refined ones. Need to understand for example in a for loop or while, where the goto are.

Dynamic Memory Allocation

Do not use dynamic memory allocation if it can be done on the stack!

Dynamic memory allocations are for loosers.

Situations we need to use the stack:

- When storage must outlive the block in which it is allocated (ownership change).

- When the amount of data read is unknown.

- When an array of objects must be initialized via the object’s constructor and each element has a different value.

- When large local variables are allocated on a small stack.

Lecture 2

A catch is a sequel.

Lecture 3

Thu Sep 12

p.21 of notes:

-

Declare object before calling open(). It also separates normal code and exception handling.

-

Eiffel: contract programming

-

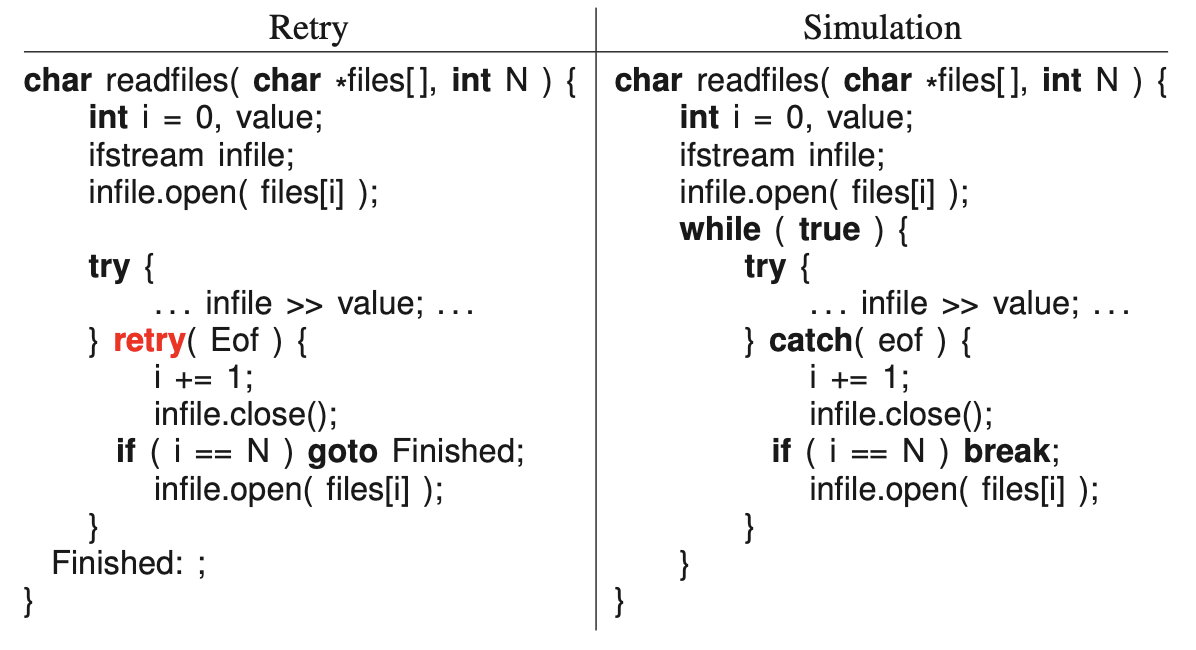

retry: only ends when your contract is fulfilled. So it will continue trying. Magically goes to try after retry block.

-

This is easy to simulate: trivial code which is demonstrate on the right

Resumption

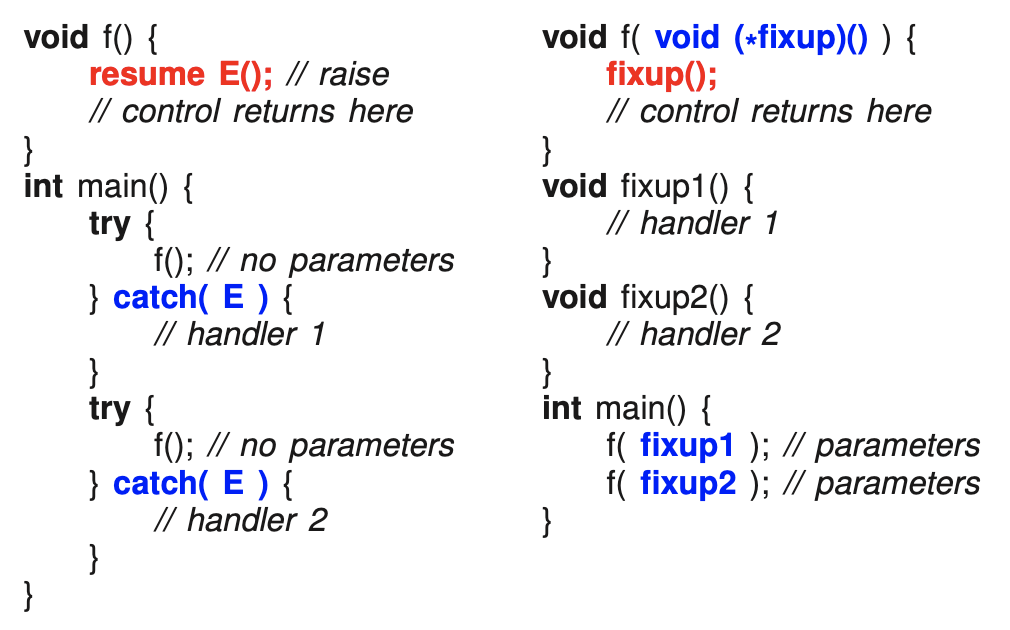

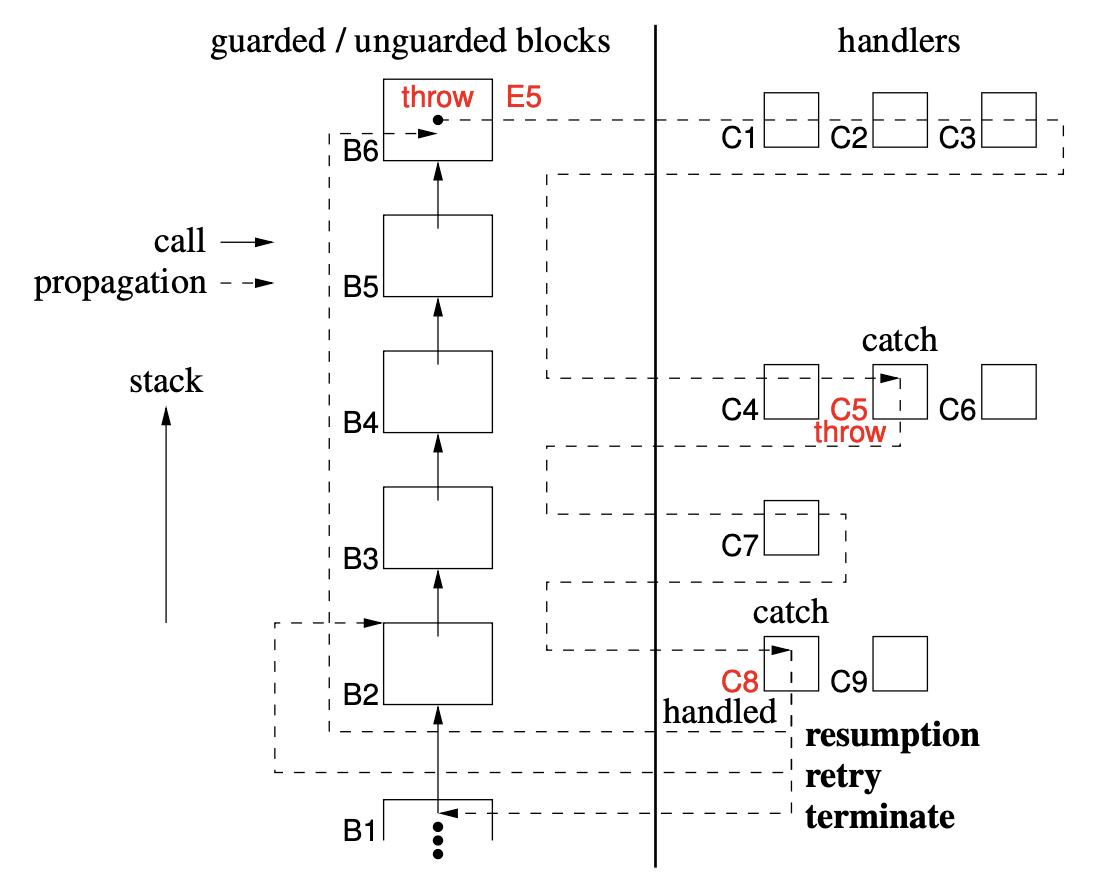

- A resumption handler is a corrective action so a computation can continue.

- stack is not unwound! since dynamic return.

- we choose how things are to be fixup by calling f using different parameters

- Problem: need to pass down the fixup routines

- But with resumption (left): find someone to handle it, then control returns after resume E(). Order n operation to find the call routine and order 1 to resume. Stack is not unwound in this case.

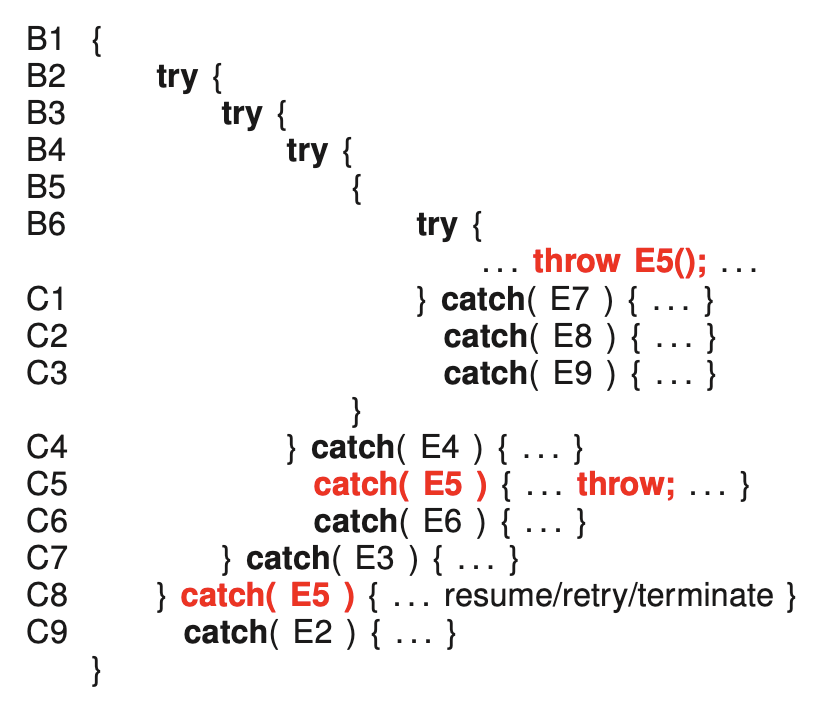

Exceptional Example:

- resumption: goes back to B6 resume() if it were instead of throw

- retry: Eiffel

- terminate

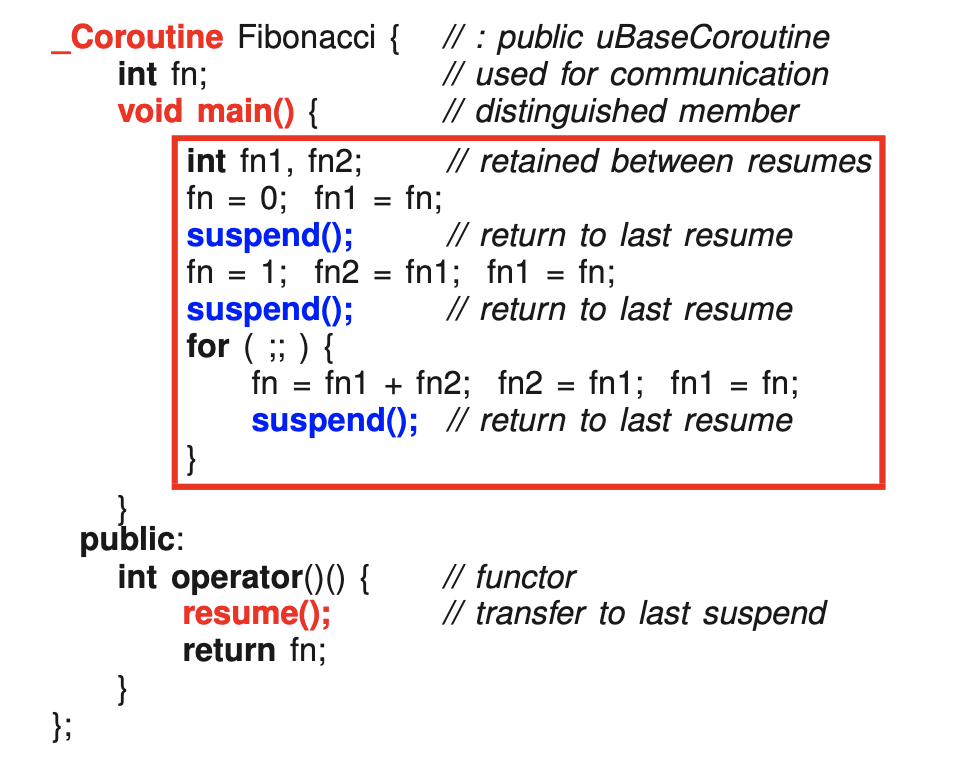

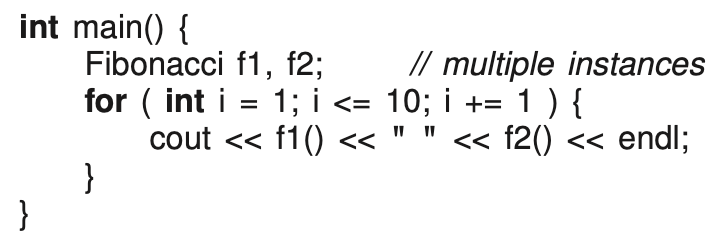

Coroutine

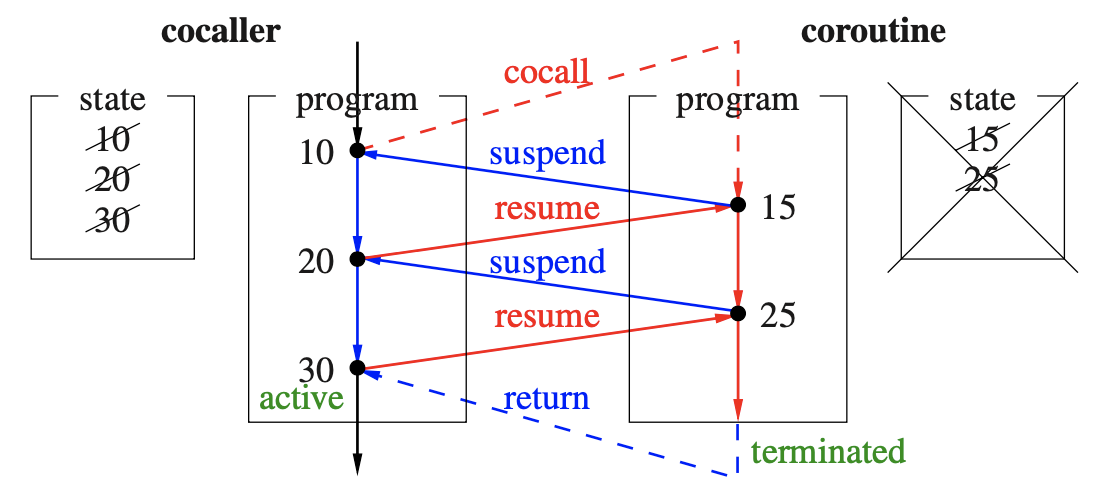

- A coroutine is a routine that can also be suspended at some point and resumed from that point when control returns.

- State of coroutine:

- execution location

- execution state

- execution status - active or inactive or terminated

- Coroutine doesn’t have to start from the top.

- coroutine: fancy sequential program. Not concurrency!

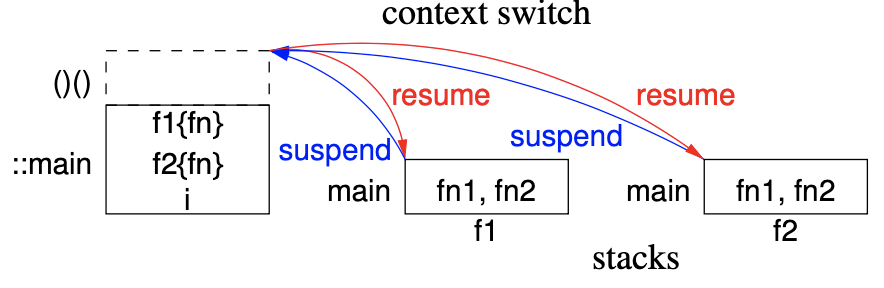

two different approaches for coroutine:

- a semi-coroutine

- a full coroutine

Let’s understand by example:

Semi-Coroutine

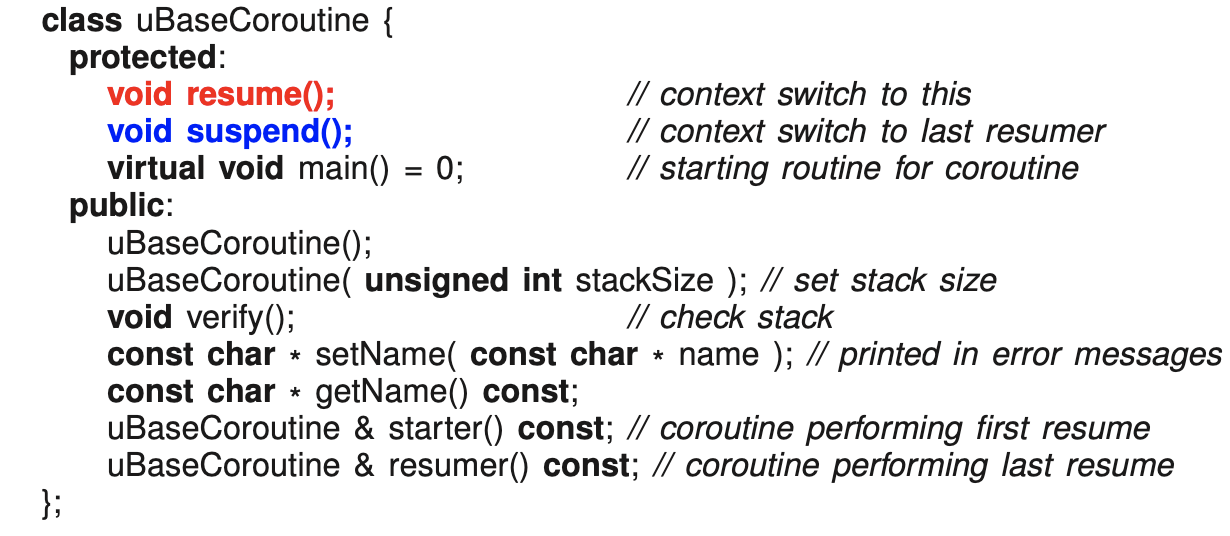

_Coroutine_: new type in micro C++. It has a main()- no execution state

I will trash Python - Peter Bhur

- In a coroutine, the program starts in main().

- only want resume and suspend to access in the coroutine

- All coroutines inherit from base type

uBaseCoroutine

- suspend and resume doesn’t change the stack: they context switch instead

You need to find zen.

Read until Nonlocal Exceptions to do assignment

TODO: write some notes for Coroutine

Nov 7, 2024

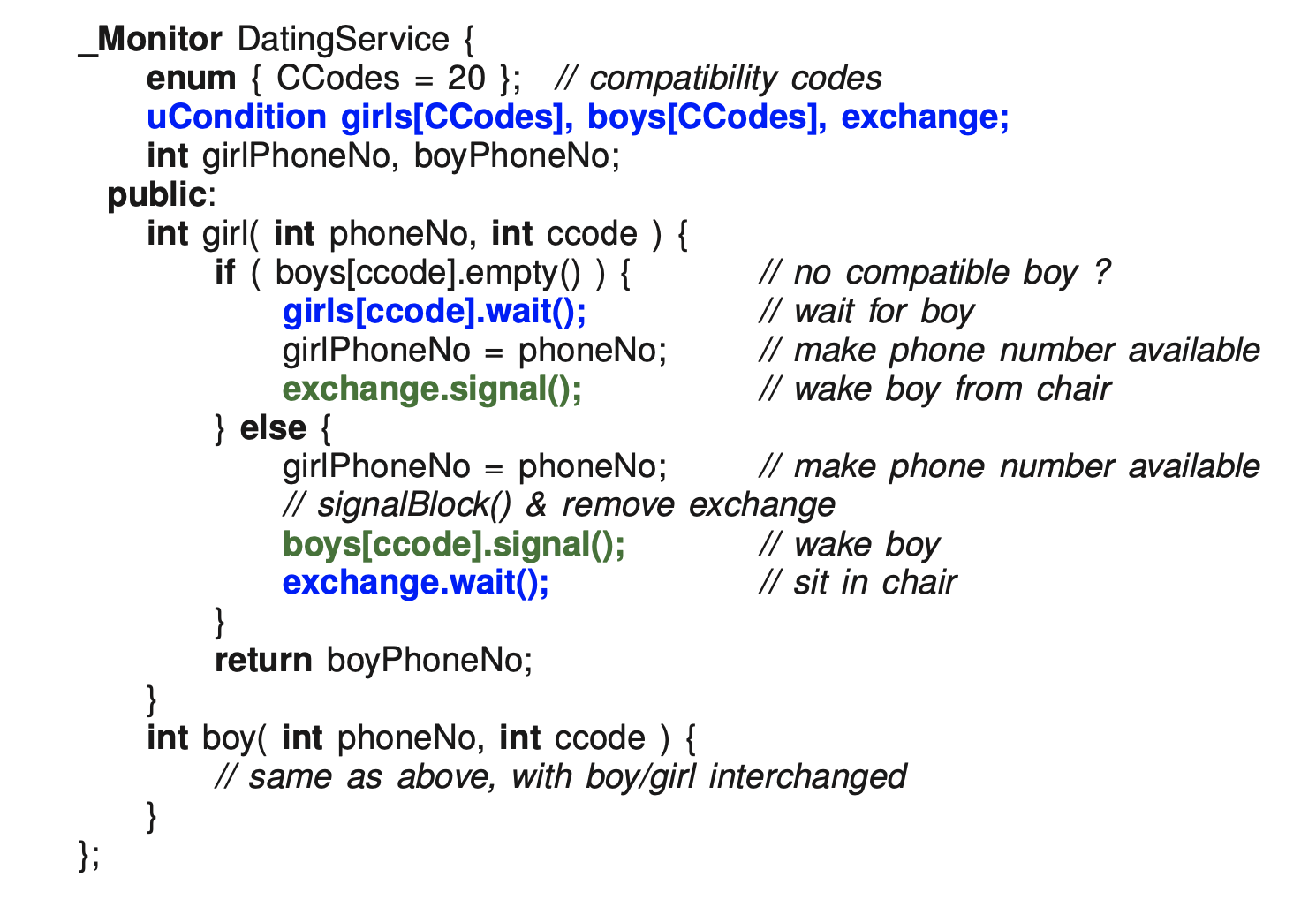

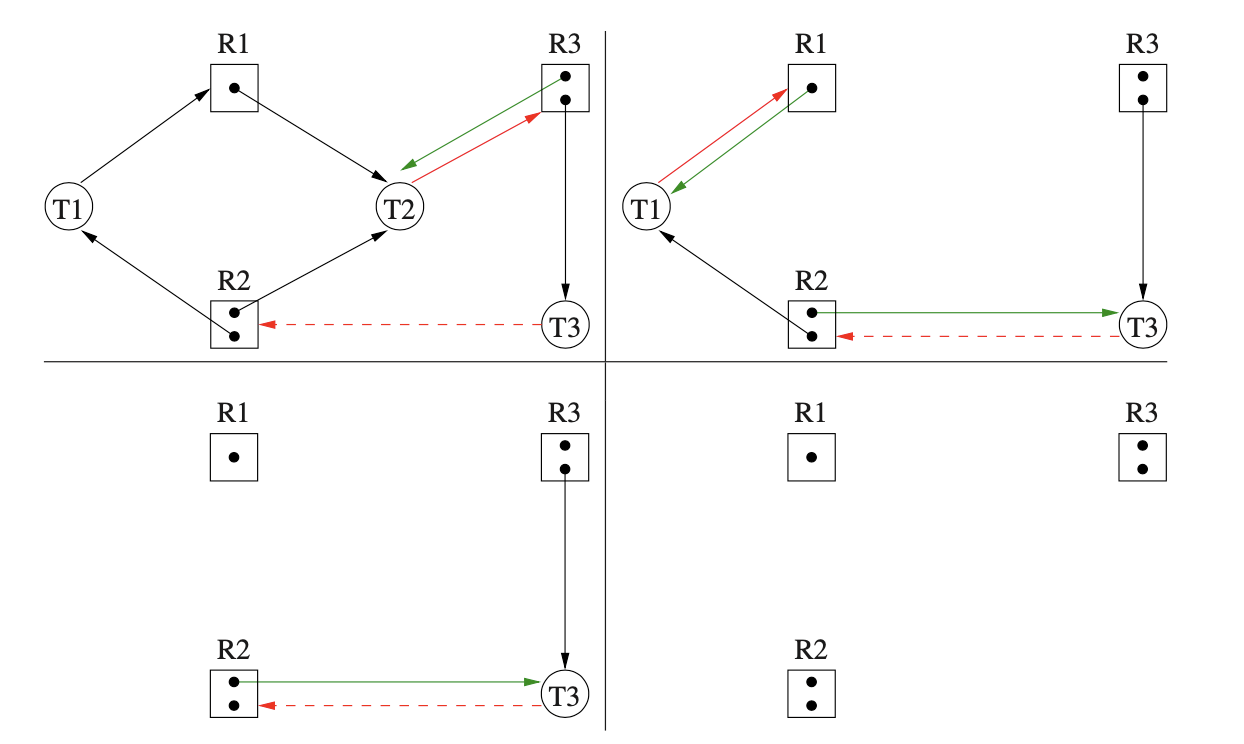

Why cannot use external scheduling??

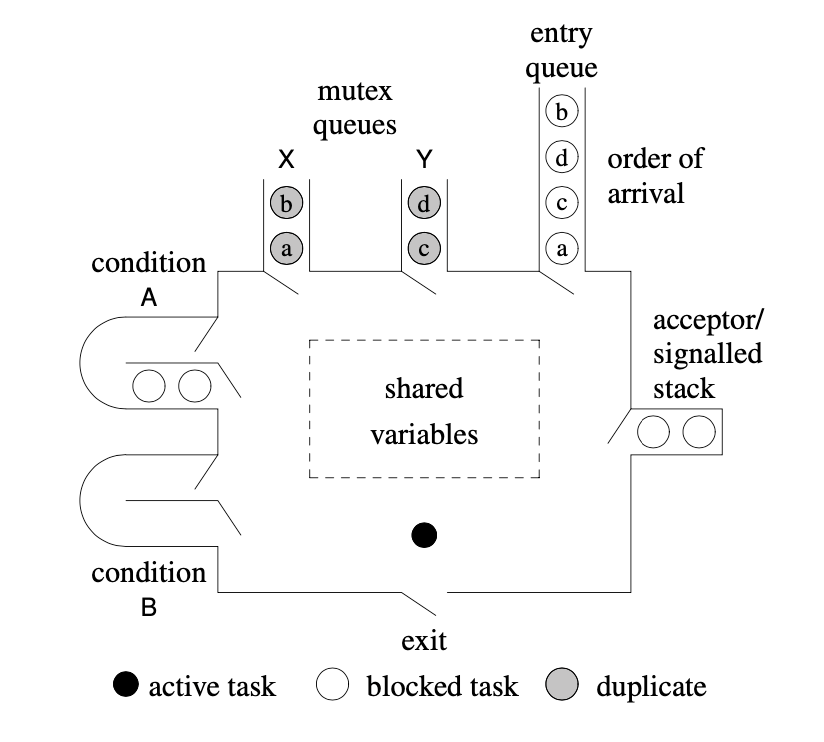

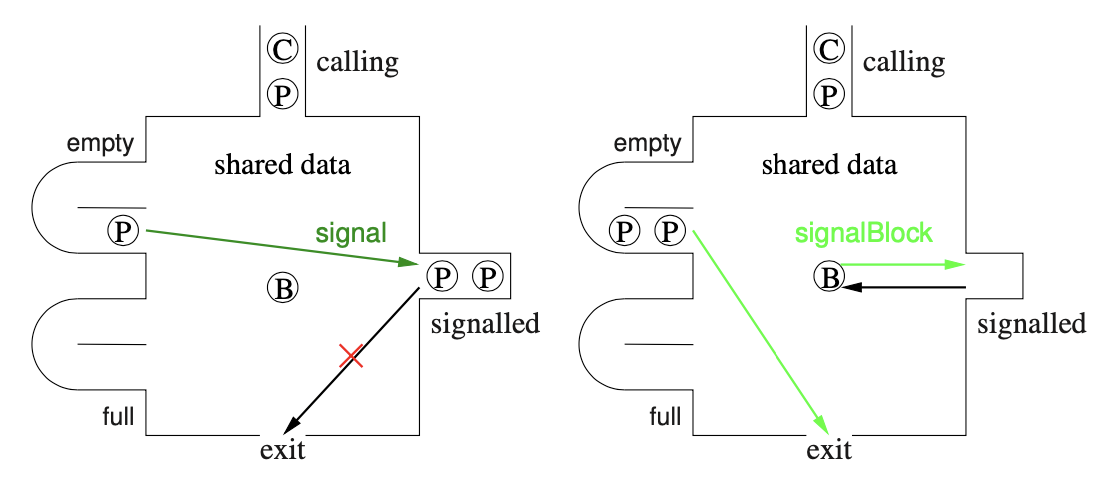

Using internal scheduling: (if you have uCondition bench == internal scheduling)

- signal: delayed/defered

- girl sits down: now boy wakes up.

- boys wake up at

boys[ccode].wait()in the if clause (wakes up exactly where he sat down) - gets phone number and puts it in the

uCondition boys[CCodes] - boy signals the chair

- boy returns the girls phone number

Apparently we don’t need a chair use signalBlock

- replace signal to signalBlock and remove all the exchange.

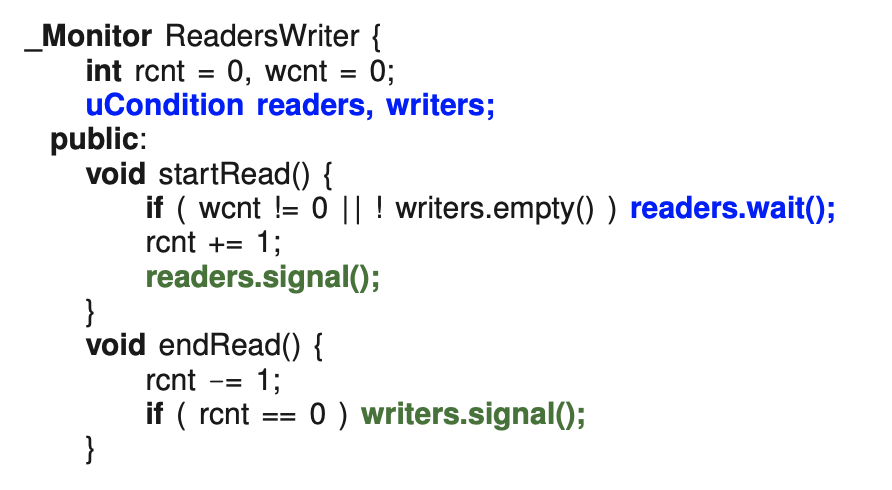

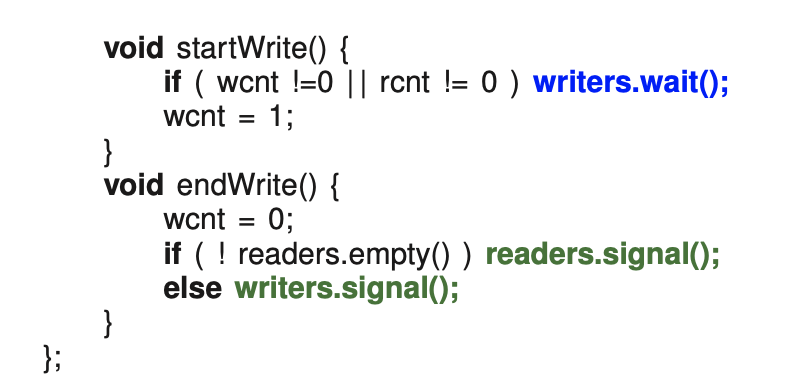

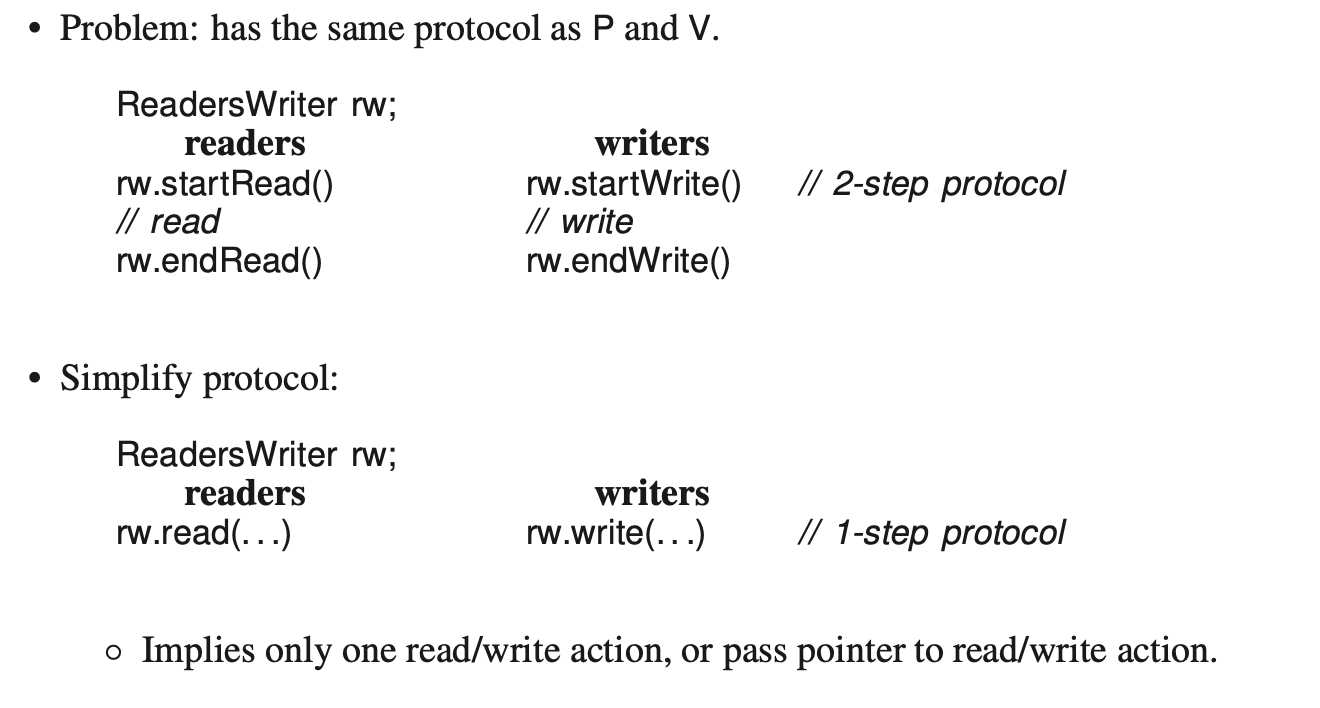

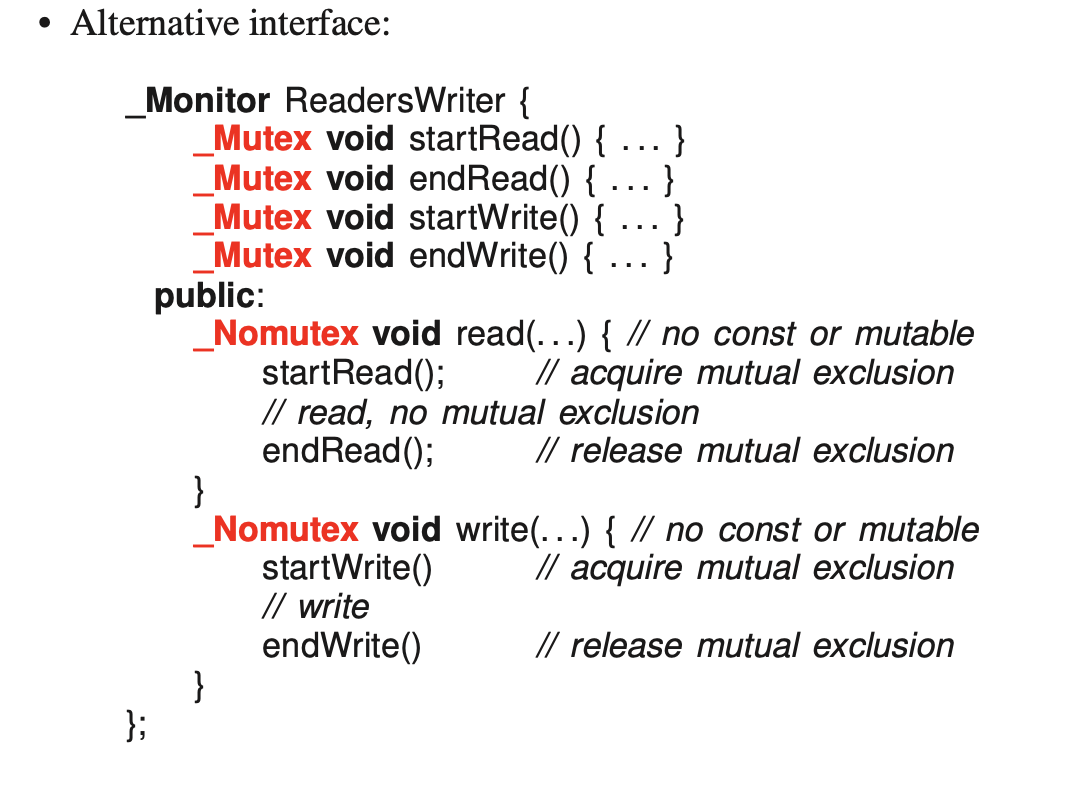

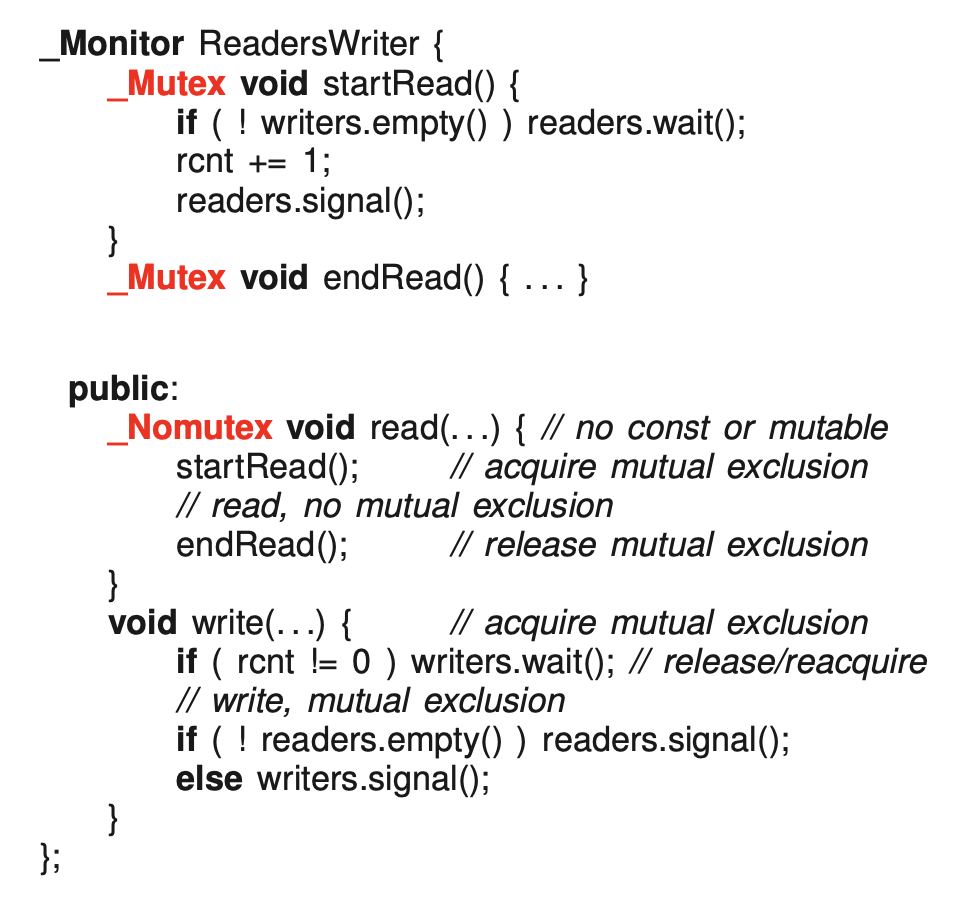

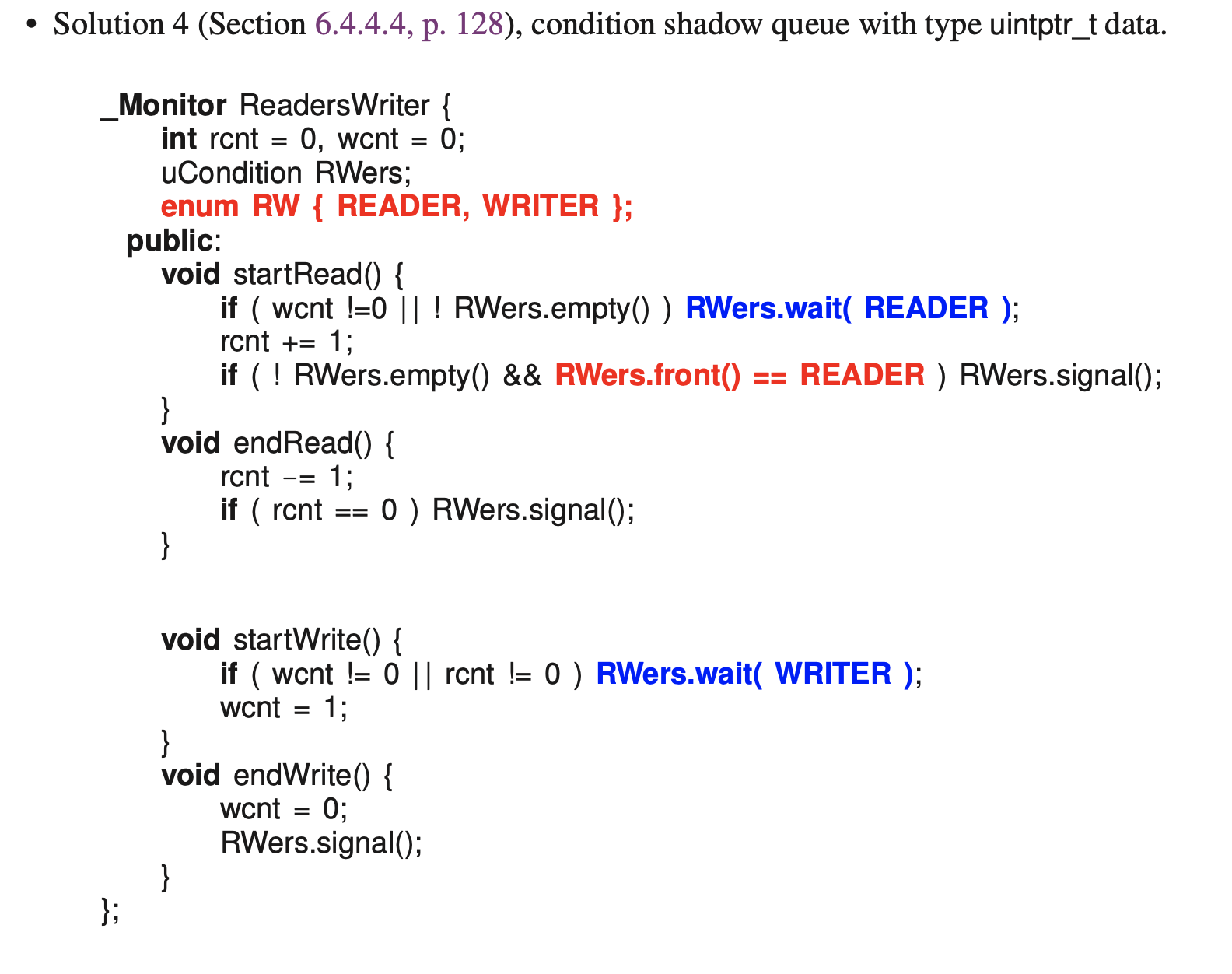

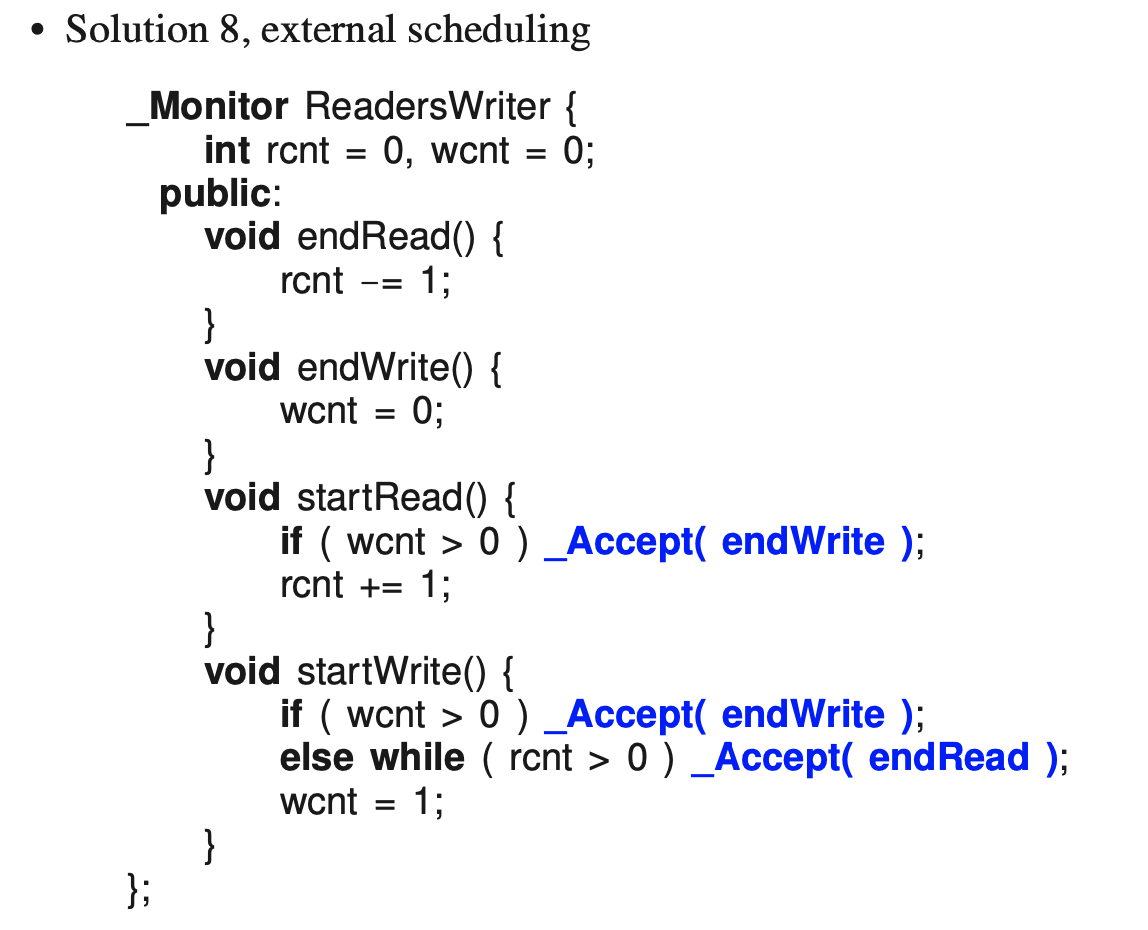

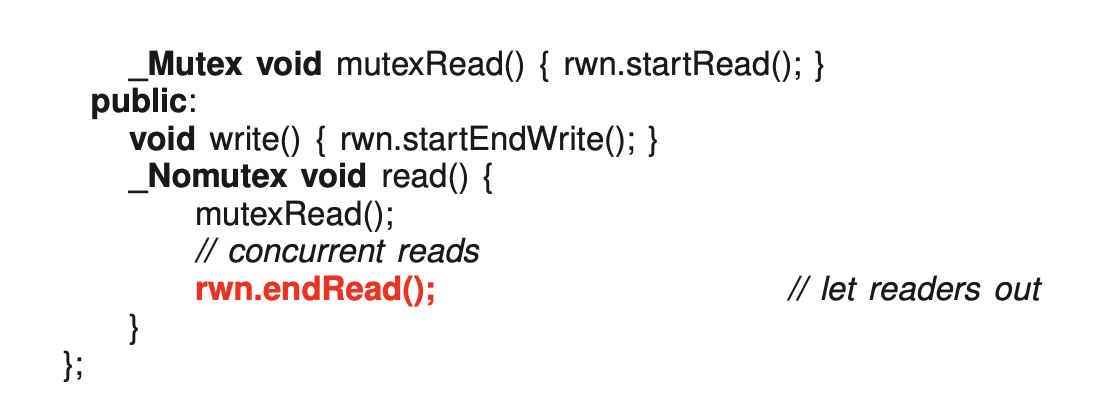

8.5 Readers/Writer

- dont need to check if its empty before calling signal. (does it for you)

- uCondition tells us if someone is on bench (using .empty), so we dont need to have variables that counts compared to semaphore solution

- just put it in a routine

- everything is put inside the lock

- since its nomutex, multiple threads can run the nomutex function at the same time

- doenst make sense for multiple threads in nomutex write

- we further simplify the monitor

- μC++

uCondLock anduSemaphorealso support shadow queues with typeuintptr_tdata.

- 2 counters

- if reader arrives and there is a writer in the room (wcnt > 0). only thing that makes reader happy is for the writer to leave. so shut all the doors except the one that allows the writer to leave.

- Why has the order of the member routines changed?

- definition before use

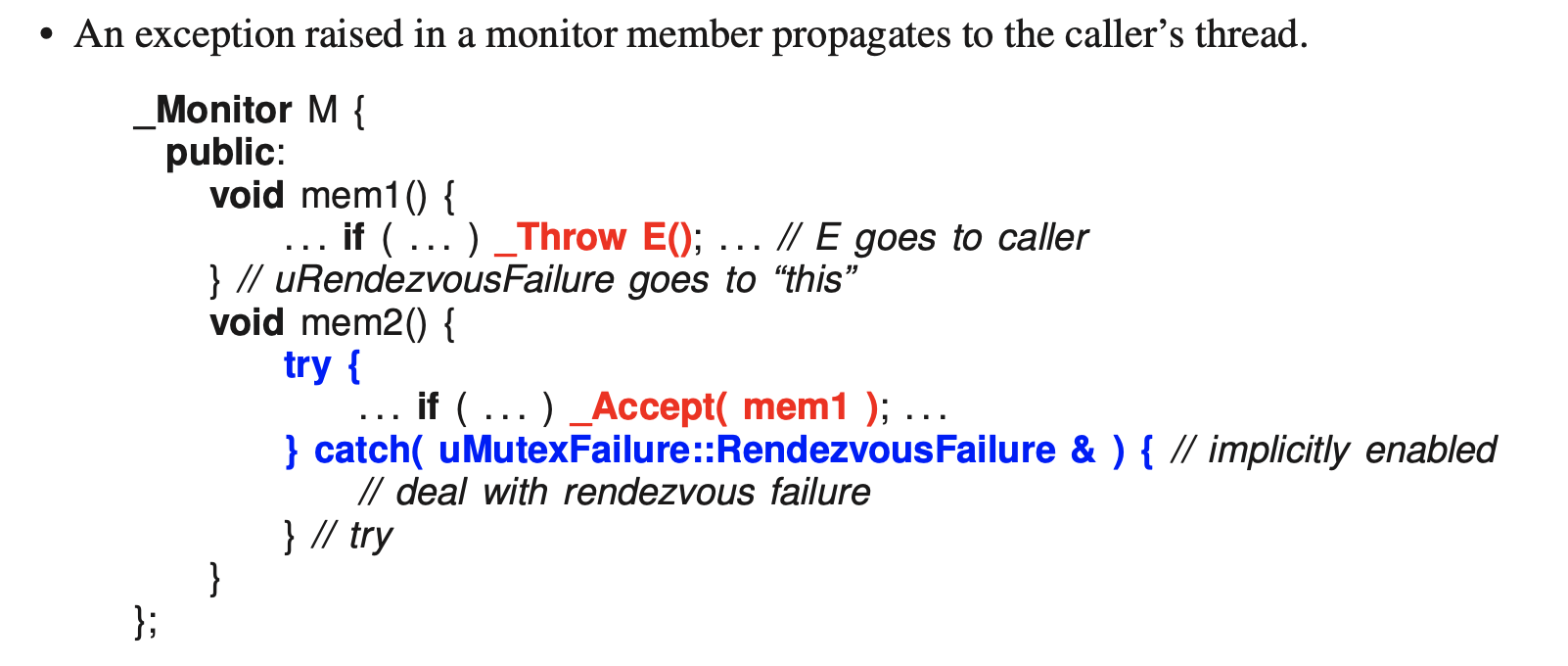

- micro C++ throws a non-local exception to the person sitting on the chair to notify in the cooperation failed

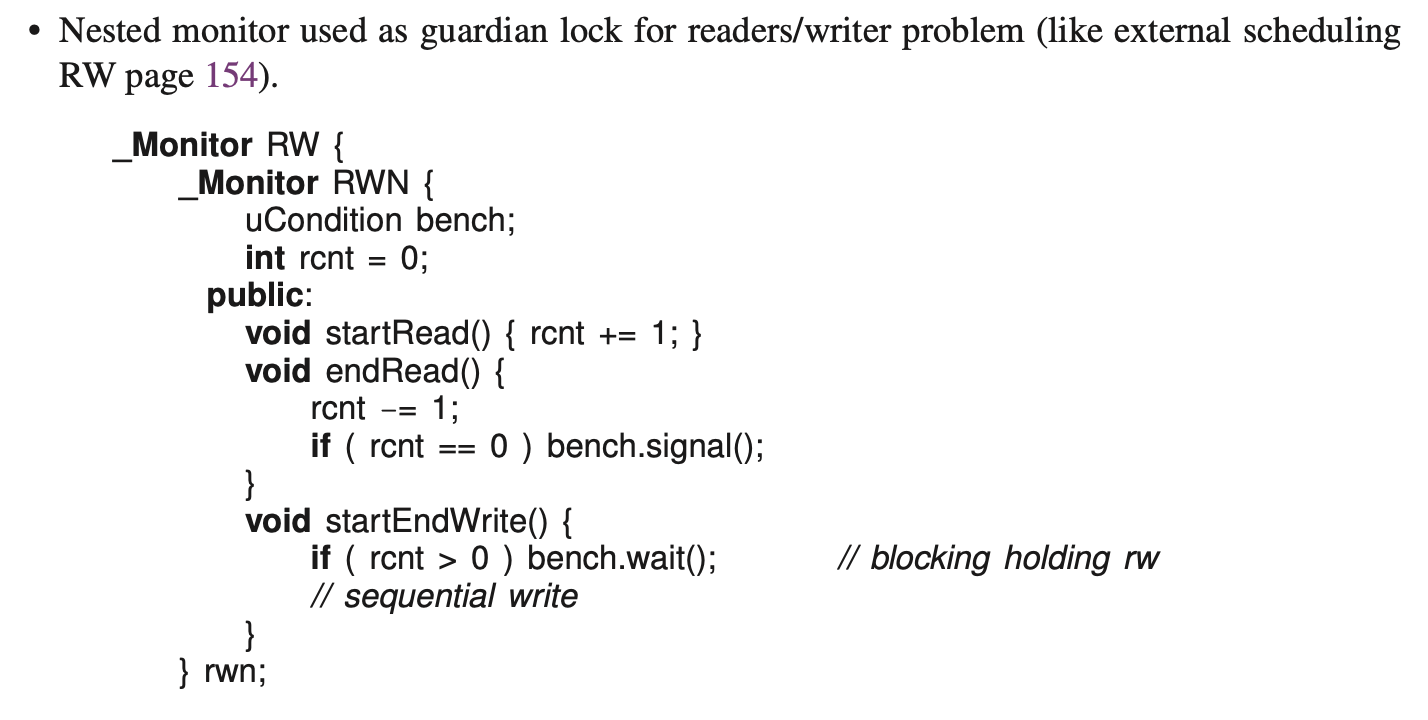

8.7 Nested Monitor Calls

-

Nested monitor problem: acquire monitor (lock) M1, call to monitor M2, and wait on condition in M2.

-

Monitor M2’s mutex lock is released by wait, but monitor M1’s monitor lock is NOT released ⇒ potential deadlock.

-

Releasing all locks can inadvertently release a lock, e.g., incorrectly release M0 before M1.

-

Same problem occurs with locks.

-

Called lock composition problem.

- monitor lock in endRead() and another in read()

- NOT TESTED ON - as well as the semaphore version

Nov12

Intrusive Lists → use it for assignment 5 and 6 (not necessarily need to)

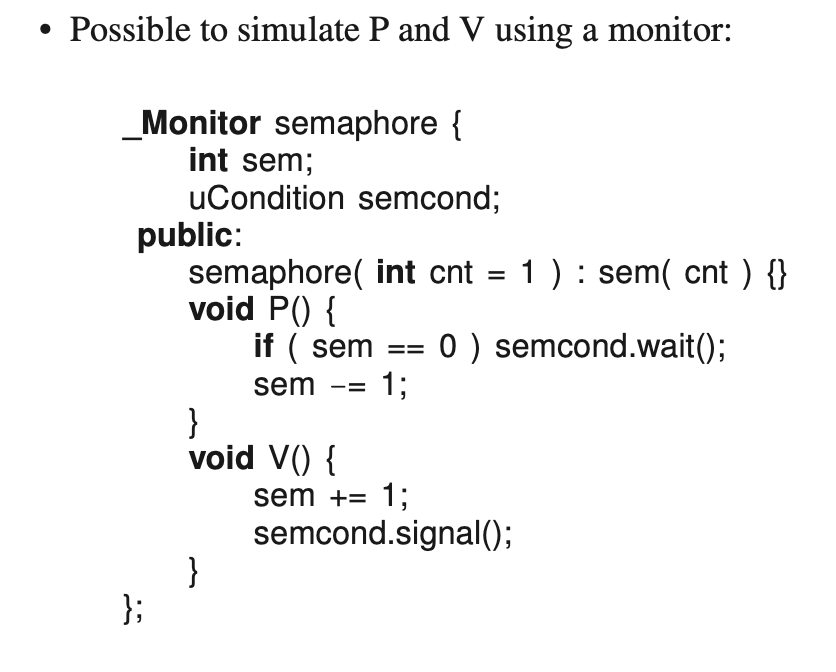

8.9 Counting Semaphore, V, P vs. Condition, Signal, Wait

Difference between counting semaphore and Monitor

multiple Vs may start multiple tasks simultaneously, while multiple signals only start one task at a time because each task must exit serially through the monitor

- Can this simulation be reduced?

- Yes, use external scheduling (remove 2 to 3 lines)

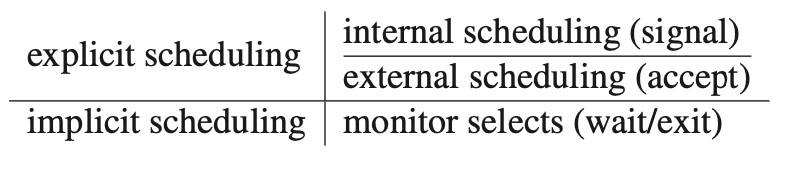

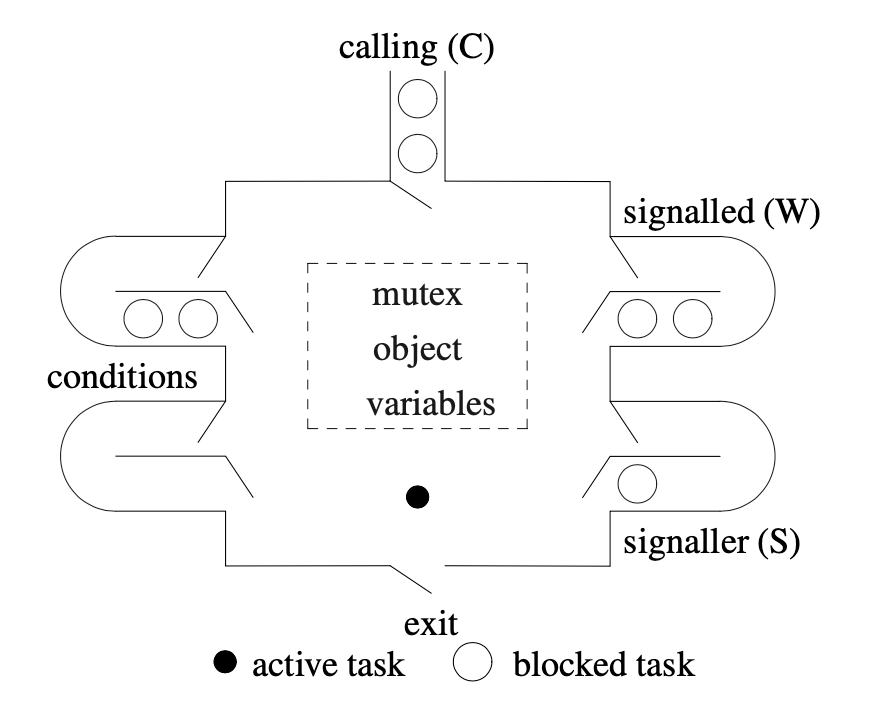

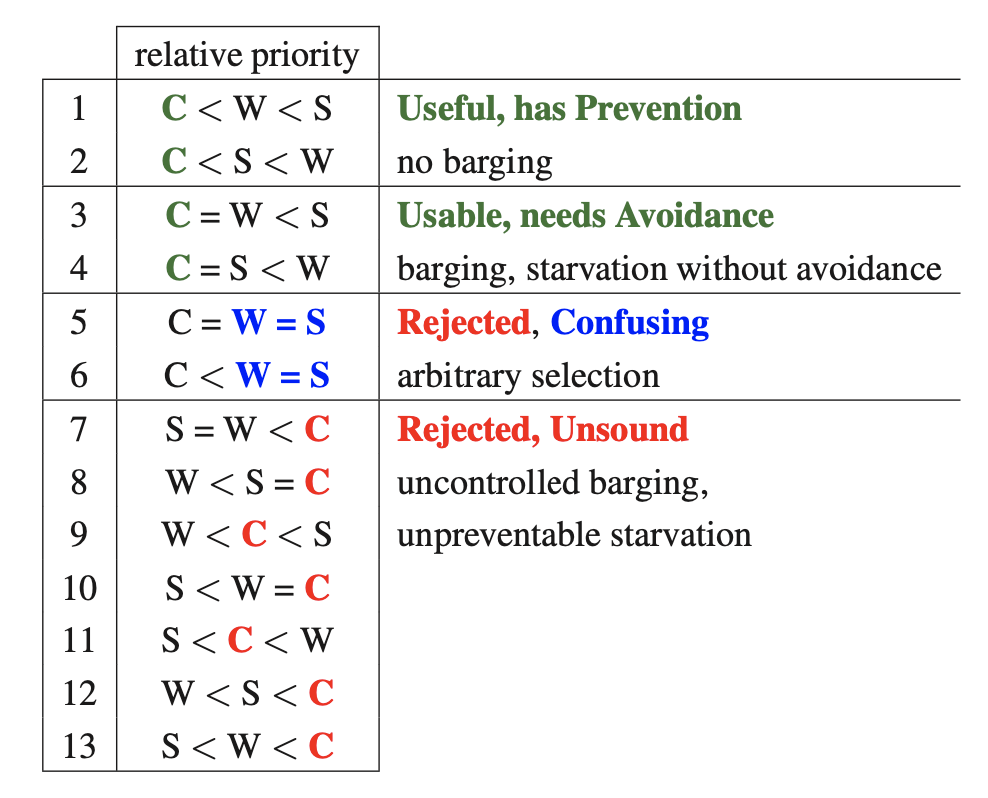

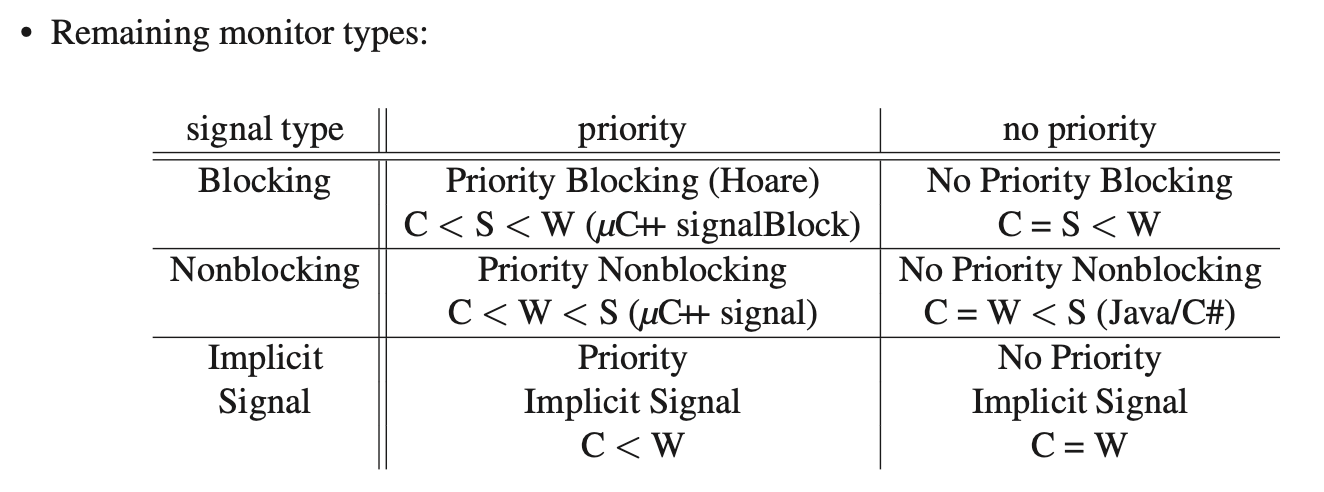

8.10 Monitor Types

Monitor: We do some work and let the compiler do some work.

- Explicit scheduling

- Done undercover by the monitor

- Implicit scheduling

We write out explicit scheduling:

- Monitor kicks in when it becomes empty, try to get more work (wait/exit)

- We take care of who comes in, and signals

Theoretical Monitor:

- It’s ready to rock n roll

- Assigning different relative priorities to these queues creates different monitors (e.g., C < W < S).

- Starvation for case 7-13

- things that got signalled starves if there are constantly new callers coming in

- All unsound

- Case 5-6

- If you do signal and flips a coin, it can either be signalled task or the task that signals comes in

- Arbitrary selection

- Case 3-4

- Flip a coin; either Call or Signalled

- Could need Barging Avoidance, can have starvation without avoidance

- Java monitors

- Case 1-2

- Has barging prevention, no barging

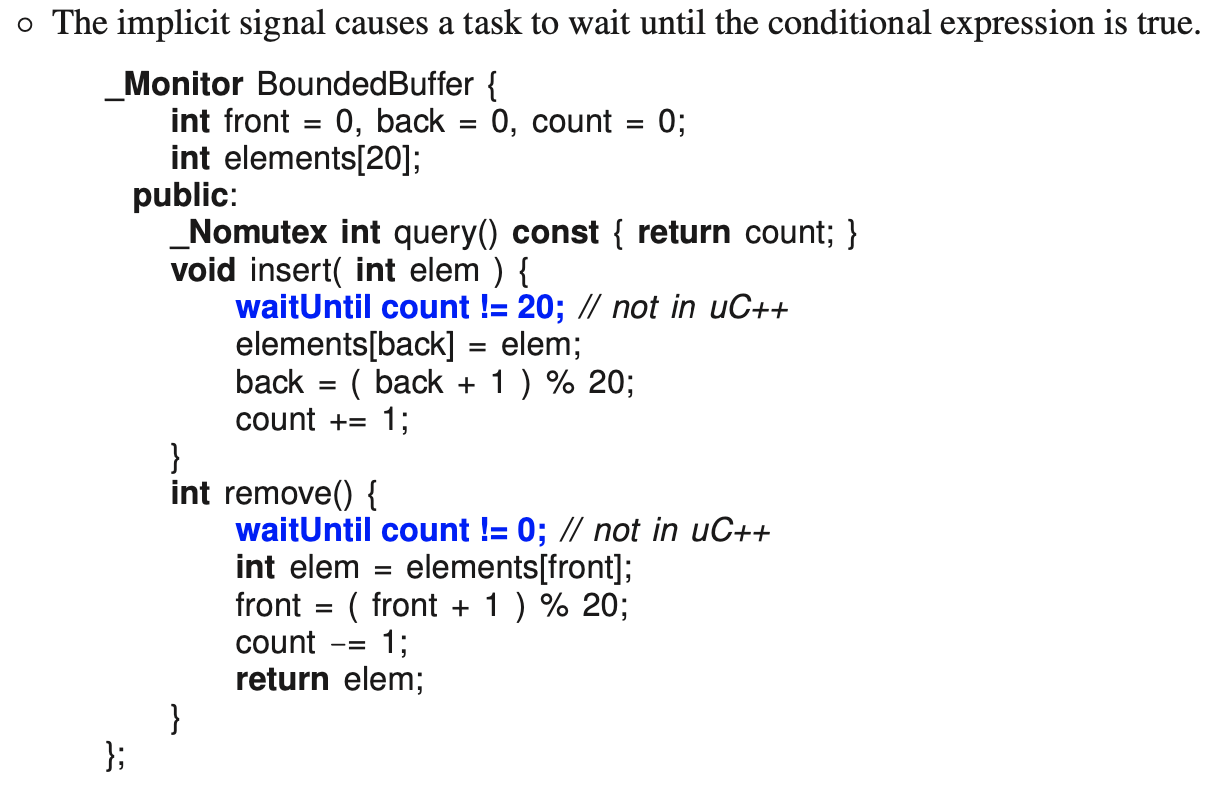

Implicit Signal

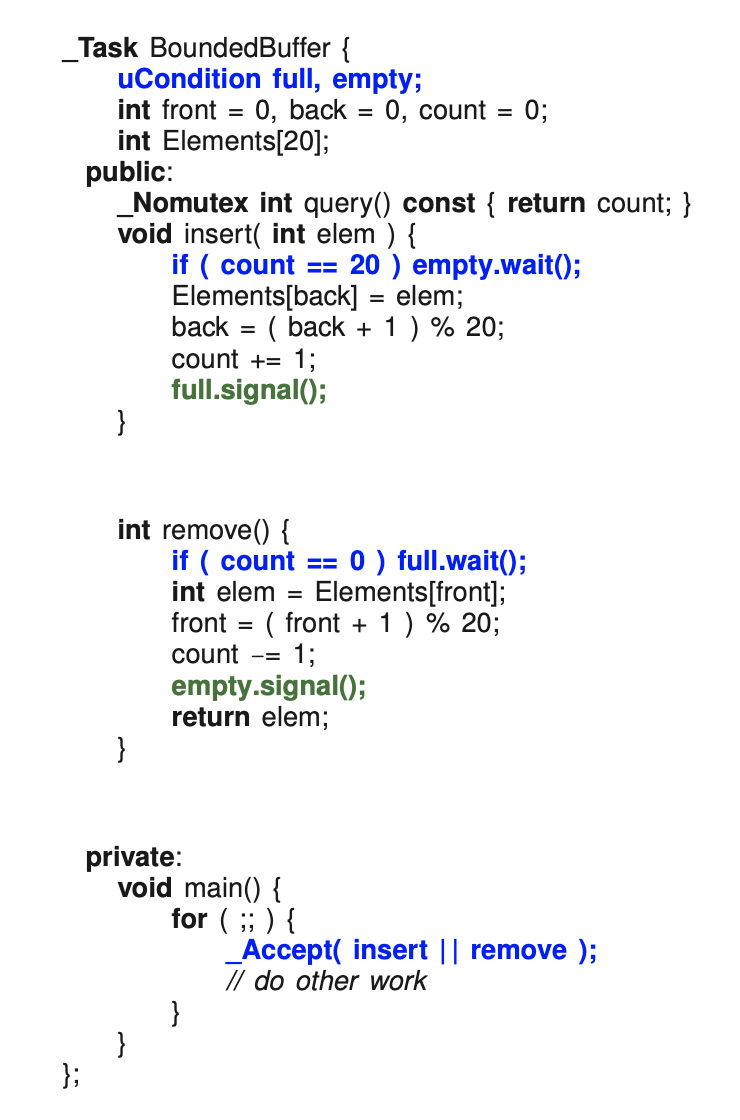

- Write boundedbuffer using a Monitor

- Conditional critical region (in

insert()) - Not busy waiting

- Use waitUntil for assignment 5

- simulate: build order n queue and service it

- 3 solutions for building a waitUntil monitor. 2 of them are based on signal or signalblock (with barging, but still can use it). 3rd solution has no barging

- waitUntil macro and leave macro to: build put you to sleep

- READ TEXTBOOK

- first four comes from the cases

- Last row comes from the BoundedBuffer Monitor

- Priority

- prioritize things inside the monitor

- No priority

- bargers can barge in (i.e., ahead of Ws)

- 2nd row is the 80% case / correct row

- No idea which column is the correct. Still debating jesus

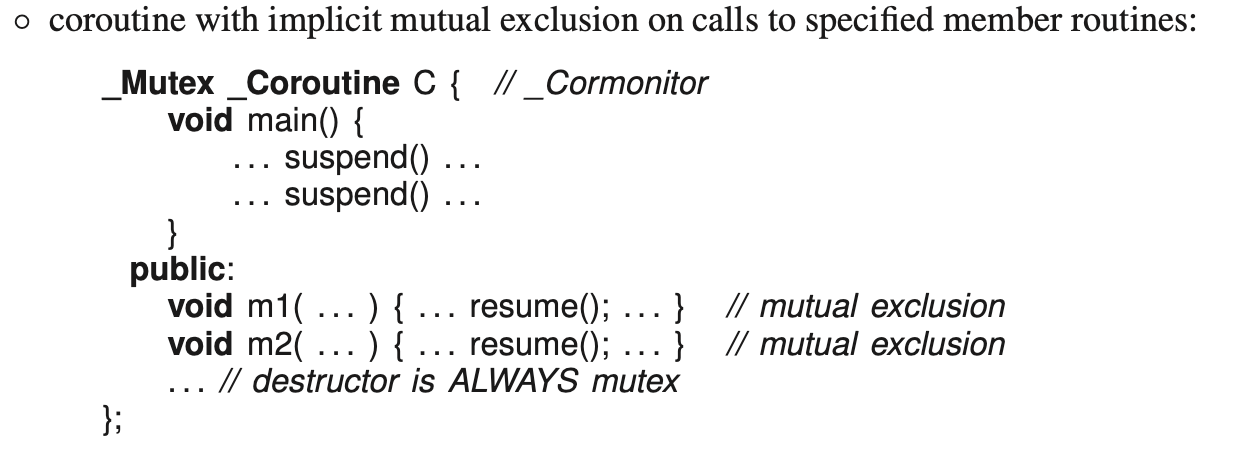

Coroutine Monitor

- can use resume(), suspend(), condition variables (wait(), signal(), signalBlock()) or

_Accepton mutex members - coroutine can now be used by multiple threads, e.g., coroutine print-formatter accessed by multiple threads.

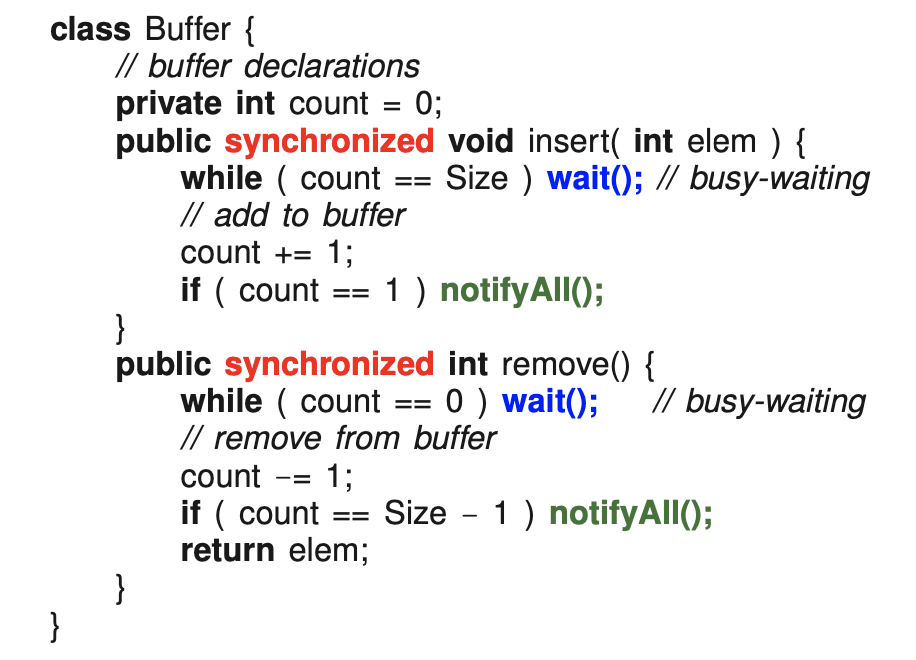

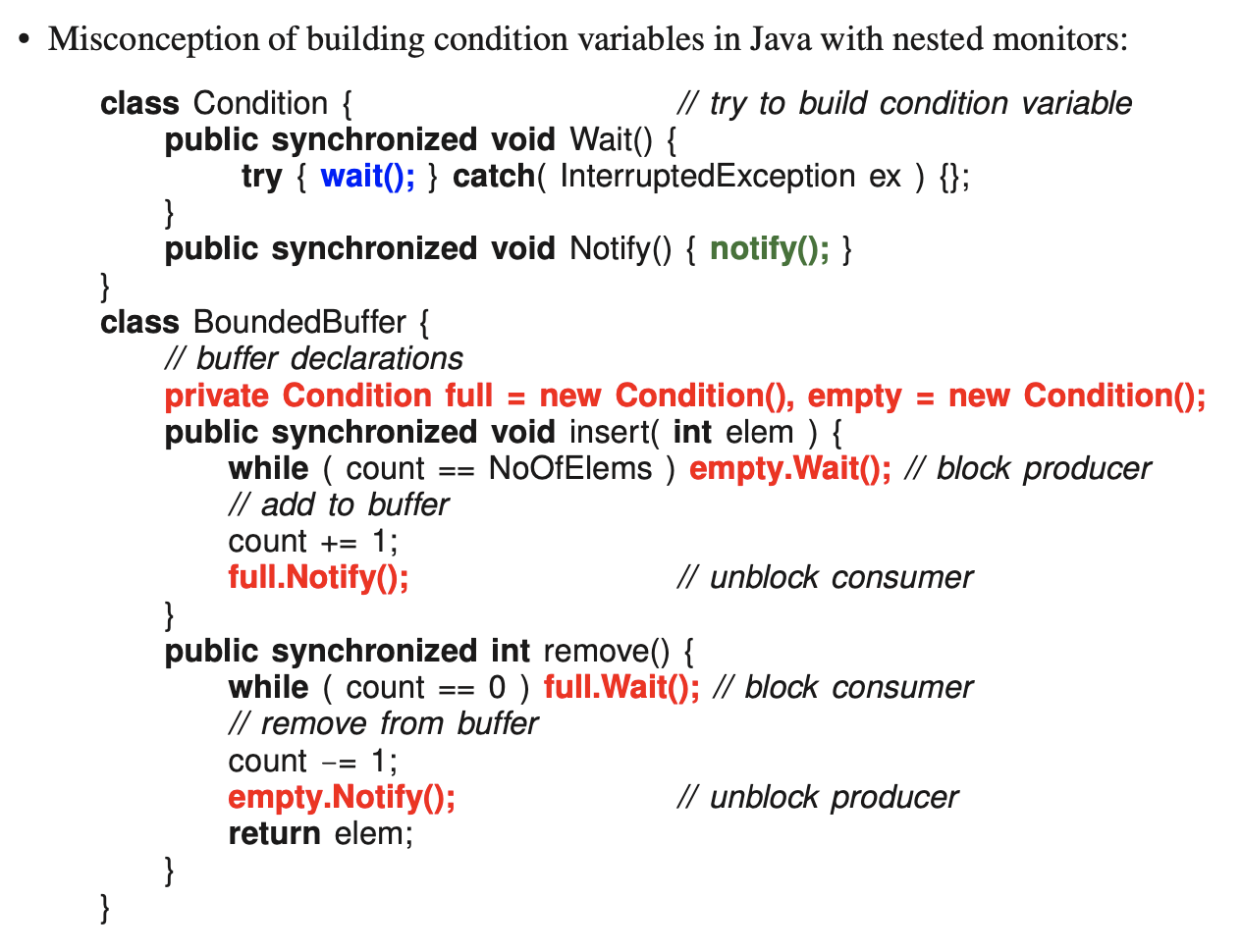

8.11 Java Monitor

- Java has

_Mutexmembers (they used synchronized for mutual exclusion) - Only 1 condition queue

- Has barging

Bounded Buffer:

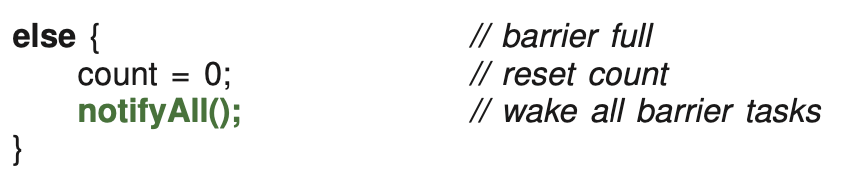

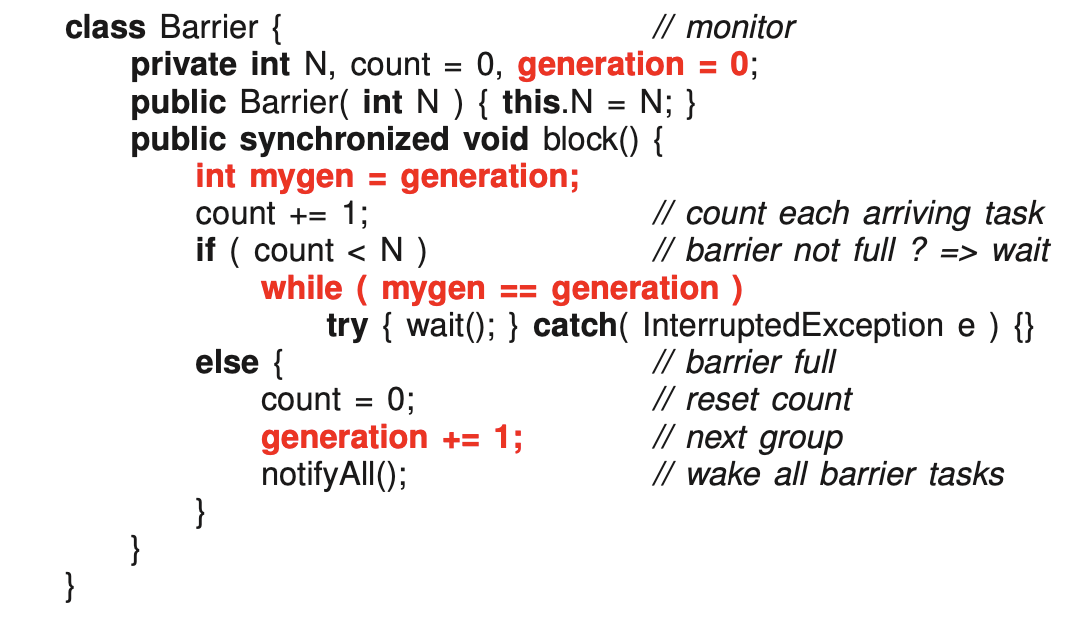

Barrier with java monitor:

- Don’t get on airbus 380

Fix by :

- Instead of counting down, we oscillate between 0 and 1

- Still wrong because of spurious wakeup!!!

- Fixed using tickets (for while condition)

- nested monitor problem. Still doesn’ twork

Nov 14, 2024

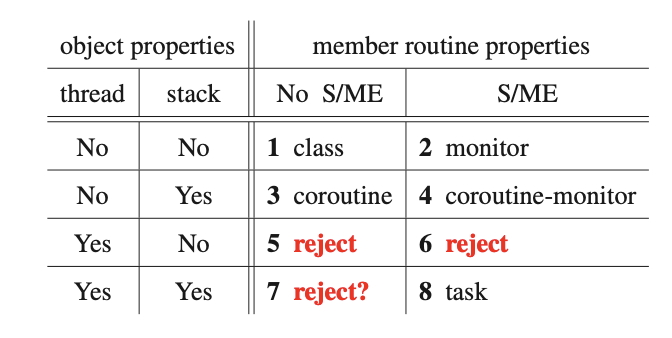

- Last row, reject? is not safe

-

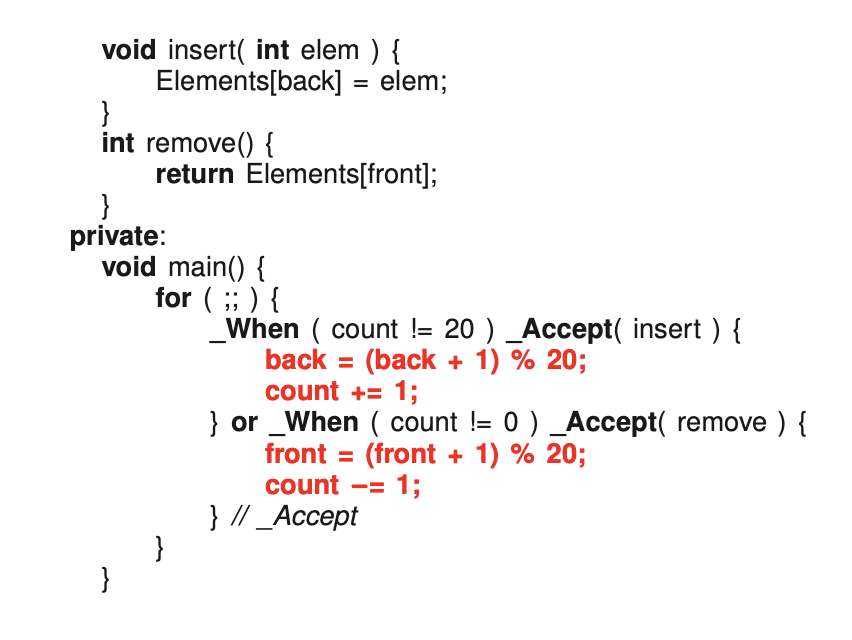

The two lines in insert and remove that are red should be blue. Typo

-

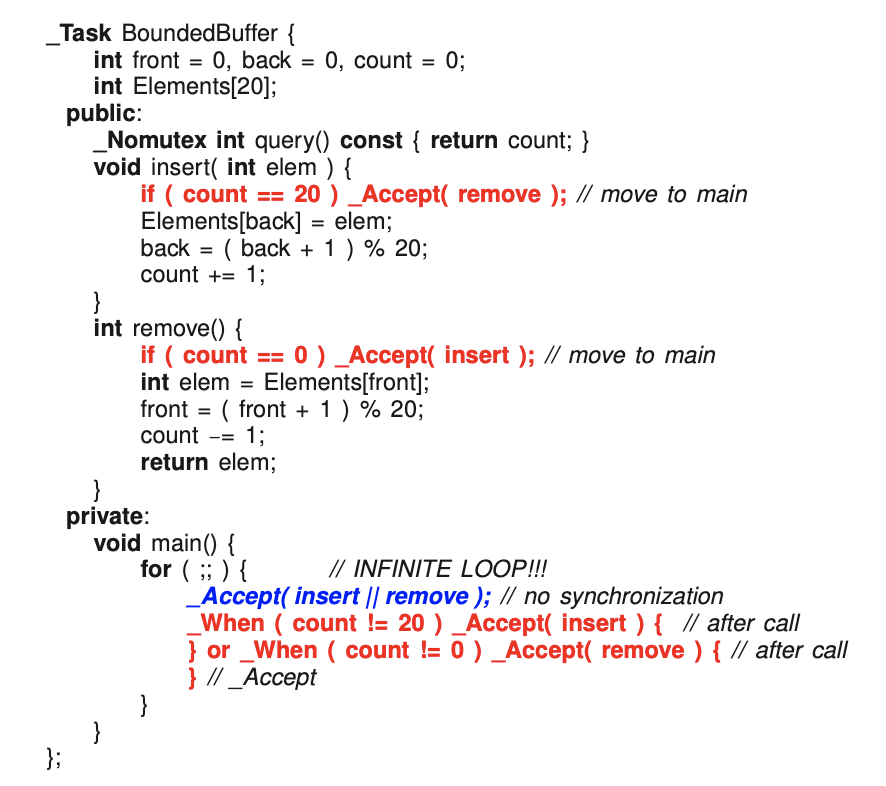

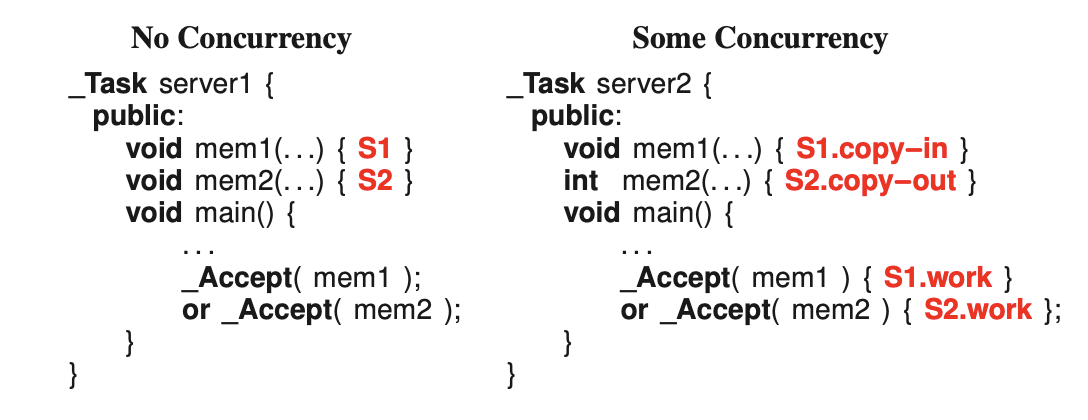

Monitor version of doing external scheduling

-

We want to remove the blue, and push it (synchronization and mutual exclusion) in the main.

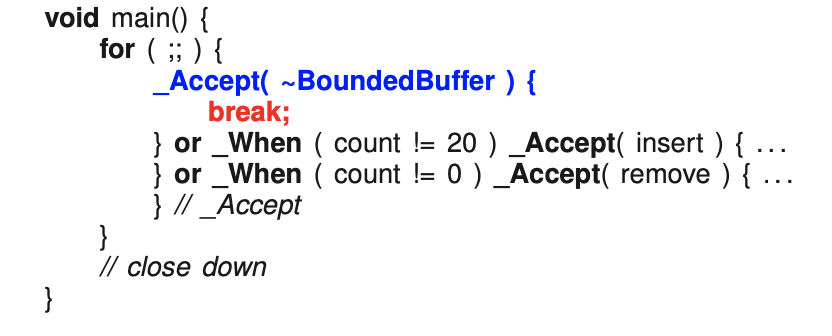

-

_Whenprovides synchronization powers -

Go randomly picks one of insert or remove if both

_Whenis true. -

In miro c++ we have the power to determine which one to prioritize (which bench to look at)

- For example, here we look at insert bench first, then remove bench

-

No starvation can occur, because we cannot insert more when we have more than 20. So we have prevention

-

Once Accept One Call to satisfy your cooperation.

-

Do i need to have for loop in a5????

-

Different from monitor, when we create a Task, it is automatically running, all the doors re closed. Contrast to monitor, nothing is in, all doors are open

-

_Acceptneeds to have a stack, and signalled has a stack

- allows to do try-accept

- dont use: it’s busy-waiting

- reduce the time producer/consumer

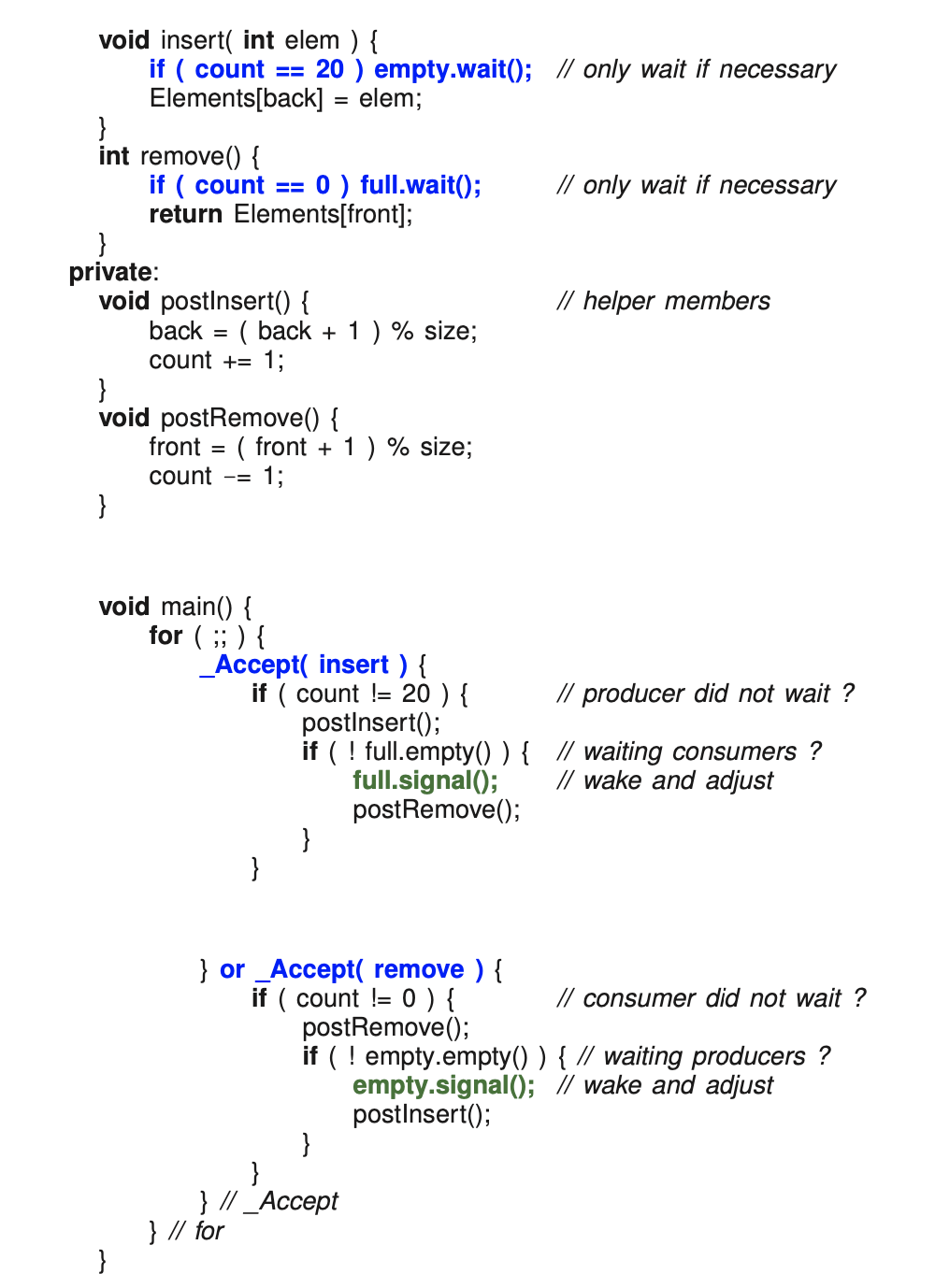

Internal Scheduling

- NOt a rendezvous failure: possibility that the rendezvous happens in the future. We are postponing it to the future. empty.wait() didnt leave, it just sat down in the chair.

- push all the rest of the code down into main

- Everything in insert/remove is to make synchronization work

- Takeaway, you can push everything in main.

- Code doesn’t work: logic is write, but technical error because peter used signals.

- Your full time person is just sitting there! Must use signalBlock

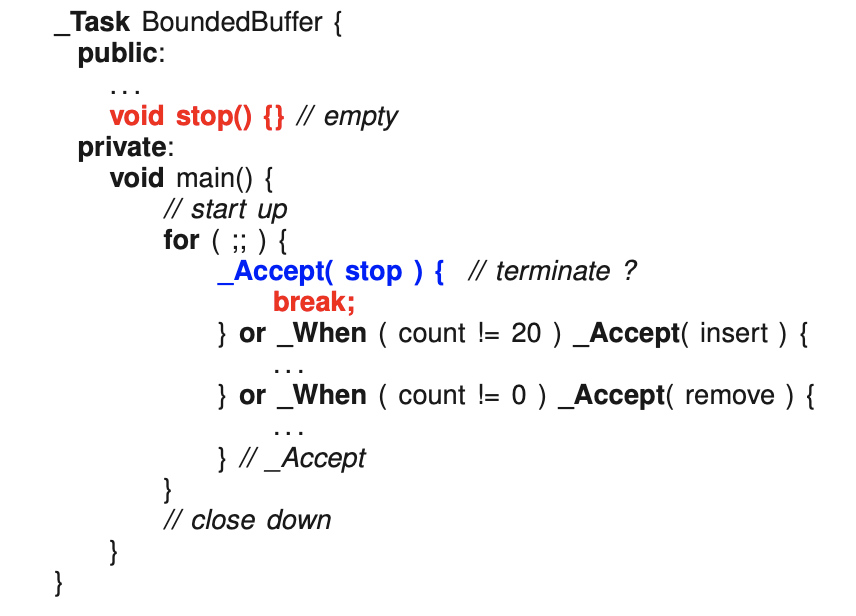

- Nothing in stop(), we just use it to break out in the

_Accept - We put it at the first

_Acceptbecause we want it to stop right away, but we can control it by putting after other_Accepttoo.

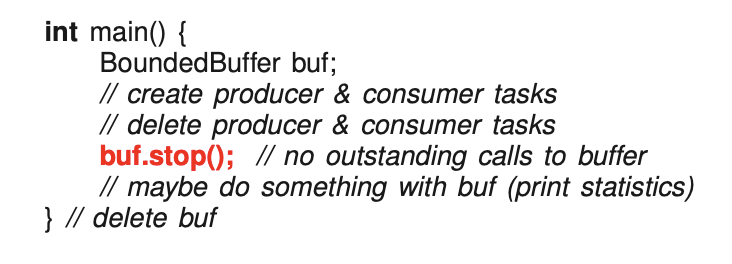

- doesn’t disappear: it’s a monitor (when it runs out the end)

- we can just accept the destructor! → segments fault

- cannot return to the object when it’s already destructed

- make it work like a signal, we don’t put it on the chair

We can do everything on a5.

Goes all the way to 145:

7. Concurrent Errors

Race Condition

- no laws → chaos

No Progress

Livelock

- Massive amount of CPU used → they are all looking at their right

Starvation:

- Every time it looks to the right, there is still someone there.

- The guy is spinning, looking to its right

Big amount of CPU usage: either livelock or starvation

Deadlock: No CPU used.

Two kinds:

- Synchronization

- signal before a wait

- Mutual Exclusion

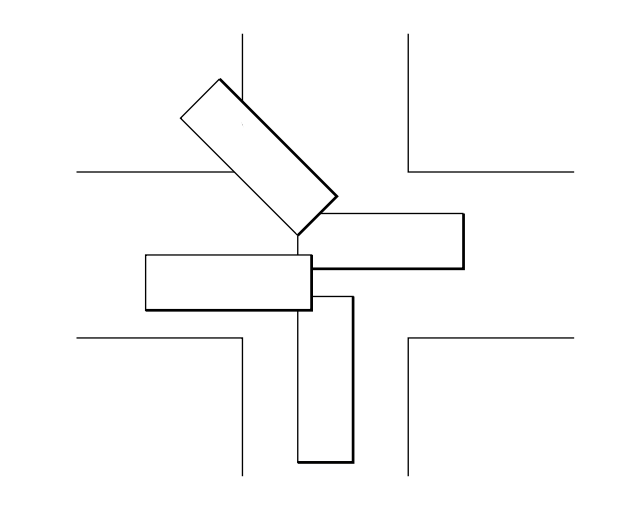

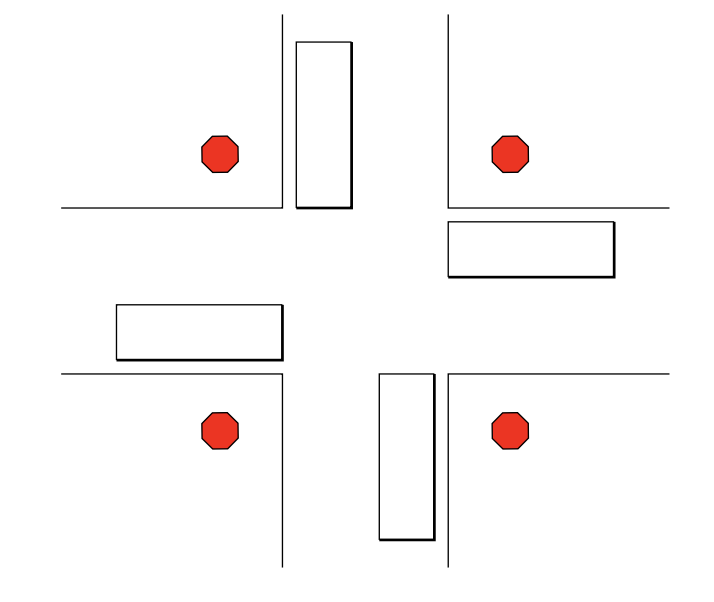

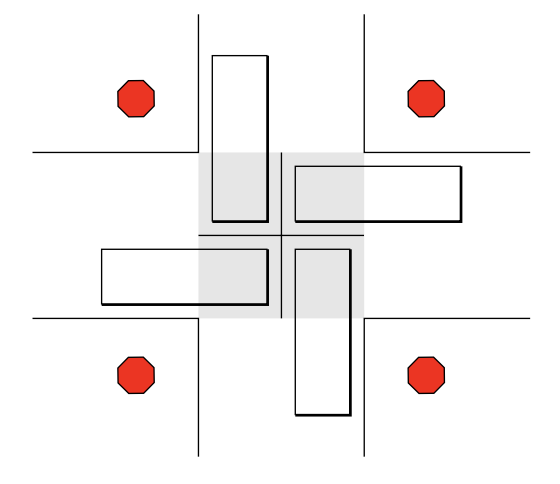

- All four drivers drives into the intersection at the same time.

- They need to come in at the same time

There are 5 conditions that must occur for a set of processes to deadlock.

- A concrete shared-resource requiring mutual exclusion, i.e., exists without a task.

- A task “wanting to drive across the intersection” is not a resource.

- A process holds a resource while waiting for access to a resource held by another process (hold and wait).

- Once a process has gained access to a resource, the runtime system cannot get it back (no preemption).

- There exists a circular wait of processes on resources.

- These conditions must occur simultaneously.

Tuesday Nov 19, 2024

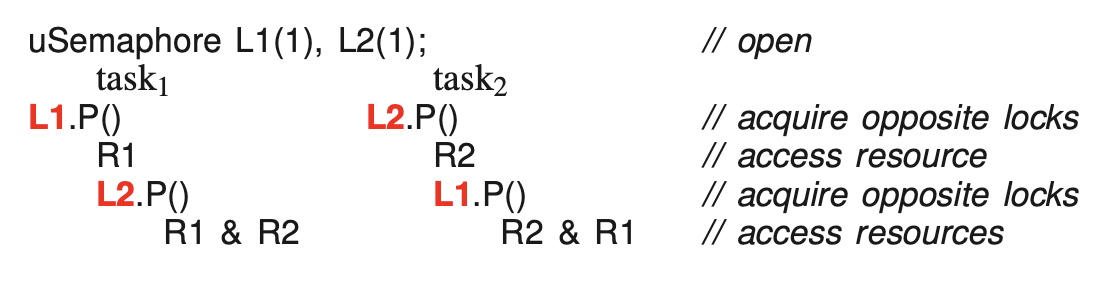

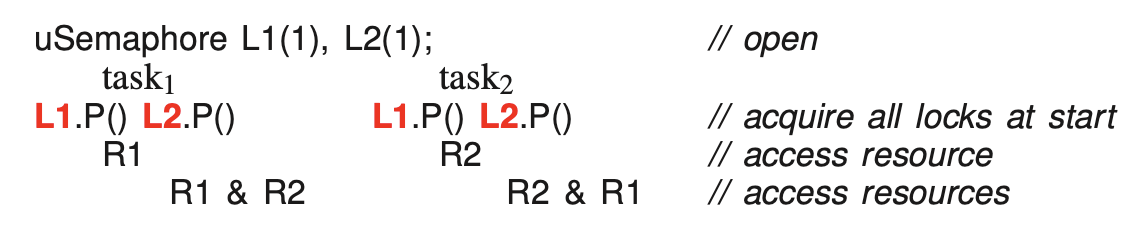

Simple example using semaphores:

7.3 Deadlock Prevention

Deadlock can be prevented by eliminating one of the 5 conditions:

- no mutual exclusion

- silly

- no hold & wait: do not give any resource, unless all resources can be given

- acquire both locks technically

- allow prevention

- no circular wait: by controlling order of resource allocations

- ordered acquiring the locks → so no cycle

- ordered resource policy

- useful in small embedded system, when there’s limited amount of resources that you can order

- prevent simultaneous occurence:

- Show previous 4 rules cannot occur simultaneously

Very high level overview of deadlock prevention!!

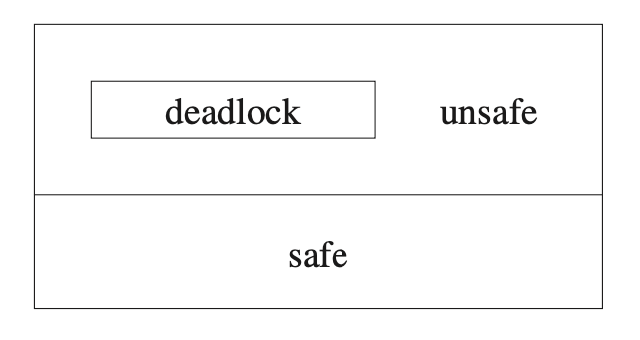

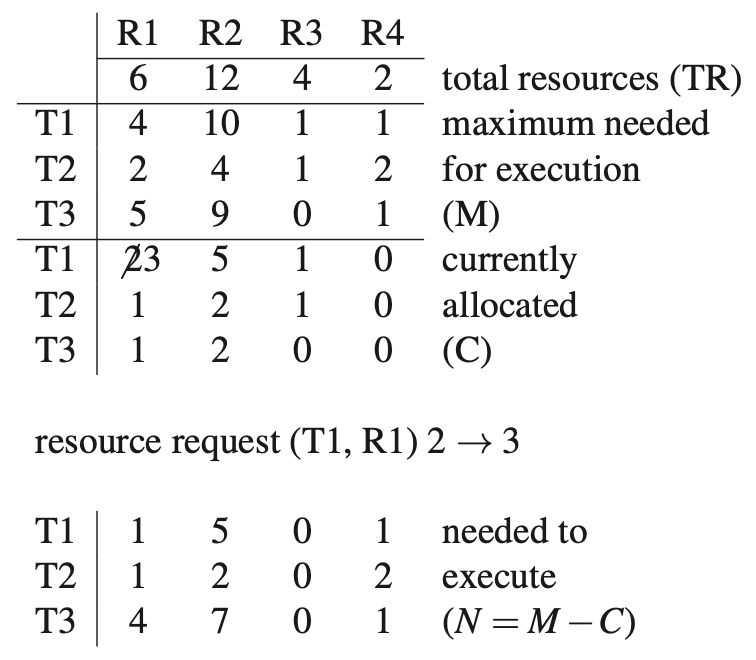

7.4 Deadlock Avoidance

- Monitor all lock blocking and resource allocation to detect any potential formation of deadlock.

- Achieve better resource utilization, but additional overhead to avoid deadlock

Banker’s Algorithm:

- Each Tasks have figured out worst case. which doesn’t make sense how?

- T1 is trying to get another R1, to update 2 to a 3. So we ask oracle and runs the banker’s algorithm to check if its safe or not →deadlocK?

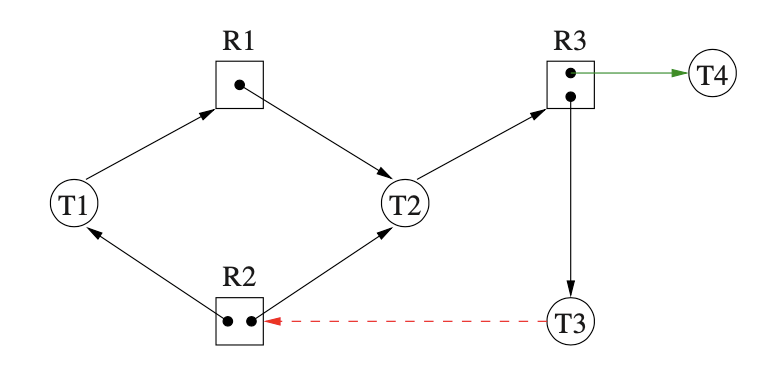

Allocation Graphs

- oracle maintains this graph of resources.

- task come and go in the graph

- oracle cause T3 to block, red line , then check for cycle

Use Rick’s algorithm: graph reduction to locate deadlocks

- pretend to run the task, by checking for only in arrows

Two algorithm that avoids deadlock!: Banker’s and Rick’s algorithm.

7.5 Detection and Recovery

- Let it happen (deadlock), then try to recover from it

- Build the graph every T seconds instead.

- Decision is hard to make, how do we prevent?

- We can restart a task: but it has already changed states.

Which Method To Chose?

Mac, windows do nothing!

Does go detects deadlock? Yes

Go to page 173 page:

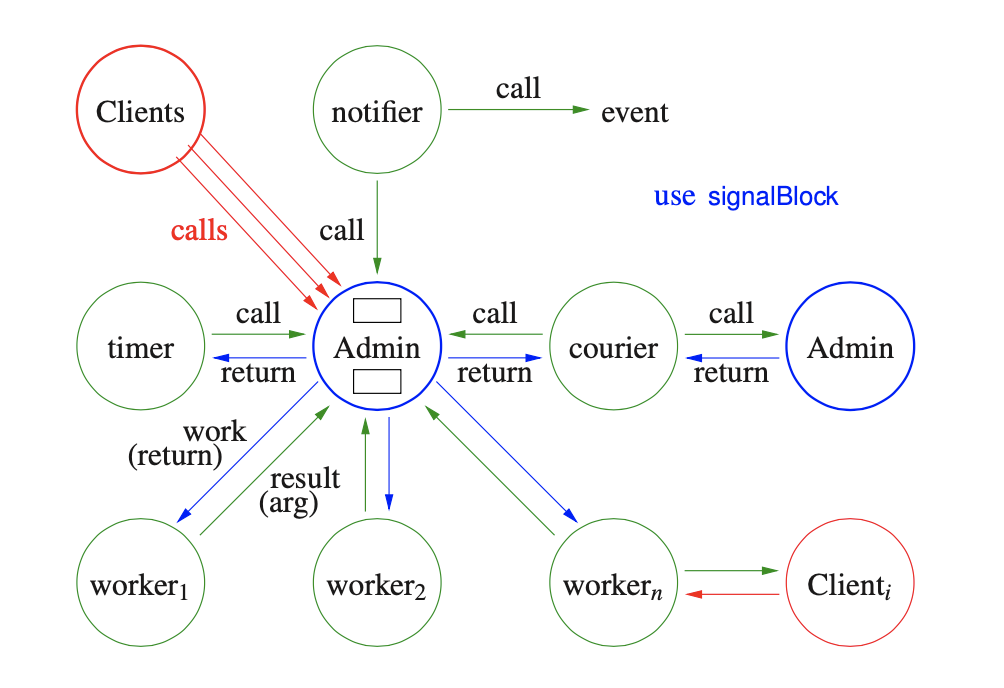

9.3 Increasing Concurrency

171: For voteTask

- move the work in the accept into the workers → Administrator

Administrator:

- something that doesn’t do the real work. does management

- accepts calls and returns values

- It never calls anybody

- Special kind of servers, never calls out, only accepts calls in

- A6

Thu Nov 21, 2024

- notifier worker: waits for a press down keyboard event. When it receives, it calls in to the administrator

- timer: interacts with administrator

- workers: calls in with result of last computation, and ask more work from administrator. if no work, it sits down on a bench in the admin

- Trick: feels like there is two calls → but administrator cannot make calls.

- worker calls in, no work, so it sits down and wait(). this call is still active (in the middle of a call) → will be woken up

- Return the results through the arguments, and work is returned

- Simple workers: only interacts with administrator

- Complex workers: can also interact with client and others potentially

- How can administrators communicate between each other?

- Hire a courrier

- Only interact between each other from a special worker called a courier.

- use signalBlock()!

A6: 4 admin, a pool of couriers (between watcard office and the bank) Bank is an admin. if no money, wait until parent wakes up to give student money. when you have enough money, courier comes back . finesse first worker call (since no argument with result)

Client Side:

- Make an asynchronous call from client to server, that way the caller doesn’t wait for call to complete. puts a buffer → producer will put something in the buffer, that way when the admin decides to accept the call and just gets the info from the buffer.

- not start/wait: we already have a running thread. need to connect to the server. The server thread never ends, keeps running.

- time between these two calls is the magic concurrency

- if result is not ready when second call is made

- caller blocks

- caller has to call again (poll)

Tickets: protocols:

- how to match client with the work it dropped off earlier?

- some kind of protocol to identify the client with the work

- think of dry cleaning

- second call to pull the result by passing the ticket 🎟️

Call-Back Routine:

- Don’t be unreasonable, server cannot stop for you

- Advantage to server: push the results to you

- Each client gets to write their own call-back → flexibility

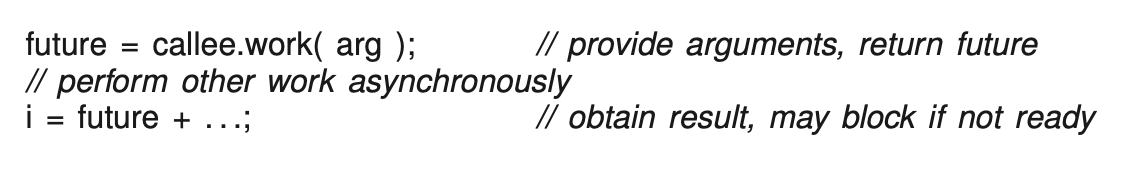

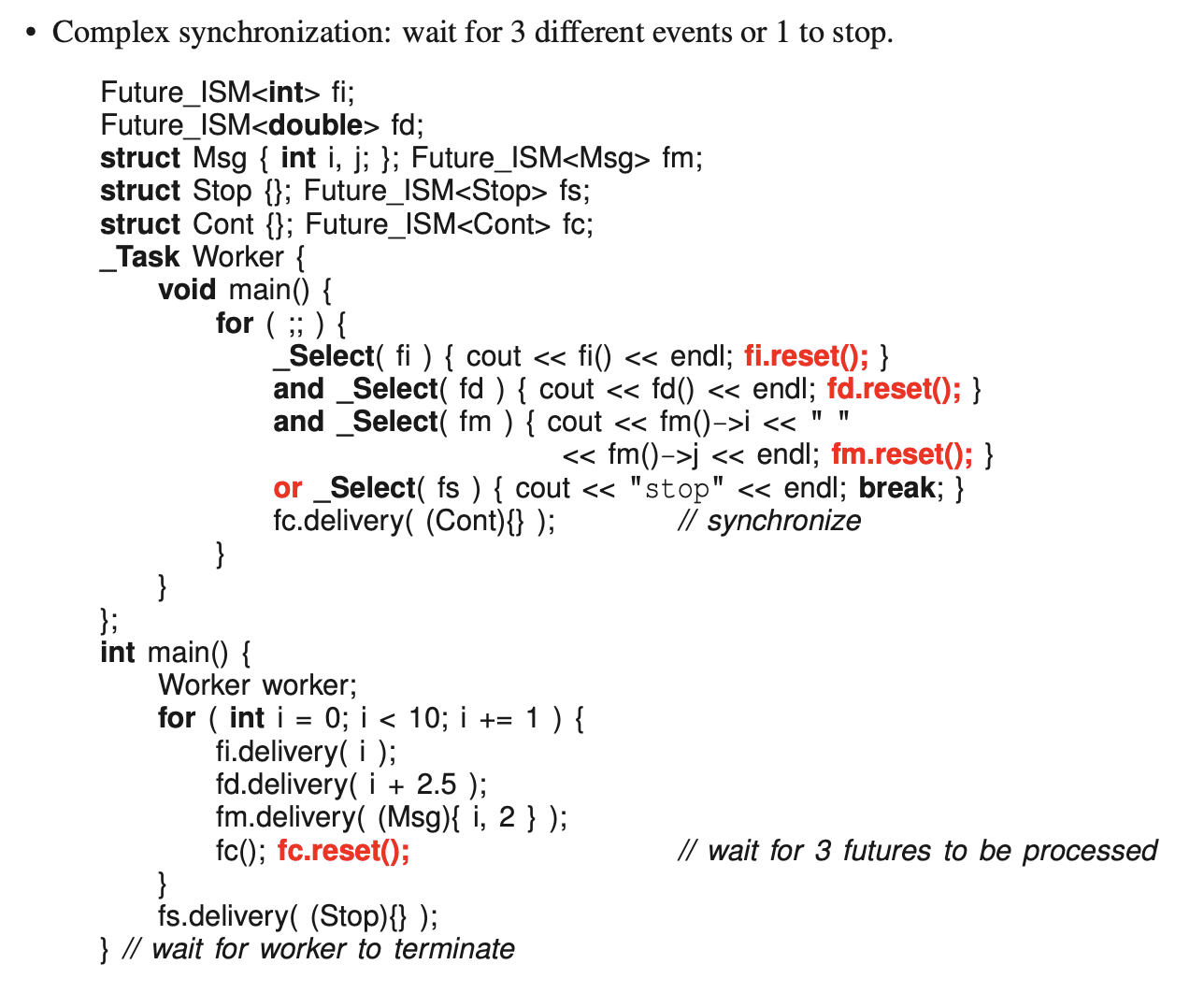

Futures:

- Combine two step protocol down to one

- the future is returned immediately and it is empty.

- caller “believes” the call completed and continues execution with an empty result value.

- future will eventually be filled in some future time

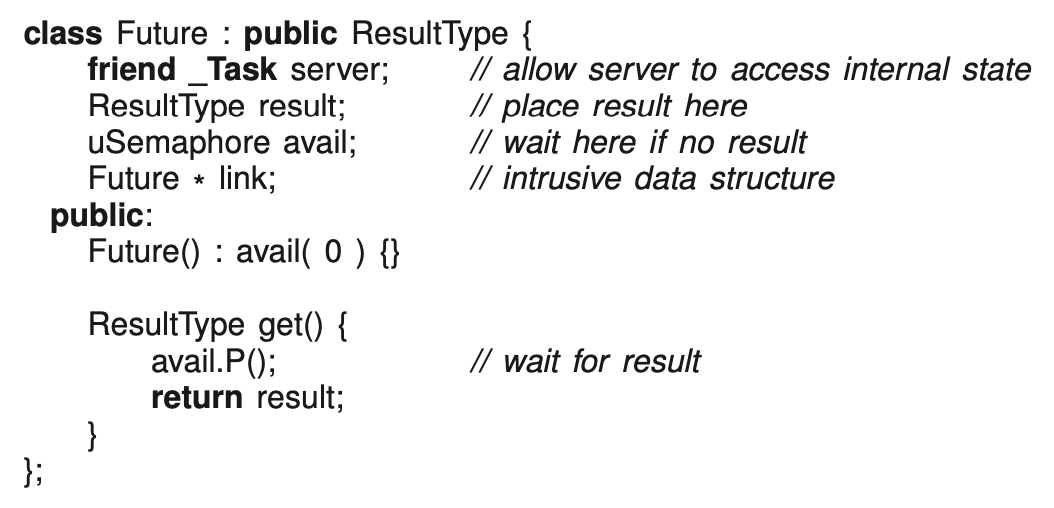

Sketch of a general future:

- link field: allows server (gives out futures), we need to find that future later on.

- one technique is have a chain of futures so we can scan the list and find it

- server creates the future, which clients get ahold of, so at the end, it’s the client’s job to delete it. transfer of ownership and deletion

- theory: future should be ok and don’t need to worry about it

Use

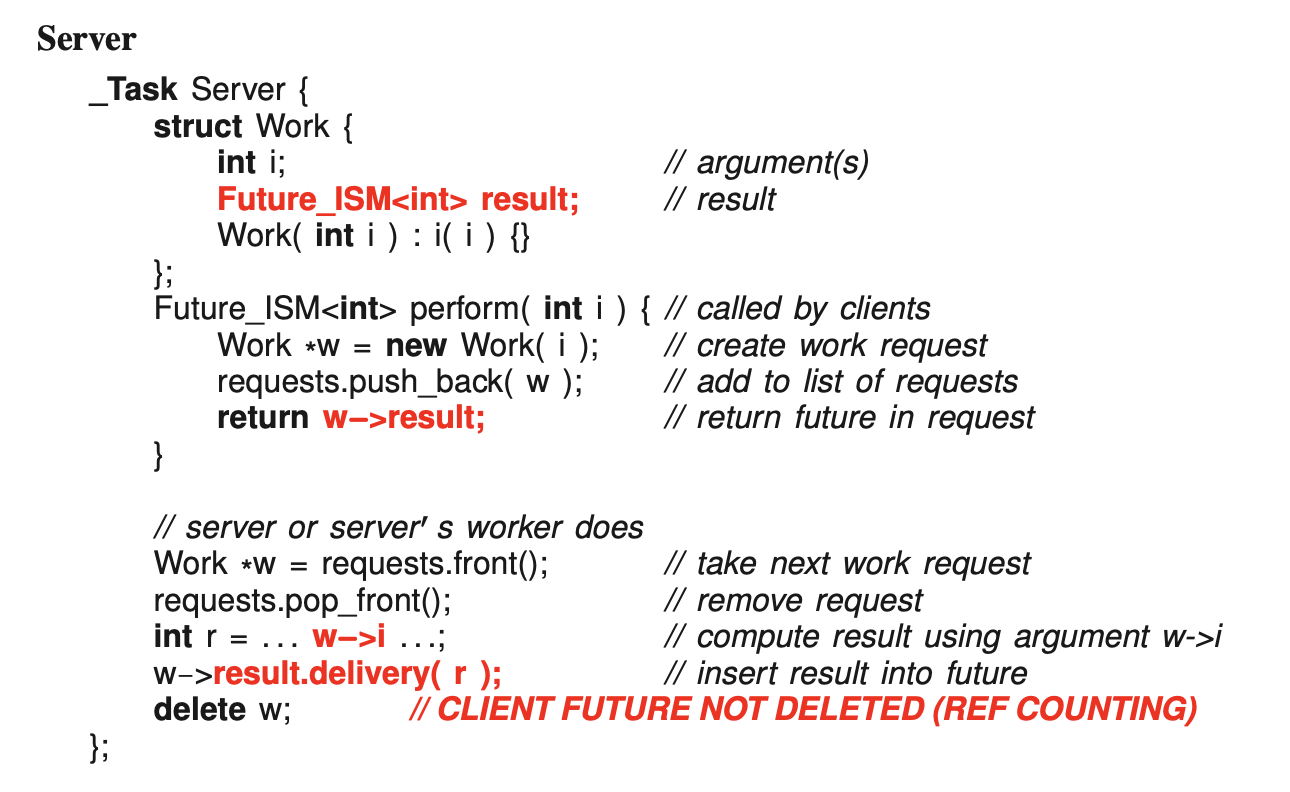

Future_ISM<T>!!!!

Client:

- create array of 10 future integers

- each one can hold an integer, but initially empty

- pass integer in and will compute some value : for the server → will eventually give me a result x10 → server has 10 workers doing this

- get the result:

f[i]()→ we either wait for result or get it right away- two calls? first call does the heavyweight. second call just gets it.

- Problem in c++: << operator has equal priority, so we don’t know which one will call first, which one does the heavy lifting.

f[3]=3: we want to change the future. BUT you cannot change it. because you can share the future with other threads, want to guarantee others can get the right value. Only read only, write once variable.- You can use

reset() cancel(): really weak. not safe dont use it- After the future result is retrieved, it can be retrieved again cheaply (no blocking

- acquire the lock on the stream

osacquire(cout), then we go to sleep with the lock waiting!! withf[i]() - you can have a future pointer! server will eventually give me back answer to the pointer. pointer points to the place where future returns the answer. no one can change this pointer. you can change the thing that it is pointing at

Server:

- Work unit: data passed, future we give back to client → both part of the call

f[i] = server.perform(i) - perform routine: create work unit and push onto a list (to remember). and send back the future. I have a copy and client also have a copy. They are reference counts. That is if someone deletes theirs, you still have a copy of it.

- server’s worker: take a work off the list, compute it, calls a delivery to stick it onto the future.

- either in server or worker, you need to decide

- You can stick an exception in the future!! must dynamically allocate a future!

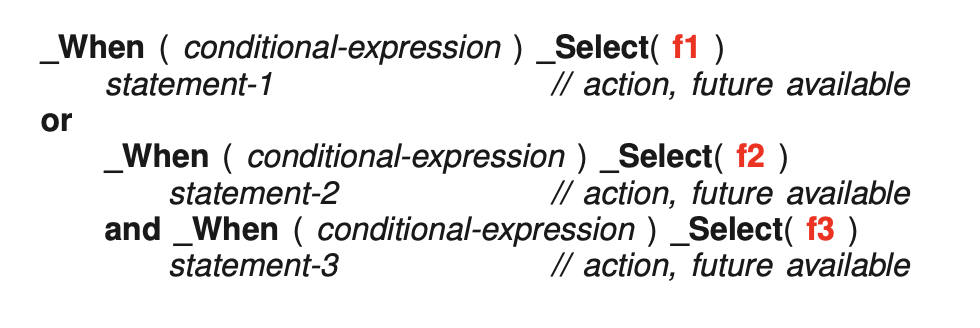

Complex Future Access (client side):

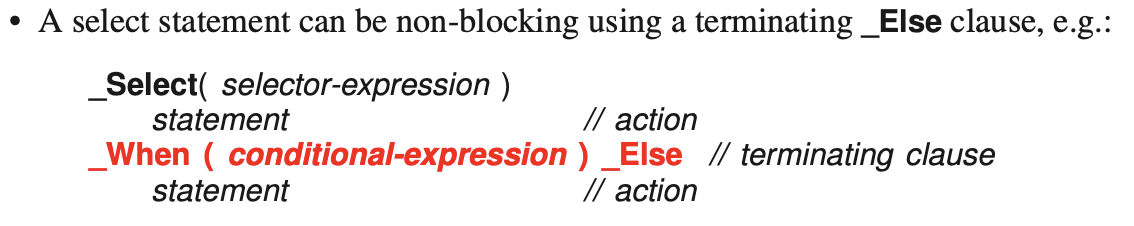

_Selectallows you to select a future and do the work_Select( f1 || f2 && f3 );

- What we want to do is pull it out to its long form:

- we can put statements after each one of them and put

_Whenclauses!

- we can put statements after each one of them and put

- f1 becomes available, executes statement-1 and can continue do other work after.

- f2 becomes available, executes statement-2, then blocks until f3 becomes available.

- f3 becomes available, executes statement-3, it executes statement-3 and continue

Tues Nov 26 2024

Pull futures into their long form so we can add statements afterwards!

Difference between future selector and accept?

Accept only have or, future can have or and and.

- In vendingMachine, you want the student to go to sleep

- Highly unlikely to have a program with futures like this: just an example

- Go have select but doesn’t work with futures but with channels (I think it’s what threads are called in go)

- He’s just showing off with the select statements. On assignment we only need to do tiny select statements an a bit of futures

10. Optimization

What is the -O flag for compilation?

Compiler tries to shove everything into one file. All the work we do gets thrown in the garbage. But we only do it for ourselves.

General forms of optimizations (-O):

- reordering: data and code are reordered to increase performance in certain contexts

- eliding: removal of unnecessary data, data accesses and computation (inlining code)

- replication: everything can bed duplicated

Need to be isomorphic → generate the same result every time!

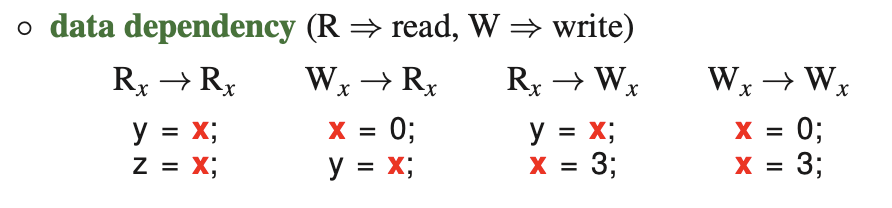

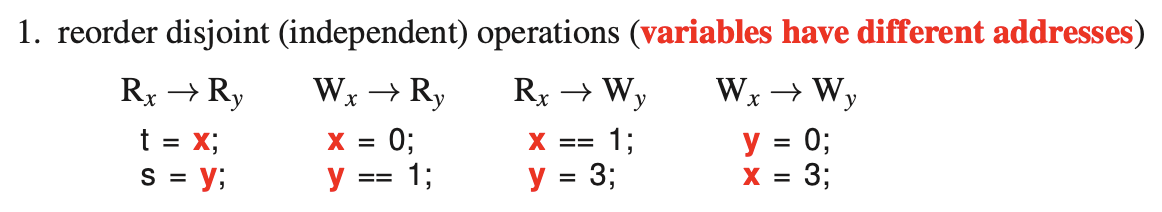

10.1 Sequential Optimizations

- Compiler asks if we can flip the two and it will get the same thing?

- First one yes, second one no, third one no, fourth one no

- Can flip all of them

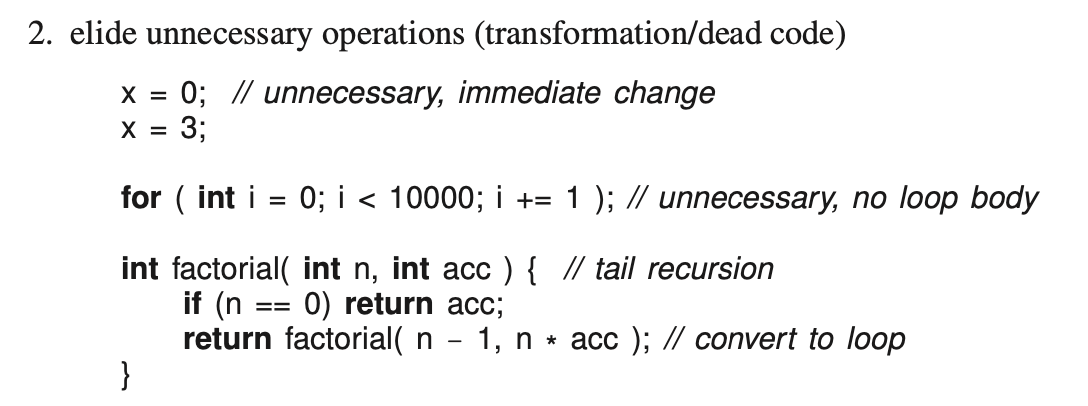

Elide code:

- compiler will get rid of the unnecessary loop with no body

- compiler will try to see if the function is tail recursive, and convert it to a loop? Got rid of recursion and replaced it to looping

We have a integer and floating computation unit. So they can be computed at the same time on the chip. Changing sequential to a micro parallel program, with small bits of parallelism.

Compiler will utilize the hardware’s environment to optimize your program.

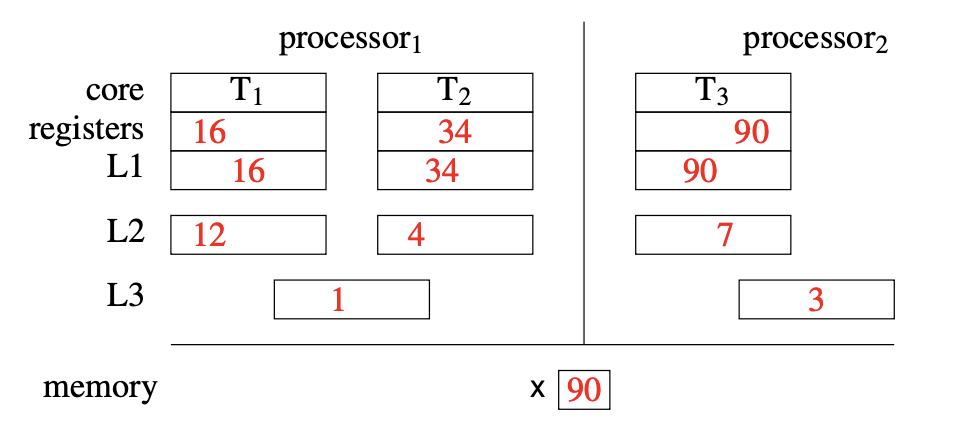

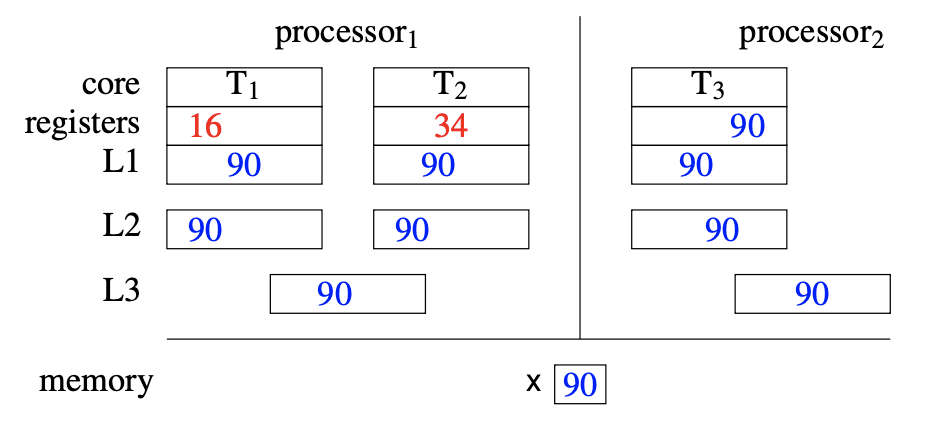

10.2 Memory Hierarchy

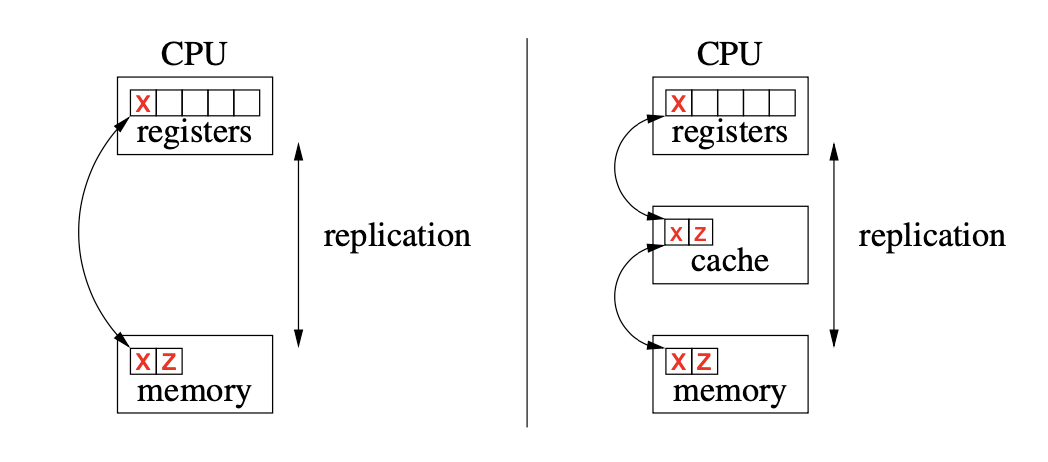

- Virtual memory → cache (what he’s talking about)

- Paging: from disk to memory

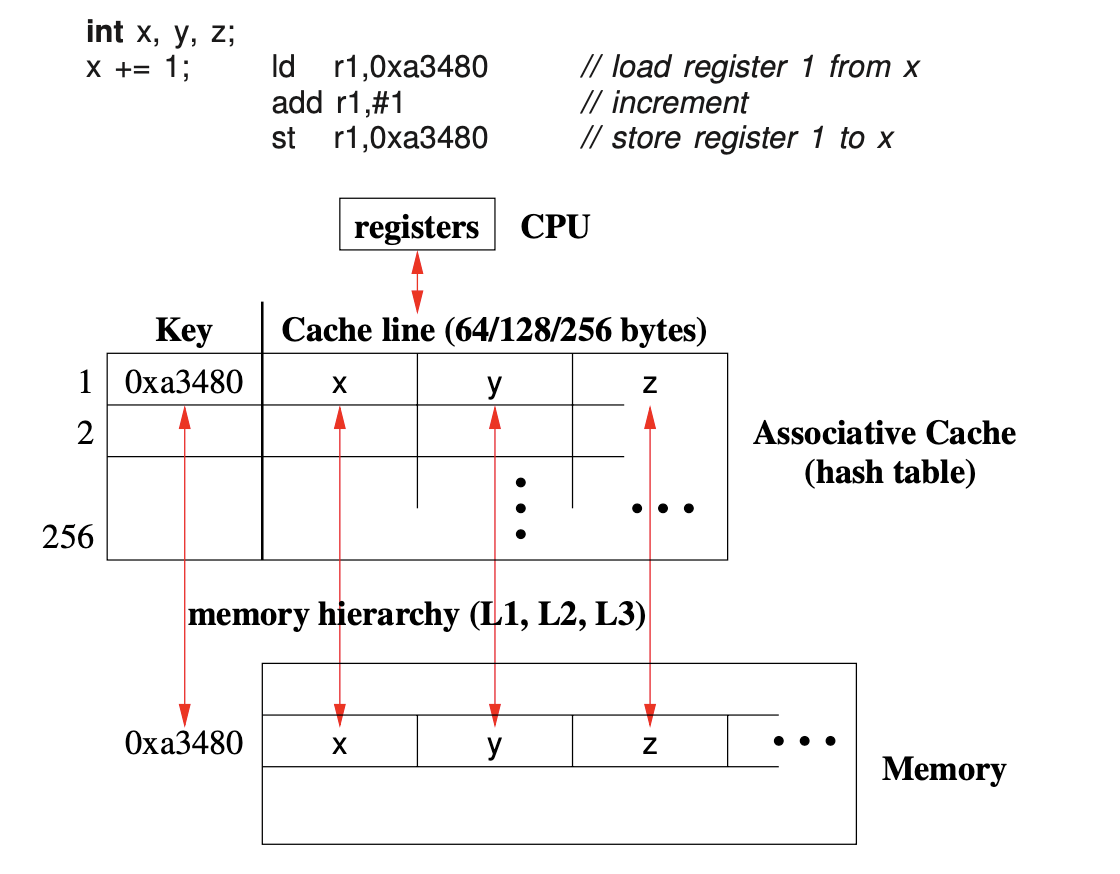

- Arithmetic can only be done in the registers

- 1000 times difference between disk and memory, and another 1000 times difference between memory and CPU

- once x is in the register, we lazily let → principle of locality. let it sit in the cache because maybe: lazily pull and eagerly push

Cache Review

- Solution: use hardware cache to stage data without pushing to memory

- apparently if you just print the x, y, z, the addressees are in reverse order

- pull x into cache, then load it to register (256 bytes are pulled in, just like paging, bringing more than you need, you bring in the whole page)

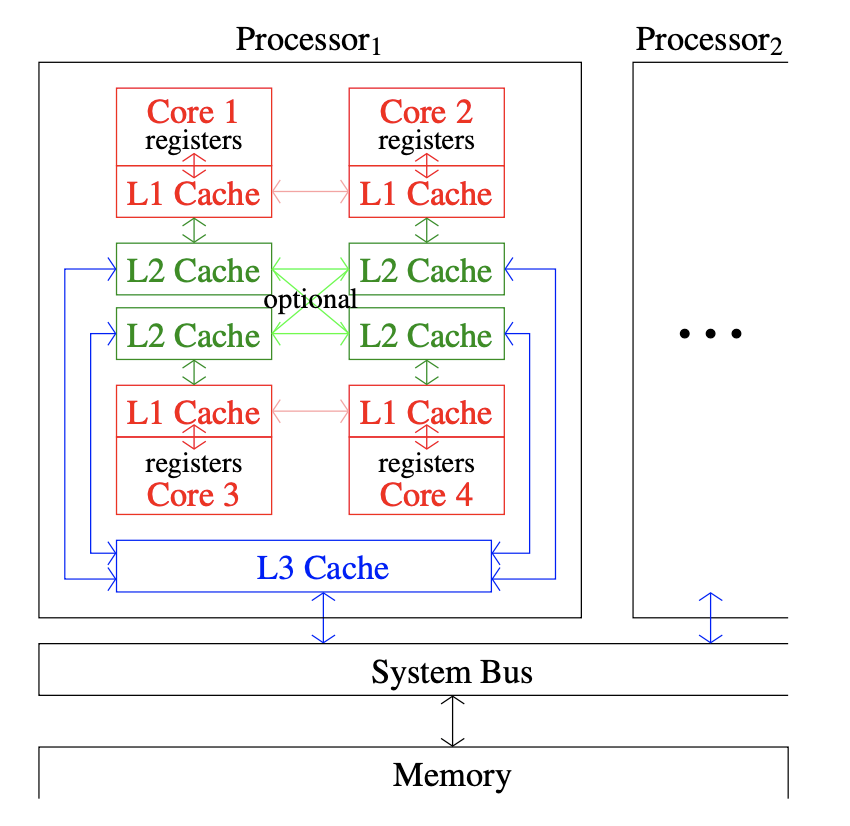

- socket

- each core has registers

- Registers are NOT shared, but cache/memory/disk are SHARED.

- Cache: instruction and data cache? might be split

- 3-4 levels of cache to get to registers

- ssd on your disk drive

- hierarchy just keeps getting bigger

- Stick structure for x for sequential program in memory

- madness, which one is the real x??

- Tree structure

- If T1 needs to push the value into L1 from register, it will flow thorugh all of them

- If we did this eagerly, we stop system, run protocol to update the cache. Does it work? no

- What actually happens is that the cache updates lazily

- We are going to see stale data

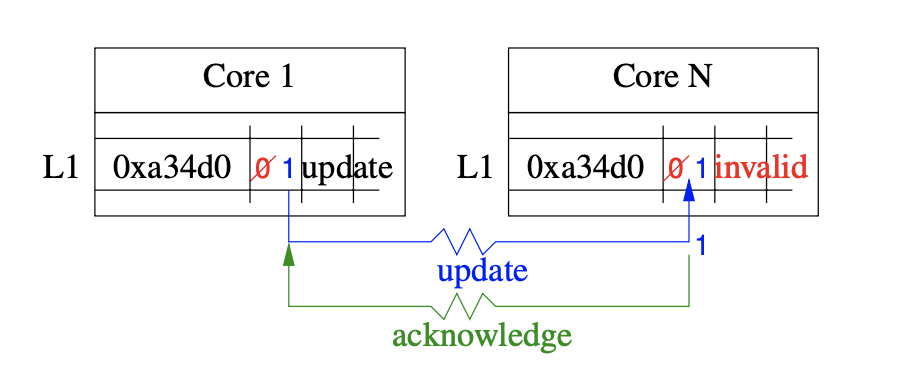

10.3 Concurrent Optimizations

Concurrent execution: weak memory ordering → can read stale data

Only reordering disjoint reads doesn’t cause any problems

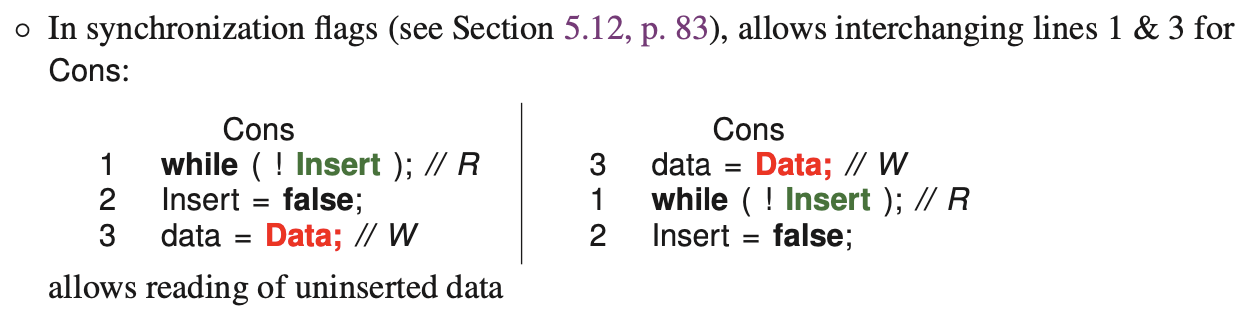

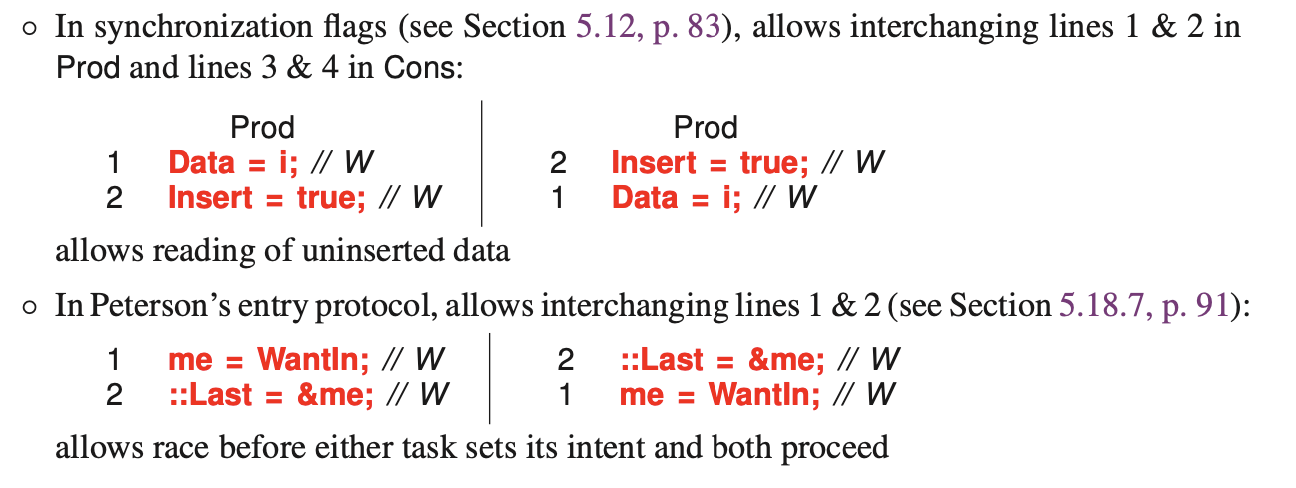

Problems:

- compiler is breaking concurrent program

- Compiler f it up

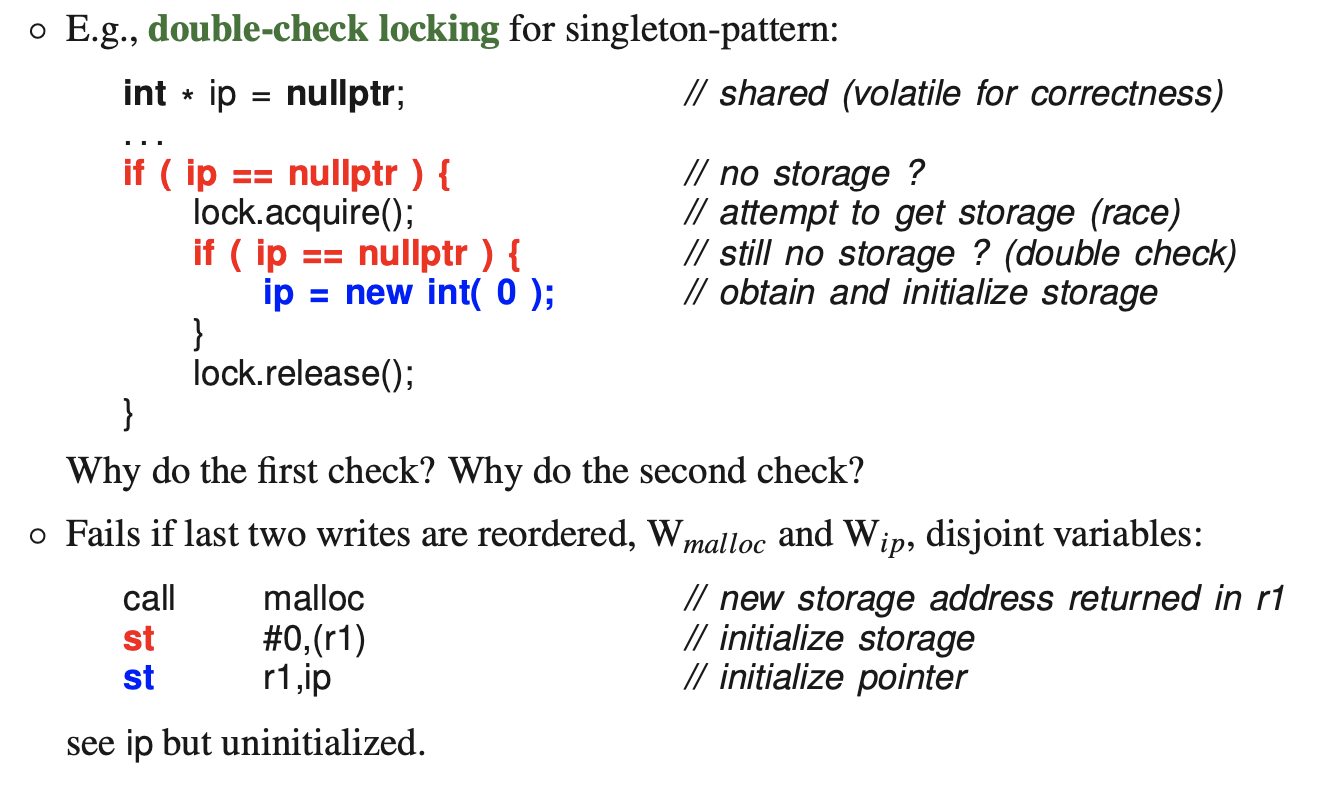

Example: double-check locking

- changing from sequential to concurrent version

- What can happen, you can have a race, enter and all see that the ip is null. Compete to the lock, one wins. Double check → if it has been done, before.

- BUT, the blue line is wrong

- But the compiler: sees two writes, and says that we can flip it.

Thu Nov 28, 2024

Eliding:

- Elide reads value by copying into register: load by replicating

Replication:

- Both compiler and hardware are moving things around

10.4 Memory Model

- Compiler knows what kind of machine your running on, and will make it work

- Don’t need to know the subtle differences (even Peter doesn’t remember)

- Our laptop is running TSO

- Arm macs have RC → harder to do concurrency

10.5 Preventing Optimization Problems

- Moving shared variables can cause race conditions!

- We are trying to write race free programs in this course

- Peter accepting defeat

- Two approaches

- add hoc

- formal

volatile→ need to store it back to memory- When to use volatile?

- If program doesn’t work and put in volatile and it works. Then your program needs volatile

- C/C++ vs. Java volatile

- They are different.

- Java volatile is stronger, it prevents disjoint reordering

- disadvantages: too conservative? assuming all programs are sequential consistency (SC)

Dekker for TSO: (doubt we need this)

- do green and red or blue and red.

- if you don’t do red, it still works, it won’t run as fast

11 Other Approaches

11.1 Atomic (Lock-Free) Data Structures

You can make struct, queues, basically data structures concurrent friendly (lock free).

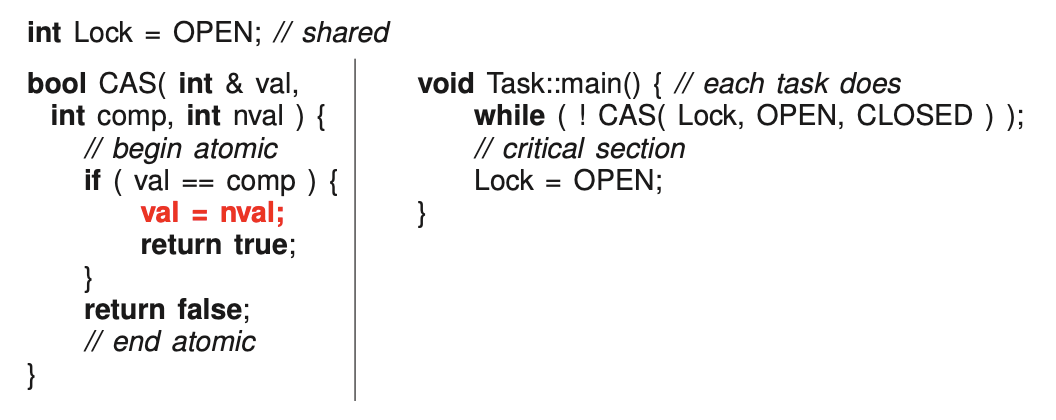

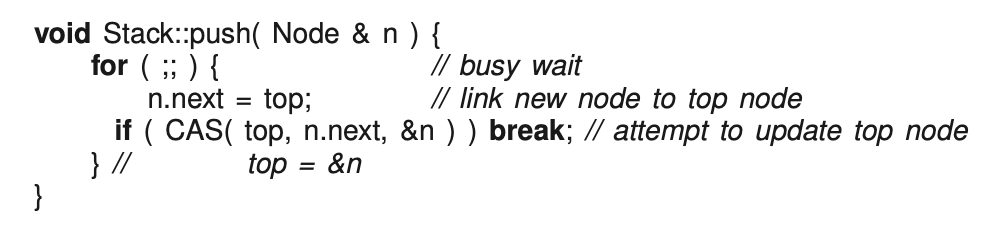

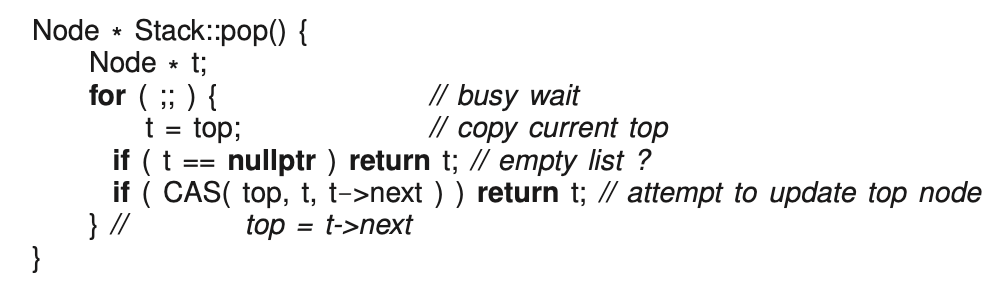

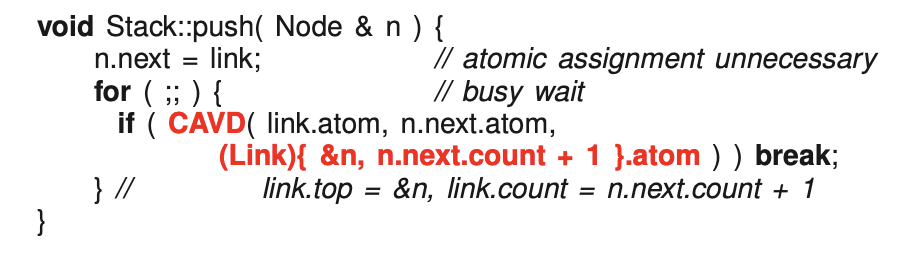

11.1.1 Compare and Set Instruction

CAS : compare-and-set does not prevent ABA problem.

- read, and sometimes you will write. conditional write: new atomic instruction

- we can use it (CAS) to build mutual exclusion

- if lock is open, set it to close

How to push a node onto the stack using CAS and pop:

- use copy to change value into

11.1.3 ABA Problem

An interview question!

Context-switch happened and B is gone/popped. Stack is corrupted!

- What is the name of the problem that occurs if only the CAS instruction is used to build a lock-free stack?

- ABA problem

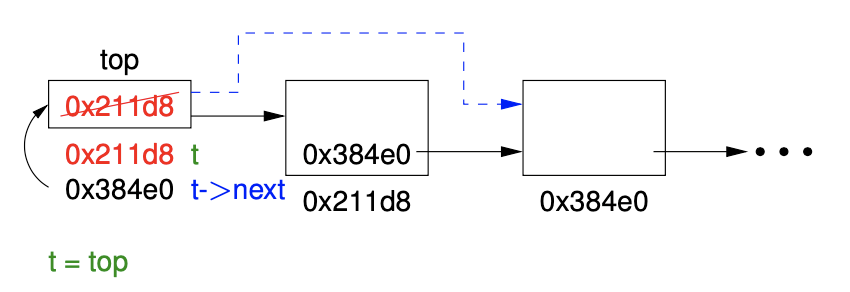

11.1.4 Hardware Fix

Put a ticket counter.

- counter with header node

- compiler trick so type matches

- We only count the pushes

- Atomically update the new top pointer and new ticket counter value.

- Pop doesn’t have anything special

- How does this help?

- we start with 3 on the stack, that is 3 pushes

- context-switch, since they pushed A back on to remove B, ticket value is not 3, it is 4 since it pushed! so it fails.

- what lock free people do, to ensure they don’t have ABA problem with their data structures

One other problem that can happen: we dereference pointer to ask about the next field. this dereference might already be corrupted. reaching out for a random storage. → Segment fault can happen

11.1.5 Hardware/Software Fix

Trevor brown → lock free data structure

Keep track of data structures that got removed. If we access pointers and check if it’s on the hazard list.

Building micro garbage collection data structure.

There are c++ lock free data structures.

Locks v.s lock-free

- lock-free has no ownership (hold-and-wait) no deadlock

- lock-free can only handle limited set of critical sections lock can protect arbitrarily complex critical section versus

- lock-free no panacea, performance unclear

- combine lock and lock free?

11.2 Exotic Atomic Instruction

VAX computer → used to be the best computer. Has a atomic instruction to insert and remove from a doubly linked list. Hardware people can help the lock-free people!

Load link, and Store conditional! → someone does the work for you and watches the memory

If we go back to ABA problem. It fixes it.

In theory, we want multiple watchpoints to avoid ABA problem.

Intel came up with Software Transactional Memory → really slow no one uses it.

Tue Dec 3, 2024

STM → hard to do it: no one is working in this area (according to peter)

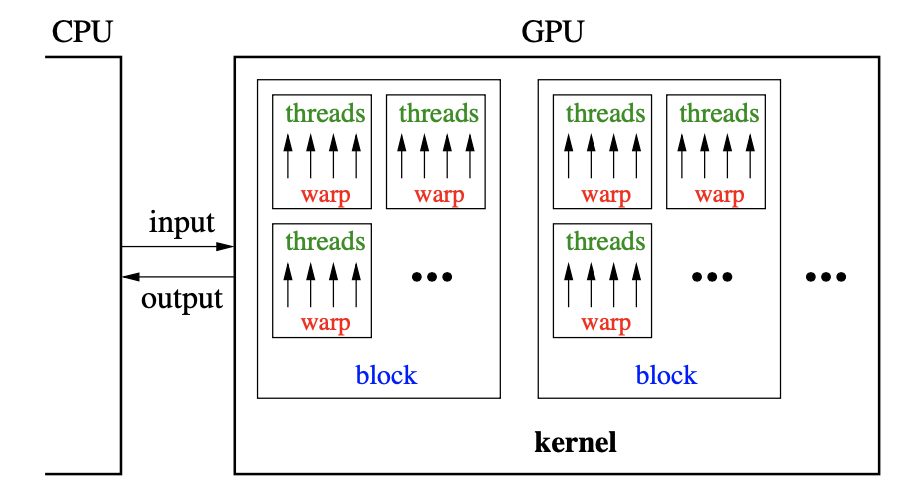

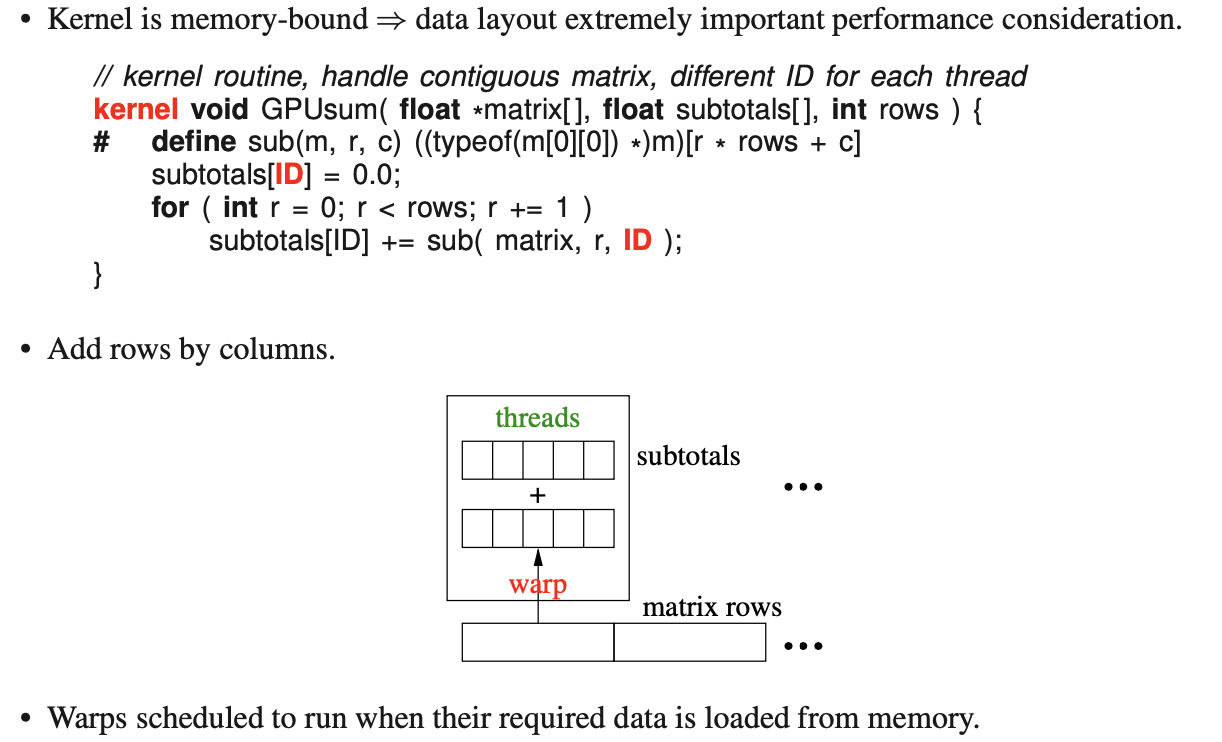

11.3 General-Purpose GPU (GPGPU)

Coprocessor → sits beside the CPU, connected by a bus. Very fast communication, high bandwidth connection

SIMD (GPU): everyone has to execute the same.

- no cache, but a lot of registers.

- threads, warp, kernel, etc they all have their own memory

- we have to figure out the memory

- compiler people use vector-processing to do faster memcopy for instance.

- nobody is using the vector-processing unit that is sitting in our laptop

11.4 Concurrency Languages

Ada

- protected type in ada is the monitor

- cannot use ada’s waituntil to code up the dating service, since ada forces the waituntil at the beginning

Ada came up with the requeue statement, changing internal scheduling to external scheduling.

The problem: need to pick up all the computations if it is to be put on another accepted statement.

In contrast, waiting on a condition variable automatically saves the execution location and any partially computed state Coroutine

11.4.2 SR/Concurrent C++

Example of people trying to get rid of external scheduling.

Not allowed to use

introduced by clause → calculates for each true when clause and selects the minimum

11.4.3 Java

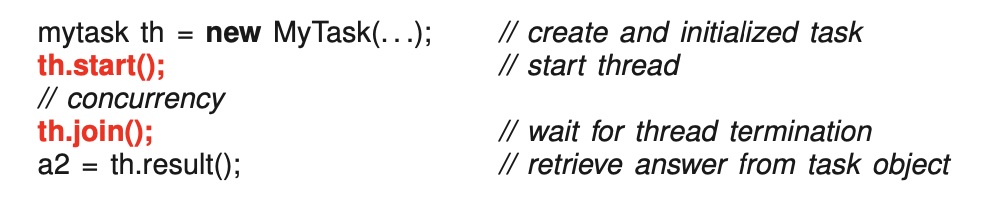

Need to call the start() routine. If you don’t call start(), it’s an object, not a Thread yet.

In microC++, the curly brackets do the join, then deletes it. But not in Java.

Java program:

- In java: any class object has to be declared on the heap

- Like μC++, when the task’s thread terminates, it becomes an object, hence allowing the call to result to retrieve a result.

- see section 8.11 p.161 for monitors

- Java is using heavy kernel threads

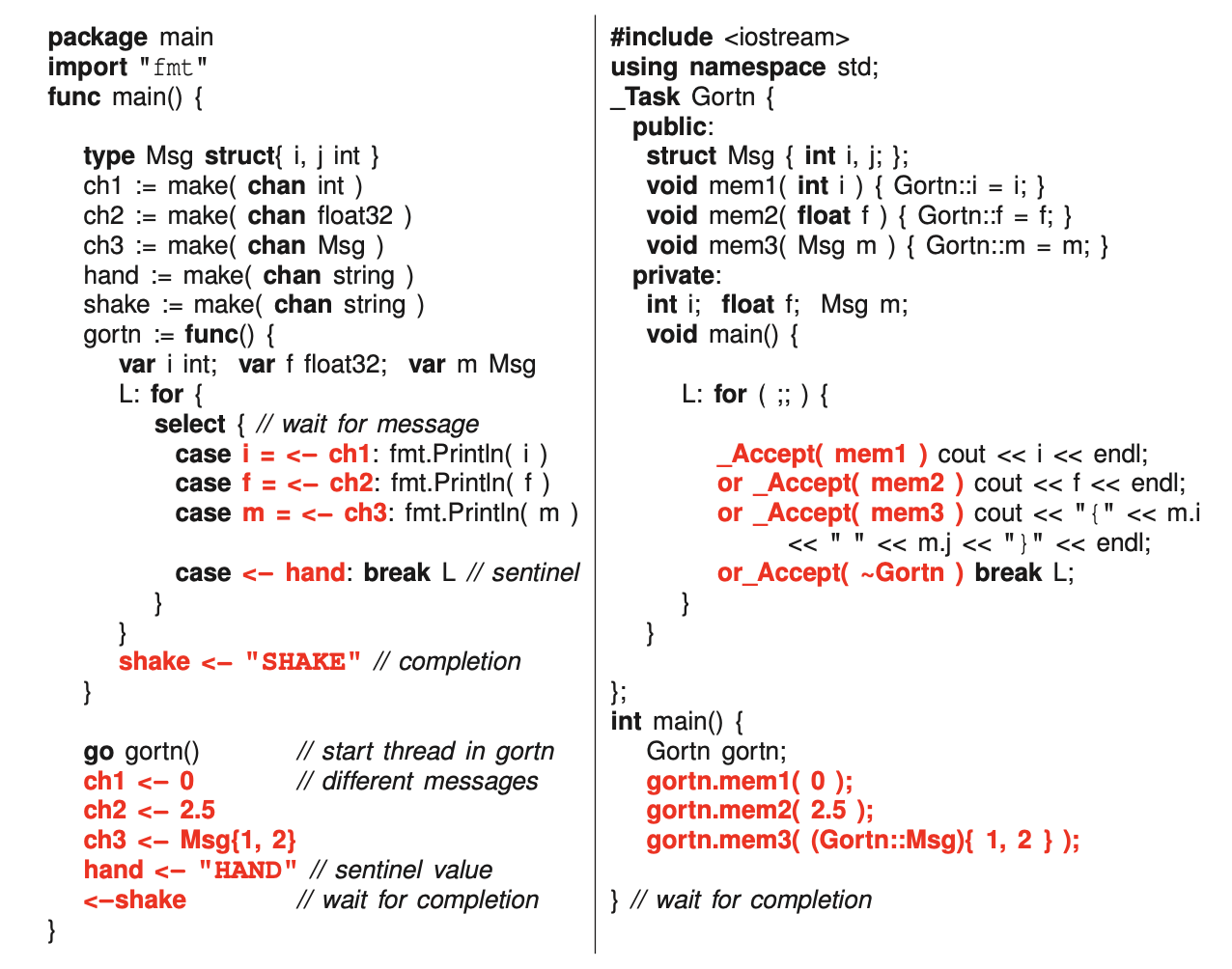

11.4.4 Go

MTV sanfran

C forall: Go on steroids, but backwards compatible with go

- Non-object-oriented, light-weight (like microC++)

- light-weight threads runs on top of kernel

go foo(3,f)// start thread in routine foo- go routines are tasks

- go is just like a start in function foo

- no address given, we don’t know the name of the thread, we just start them

- no way to get a return value

- no direct communication!

- instantly terminates… we need to wait for all the threads to finish, not necessarily easy to do that.

- threads communicate through channel

- peter don’t like channel: you have a paradigm shift. but 90% time you do call/return, one paradigm call/return and works nicely.

- channel are just a bounded buffer

- operator ← send/receive

- Go vs. microC++

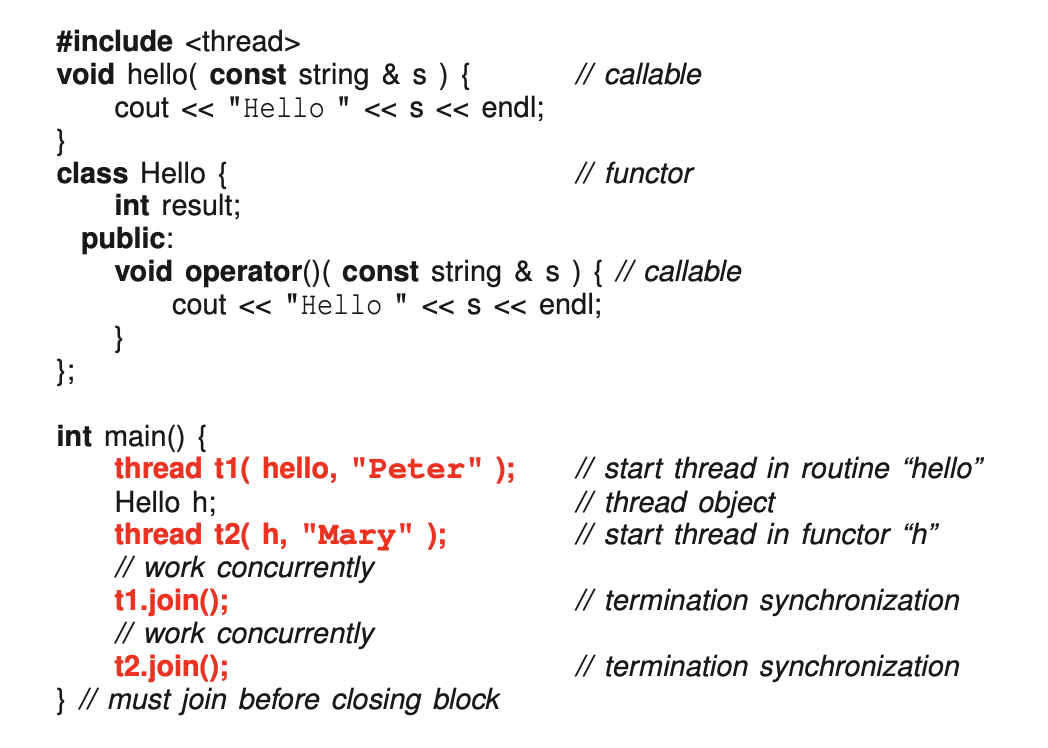

11.4.5 C++11 Concurrency

- 2 way of start threads: callable and functor

- main: C++ doesn’t do implicit join with the curly brackets like how microC++ does. you need to explicitly call join.

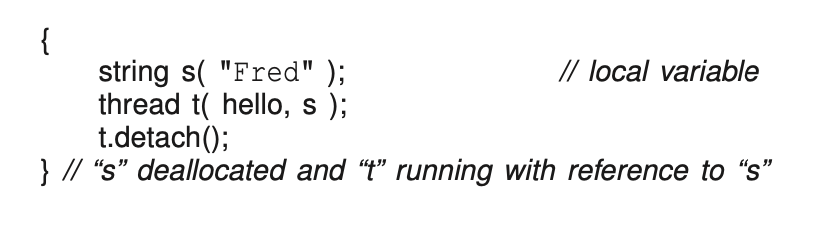

- every threads need to have join: you can have a hidden parent that does all the join or something

- thread keeps on running, but storage s is gone, you’ll have problems if the thread try to reach that memory

Locks:

- have mutex, condition, but has barging, etc.

11.5 Threads & Locks Libraries

java.util.concurrent: Doug came up with this famous library

He built actors without the message passing: Executor/Future (actors with futures, instead of with message)

11.5.2 Pthreads

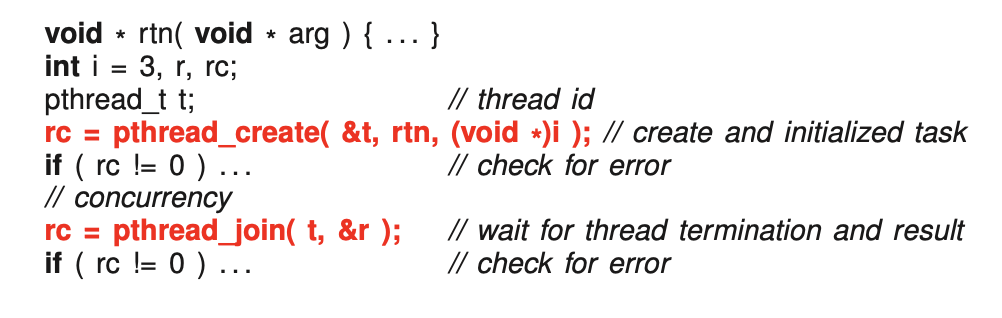

Created for C.

- type unsafe

- no external scheduling, only internal scheduling that has barging and spurious wake up probably

Insert function example in notes

- we need while because of barging and spurious wake up

11.6 OpenMP

#pragma omp: BEGIN … END

#pragma mp parallel for: COFOR

also has barrier: …

The End.