Final Study CS343

Fall 22 Final

1.

- a) When creating a cyclic barrier, an extra Coordinator task is often created. What is the advantage of the Coordinator?

- The Coordinator can accumulate results (subtotals) while Workers are reinitialized.

- b) In a barrier lock, explain the purpose of members

blockandlastblock: member blocks each task at block until all task has arrived and calledblock. And last task that calls block does not block and releases other tasks (cooperation). They leave together (synchronization).last: Last is implicitly called by the Nth thread that triggers the barrier release.

- c) Explain how a semaphore is used to create the synchronization pattern, i.e., do S1 before S2. Use words not code.

- Semaphore is initialized to 0: means that any thread attempting to perform a “P” (wait operation → acquire) on this semaphore will block until another thread increments it by performing a “V”.

- Thread executing S1: after completing the CS or action S1, this thread performs a V operation on the semaphore. The V increments the semaphore’s value from 0 to 1, signalling that S1 has been completed.

- Thread executing S2: Before starting S2, this thread performs a “P” operation on the semaphore. Since semaphore is initialized to 0, it must wait (block) until another thread signals it by performing the “V” operation. Once “V” occurs (after S1), the semaphore is incremented to 1, and the waiting thread is unblocked, allowing S2 to execute.

- d) Explain the difference between a simple and complex critical section?

- A simple critical section has only one thread in it

- A complex critical section can have multiple threads in it

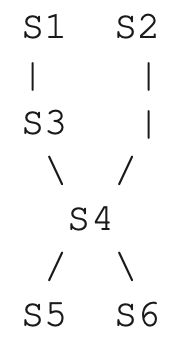

- e) Given the following precedence graph

construct an optimal solution, i.e., minimal threads and locks, using COBEGIN and COEND in conjunction with binary semaphores using P and V to achieve the precedence graph. Use BEGIN and END to make several statements into a single statement and show the initial value (0/1) for all semaphores. Name your semaphores , e.g., to simplify marking.

construct an optimal solution, i.e., minimal threads and locks, using COBEGIN and COEND in conjunction with binary semaphores using P and V to achieve the precedence graph. Use BEGIN and END to make several statements into a single statement and show the initial value (0/1) for all semaphores. Name your semaphores , e.g., to simplify marking.

COBEGIN

BEGIN S1; S3; END

S2;

COEND

S4;

COBEGIN

S5;

S6;

COEND- f) Is staleness/freshness a form of barging?

- Yes → temporal barging with staleness/freshness

- g) Explain the purpose of a shadow queue with respects to locks.

- The shadow queues is used to retain the kind of waiting task on semaphores/lock

- h) With respect to the readers/writer problem, there are two kinds of temporal reordering that can lead to incorrect behaviour. Describe these two reorderings.

- Barging between reader/writer threads and

- barging among the writer threads. (Look into it, where in the notes does it say?)

2.

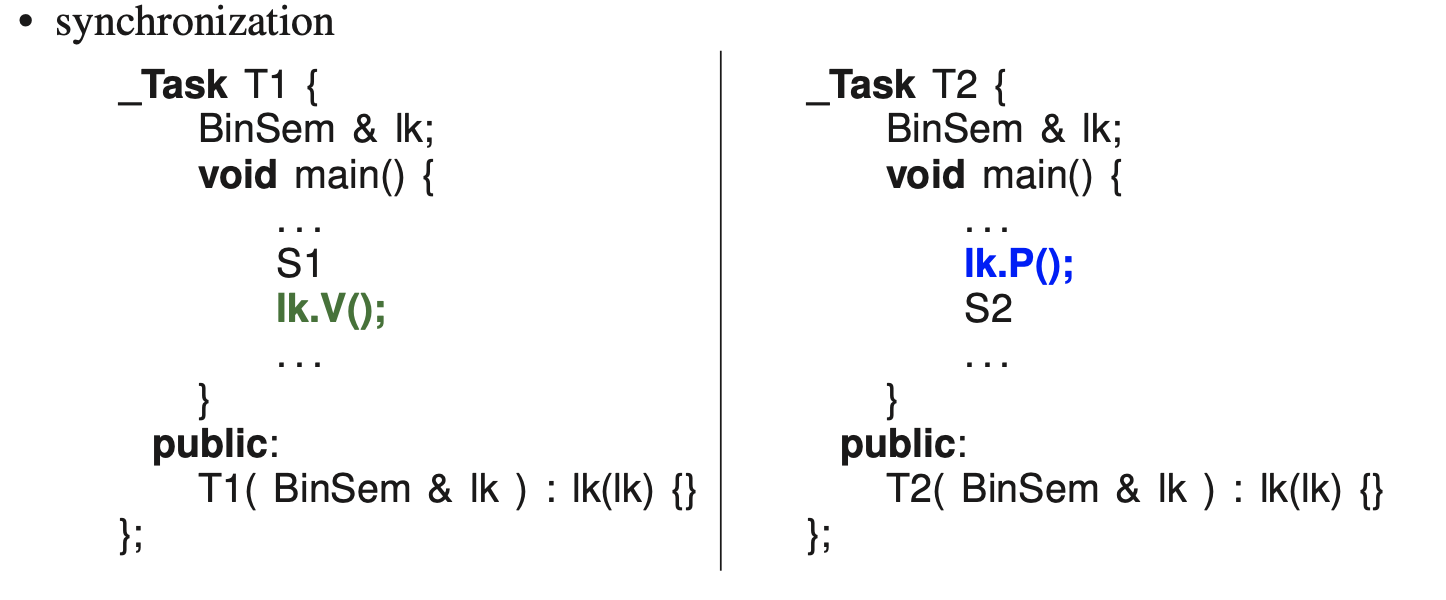

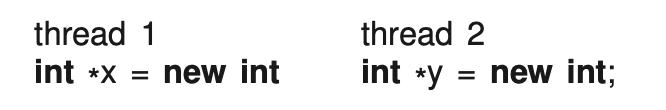

- a) What two aspects of concurrency may be missing when there is a race condition?

- synchronization

- mutual exclusion

- Page 137. 7 Concurrent Errors

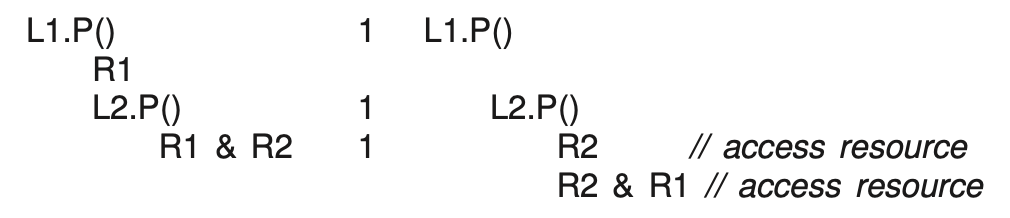

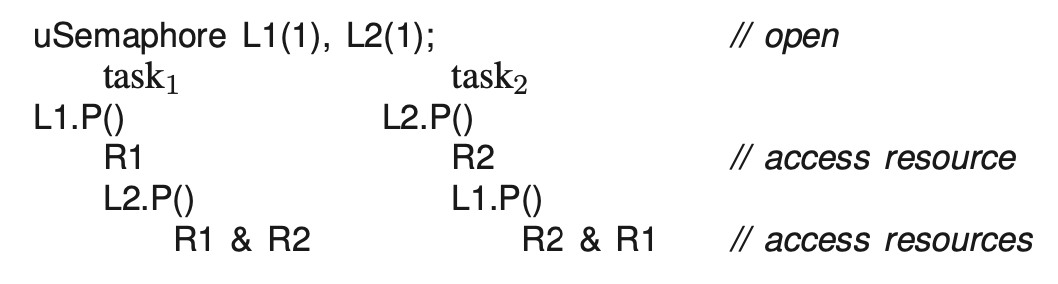

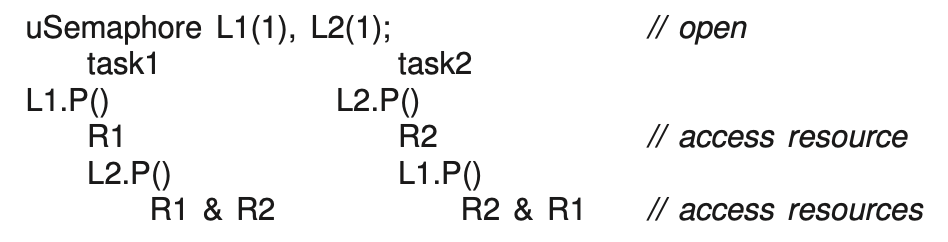

- b) 6 marks Restructure the following code in two different ways so deadlock is prevented.

Look at notes p.140 of the actual pdf

Version 1 - Acquire all required resources at once: idea is that each task acquires all necessary resources before starting to work on any of them. If a task cannot acquire all the required locks, it releases the lock it has already acquired and retries

uSemaphore L1(1), L2(1);

// v1

Task_1

L1.P() L2.P()

R1

R1 & R2

Task_2

L1.P() L2.P()

R2

R2 & R1Version 2 - Enforce a Lock Acquisition Order: tasks acquire locks in a consistent, predefined order (e.g., always acquire L1 first then L2). This removes the circular wait condition, as there is no scenario where one task holds L2 and wait for L1, while another task holds L1 and waits for L2.

uSemaphore L1(1), L2(1);

// v2

Task_1

L1.P()

R1

L2.P()

R1 & R2

Task_2

L1.P()

L2.P()

R2

R2 & R1So Task_1 acquires L1 first by calling L1.P(), then acquires L2 by calling L2.P(). It access R1 and R2 in this order. Task_2 also acquires L1 first by calling L1.P(), then L2.P(). Accesses R2 and R1 in this order.

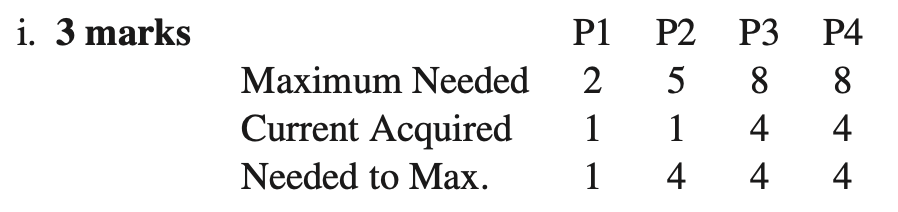

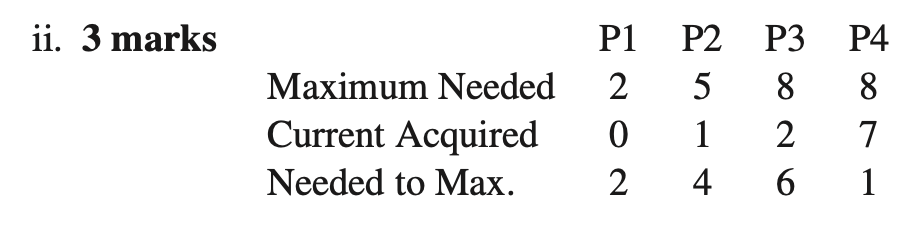

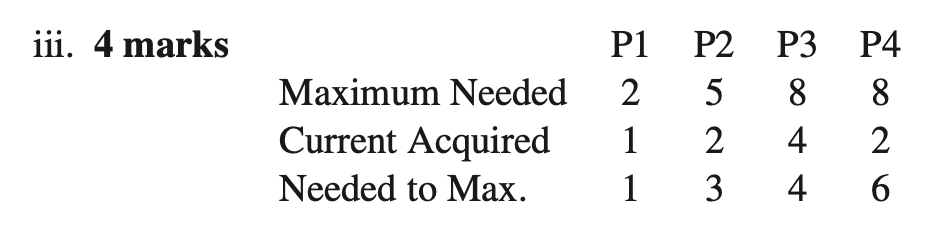

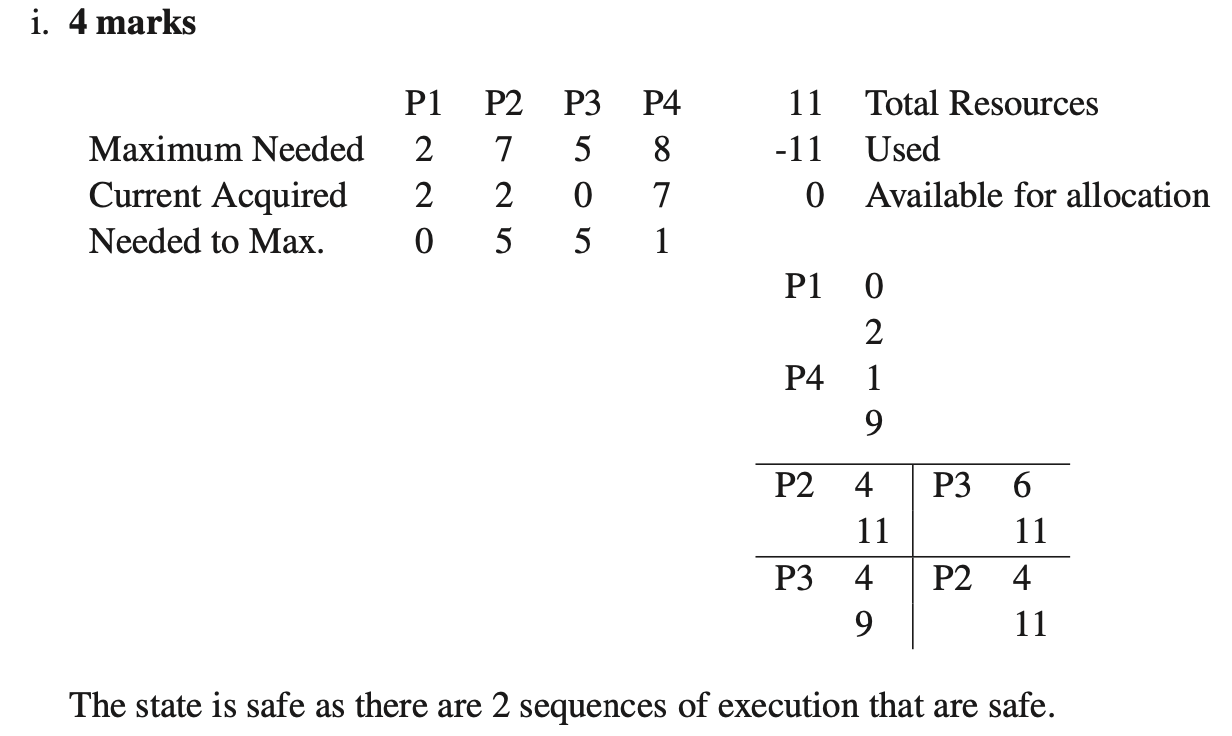

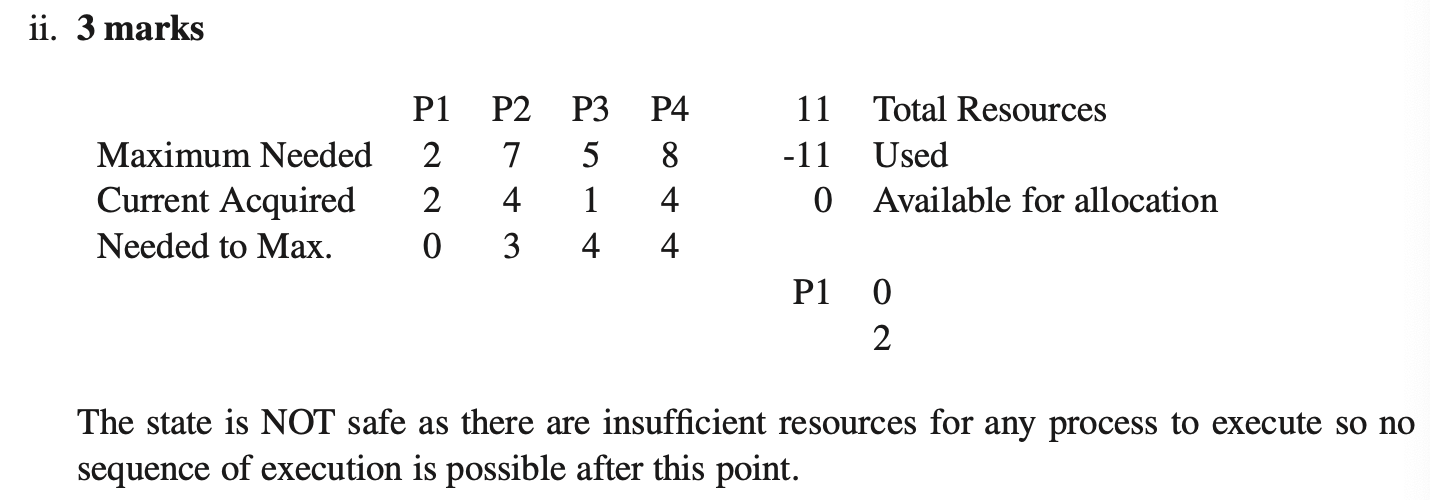

- c) Consider a system in which there is a single resource with 11 identical units. The system uses the banker’s algorithm to avoid deadlock (Deadlock avoidance). Suppose there are four processes with maximum resource requirements of 2, 5, 8, and 8, respectively. A system state is denoted by , where is the number of resource units held by . Which of the following states are safe? Justify your answers with the steps of the banker’s algorithm and a concluding statement.

- NOT safe

- Safe!

Don't know why this is safe...

Update: know how to do them now.

- Safe

3.

-

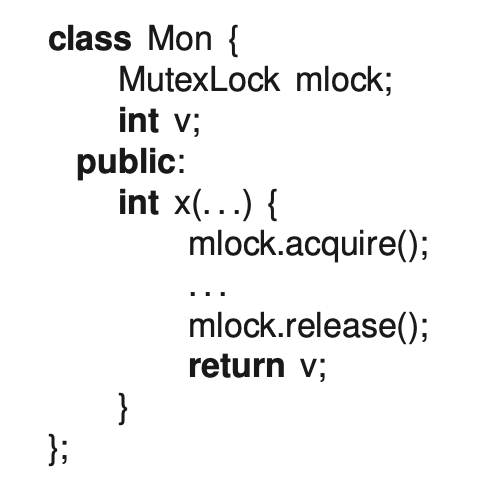

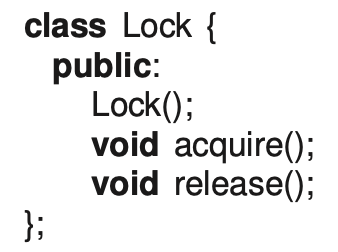

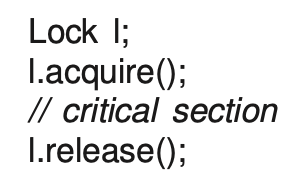

a) The following monitor simulation using a mutex lock has a problem returning

v. Explain the problem and how to fix it. Use words not code.

- A thread can be preempted between the lock release and returning

v, allowingvto change before the preemption continues with the wrongv. Copyvto a local temporary (i assume in the critical section), release the lock, return the temporary.

- A thread can be preempted between the lock release and returning

-

b) What is unusual about signalling a condition in a monitor?

- current thread needs to finish, leave the monitor so that the signalled condition thread that it unblocks can proceed???

- Correct answer: The signal is delayed because the signaller holds the monitor lock.

- The waiting thread (which was blocked, waiting for the condition) cannot resume execution right away, even though it has been signaled. This is because the signaled thread needs to acquire the monitor lock to proceed, but the lock is still held by the signaling thread.

- The signaling thread must finish its execution inside the monitor and release the lock. Only then can the signaled thread acquire the lock and proceed.

- This delayed signaling is counterintuitive because one might expect that signaling immediately transfers control to the waiting thread. Instead, the signal merely “wakes up” the waiting thread, which then waits to acquire the monitor lock before proceeding.

-

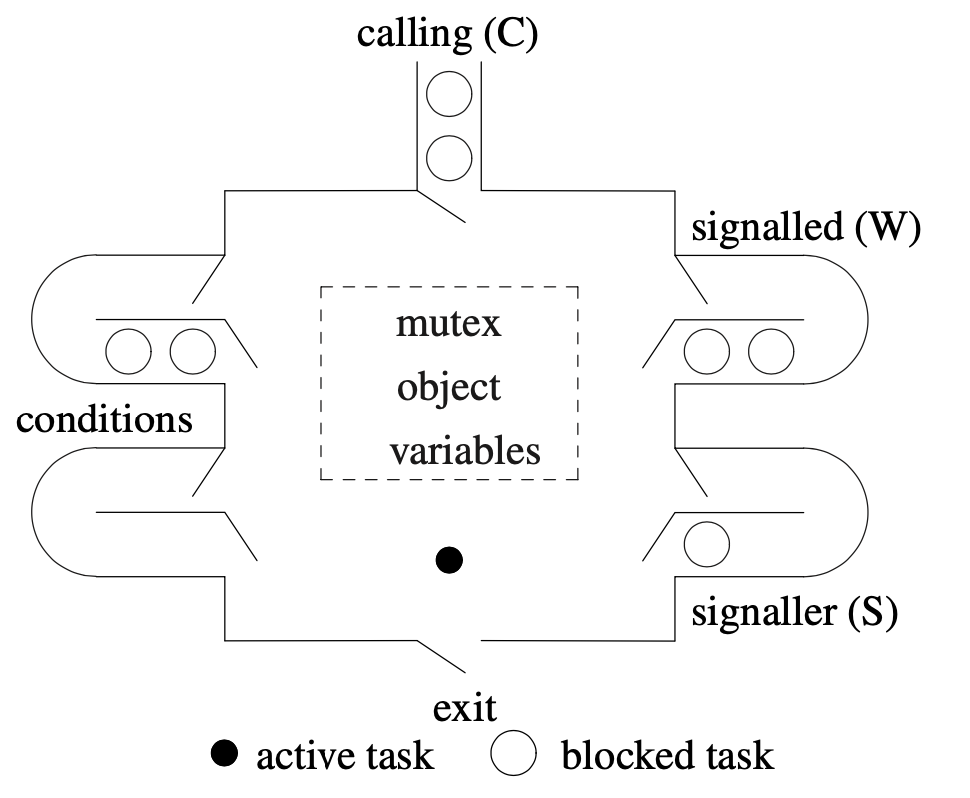

c) Explain the difference between

signalandsignalBlockon a monitor condition variable.- signal: the signalled thread does not execute immediately, the signaller thread still runs until it exits the monitor, then the signalled thread runs.

- signalBlock: the signalled thread executes, and the signaller thread sits down

- From answer: For signal, the signalled thread is postponed and signaller thread continues. For signalBlock the signaller thread is postponed and signalled thread continues.

-

d) Does _Accept behave like a

signalorsignalBlock?signalBlock

-

e) Give two situations where a monitor uses a

_Nomutexpublic member- When it wants to have a monitor inside of a monitor? WRONG

- Answer:

- read-only member to access data

- hen a member of the monitor is read-only and does not affect the state of the shared resource in a way that could cause inconsistency or data corruption, the

_Nomutexattribute can be used. This allows multiple threads to access the data concurrently without requiring mutex locking, which can improve performance when contention for read-access is high.

- hen a member of the monitor is read-only and does not affect the state of the shared resource in a way that could cause inconsistency or data corruption, the

- combining multi-step protocol into a single call that still requires a complex critical-section

- read-only member to access data

-

f) Explain the transformation from this general monitor into the specific μC++ monitor.

- Answer: The signaller queue is optimized away because the signaller has the highest priority so it does not need to block.

The signalled queue is changed to a stack needed for_Accept. Find the page number that explains this shit (p.159)? Where it says C < W < S or C = W < S. (Found: they also talk about this p.447-448, acceptor/signalled stack LIFO order and to avoid starvation or staleness that the general monitor uses (no temporal ordering))

- Answer: The signaller queue is optimized away because the signaller has the highest priority so it does not need to block.

4.

- a) Explain two capabilities added with the long form of

_Accept/Selectstatements.- Don’t need a million of if statements

- Answer:

- Adding

_Whenclauses before each_Accept/Selectclause and a statement afterwards

- Adding

- b) Explain a rendezvous failure

- Answer: If an accepted member fails with an exception, the acceptor is notified with a

RendezvousFailureexception. - Explanation:

- Why it’s useful?

- The

RendezvousFailuremechanism ensures that the monitor is aware of the failure in the synchronization process. - Allows the monitor or the caller thread to handle the exception and take actions.

- If an accepted member function throws an exception, the caller gets that exception on its stack.

- The monitor (acceptor) is notified of the failure through the

RendezvousFailureexception. - This mechanism ensures that both the caller and the monitor are aware of the failed cooperation (rendezvous) and can handle it accordingly.

- The

- As mentioned in Section 2.9.2.2, p. 22, the accept statement forms a rendezvous between the acceptor and the accepted tasks, where a rendezvous is a point in time at which both tasks wait for a section of code to execute before continuing.

- When one thread calls a monitor member function and waits for the callee to explicitly accept the call using

_Accept. If the accepted member function encounters an error and throws an exception, this exception propagates on the caller’s thread (the thread that initiated the call). Upon exiting the monitor due to the exception, the monitor thread raises a non-localRendezvousFailureexception. ThisRendezvousFailureexception notifies the acceptor (monitor) about the failed rendezvous (i.e., the failure to complete the synchronization or method execution as expected). The monitor code can explicitly catch theRendezvousFailureexception to deal with the failure scenario gracefully, as shown in the provided code:

- Why it’s useful?

- Answer: If an accepted member fails with an exception, the acceptor is notified with a

try {

_Accept(mem1);

} catch (uMutexFailure::RendezvousFailure &e) {

// Handle the failure

}- c) Explain how a courier task works

- A courier carries message between administrators so the administrators do not make calls to communicate with each other

- perform a potentially blocking call on behalf of the administrator

- Note: you should also be able to explain other types of workers such as timer, notifier, simple worker, and complex worker

- d) Does a future increase client or server side concurrency?

- client side

- Future It allows the client to initiate a request and then proceed with other work without blocking while waiting for the result.

- The client sends a request (e.g., to a server or an asynchronous task) and receives a future object.

- The future allows the client to either poll or wait for the result later, enabling it to perform other tasks in the meantime.

- e) Explain the semantics of the following

_Selectstatement:_Select(f1 || f2 && f3);- We continue execution before the selector-expression f1 is satisfied or f2 and f3 are both satisfied. It is blocked until either future f1 or both f2 and f3 are available.

- The

_Selectblocks until either future f1 or both f2 and f3 are available.

5.

- a) Are registers shared among different cores on a multiprocessor computer?

- No (p.189)

- Unlike registers, all cache values are shared across the computer

- b) How does data replication work in a cache hierarchy?

- 10.3.3 Replication p.192

- Answer: Data values are replicated from memory through the cache levels into registers.

- Explanation:

- In a system with a memory hierarchy, data replication refers to the process of copying data from one level of the hierarchy to another, typically moving it closer to the processor for faster access.

- Main memory to cache: when the cpu needs data that is not present in the cache (a cache miss), the data is fetched from main memory and replicated in the last-level cache (e.g., L3)

- From there, it may be further replicated into higher-level caches (e.g., L2 and L1)

- Through cache levels: each level of the cache hierarchy holds a subset of data, typically the most recently or frequently accessed data

- Data replication ensures that a copy of the data moves progressively closer to the CPU as needed: L3 cache → L2 cache → L1 cache

- Into registers: Once the required data reaches the L1 cache, the processor may load it into registers for immediate computation

- c) How does volatile prevent problems when programming with race conditions?

- Answer: volatile ensures variables are loaded/stored frequently to/from registers.

- Explanation:

volatileensures variables are always loaded from and stored to main memory, preventing the compiler from optimizing them into registers. This ensures the most up-to-date value is accessed, reducing issues with race conditions caused by stale or cached values.

- d) What is the name of the problem that occurs if only the CAS instruction is used to build a lock-free stack?

- Answer: ABA

- What is Lock-Free Stack? (11.1.2 p.198)

- ABA problem? (11.1.3 p.199)

- e) How do goroutines communicate in the programming language Go?

- Answer: channels (11.4.4 Go)

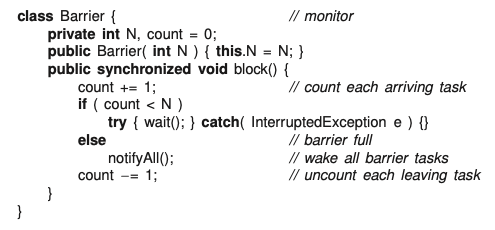

- f) Given the following Java monitor to implement a barrier:

- i) Explain why the barrier is incorrect

- Barging:

- When the

Nthtask callsnotifyAll(), it releases all waiting threads and leaves the monitor to perform its own next step (e.g., the step) - However, before the waiting threads can proceed, the

Nthtask may race back into the monitor and callblock()again for its step - When this happens, the

Nthtask sees thecountvalue still atN(since the waiting threads have not yet decremented it), incorrectly starting its step before the other tasks have completed their step

- When the

- Barging:

- ii) Explain a simple correction to make it work

- To fix the problem, the

Nthtask (the one that wakes up all the others) should resetcountto0before releasing the waiting tasks (barging avoidance) - This ensures that when it races back into the barrier, it won’t mistakenly assume the barrier is ready for the next round

- To fix the problem, the

- iii) Explain what unusual phenomenon prevents the simple solution from working

- The issue arises from spurious wakeups

- In java, a thread waiting on a condition variable (

wait()) can sometimes be awakened without being notified. This is called spurious wakeup. - If spurious wakeup occurs, some threads may proceed prematurely, disrupting the barrier’s functionality

- i) Explain why the barrier is incorrect

6.

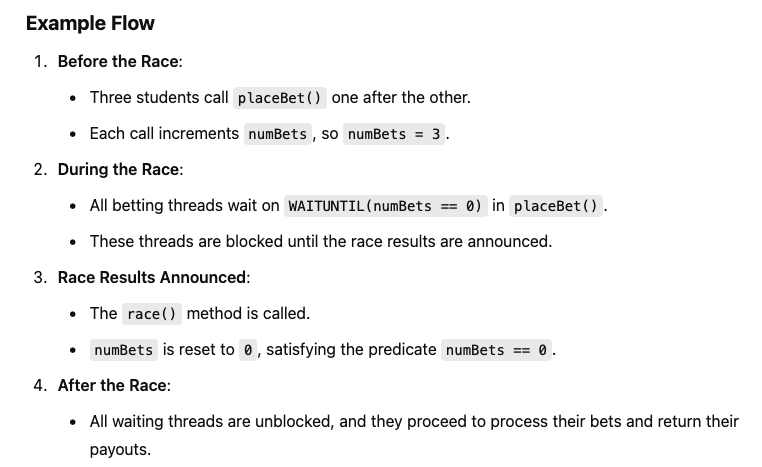

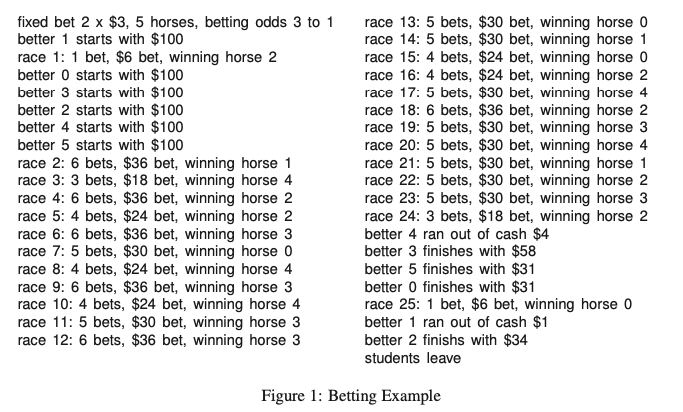

A group of students go to the horse races to gamble their tuition money. Each race, a student bets a fixed amount of money on two different horses, which is subtracted from their tuition money. After the race is over, if a student picks a winner, the amount won is added to their tuition money. The students gamble until one (or more in the simultaneous case) loses enough money they cannot make the fixed bet. Once a student cannot bet, all the students leave the race track and stop betting.

Figure 1 shows an example day at the races. Consult this example to understand what your monitor needs to handle. DO NOT print any output or compute any values needed only for printing!

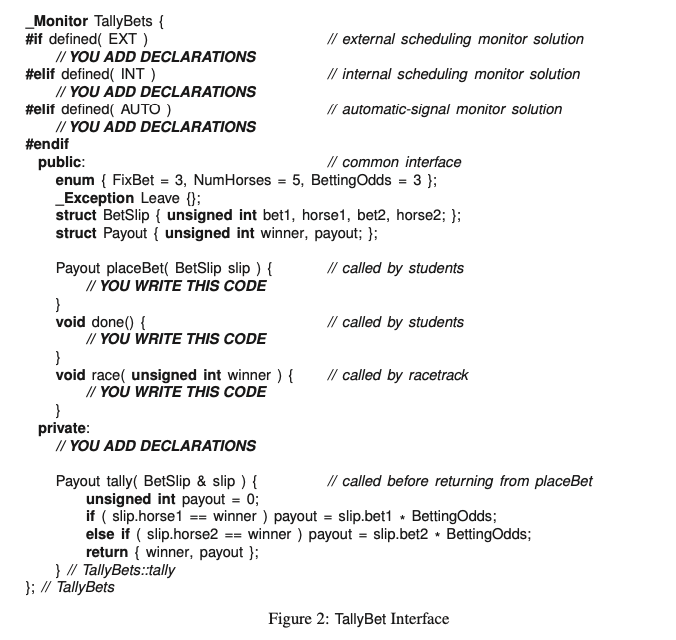

Figure 2 shows the betting interface (you may only add code in the designated areas). (Do not copy the starting code into your answer booklet.) Write the tally-betting office using 3 kinds of monitors.

- Member placeBet (you implement) is called by a student to place a bet on 2 horses using BetSlip.

- Member done (you implement) is called by a student with insufficient money to bet. After which, arriving and waiting students receive a Leave exception indicating its time to stop betting.

- Member race (you implement) is randomly called by the racetrack to indicate a race is run and deliver the winning horse.

- Member tally (given) is called to compute the race results for each student’s bet. Called to generate the return value from placeBet.

Note

Write only the code for the TallyBets monitors; do not write or create the student, racetrack, or program main. Assume the program main shuts down the racetrack after the students leave. No error checking is required.

Implement the betting monitor TallyBets using:

- a) External scheduling Declarations:

unsigned int winner;

bool shutdownStarted = false;

TallyBets::Payout TallyBets::placeBet(BetSlip slip) {

if(shutdownStarted) _Throw Leave();

try {

_Accept(done) {

} or _Accept(race) {

} or _Accept(placeBet) {

}

} catch(uMutexFailure::RendezvousFailure & ) {}

if(shutdownStarted) _Throw Leave();

return tally(slip);

} // TallyBets::placeBet

void TallyBets::race(unsigned int winner) {

TallyBets::winner = winner;

} // TallyBets::race

void done() {

shutdownStarted = true;

} // TallyBets::done

Summary of Monitor's Behaviour

- Students place bets and wait for race results

- The racetrack announces winners via

race, enabling students to calculate payouts- If a student runs out of money, they call

done, initiating a shutdown- The shutdown prevents further betting and ensures all students leave the system gracefully

Once the monitor has finished handling the higher-priority call (

doneorrace), it revisits pending calls likeplaceBet, allowing blocked students to resume execution.

- Race Track Calls

race:- The racetrack calls

raceto announce the winner of a race. This updates the monitor’s internal state (e.g., thewinnervariable). - Students’

placeBetcalls depend on this state being updated before they tally their bets.

- The racetrack calls

- Students Call

placeBet:- Students call

placeBetrepeatedly during betting, and the monitor must ensure:- Bets are correctly tallied using the most recent race results.

placeBetdoesn’t proceed if the monitor is shutting down (doneis called).

- Students call

- Students Call

done:- When a student can no longer bet, they call

done. This setsshutdownStarted = trueand signals all other students to stop betting.

- When a student can no longer bet, they call

Question

- How can we prevent from calling placeBet before done or race?

- Does calling placeBet before done or race be a problem?

- Why aren’t we doing _Accept(done || race) and then _Acccept(placeBet)?

- b) Internal scheduling Declarations:

uCondition bench;

TallyBets::Payout TallyBets::placeBet(BetSlip slip) {

if(shutdownStarted) _Throw Leave();

bench.wait();

bench.signal();

if(shutdownStarted) _Throw Leave();

return tally(slip);

} // TallyBets::placeBet

void TallyBets::race(unisgned int winner) {

TallyBets::winner = winner;

bench.signal();

} // TallyBets::race

void done(){

shutdownStarted = true;

} // TallyBets::done

Use Condition Variable bench to manage synchronization between students placing bets, racetrack declaring winners and the monitor handling shutdowns.

placeBet Method:

- Purpose: Allows students to place bets, wait for race results, or handle shutdowns.

- Steps:

- Checks if

shutdownStartedis true. If so, throws aLeaveexception to indicate betting is over. - Calls

bench.wait()to put the calling thread into a wait state until it is signaled (either by a race completion or another student’s action). - Calls

bench.signal()to wake up the next thread waiting on the condition variable. - Checks again if

shutdownStartedis true after waking up (to handle potential shutdown during the wait). - Calculates the payout for the student using the

tallymethod and returns it.

- Checks if

race Method:

-

Purpose: Receives the winning horse for the current race and signals waiting threads to proceed.

-

Steps:

- Updates the

winnervariable with the horse number provided by the racetrack. - Calls

bench.signal()to wake up one of the threads waiting on thebenchcondition variable (e.g., a student waiting to calculate their payout).

- Updates the

-

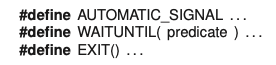

c) Implicit (automatic) signalling, using only the following three macros.

Macro

Macro AUTOMATIC_SIGNALis placed only once in an automatic-signal monitor as a private member, and contains any private variables needed to implement the automatic-signal monitor. MacroWAITUNTILis used to wait until the predicate evaluates to true. MacroEXITis called whenever control leaves the monitor.

Key features of Automatic Signalling

AUTOMATIC_SIGNALMacro:- Declares private state variables required for synchronization.

- In this case, it is used to manage signalling behaviour and ensures threads waiting for a condition are automatically notified when the condition becomes true.

WAITUNTIL(predicate, ...)Macro:- A thread waits until the specified

predicateevaluates to true. - This macro internally ensures efficient waiting (possibly using condition variables) and automatically blocks/unblocks threads as needed.

- A thread waits until the specified

EXIT()Macro:- Called whenever control exits the monitor.

- Ensures proper cleanup and possibly signals other threads waiting on condition variables.

AUTOMATIC_SIGNAL;

unsigned int numBets = 0;

TallyBets::Payout TallyBets::placeBet(BetSlip slip) {

if(shutdownStarted) _Throw Leave();

numBets += 1;

WAITUNTIL(numBets == 0, , );

if(shutdownStarted) _Throw Leave();

EXIT();

return tally(slip);

} // TallyBets::placeBet

void TallyBets::race(unsigned int winner) {

TallyBets::winner = winner;

numBets = 0;

EXIT();

} // TallyBets::race

void done() {

shutdownStarted = true;

} // TallyBets::doneplaceBet(BetSlip slip)

- Purpose: Handles student bets and synchronizes access to the monitor.

- Steps:

- Checks if the monitor is in shutdown mode (

shutdownStarted):- If true, raises the

Leaveexception, indicating that betting has ended.

- If true, raises the

- Increments the

numBetsvariable to track the number of active bets. - Calls

WAITUNTIL(numBets == 0, , ):- Waits until all bets have been tallied (i.e., when

numBetsreaches 0). - This ensures synchronization between betting and race result processing.

- Waits until all bets have been tallied (i.e., when

- Rechecks

shutdownStartedafter waking up to handle a potential shutdown state. - Calls

EXIT()when exiting the monitor, ensuring proper signaling and cleanup.

- Checks if the monitor is in shutdown mode (

race(unsigned int winner)

- Purpose: Processes the results of a race and signals waiting students.

- Steps:

- Updates the

winnervariable with the winning horse number. - Resets

numBetsto 0, indicating that all bets have been processed. - Calls

EXIT()to signal waiting threads and ensure proper cleanup.

- Updates the

done()

- Purpose: Initiates shutdown of the monitor.

- Steps:

- Sets the

shutdownStartedflag totrue, ensuring no further bets can be placed.

- Sets the

Summary

- synchronizes access to betting logic using

WAITUNTIL- betting threads wait on the

WAITUNTILmacro until the race results are announced

7.

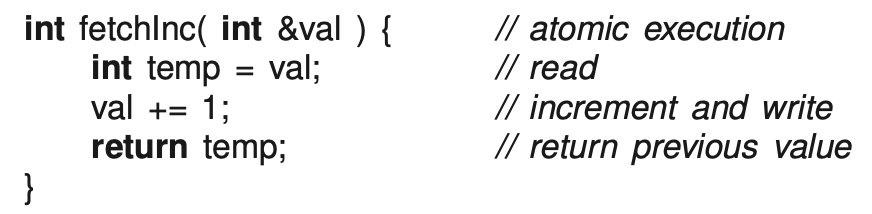

- Write an administrator task to perform the same

TallyBetsas question 6. The only interface changes are:

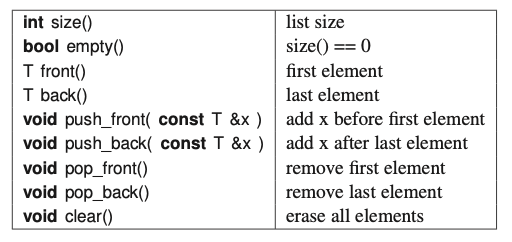

#include <list>

#include <uFuture.h>

_Task TallyBets {

// YOU ADD DECLARATIONS

public:

TallyBets(unsigned int numStuds); // num of students

typedef Future_ISM<Payout> FPayout; // futute type

FPayout placeBet(BetSlip slip); // return future payout

...

private:

// YOU ADD DECLARATIONS

}; // TallyBetswhere the constructor is passed the number of students in the group and placeBet returns a future to a payout, which is filled in after a race for each student.

Ensure the TallyBets task does as much administration work as possible; a monitor-style solution will receive little or no marks. Write only the code for the TallyBets task; do not write or create the student, racetrack, or program main. Assume the program main shuts down the racetrack after the students leave. No error checking is required.

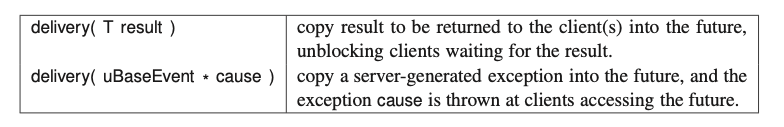

microC++ future server operations are:

The C++ list operations are:

Answer:

struct Work {

BetSlip slip;

FPayout fpayout;

Work(BetSlip slip): slip(slip) {}

};

FPayout placeBet(BetSlip slip);

Work* node;

list<Work*> students; // students waiting for race results

unsigned int numStuds;

TallyBets(unsigned int numStuds): numStuds(numStuds) {}

TallyBets::FPayout TallyBets::placeBet(BetSlip slip) {

node = new Work(slip);

return node->fpayout; // why?

} // TallyBets::placeBet

// same as monitor

void TallyBets::race(unsigned int winner) {}

// same as monitor

void TallyBets::done(){}

void TallyBets::main() {

for(;;) {

_Accept(done) {

break;

} or _Accept(race) {

while(!students.empty()) {

Work* n = students.front();

students.pop_front();

n->fpayout.delivery(tally(n->slip));

delete n;

} // while

} or _Accept(placeBet) {

students.push_back(node); // store future

} // _Accept

} // for

// Students not waiting on futures.

unsigned int rem - numStuds - 1 - students.size();

for(unsigned int b = 0; b < rem; b+=1) {

_Accept(placeBet) {

students.push_back(node);

Work* n = students.front();

students.pop_front();

n->fpayout.delivery(new Leave());

delete n;

} or _Accept(done) {}

} // for

// Students waiting on futures

while(!students.empty()) {

Work* n = students.front();

students.pop_front();

n->fpayout.delivery(new Leave());

delete n;

} // while

} // TallyBets::main

Alternatively, put student.push_back(node) in placeBet().

- The Primary Loop (

for(;;)) This is the main loop where theTallyBetstask is actively processing requests. This loop has three primary activities:

- Accepting Done Signal: The task exits this loop if the

done()method is called. This happens when a student can no longer place bets due to insufficient funds, signalling the end of the betting session for all. - Handling Race Results: When a race occurs (

_Accept(race)), the task processes all queued bets (from thestudentslist). For each bet:- It calculates the payout based on the race results (

tally(n->slip)). - Delivers the computed result to the corresponding future (

n->fpayout.delivery()). - Removes the

Workobject from the queue and deletes it to free memory.

- It calculates the payout based on the race results (

- Accepting New Bets: New bets are received (

_Accept(placeBet)). The newly createdWorkobject (node) from theplaceBet()call is added to thestudentslist, queuing it for processing once the next race occurs.

- Handling Students Not Waiting on Futures

After the

done()signal is received and the primary loop exits, there are two groups of students to handle:

- Students Not Yet Processed: These are students who might initiate a

placeBetcall after thedone()signal but before the system completely shuts down. Each of these bets is handled by:- Accepting the bet and adding it to the

studentslist. - Immediately delivering a

Leaveexception via the future associated with the bet (n->fpayout.delivery(new Leave())). This informs the student that betting has ended, and they should not expect a valid payout.

- Accepting the bet and adding it to the

- This loop runs only a few times, equal to the number of students minus those who have already been processed or are waiting in the queue.

- Final Cleanup for Students Waiting on Futures The last segment ensures that any remaining students (who have placed bets and are waiting for outcomes that will never come because betting is done) are also cleaned up:

- Delivering Leave Exceptions: Each remaining

Workobject in thestudentslist is processed, delivering aLeaveexception to indicate that no further processing will occur, and the bet is effectively cancelled. - This cleanup is crucial to ensure that no student thread remains indefinitely blocked on a future that will never be completed with a valid betting outcome.

Purpose of

Workand Managing Futures

- Why Create a

WorkObject?: EachWorkobject encapsulates a bet and the mechanism (FPayout) to asynchronously deliver the result of that bet. This design allows the betting task to accept bets, process them later, and communicate the outcomes independently of the betting actions.- Delivery Mechanics: The use of

n->fpayout.delivery()in different contexts (tally(n->slip)for a valid payout andnew Leave()for an exception) demonstrates how the system uses futures to manage asynchronous communication.delivery()either provides the computed result or an exception based on the race’s occurrence or the betting session’s conclusion.

Why delete

Workobjects?

- Object Lifecycle Completion: Once the result is delivered to the future, the

Workobject has fulfilled its purpose. The encapsulatedBetSlipand the payout result have both been processed, so there’s no further need to retain the object.- Safe Access and Deletion: Deleting

nimmediately after thedeliverycall is safe because the future (FPayout) does not need theWorkobject once the delivery is completed. The result has been transmitted to the client (student), and the future no longer interacts with theWorkobject.

Explanation: TallyBets task represents an administrator of a betting system where bets are placed on horse races.

- Struct Work:

- Represents a work item for each bet placed by a student.

- Contains a

BetSlipand aFPayout(a future that will eventually hold the payout result). - Constructor initializes the

BetSlip.

- Member Variables:

list<Work*> students: A list storing pointers toWorkobjects representing each student’s bet awaiting the race outcome.unsigned int numStuds: The number of students participating in the betting.

- Constructor:

- Simply stores the number of students participating.

- placeBet:

- Creates a new

Workobject for each bet. - Returns the

FPayoutassociated with theWorkobject. Initially, this future does not contain any data (fpayout), which is why the comment queries why it returns an empty future.

- Creates a new

- race and done Methods:

- These methods are placeholders and should contain logic to handle the race results and the termination condition respectively.

- main Function:

- Core of the task, handling the lifecycle of betting operations.

- Uses

_Acceptto handle concurrency, managing incoming requests to place bets, handle race results, and shutdown operations. - During a race, it processes all waiting bets, computes payouts, delivers them through the futures, and cleans up.

- After receiving a

donesignal, it handles any remaining students by delivering aLeaveexception through the future, indicating no more betting.

- Futures (

FPayout):- Used to asynchronously deliver the result of a bet once it’s known, which is after a race completes.

- The

placeBetmethod instantiates aWorkobject and returns its future immediately, which will be filled later. - In the

mainfunction, when a race is processed, each student’s bet is evaluated, and the result is delivered throughfpayout.delivery(tally(n->slip)).

- Delivery of Results or Exceptions:

- If the bet processing completes successfully,

delivery(T result)is called with the calculated payout. - If the betting is to be stopped prematurely (e.g., when a

doneis triggered),delivery(uBaseEvent *cause)is used to throw an exception to the client accessing the future, which would typically trigger handling of an exception in the client code.

- If the bet processing completes successfully,

Winter 19 Final

1.

- a) Why is a buffer used in concurrent programming, e.g., producer/consumer problem, and what must be true about the execution performance of threads adding and removing elements for the buffer to be useful?

- A buffer smoothes out small, temporary differences in speed between adding and removing threads so them seldom block.

- The speed of the adding and removing threads must be virtually equal or the buffer is always full or empty.

- b) For a bounded buffer with multiple adding/removing threads, discuss the two kinds of locking that are required for correct behaviour.

- Synchronization is required to prevent adding/removing when the buffer is full/empty.

- using like Condition Variable (Used with mutexes, condition variables allow threads to block on a condition (e.g., “buffer is not full” or “buffer is not empty”) and be woken up when the condition changes. This mechanism helps in efficiently managing wait states.) or Semaphore (count number of fillable spaces or available items directly, allowing producers and consumers to increment or decrement the semaphore counts appropriately as they add or remove items.)

- Mutual exclusion is required to atomically add/remove elements at each end of the buffer.

- These locks ensure that when one thread is performing an add or remove operation, no other thread can perform any operations that modify the buffer. This prevents scenarios such as two producers trying to add to the buffer simultaneously and corrupting the structure or data.

- Synchronization is required to prevent adding/removing when the buffer is full/empty.

- c) What is the relationship between baton passing and barging?

- the baton passing relates to barging since there are certain rules associate with this baton passing which are: only one baton, nobody can move in entry/exit code unless they have the baton, and once the baton is released, cannot read/write variables in the entry/exit code anymore. so this solves the problem of mutual exclusion thus barging (found on page 124 6.4.3 Lock Techniques)

- Baton passing inherently prevents barging because it controls lock ownership transition among threads:

- Answer: Baton passing conceptually passes the lock when unblocking to achieve barging prevention.

- the baton passing relates to barging since there are certain rules associate with this baton passing which are: only one baton, nobody can move in entry/exit code unless they have the baton, and once the baton is released, cannot read/write variables in the entry/exit code anymore. so this solves the problem of mutual exclusion thus barging (found on page 124 6.4.3 Lock Techniques)

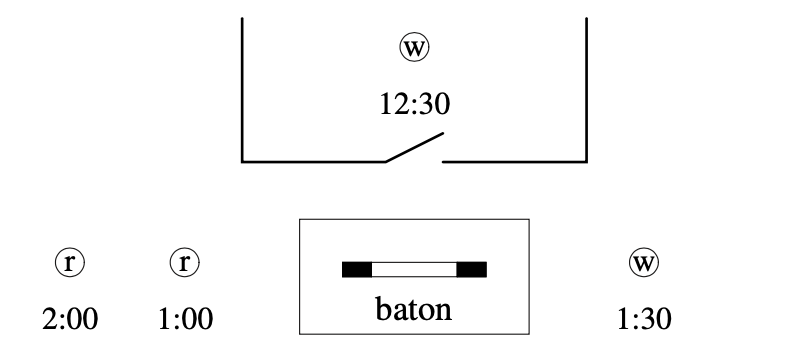

- d) Given the following readers/writers snapshot:

and the 12:30 writer exits the critical section at 2:30, explain a scenario resulting in staleness and one resulting in freshness.

and the 12:30 writer exits the critical section at 2:30, explain a scenario resulting in staleness and one resulting in freshness.

- Staleness: both readers 1:00 and 2:00 enter 2:00 reader reads data that is stale stale; should read 1:30 write instead, but it’s reading 12:30 instead… 1:00 should read 12:30, then 2:00 should be reading 1:30!!

- Freshness: readers enters and read 12:30 data (never seen) 1:00 reader reads data that is too fresh (i.e., missed reading 12:30!)

- e) When solving the staleness/freshness problem, is eventual progress sufficient? If not, what is sufficient?

- No, it’s not sufficient, because even if we continue, we’ve already read the wrong data in the wrong order.

- To solve the staleness/freshness problem more effectively, a First-Come, First-Served (FCFS) or FIFO (First In, First Out) discipline is often more appropriate:

- Order Preservation: Read and write requests are processed in the order they arrive. This preserves the causality of events and ensures that any read operation retrieves the most recent data available at the time the read was requested

- Prevents Barging: Similar to the baton passing mentioned earlier, FCFS prevents newer operations from jumping ahead of older ones maintain freshness

- By strictly adhering to the order of operations, FCFS ensures that all reads are as fresh as possible given the operations that preceded them

- Answer: No, FCFS (FIFO)

- f) Split binary-semaphores have this pattern

Xdel += 1; entry.V() INTRERRUPTED HERE Xwait.P(). Assume an arriving reader thread must block so it releases the entry lock (puts down the baton) but is interrupted before blocking on the reader bench. Assume a leaving writer thread acquires the entry lock (picks up the baton), sees the reader(rdel > 0), and V’s the read bench. Explain why the reader does not block forever as it is not yet blocked on the read bench.- Answer: The V on the read bench (semaphore) is remembered by the semaphore counter, so when the reader finally restarts and P’s on the read bench, it does not block.

- Scenario:

- Reader Thread:

- Action: Attempts to acquire a lock (releases the entry lock or “puts down the baton”) but gets interrupted before it can block itself on the semaphore associated with the reader’s waiting queue (read bench).

- Writer Thread:

- Action: Finishes its task and picks up the entry lock (or “baton”). It notices that there’s at least one reader that has been delayed (

rdel > 0) and thus performs a signal operationV()on the read bench.

- Action: Finishes its task and picks up the entry lock (or “baton”). It notices that there’s at least one reader that has been delayed (

- Reader Thread:

- the reader thread is interrupted right before it can block on the read bench. Meanwhile, the writer thread signals the read bench by calling

V(). This increments the semaphore’s counter associated with the read bench. Here’s the sequence of events that prevents the reader from blocking indefinitely:- Semaphore Increment: The writer’s

V()operation increases the semaphore count for the read bench. This is crucial because the semaphore count now reflects that there is one unclaimed permit available for acquisition. - Reader Restarts: When the reader thread eventually resumes and calls

P()on the read bench, it checks the semaphore count.- Since the semaphore count was incremented by the writer’s

V(), even though the reader was not yet blocked, the count is positive. - The reader thread finds the semaphore’s count to be positive and thus can proceed without blocking. It decrements the count and continues its execution.

- Since the semaphore count was incremented by the writer’s

- Semaphore Increment: The writer’s

2.

- a) What is a race condition and why is it difficult to locate?

- Race condition is when two or more resources think its time to enter the critical section, so they enter at the same time. It’s difficult to locate because program doesn’t stop right away.

- Answer: A race condition occurs when there is a missing synchronization or mutual exclusion. It is hard to locate because the program runs but problems do not occur immediately because of non-determinism.

- Non-determinism: behaviour of the system under a race condition does not repeat in a consistent manner with each execution of the program. The race condition may only manifest under particular sequences of events or timings, hard to reproduce.

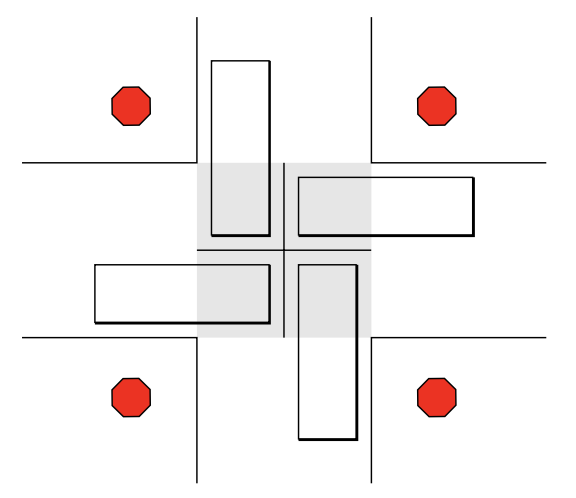

- b) Given a 4-way traffic intersection where cars drive on the right-hand side of the road:

- i. How many independent critical sections are there and explain what they are?

- 4, the quadrants over which the cars drive

- ii. What is the maximum number of critical sections that can be used simultaneously without causing a problem and how does it happen?

- 4, right hand turns

- iii. For a single car, what is the shortest chain of simultaneous critical sections

(holds > 1)and how does it happen?- 2, driving through the intersection

- iv. For a single car, what is the longest chain of simultaneous critical sections

(holds > 1)and how does it happen?- 3, when car performs a left turn

- v. What is the minimum number of critical sections that needs to be held simultaneously for a deadlock (gridlock) and how does it happen?

- 4, the cars move in their quadrant simultaneously

- i. How many independent critical sections are there and explain what they are?

- c) i. Is the following code fragment a potential synchronization or mutual exclusion deadlock?

- mutual exclusion deadlock: task 1 and task 2 are both waiting for resources while holding resources… (p.140 7.3.2 Mutual Exclusion Prevention)

- ii. Restructure the code fragment so it is deadlock-free.

- iii. Why is the performance of the restructured code not as good as the original code?

- Resource utilization is reduced because task 2 holds R1 longer than necessary

- d) Consider a system in which there is a single resource with 11 identical units. The system uses the banker’s algorithm to avoid deadlock. Suppose there are four processes with maximum resource requirements of 2, 7, 5, and 8 units, respectively. A system state is denoted by ( ), where is the number of resource units held by . Which of the following states are safe? Justify your answers.

- i. ( 2 2 0 7 )

- Since needs 0 more units to complete, it can finish and release its resource. So 2 released.

- Then after finishes, can acquire the necessary 1 unit so its 7+1 = 8, finish, and release it. So we have 9 resource released by the end of

- After that I don’t understand the diagram. In my head it should be acquires 5, and finish, so we have 11. Then acquires 5, and finish.

- ii. ( 2 4 1 4 )

- finishes and release 2

- But then, none of , , or can continue with additional freed 2 resource. So the state is NOT safe as there are insufficient resources for any process to execute so no sequence of execution is possible after this point.

- i. ( 2 2 0 7 )

3.

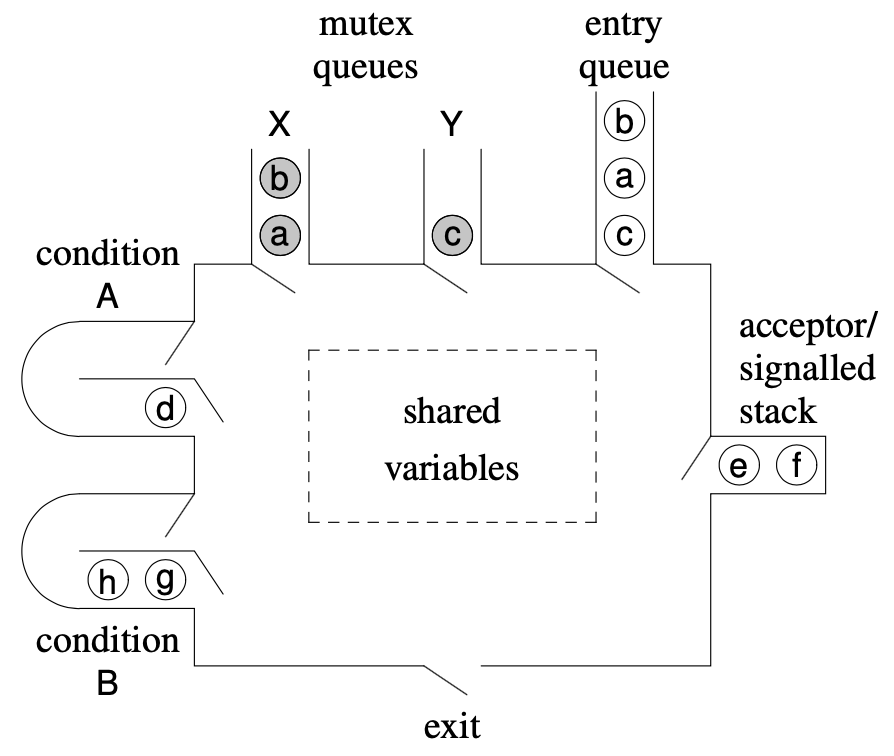

- a) Given the following snapshots of a ++ monitor during execution

- i. The monitor is empty, which task next enters the monitor?

- e

- ii. Which tasks have called

_Mutexmembers and which_Mutexmember did each call?- tasks a and b called mutex member X

- tasks c called mutex member Y

- iii. Which tasks have executed a

waitand on which condition variables?- task d executed a

waiton condition A - task h and g executed a

waiton condition B

- task d executed a

- iv. Why do some tasks appear twice in the diagram?

- Mutex queues are auxiliary queues to allow access for the accept statementto-understand

- ?????

- The note about mutex queues being auxiliary queues for access refers to the optimization in handling thread synchronization. These queues organize threads based on their access requirements to shared resources, enabling quick decisions about which thread can enter the critical section next, minimizing waiting time and computational overhead.

- Mutex queues are auxiliary queues to allow access for the accept statementto-understand

- v. Explain two different scenarios that can result in tasks “e” and “f” to appear in the given order on the acceptor/signalled stack.

- an accept statement blocked f and another one in the accept blocked e

- Answer: tasks f accepts a mutex queue with e at the front, and e does an accept.to-understand OR tasks e and f were on a condition variable and both were signalled (so both moved from condition queue to acceptor/signalled stack)

- explicit scheduling occurs when: (p.158)

- an accept statement blocks the active task on the acceptor stack and makes a task ready from the specified mutex member queue

- a signal moves a task from the specified condition to the signalled stack

- explicit scheduling occurs when: (p.158)

- vi. Assume a task in the monitor does a signal on condition A, which task continues using the monitor.

- The task that did the signal on condition A continues using the monitor. (Correct answer) Then when it exits the monitor or finishes, the task signalled task on condition A continues

- Answer: signalling task

- vii. Assume a task in the monitor does a signal on condition A, exits the monitor, and barging is allowed, which tasks could next enter the monitor?

- Signalled task can continue (since the signalling task exits the monitor) OR the barging task can enter (calling task? I think it’s just a task on the calling queue.)

- Answer: signalled task or a calling task (what’s the calling task in this case?)

- viii. Assume a task in the monitor does a signal-block on condition A, which task continues using the monitor.

- task d that is on the condition A queue continues (or signalled task)

- ix. Assume a task in the monitor does a signal-all (notifyall) on condition B, which task continues using the monitor and where do the other tasks go?

- the signaller continues? (Correct, the signalling task continues) the tasks on condition B so in the picture tasks h and g goes on the A/S stack

- Answer: signalling task, and tasks g h are moved to the A/S stack

- x. Assume a task in the monitor does an

_Accept(X), which task continues using the monitor, and which tasks are on the acceptor/signalled stack?- the front of mutex member X uses monitor, so in the picture a uses monitor. And the signalling task gets put on the acceptor/signalled stack. (accepting task gets put on A/S stack)

- Answer: a enters, and the accepting task, e and f are on A/S stack

- i. The monitor is empty, which task next enters the monitor?

- b) Can a monitor have a RendezvousFailure? Explain how it can or cannot happen.

- Yes a monitor can have a RendezvousFailure. A RendezvousFailure happens so the accepted task raise error can notify the acceptor of an error that occurred. So the monitor can know the error

- Answer: Yes, when the accepted call raises an exception, the acceptor receives the implicit RendezvousFailure from the caller.

- c) ++ monitors do not have barging. How does ++ achieve this property?

- Answer: ++ monitors prevent barging by giving the acceptor/signaller (A/S) stack highest priority before looking at the calling queue (C < W < S).

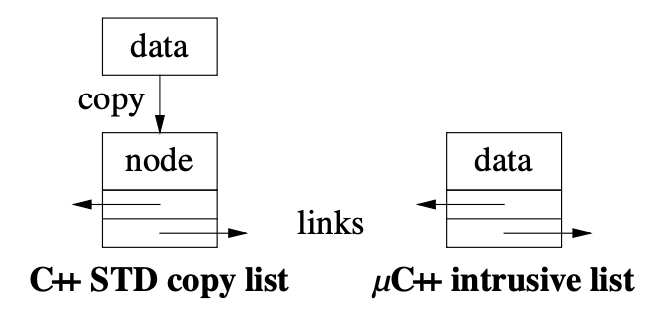

- d) C++ STD containers copy data into nodes and link the nodes. ++ containers require the link fields to be part of the data, called intrusive lists. Name 2 advantages of intrusive lists

- faster since it’s on the stack, data field not always used

- Answer: advantage: no heap allocation to create a node and no data copy to the node. disadvantage: Link fields occupy space in the data and may be used infrequently or not at all.

4.

- a) Explain why ++ does not provide a type generator (language construct) composed of the execution properties: thread, no stack, synchronization/mutual-exclusion.

- Answer: Without a stack, a thread has no where to start execution.

- bruh i don’t understand the question. what are they asking?to-understand

- b) Write two distinct but equivalent

_Acceptstatements, one in short form and one in long.

_Accept(mem1 || mem2){S1;}

OR

_Accept(mem1){

S1;

} or _Accept(mem2){

S1;

}- c) Java has a

startmember in the base task to start the task’s thread, while ++ does not have astartmember. Show the ++ pattern to simulate the Javastartpattern.

_Task T {

public:

void start(){}

void main(){

_Accept(start) ; // 1st line

...

}

}- d) When a task’s thread ends, it turns into what kind of object?

- It turns to a normal class object. (Wrong bruh)

- Answer: Monitor

- e) Explain why you are told over and over again to move code from a task’s mutex members into the task main.

- Answer: The task’s thread needs to do the work or why create it, and the concurrency is inhibited for the caller.to-understand

- reduce duration of which mutexes are hold

- goal is to make sure that each thread can do as much work as possible without unnecessary waiting, aligning with the philosophy that threads should be created because there is work to be done, not just to be managed.

- f) What property makes an administrator different from a normal server?

- administrators do not make any calls. they use couriers to communicate between other administrators.

- Answer: The administrator never makes a blocking call (calls out).

- g) What is the connection between asynchronous call and futures?

- asynchronous calls basically wait for futures to return a value in order to do something with it but in the meantime, it can do other work.

- Answer: An asynchronous call returning a result needs a mechanism (future) to match a completed result with the calling client

5.

- a) Explain why modern computers have a cache.

- Answer: Caching transparently hides the latency of accessing main memory.

- Caches are much faster types of memory located closer to the CPU. They store copies of data from frequently accessed memory locations. Because caches are faster than RAM and closer to the processor, they significantly reduce the time the CPU spends waiting for data, effectively bridging the speed gap between the CPU and the main memory.

- Cache memory provides data to the CPU almost instantaneously compared to main memory, which significantly reduces the latency in data retrieval.

- b) Once there is a cache, why is cache coherence necessary?

- We want to make sure that all value of a variable is updated across the cache so they can be consistent. Since all cache values are shared across the computer, a variable can be replicated in numerous locations. We want to make sure the variable is the same

- Answer: Cache coherence ensures a shared value is uniformly updated across the cache giving a consistent view of values.

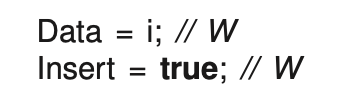

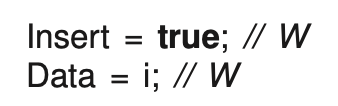

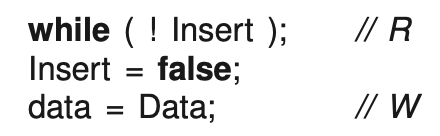

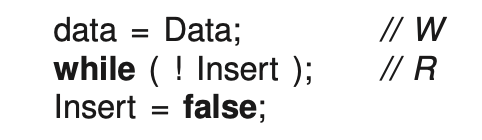

- c) Sequential optimization allows reordering to . Show this reordering for the following code and explain how this reordering causes a failure scenario for the producer when creating synchronization.

- Allows reading of stale data

- The reordered sequence first sets the

Insertflag totrueand then updates theDatavariable toi. This reordering can result in a critical race condition. - Suppose there’s a consumer thread that continuously checks the value of

Insert. Once it seesInsertset totrue, it reads the value ofData. In the original ordering, this would be safe becauseDatawould have already been assignedi. However, in the reordered version, there’s a risk that the consumer readsDataafterInsertis set totruebut beforeDatahas been updated toi. As a result, the consumer could read stale or uninitialized data fromData.

- d) 2 marks

- i. What optimizations problem does the C++ declaration-qualifier volatile prevent for concurrent programming.

- It makes sure that data is often loaded/read into registers , and prevent compiler optimization to mess things up (I this correct?)

- Answer: Declaration qualifier volatile prevents variables from being hidden in registers.

- ii. How does the optimization cause problems?

- Answer: A shared flag is loaded into a register and checked there, hence it is impossible to see the flag change.

- Visibility Issues: If a variable that is shared among multiple threads is stored in a register, updates made to it in one thread may not be visible to other threads. For example, if one thread sets a flag to indicate that some data is ready, and this flag variable is stored in a register, other threads might continue to see the old value of the flag if they are reading the flag from a register that was loaded with the old value. This leads to the threads not recognizing updates made by others.

- The use of

volatilein C++ is a way to ensure that variables are accessed directly from memory each time they are referenced in the code, which is critical in ensuring data consistency in multi-threaded applications or in interfacing with hardware where the state can change independently of the program flow.

- i. What optimizations problem does the C++ declaration-qualifier volatile prevent for concurrent programming.

- e) Explain how the double-wide compare-and-set instruction solves the ABA problem for stack pop.

- We count the number of times it has been pushed, so that way if a context switch happens, and it pops off nodes from the stack for instance, the counter will be modified.

- Answer: A counter is added to count pushes, which the CAVD saves automatically. The counter ensures the second push of A in ABA has a different count value from the first push.

- Associate counter (ticket) with node. And we increment counter in push (only count pushes), that way pop can detect ABA problem if a node is re-pushed

- f) In C++11 concurrency, explain the unusual connection between task creation and termination.

- Thread creation starts the task, but in order for it to terminate, you need to call

join. - Answer: Threads are declared but a

joinmember is used rather than deallocation for termination synchronization.

- Thread creation starts the task, but in order for it to terminate, you need to call

6.

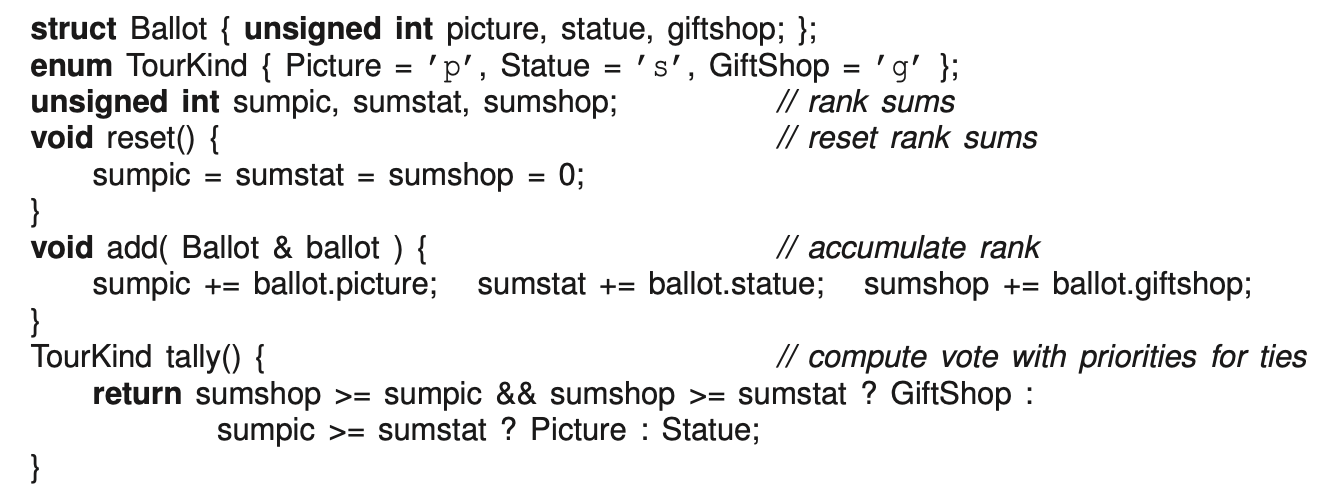

Consider the following situation involving a tour group of V tourists. The tourists arrive at the Louvre museum for a tour. However, a tour group can only be composed of G people at a time, otherwise the tourists cannot hear the guide. As well, there are 3 kinds of tours at the Louvre: pictures, statues and gift shop. Therefore, each group of tourists must vote among themselves to select the kind of tour to take. Voting is a ranked ballot, where each tourist ranks the 3 tours with values 0, 1, 2, where 2 is the highest rank. Tallying the votes sums the ranks for each kind of tour and selects the highest ranking. Assume the following declarations and member routines are available to handle voting:

Do not copy this code into your answer booklet.

Figure 1 shows the interface for the vote-tallier class with the given code for the public members and private declarations (you may only add code in the designated areas). Denote the location of added code with the labels L1, L2, L3, L4, L5, L6. Depending on the implementation, some label sections are empty. Do not write or create the tourist tasks.

The vote routine does not return until group votes are cast. The groups are formed based on voter arrival; e.g., for a group of 3, if voters 2, 5, 8 cast their votes first, they form the first group, etc. The tour size G may not evenly divide the number of tourists, resulting in a quorum failure when the remaining tourists is less than G. Note, even when V is a multiple of G and tourists take multiple tours, a quorum failure can occur. When a tourist finishes taking tours and leaves the Louvre Museum, they always call done (even if it receives a Quorum exception). TallyVotes detects a quorum failure when the number of remaining voters is less than the group size. When a tourist calls done, they may cooperate if there is a quorum failure by helping to unblock waiting voters. At this point, any new calls to vote immediately raise exception Quorum, and any waiting voters must be unblocked so they can raise exception Quorum.

Implement a vote-tallier for G-way voting using:

- a) external scheduling

L1: // Don't need any variables

L2:

if(voters < group) _Throw Quorum();

L3:

try {

for(;;) {

_Accept(done) {

if(voters < group) break;

} or _Accept(vote){

}

} // for

} catch (uMutexFailure::RendezvousFailure &){

} // try

L5:

if(voters < group) _Throw Quorum();

- b) internal scheduling

L1:

uCondition bench;

L2:

if(voters < group) _Throw Quorum();

L3:

bench.wait();

bench.signal();

if(voters < group) _Throw Quorum();

L4:

bench.signal();

L6:

if(voters < group) bench.signal();

- c) implicit (automatic) signalling, using only the 3 macros defined below Assume the existence of the following preprocessor macros for implicit (automatic) signalling (6c):

#define AUTOMATIC_SIGNAL ...

#define WAITUNTIL( cond ) ...

#define RETURN( expr... ) ... // gcc variable number of parametersMacro AUTOMATIC_SIGNAL is placed only once in an automatic-signal monitor as a private member, and contains any private variables needed to implement the automatic-signal monitor. Macro WAITUNTIL is used to delay until the cond evaluates to true. Macro RETURN is used to return from a public routine of an automatic-signal monitor, where expr is optionally used for returning a value.

L1:

AUTOMATIC_SIGNAL;

L2:

if(voters < group) _Throw Quorum();

L3:

WAITUNTIL(numVotes == 0, , );

if(voters < group) _Throw Quorum();

L5:

RETURN(talliedResult);

L6:

if(voters < group) numVotes == 0;

RETURN();

7.

29 marks Consider the tally vote as described in question 6, and assume the declarations and member routines to handle voting are directly available. Write an administrator task to coordinate voting to form a tour group and match a tour group with a guide. Figure 2 shows the interface for the vote-tallier with the given code for the public members and private declarations (you may NOT add any private variables or public routines; private helper routines are allowed).

At creation, a vote-tallier is passed the number of voters, size of a voting group, and number of guides. The vote-tallying members are:

- vote: is called by a

Touristtask passing a ranked ballot. A Vote work request is created and pushed at the end the votes vector. The vote member returns immediately with a future tour so the tourist can go to the “restroom” before the tour starts. The kind of tour will be delivered to all the future tours after a group has formed and a guide is available. If there is a quorum failure, a Quorum exception is delivered in the future tour. - done: is always called by a

Touristtask when the tourist finishes taking tours and leaves the Museum (even if it receives a Quorum or Closed exception). - tour: is called by a Guide task to indicate it is ready to take a group on a tour. Each guide blocks until a complete group is available and is returned the kind of tour for a group.

When the vote-tallier’s destructor is invoked it closes for the day and delivers exception Closed into all outstanding future tours and informs all waiting and returning guides of the closure by raising exception Closed in member tour.

Ensure the TallyVotes task does as much administration works as possible; a monitor-style solution will receive little or no marks. Assume the program main creates the TallyVotes, Tourist and Guide tasks. Write only the TallyVotes::main member, do not write the program main or the tourists and guide tasks.

μC++ future server operations are:

- delivery( T result ) – copy result to be returned to the client(s) into the future, unblocking clients waiting for the result.

- exception( uBaseEvent * cause ) – copy a server-generated exception into the future, and the exception cause is thrown at clients accessing the future.

void flush(bool kind) {

for(int i = 0; i < votes.size(); i++) {

votes[i]->(kind ? new Quorum : new Closed);

} // for

votes.clear();

}

void main(){

for(;;){

_Accept(~TallyVotes){ // shutdown

break;

} or _Accept(done) { // voter leaves

voters -= 1;

if(voters < group && voters.size()) { // failure?

flush(true); // Quorum failure

}

} or _Accept(vote) { // voter

} or _Accept(tour) { //guide

if(!wguides.empty() && voters.size() > group) {

for(int i = 0; i < group; i++){ //compute rank

add(voters->[i]->ballot);

} // for

gtour = tally(); // computer vote

for(int i = 0; i < group; i++) { // put vote in futures

votes[i]->ftour.delivery(gtour);

delete votes[i];

} // for

votes.erase(votes.begin(), votes.begin()+group) // shorten

wguides.signalBlock(); // unblock guide

reset(); // reset vote counters

} // if

} // Accept

} // for

// Shut down and tell the tourists/guides to go home

closed = true;

for(int i = 0; i < guides; i++){

if(wguides.empty()) _Accept(tour);

} // for

flush(false); // CLosed failure

}- from my understanding, in

_Accept(tour), notice how we block until group is available intour(). So when monitor accept call to tour, it blocks, and then execution goes into the_Acceptblock. - so since we know the group size, for each group, we add up the votes (accumulate rank) from each voter? (like we go over the votes vector and add up all the votes to like 3 separate global values

sumpic, sumstat, sumshop), thentally()will compute which tour this group is going to go on which is stored ingtour. Then we put the votes in the future usingvotes[i]->ftour.delivery(gtour), which putsgtourin every singlevotes[i], then deletevotes[i](future is not deleted). Then we shorten the votes vector for next time. Unblock the guide usingsignalBlock()that way, it stops the current execution in this Accept, and we wake up back in thetour()function when we blocked, right afterwguides.wait(), then tell guide to close by throwing Closed() and return the tour kind that we’ve stored ingtour. Then in the accept we rest() the vote counters for next time. - Shutdown part: don’t really get it now. Why do we go through the guides and

_Accept(tour)if there are no more guides anymore. Then we unblock the blocked guides bywguides.signalBlock(); - Lastly, we

flush(false)which indicates Closed exception

Fall 2018 Final

1.

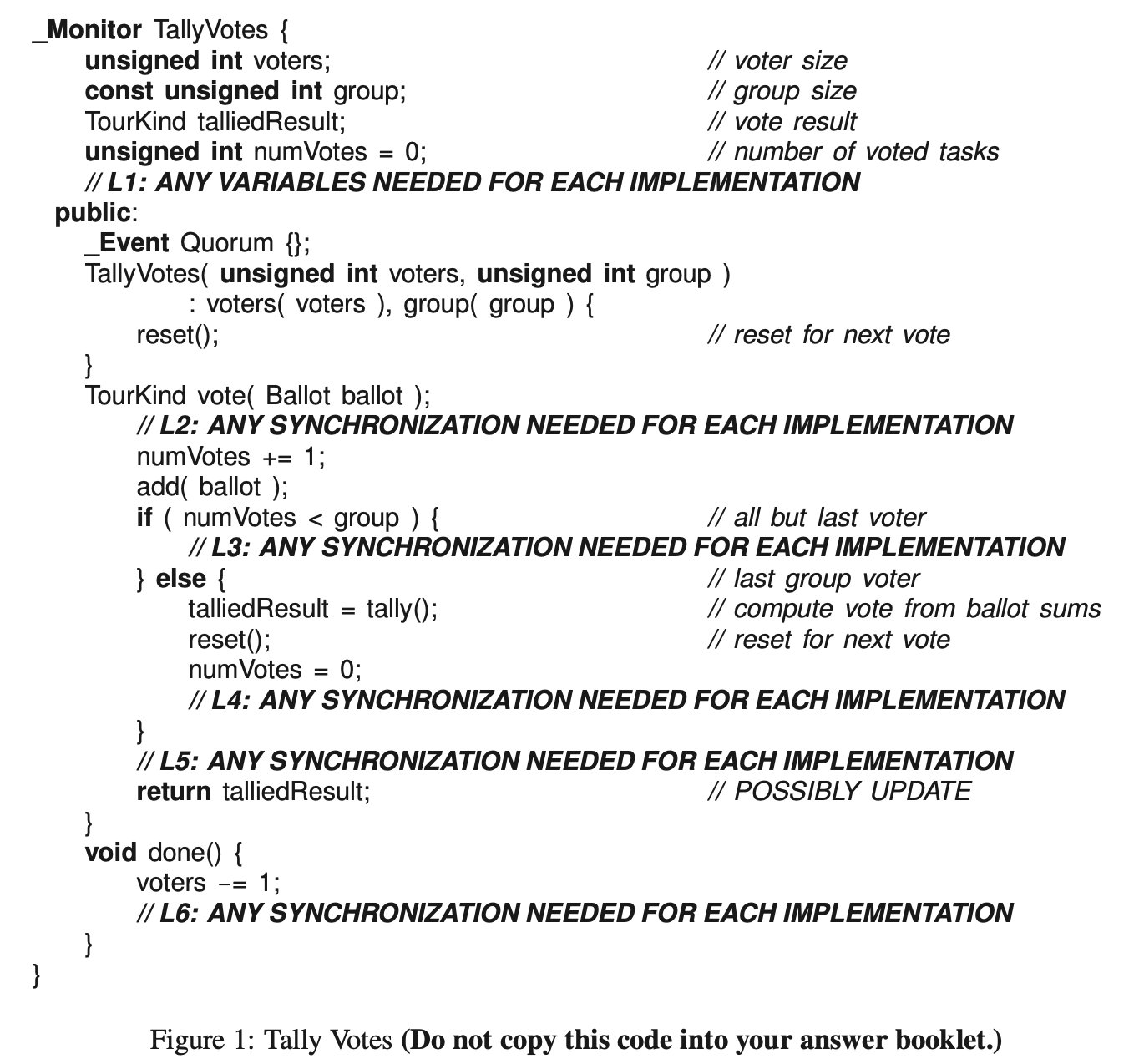

- a) i. The fetch-and-increment instruction atomically reads and increments a memory location. The instruction has one parameter: a reference to a value. The action of this single atomic instruction is the same as the following C routine if it could be performed as a single atomic operation without interruption with respect to the given parameter:

Show how this atomic instruction can be used to build mutual exclusion by completing a class with the following public interface (you may only add a public destructor and private members):

Show how this atomic instruction can be used to build mutual exclusion by completing a class with the following public interface (you may only add a public destructor and private members):

which is used as follows:

which is used as follows:

Your solution may use busy waiting, must deal with starvation, and you may not assume assignment is atomic.

Your solution may use busy waiting, must deal with starvation, and you may not assume assignment is atomic.

class Lock {

unsigned tickets, serving;

public:

Lock(): tickets(0), serving(0) {}

void acquire() { // entry protocol

int ticket = fetchInc(tickets); // obtain a ticket

while(ticket != serving) {}

}

void release() {

fetchInc(serving);

}

};tickets: This variable keeps track of the number of tickets issued to threads attempting to enter the critical section. Each thread acquires a unique ticket number when it callsacquire().serving: This variable tracks the ticket number currently being served — i.e., the thread that is allowed to enter the critical section.- Constructor (

Lock()):- Initializes both

ticketsandservingto0. This means that no tickets have been issued initially, and no ticket is being served.

- Initializes both

acquire()Method:- Ticket Issuance: Each call to

acquire()results infetchInc(tickets), which atomically increments theticketscounter and returns the previous value to the calling thread. This value represents the “ticket number” for the calling thread. - Busy Waiting: The thread then enters a busy-wait loop (

while (ticket != serving) {}). It will only break out of this loop when its ticket number matches theservingcounter, indicating that it’s the thread’s turn to enter the critical section.

- Ticket Issuance: Each call to

release()Method:- Increment

serving: After the thread has finished executing its critical section, it callsrelease(), which increments theservingcounter by one viafetchInc(serving). This operation signals that the next thread (the one with the subsequent ticket number) can now enter the critical section.

- Increment

- ii. Is there any limitation (failure scenario) in your solution?

- Answer: If 4+ billion (assume 4 byte integers) tasks arrive simultaneously, the tickets over- flow and threads get the same ticket.

- b) i. For the baton-passing locking-technique, list the baton passing rules

- only one batton

- if you get the baton, you can go into the critical section, and no one else can move nobody moves in the entry/exit code unless they have the baton

- when baton is released, cannot read/write variables in entry/exit

- ii. How many bytes of storage does it take to implement a baton? Explain

- 0 bytes, it’s just a concept. There isn’t a real baton

- c) i. What is the potential problem with this coding sequence, and what is the name of the problem?

... entry.V(); Xwait.P(); ...- Answer: A time-slice can happen between the V and P which can result in a task barging or staleness so waiting is non-temporal (non-FIFO) order!!!!

- Barging: When

entry.V()is called, it releases or signals the semaphore, potentially allowing another task waiting on this semaphore to proceed. If a context switch occurs right after this and beforeXwait.P()is called, other tasks that were not originally waiting onXwaitmay run and potentially modify shared resources or state thatXwait.P()expects to be unchanged. This disruption can lead to unexpected behaviour because the original task does not immediately block after releasing the entry semaphore, allowing other tasks to “barge” in.

- Barging: When

- Answer: A time-slice can happen between the V and P which can result in a task barging or staleness so waiting is non-temporal (non-FIFO) order!!!!

- ii. Declare a semaphore member to fix this problem and explain how it would work?

- Answer: Member

Xwait.P(entry), which atomically blocks onXwaitand unlocks entry. - Two in one.

- Answer: Member

- d) Assume a solution to the readers/writer problem handling temporal order using a single FIFO bench for the readers/writers and a chair. Briefly, explain how the overall solution works.

- Answer: When the chair is empty, tasks at the front of the bench are unblocked until there is a writer that cannot enter. This writer waits in the chair, and the chair is always unblocked (priority) before the bench.

- to-understand

2.

- a) List the five conditions for a mutual-exclusion deadlock?

- A concrete shared-resource requiring mutual exclusion, i.e., exists without a task. (a task “wanting to drive across the intersection” is not a resource)

- A process holds a resource while waiting for access to a resource held by another process (hold and wait)

- Once a process has gained access to a resource, the runtime system cannot get it back (no preemption)

- There exists a circular wait of processes on resources

- These conditions must occur simultaneously

- b) Are the weeping angels around the Tardis in a livelock or deadlock? Explain.

- The angels are in a livelock because, after the humans leave, and a cardboard is used to cover one of the angels eyes, it can move and then so can the other angels. The angels are not holding any resource or waiting for a resource (no hold and wait cycle).

- c) Explain the basic idea behind the ordered-resource allocation policy to prevent dead-lock (be brief), and name the mutual-exclusion deadlock-condition it prevents.

- Always acquire the locks in a certain order, it prevents hold and wait

- Answer: Order resources and allocate resources in that order to prevent a hold-and-wait cycle.

- d) Suggest what a programmer should and should not do if the deadlock-avoidance Oracle says NO to a resource allocation.

- Answer: should release some resources, should not block or busy wait

3.

- a) Explain why a solution for a bounded buffer with multiple producers/consumers can be more efficient using semaphores rather than a monitor.

- Answer: A monitor solution cannot allow simultaneous insert/remove to an appropriate buffer because of the mutual exclusion property.

- b) Explain how the three parts of the conditional-critical region are incorporated into the object-oriented model.

- SHARED declarations become private monitor declarations, REGION statements become public monitor members, AWAIT clauses become

_Acceptor signal/wait statements.to-understand

- SHARED declarations become private monitor declarations, REGION statements become public monitor members, AWAIT clauses become

- c) Why is external scheduling easier than internal scheduling?

- The monitor automatically handles everything for you, blocking and unblocking threads.

- Answer: External scheduling is simpler because unblocking (signalling) is implicit.

- d) 2. Write the code for an OR accept and an AND accept for mutex members M1 and M2.

- Easy

- e) Explain the difference between μC++ signal and signalBlock.

- For signal the signalling task continues execution until it waits or exits, and the signalled task is delayed (on the A/S stack). For signalBlock the signalling task is delayed (on the A/S stack), and the signalled task continues execution until it waits or exits.

- f) What is the nested-monitor problem and what concurrent error can it generate?

- Answer: A task calls into monitor M1 and monitor M2, and waits in M2, releasing M2’s monitor lock but not M1’s monitor lock. Because M1’s monitor lock is not released, a signalling task may not be able to enter M2 to signal the waiting task, leading to a deadlock.

- Task A acquires the lock for

M1. - Task A enters

M2and blocks, releasingM2’s lock but still holdingM1’s lock. - Task B, which is responsible for signalling Task A in

M2, attempts to enterM2. - Task B cannot proceed because it needs

M1’s lock to perform the operation that eventually leads to signalling Task A. - Neither task can proceed, resulting in a deadlock.

- Task A acquires the lock for

- Answer: A task calls into monitor M1 and monitor M2, and waits in M2, releasing M2’s monitor lock but not M1’s monitor lock. Because M1’s monitor lock is not released, a signalling task may not be able to enter M2 to signal the waiting task, leading to a deadlock.

- g) When monitors are classified using C (calling), W (signalled), and S (signaller) priorities, explain why monitors are rejected for W = S?

- You can’t just have either signaller or signalled task continue next! bruh.

- Answer: 4. Too confusing because either the signalled or signaller task can randomly continue in the monitor.

- h) Excluding spurious wakeup, name two aspects of a Java monitor that makes it difficult to use. Do not describe them, i.e., very short answers.

- you have barging,

- Answer: only one condition variable and barging

- i) Do you believe in spurious wakeup? (yes or no)

- no

4.

- a) Explain why μC++ does not provide a type generator (language construct) composed of the execution properties: thread, stack, no synchronization/mutual-exclusion.

- Without a stack, a thread has no where to start execution.

- b) Write a code fragment that generates a RendezvousFailure and show how to deal with the failure.

void mem1() {

...

_Throw Exception();

}

void main() {

try {

_Accept(mem1) {

...

}

} catch(uMutexFailure::RendezvousFailure& e) {

...

}

}- c) When a server accepts a member routine and the client waits (blocks) in the member, what happens to the rendezvous and how does the server deal with it?

- Answer: The rendezvous is postponed or subdivided, and the server must fulfill the rendezvous later and unblock the client.

- d) i. Explain why accepting a task’s destructor should not work

- Answer:

_Acceptshould block, run the destructor, and then unblock, but the object is gone (deleted)!. - ii. Explain how μC++ makes it work.

- Answer:

_Acceptcontinues running and the destructor call is postponed on the A/S stack.

- Answer:

- Answer:

- e) After cancelling a future, what happens when the future is accessed and why are aspects of cancellation difficult to deal with?

- Accessing a cancelled future raises an exception

- There is race condition between the canceller and the processing/accessing of the future

5.

- a) Virtual memory increases performance between hardware components __ and __ , while caching increases performance between __ and __ .

- disk and memory

- memory and registers

- b) Explain the concurrent caching problem that might occur in the following code:

- Answer: The heap memory-allocator may place variables x and y on the same cache line resulting in false sharing.to-understand

- c) Sequential optimization allows reordering Rx → Wy to Wy → Rx. Show how this reordering is possible when creating synchronization and explain the failure scenario.

- Answer: Allows reading of uninserted data

- Why are we not swapping R and W?to-understand

- d) A GPU is a coprocessor of the CPU. Why does this architecture cause a bottleneck in processing data by the GPU?

- Answer: All data to be processes must be copied from the CPU to the GPU, and all results copied back.to-understand

- e) Explain how the Ada requeue statement may be used to simulate internal scheduling using external scheduling.

- No idea.

- Answer:

requeuecancels the current call (request) to a task, reschedules the call on another (usually non-public) mutex member of the task, and accepts it later.to-understand

- f) In the Go programming language, threads are anonymous, precluding direct communication. Explain the alternative communication mechanism used by Go threads.

- use channels to communicate between threads

- Answer: Go uses channels to support direct communication. Go uses a select statement to choose among a number of channels for data or block until data arrives.

- g) Are Java executors an implicit or explicit concurrency system?

- implicit

- programmer doesn’t manage

6.

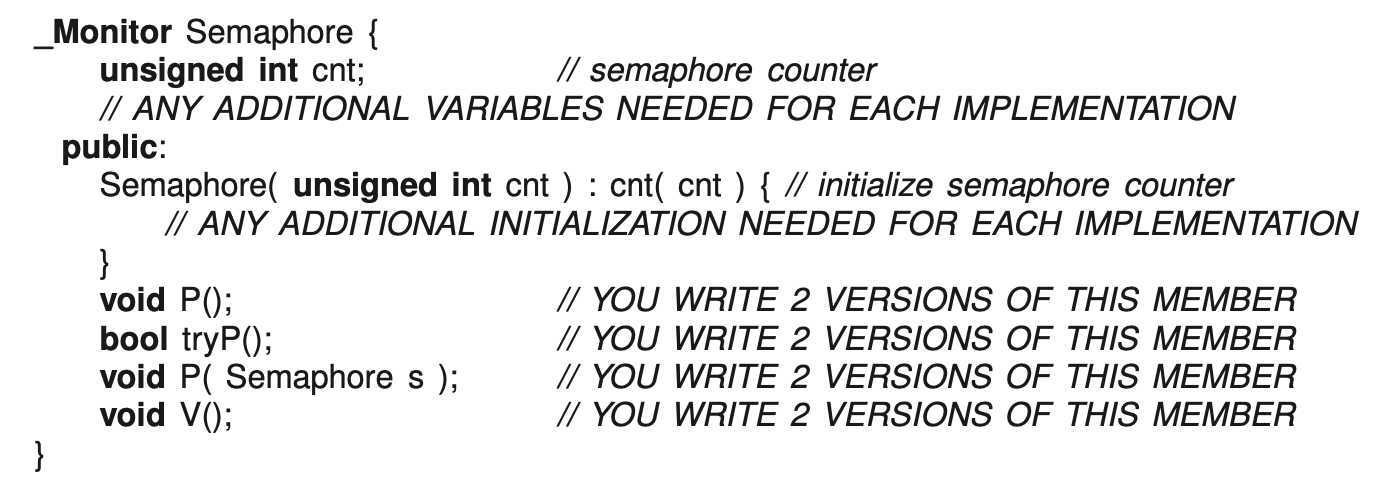

A counting semaphore lock performs synchronization and mutual exclusion. Using a μC++ monitor,

write a counting semaphore implementing the P, tryP, P(s), and V semaphore operations using:

- a) external scheduling

- b) internal scheduling

The semaphore class has the following interface (you may add a public destructor and private members):

External scheduling:

_Monitor Semaphore {

unsigned int cnt;

public:

Semaphore(unsigned int cnt) : cnt(cnt) {}

void P(){

if(cnt == 0) {

for(;;) {

_Accept(V) {

} _Accept(try P()){

}

} // for

} // if

cnt--;

}

bool tryP(){

if(cnt == 0) {

return false

}

cnt--; // why? didn't have it initially

return true;

}

void P(Semaphore s) {

s.V();

P();

}

void V() {

cnt++;

}

}counter of a semaphore only goes from 0 to 1.

P(Semaphore s)This sequence can occur in scenarios where:

- A higher-level resource allocation must be made available (

s.V()). - Immediately after, the current process or thread attempts to acquire the resource (

P()). This could be because other tasks may also compete for the resource, and the code ensures the resource is claimed for use immediately after signalling availability.

Internal scheduling:

_Monitor Semaphore {

unsigned int cnt;

uCondition bench;

public:

Semaphore(unsigned int cnt) : cnt(cnt) {}

void P(){

if(cnt == 0) bench.wait();

cnt--;

}

bool tryP(){

if(cnt == 0) return false;

cnt--;

return true;

}

void P(Semaphore s) {

s.signal();

P();

}

void V() {

cnt--;

bench.signal();

}

}7.

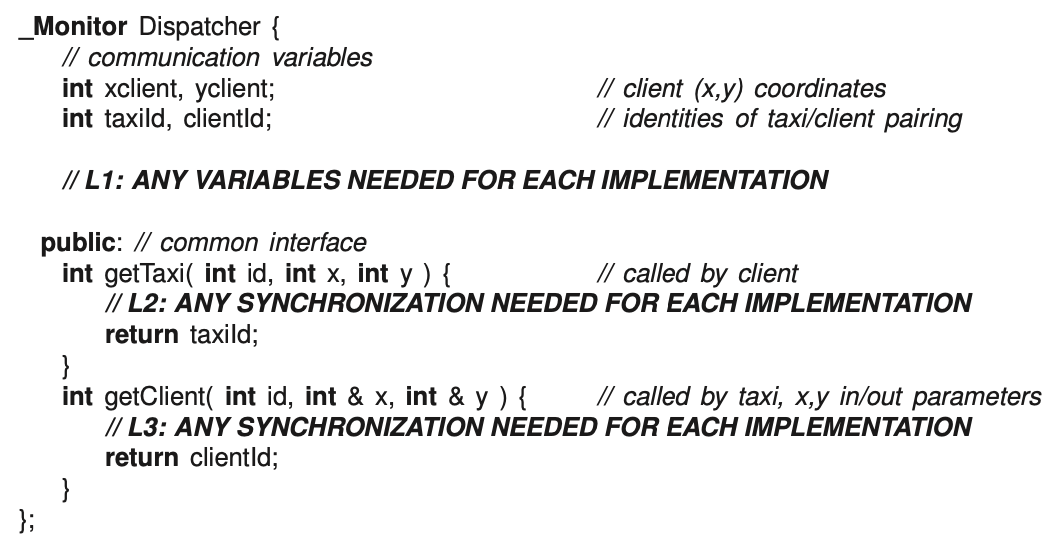

Write an administrator task for the Maple Leaf Taxi company. At Maple Leaf Taxi, there are N taxis located throughout the city. The dispatcher’s job is to take requests from clients for a taxi and to dispatch the closest taxi to the client for a pickup. The dispatcher also takes requests from taxis for the next client at the start of the day and after each client is delivered to their destination. Figure 1 contains the starting code for the dispatcher administrator task (you may not change this interface; you may add private members in the task). (Do not copy the starting code into your answer booklet.)