CS341: Algorithms

A continuation of CS240 Everything can be found on Learn. Taught by Armin Jamshidpey in W2024. Really enjoyed this course.

Lecture notes from another prof: https://cs.uwaterloo.ca/~lapchi/cs341/notes.https://cs.uwaterloo.ca/~lapchi/cs341/notes.htmlhtml

Textbook CLRS is very good. Textbook answers: https://github.com/wojtask/clrs4e-solutions.

Midterm Midterm CS341

Final - Tuesday April 16 2024

Some information about the final exam:

- It covers Lec8-Lec18. The topics are mainly about greedy algorithms, dynamic programming, and NP-completeness. We won’t specifically ask a direct question about Lec1-Lec7 (which were tested for the midterm), but we assume knowledge from these lectures (e.g. we can use DFS to check whether a directed graph is acyclic, use BFS to compute shortest paths, etc) and you may need to use them.

The proof of the Cook-Levin Theorem will not be asked. From Lec17 and Lec18, you don’t need proofs.

-

No notes and books allowed. No electronic devices (e.g. calculators) allowed. There is no reference sheet.

-

You can assume the material in the notes without providing details. You can also assume the content in CS 240 and Math 239 without providing details. No other assumptions can be made without providing details, not from homework, tutorials, reference books, etc. For example, if we ask you a question that is from homework/tutorials, we expect you to answer this question from scratch (instead of saying that we have done it in homework/tutorials).

-

You will be given partial marks even though you do not know how to solve the problem completely (e.g. having a slower algorithm, having some rough ideas about the reductions, etc).

-

For preparation, go through the lecture notes, the homework, the tutorial notes, and the good problems in textbooks (CLRS, KT, DPV).

-

Make sure you read each question on the exam carefully and completely

Topics:

- Dijkstra’s algorithm

- Greedy algorithms

- MST

- Kruskal’s algorithm

- Dynamic Programming

- NP

Focus on later part of the course. Apparently L17 and L18 don’t need to know. Just know the NP-complete problems: https://piazza.com/class/lr45czd8jab7cz/post/1200

To review:

- Recursion tree

- Review midterm

- Do final practice problems

- Do all tutorials

- Focus on NP problem (they are going to ask at least one or two)

Concepts

Lecture 2

Lecture 3 - 4

- Master Theorem

- Recursion-Tree Method

- Recurrence Relations

- Karatsuba Algorithm

- Strassen Algorithm

- Median of medians

- Quickselect - selection algorithm

Lecture 5

Lecture 6

Lecture 7

Lecture 8

Lecture 9

Lecture 10

Lecture 11

- Dynamic Programming

- Interval Scheduling Problem

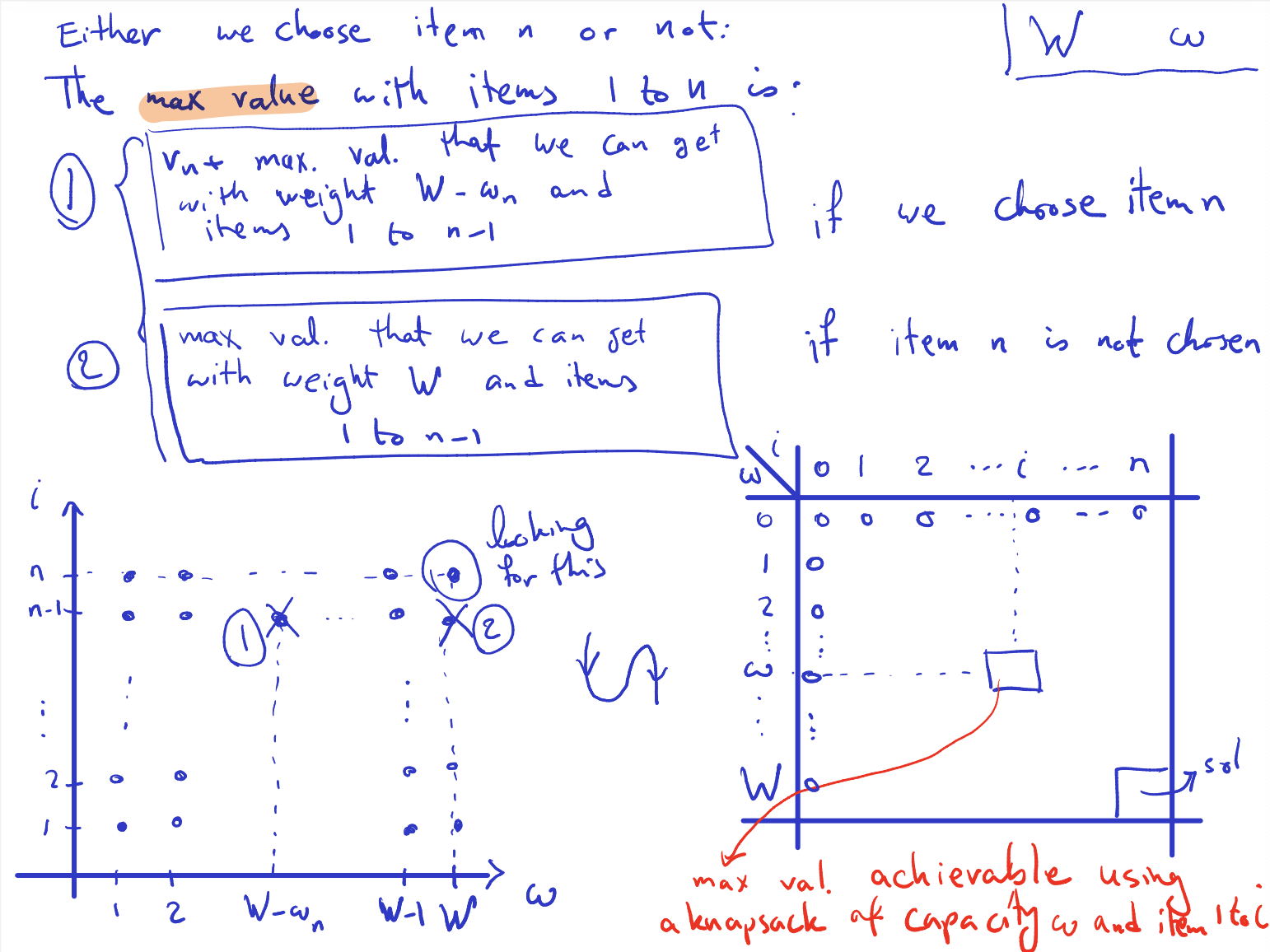

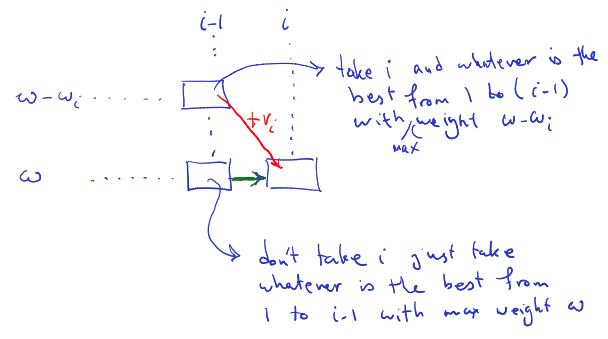

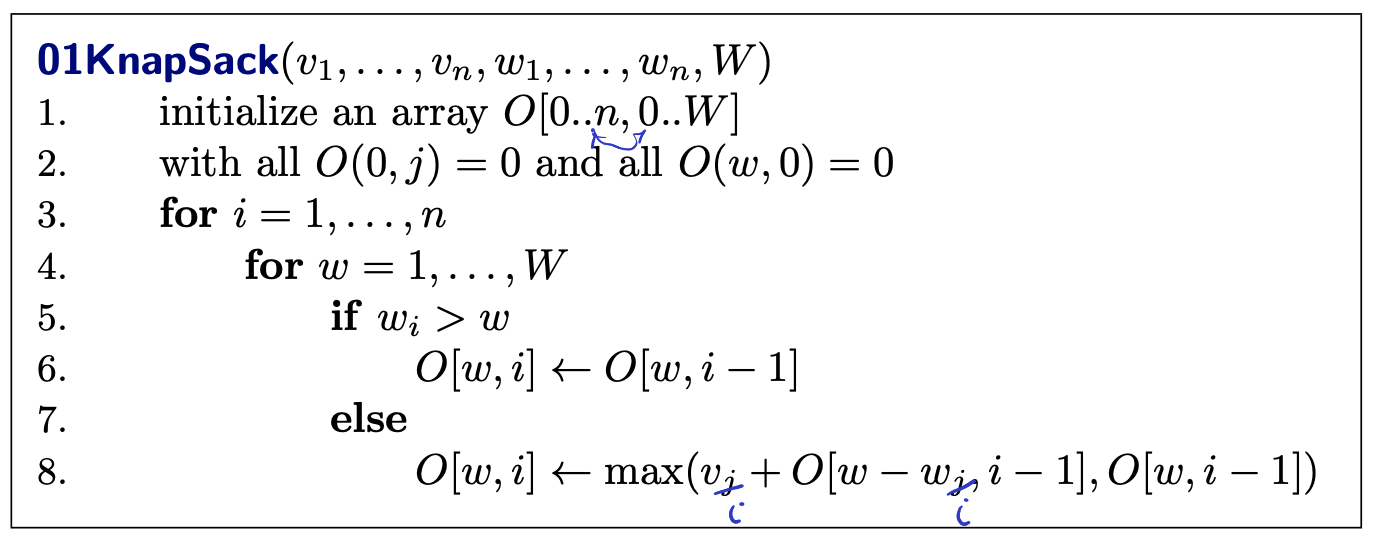

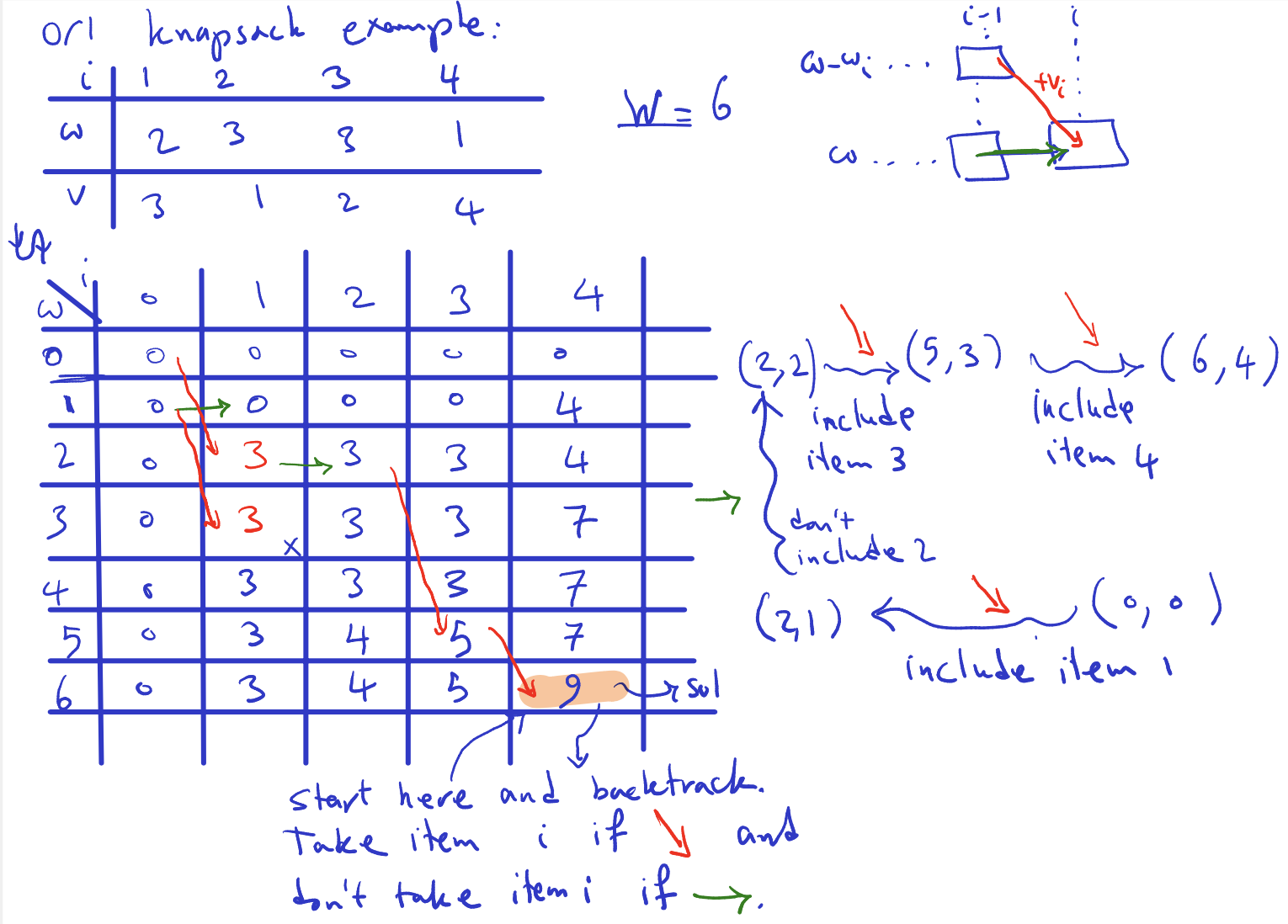

- Knapsack Problem

Lecture 12

Lecture 13

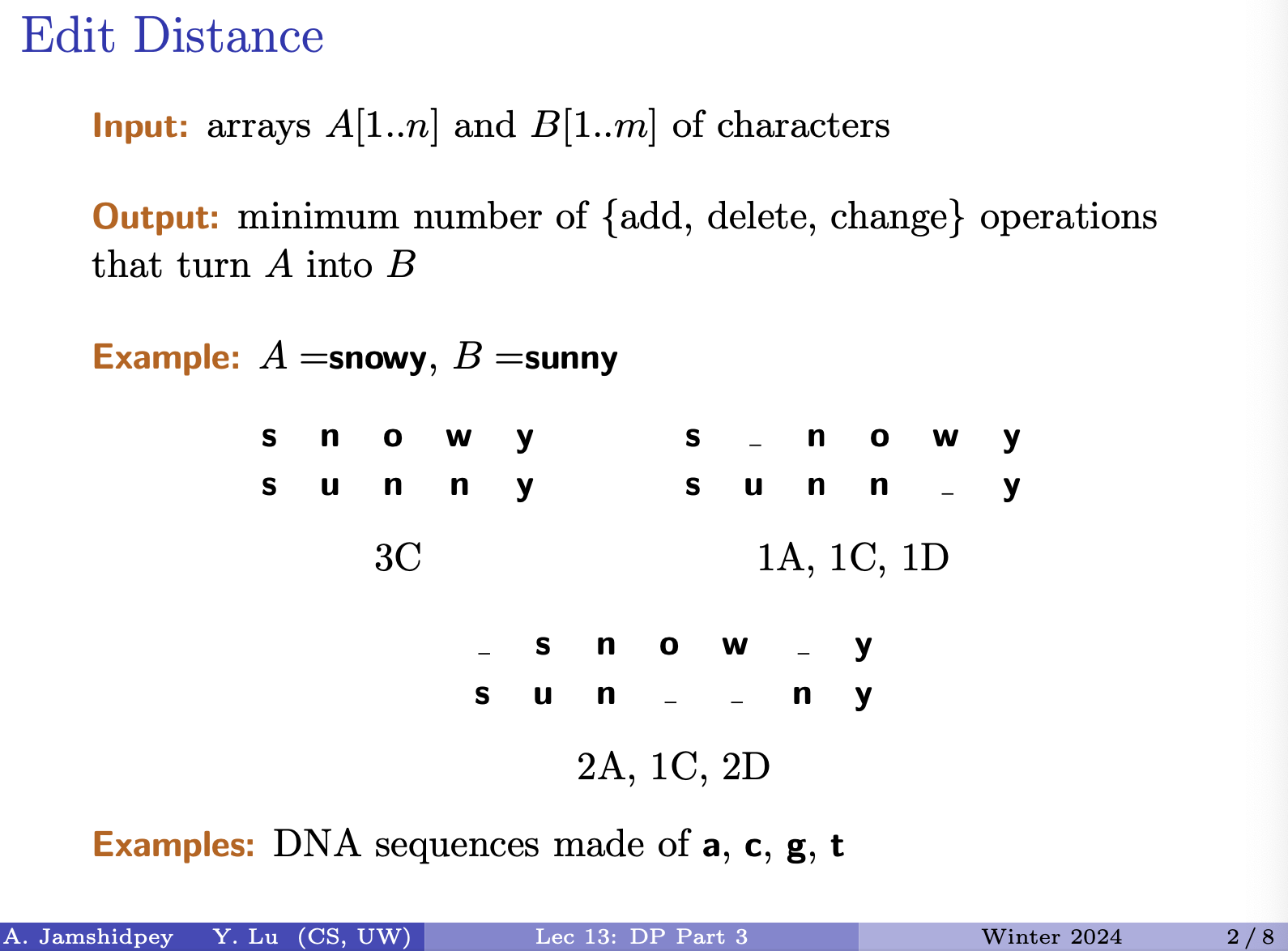

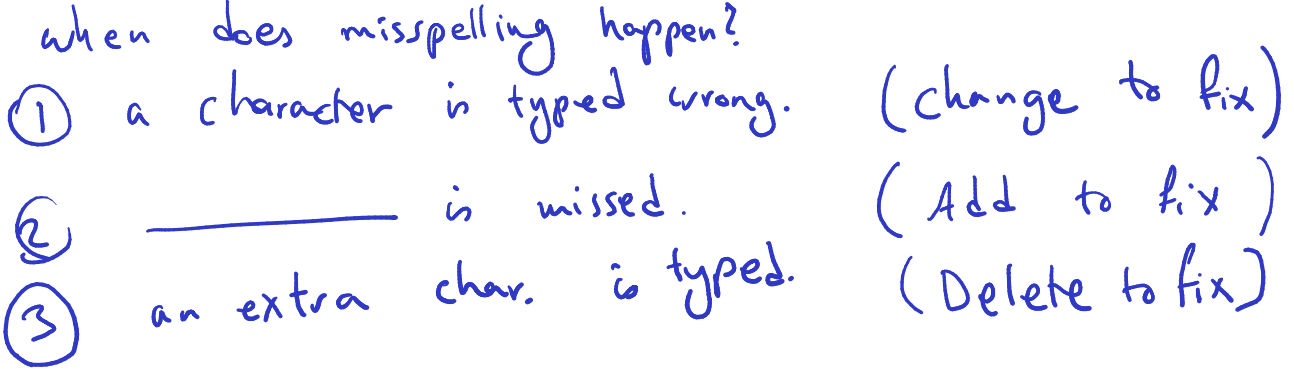

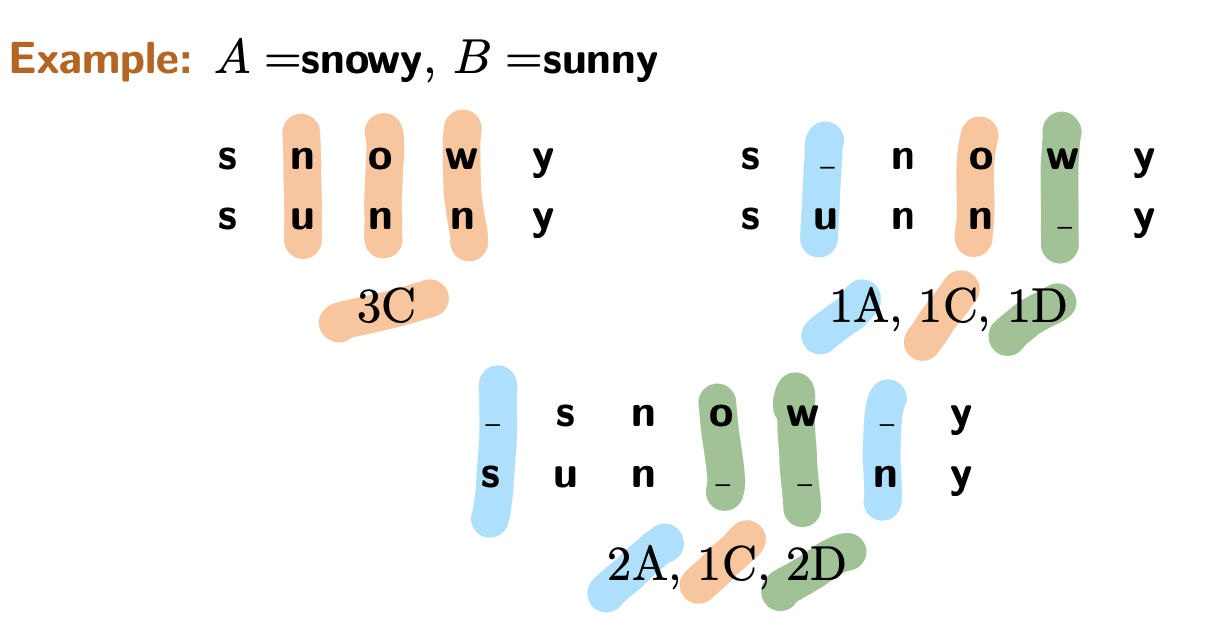

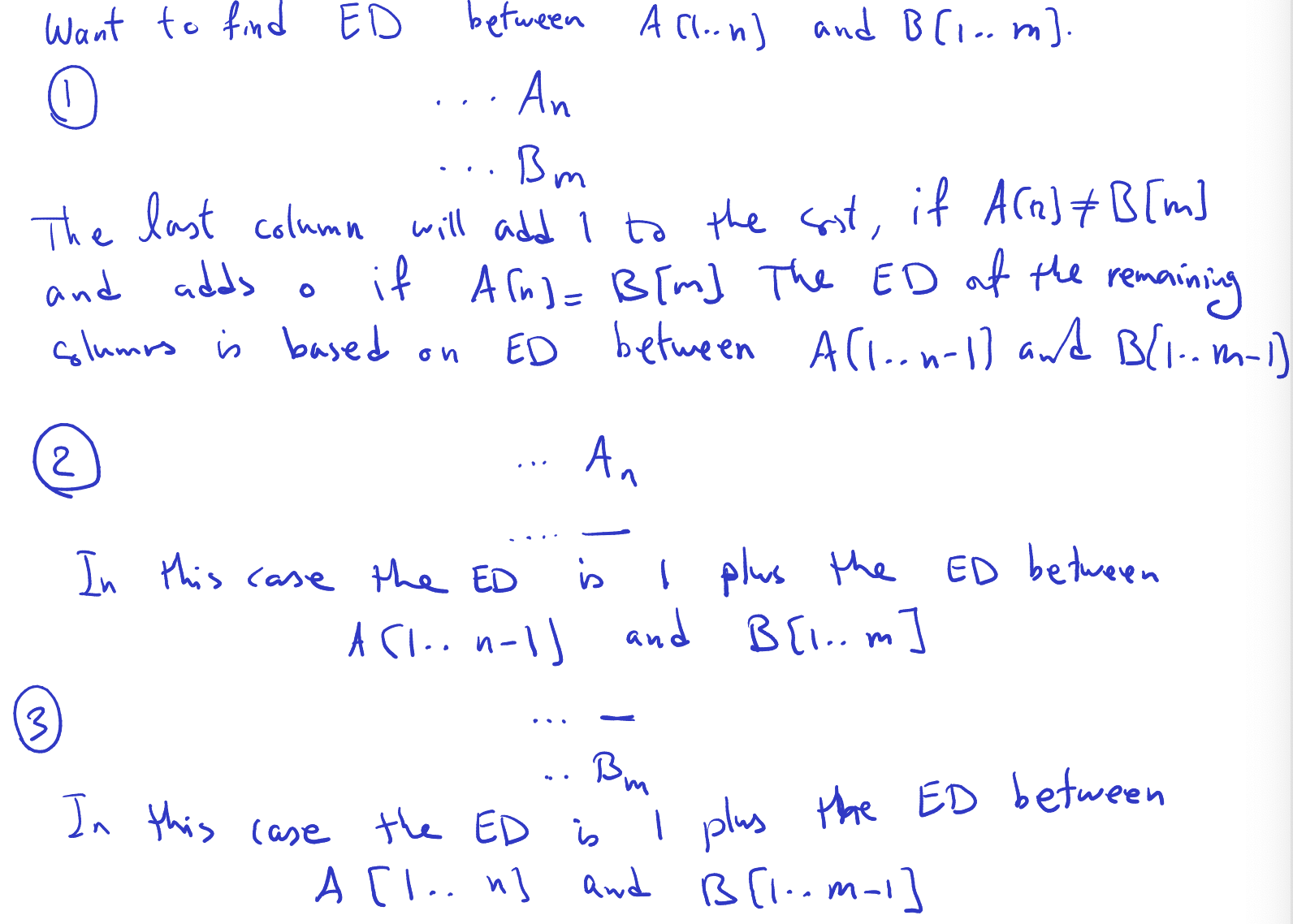

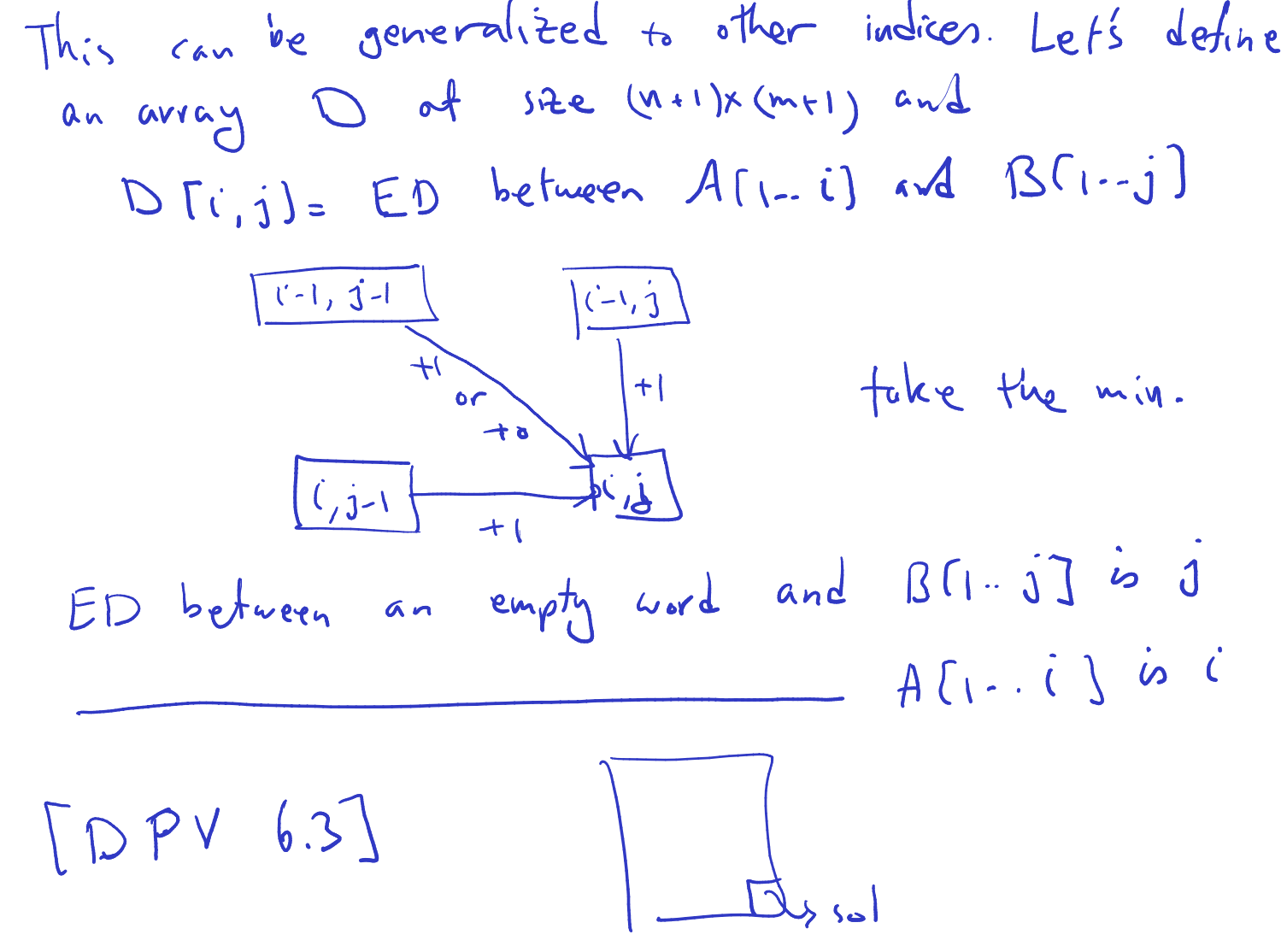

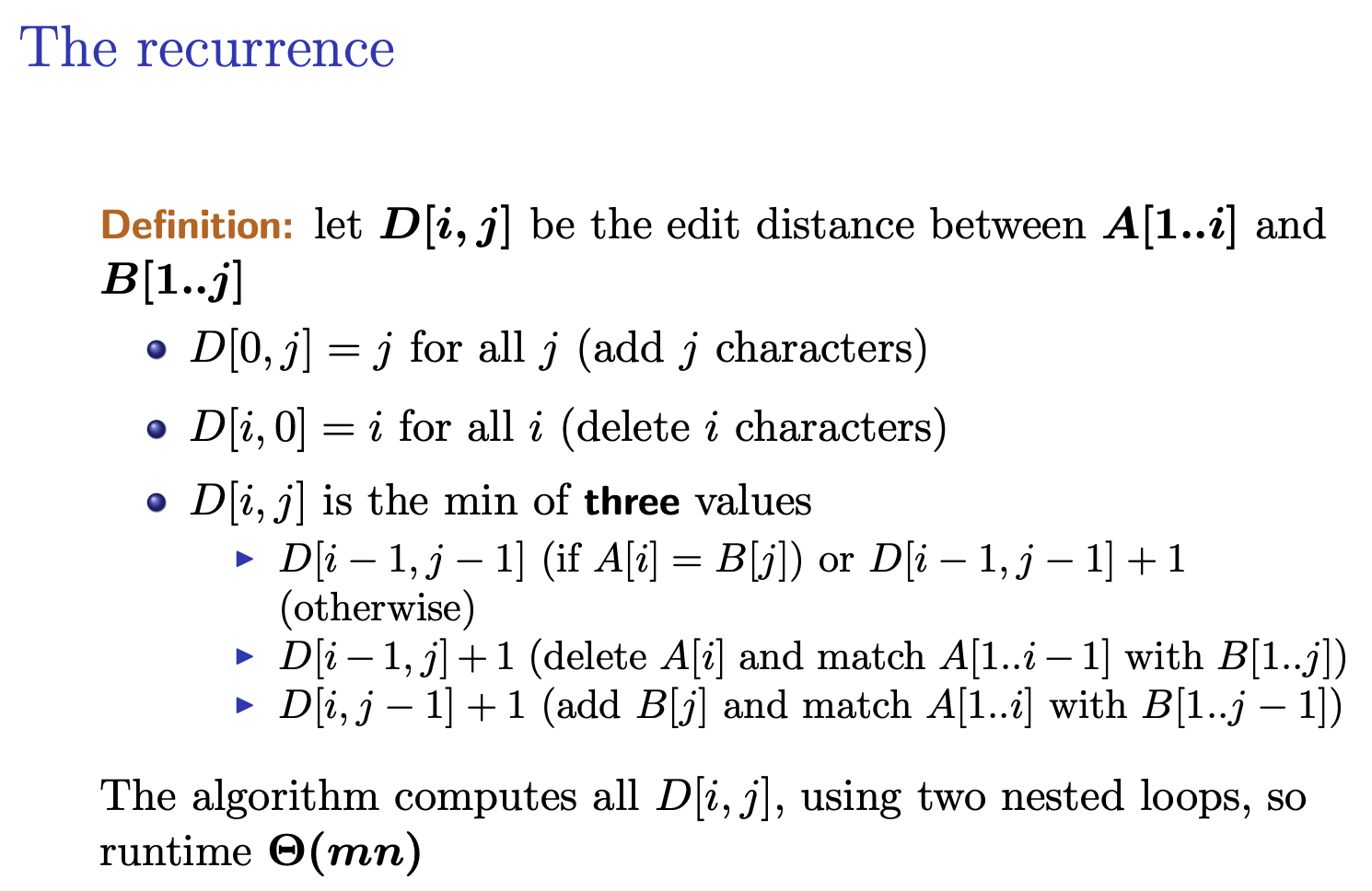

- Edit Distance

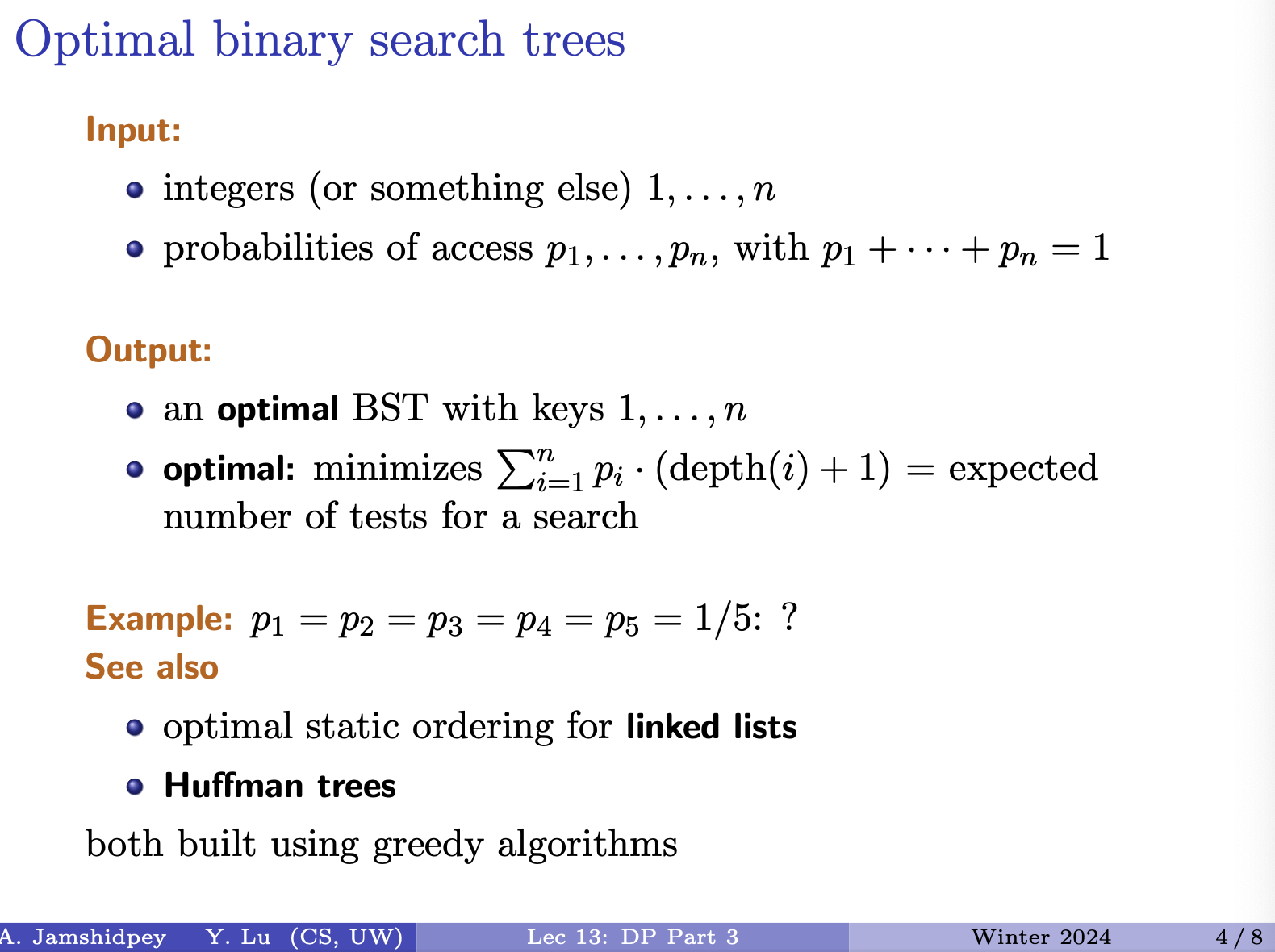

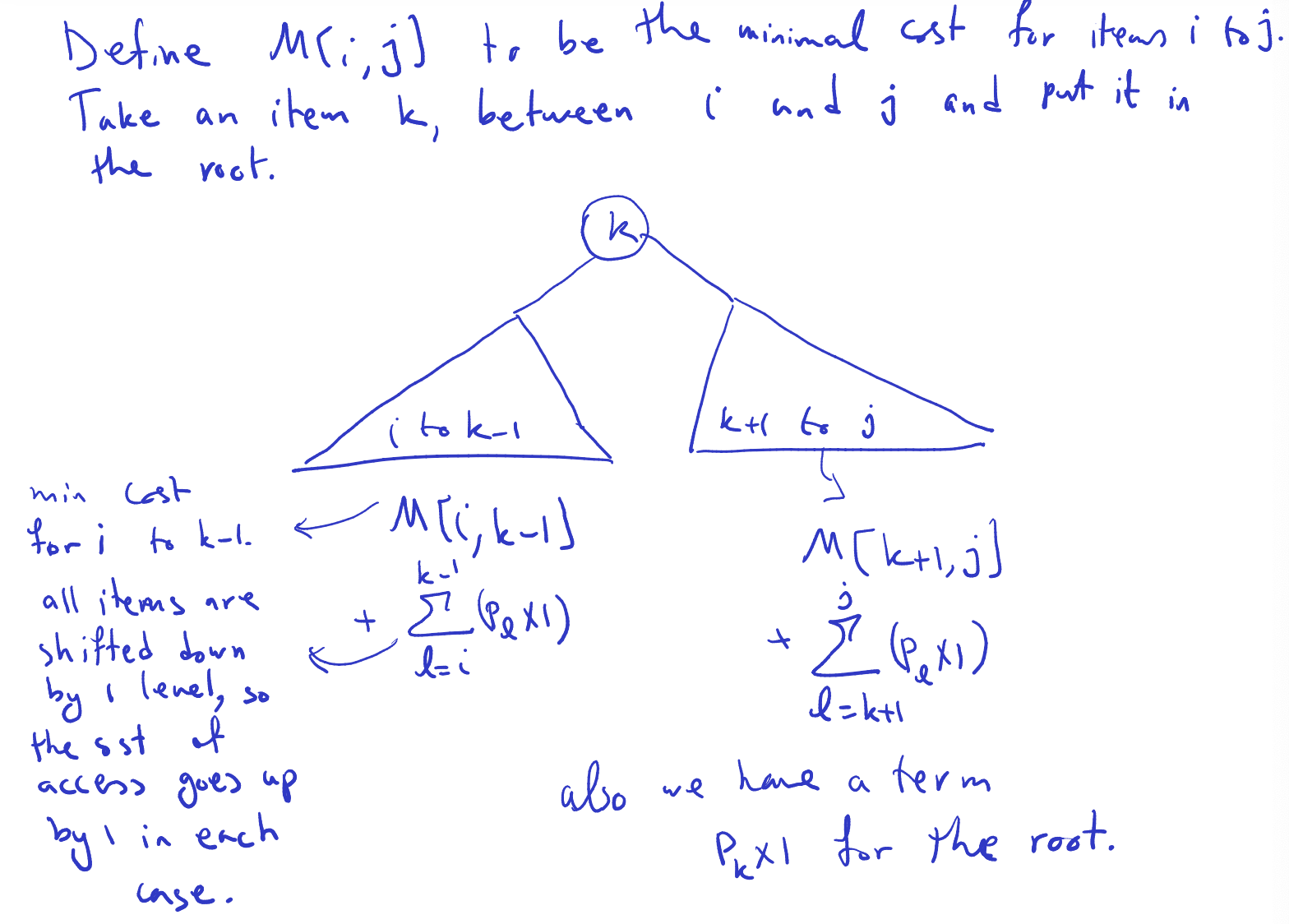

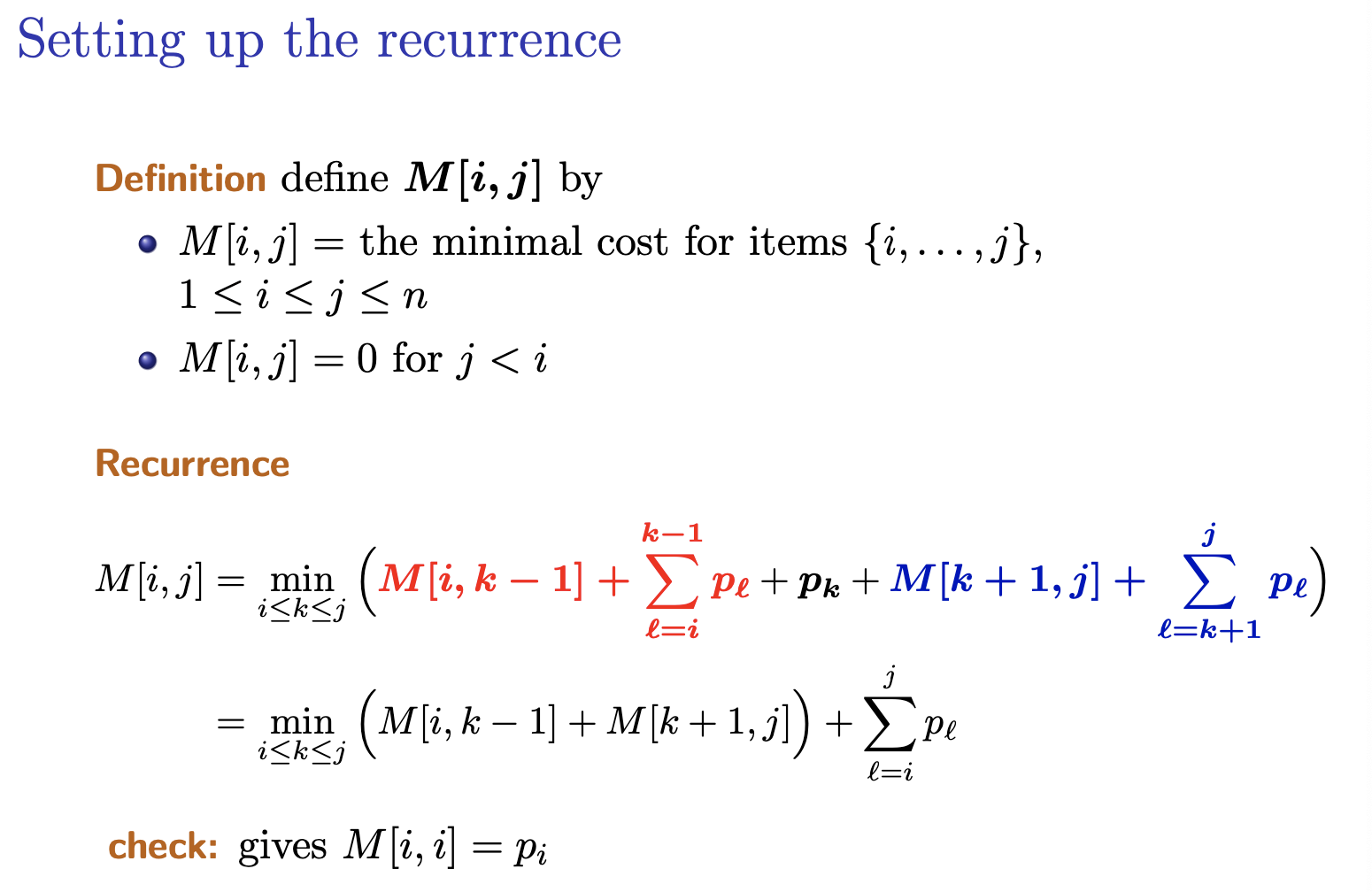

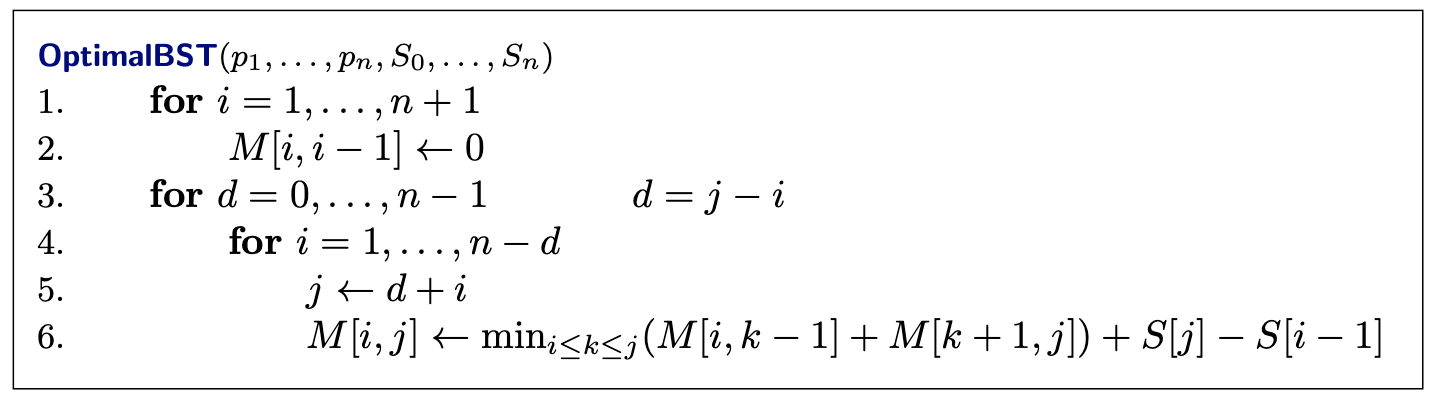

- Optimal Binary Search Trees

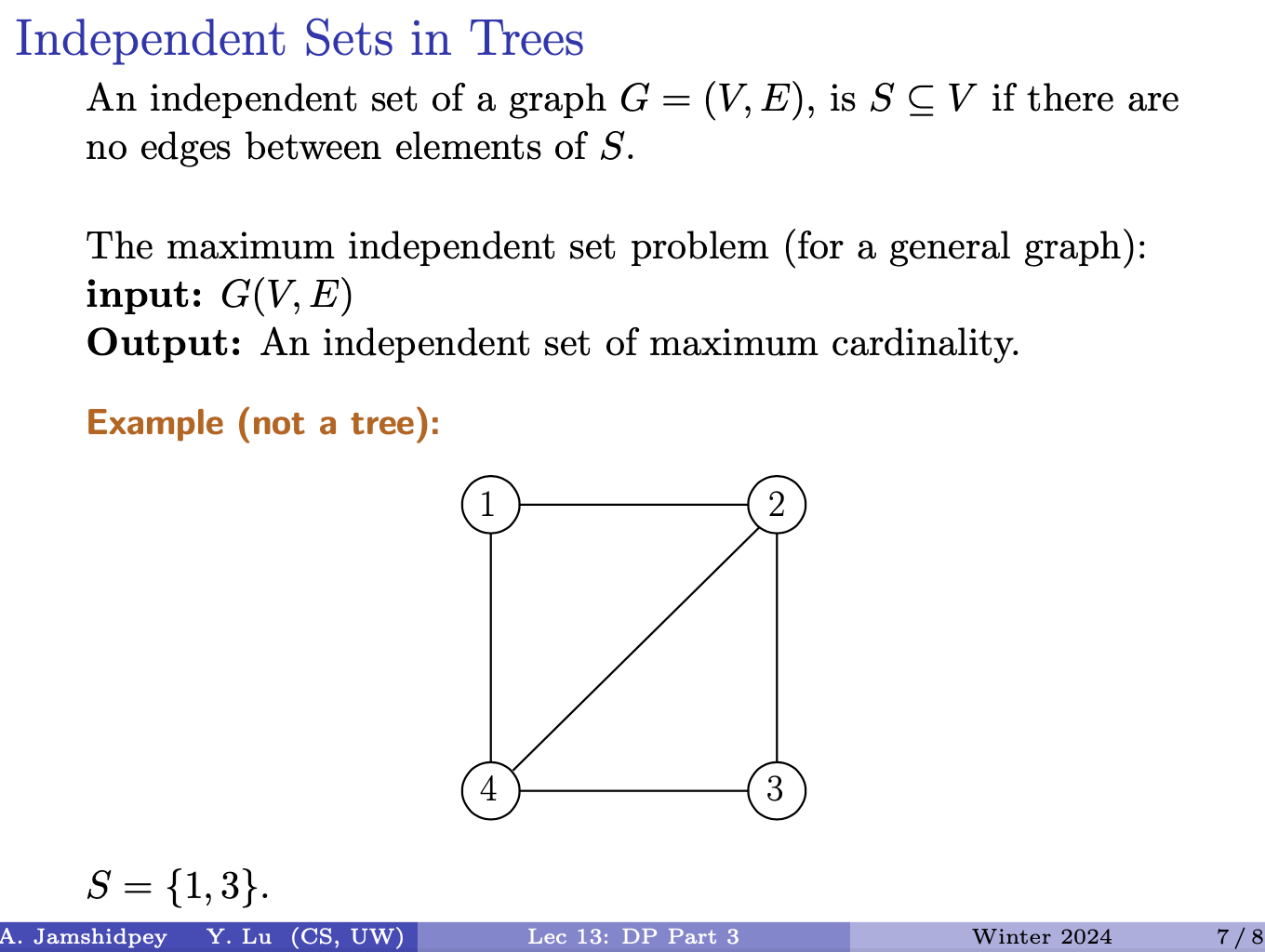

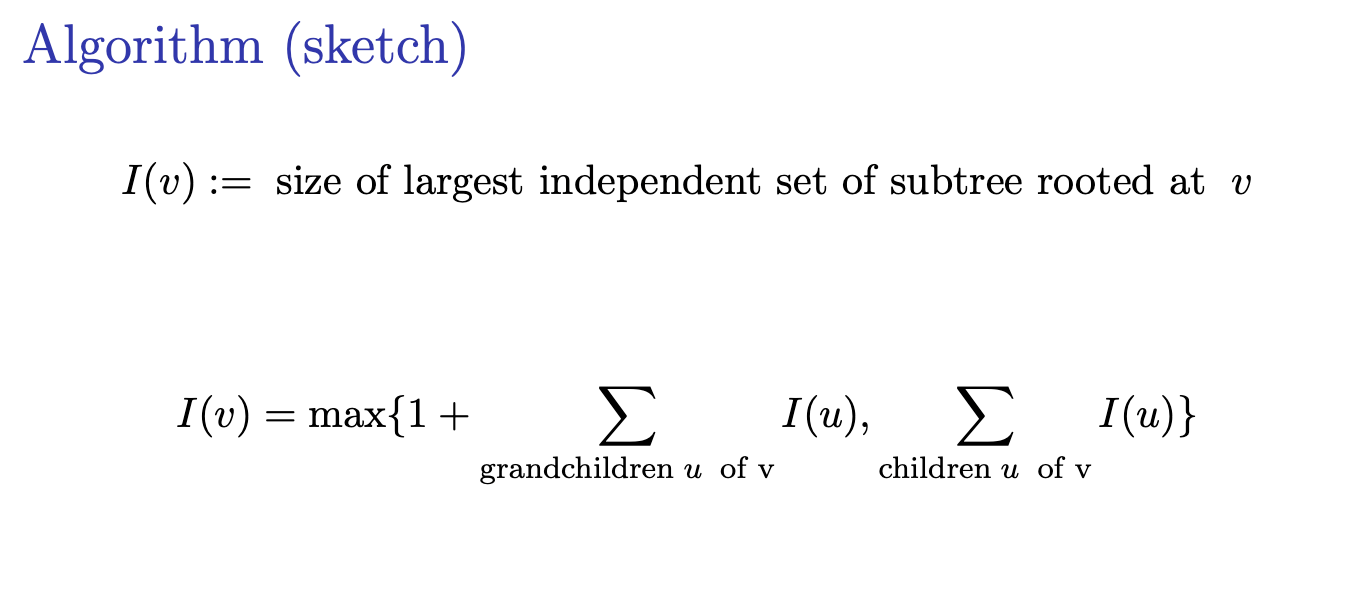

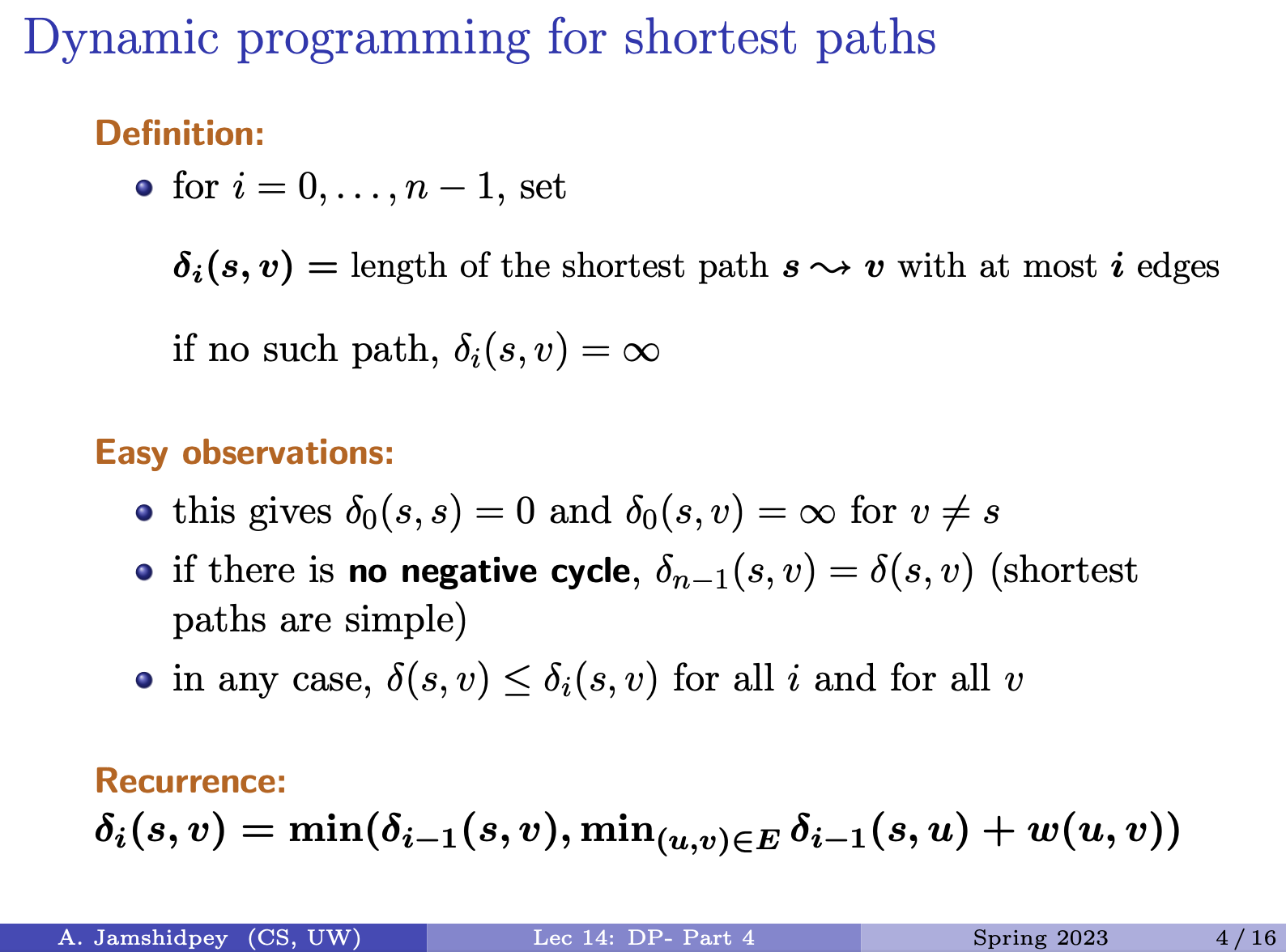

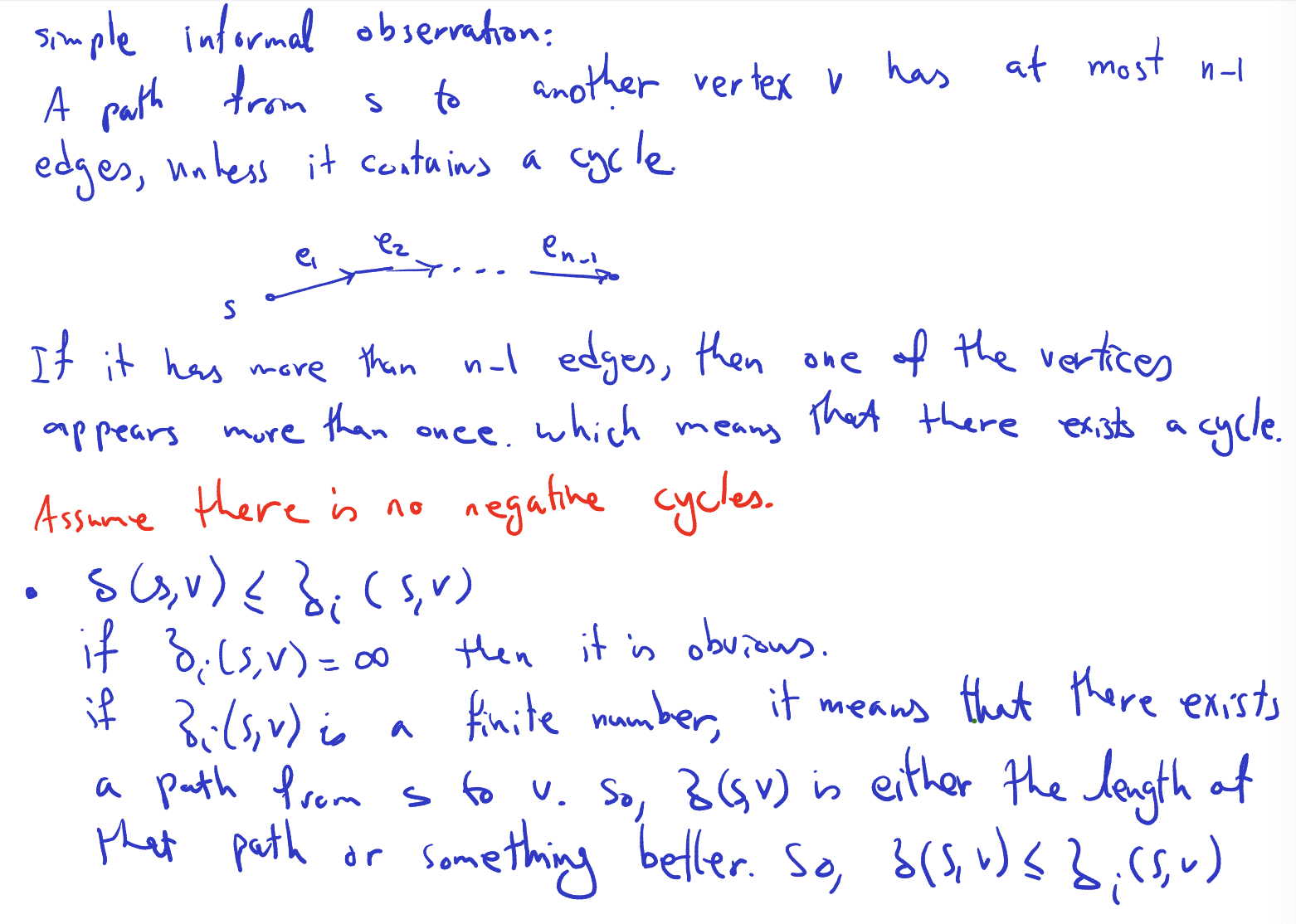

- Independent Sets in Trees

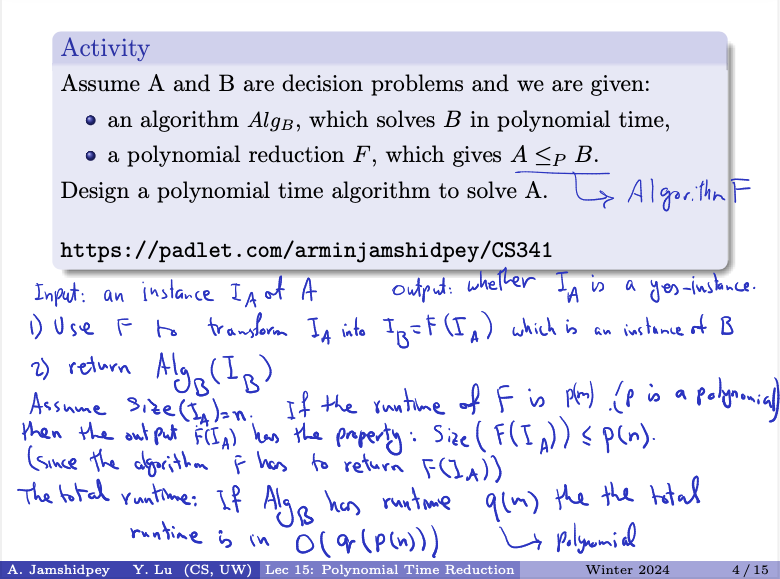

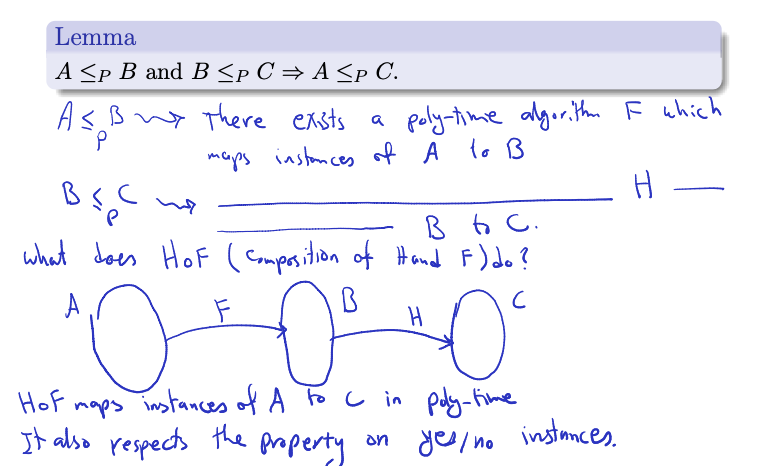

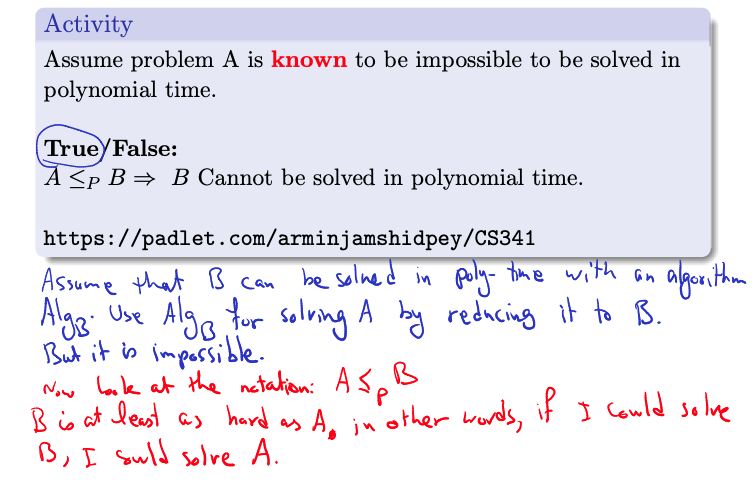

Lecture 15

Lecture 16

Lecture 17 and Lecture (don’t worry about it)

Lecture 3 - Divide an Conquer

Example - Counting inversions

Collaborative filtering:

- matches users preferences (movies, music, …)

- determine users with similar tastes

- recommends n ew things to users based on preferences of similar users

Goal: given an unsorted array , find the number of inversions in it.

Def

is an inversion if and

Example: with

A=[1,5,2,6,3,8,7,4], we get

Remark: we show the indices where inversions occur

Remark: easy algorithm (two nested loops) in

Lecture 4 - Divide and Conquer

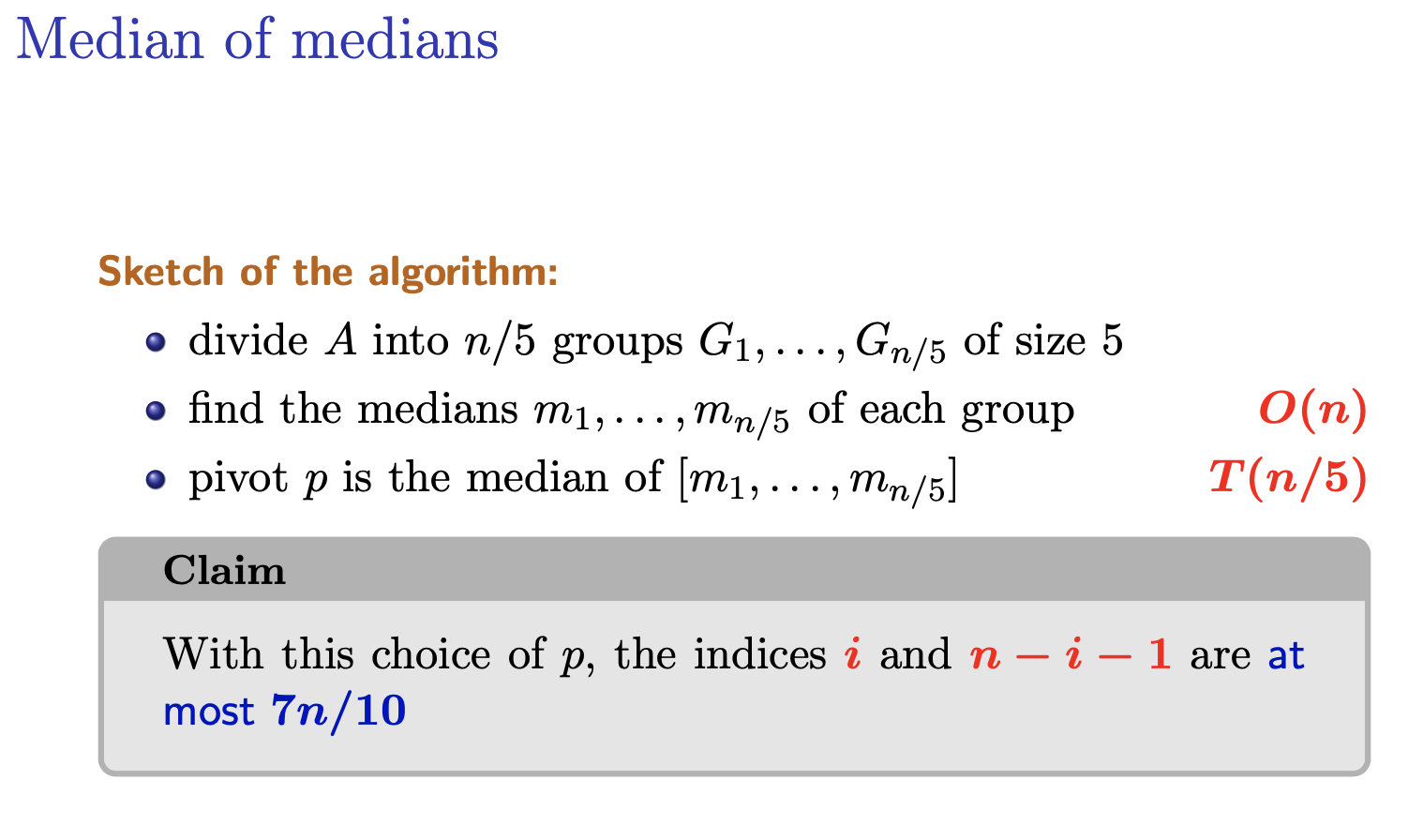

- The Selection Algorithm We don’t choose the pivot randomly now.

How to find a pivot such that both

iandn-i-1are not too large?

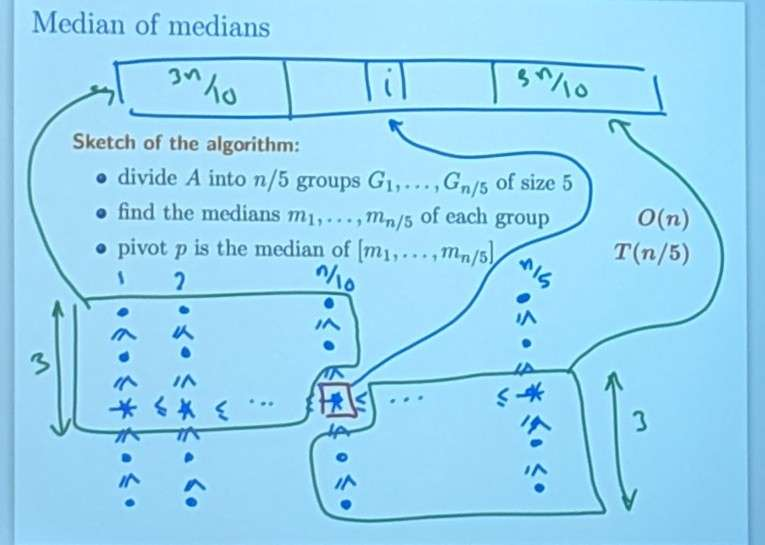

For each group, we spend to find the medians. Then we have to find the median of medians. Why?

For each group, we spend to find the medians. Then we have to find the median of medians. Why?

Why is the runtime of median of medians

O(n)?T(n) = T(n/5) + T(7n/10) + O(n) is the expression given in the slides T(n/5) and O(n) term is from finding median of medians in the groups of 5 This is from selectPivot() The current array is broken up into groups of 5 (takes O(1) time) There are n/2 groups of 5 Calling quickselect on an array of size 5 is fixed time operation (takes O(k) = O(1)) but there are n/5 groups so finding the median of every group of 5 will take O(n) time Thus the terms T(n/5) + O(n)

T(7n/10) is the worst case for the size of the next array This worst case is bounded by the pivot selection from selectPivot(), proof of this in slides (the maximal size of the next recursive call’s array is 7n/10)

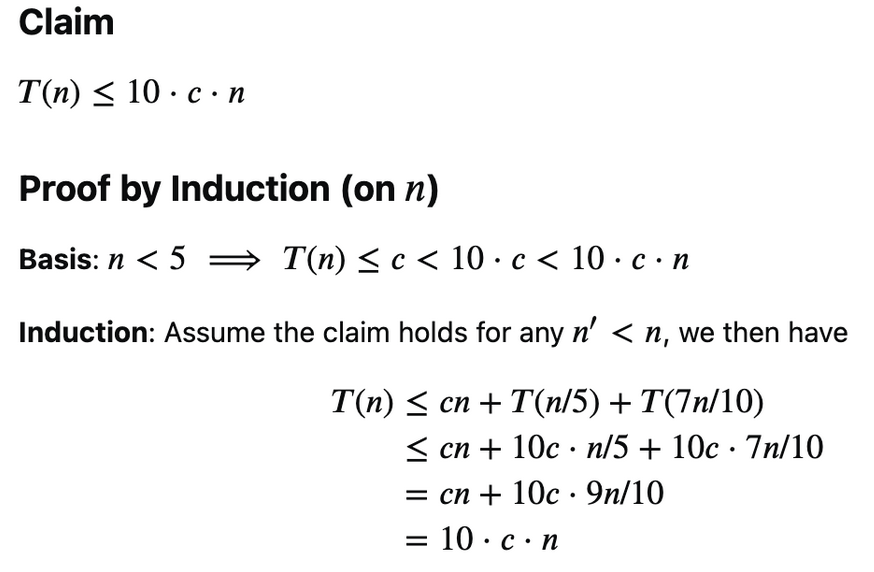

Its an induction proof to show why

Note, in quicksort, you have to sort both sides of the array after the pivot step. In median of medians, after the pivot step, you only have to consider one part of the array, the one that has the median.

and are the sizes of the right and left subarrays. and .

He’s not sorting each group. After finding the median of medians, you can reorder the element (the columns) in the picture.

He’s not sorting each group. After finding the median of medians, you can reorder the element (the columns) in the picture.

After calling the partition algorithm, we get an array where the median of medians is at some index . The values in the green area is smaller than the median of medians. All these values goes before the index. Same argument can be done to the bottom right corner. In the green box, the elements are going to be larger and will appear on the left in the array .

- is the runtime to find the median of medians. we ensure that the pivot chosen is at least in the middle 30%

- Because in the median of medians algorithm, the key idea is to ensure that the pivot chosen is not too small or too large compared to the rest of the elements in the array. Specifically, the pivot selected by the median of medians algorithm is guaranteed to be within the middle 30% of the sorted order of the array.

- Worst case is the recursive call , because it’s quick select and not quick sort. only 7 branches and we ignore the other 3 branches? since quickselect does not partition the array into equal parts, rather it focuses on only one side of the pivot (where the desired element should be located). so worst case, only one side of the array is considered

- is the extra operations to be done, in this case, partitioning We can prove the runtime to be

Why are we grouping them in groups of 5?

Might not work with other grouping numbers. We will see later.

Module 3

Lecture 5 - Breadth First Search

not gonna do bfs and undirected graphs

Goals:

-

basics on undirected graphs

-

undirected BFS and applications (shortest paths, bipartite graphs, connected components)

-

undirected DFS and applications (cut vertices)

-

basics on directed graphs

-

directed DFS and applications (testing for cycles, topological sort, strongly connected components)

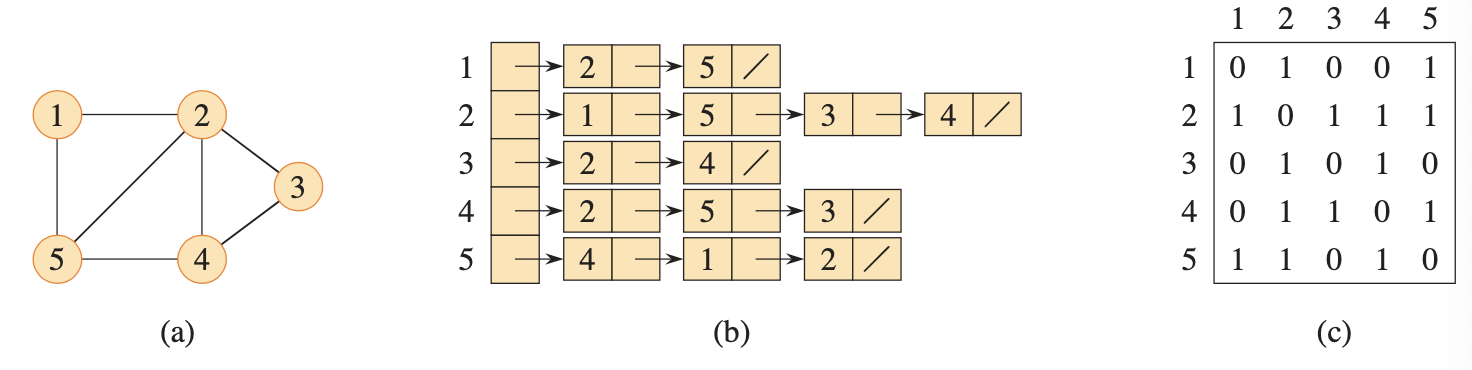

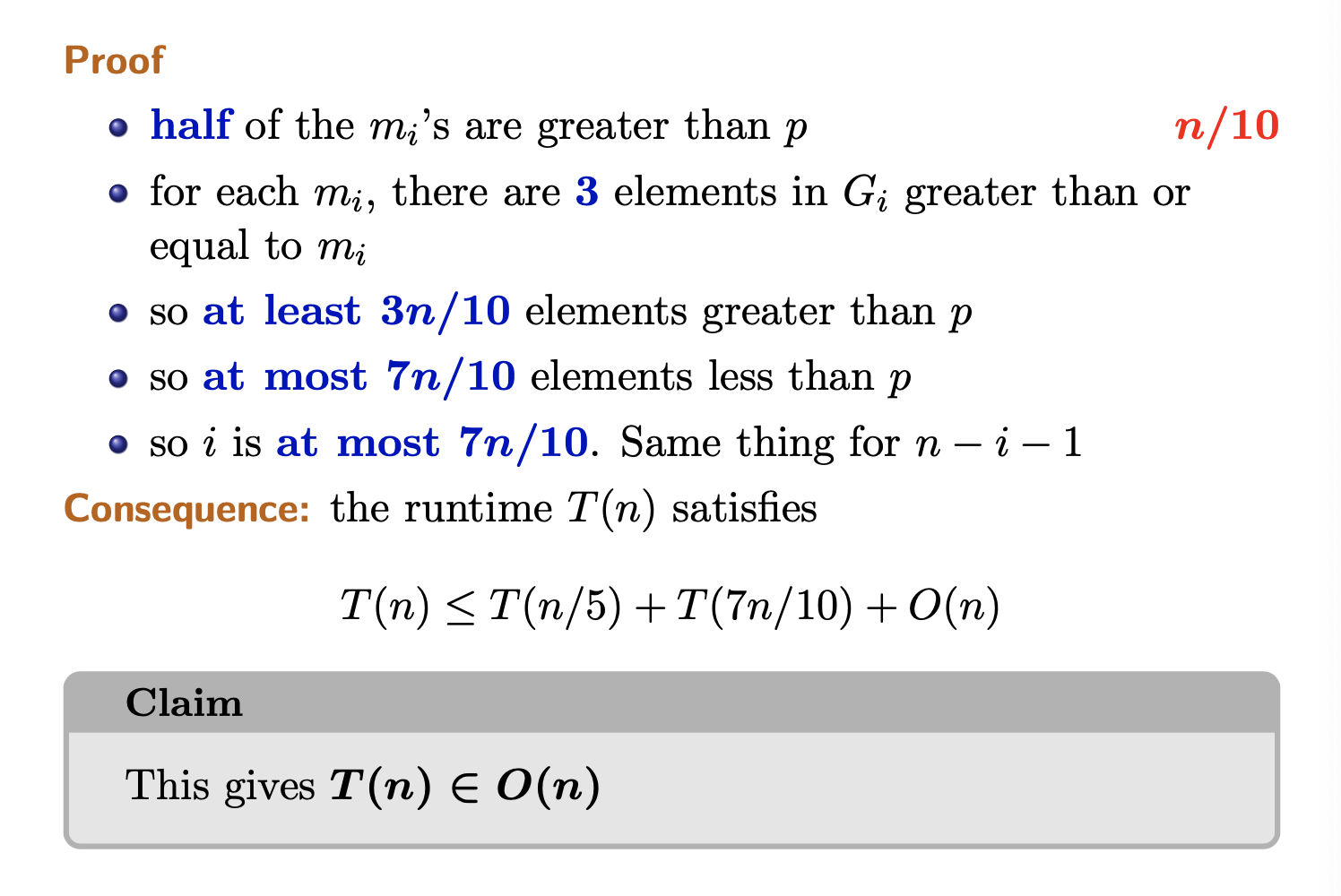

Draw the representation of an undirected graph as an adjacency list and matrix for this graph

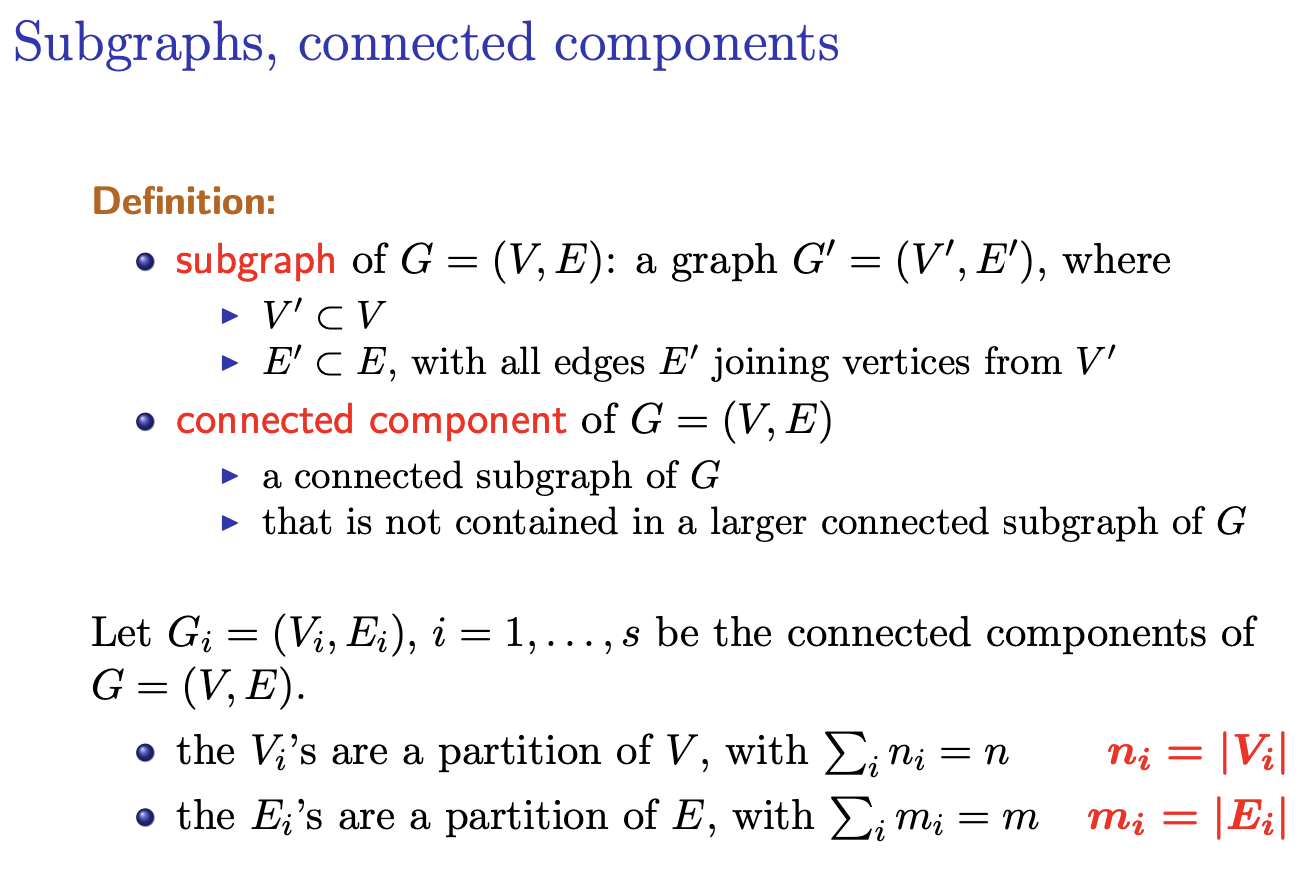

Some definitions

BFS

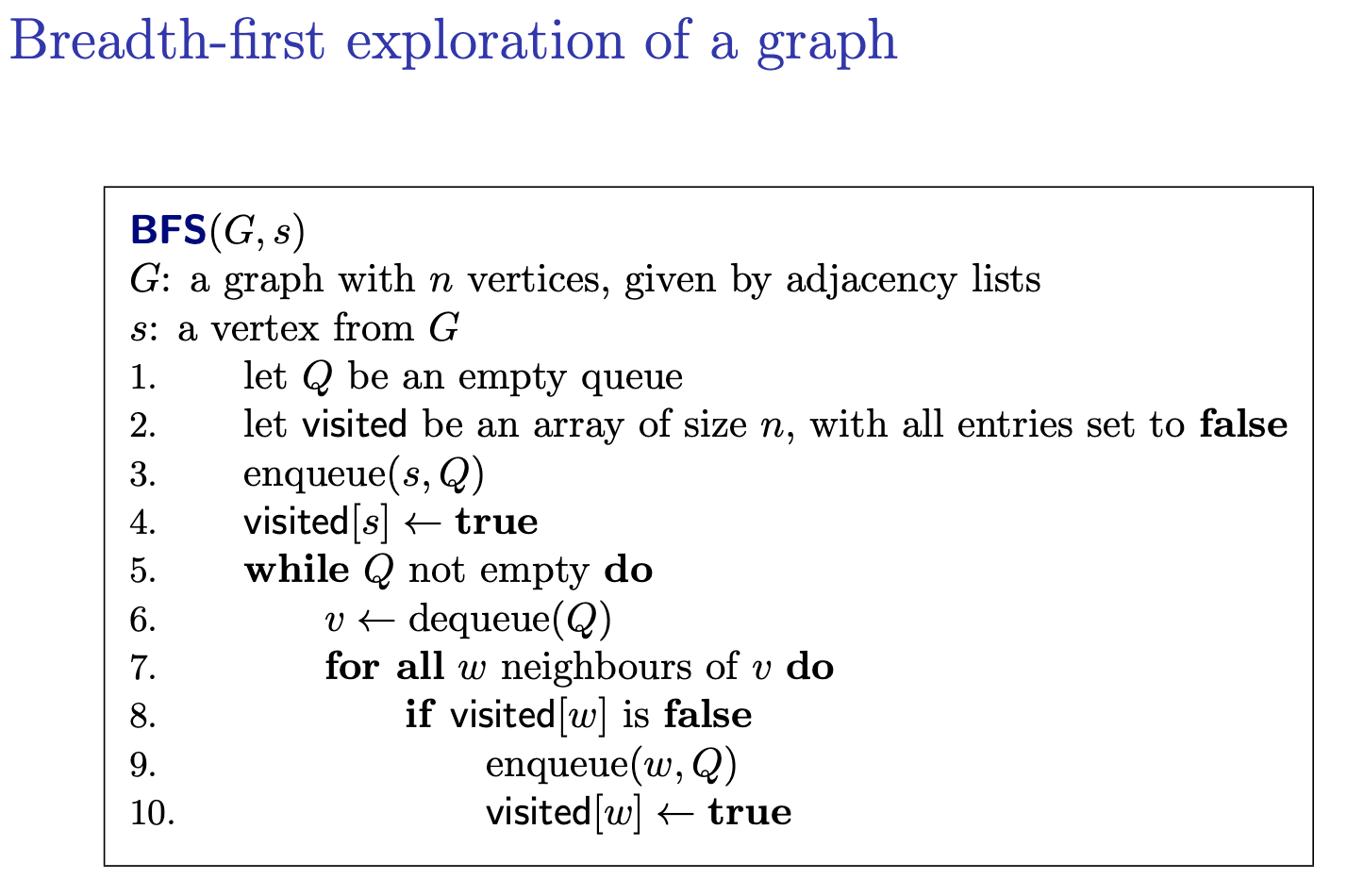

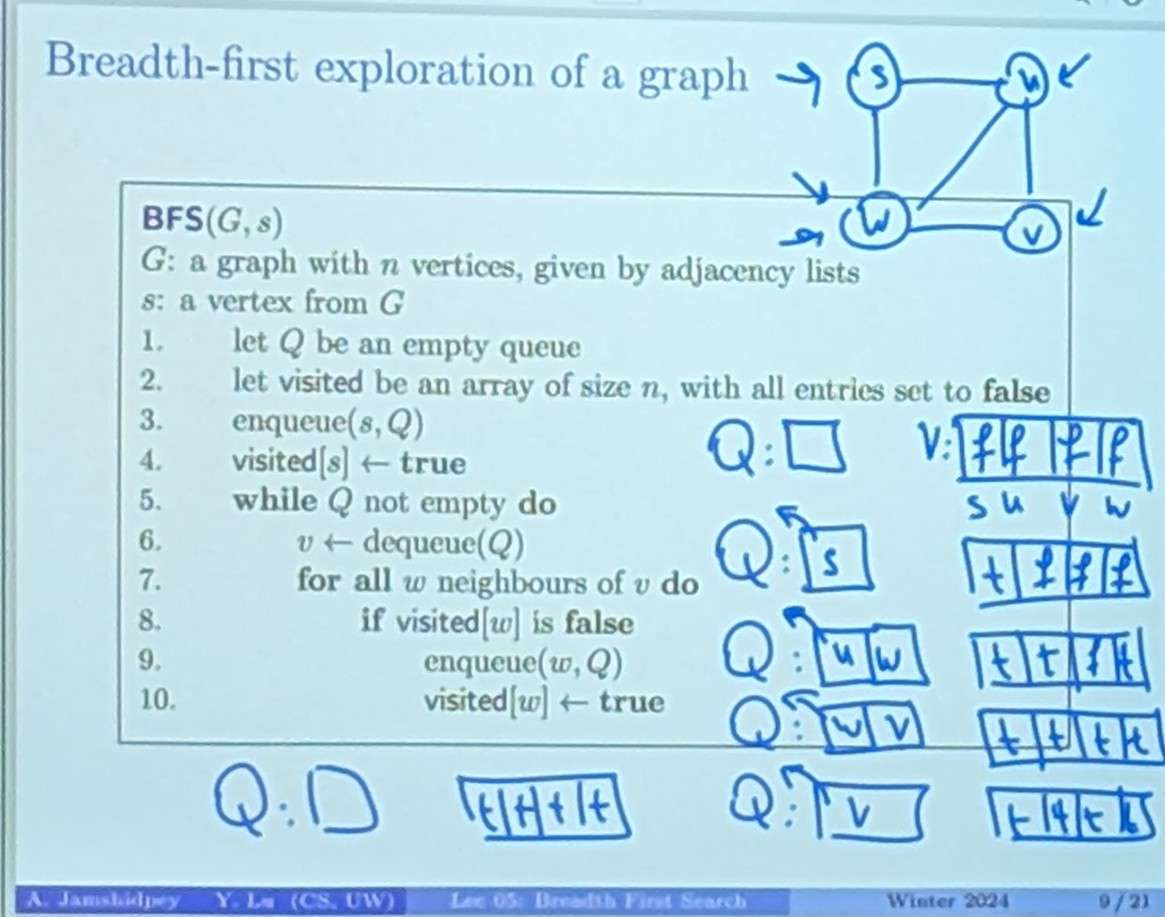

As long as the queue is not empty based on the order of queue. Take things out. and look at all the neighbours. If the neighbours is not visited, we enqueue it.

Time Complexity

Analysis:

- each vertex is enqueued at most once

- so each vertex is dequeued at least once

- so each adjacency list is read at most once (since we visit all the neighbours of a vertex only if it has not been visited)

For all , write number of neighbours of length of degree of .

cf. the adjacency array has cells (Handshaking Lemma)

Total:

Breadth-first exploration idea: start from yourself, and expand.

Thu Jan 25, 2024

To dequeue you need to visit all of the neighbours.

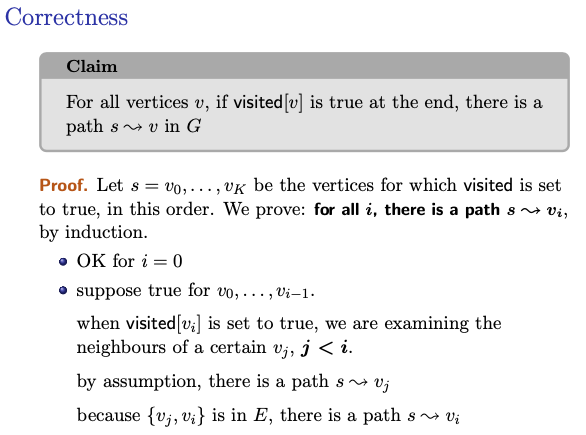

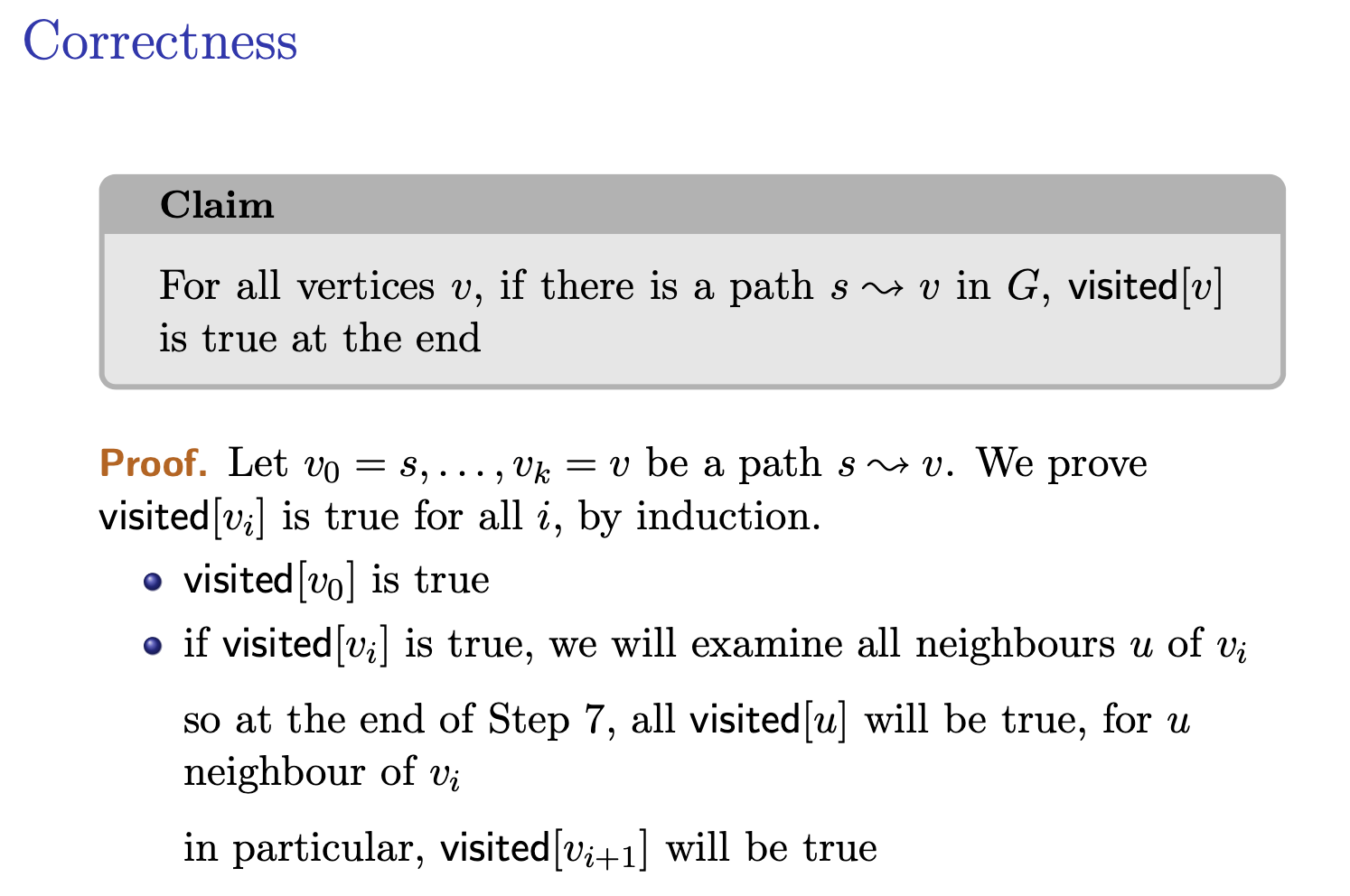

Use induction, assume the statement holds for vi, we want to show that vi+1 is visited. If vi is visited, it means that it enqueue. But to enqueue, we need to dequeue and to dequeue we visit all of the neighbours. And since vi+1 is a neighbour of vi, therefore vi+1 is visited.

Lemma

For all vertices , there is a path v in if and only if is true at the end.

Applications

- testing if there is a path v

- testing if is connected in .

To check if a graph is connected, we need to run DFS.

To test if the and : is the dominant term.

Exercise

For a connected graph, .

Thu Jan 25, 2024

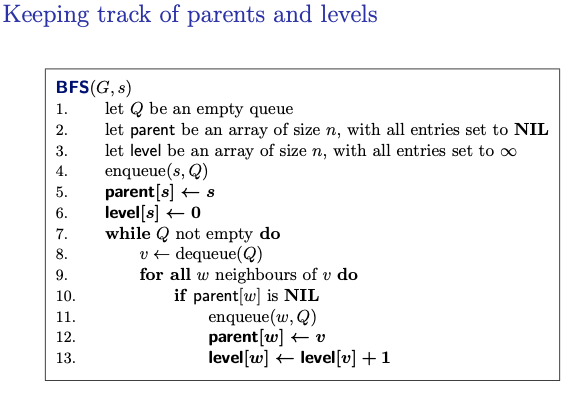

I visit some node , but why do we do that in BFS?

If I have a parent array, do we still need a visited array?

- No, we can just go check the parent array and check if it’s null or it visited the child.

In the textbook, they use predecessor to mean parent. It’s more general.

Based on this algorithm, we can form a tree!

is the parent.

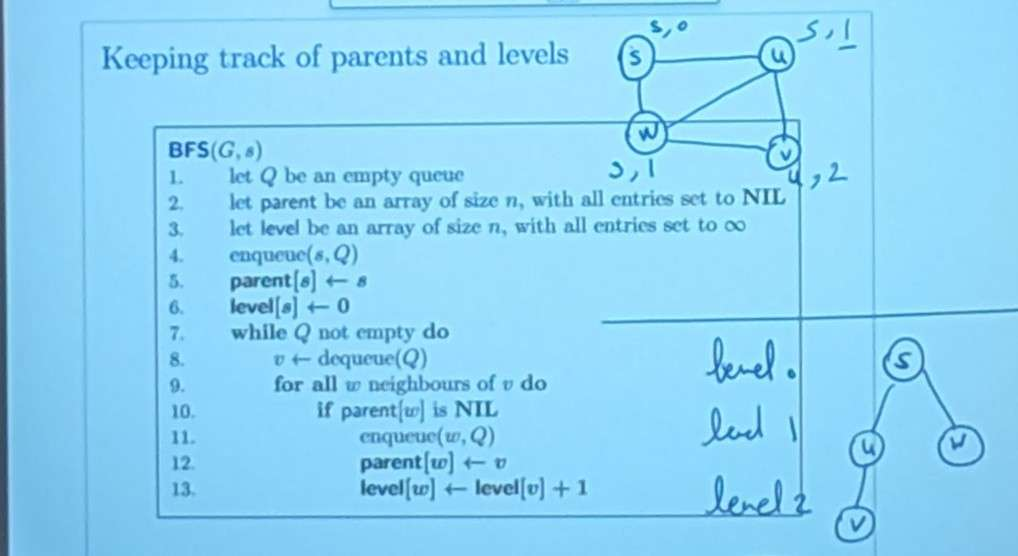

Example for the algorithm (i’ve recorded the whole process):

BFS Tree

BFS Tree

Definition: the BFS Tree is the subgraph made of

- all such that

- all edges , for as above (except )

Claim

The BFS tree is a tree.

Proof: by induction on the vertices for which is not NIL.

- when we set , only one vertex, no edge.

- suppose true before we set was in before, was not, so we add one vertex and one edge to , so remains a tree.

Remark: we make it a rooted tree by choosing as a root.

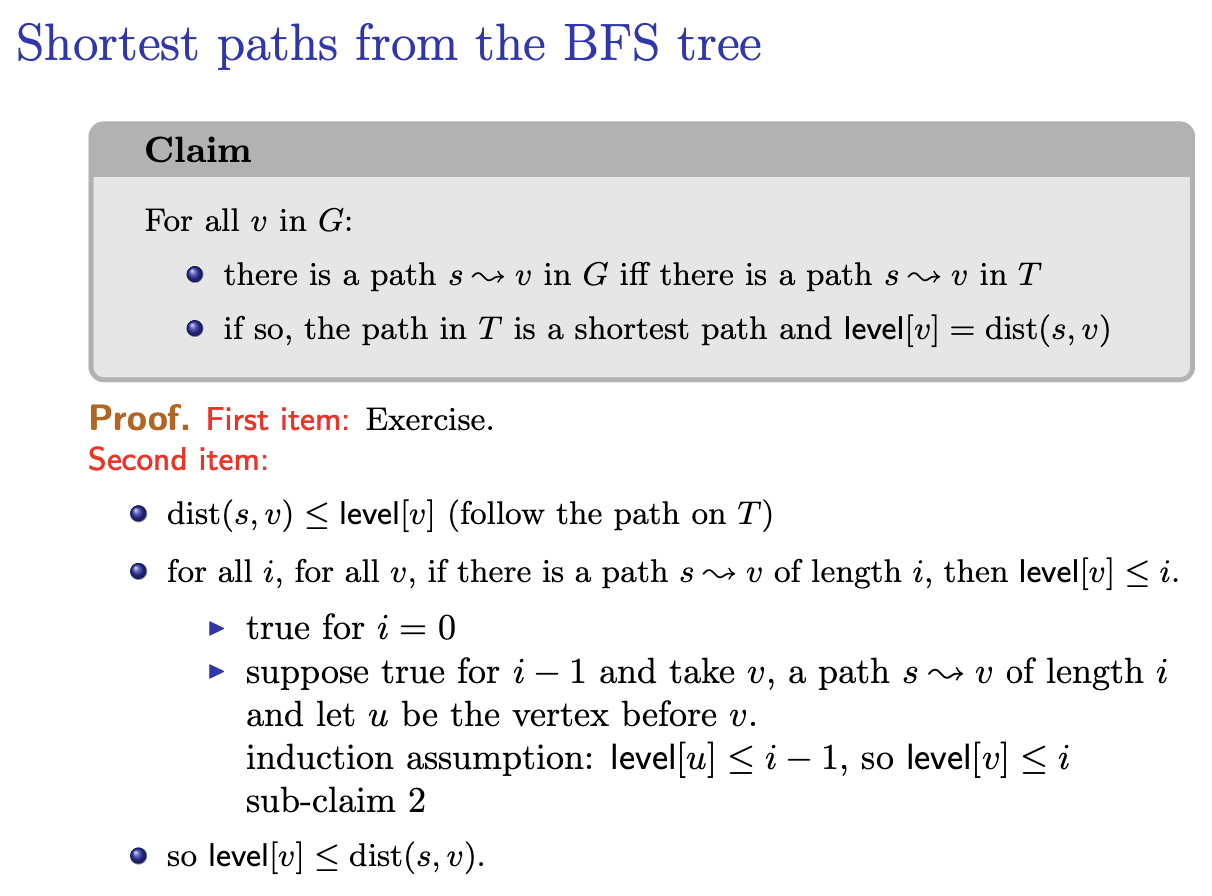

Shortest paths from the BFS tree

Sub-claim 1

The levels in the queue are non-decreasing

Proof: Exercise

Sub-claim 2

For all vertices if there is an edge {}, then .

Proof.

- if we dequeue before , (sub-claim 1)

- if we dequeue before , the parent of is either , or was dequeued before in any case, (sub-claim 1) but , so OK

gap-in-knowledge don’t really understand the proof of sub-claim 2. Does it mean either is the parent of or someone else is the parent of and got dequeue earlier, before dequeueing . Therefore, level of u + 1 is definitely bigger or equal to level of v, since it’s above . Doesn’t make sense, i got it wrong? if we dequeue u before, doesn’t it mean that u is lower level than v? Omg notice that there is an edge {u,v}. But still why is it level of u + 1 is greater or equal to level of v? Is level 1 higher than level 2? I think I’m misunderstanding the lower and higher. If 1 is higher level then I got it right…

What is ? The BFS Tree I assume.

What is ? The BFS Tree I assume.

I’m very confused: I have recordings.gap-in-knowledge

Bipartite Graph

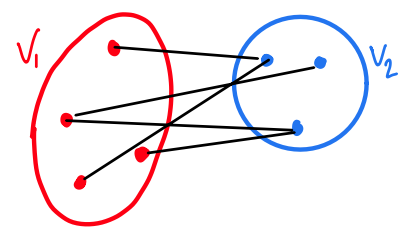

A graph , is bipartite if there is a partition such that all edges have one end in and one end in .

Using BFS to test bipartite-ness

Claim

Suppose connected, run BFS from any , and set

- vertices with odd level

- vertices with even level

Then is bipartite if and only if all edges have one end in and one end in (testable in )

Proof. obvious For , let be a bipartition. Because paths alternate between :

- ( vertices with odd level) is included in (say)

- ( vertices with even level) is included in So and

Computing the connected componentsto-understand

Idea: add an outer loop that runs BFS on successive vertices

Exercise

Fill in the details.

Complexity:

- each pass of BFS , if is the th connected component

- total

Slide explained

It’s just giving the idea of how to find the connected components of an undirected graph. You run a BFS, and then you’re done you’ve visited all the vertices in that component. So you just need to find an unvisited vertex (which means it will be in another component) and then run BFS on that vertex again. You keep doing this until you visit every vertex.

Chatgpt: The approach described is a common method for computing the connected components of an undirected graph using BFS (Breadth-First Search). Let’s break down the steps:

- Initialize: Start with an empty list to store the connected components.

- Outer Loop: Begin an outer loop that iterates over all vertices of the graph. For each vertex, if it has not been visited yet, it means it belongs to a new connected component.

- BFS: Perform a BFS traversal starting from the current unvisited vertex. This BFS traversal will visit all vertices reachable from the current vertex, which form one connected component of the graph.

- Store Component: After completing the BFS traversal, all vertices visited during this traversal belong to the same connected component. Add these vertices to the list representing the current connected component.

- Repeat: Continue the outer loop to find the next unvisited vertex in the graph. This vertex will belong to a new connected component. Repeat steps 3-4 for this vertex.

- Termination: Once all vertices have been visited and assigned to connected components, the process ends.

Let’s analyze the complexity:

- BFS Complexity: Each pass of BFS on a connected component takes time, where is the number of vertices and mimi is the number of edges in the th connected component.

- Total Complexity: Since we perform BFS on each connected component separately, the total complexity is the sum of the complexities of all BFS traversals, which is , where is the total number of vertices and is the total number of edges in the graph.

This approach efficiently computes the connected components of an undirected graph by systematically exploring each component using BFS. It’s a fundamental technique in graph theory and is often used in various applications such as network analysis, social network analysis, and image processing.

Lecture 6 - Depth-First Search

Going depth-first

The idea:

- travel as deep as possible, as long as you can

- when you can’t go further, backtrack

DFS implementations are based on stacks, either implicitly (recursion) or explicitly (as with queues for BFS).

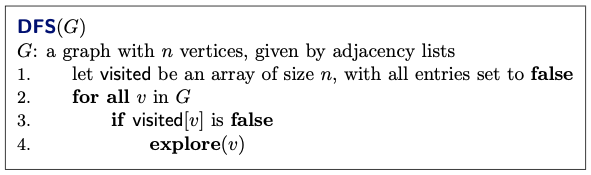

Recursive Algorithm:

Remark: can add parent array as in BFS. What does that mean?:

- Adding a parent array in DFS, similar to BFS, means keeping track of the parent of each vertex encountered during the traversal. In BFS, the parent array is used to reconstruct the shortest paths from the source vertex to all other vertices in the graph. However, in DFS, the parent array serves a slightly different purpose.

- In DFS, the parent array can be used to represent the DFS tree or forest. Each time a vertex is visited and explored, the parent of its neighbouring vertices becomes , since is the vertex from which was discovered. Therefore, by maintaining this information in the parent array, we can construct the DFS tree rooted at any vertex. Here’s how it works:

- Initialize a parent array of size , where nn is the number of vertices in the graph. Initially, all entries in the parent array are set to a special value (e.g., ) to indicate that the vertices have no parent.

- During the DFS traversal, when exploring a vertex , mark as visited (i.e., ) and iterate through all its neighbours .

- For each neighbour , if has not been visited yet, set its parent in the parent array to (i.e., ). By the end of the DFS traversal, the parent array will represent the DFS tree or forest, where each vertex (except the root(s)) has a parent that discovered it during the traversal. This information can be useful for various purposes, such as finding paths in the DFS tree, detecting cycles, or performing other graph-related algorithms.

Notice, it is recursively calling on explore().

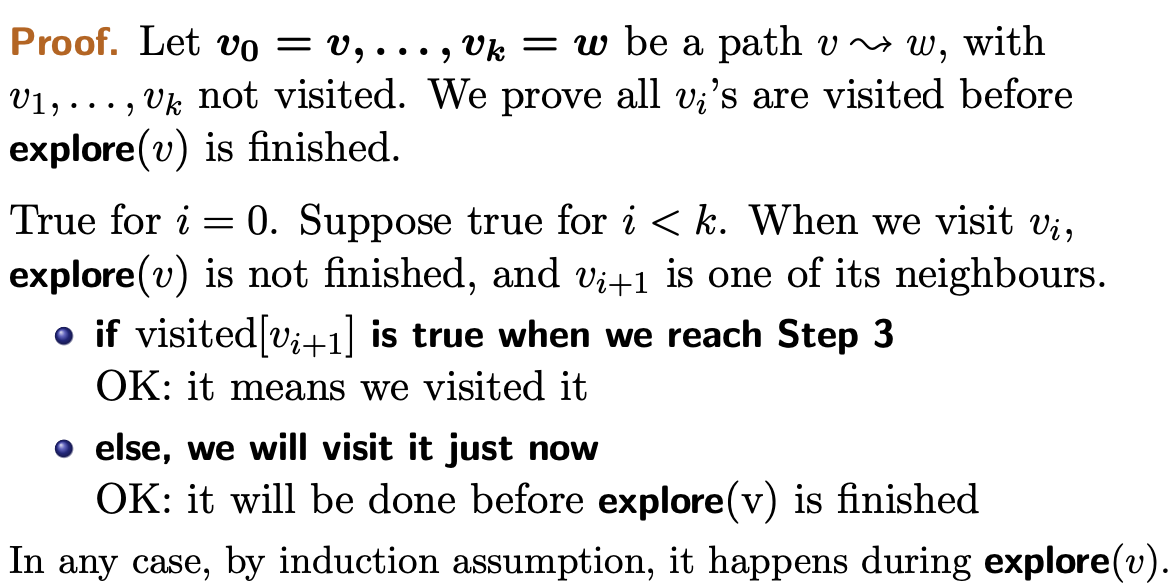

Claim ("white path lemma")

When we start exploring a vertex , any that can be connected to by an unvisited path will be visited during explore().

Proof: Let’s prove this lemma by contradiction. Suppose there exists a vertex that can be reached from by an unvisited path but is not visited during the exploration of .

- Initialization: We begin the DFS traversal with all vertices marked as unvisited.

- Exploration of : When the DFS algorithm starts exploring vertex , it marks as visited. Then, for each neighbour of , if has not been visited yet, DFS explores .

- Contradiction: However, by the nature of DFS, when exploring , all its neighbours are visited recursively. Therefore, if is reachable from , it must be a neighbour of or a neighbour of a neighbour of , and so on. Since DFS explores all neighbours of and their neighbours, should have been visited during the exploration of . But our assumption states otherwise, leading to a contradiction.

Hence, the assumption that there exists a vertex reachable from via an unvisited path but not visited during the exploration of is false. Therefore, any vertex that can be connected to by an unvisited path will indeed be visited during the exploration of . This completes the proof of the “white path lemma”.

Basic property:

Claim

If is visited during explore(), there is a path .

Proof: Same as BFS.

The proof is straightforward and relies on the nature of DFS exploration. During the DFS traversal, when exploring vertex , DFS recursively visits all vertices reachable from in the graph.

- Initialization: The DFS traversal begins with the starting vertex marked as visited.

- Recursive Exploration: For each neighbour of , if has not been visited yet, DFS explores . This process continues recursively until all reachable vertices from have been visited.

- Vertex : If vertex is visited during the exploration of , it means that is reachable from in the graph.

- Path from to : Since is visited during the exploration of , there must be a path from to in the graph. This path is formed by the sequence of edges traversed during the DFS traversal.

Therefore, if vertex is visited during the exploration of vertex in DFS, then there exists a path from to in the graph. This completes the proof of the claim.

Consequences:

- Previous properties: after we call explore at in DFS, we have visited exactly the connected components containing .

- Since it recursively explores all the vertices in the connected component

- Shortest paths: no

- unlike bfs, it does not get you the shortest path

- Runtime: still

Trees, forest, ancestors and descendants Previous observation:

- DFS() gives a partition of into vertex-disjoint rooted trees (DFS forest)

- What does this mean>

Definition. Suppose the DFS forest is and let be two vertices

- is an ancestor of if they are on the same subtree in the DFS forest and is on the path root

- equivalent: is a descendant of if

- belongs to the subtree rooted at in the DFS forest

- equivalent as saying is the ancestor of

In other words, in a DFS forest:

- An ancestor of a vertex is any vertex that lies on the path from the root of the subtree containing the vertex to the vertex itself.

- A descendant of a vertex is any vertex within the subtree rooted at the vertex.

Key Property

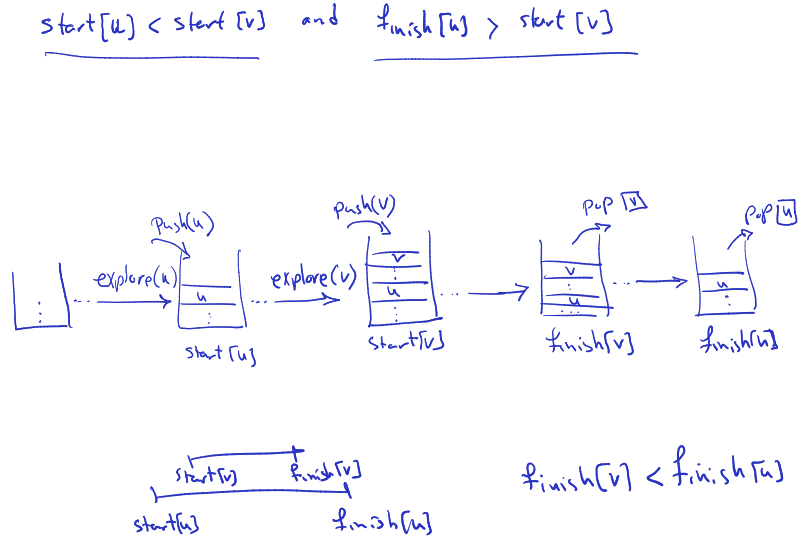

Claim

All edges in connect a vertex to one of its descendants or ancestors.

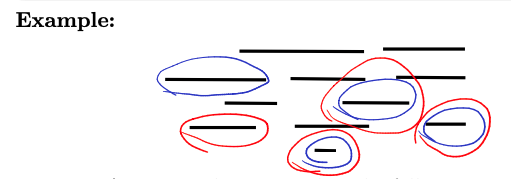

So, there is no edge from subtree to subtree within :

Proof. Let {} be an edge, and suppose we visit first. Then when we visit , is an unvisited path between and , so will become a descendant of (white path lemma).

White Path Lemma: According to the white path lemma, any vertex that can be connected to by an unvisited path will be visited during the exploration of .

Back edges

A back edge is an edge in connecting an ancestor to a descendant, which is not a tree edge.

Observation: All edges are either tree edge or back edges (key property).

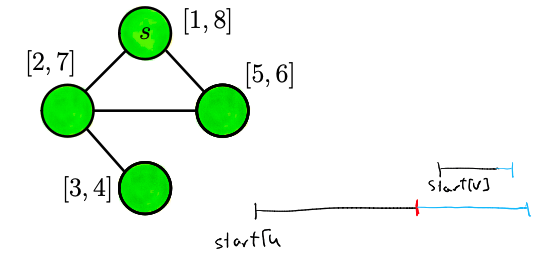

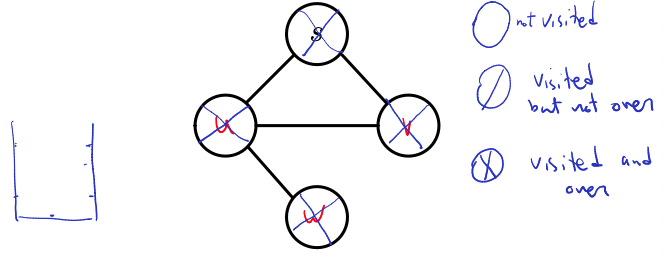

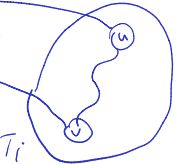

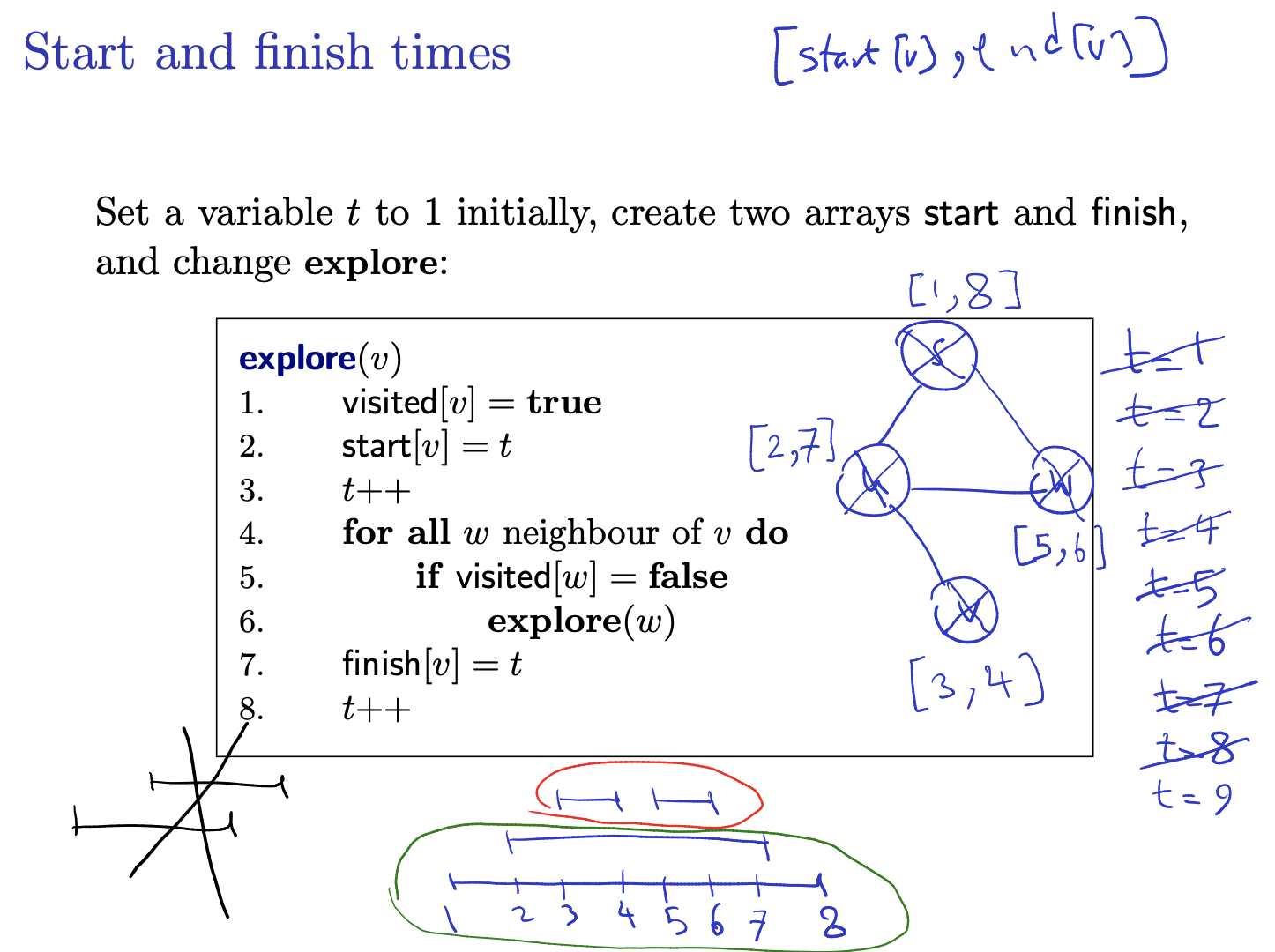

Start and finish times

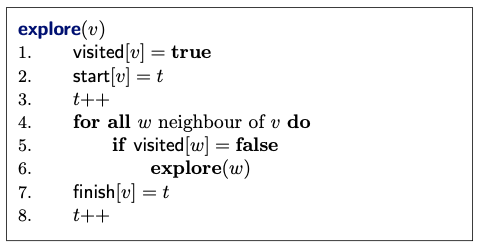

Set a variable to 1 initially, create two arrays start and finish, and change explore:

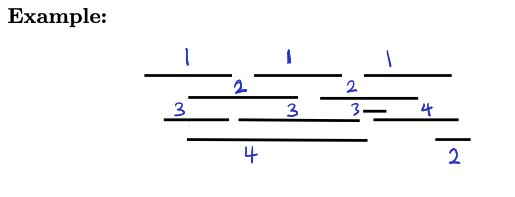

Example:

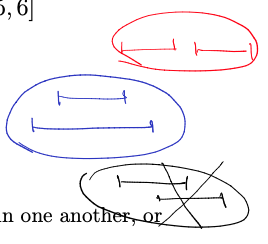

Observation:

- these intervals are either contained in one another, or disjoint

- if , then either or .

- Basically saying that they can’t cross each other, either u finishes before v starts, or u finishes then v finishes.

Proof: if , we push on the stack while is still there, so we pop before we pop .

Cut vertices

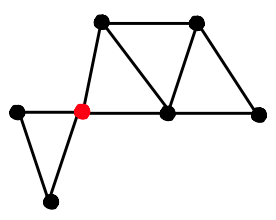

Cut vertices

For connected, a vertex in is a cut vertex if removing (and all edges that contain it) makes disconnected. Also called articulation points

Finding the cut vertices ( connected)

Setup: we start from a rooted DFS tree , knowing parent and level.

Warm-up

The root is a cut vertex if and only if it has more than one child.

Proof.

-

if has one child, removing leaves connected. So is not a cut vertex.

-

suppose has subtrees .

Key property: no edge connecting to for . So removing creates connected components.

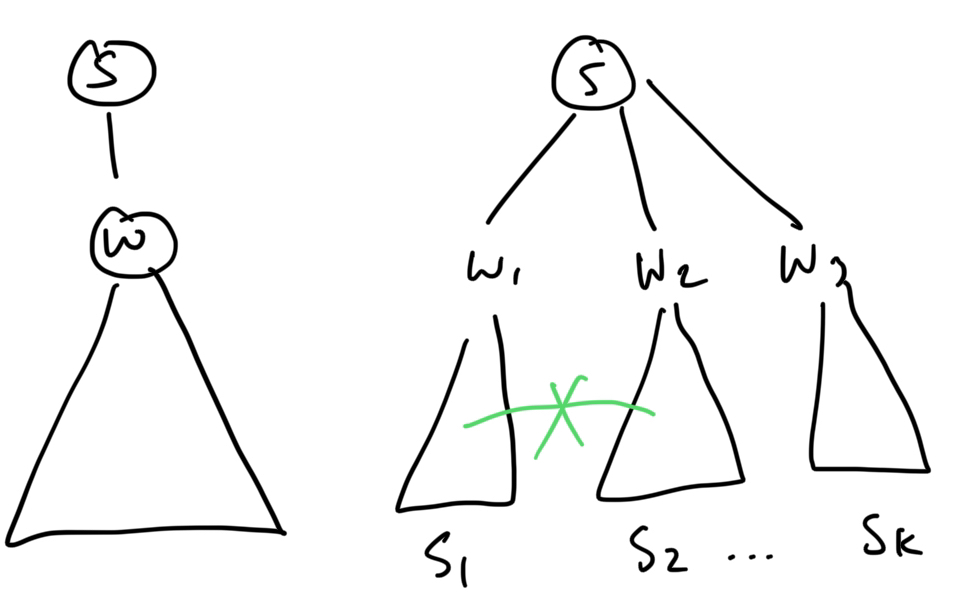

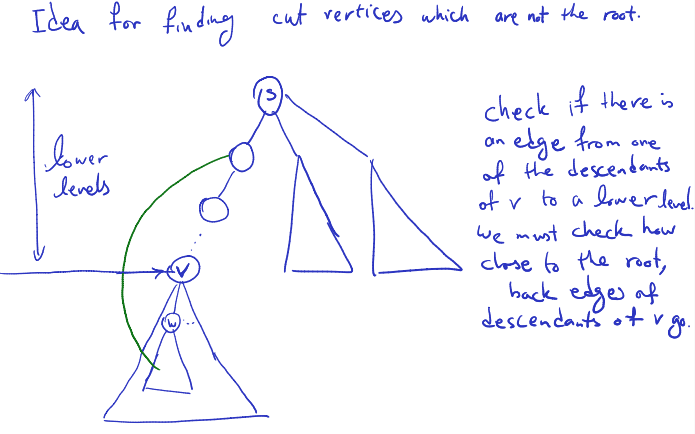

Now, we want to investigate the problem of finding cut vertices which ARE NOT the root.

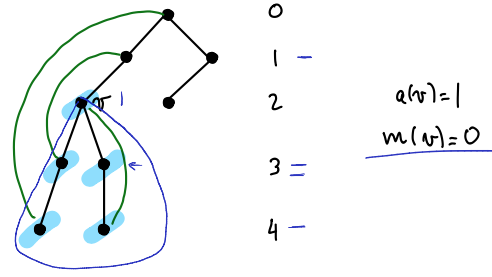

Finding the cut vertices (

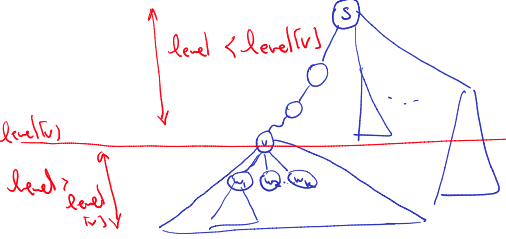

Gconnected)Definition: for a vertex , let

{ {} edge}

{ descendant of }

- a(v) is the lowest level (highest in the tree) of all nodes directly connected to v.

- m(v) is the minimum a(w) value for all w; w is a descendant of v.

- ooo it considers all the descendants of , so basically any descendants of

- I interpret a(v) as the “highest link” that v has, while m(v) is the highest link that v’s subtree has.

In simple words:

- is the smallest level of all of ‘s neighbours

- is the smallest level of any neighbour of a descent of .

- Try calculating and and see if your results match the answers shown to the right of the diagram above!

Using the values

Claim

For any (except the root), is a cut vertex if and only if it has a child with .

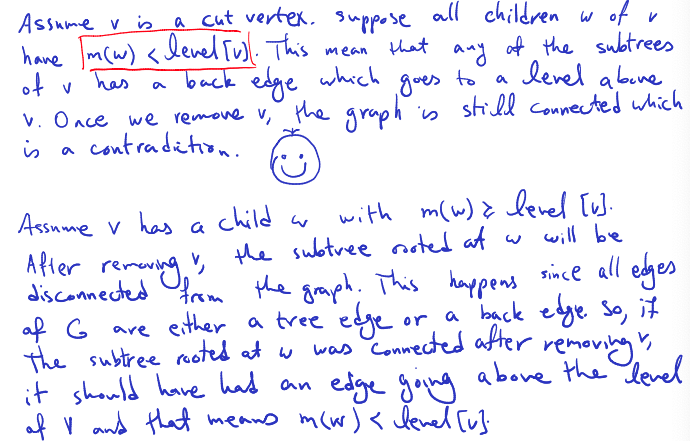

Proof.

- Take a chid of , let be the subtree at .

- If , then there is an edge from to a vertex above . After removing , remains connected to the root.

- If , then all edges originating from end in . Proof: any edge originating from a vertex in ends at a level at least , and connects to one of its ancestors or descendants (key property).

Computing the values Observation:

- if has children , then {}

Conclusion:

- computing is

- knowing all , we get in

- so all values can be computed in (remember when connected)

- testing the cut-vertex condition at is

- testing all is

and are used for the children on a vertex which we are about to check if it is a cut vertex or not. The value gives the lowest level of all neighbours of , this counts for checking to see if a tree rooted at a child of a vertex will become disconnected or not.

Exercise: write the pseudo-code

1. Perform a DFS traversal on the graph G starting from any vertex (e.g., the root vertex).

2. During the DFS traversal, maintain the following information for each vertex v:

- level[v]: the level of vertex v in the DFS tree

- a[v]: the minimum level reachable from vertex v along edges in the DFS tree

- m[v]: the minimum of a[v] and the values m[w] for all children w of v

3. Update a[v] and m[v] as follows:

- When exploring a vertex v:

- Set level[v] = t (where t is the current time)

- Increment t

- Set a[v] = level[v]

- For each child w of v:

- Recursively explore w

- Update m[v] = min(a[v], m[v], m[w])

4. After the DFS traversal completes, iterate through all vertices v:

- If v is not the root vertex and m[v] >= level[v], v is a cut vertex

5. The overall time complexity of this approach is O(m), where m is the number of edges in the graph.

Some questions:

- Running BFS also works exactly the same for finding cut vertices as running DFS right?

- Consider the 4-cycle, in the BFS tree, the claim would tell you that there is a cut vertex, but there is no cut vertex.

- I think this is because you can have edges to other subtrees in a BFS tree but this is impossible in a DFS tree.

Lecture 7 - Directed Graphs

Tue Feb 6 2024

Directed Graph

as in the undirected case, with the difference that edges are (directed) pairs

- edges are also called arcs

- usually, we allow loops, with

- is the source node, is the target

- a path is a sequence of vertices, with in for all . is OK.

- a cycle is a path

- a directed acyclic graph (DAG) is a directed graph with no cycle

Definition:

- the in-degree of is the number of edges of the from

- the out-degree of is the number of edges of the form

Data structures:

- adjacency lists

- adjacency matrix (not symmetric anymore)

BFS and DFS for directed graphs The algorithms work without any change. We will focus on DFS.

Still true:

- we obtain a partition of into vertex-disjoint trees

- when we start exploring a vertex , any with an unvisited path becomes a descendant of (white path lemma)

- properties of start and finish times

But there can exist edges connecting the trees

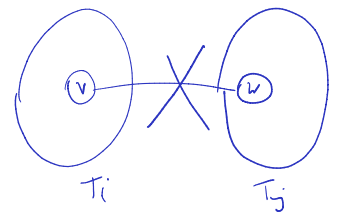

T_i.

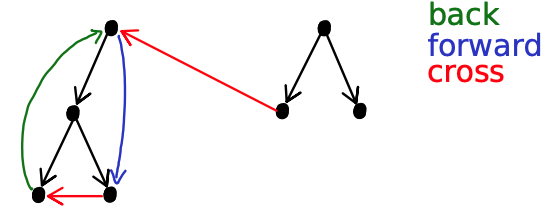

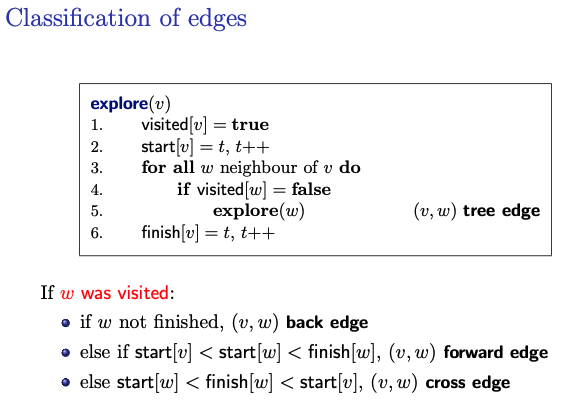

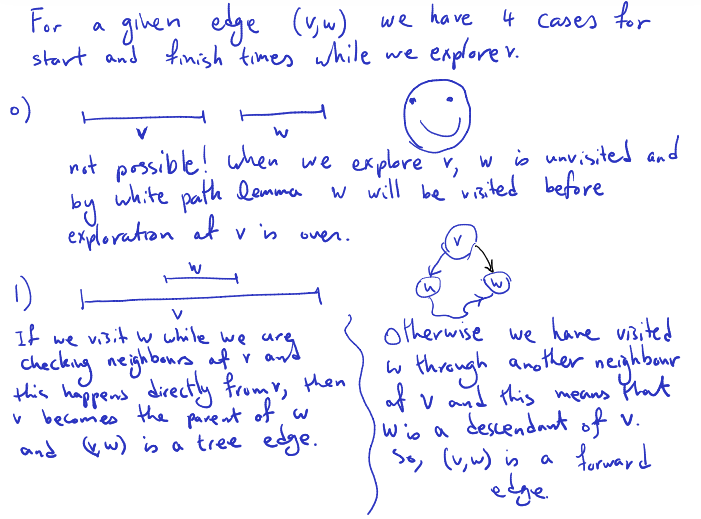

Classification of edges Suppose we have a DFS forest. Edges of are one of the following:

- tree edges

- A tree edge in the context of a DFS (Depth-First Search) forest of a directed graph is an edge that is part of the DFS tree itself. In other words, during the DFS traversal of the graph, when exploring a vertex , if a directed edge leads to a vertex that has not been visited yet, this edge becomes a tree edge and contributes to the construction of the DFS tree.

- back edges: from descendant to ancestor

- forward edges: from ancestor to descendant (but not tree edge)

- cross edges: all others

In this picture, if we were to start at node 1 (the node on the right) then the cross edge would become a tree edge correct?

So the classification of edges is not absolute but depends on where you start the DFS? yes

explore(v)

visited[v] = true

start[v] = t, t++

for all w neighbour of v do

if visited[w] = false

explore(w) (v,w) tree edge

finish[v] = t, t++If was visited:

- if not finished, back edge

- Case 1: is visited and not finished yet:

- If vertex has already been visited but not yet finished (i.e., the DFS traversal is still exploring its descendants), and is an ancestor of , then the edge is considered a back edge.

- This condition indicates that is a descendant of in the DFS tree, and completes a cycle by connecting back to one of its ancestors .

- Case 1: is visited and not finished yet:

- else if forward edge

- else cross edge

- Case 1: : In this scenario, vertex was visited and finished before vertex was started. However, is not an ancestor of because . Therefore, the edge forms a cross edge.

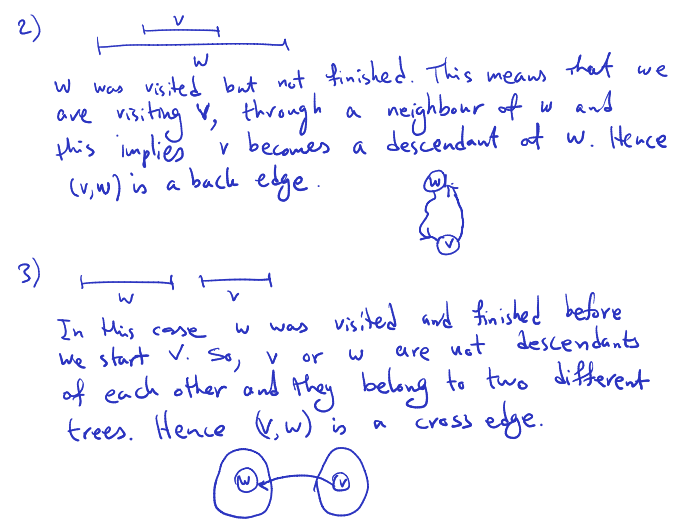

Testing Acyclicity

Claim

has a cycle if and only if there is a back edge in the DFS forest

Proof: : Since there is a back cycle, we have an edge from a descendent to an ancestor. So we have a cycle . Assume has a cycle , . Without loss of generality, we can assume we visit first (in the cycle).

At the time we start , the path is unvisited. By the white path lemma, we will visit before we finish . Hence becomes a descendent of and () is a back edge.

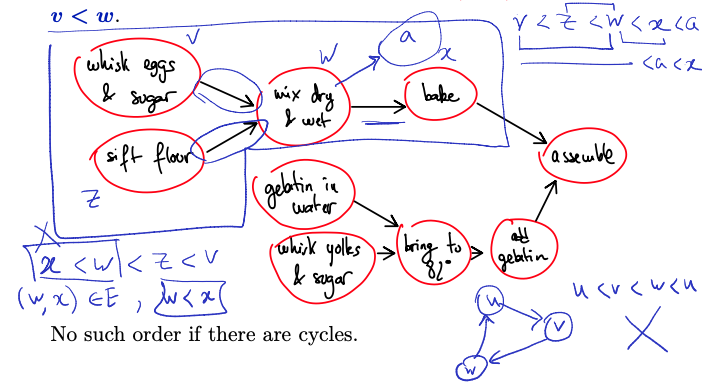

Topological Ordering

Topological ordering

Definition: Suppose is a DAG. A topological order is an ordering < of such that for any edge , we have .

No such order if there are cycles.

Need to write a topological ordering in a line. If two nodes are not connected, we have a choice to which one to write first.

There is no uniqueness. It gives you one of the topological ordering. Prof gave the analogy of prerequisites of the courses. You are in trouble if you have a cycle. No topological ordering???

In-degree node 0, good idea to put it first in your topological ordering. Same thing with out-degree node of 0 to be the last one.

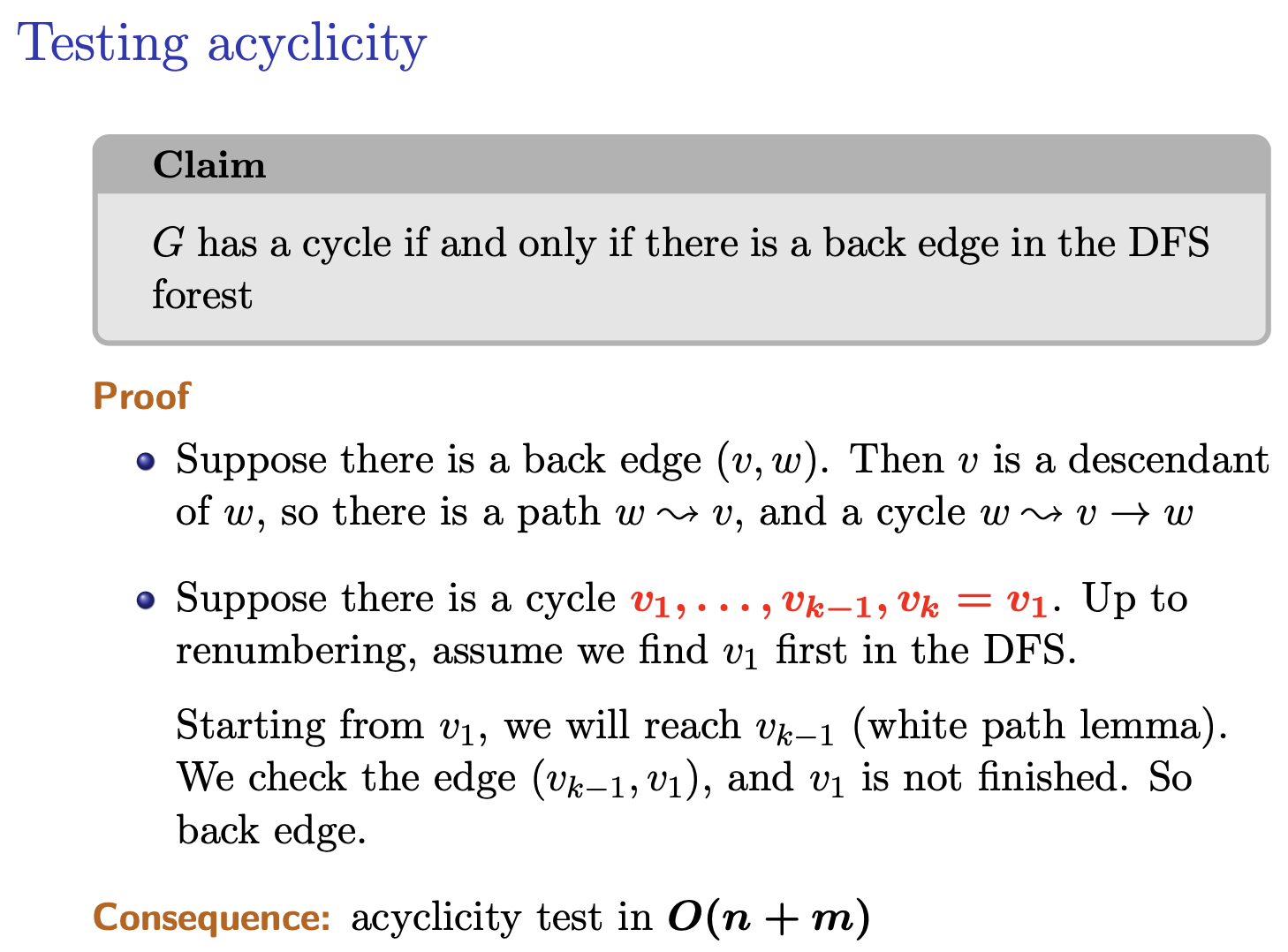

Proposition:

Gis a DAG if and only if there is a topological ordering onv.

is a DAG There is topological ordering on . (It is an if and only if theorem, but prof just showed one way) (Not a proof (sketch)): Claim: must have at last one vertex with in degree 0.

Assume it is not true. Then all vertices at are at in-degree at least one. Take an in-degree 0 node as the first vertex in the order remove it from , the remaining part in a DAG and repeat. At some point, we need to use one of the nodes used to have an edge going in the node at the start.

So we’ve found a contradiction. If we have a DAG, there must have a topological ordering.

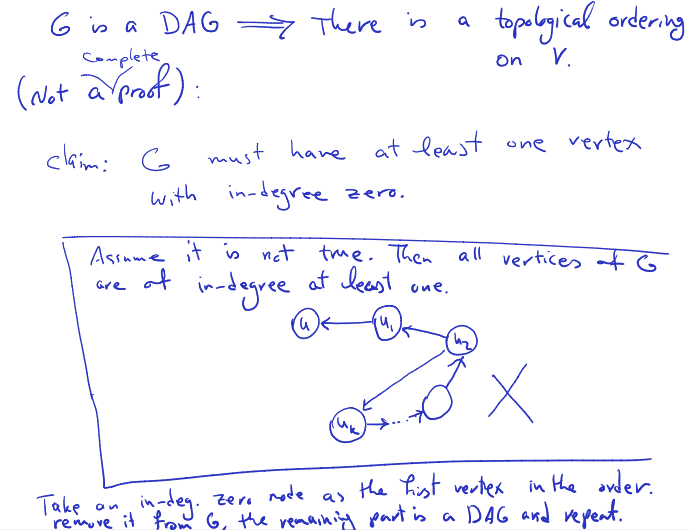

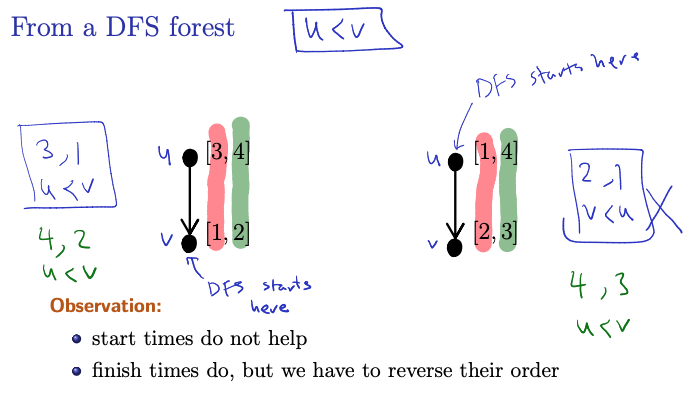

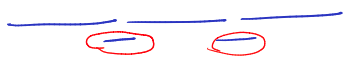

From a DFS forest

Observation:

- start times do not help

- finish times do, but we have to reverse their order!

Left: is our topological order. we have 3,1 and Right: 2,1 Start time is not something we can rely on.

What about finish time? Left: 4,2 and Right: 4, 3 and

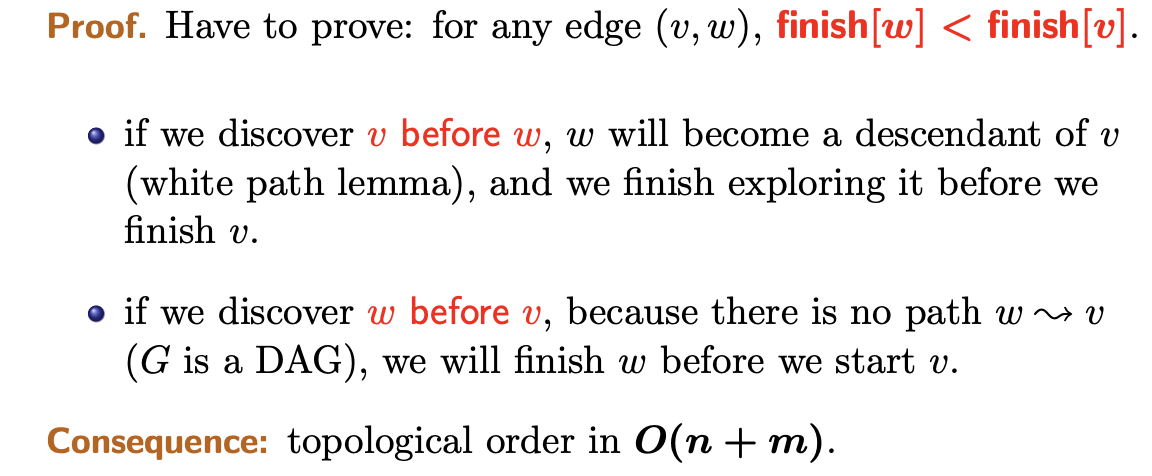

Claim

Suppose that is ordered using the reverse of the finishing order: .

This is a topological order.

SO: Definition of Topological Order: In a topological order, for every directed edge , vertex comes before vertex .

- Using Finish Times:

- In a DFS traversal, finish times indicate when vertices are finished being explored and all their descendants have been visited.

- We consider the reverse of the finishing order, so that vertices with later finish times come before vertices with earlier finish times. This makes sense, since if there is a topological order, there is gonna be a start, and we explore all the descendants starting from that node. It will finish last. Recursively go up.

Proof: Look at notes

- In a directed acyclic graph (DAG), there cannot exist a path from vertex to vertex if is discovered before during a DFS traversal. This is because if there were such a path, it would create a cycle in the graph, contradicting the acyclic property of the graph.

Topological order in .

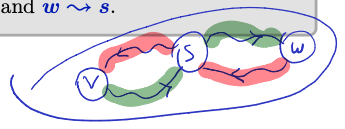

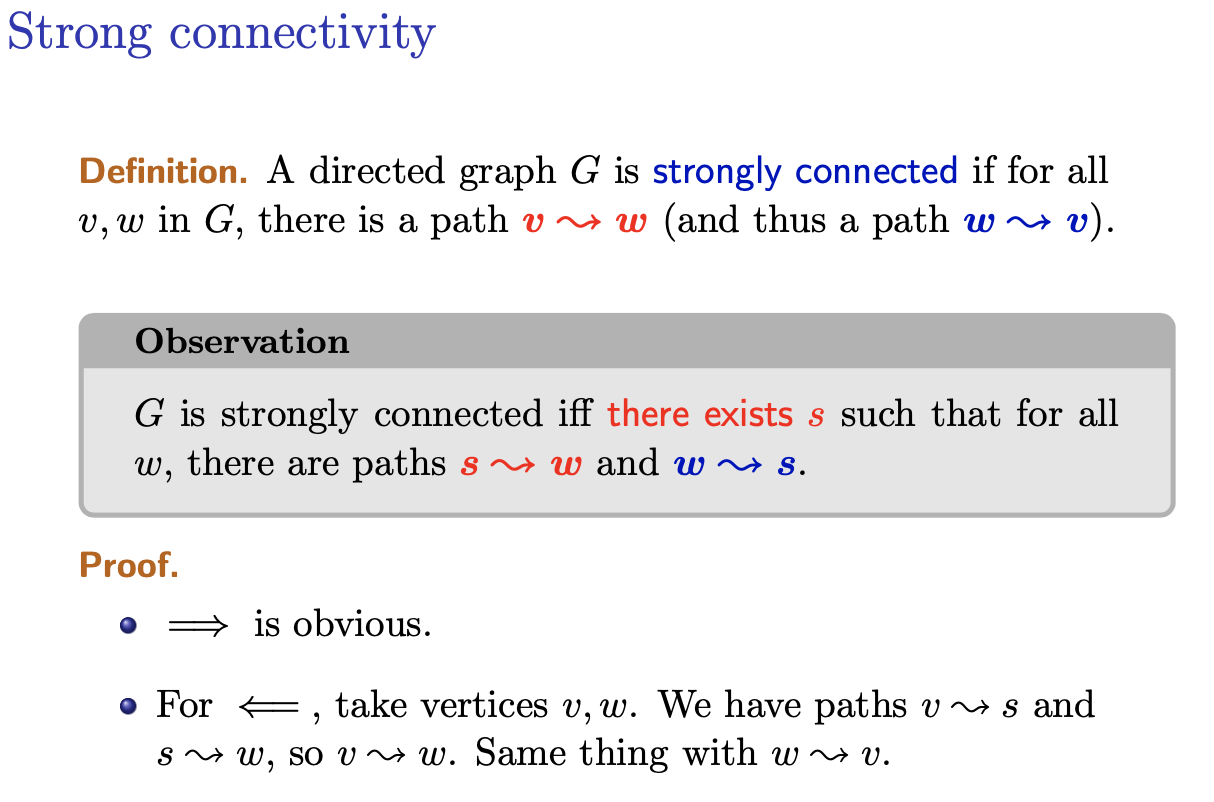

Strong Connectivity

A directed graph is strongly connected if for all in , there is a path (and thus a path ).

Observation

is strongly connected there exists such that for all , there are paths and .

Proof:

- is obvious

- For , that vertices . We have paths and , so . Same thing with .

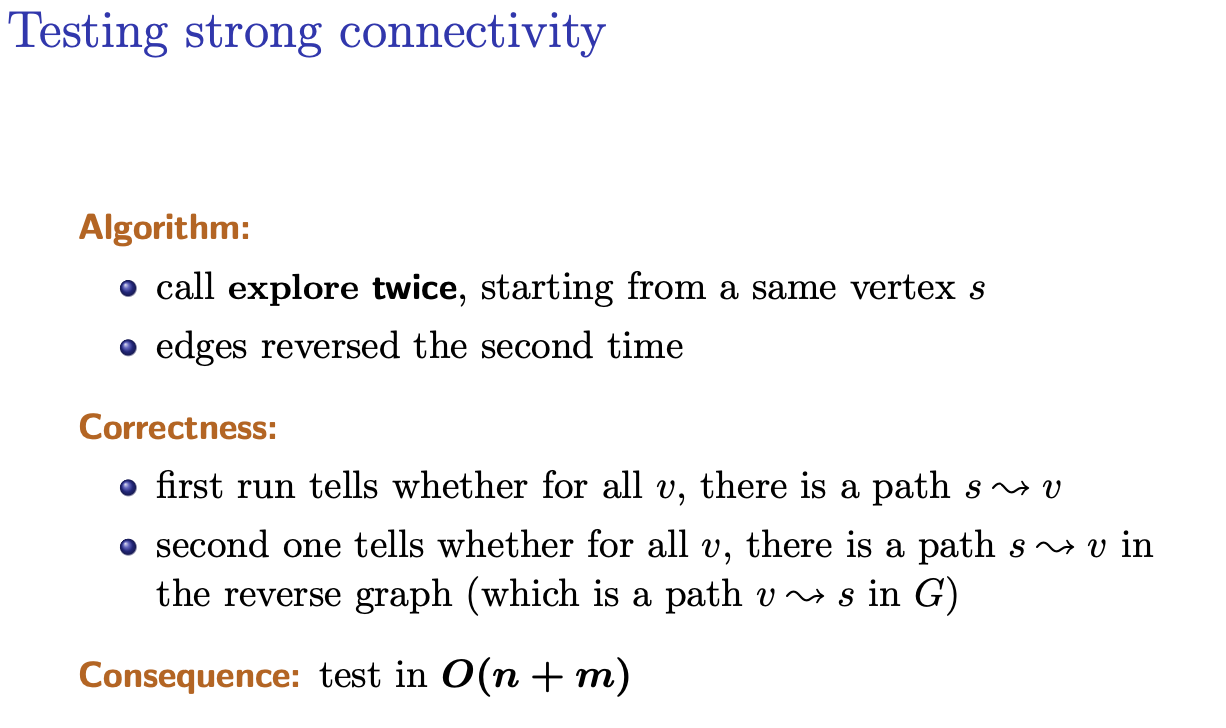

Algorithm:

- Perform a DFS exploration starting from vertex on the original graph .

- Perform another DFS exploration starting from vertex , but this time on the reverse graph , where all edges are reversed.

- If both DFS explorations reach every vertex in the respective graphs, then the graph is strongly connected.

Correctness:

- The first DFS exploration ensures that there is a path from to every other vertex in the original graph .

- The second DFS exploration, conducted on the reverse graph , ensures that there is a path from every vertex back to in the original graph , because reversing the edges effectively explores paths from every vertex back to in .

- Therefore, if both DFS explorations are successful, it implies that there exists a path from to every vertex and from every vertex back to , establishing strong connectivity.

Consequences:

- The algorithm tests for strong connectivity in time complexity, where is the number of vertices and is the number of edges in the graph.

- This approach provides an efficient way to determine whether a directed graph is strongly connected, which is essential for various applications and algorithms that rely on strong connectivity information.

If you give a direct graph and we take the reverse, we get a reverse graph where each edge is reversed. First run without reversing. Second run reverse and run DFS on the same node but on the reverse graph.

In G(T) is reversed, if we run DFS on this graph, there is a path

Have we considered the runtime of reversing the graph?

Might be asked on the midterm?? Do it in linear time. Prof only showed that it is in , but didn’t show the runtime to take to reverse a graph. Look

Approach to Reverse a Graph Efficiently:

- Original Graph Representation:

- Let’s assume the graph is represented using an adjacency list for each vertex, where each list contains the vertices adjacent to the corresponding vertex.

- Reverse Graph Representation:

- To reverse the graph, we need to reverse the direction of each edge. This essentially means swapping the source and target vertices for each edge.

- We can achieve this by creating a new reversed graph and populating its adjacency lists accordingly.

- Efficient Reversal:

- We can reverse the graph in linear time by iterating through each vertex and its adjacency list in the original graph.

- For each edge (u,v)(u,v) in the original graph, we add an edge (v,u)(v,u) to the corresponding adjacency list in the reversed graph.

- This process takes O(n+m)O(n+m) time, where nn is the number of vertices and mm is the number of edges in the original graph Runtime Analysis:

- The time complexity of reversing the graph is O(n+m)O(n+m), where nn is the number of vertices and mm is the number of edges in the original graph.

- This linear time complexity ensures that the graph reversal process is efficient and suitable for practical applications, including the strong connectivity algorithm discussed earlier.

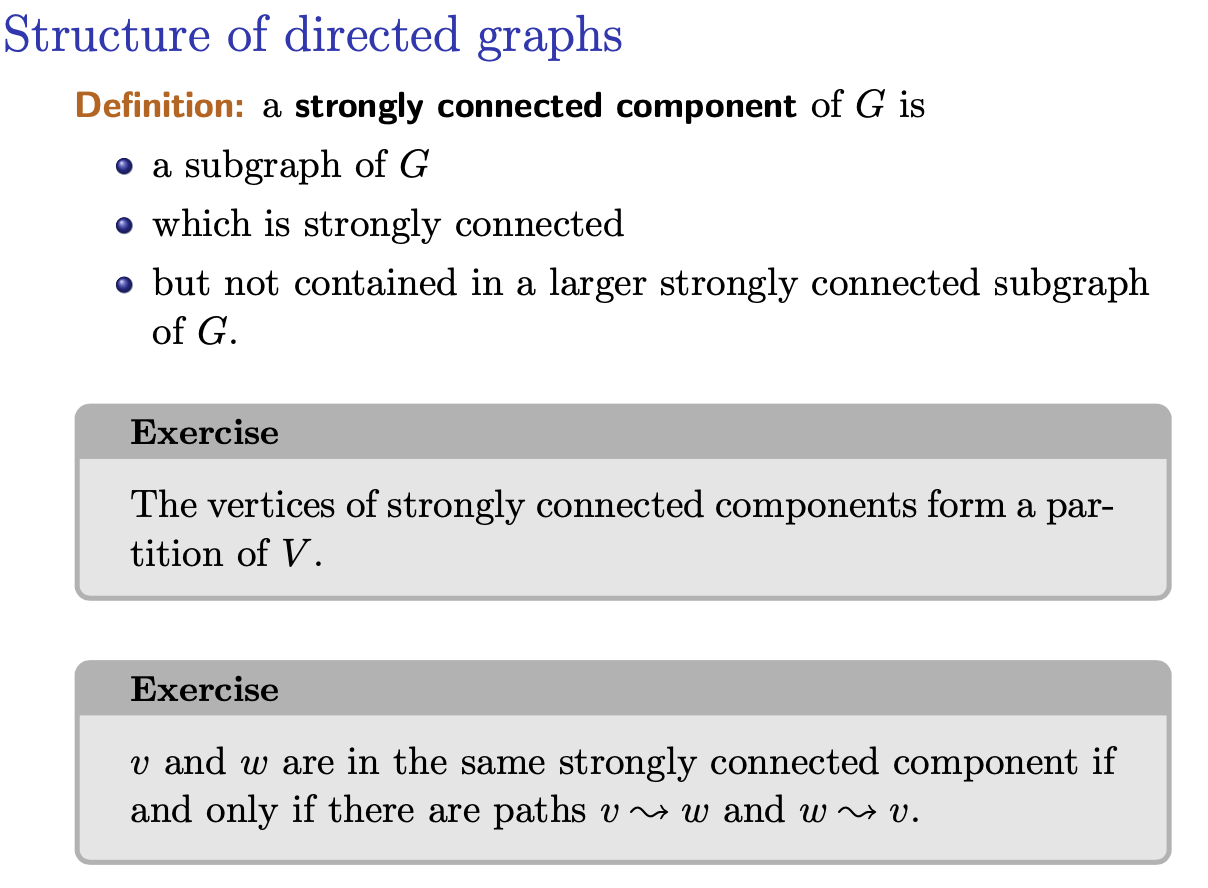

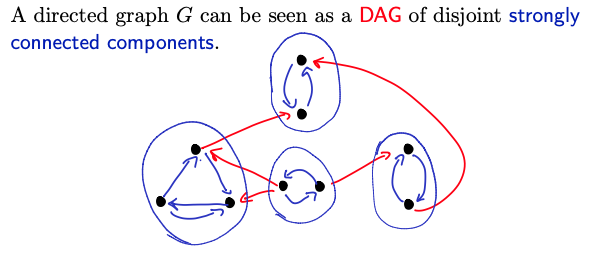

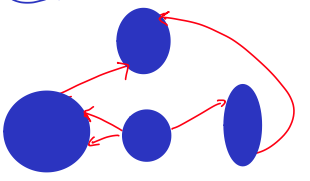

Structure of directed graphs

If given a directed graph we can form a strongly connected components, we can form a DAG.

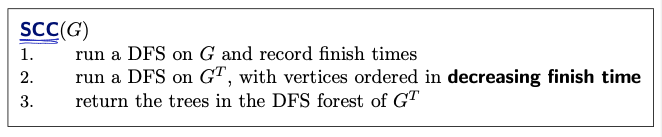

Korasaju's Algorithm

Definition: for a Directed Graph , the reverse (or transpose) graph is the graph with same vertices, and reversed edges.

Complexity: (don’t forget the time to reverse )

Exercise: check that the strongly connected components of and are the same.

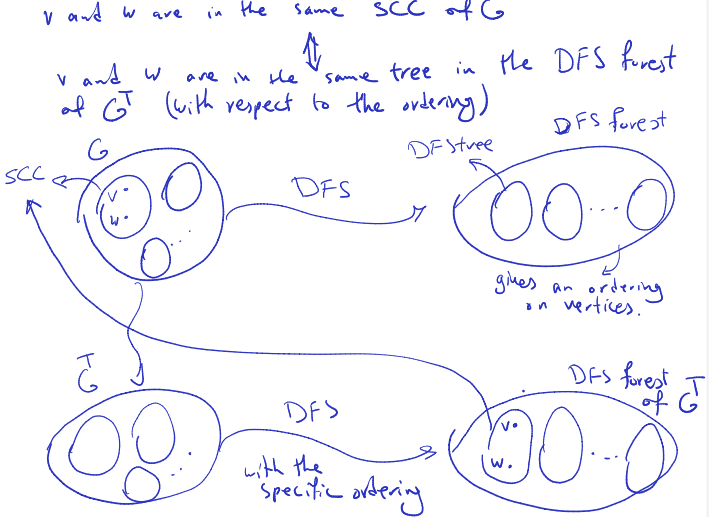

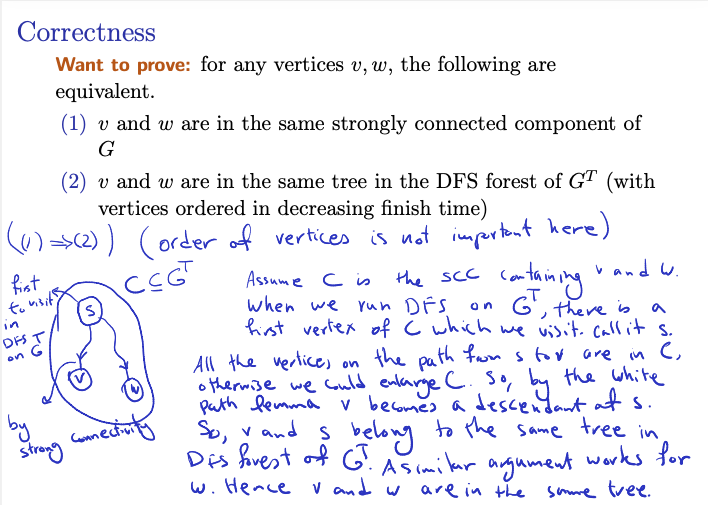

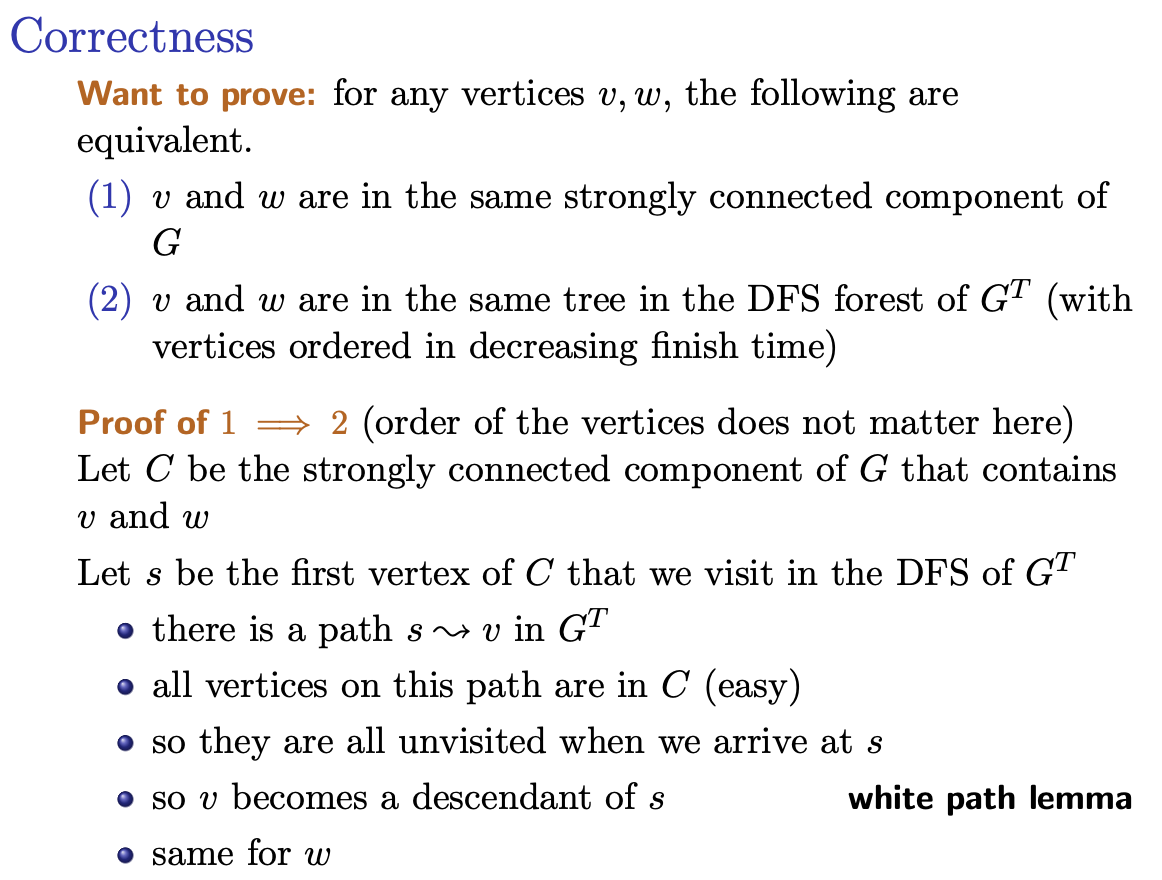

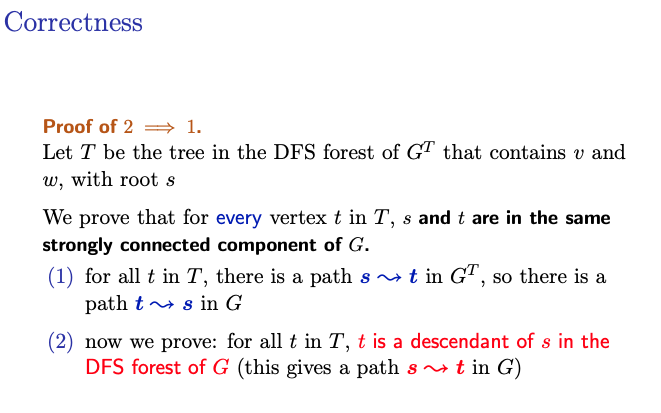

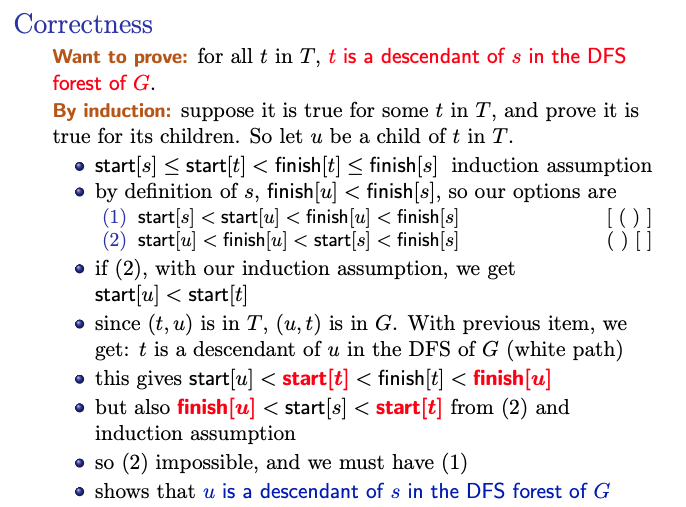

Proof: and are in the same SCC of and are in the same tree in the DFS forest of (with respect to the entering).

Run DFS in () from the node that you finished in by doing the first DFS on

Thu Feb 8 2024

. Show that for every vertex in (including and ) and are in the same SCC of . Since there is a path from to any , which is in , there exists a path from in . Now we show that , is a descendant of in the DFS forest at , which gives a path from to in .

Inducting argument (write the base case). assume it is true for some in and show it is true for children of . Suppose is a child of .

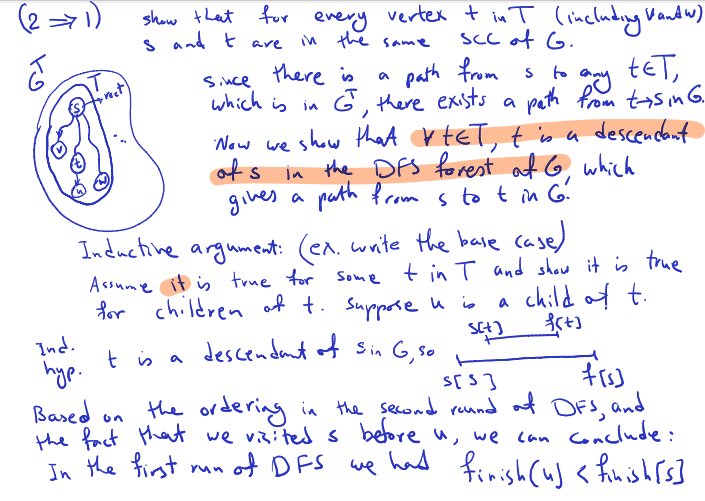

Induction hypothesis: is a descendant of in , so (insert image prof drew)

Based on the ordering in the second round of DFS, and the fact that we visited before , we can calculate: …todo

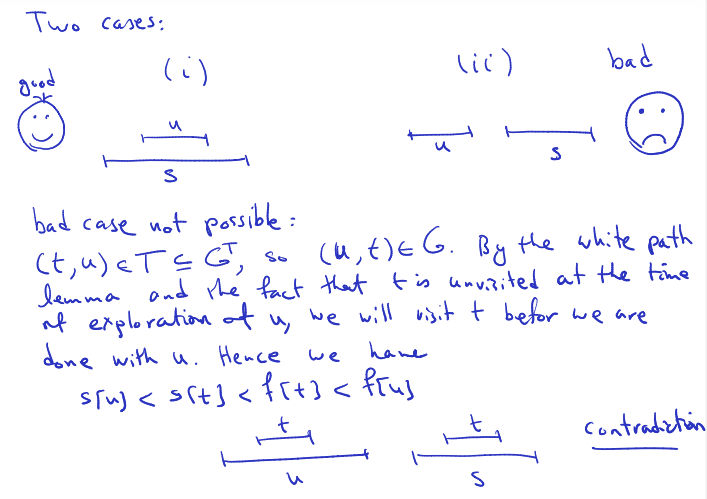

Two cases: (insert picture)

Bad case not possible: todo

Basically this slide:

True/False For a given DAG, , we have a linear time algorithm for deciding whether contains a hamiltonian path.

- can run DFS on and find a topological ordering.

- Finding a hamiltonian path is basically having a unique topological ordering

Module 4

Lecture 8 - Greedy Algorithms

Goals: This module: the greedy paradigm through examples

- interval scheduling

- interval colouring

- minimizing total completion time

- Dijsktra’s algorithm

- minimum spanning tress

Greedy Algorithms

Context: we are trying to solve a combinatorial optimization problem:

have a large, but finite, domain

want to find an element in that minimizes/maximizes a cost function

Greedy strategy:

- build step-by-step

- don’t think ahead, just try to improve as much as you can at every step

- simple algorithms

- but usually, no guarantee to get the optimal

- it is often hard to prove correctness, and easy to prove incorrectness.

Was not paying attention since rankings came out and ended talking to Chester for the whole time…

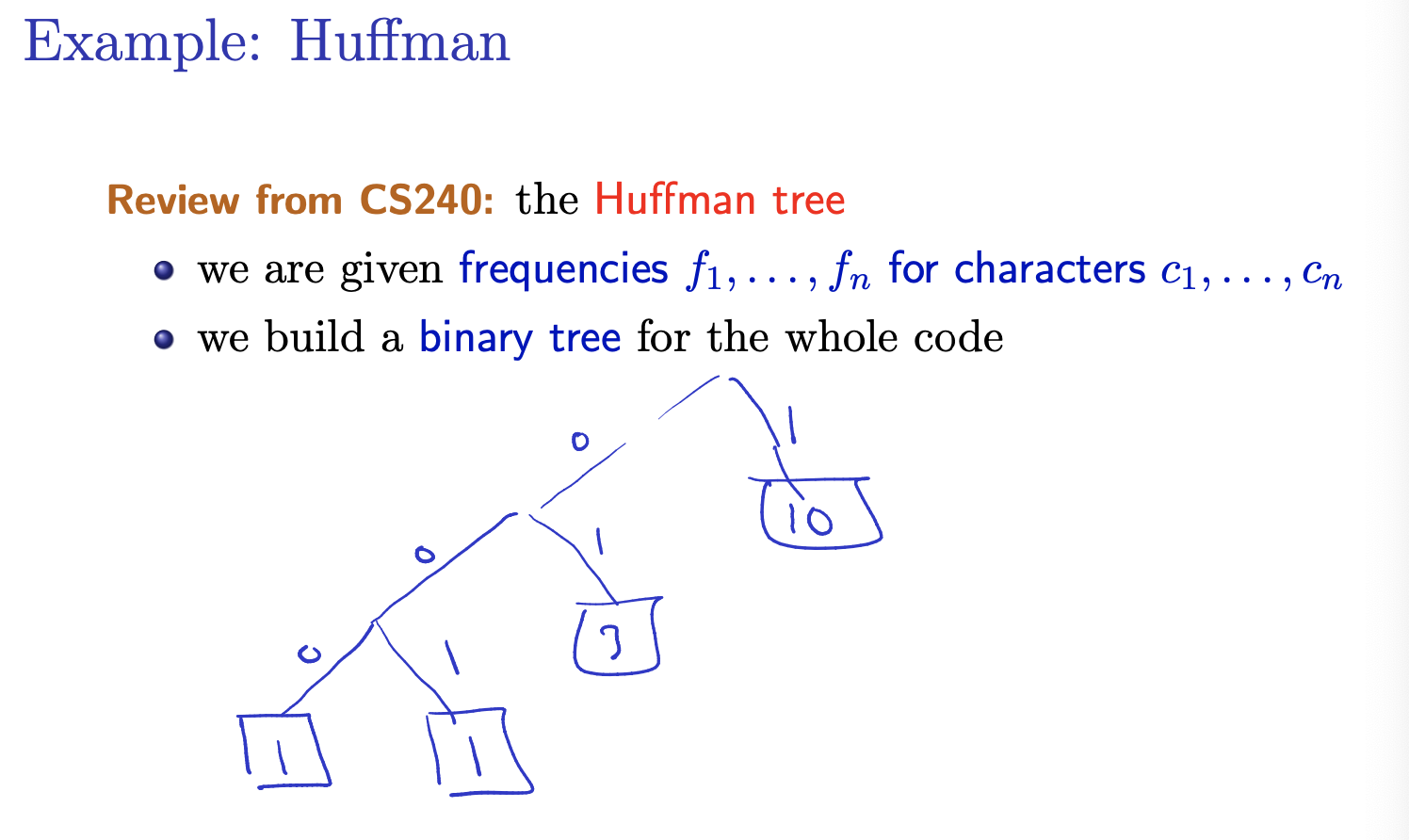

Greedy strategy: we build the tree bottom up.

- create many single-letter trees

- define the frequency of a tree as the sum of the frequencies of the letters in it

- build the final tree by putting together smaller trees: join the two trees with the least frequencies

Claim: this minimizes × {length of encoding of }

We did not look a at the Huffman Tree in CS240.

Interval Scheduling

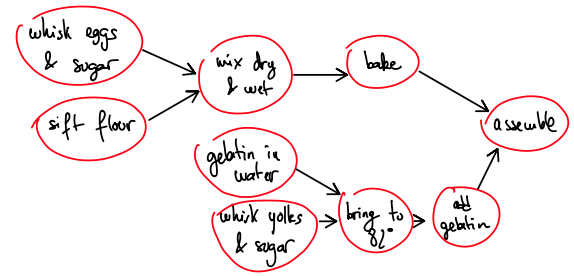

Interval Scheduling Problem

Input: intervals . Output: a maximal subset of disjoint intervals

What does it mean?

A maximal subset of disjoint intervals refers to the largest possible collection of intervals from the given set where none of the intervals overlap with each other. In other words, you’re trying to find the largest number of intervals that can be scheduled without any conflicts in terms of time.

Example: A car rental company has the following requests for a given day

- : 2pm to 8pm

- : 3pm to 4pm

- : 5pm to 6pm Answer is

Greedy Strategies

There are different greedy strategies for solving the Interval Scheduling Problem:

- Consider earliest starting time (Choose the interval with ).

- Earliest Starting Time: This strategy selects intervals based on their starting times. It chooses the interval that starts earliest among the remaining intervals at each step. This often leads to selecting intervals that allow for the maximum number of non-overlapping intervals to be scheduled.

- Consider shortest interval (choose the interval with ).

- Shortest Interval: This strategy focuses on selecting the shortest interval among the remaining intervals at each step. By choosing shorter intervals first, it may allow for more flexibility in scheduling longer intervals later.

- Consider minimum conflicts (choose the interval that overlaps with the minimum number of other intervals).

- Minimum Conflicts: This strategy aims to minimize conflicts by selecting intervals that overlap with the fewest number of other intervals. It prioritizes intervals that have the least overlap with the currently selected intervals.

- Consider earliest finishing time (choose the interval with ).

- Earliest Finishing Time: This strategy selects intervals based on their finishing times. It chooses the interval that finishes earliest among the remaining intervals at each step. This can lead to scheduling intervals that free up resources quickly and potentially allow for more intervals to be scheduled overall.

The algorithm provided outlines a generic approach to solving the Interval Scheduling Problem using a greedy strategy based on the earliest finishing time. It sorts the intervals based on their finishing times and then iterates through them, adding intervals to the schedule if they do not conflict with previously selected intervals. Finally, it returns the selected intervals.

Algorithm: Interval Scheduling

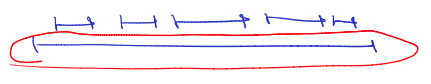

- Sort the intervals such that

- For from to do if interval , , has no conflicts with intervals in add to

- return

Tues Feb 12 2024 (Missed class I was sleeping)

- Ask matthew for notes

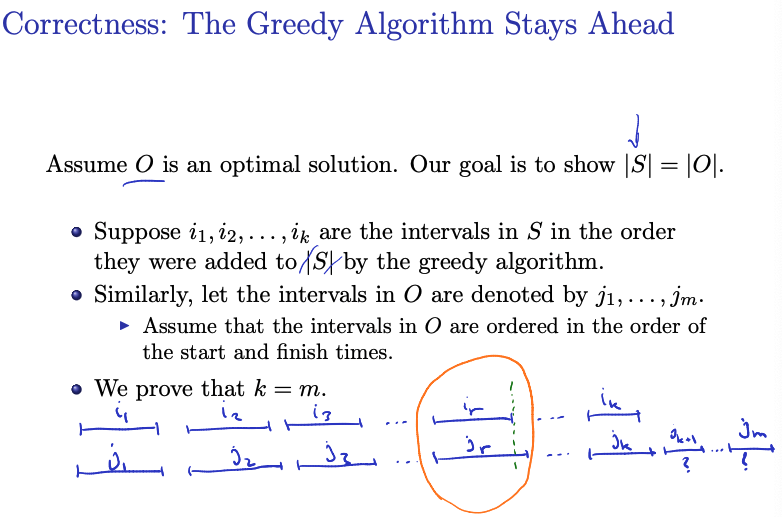

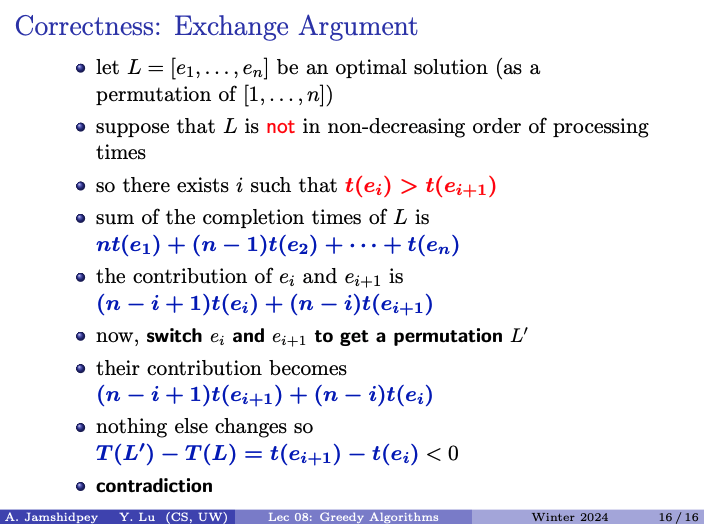

Correctness: The Greedy Algorithm Stays Ahead

Note

- is output of greedy system, is output from optimal algorithm

- If , then it must be that is optimal

- We then sort and by start/finish times

- The proof then follows:

Prof notes / proof:

- By ind. hyp.: 1.

- Compare and 2.

- 1 and 2

- At the time the greedy algorithm had to choose the interval, was an option (it had no intersection with and others). The greedy algorithm chose and it means:

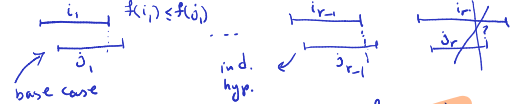

Lemma

For all indices we have .

Proof: We use induction

- For the statement is true.

- Suppose and the statement is true for . We will show that the statement is true for .

- By induction hypothesis we have .

- By the order on we have

- Hence we have

- Thus at the time the greedy algorithm chose , the interval was a possible choice.

- The greedy algorithm chooses an interval with the smallest finish time. So, .

This lemma establishes an important property:

For any index up to , where kk is the number of intervals in the greedy solution, the finish time of the interval chosen by the greedy algorithm is less than or equal to the finish time of the corresponding interval chosen by the optimal solution .

The lemma states: “For all indices , we have .”

Here, denotes the finish time of the r-th interval chosen by the greedy algorithm, and denotes the finish time of the r-th interval chosen by the optimal solution.

Note

- Recall that is the i-th index from the sorted (by start/end position) Greedy output, and is the j-th index from the sorted (by start/end position) Optimal output.

- For (base case), we know that , since for the greedy approach, we always start by taking the one that finishes earliest.

- For , we use induction, and assume that , and try proving it for .

- So, by inductive hypothesis, we have , and by comparing and , we have that . Using both of those, we get that . So, when the greedy algorithm had to choose the r-th interval, was an option (it had no intersection with and others), and thus it would have chosen that, or something better. So, .

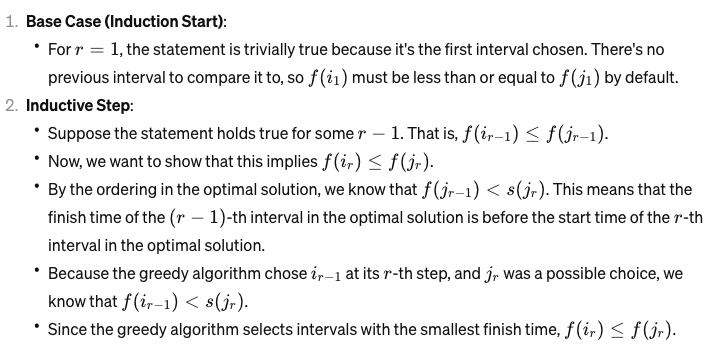

Theorem

The greedy algorithm returns an optimal solution.

Proof:

- Prove by contradiction.

- if the output is not optimal, then .

- is the last interval in and must have an interval .

- Apply the previous lemma with , and we get .

- We have .

- So, was a possible choice to add to by the greedy algorithm. This is a contradiction

Prof notes:

- Show

- Assumer . There exists at least in as it has at least one more element in comparison to .

- By the previous lemma, we know that:

- So the greedy algorithm could add to , but it did not and it is a contradiction.

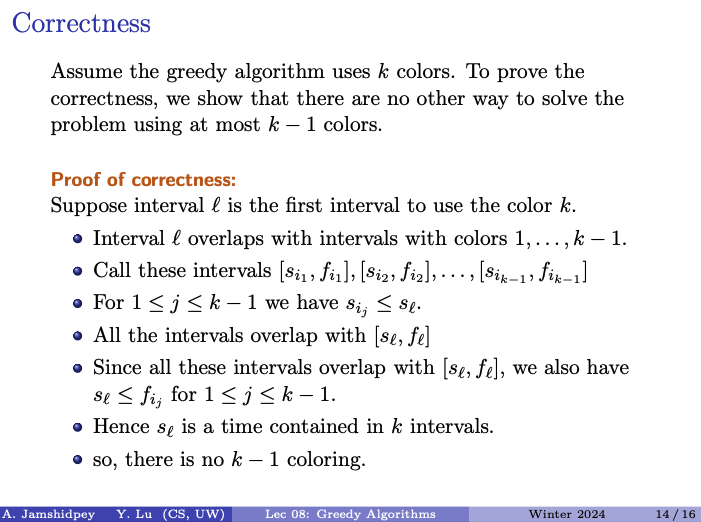

Interval Colouring

Interval Colouring Problem

Input: intervals Output: use the minimum number of colours to colour the intervals, so that each interval gets one colour and two overlapping intervals get two different colours.

Algorithm: Interval Colouring

- Sort the intervals by starting time:

- For from 1 to do Use the minimum available colour to colour the interval . (i.e. use the minimum number to colour the interval so that it doesn’t conflict with the colours of the intervals that are already coloured.)

Note

He gives an algorithm, but he said that an one exists with a min heap and stuff, but he doesn’t care.

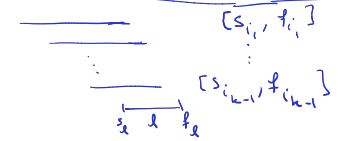

Prof’s proof:

-

If the greedy algorithm uses colours, then is the minimum number of colours needed. In other words, no other algorithm can solve the problem with colours.

-

Idea: Show that there exists a time such that it is contained in intervals.

-

Assume is the first interval which uses colour .

-

Since we have an increasing order on start times: for .

-

On the other hand all intervals have overlap with , hence for .

-

So, is the time which is contained in intervals. So, no colouring with less than colours exists.

Sanity check: Recap

This algorithm is a greedy approach to solve the Interval Colouring Problem. It sorts the intervals by their starting times and then iterate through each interval, assigning it the minimum available colour that does not conflict with the colours already assigned to overlapping intervals.

Correctness showing that if the greedy algorithm uses colours, no other way to solve problem using at most colours.

Me trying to make sense of the proof

- Suppose Intervals is the First to Use Colour :

- The proof starts by considering a interval that is the first one required colour (a new colour dumbass). This means that all previous intervals have been coloured with colours , and intervals is the first one requiring colour because it overlaps with intervals already coloured with colours .

- Interval Overlaps with Previously Coloured Intervals:

- The intervals that have been coloured with colours all overlap with interval . This means that they share some time interval with interval .

- Interval Contains Times from Intervals:

- Because all intervals have been coloured with colours overlap with interval , the start time of the interval is less than or equal to the finish time of these intervals (). This means that is a time point that is contained within intervals.

- Conclusion: No Colouring Exists:

- Because is contained within intervals, it implies that colouring interval with colour is necessary to ensure that it does not have the same colour as any of the intervals it overlaps with. If we tried to colour interval with colour or less, it would conflict with one of the intervals it overlaps with. Thus, there is no way to colour the intervals using fewer than colours while ensuring that overlapping intervals receive different colours.

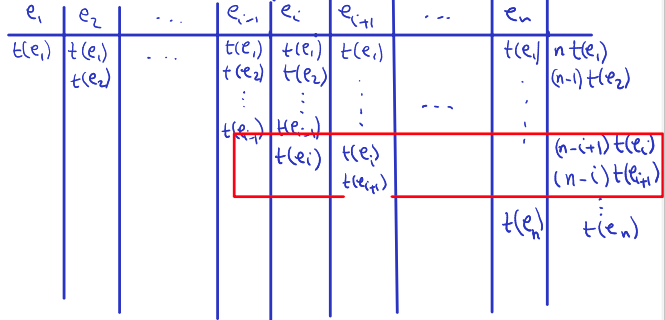

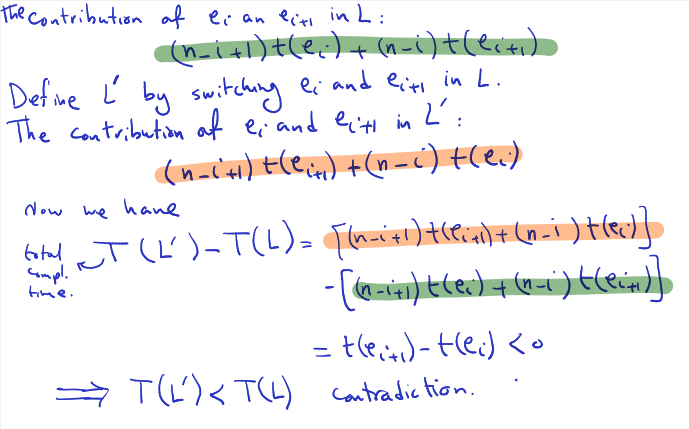

Minimizing Total Completion Time

The problem

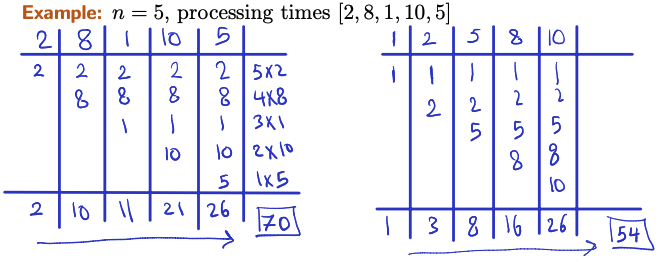

Input: jobs, each requiring processing time Output: An ordering of the jobs such that the total completion time is minimized.

Note: The completion time of a job is defined as the time when it is finished.

Algorithm:

- order the jobs in non-decreasing processing times

Switching position of and in the permutation , and we obtain a new permutation . And the difference in total completion time between and is calculated as: . This difference is negative because the processing time of is less than the processing time of . We have reached a contradiction which implies that by switching and we obtain a permutation with a lower total completion time than , contradicting the assumption that is an optimal solution. Therefore, the assumption that an optimal solution exists that is not in non-decreasing order of processing times leads to a contradiction, establishing the correctness of the algorithm that orders jobs in non-decreasing processing times.

Prof’s proof:

- Assume there is an optimal solution with a different ordering in comparison to the solution given by the greedy algorithm. Suppose is an optimal solution and is not in a non-decreasing order of processing times.

- So there exists an index such that the processing time of is greater than the processing time of . If this does not happen, the optimal solution has the exact same order as greedy, so if we want them to be different, it must happen.

- You can see that the cost is greater in such a case, than if you swapped and to their proper non-decreasing orders.

- So, as long as we find 1 ordering reversed, we can find something better. Thus, the optimal solution would have the ordering in non-decreasing.

Thur Feb 15 2024 (Missed class because studying for cs341 midterm)

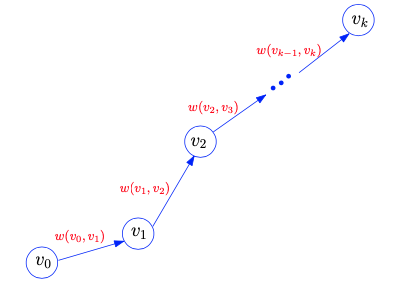

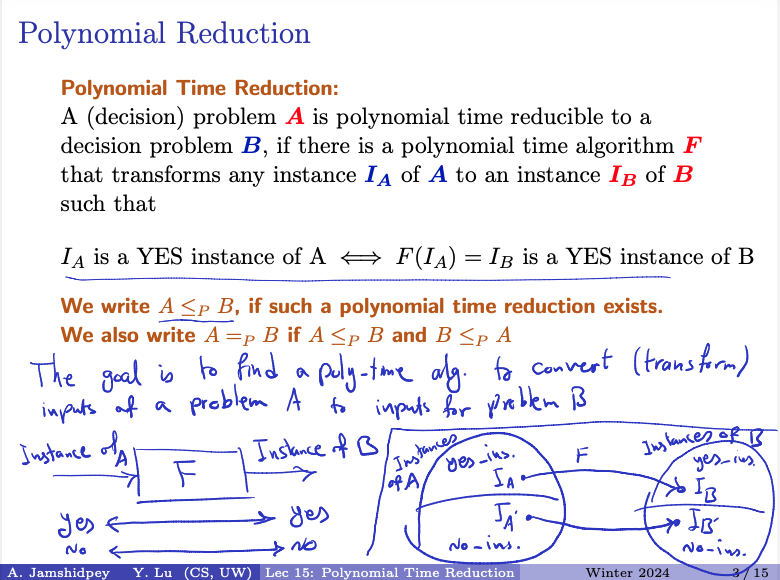

Lecture 9 - Dijkstra’s Algorithms

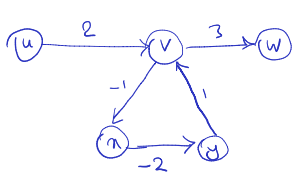

Preliminaries

- is a directed graph with a weight function:

- Each edge has an associated weight .

- The weight of path is:

- The weight of path is the sum of weights of the edges along that path, calculated as above.

- The weight of path is the sum of weights of the edges along that path, calculated as above.

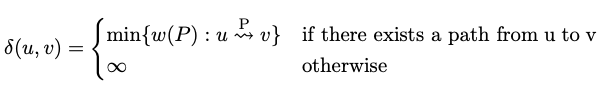

Padlet (True/False)

Shortest path exists in any directed weighted graph. https://padlet.com/arminjamshidpey/CS341

- First note that in this class, “walk vs. path” “path vs. simple path”.

- Even if there is a path between every 2 nodes, if edges can have negative weights, then it is possible that there is a cycle with negative total weight, and thus going through the cycle many times can lead to infinitely low weights. So, it is false.

- Even if there is a path between every pair of nodes, negative-weight cycles can lead to infinitely low weights by traversing the cycle multiple times.

- Negative Weights:

- When negative weights are introduced into the graph, the situation becomes more complex.

- Negative weights can lead to scenarios where paths that include negative-weight edges have shorter total weights than paths without negative-weight edges.

- This can lead to unexpected behavior, such as negative-weight cycles, where starting and ending at the same node repeatedly can result in an infinitely negative total weight.

- Impact on Shortest Path Existence:

- In graphs with negative weights, the existence of shortest paths is not guaranteed.

- Negative-weight cycles introduce ambiguity into the concept of shortest paths. If a negative-weight cycle exists, one could repeatedly traverse this cycle to achieve arbitrarily low total weights, leading to no well-defined shortest path.

- Consequently, the presence of negative-weight cycles can invalidate the concept of shortest paths in a graph.

Why Dijkstra's algorithm doesn't work with negative weights

- Dijkstra’s algorithm employs a greedy strategy, always selecting the vertex with the smallest known distance from the source vertex at each step.

- This strategy assumes that adding a new vertex to the set of vertices with known shortest distances will never result in a shorter path to any vertex than the paths already considered.

- However, in the presence of negative weights, this assumption is violated because the distance to a neighbouring vertex can decrease when traversing an edge with negative weight.

- When negative weights are present, Dijkstra’s algorithm may select a vertex based on the current shortest path information, leading to incorrect shortest path calculations.

- Negative-weight edges can create cycles where repeatedly traversing the cycle reduces the total path weight, violating the assumption made by Dijkstra’s algorithm.

- Negative-weight cycles pose a particularly challenging problem for Dijkstra’s algorithm.

- If a negative-weight cycle exists reachable from the source vertex, it can be traversed repeatedly to produce arbitrarily low total path weights, leading to no well-defined shortest path.

- Dijkstra’s algorithm, which does not account for negative weights, cannot handle this scenario and may produce incorrect results or enter into an infinite loop.

- Assumption: has no negative-weight cycles

- The shortest path weight from to :

Single-Source Shortest Path Problem

Input: and a source Output: A shortest path from to each

- Aims to find the shortest path from to every other node in the graph.

- The shortest path weight from node to node , denoted as , is defined as the minimum weight among all paths from to node . If there’s no path from to , .

- It is true. Can prove by contradiction easily. If there was a better way, then our longer path could be shorter, which is a contradiction.

- The proof by contradiction is straightforward: Assume there exists a shorter path from to vivi for some , contradicting the assumption that is the shortest path from to .

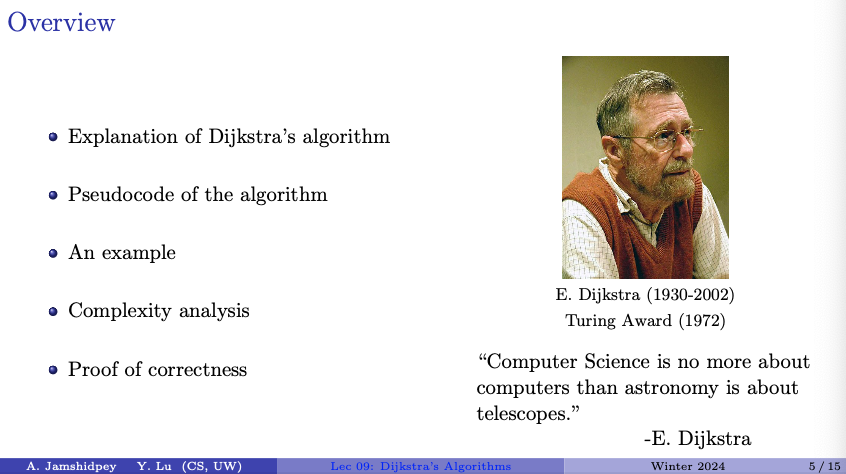

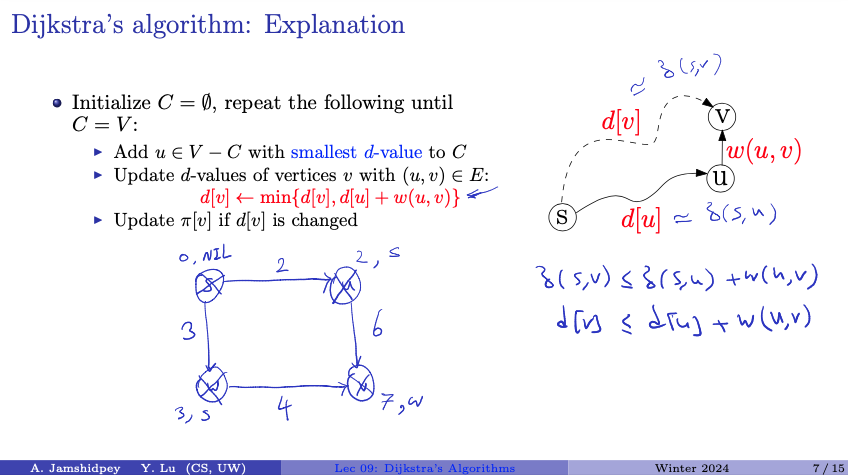

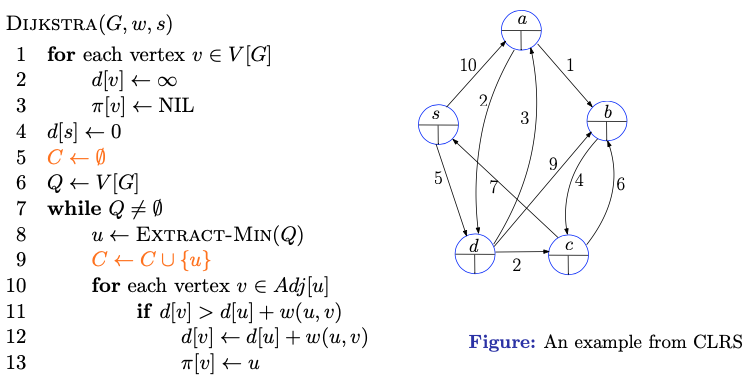

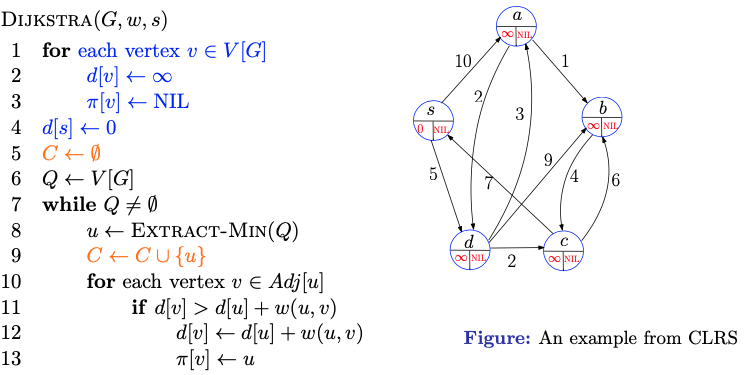

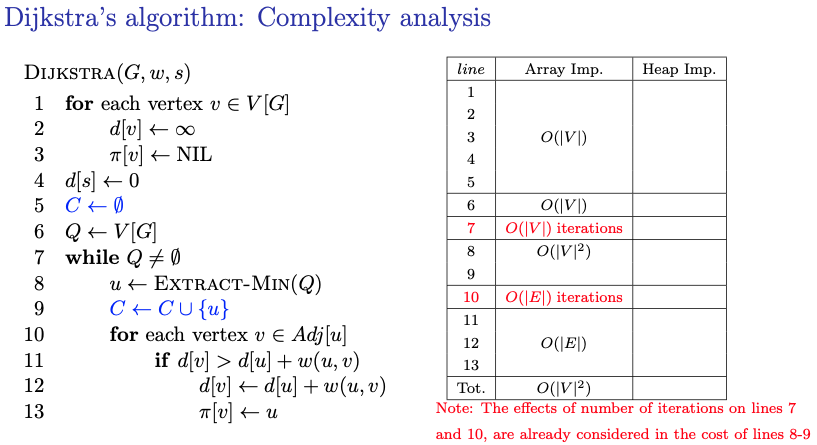

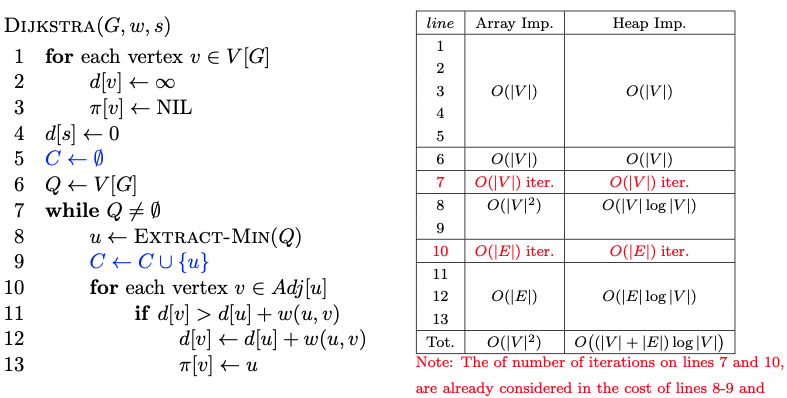

Dijkstra’s Algorithm: Explanation

Dijsktra's algorithm is a greedy algorithm.

Input: A weighted directed graph with non-negative edge weights

- For all vertices, maintain quantities

- a shortest-path estimate from to

- predecessor in the path (a vertex or NIL)

- the predecessor in the shortest path from the source vertex to vertex .

Note:

- This is stricter than what we were saying before; not only can we not have negative weight cycles, but not even negative weight edges.

- distance level

- I think he said that refers to level

- We start by putting every parent as NIL (this is the list), and set the shortest path to infinity

- Good visualization: Dijkstra’s - Shortest Path Algorithm (SPT) Animation

- I think keeps track of vertices whose shortest paths have already been determined. So we start with being empty.

- Repeat the following steps until includes all vertices :

- Select a vertex from (i.e., the set of vertices not yet included in ) with the smallest value and add it to . This vertex becomes the next vertex to consider in the alogrithm.

- Update the of the vertices adjacent to , i.e., for each vertex such that is an edge in the graph:

- If the of any vertex changes due to the update, also update the predecessor to reflect the new shortest path.

- Termination:

- Once all vertices are included in , the algorithm terminates, and the arrays and contain the shortest-path estimates and predecessors, respectively, for each vertex from the source vertex .

I don't understand why we update predecessor

\pi[v]. I guess the question is what is the predecessor\pi[v]represent?The predecessor of a vertex in the context of Dijkstra’s algorithm represents the vertex that precedes in the shortest path from the source vertex . By updating when the -value of is changed, we ensure that always points to the vertex that is immediately before in the currently known shortest path from the source vertex . (we can use this array to reconstruct the shortest paths from the source vertex to all other vertices. starting from any , the predecessor allows us to do this.)

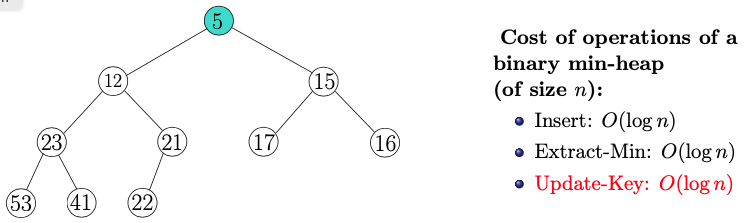

Which Abstract Data Type ( ADT) should we use for vertices?

Values in this tree representing a distance from a source node!

Adjacency list is my first thought

- he said no because it is a graph representation and not a vertex representation?

Prof said he would do a priority queue, which perhaps could be implemented by a min Heap.

Note:

- There is the order property (left and right are larger), and the structure property (every node is full except maybe the last)

- Insert is to add a new node

- Extract-Min is to take 5 out, and fix the heap

- Apparently all the vertices are put into the min heap with values corresponding to their -value from ?

- We start by initializing everything

- The heap Q kinda keeps track of the current -values from everything.

Refer to the slides to be honest, it has a whole ass animation 20+ slides

Read this part in the textbook CLRS instead.

-

Initializes the and values and empt set to keep track of vertices whose shortest paths have already been determined.

-

Initialize a priority queue containing all vertices in the graph

-

Each time through the while loop, extract a vertex from (with -value from priority queue ) and add it to set indicating that its shortest path has been determined

-

The first time through this loop . Vertex , thus, has the smallest shortest-path estimate of any vertex in .

-

For each vertex adjacent to :

- If the distance is greater than the sum of the distance and the weight of the edge from to :

- Update to , as this path is shorter

- Update to , indicating that is the new predecessor of in the shortest path from to .

- If the distance is greater than the sum of the distance and the weight of the edge from to :

-

Terminate:

- Once all vertices have been added to the set , indicating that their shortest paths have been determined, the algorithm terminates.

-

What does the orange part mean?

- We don’t need Heapify to make our heap because it starts with everything at

- Knowing which implementation to use depends on if the graph is sparse or not. If there are few edges (the graph is sparse), then heap is better. If there are many edges (the graph is dense), then the array implementation might be better.

Prof’s advice for the upcoming midterm tomorrow:

"It's not time for emotion, it is time for skill" - Armin

It is from michael jordan

Reading week

Ask NOTES for feb 12, 15 and 27.

Bless mattloulou

Tues Feb 27 2024 (didn’t attend class, was studying for sci250)

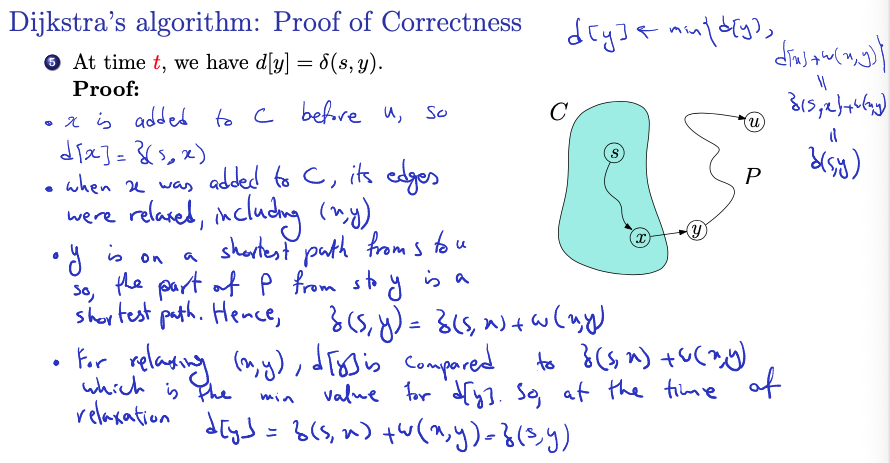

Proof:

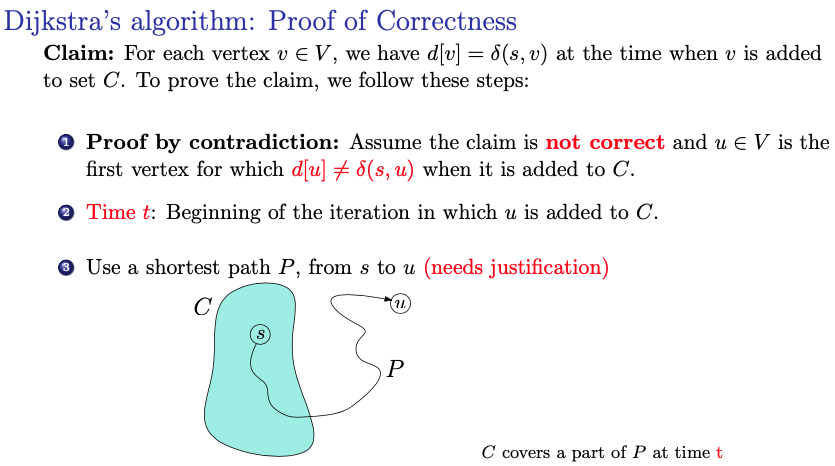

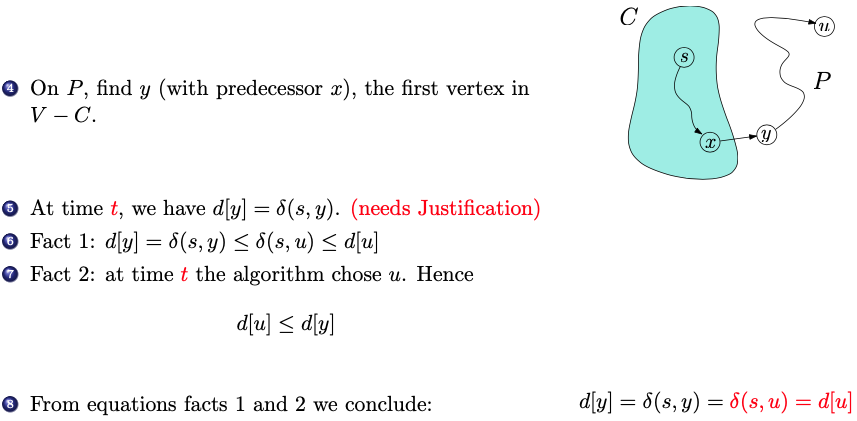

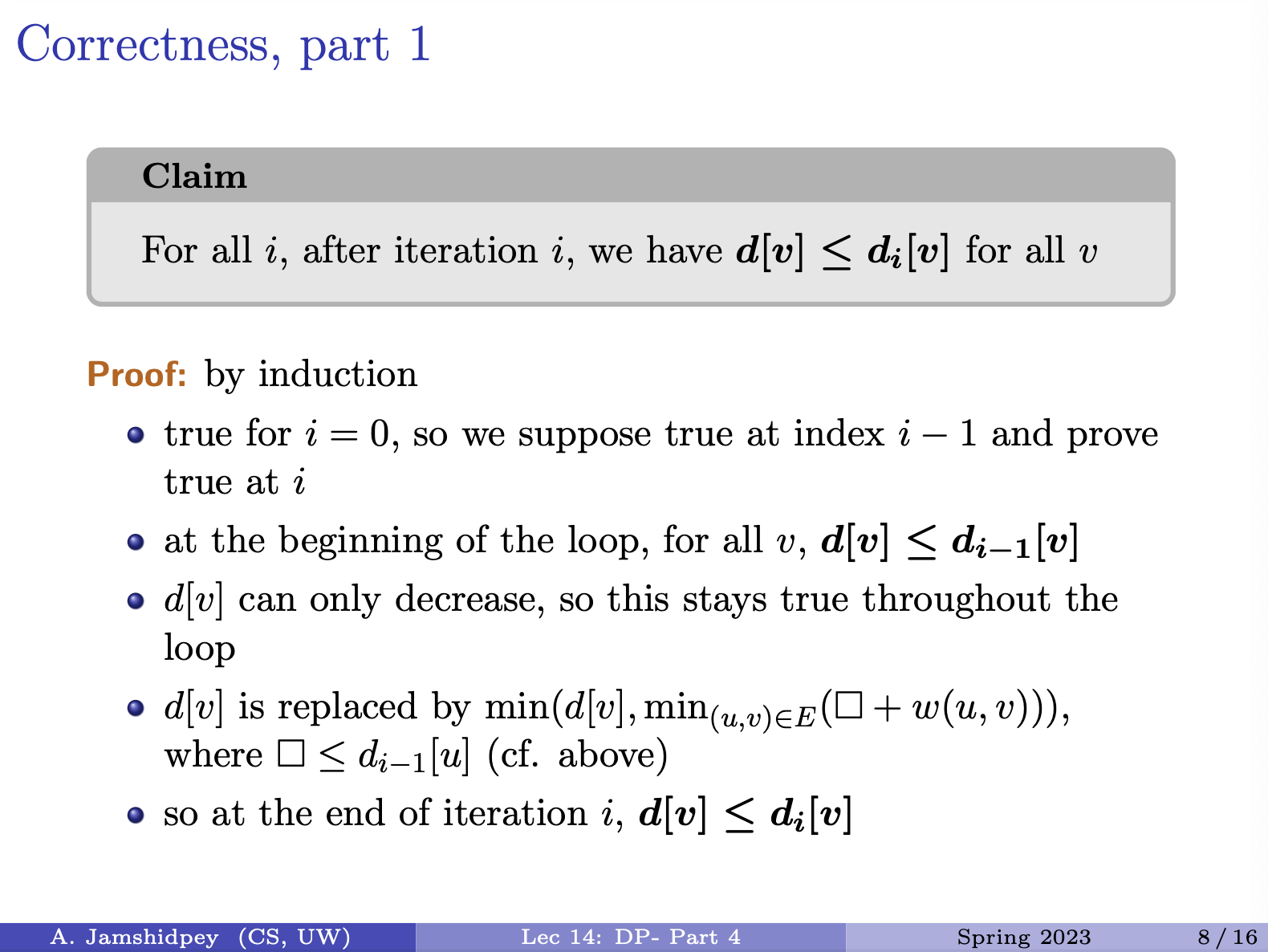

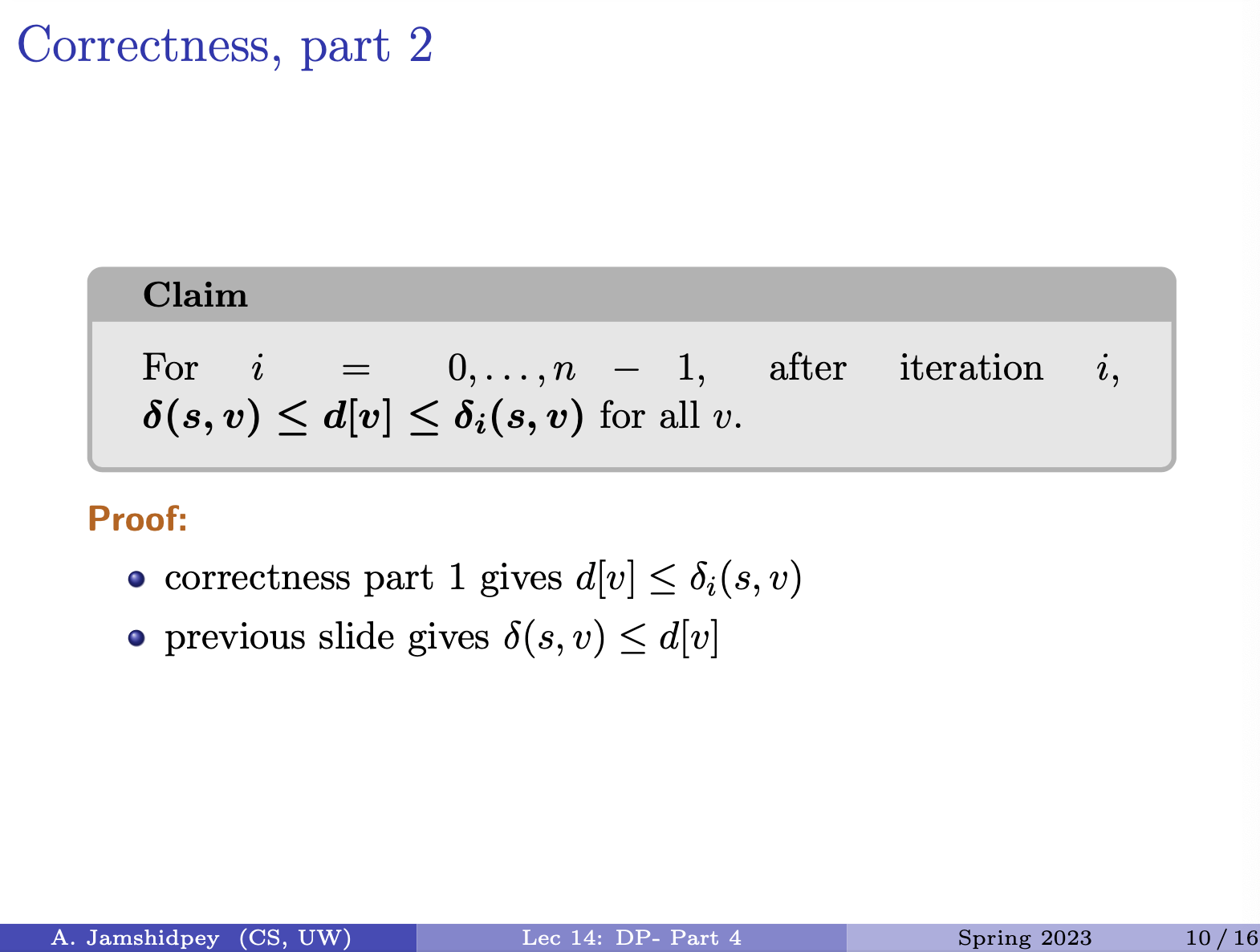

Proof:

- By contradiction: We begin by assuming that the claim is not correct, meaning there exists a vertex for which when it is added to set .

- The aim is to show that this assumption leads to a contradiction, implying that the claim must be true

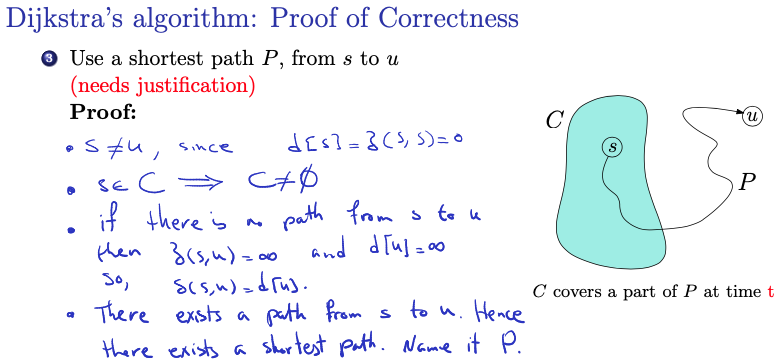

- Time :

- This refers to the beginning of the iteration in which vertex is added to set .

- Shortest path :

- The existence of a shortest path from the source vertex to vertex is assumed here. This is a key assumption in the proof and must be justified.

-

Finding vertex :

- On the shortest path , we find vertex as the first vertex encountered that is not already in set . This implies that is added to set after but before . (makes sense)

-

is the min weight between 2 nodes among all passes

-

The prof said that ensures that we are using non-negative weights

- We have that , since

- and must be distinct

- This statement ensures that the source vertex and the vertex are distinct. Which is necessary because by definition of shortest path distances.

- , so is not empty

- Since the source vertex is added to set initially, the set is not empty!

- If there is no path from to then the shortest path distance by definition and so, .

- This argument ensures that the claim holds trivially when there is no path from to .

- There exists a path from to . Hence, there exists a shortest path. Name it .

Don’t understand the last two point.

- Since is added to before , its shortest path estimate

- When was added to , its edges were relaxed, including

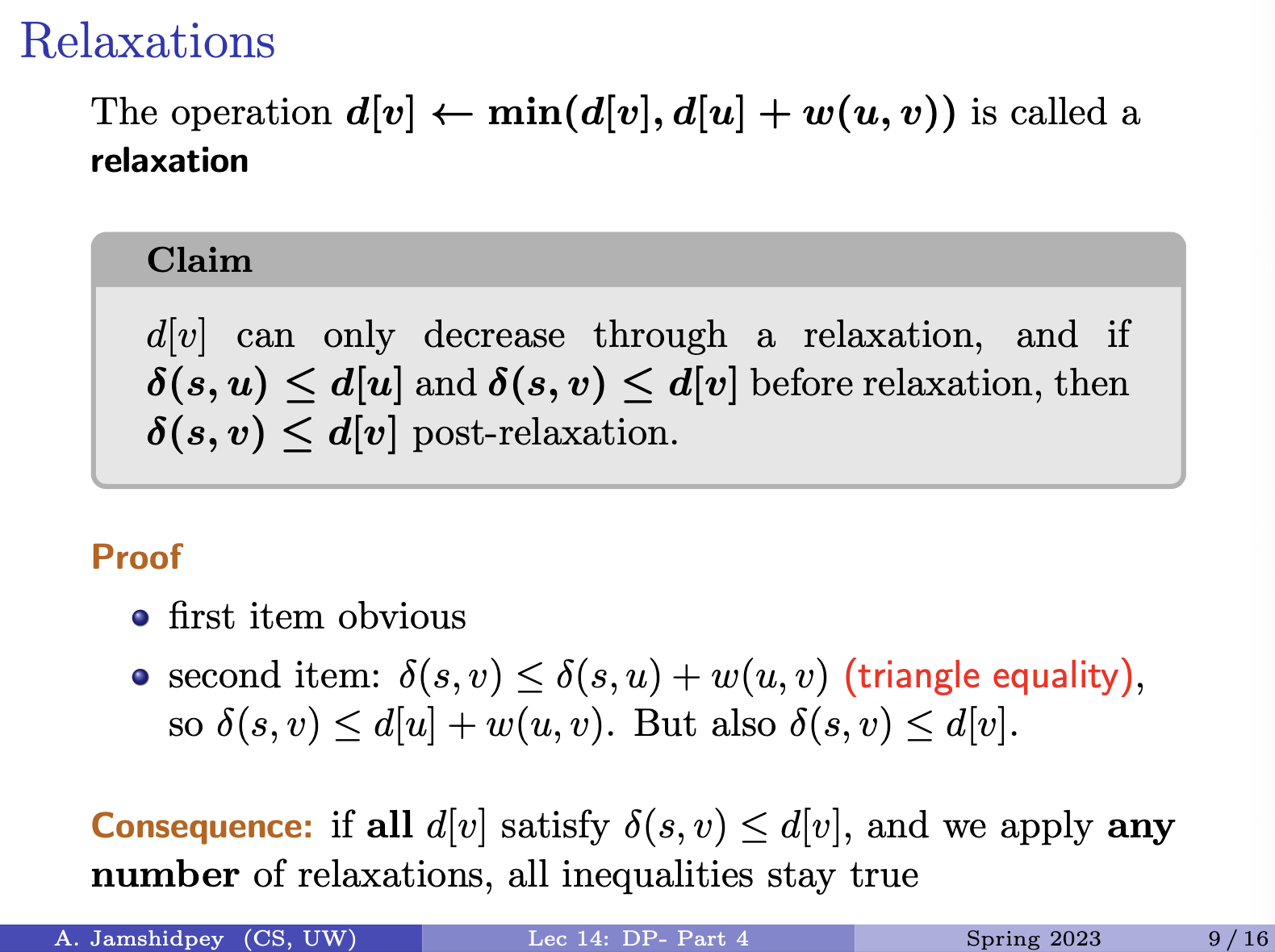

- What does it mean to be relaxed??? I saw it mentioned in the textbook as well

- ”Relaxation” refers to the process of updating the shortest path estimate and predecessors when a shorter path to a vertex is found during the algorithm’s execution

- When vertex is added to set , its outgoing edges, including the edge to vertex , are relaxed.

- Since is on a shortest path from to so, the part of from to is a shortest path. Hence, .

- For relaxation of edge :

- During the relaxation of edge , the algorithm compares to , which is the min value for if the path from to is indeed a shortest path.

- Since the minimum value is chosen during relaxation, at the time of relaxation

In summary

these steps provide a rigorous proof of correctness for Dijkstra’s algorithm, demonstrating that the shortest-path estimates computed by the algorithm are equal to the true shortest-path distances at the time each vertex is added to set C.

Lecture 10 - Minimum Spanning Trees

Spanning Trees

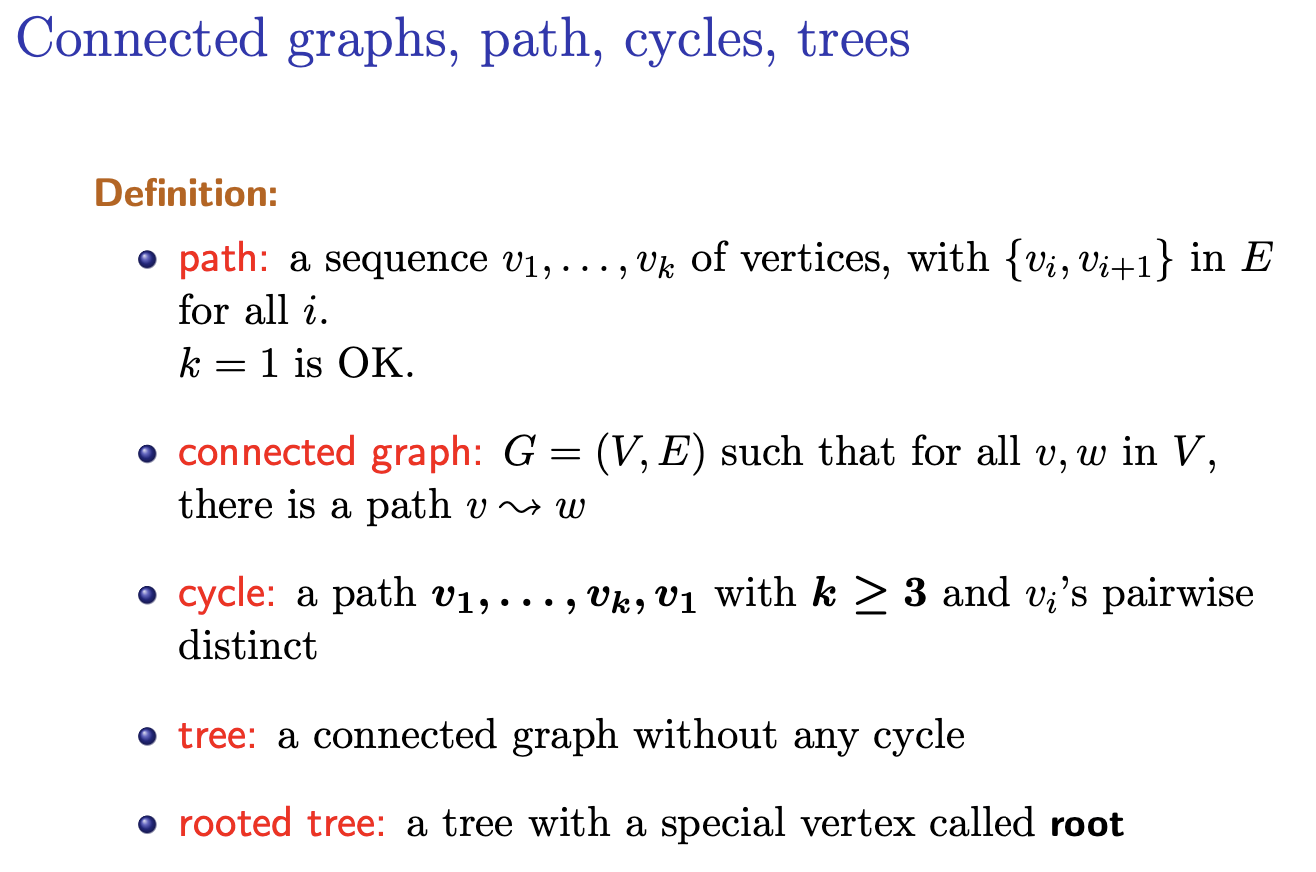

Definition:

- is a connected graph

- a spanning tree in is a tree of the form , with a subset of

- in other words: a tree with edges from that covers all vertices of the graph

- without creating any cycles

- examples: BFS tree, DFS tree

Now, suppose the edges have weights

- in many real-world scenarios, edges of a graph are associated with weights, representing costs, distances, or other measures

- each edge in the graph is assigned a weight

Goal:

- a spanning tree with minimal weight

Minimum Spanning Tree (MST):

- goal of finding a minimum spanning tree is to identify a spanning tree that includes the minimum possible total weight among all possible spanning trees of the graph.

- In other words, an MST is a spanning tree with the lowest possible total weight, considering the weights of its edges.

Note:

- This concept is particularly important in network design, where one aims to minimize costs (or maximize efficiency) while ensuring connectivity.

- This is like if you have a network and you want to minimize the cost of links, but still have everything connected, you want a minimal weight spanning tree.

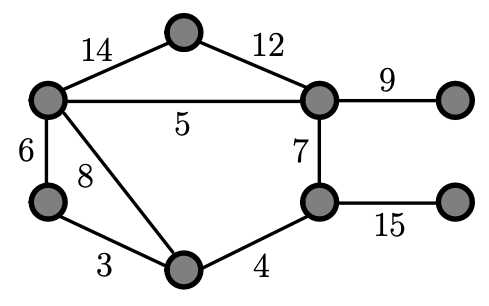

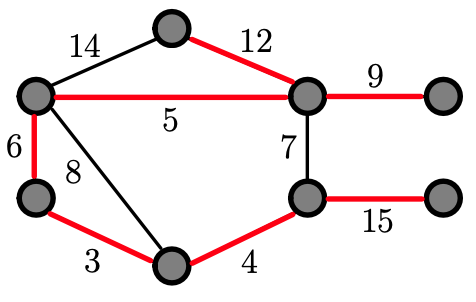

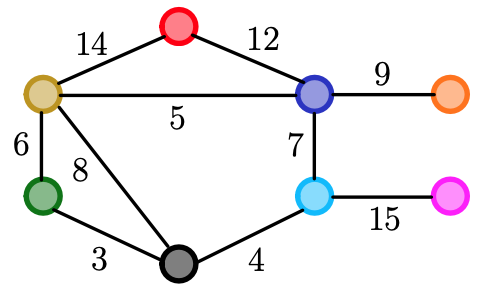

Example

- You start off by choosing the smallest weight edges in this graph, so in the next three slides, you can see that he marked edge with weight 3, 4, 5, 6, 9 and 12, then we don’t pick 14 since we will be creating a cycle (it won’t be a minimum spanning tree)! Thus, we pick 15.

Remember the goal: We have to connect each vertices without creating any circles by choosing smaller weighting edges.

Remark: In the example, we have 8 vertices, therefore we should en up picking 7 edges, and we did. And there is no cycle and it is a tree.

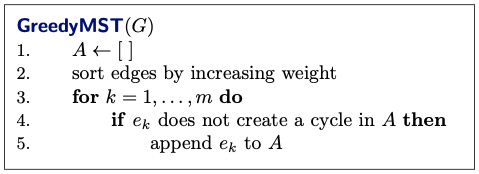

Kruskal’s Algorithm

- Kruskal’s Algorithm Kruskal’s algorithm is a greedy approach to finding the minimum spanning tree (MST) of a connected, weighted graph.

GreedyMST(G)

1. A <- []

2. sort edges by increasing weight

3. for k = 1,...,m do

4. if e_k does not create a cycle in A then

5. append e_k to A- If there is a cycle, then it is not a tree, and so can’t be an optimal solution (assuming that edges have positive weights)

- We want to show that the result will be a tree, and so equivalently we have to show that it is connected and that there are no cycles.

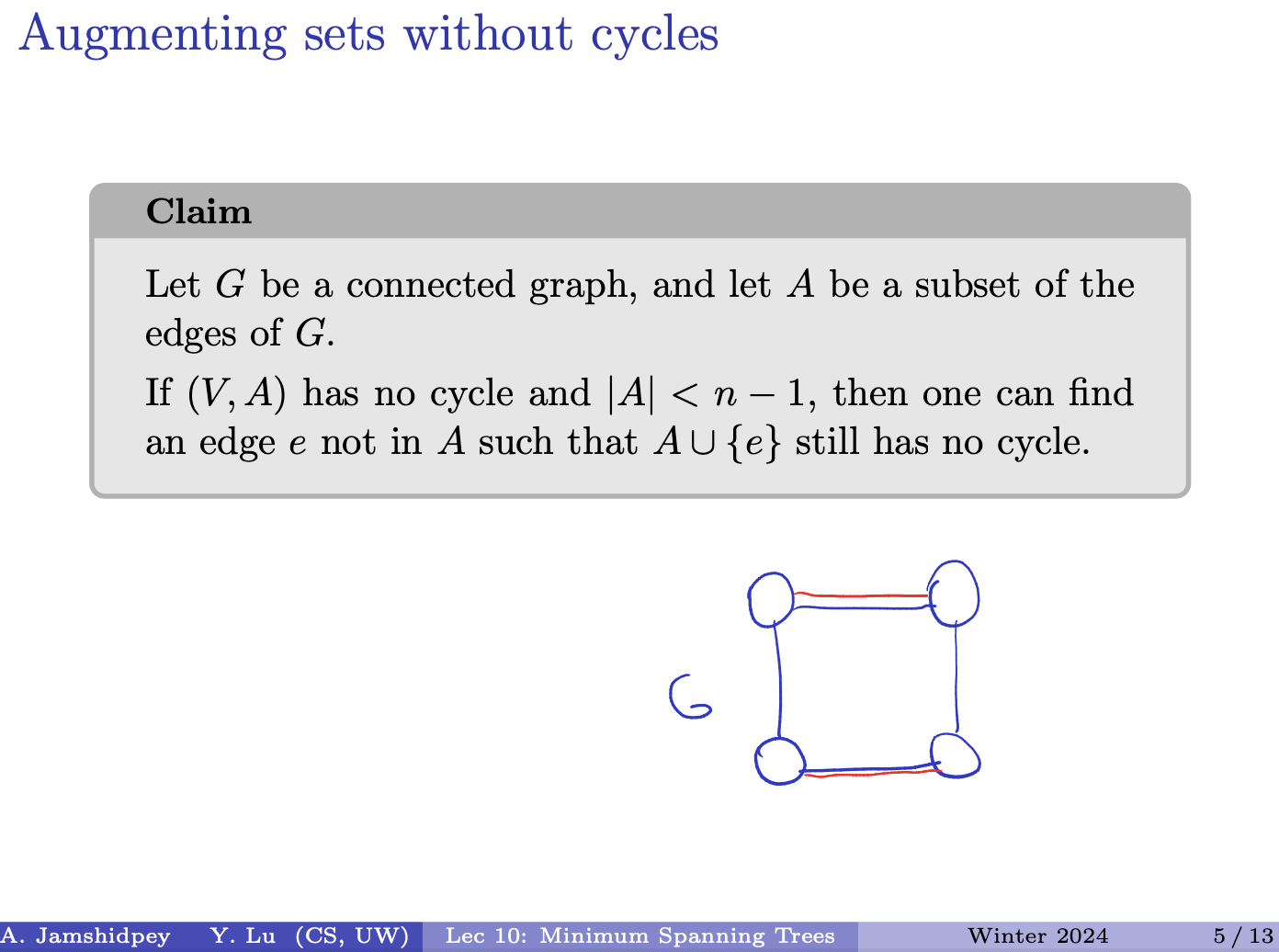

Augmenting sets without cycles

Claim

Let be a connected graph, and let be a subset of the edges of .

If has no cycle and , then one can find an edge not in such that still has no cycle.

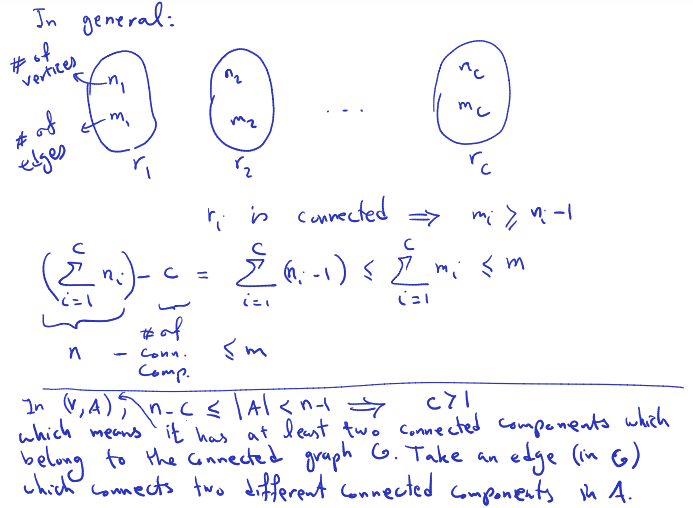

In prof’s annotations, he proves that #vertices - #connected components #edges

- Insert Prof’s proof:

- In

- which means it has at least two connected components which belong to the connected graph . Take an edge (in ) which connects two different connected components in .

Need to understand the claim and the idea of proof.

I’m so tired right now (March 2, 2024)

Consider the connected components in the subgraph . Since , there are at least two connected components. Let’s call these connected components and .

- Each of these connected components is a subset of vertices in , and they are disconnected from each other in the subgraph .

- Since is connected, there must be at least one edge in that connects vertices from to . If there weren’t such an edge, would have been disconnected, which contradicts the fact that is connected.

- Now, let’s choose an edge from that connects a vertex from to a vertex from . Such an edge exists because is connected.

Properties of the output

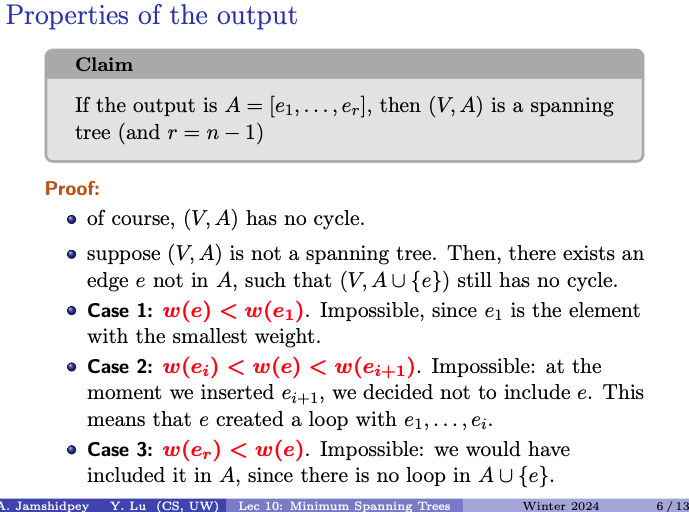

Claim

If the output is , then is a spanning tree (and ).

A spanning tree of a graph is a subgraph that is a tree (a connected acyclic graph) and includes all the vertices of .

The claim asserts that if the output of this process is a set of edges that form edges (i.e., the maximum number of edges for a spanning tree in a graph with vertices), then this set of edges indeed forms a spanning tree of the original graph .

Note: the output has edges 1 up to .

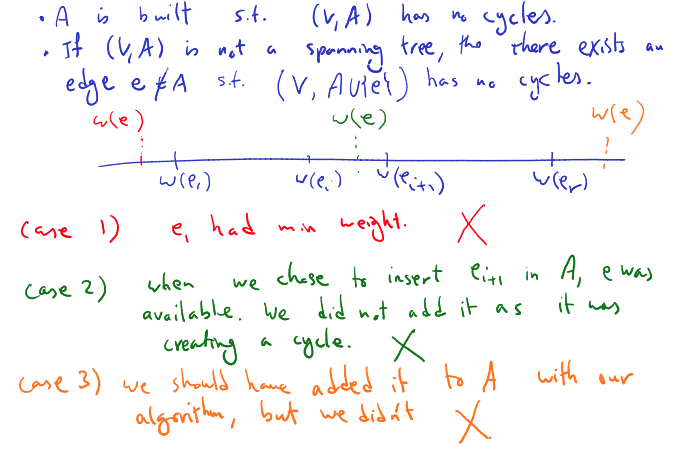

Proof:

- of course, has no cycle

- suppose is not a spanning tree. Then there exists an edge not in , such that still has no cycle.

- Case 1:

- Impossible, since is the element with the smallest weight.

- Thus, if has a smaller weight than , it should have been selected instead of . It contradicts the initial assumption.

- Case 2:

- Impossible: at the moment we inserted , we decided not to include . This means that created a loop with .

- If were to be added between and , it would create a loop with . This contradicts the fact that is assumed to be a spanning tree, which by definition is acyclic

- Case 3:

- Impossible: we would have included it in , since there is no loop in .

- If adding does not create a loop in , then it would have been included in in the first place. Since there is no loop in , it means that adding maintains the acyclic property of the subgraph, which contradicts the assumption that was no initially included in .

- These cases show that if is not a spanning tree, then there exists no edge that can be added to without creating a cycle, which contradicts the assumption that is not a spanning tree. Thus, must be a spanning tree.

Prof’s annotations:

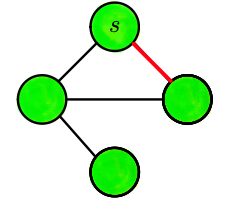

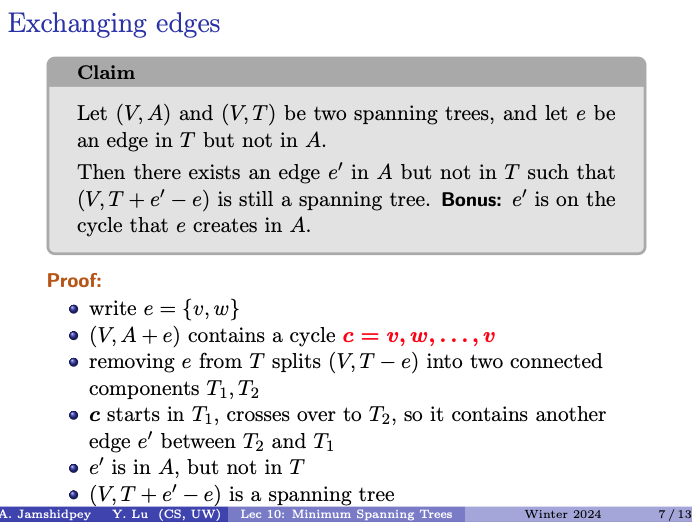

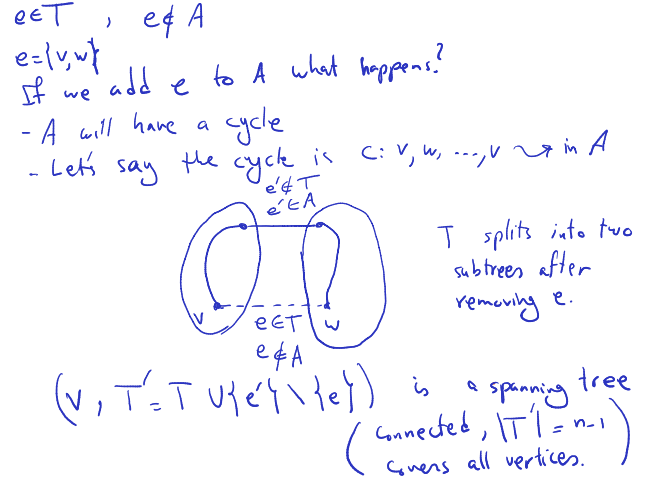

Exchanging edges

Claim

Let and be two spanning trees, and let be an edge in but not in .

Then there exists an edge in but not in such that is still a spanning tree. Bonus: is on the cycle that creates in .

Proof:

- write

- contains a cycle

- removing from splits into two connected components

- starts in , crosses over to , so it contains another edge between and

- is in , but not in

- since c spans both and , it must contain another edge connecting and . This edge is in (since it’s part of the cycle ) but not in .

- is a spanning tree

- By replacing in with , the resulting graph remains connected and acyclic, and thus forms a spanning tree.

This completes the proof that for any edge in but not in , there exists an edge in but not in such that replacing with still results in a spanning tree.

Prof’s annotations:

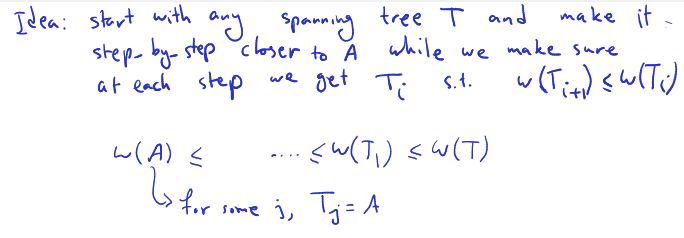

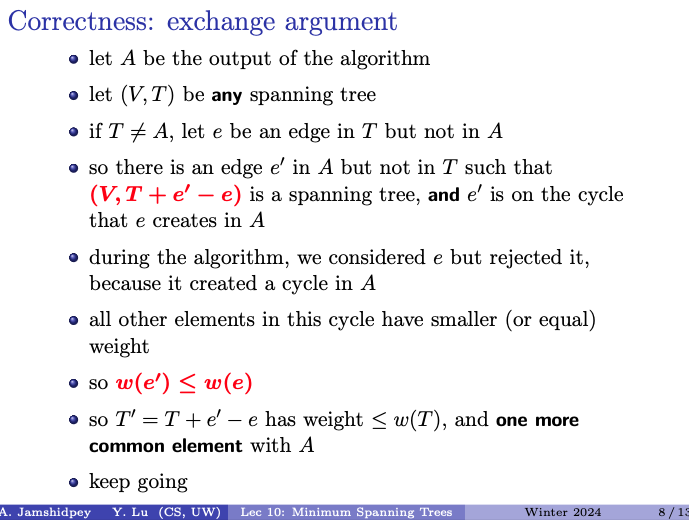

Correctness: exchange argument

- Initial Assumption: Let be the output of the algorithm and be any spanning tree. If is not equal to , meaning there exists an edge in but not in .

- Edge Exchange: Since is in but not in , according to the claim, there must exist an edge in but not in such that replacing with results in a spanning tree .

- Weight Comparison: The claim states that is on the cycle that creates in , and during the algorithm, was considered but rejected because it created a cycle in . Moreover, all other elements in this cycle have smaller or equal weight compared to .

- Weight Inequality: Therefore, , indicating that the weight of is less than or equal to the weight of .

- Spanning Tree Construction: By replacing with , the resulting spanning tree has weight less than or equal to and one more common element with , thus maintaining the validity of the spanning tree while improving its similarity to .

- Iteration: This process can be iterated until becomes equal to , ensuring that the output of the algorithm is indeed a spanning tree similar to in terms of contained edges.

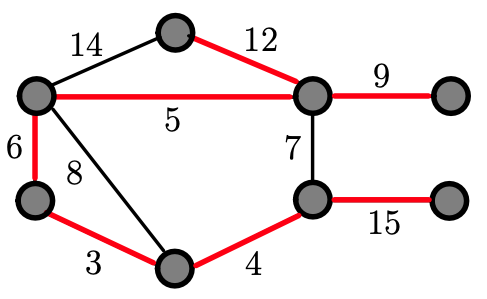

Merging connected sets of vertices

There is a slideshow of this in the notes:

…

Doesn’t choose 7 because it will form a cycle. Doesn’t choose 14 because we already have all edges and by picking edge with weight 12.

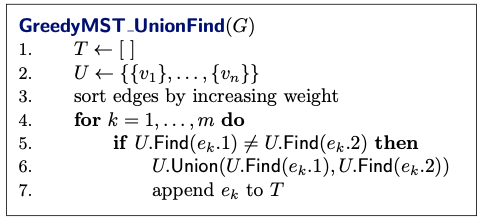

Data Structures

Operations on disjoint sets of vertices:

- Find: identify which set contains a given vertex

- Union: replace two sets by their union

This pseudocode outlines the implementation of Kruskal’s algorithm for finding the Minimum Spanning Tree (MST) of a graph using disjoint sets (also known as Union-Find data structure).

- Initialization: Initialize an empty set to store the edges of the MST, and initialize a set containing disjoint sets, each representing a single vertex of the graph.

- Sort Edges: Sort the edges of the graph by increasing weight. This step ensures that we process edges in non-decreasing order of weight.

- Iterate Through Edges: Iterate through the sorted edges , where is the total number of edges in the graph.

- Check Connectivity: For each edge , check whether the vertices and belong to different sets in (i.e., they are not already connected in the MST).

- Union Operation: If the vertices of are in different sets, perform the Union operation on the sets containing these vertices in the MST.

- Update MST: add the edge to the MST .

- Output MST: After processing all edges, the set contains the edges of the MST.

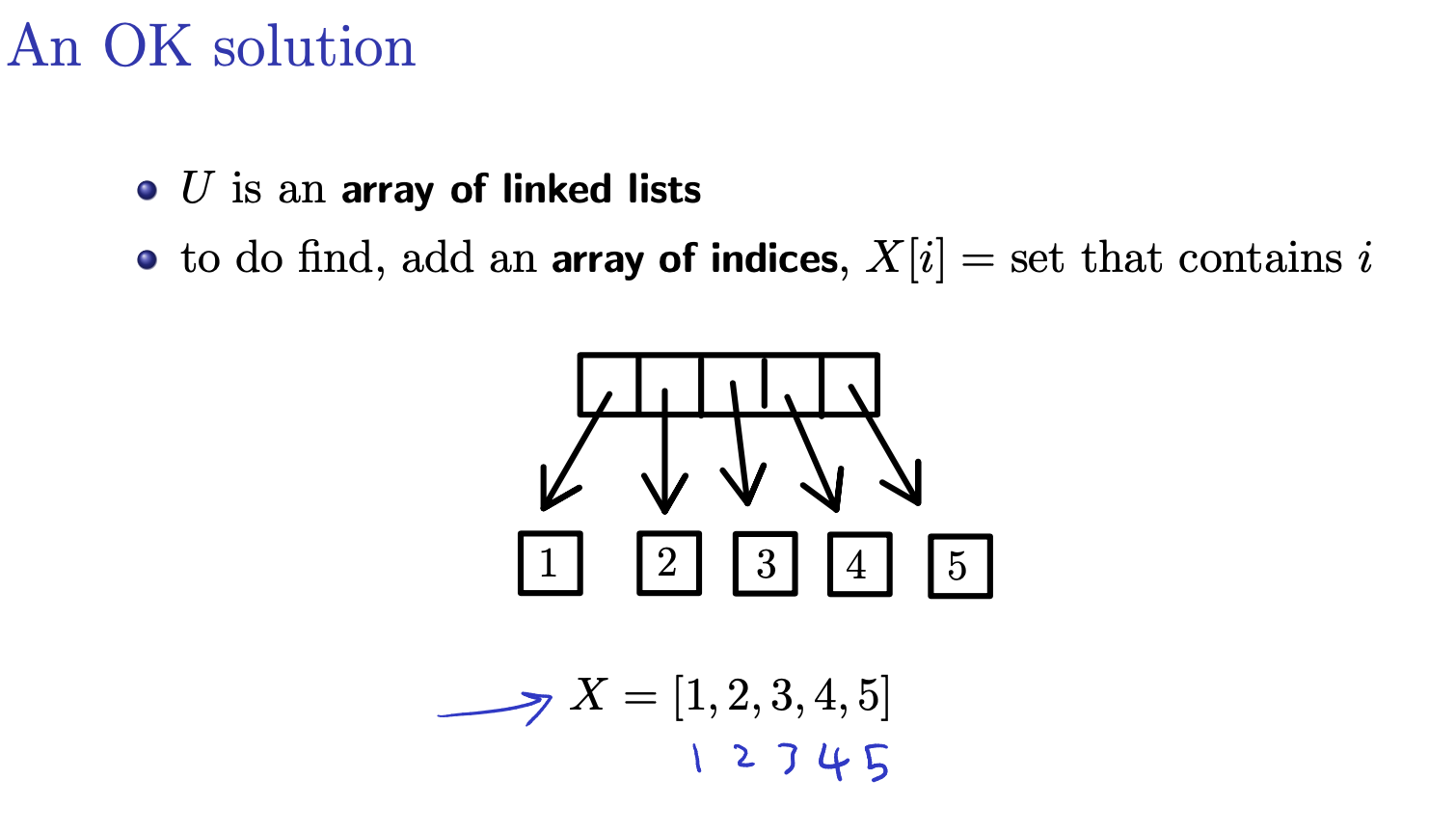

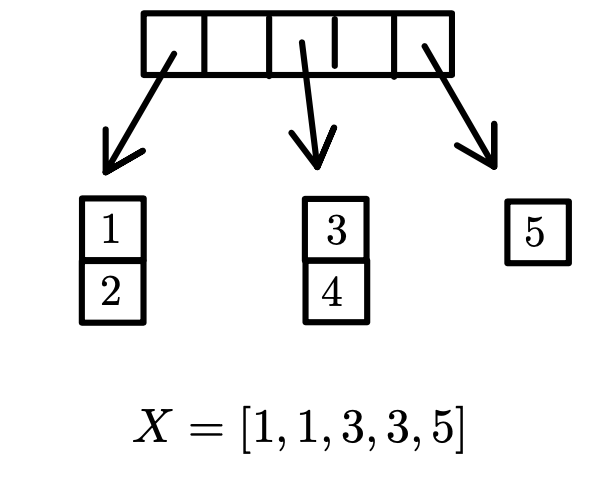

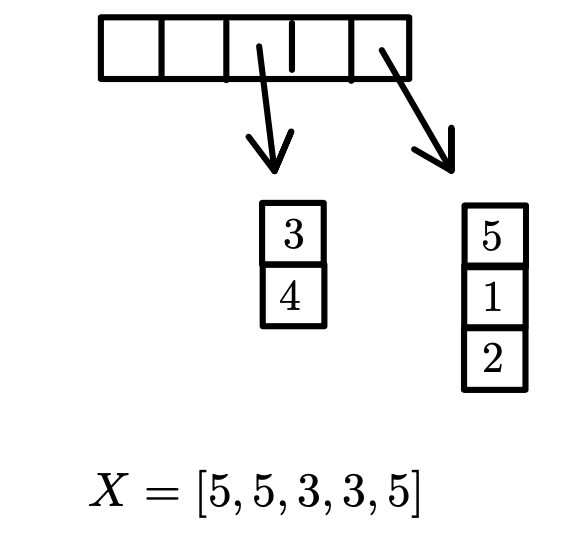

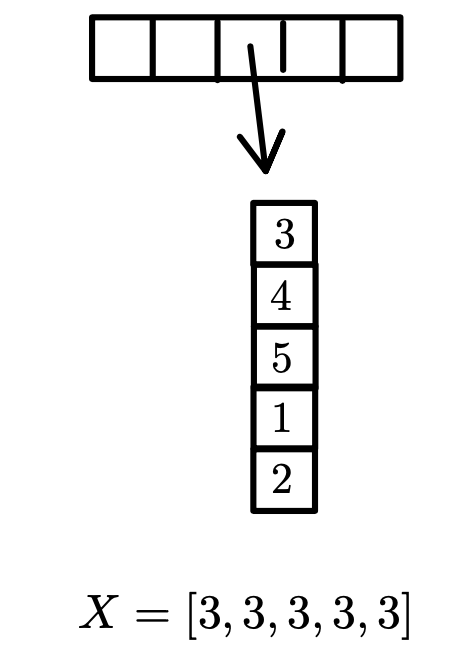

Another slideshow of An OK solution (look in notes):

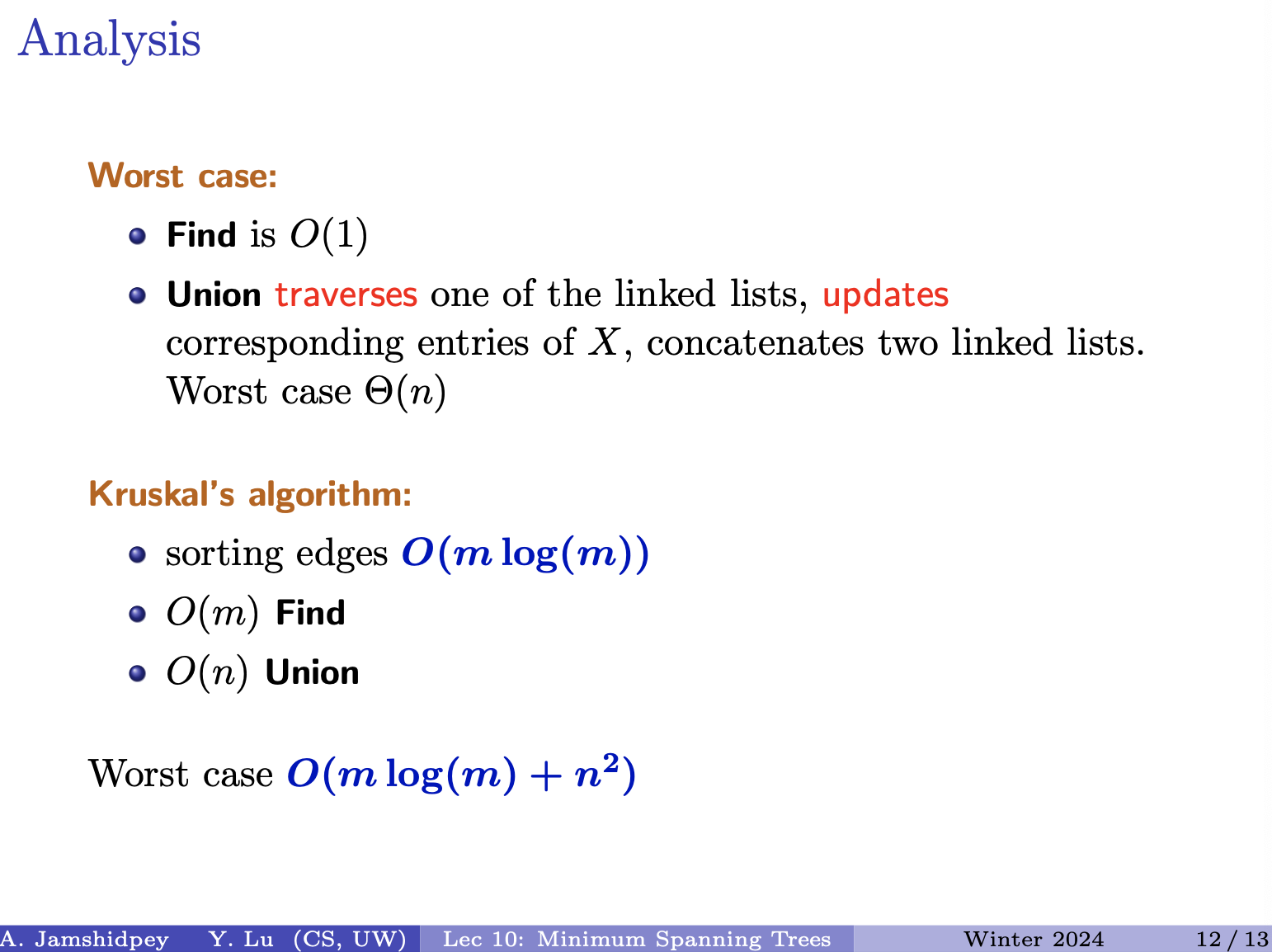

Worst case:

- Find is

- finding the set that contains a given vertex is because it simply requires a constant-time lookup in the array.

- Union traverses one of the linked lists, updates corresponding entries of , concatenates two linked lists. Worst case .

- this operation involves traversing one of the linked lists (which may contain up to elements), updating corresponding entries of , and concatenating two linked lists. In the worst case, takes time.

Krushal’s Algorithm:

- sorting edges

- using an efficient sorting algorithm

- Find

- performed for each edge to determine whether the vertices of the edge belong to different sets. Since the find operation is , total time complexity for find operations in Kruskal’s algorithm is .

- Union

- Union operations are performed whenever two vertices from different sets are found. In the provided implementation, the worst-case time complexity for a single union operation is . In Kruskal’s algorithm, there can be at most union operations (when all vertices are initially in separate sets and are merged one by one). Thus, the total time complexity for union operations in Kruskal’s algorithm is .

Worst case

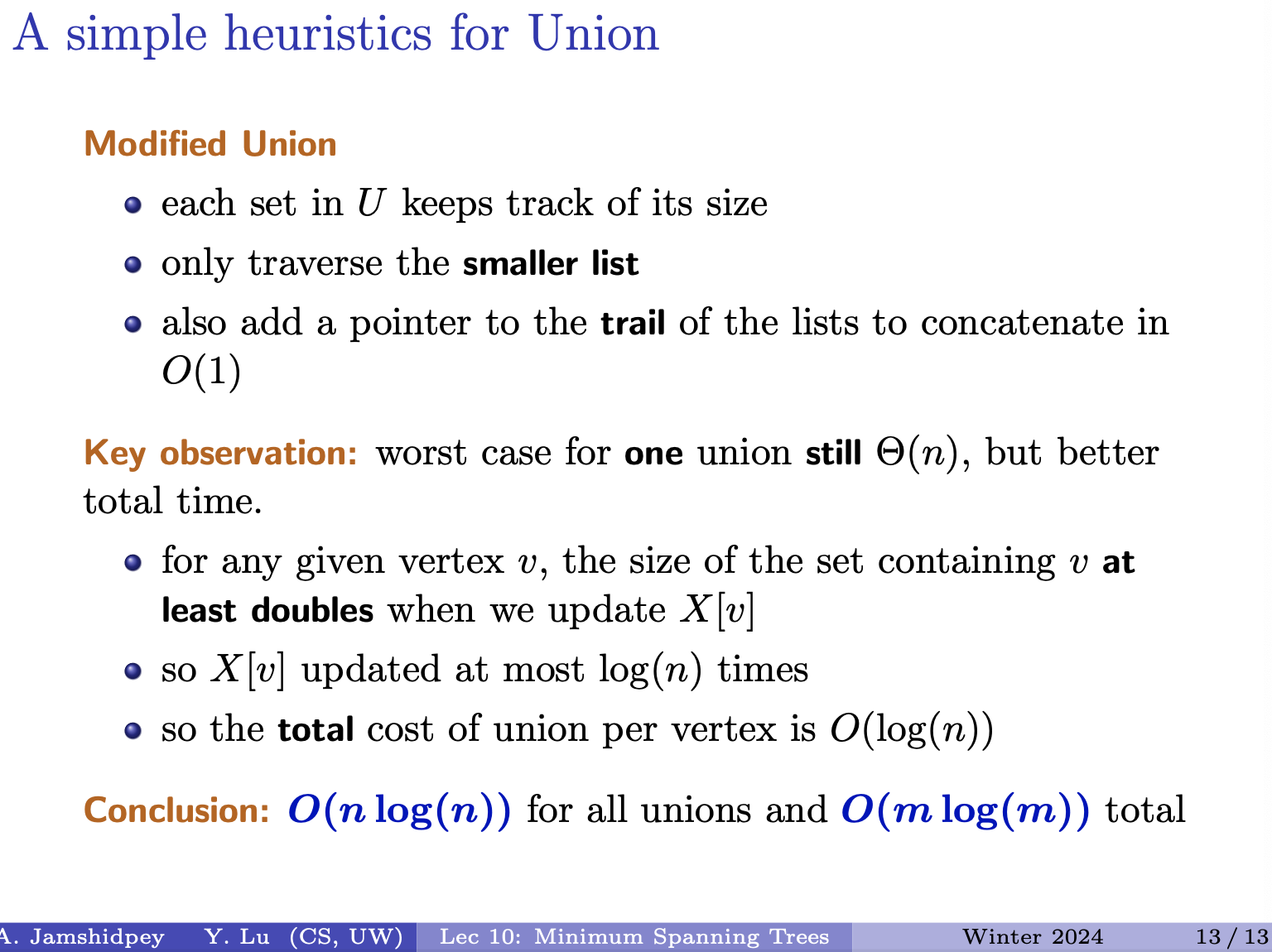

For optimizing the union operation in the disjoint set data structure which improves the overall time complexity of Kruskal’s algorithm.

- Size Tracking: Each set in now keeps track of its size. This allows us to determine which set is smaller and traverse only the smaller list during the union operation.

- Efficient Concatenation: A pointer to the tail of the lists is added to facilitate concatenation in constant time .

Analysis of Improved Version:

- Key Observation: Although the worst-case time complexity for a single union operation remains , the total time is significantly reduced due to the optimization.

- Size Doubling: For any given vertex , the size of the set containing at least doubles when we update during a union operation.

- Frequency of Updates: Since is updated at most times (as the set size doubles), the total cost of union per vertex is .

- Total Time Complexity: With the improved union operation, the total complexity for all unions becomes , considering vertices.

Combining everything: for all unions and total

Thu Feb 29 2024

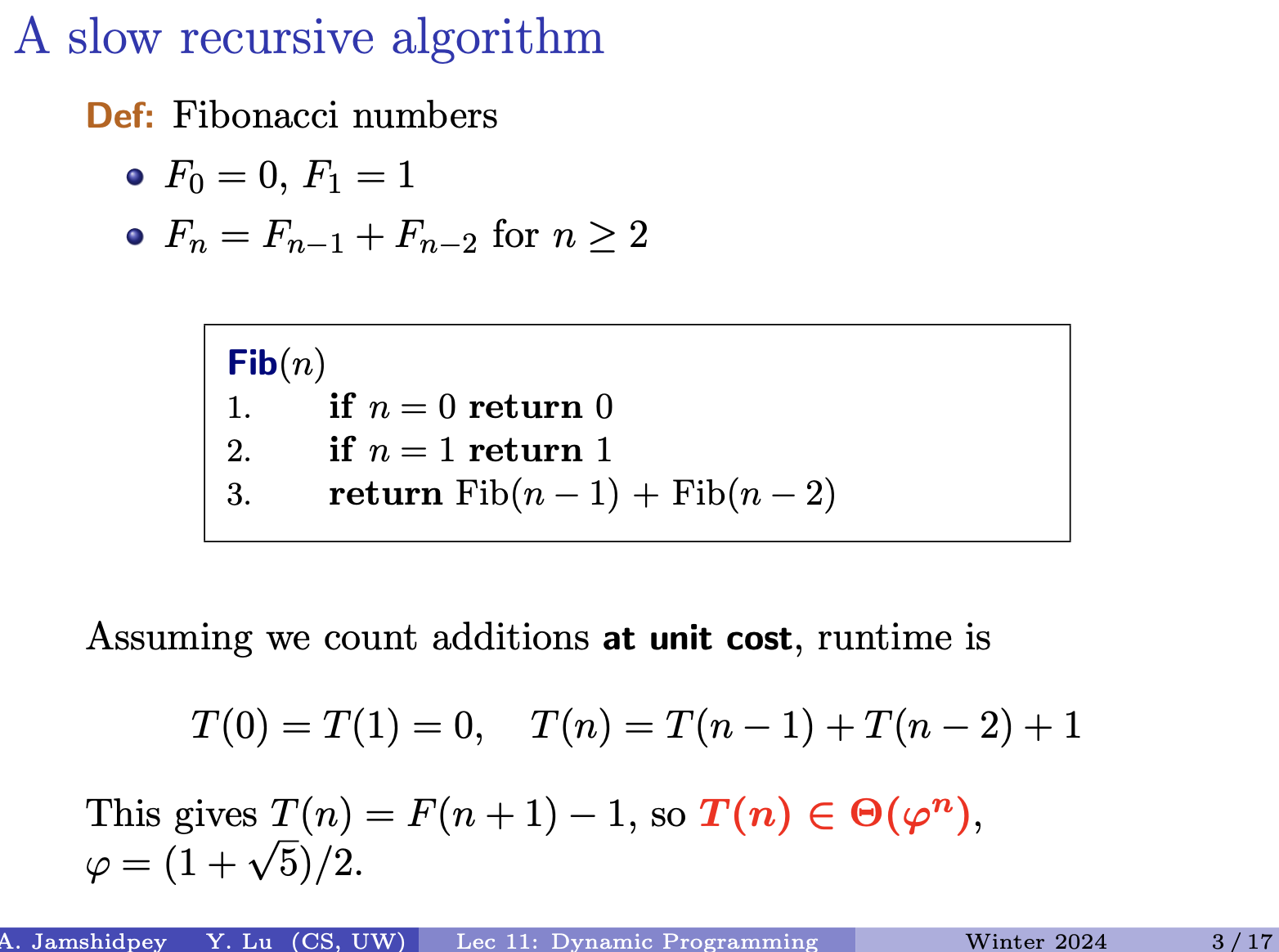

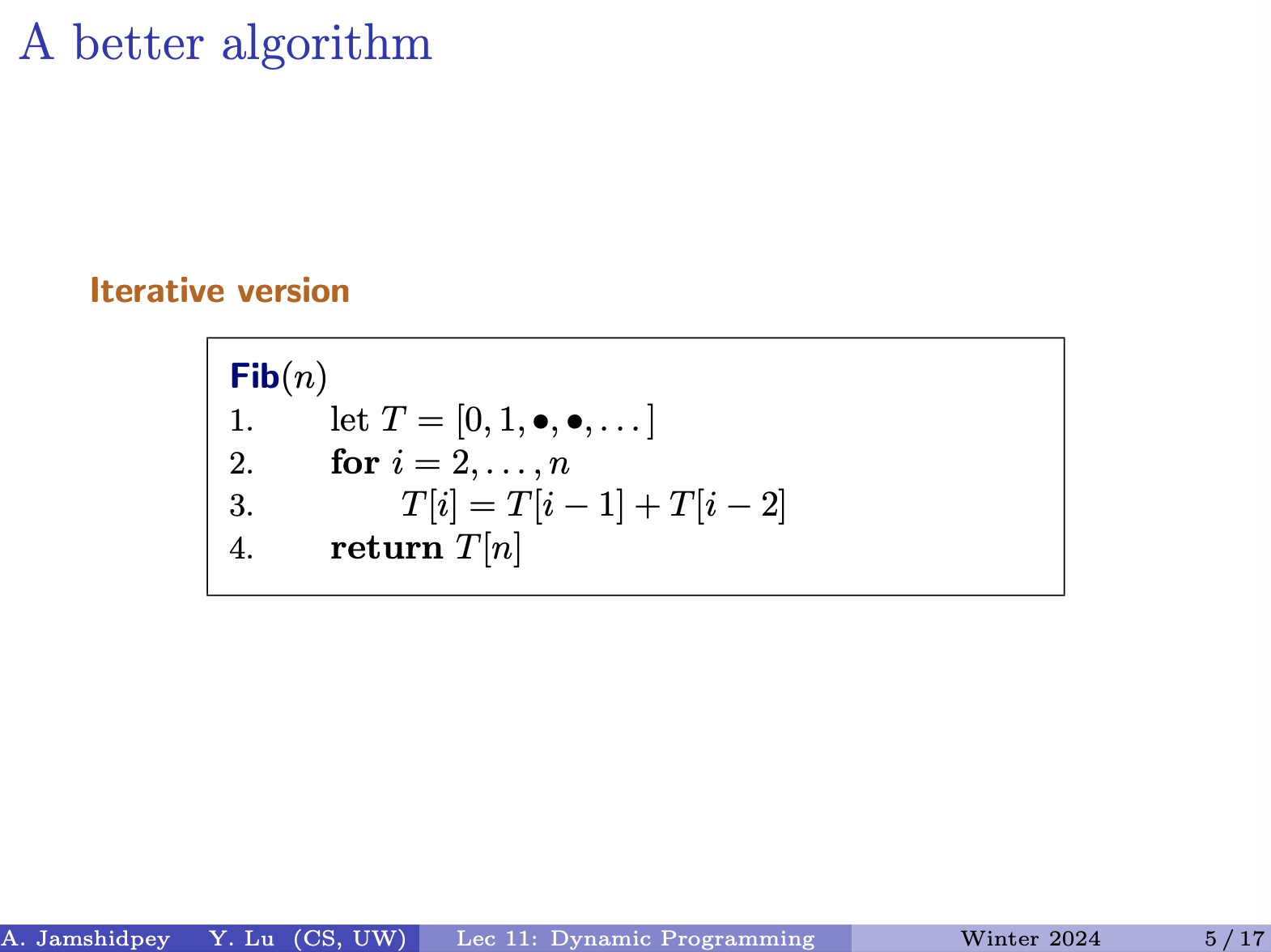

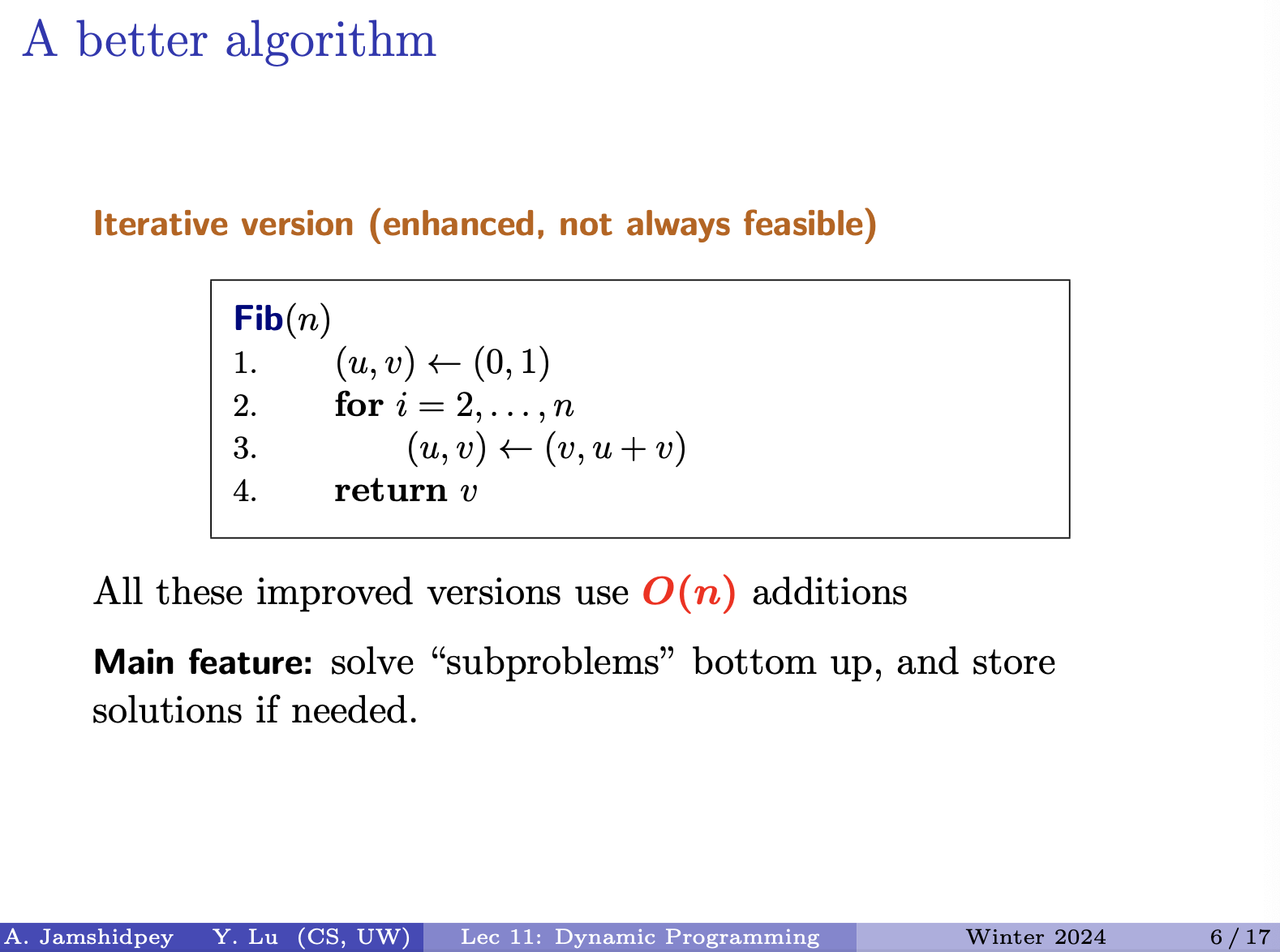

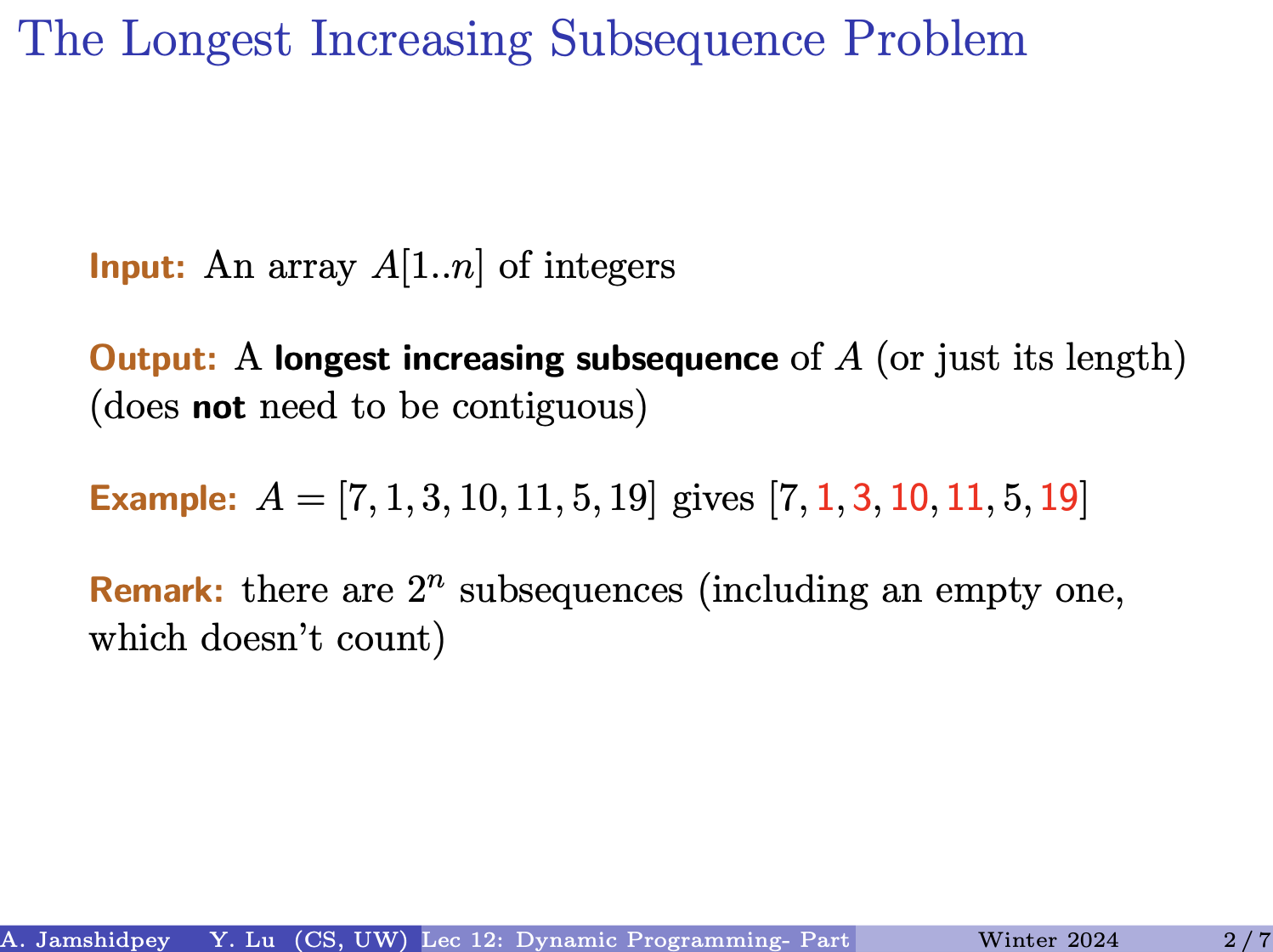

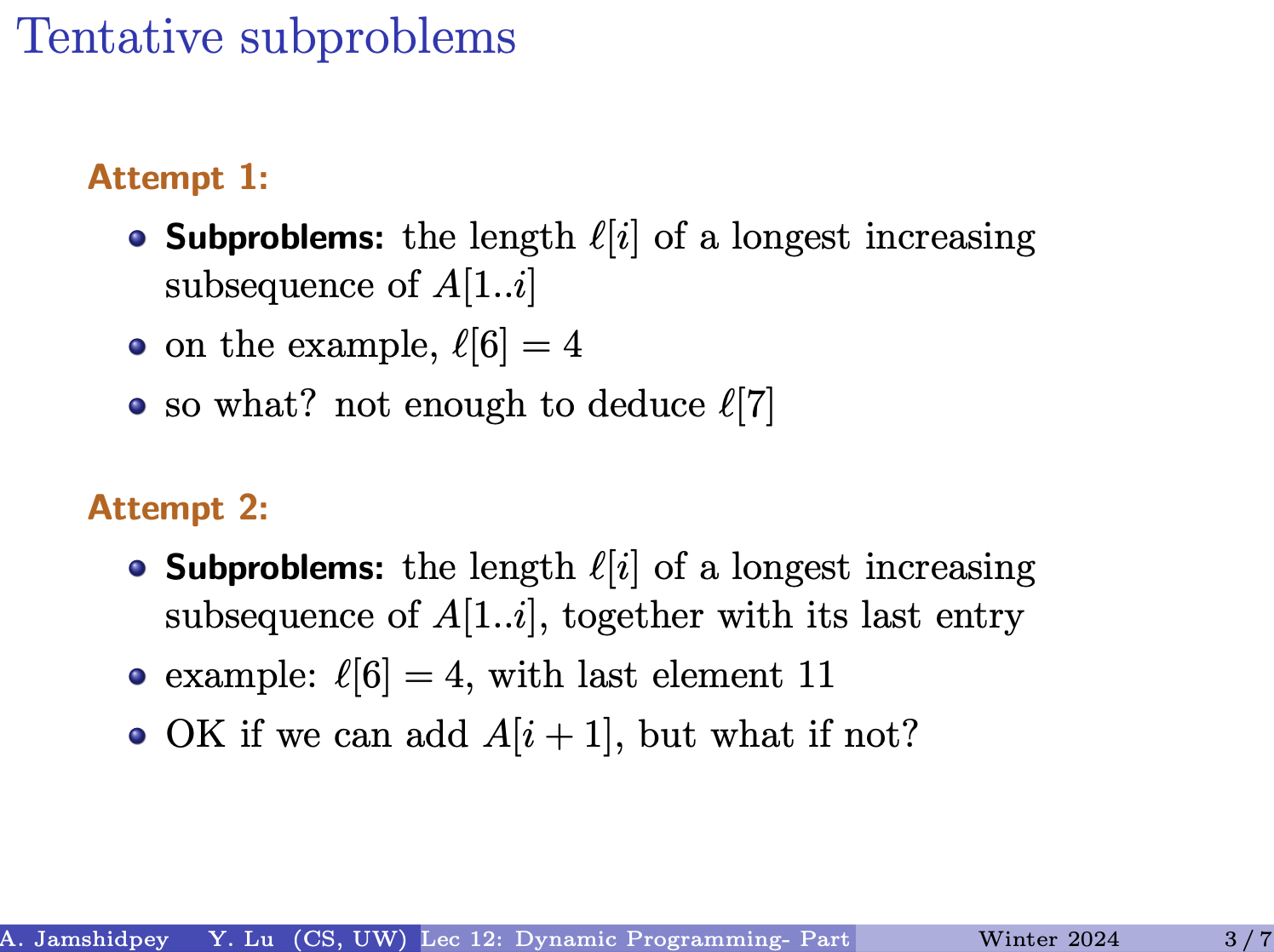

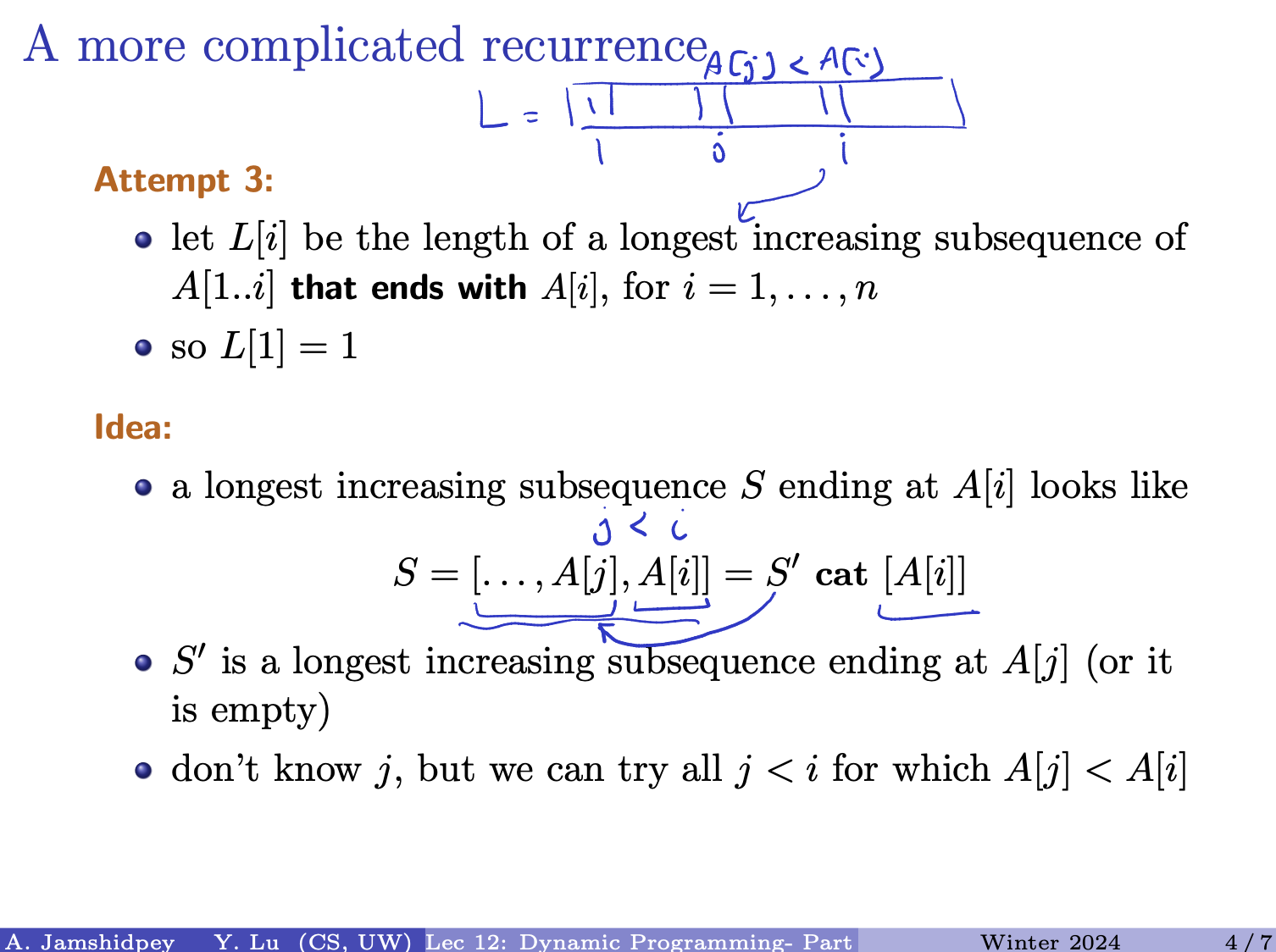

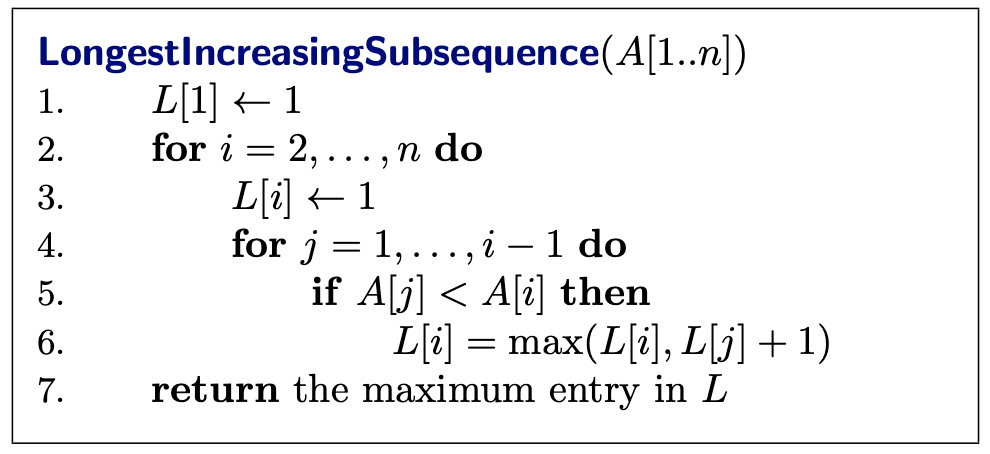

Lecture 11 - Dynamic Programming

Goals: This module: the dynamic programming paradigm through examples

- interval scheduling, longest increasing subsequence, longest common subsequence, etc.

What about the name?

- programming as in decision making

- dynamic because it sounds cool.

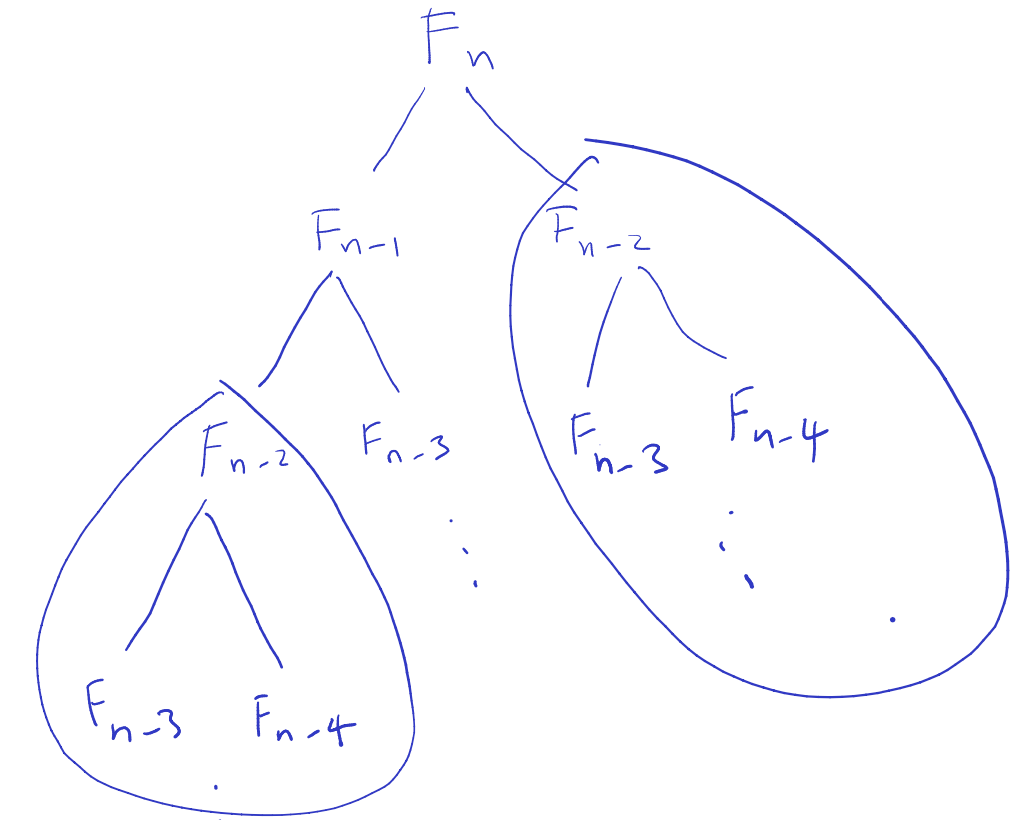

- Runtime that is exponential bigger than 1. He doesn’t look happy.

Insert picture:

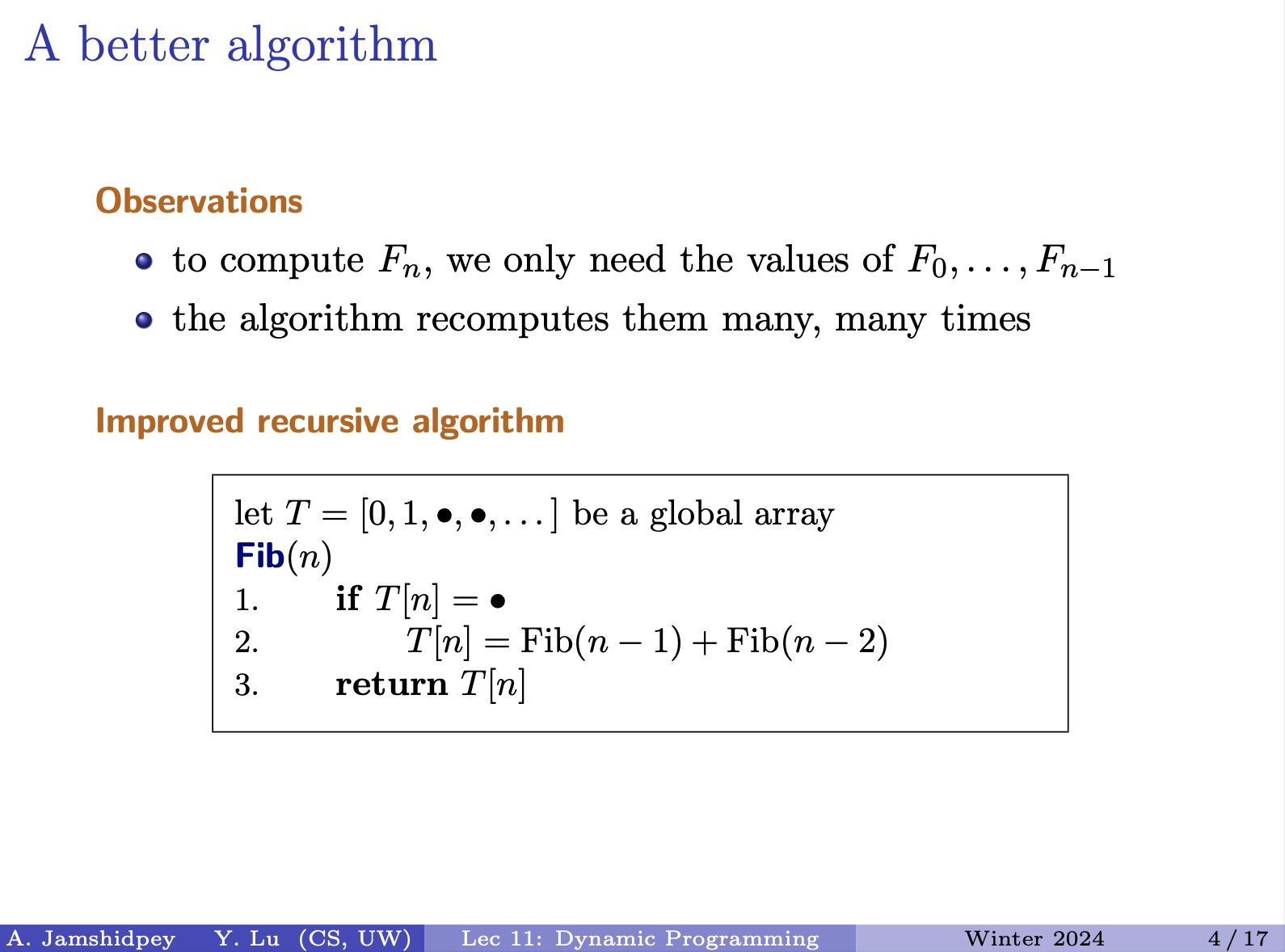

Remember what we’ve computed with an array. This is still a recursive solution. Why start by top of the tree when we can go from bottom of the tree?

Runtime:

For the data structure we will use to store , we just need an array of size (cause we start at .

- In this top-down approach, it uses the “memoization” technique, also known as “top-down dynamic programming”. Where you start by solving the larger problem by breaking it down into the subproblems. Then to avoid redundancy, you keep track of everything in a data structure, in this case an array of already computed numbers (of my subproblems).

How can we improve it? Not necessarily the complexity?

We don’t care about the whole array when we only need the previous two values to compute the next.

- Therefore, we have solved the subproblems from bottom up? What does that actually mean?

From chatgpt "bottom up"

In the context of dynamic programming, “bottom-up” refers to an approach where you start solving the problem from the smallest subproblems and gradually build up solutions to larger subproblems until you reach the desired solution.

In summary

- Bottom-up dynamic programming starts from the smallest subproblems and builds up solutions to larger subproblems iteratively.

- Top-down dynamic programming starts from the largest problem and breaks it down into smaller subproblems, recursively solving them while storing solutions to avoid redundant computations.

We just need an array to store stuff. No need of a hash-map. What would be the size of the array. What about ?

: so to compute the value at position we add up the values at and . What kind of relation is this?

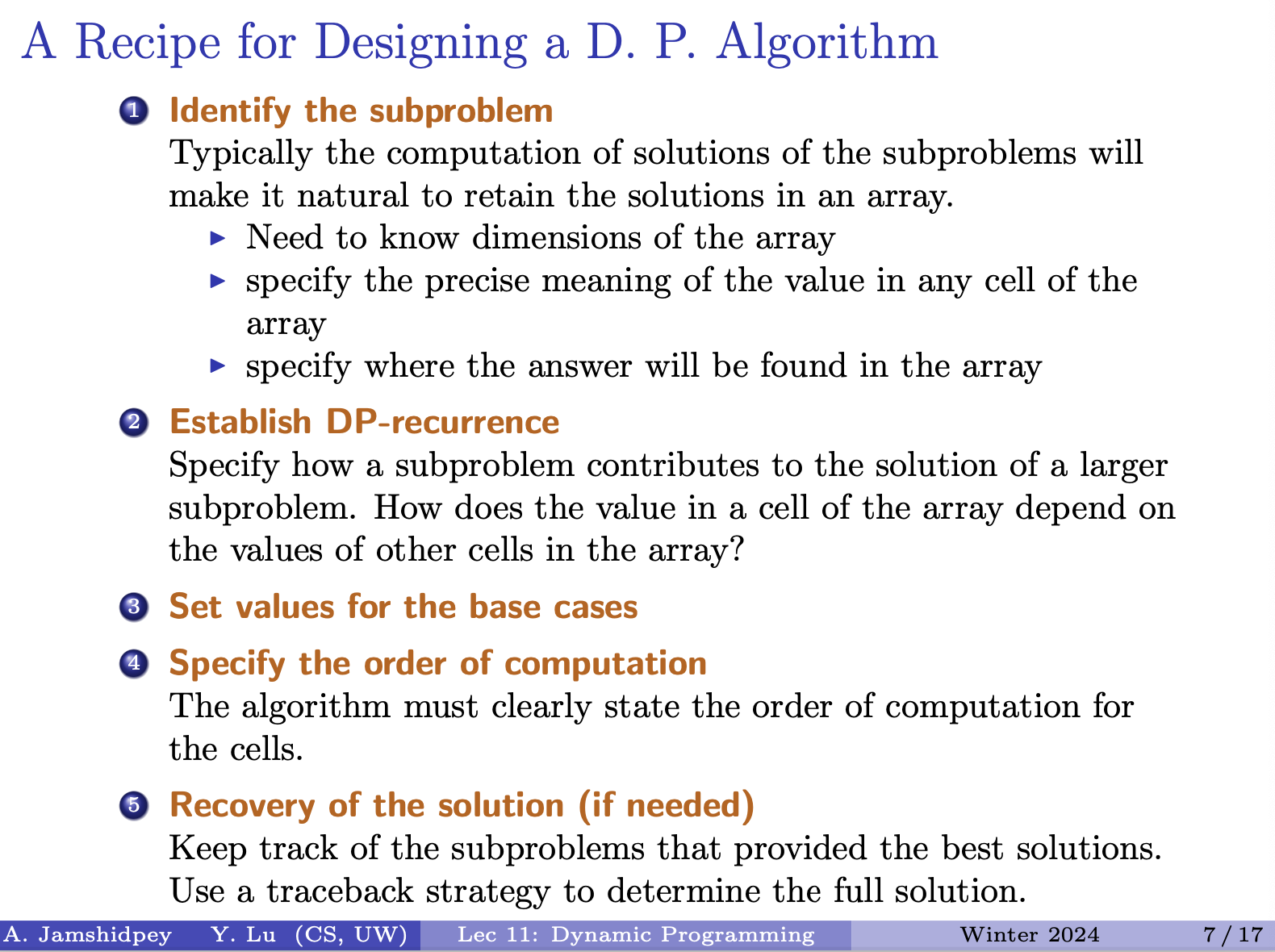

Came up with a plan, identified the subproblems. Need a place to keep the information. Use an array, size and we know how to compute , then we tell it how to solve it.

Prof doesn’t like this slide:

Use your creativity instead of following this recipe. - Armin

Freedom comes with a cost. Any good things come with a cost - Armin

From Matthew’s notes:

- The prof mentioned that you often might have the proof of correctness embedded into your description of the algorithm, since you often need to do a lot of justification to make the recurrence relation between your subproblems / for your algorithm to make sense.

- The prof said that in this course, point #5 is always needed. He said that in the following examples, what he is talking about will become apparent / will make sense.

- In this course, the prof said that if he asks you to do DP, you must do things iteratively, and not recursively.

Be precise on the exam, what are your subproblems, and HOW you can find the solution in the array. Then come up with a recurrence.

Recovering of the solution it is needed in this course for exams and assignments (although the slide says if needed).

In this course, when they asked dynamic programming, do it iteratively not recursively!

Dynamic Programming

Key features

- solve problems through recursion

- use a small (polynomial) number of nested subproblems

- may have to store results for all subproblems

- can often be turned into one (or more) loops

Dynamic programming vs. divide-and-conquer (PROF DOESN’T CARE ABOUT DIFFERENCES)

- dynamic programming usually deals with all input sizes

- Does not go through all the subproblems in divide-and-conquer

- DAC may not solve “subproblems”

- DAC algorithms not easy to rewrite iteratively

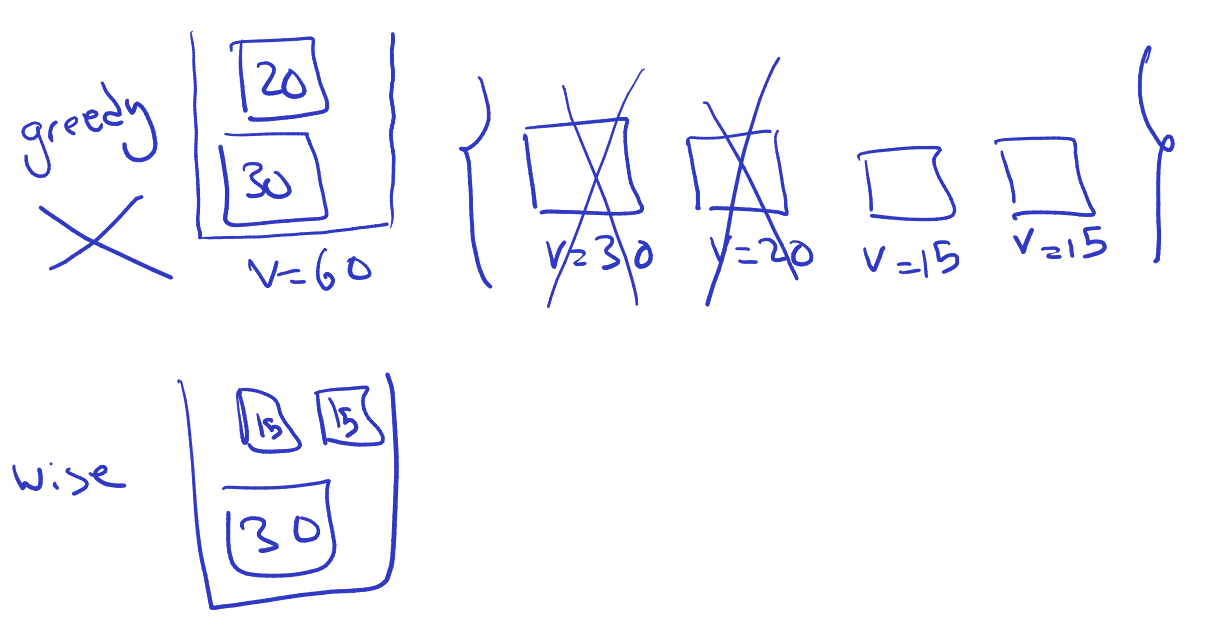

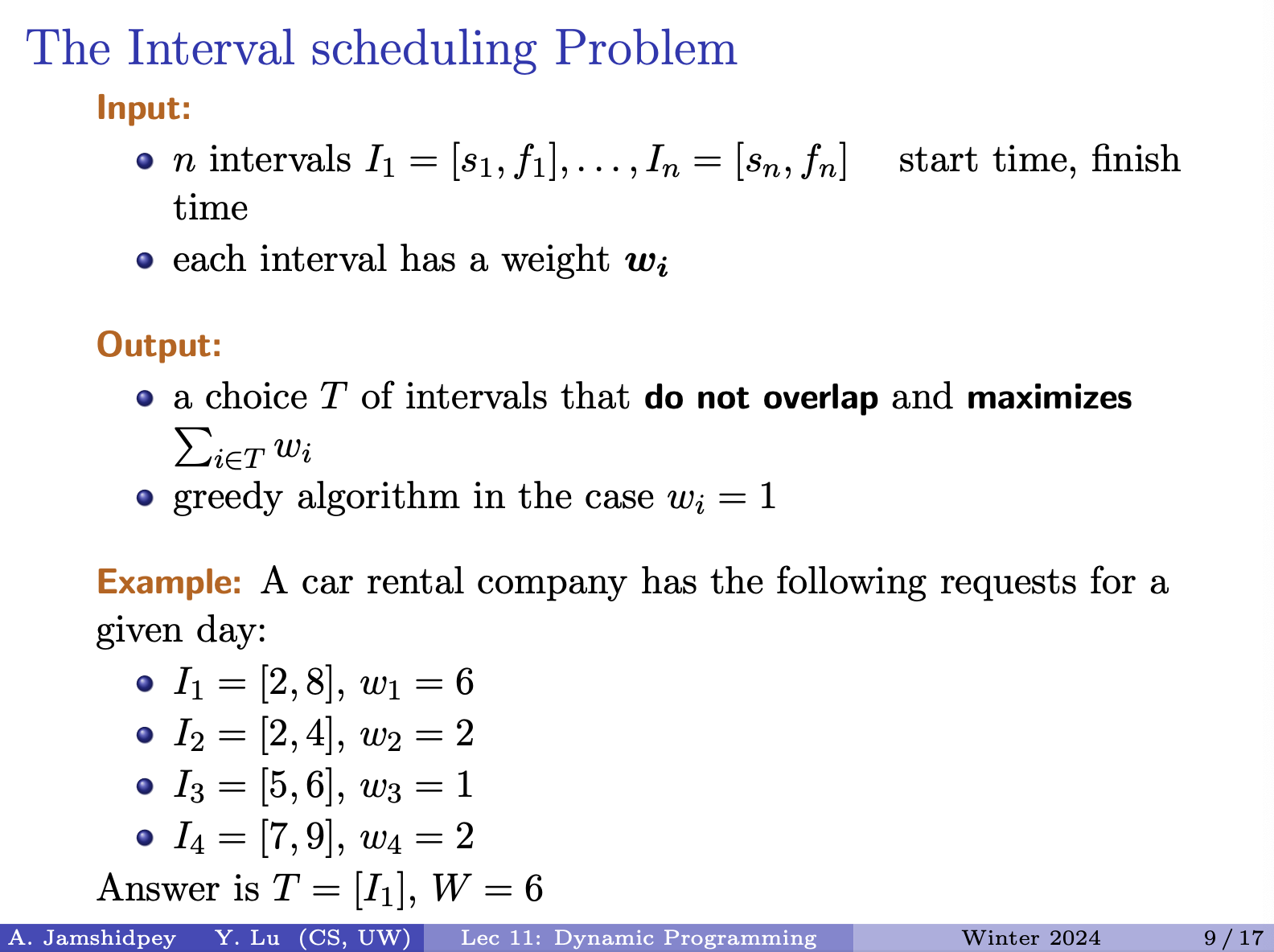

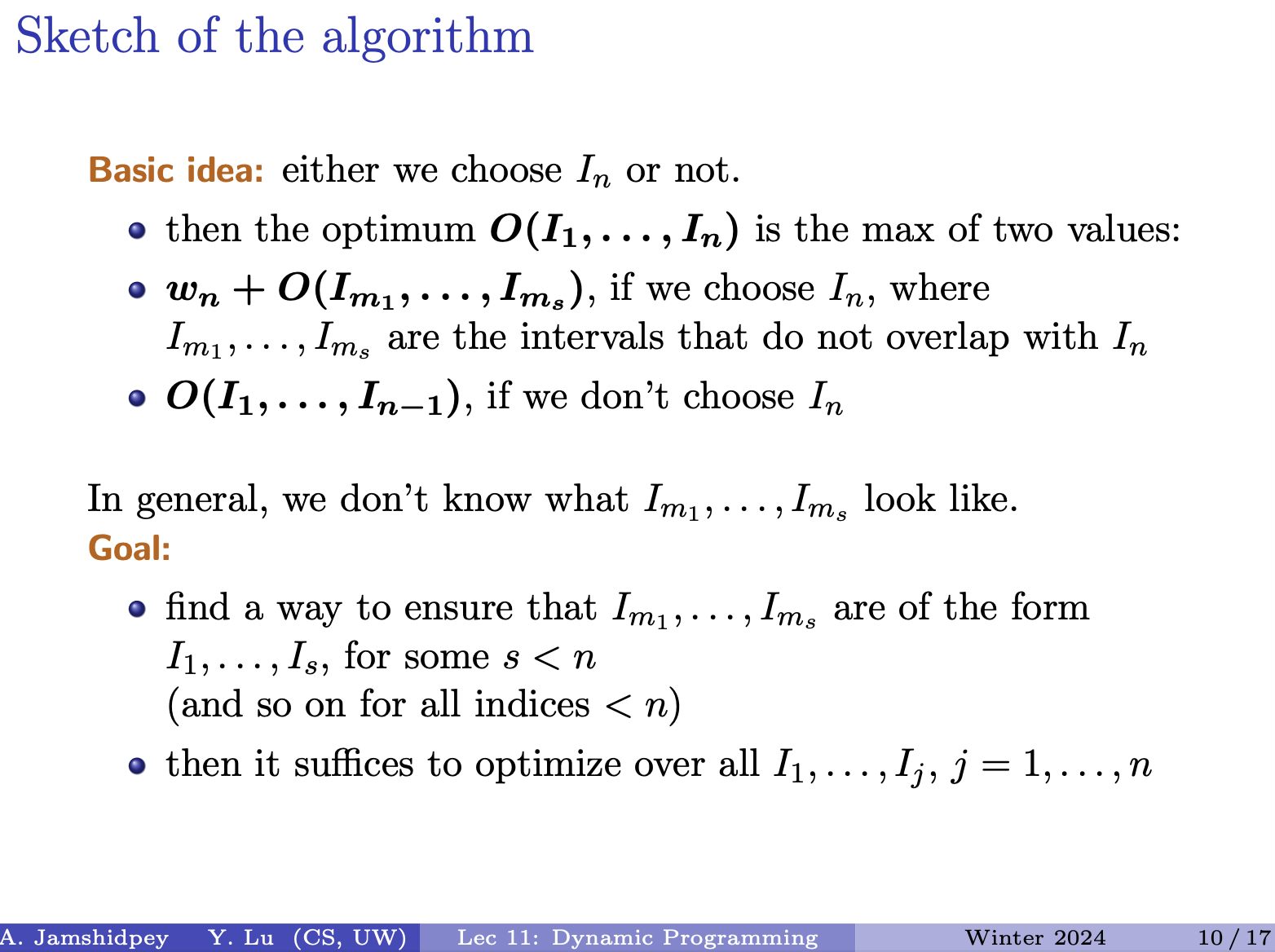

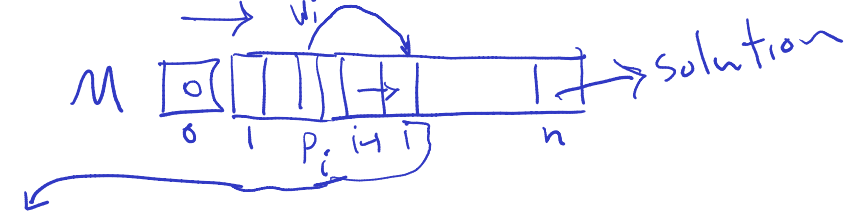

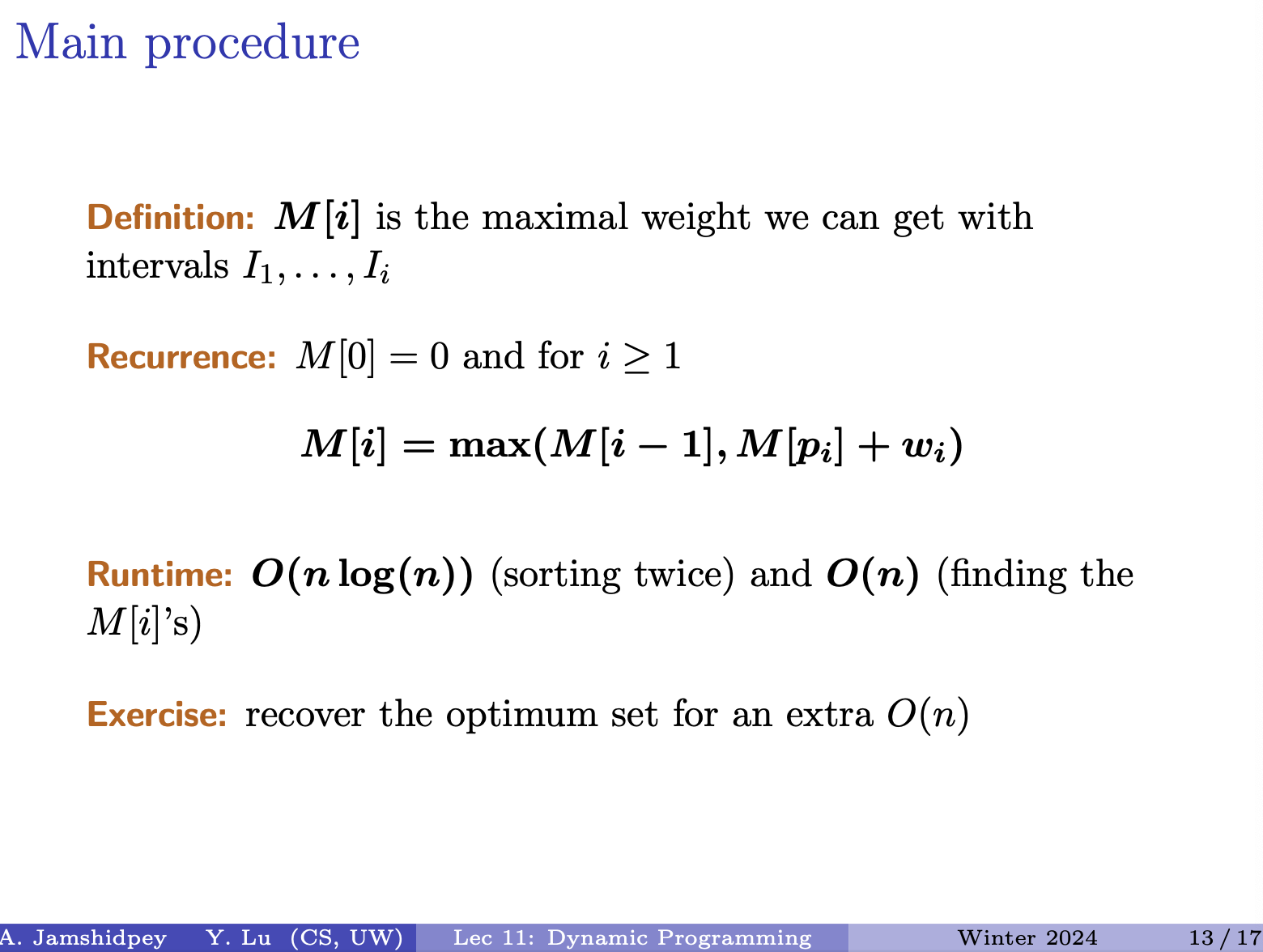

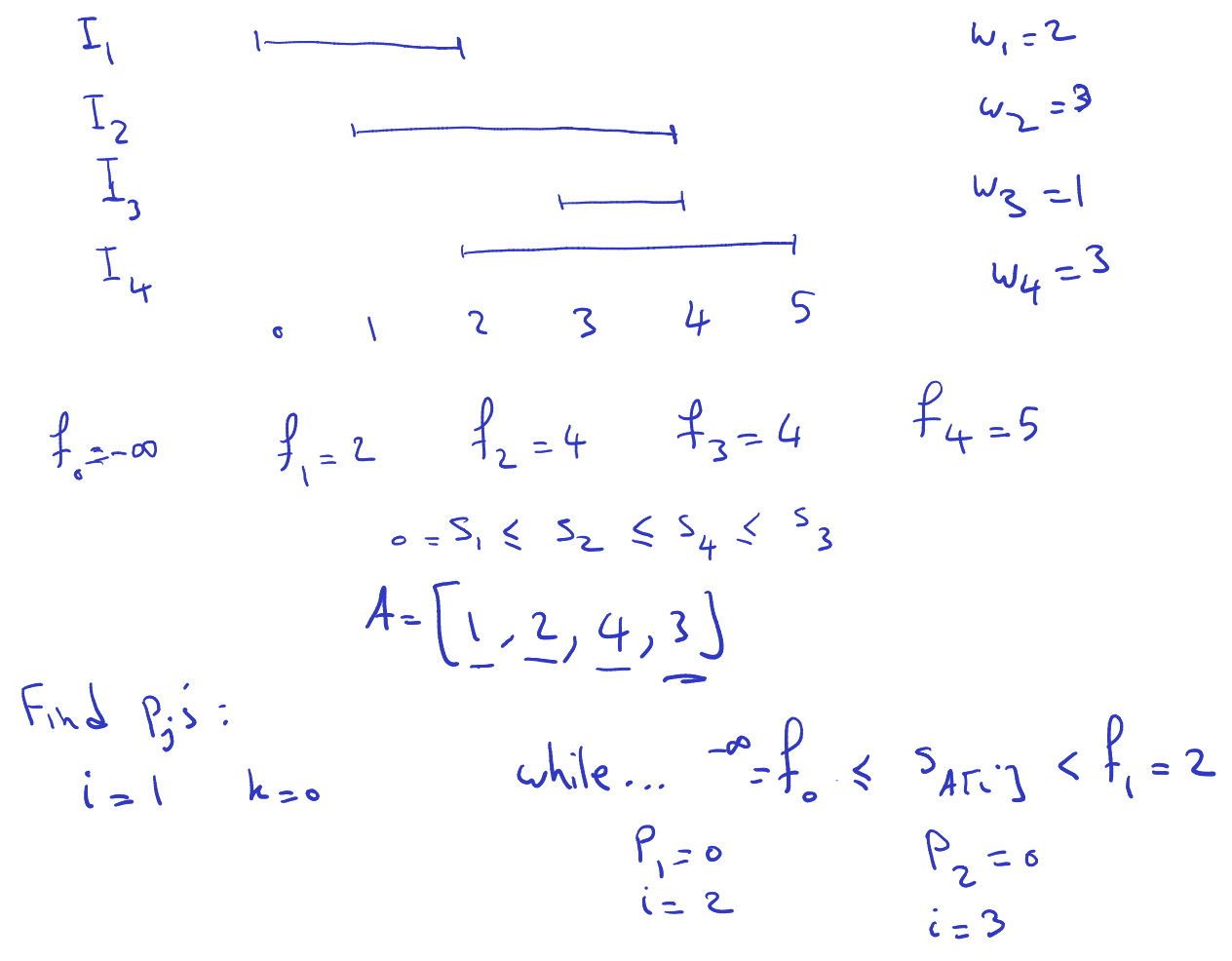

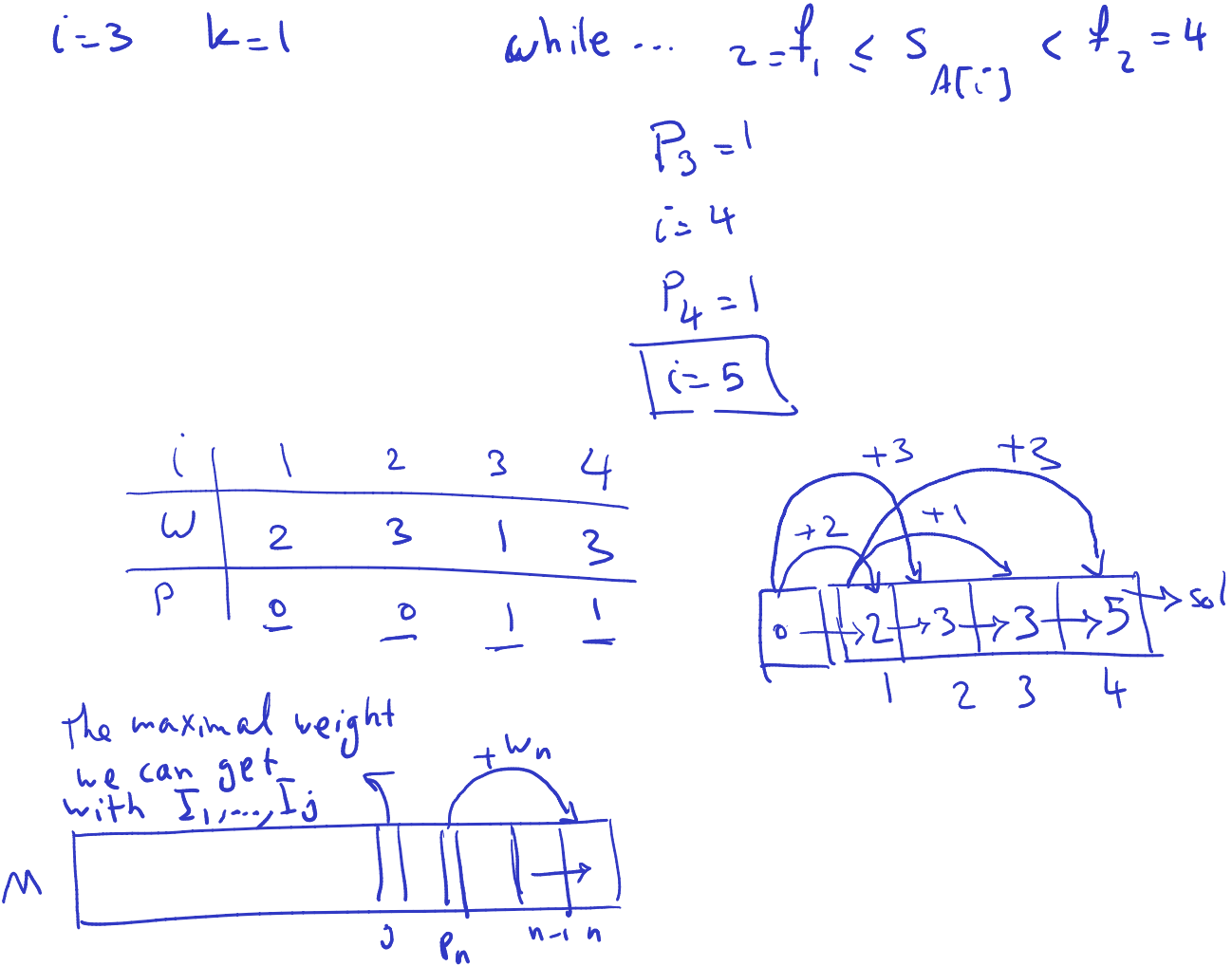

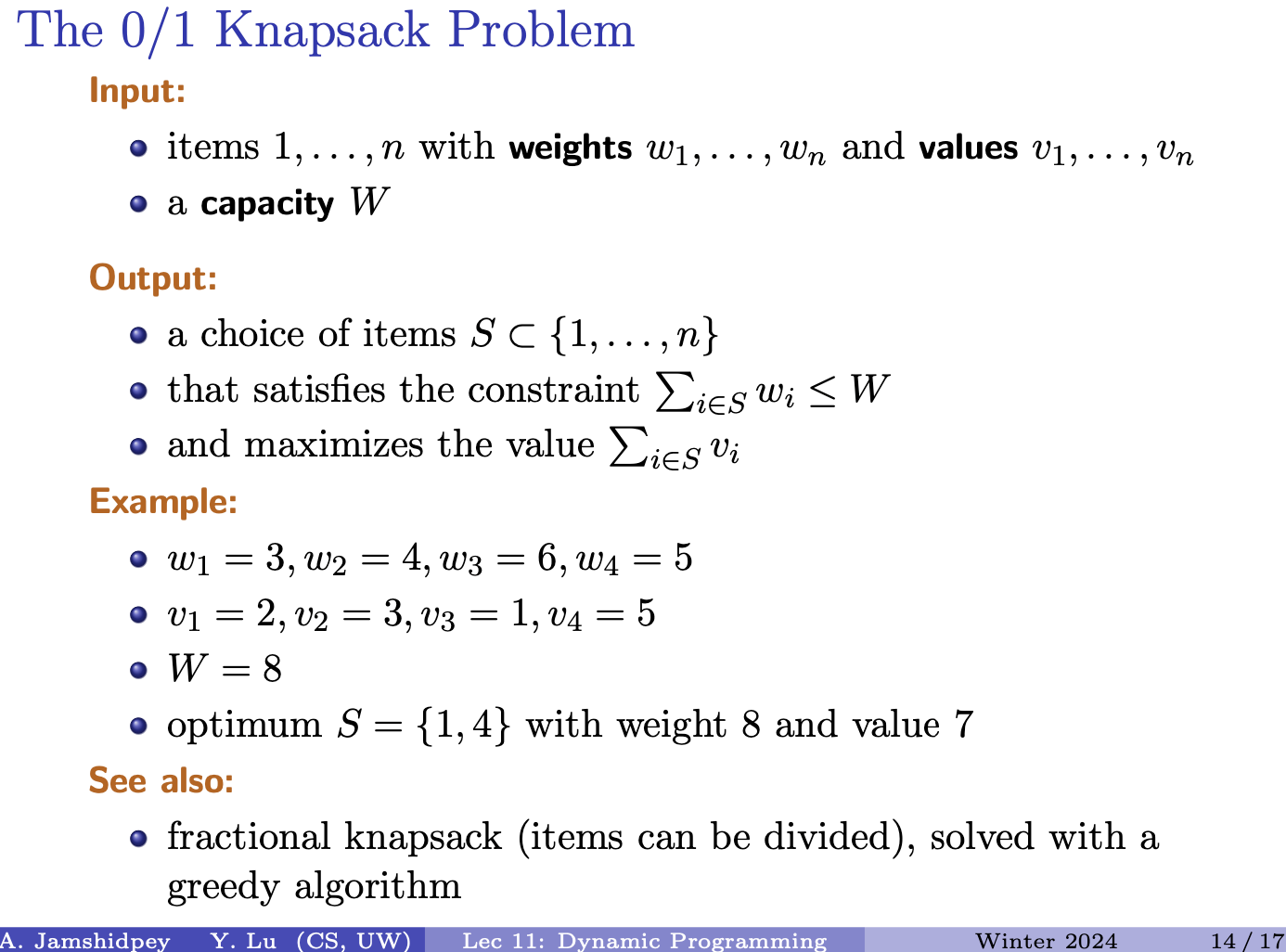

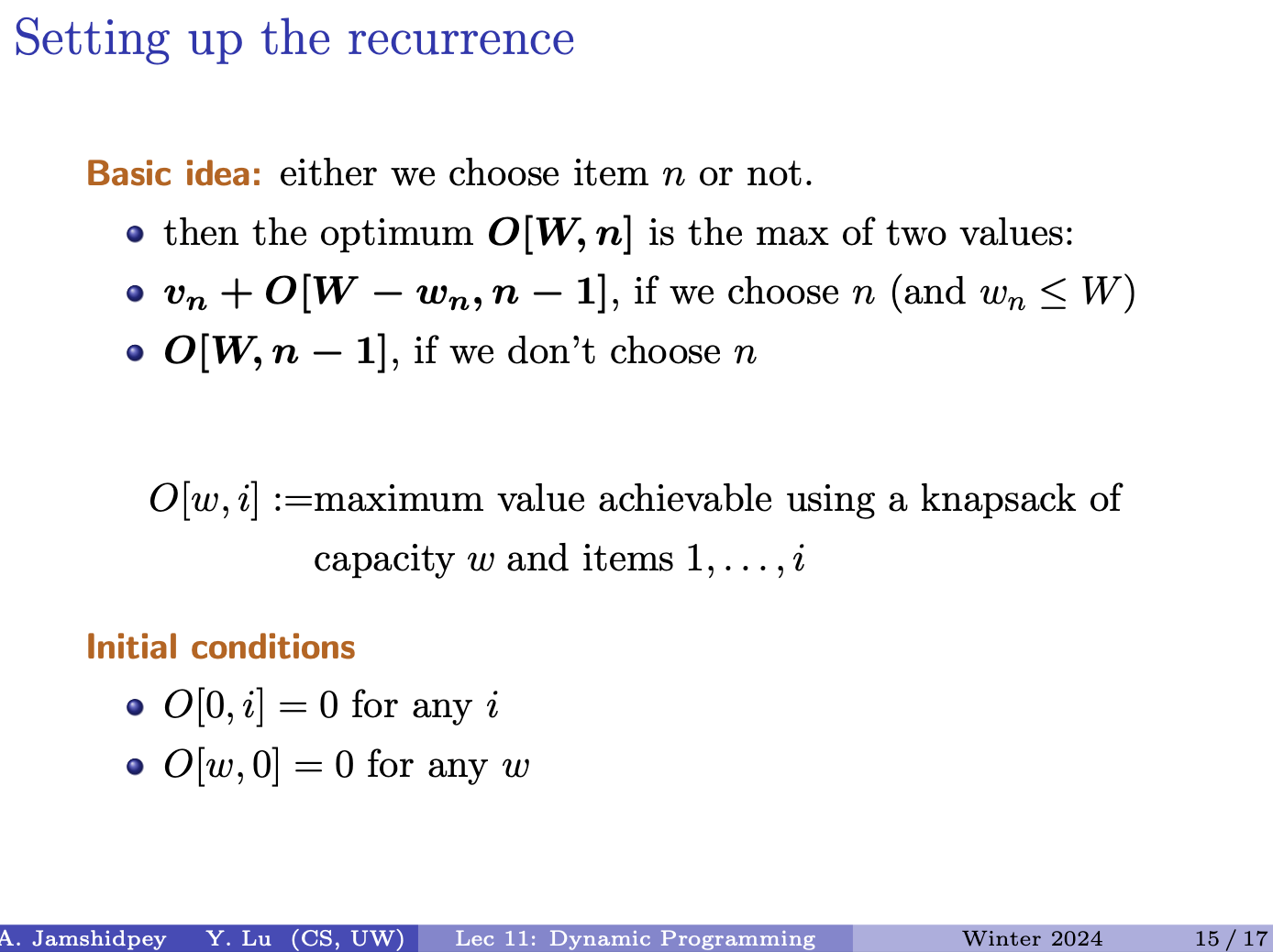

- We want the weight of the sum of the intervals to be maximized. dynamic programming solves this problem.

- We did the greedy algorithm for it before, but that only works for when the weight of each interval is 0.

- What is the input and output. Input is the number of intervals and output intervals that do not overlap and maximizes the weight.

Insert picture: second is solution not containing

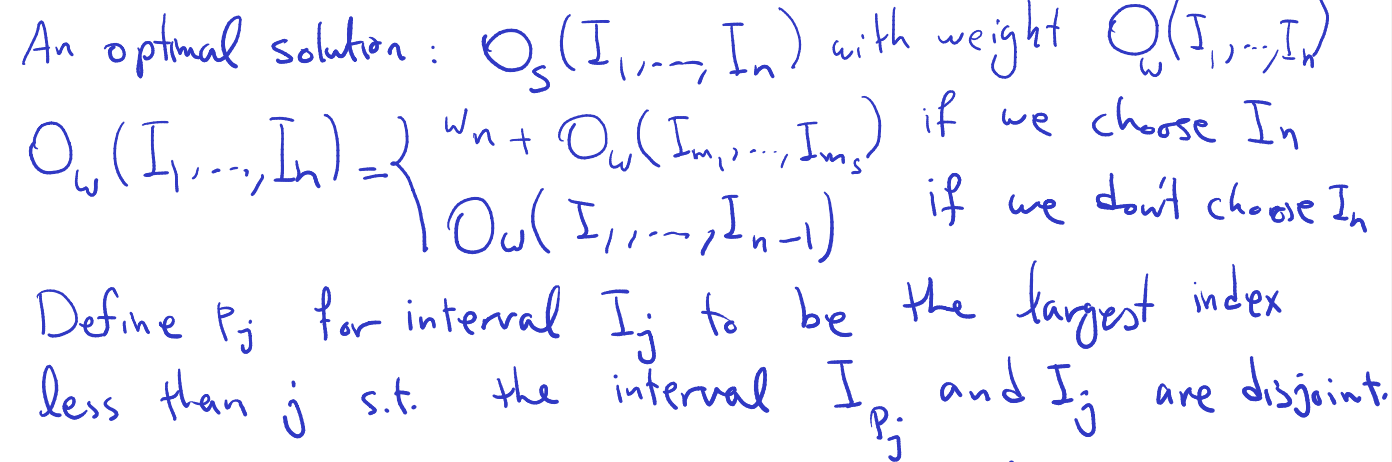

An optimal solution: with weight

- The s in the brackets which is the optimal solution, are possible candidates contributing to the optimal solution

- But we don’t know the indices of these items!

Plan to figure out the intervals that play a role in our optimal solution:

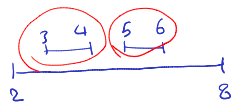

- sorting based on their finishing time. first finishing will have 1, then 2, etc.

There are two options: either we choose or we don’t choose .

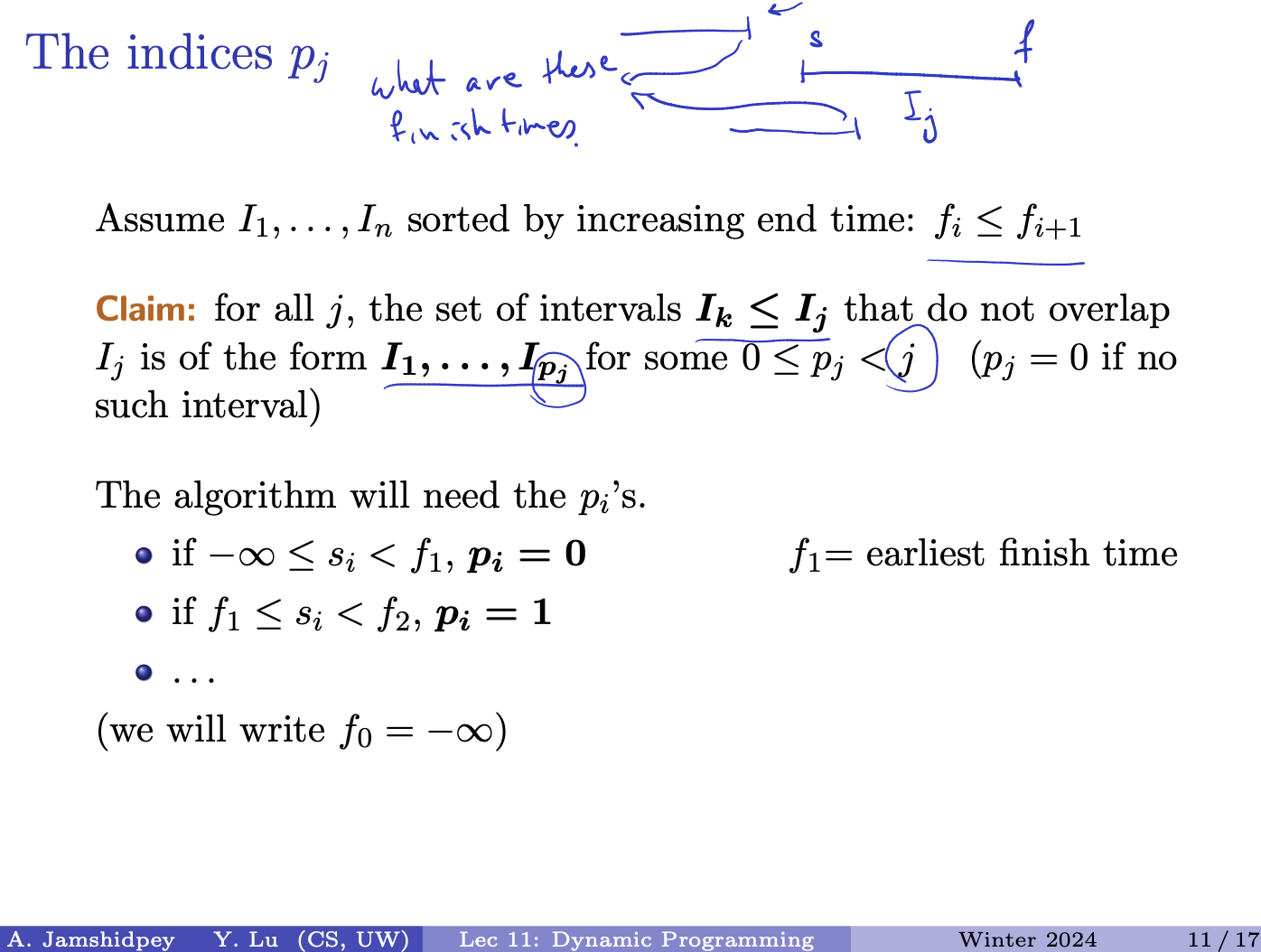

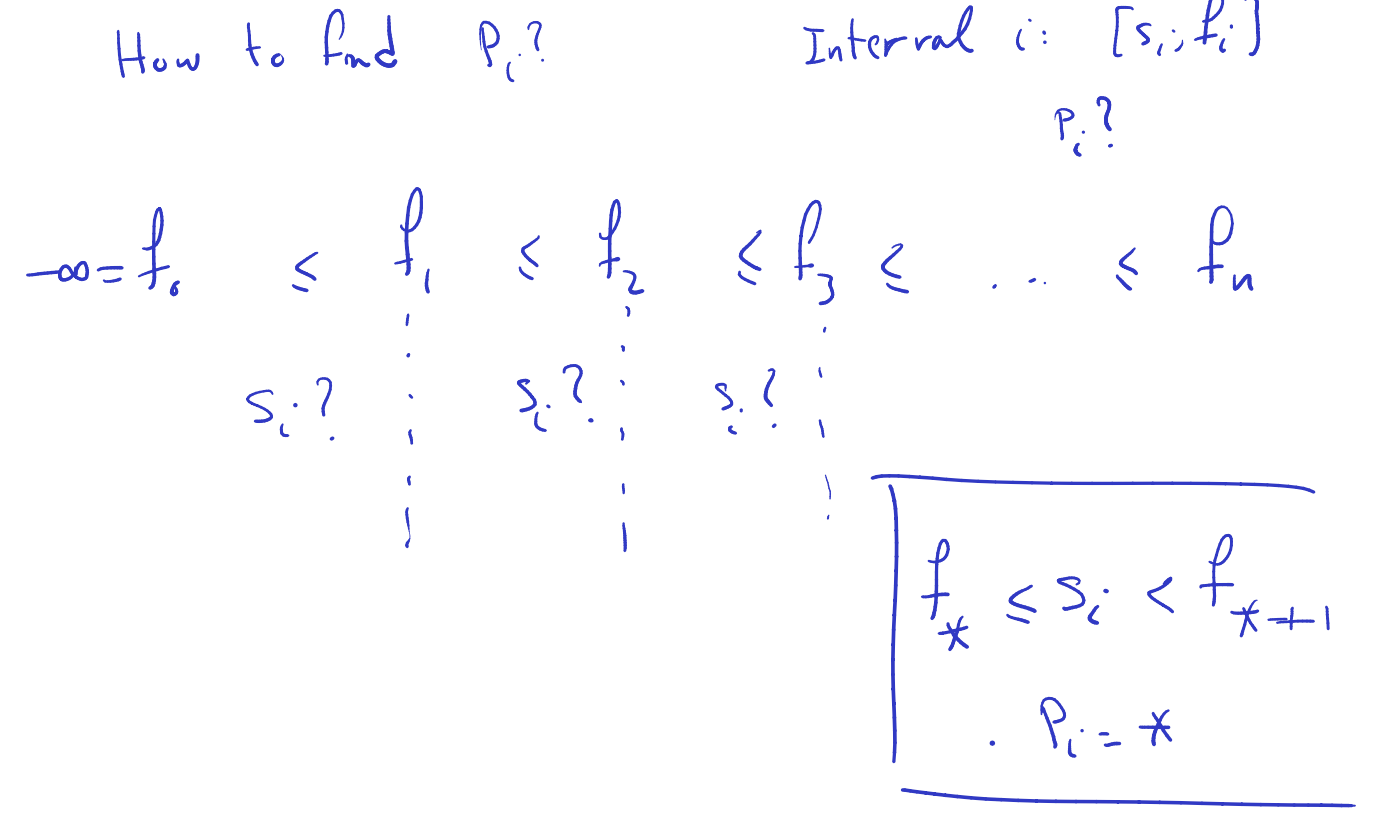

Define for interval to be the largest index less than such that the interval are disjoint. (so the next interval will have interval between those two.)to-understand

- O(…) here has nothing to do with Big-O, it is just used to show “the optimal solution that involves them”. So, the things inside the brackets are things that could be in the optimal solution, but they don’t have to be. The solution will just be a subset of them.

The idea:

- Sort the finishing time in non-decreasing order

- Initialize empty set T to store the selected intervals

- What exactly is over here?

- all the intervals that don’t overlap with the j’s Interval. Of the form

- And the ‘s? Oh maybe some sort of numbering for the intervals that belongs in between the actual Intervals depending on the finishing time of the interval. So we, that is < then . Then in this case , since it’s technically the first one.

- You somehow use to find the

- seems to be a numbering system for intervals based on their finishing times. helps in determining the index of the previous non-overlapping interval

and : represents the largest index less than such that the interval is disjoint from . This means that identifies the last interval before that doesn’t overlap with . As for , it seems to be a numbering system for intervals based on their finishing times. It helps in determining the index of the previous non-overlapping interval.

- How can I find ?

- (Interval )

- ?

- do for all the , but don’t sort because we don’t want to scramble the finish times we’ve sorted

= sorting permutation for the array of s’s

- He said that finding the sorting permutation can be found in (same time as sorting)

- Go through smallest and move forward checking for the next one.

- I will never go back in computation

- refers to the indexes of

- cost: we sort once to get A, and loop cost is linear. And we are always moving forward.

Runtime: sorting and loops

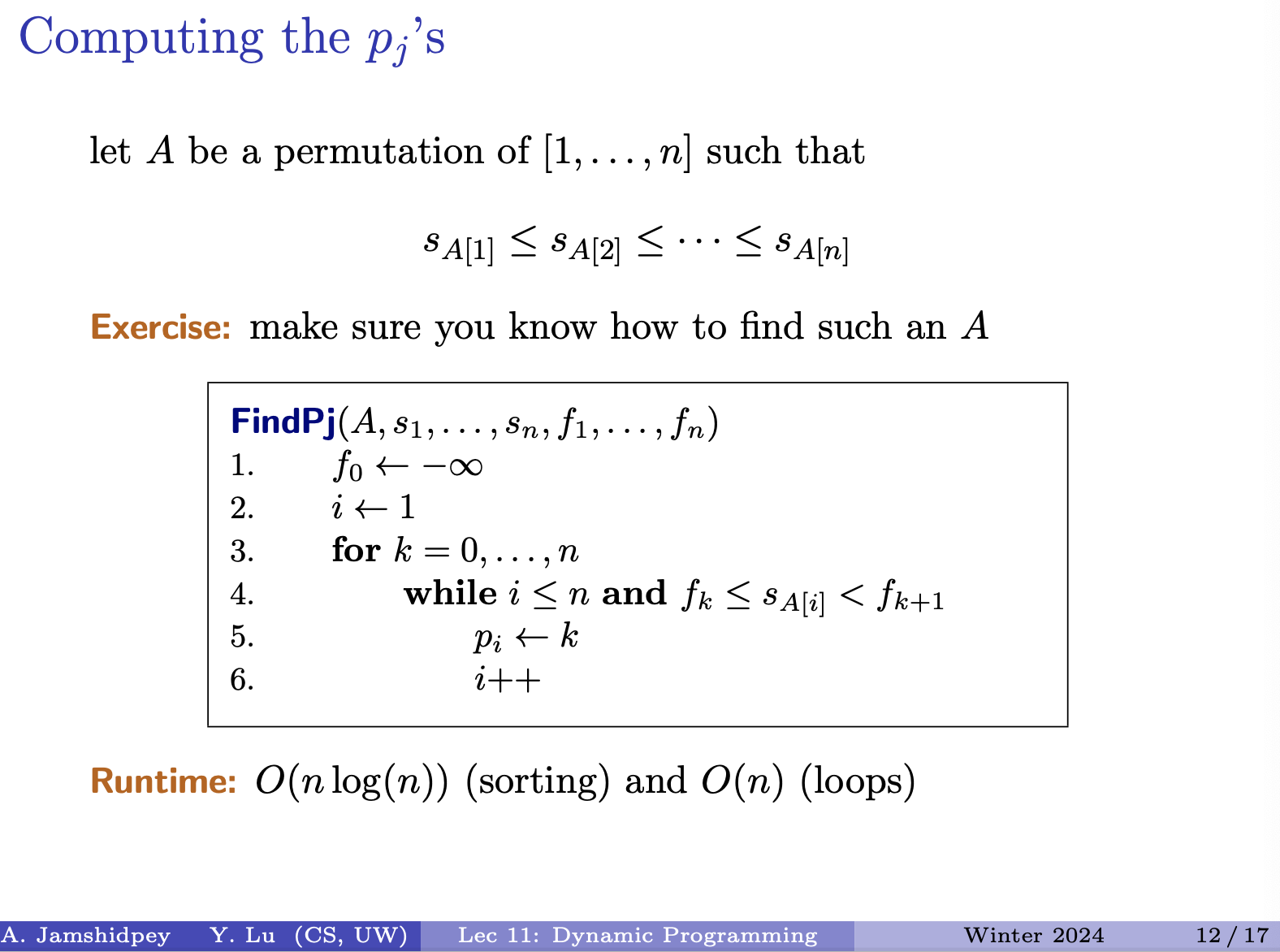

The algorithm outlined is for computing the values of which represent the largest index less than such that the interval does not overlap with . Here’s a breakdown of how the algorithm works:e

- Begin by initializing to and set to 1.

- Iterate over each index from 0 to .

- While is less than or equal to and is less than or equal to (the starting time of the -th interval in the sorted order), and is strictly less than , set to and increment .

- Continue this process until either all intervals are considered or the condition is not met.

- The resulting values will indicate the largest index less than such that the intervals at those indices do not overlap with the -th interval.

The runtime of this algorithm is dominated by the sorting step, which is , and the subsequent loop, which is . Therefore, the overall runtime complexity is , which simplifies to . This makes the algorithm efficient for computing the values for the intervals.

Note: The while loop

The while loop condition in the algorithm ensures that the algorithm iterates through the intervals until it finds an interval that does not overlap with the current interval being considered. Let’s break down the condition:

while i ≤ n and fk ≤ sA[i] < fk+1

i ≤ n: This condition ensures that the algorithm does not attempt to access an index beyond the bounds of the array of intervals.

fk ≤ sA[i] < fk+1: This condition checks whether the starting time of the interval indexed byA[i]falls within the range of the current interval being considered, which is defined byfkandfk+1. If it does, then it means that the intervalA[i]overlaps with the current interval. The loop continues until an interval is found that doesn’t overlap with the current interval.The while loop condition ensures that the algorithm iterates through the intervals until it finds an interval that does not overlap with the current interval being considered (

I_k). Once such an interval is found, the algorithm assigns the appropriate value ofp_iand moves on to the next interval.

- is the last index of interval that doesn’t have intersection

- Solution is the maximum weight. That is recovering solution. Finding that set.

Matthew’s notes

- To find the optimal set, you have to first find the element in M that is maximum. Then, you have to figure out what elements actually contribute to that. To do that, you have to trace back the calculation for that index and see what contributed to it. You have to backtrack.

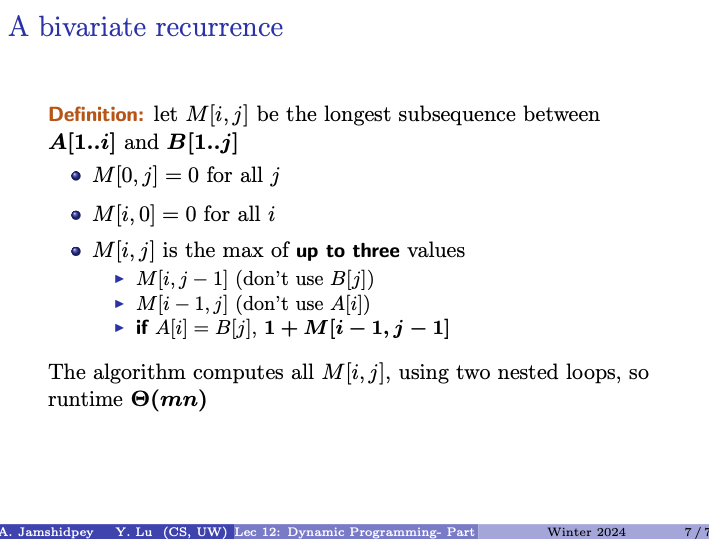

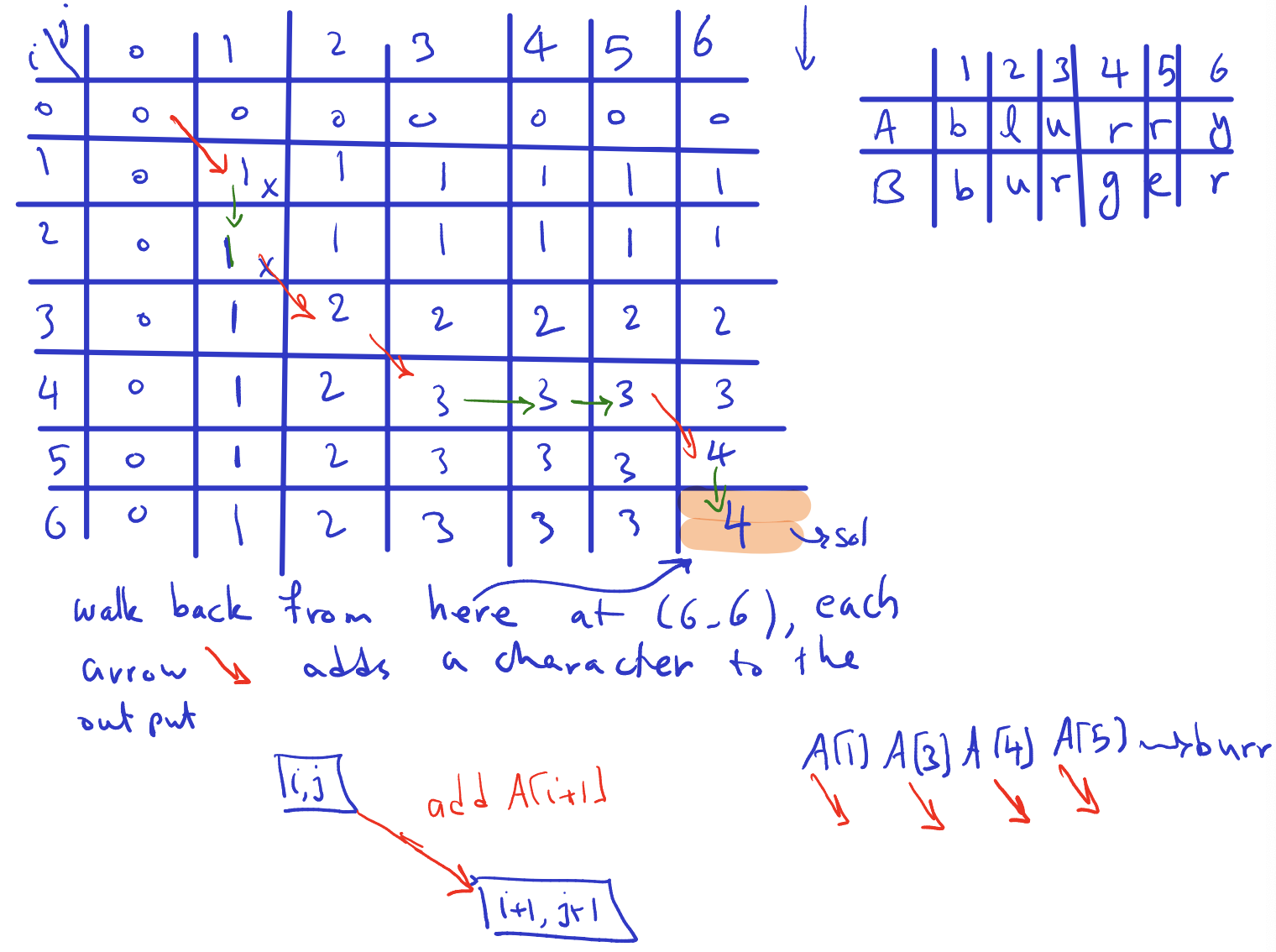

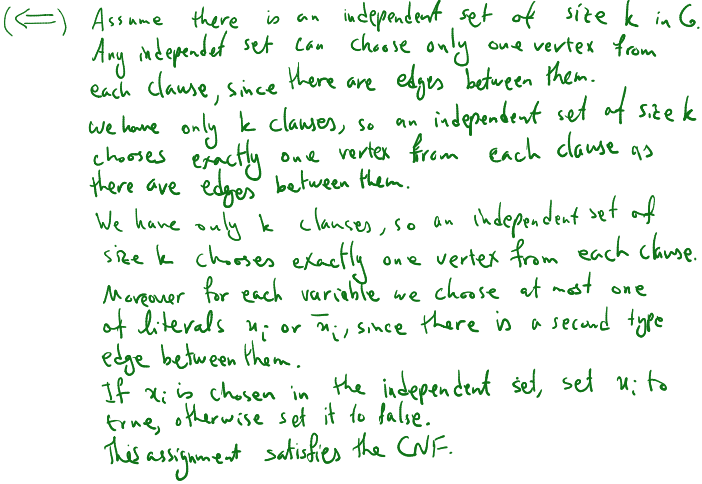

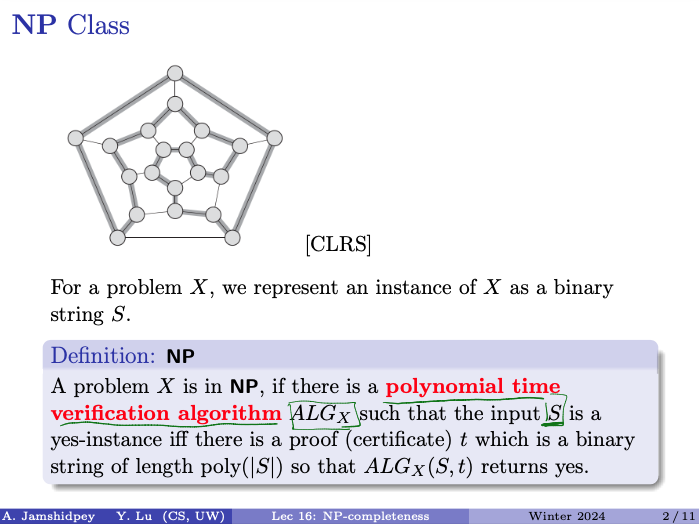

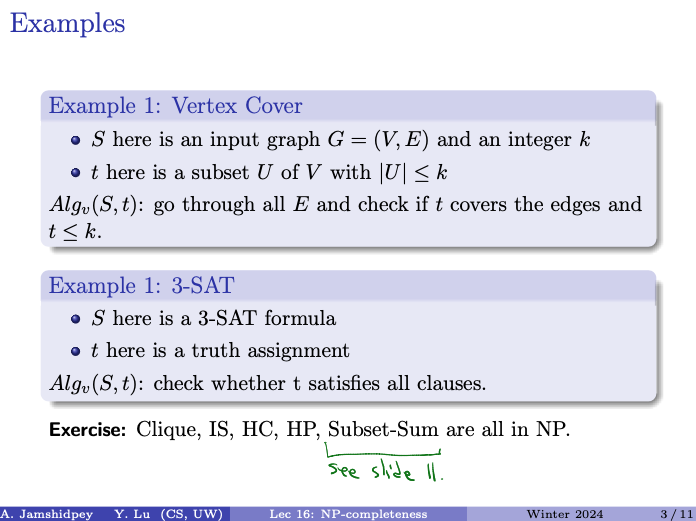

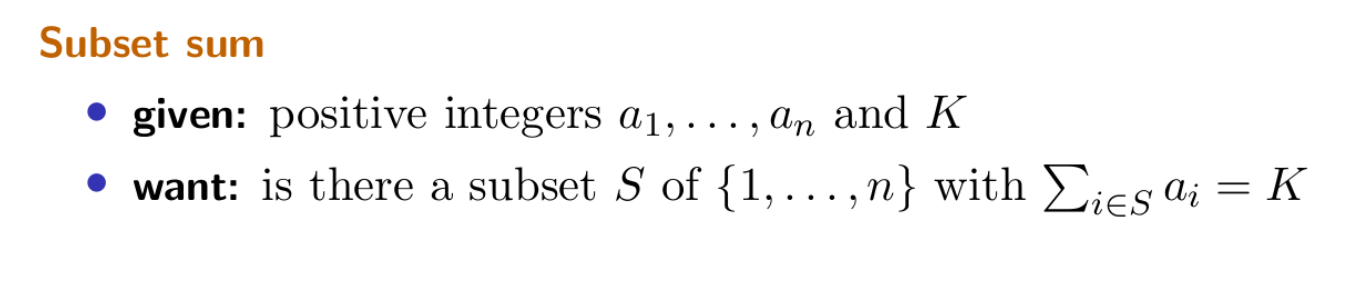

- The prof then ran the algorithm on a sample dataset.